Abstract

Background

The use of electronic medical records can improve the technical quality of care, but requires a computer in the exam room. This could adversely affect interpersonal aspects of care, particularly when physicians are inexperienced users of exam room computers.

Objective

To determine whether physician experience modifies the impact of exam room computers on the physician–patient interaction.

Design

Cross-sectional surveys of patients and physicians.

Setting and Participants

One hundred fifty-five adults seen for scheduled visits by 11 faculty internists and 12 internal medicine residents in a VA primary care clinic.

Measurements

Physician and patient assessment of the effect of the computer on the clinical encounter.

Main Results

Patients seeing residents, compared to those seeing faculty, were more likely to agree that the computer adversely affected the amount of time the physician spent talking to (34% vs 15%, P = 0.01), looking at (45% vs 24%, P = 0.02), and examining them (32% vs 13%, P = 0.009). Moreover, they were more likely to agree that the computer made the visit feel less personal (20% vs 5%, P = 0.017). Few patients thought the computer interfered with their relationship with their physicians (8% vs 8%). Residents were more likely than faculty to report these same adverse effects, but these differences were smaller and not statistically significant.

Conclusion

Patients seen by residents more often agreed that exam room computers decreased the amount of interpersonal contact. More research is needed to elucidate key tasks and behaviors that facilitate doctor–patient communication in such a setting.

Key words: computers, electronic medical record, physician–patient relations

Background

Effective doctor–patient communication can influence a number of important outcomes, such as patient satisfaction, adherence to provider recommendations, resolution of concerns, pain control, and physiologic measures like blood pressure and blood sugar.1,2 Likewise, the doctor–patient relationship has been linked to physician behaviors during visits, improved functional health status, and increased adherence to antiretroviral therapy.3–5 Giving information and patient counseling during clinical encounters, in particular, were associated with patient satisfaction.6 Poor doctor–patient communication, including poor delivery of information and failure to understand patient perspectives, can lead to litigation.7,8 Compared with patients in other Western countries, patients in the United States often experience breakdowns in communication with physicians.9 Communication problems among colleagues and with patients can result in medical errors.10 Effective communication facilitated participatory decision-making and was associated with lower levels of medical errors.11,12

Computers and electronic medical records (EMR) have been adopted in healthcare settings in an attempt to provide efficient care and to reduce medical errors. Computerized physician order entry and clinical decision support systems have been effective in reducing medication errors.13 While fewer than 1 in 5 practices in the United States currently use EMR in the examination room,14 it is certain that the use of EMR will become increasingly widespread over the next several years. The Institute of Medicine has strongly argued for increased use of EMRs 15 based on considerable evidence that they will improve the technical quality of care, including provision of preventive services, monitoring of drug therapy, and adherence to evidence-based guidelines.16–18

The doctor–patient interaction is likely to be influenced by additional computer-related tasks and nonverbal behaviors related to further advances in information technology. Nonverbal behaviors, associated with emotional aspects of care, may influence patient adherence, satisfaction, and the doctor–patient relationship.19,20

Little research has addressed the impact of exam room computers on the doctor–patient interaction in primary care,21,22 where the development of effective therapeutic relationships may be as important as whether technical measures of quality care are satisfied. It is possible that, as a physician spends more time interacting with the computer, he or she may have less time to interact effectively with the patient. The use of the computer might lead to a loss of eye contact, less psychosocial talk, and decreased sensitivity to patient responses due to loss of access to nonverbal communication. These effects could diminish the quality of the doctor–patient communication.

To explore these issues we compared the impact of an exam room computer on doctor–patient interactions at the New York VA Medical Center during clinic encounters with faculty physicians versus those with resident physicians. We hypothesized that the computer would interfere less with the interaction when the physician was a faculty member because faculty are likely more experienced than residents at integrating clinical and computer tasks during a visit. This paper is a secondary analysis of data collected to compare patient and physician perceptions of the quality of care of individual visits.

Methods

Physician and Patient Recruitment

All Internal Medicine residents [32 post graduate year (PGY)-2 and PGY-3] and primary care faculty (18 internists) who had been practicing at the VA New York Harbor Healthcare System Primary Care Clinic for at least 1 year were eligible to participate. Eligible physicians were recruited and enrolled if they signed an informed consent form. We planned to recruit patients over a 4-month enrollment period (between March and June of 2003). All patients who had at least 2 visits with their respective study physicians during the preceding 2 years were eligible. On each half-day of clinic, we created a list of eligible patients who had scheduled follow-up visits with study physicians. A research assistant asked eligible patients to participate at the time they arrived for their appointment. Both physicians and patients provided informed consent prior to participation. Participating physicians completed a baseline questionnaire at the time they agreed to participate. Immediately following a visit with a participating patient, both enrolled physicians and their patients were given questionnaires. Physicians completed a 1-page questionnaire in their office. Patients completed a 4-page questionnaire in the waiting room while waiting to be checked out. To optimize the distribution of patients among physicians, we sought to complete data collection on at least 6 patients for each physician and stopped enrollment when each had 10. The Institutional Review Boards at New York University and VA New York Harbor Healthcare System approved the study.

Study Questionnaires

We developed 3 questionnaires for the present study. The patient questionnaire had 32 items to determine patient demographics, visit satisfaction, quality of care, and the impact of the computer on doctor–patient interaction. We used a 5-point Likert scale (1 = poor, 5 = excellent) for visit satisfaction and quality-of-care items. The visit satisfaction items were taken from an instrument used in the Medical Outcomes Study to measure overall satisfaction and satisfaction with the most recent physician visit.23 Several clinician-educators experienced with the use of computers in patient care settings reviewed the instruments for face validity. We did cognitive testing of the questionnaire with nonenrolled patients to assess feasibility.

The baseline physician questionnaire had 31 items. It assessed the demographics of the study physicians, as well as their experience with computers and EMR as pertinent to clinical care. The postvisit physician questionnaire had 13 items and assessed visit satisfaction, quality of care, and the effect of the computer on the doctor–patient interaction. Both physician questionnaires were pilot tested prior to use.

We developed 7 items that assessed the impact of the exam room computer on the doctor–patient interaction, embedded in the postvisit questionnaires. We hypothesized that, in the presence of a computer in the room, a set of verbal and nonverbal behaviors may change, depending on the experience with EMR and medical use of computers. Therefore, in developing the doctor–patient interaction assessment tool, we asked physicians and patients to give opinions on the following: the degree to which the use of the computer decreased the time physicians spent talking to, looking at, and examining the patients; to what extent the computer interfered with the doctor–patient relationship; and to what extent the physician’s use of the computer made the visit feel less personal. These items used a 5-point Likert scale with the following anchors: 1 = strongly disagree, 3 = neutral, and 5 = strongly agree. Physicians and patients were also asked to estimate the duration of the visit, and the proportion of visit time that the doctor spent interacting directly with the computer. They were told that this included time spent working with the mouse or keyboard or looking at the computer screen. The wording of each item is presented in Table 1. Medical records were reviewed following the visit to determine the number of prior visits with the same provider, number of medical illnesses, history of psychiatric disorders, and number of active prescriptions.

Table 1.

Key Items from Postvisit Questionnaires Completed by Physician and Patient

| Patient questions | Physician questions |
|---|---|
| Because of the computer, the doctor spent less time looking at me than I liked during the visit | Because of the computer, I spent less time looking at the patient than I liked during the visit |
| Because of the computer, the doctor spent less time talking with me than I liked during the visit | Because of the computer, I spent less time talking with the patient than I liked during the visit |
| Because of the computer, the doctor spent less time on the physical examination than I liked during the visit | Because of the computer, I spent less time on the physical exam than I liked during the visit |
| The use of computers in the office interfered with my relationship with my doctor | The use of computers in the office interfered with my relationship with this patient |
| The use of the computer in the office made the visit with my doctor feel less personal | The use of the computer in the office made the visit feel less personal |
| The use of computers in the clinic has improved my relationship with my doctor | The use of computers in the clinic has improved my relationship with this patient |
| I am satisfied with the relationship I have with my doctor | I am satisfied with the relationship I have with this patient |

Likert scale: strongly agree; agree; neutral; disagree; strongly disagree.

Statistical Analyses

We compared characteristics of resident and faculty physicians using simple χ2 and t tests, as appropriate. We compared characteristics of patients seeing resident and faculty physicians using similar tests because there was no reason to suspect clustering by physician.
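As an illustration of the simple χ2 comparison described here, the following Python sketch computes an unadjusted Pearson χ2 statistic and P value for a 2 × 2 table. It is not the authors' SAS code: the function name `chi_square_2x2` and the counts are hypothetical, no continuity correction is applied, and no adjustment is made for clustering by physician.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Rows are the two groups being compared; columns are the two outcome levels.
    Returns (chi-square statistic, 2-sided P value on 1 degree of freedom).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts for illustration only (not data from this study):
# group A: 30 "yes" and 50 "no"; group B: 15 "yes" and 60 "no".
chi2, p_value = chi_square_2x2(30, 50, 15, 60)
print(f"chi-square = {chi2:.2f}, P = {p_value:.3f}")
```

With small cell counts, as in the comparison of 12 residents with 11 faculty, an exact test may be preferable to this large-sample approximation.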

We created a dichotomous variable by collapsing the responses strongly agree and agree to “agree” and the responses strongly disagree, disagree, and neutral to “do not agree.” Results were qualitatively similar if neutral responses were dropped or if the Likert scale was not converted to a dichotomous variable.
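The collapsing rule can be sketched in a few lines of Python. This is an illustrative sketch rather than the code used in the study: it assumes responses are stored as integers 1 to 5 (1 = strongly disagree, 5 = strongly agree), and the function names and sample responses are hypothetical. The `drop_neutral` option mirrors the sensitivity analysis in which neutral responses were excluded.

```python
AGREE_CODES = {4, 5}          # agree, strongly agree
VALID_CODES = {1, 2, 3, 4, 5}  # 1 = strongly disagree ... 5 = strongly agree
NEUTRAL = 3


def dichotomize(response, drop_neutral=False):
    """Map a 5-point Likert code to True ("agree") or False ("do not agree").

    Returns None for a neutral response when drop_neutral is True.
    """
    if response not in VALID_CODES:
        raise ValueError(f"unexpected Likert code: {response!r}")
    if drop_neutral and response == NEUTRAL:
        return None
    return response in AGREE_CODES


def proportion_agree(responses, drop_neutral=False):
    """Proportion of responses coded "agree" after collapsing the scale."""
    flags = [dichotomize(r, drop_neutral) for r in responses]
    kept = [f for f in flags if f is not None]
    if not kept:
        raise ValueError("no responses left after exclusions")
    return sum(kept) / len(kept)


# Hypothetical responses for illustration only (not data from this study).
sample = [5, 4, 3, 2, 1, 3, 4, 2]
print(proportion_agree(sample))                     # neutral counted as "do not agree"
print(proportion_agree(sample, drop_neutral=True))  # neutral responses excluded
```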

For our analyses of items addressing the visit, we used the physician–patient dyad as the unit of analysis. Because it was likely that there would be clustering at the physician level, we adjusted for clustering using mixed effects models.24 We compared visits to attending and resident physicians using generalized estimating equations score tests, based on the mixed effects logistic models. We used these same models to control for potential confounders. For clarity, we present results as means and proportions, not adjusted for clustering, as is appropriate for this type of data. We report 2-sided P values. We considered P values less than 0.05 to be significant, without correction for multiple comparisons. We collected all data onto paper forms and transferred them to an Excel spreadsheet. We performed analyses using PC-SAS version 8.2.

Results

Baseline Characteristics of Physicians and Patients

Eleven faculty members (61%) agreed to participate, as did 12 (PGY-2 and PGY-3) residents (38%), giving us a sample of 23 physicians for analysis. As expected, resident physicians were younger and had not been working at the VA as long as faculty physicians. Resident and faculty physicians reported generally similar computer skills, but faculty rated their knowledge of EMR and medical computing skills slightly higher than resident physicians did. As expected in a VA population, the 155 patient participants were elderly (mean age = 70.7 years), male (97%), and on multiple medications (mean = 7.1). Patients seen by the resident and faculty physicians were similar. Nearly one-third of the patients in each group had 3 or fewer visits with the same physician. As expected, patients who saw resident physicians were less likely to have long-term relationships (more than 10 visits) (Table 2).

Table 2.

Characteristics of Study Population by Status of Physician

| | Residents n = 12 | Faculty physicians n = 11 | P value* |
|---|---|---|---|
| Characteristics of physicians | | | |
| Age in years (mean, SD) | 30.1 (2.7) | 42.3 (9.7) | 0.002 |
| Gender (% male) | 66.7 | 36.4 | 0.22 |
| Years at VA (mean, SD) | 2.2 (0.39) | 6.0 (4.8) | 0.02 |
| Typing skills (mean, SD) | 3.2 (1.1) | 3.6 (0.67) | 0.24 |
| Skill with CPRS (mean, SD) | 3.7 (0.89) | 3.9 (0.54) | 0.44 |
| General computer skills (mean, SD) | 3.2 (0.94) | 3.4 (0.92) | 0.62 |
| Knowledge of EMR (mean, SD) | 3.3 (0.78) | 3.9 (0.54) | 0.05 |
| Medical computing skills (mean, SD) | 2.9 (0.79) | 3.6 (0.92) | 0.06 |
| Characteristics of patients (N = 155) | | | |
| Patient age in years (mean, SD) | 69.5 (11) | 71.8 (11) | 0.19 |
| Patient race (% non-Hispanic white) | 64.9 | 57.7 | 0.35 |
| Patient gender (% male) | 100 | 93.5 | 0.06 |
| Years going to VA (%) | | | 0.43 |
| 1–5 | 32.5 | 29.5 | |
| 6–19 | 37.7 | 33.3 | |
| 20 or more | 30.0 | 37.2 | |
| Number of medications (mean, SD) | 7.2 (4.8) | 7.0 (4.7) | 0.73 |
| Number of prior visits to this doctor (%) | | | 0.001 |
| 1–3 | 28.6 | 30.8 | |
| 4–5 | 44.2 | 15.4 | |
| 6–10 | 26.0 | 23.1 | |
| More than 10 | 1.3 | 30.8 | |
| Marital status (%) | | | 0.06 |
| Single | 35.5 | 18.4 | |
| Married/domestic partner | 32.9 | 40.8 | |
| Divorced/separated | 23.7 | 23.7 | |
| Widowed | 7.9 | 17.1 | |
| At least some college (%) | 40.3 | 49.3 | 0.26 |

*χ2 test; Mantel–Haenszel χ2 test for trend; 2-sample t test as appropriate

CPRS computerized patient record system, EMR electronic medical record.

Patients seeing resident physicians were less likely than patients seeing faculty to strongly agree that they were satisfied with their overall relationship with the physician (50% vs 71%, P = 0.02). Moreover, patients seeing resident physicians were less likely to rate the quality of the index visit as excellent (38% vs 62%, P = 0.005).

Effect of the Exam Room Computer on Doctor–Patient Interaction

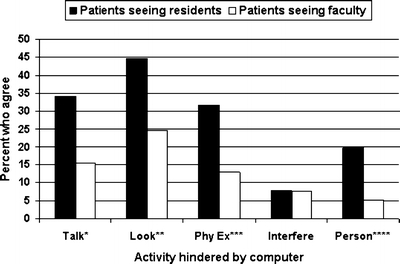

Patients seeing residents, compared to those seeing faculty, were more likely to agree that the computer adversely affected the amount of time the physician spent talking to (34% vs 15%, P = 0.01), looking at (45% vs 24%, P = 0.02), and examining them (32% vs 13%, P = 0.009). They were also more likely to agree that the computer made the visit feel less personal (20% vs 5%, P = 0.017). However, very few patients (8%) thought that the computer interfered with their relationship with the physician, and this was similar for patients seeing both residents and faculty (Fig. 1).

Figure 1.

Patient report of whether the computer adversely affects the doctor–patient interaction at a given clinic visit, comparing visits to resident and faculty physicians.

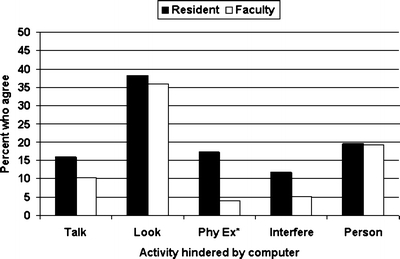

The pattern of responses was similar when physicians rated the effect of the exam room computer on the same activities (Fig. 2). None of the differences between resident and faculty physicians were statistically significant for physician ratings, though residents tended to be more likely to report that the presence of an exam room computer led them to spend less time performing the physical exam (17% vs 4%, P = 0.07).

Figure 2.

Physician report of whether the computer adversely affects the doctor–patient interaction at a given clinic visit, comparing visits to resident and faculty physicians.

Patients seeing faculty physicians estimated the physician spent a smaller proportion of visit time interacting with the computer than patients seeing resident physicians, although their estimates of visit duration were similar. This pattern was also seen in physician estimates, but did not achieve statistical significance (Table 3).

Table 3.

Comparison of Computer Use during Resident and Faculty Physician Visits as Reported by Patients and Physicians

| | Residents n = 12 | Faculty n = 11 | P value* |
|---|---|---|---|
| Patient report | | | |
| Visit duration in minutes (%) | | | 0.28 |
| 0–20 | 33.8 | 35.9 | |
| 20–30 | 33.8 | 42.3 | |
| 31–40 | 19.5 | 12.8 | |
| >40 | 13.0 | 9.0 | |
| Proportion of visit where physician was interacting with the computer (%) | | | 0.028 |
| 0–10% | 29.3 | 41.3 | |
| 11–25% | 28.0 | 34.7 | |
| 25–50% | 29.3 | 16.0 | |
| 50–100% | 13.3 | 8.0 | |
| Physician report | | | |
| Visit duration in minutes (%) | | | 0.45 |
| 0–20 | 40.3 | 37.2 | |
| 20–30 | 39.0 | 38.5 | |
| 31–40 | 18.2 | 18.0 | |
| >40 | 2.6 | 6.4 | |
| Proportion of visit where physician was interacting with the computer (%) | | | 0.10 |
| 0–10% | 9.1 | 9.0 | |
| 11–25% | 27.3 | 47.4 | |
| 25–50% | 41.6 | 25.6 | |
| 50–100% | 22.1 | 18.0 | |

*P values calculated with Mantel–Haenszel χ2 test for trend
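For readers unfamiliar with the Mantel–Haenszel χ2 test for trend, the Python sketch below shows one common form of it: the linear-by-linear association statistic M² = (N − 1)r², referred to a χ2 distribution with 1 degree of freedom. Several points are assumptions rather than reported facts: the function name is hypothetical, the ordered categories are scored 1 to 4, and the example counts are back-calculated from the patient-reported percentages for computer interaction under the assumption of 75 respondents per group. The paper does not report these counts, and the authors' SAS analysis may have differed in detail.

```python
import math


def mantel_haenszel_trend(counts_a, counts_b, scores=None):
    """Linear-by-linear (Mantel-Haenszel) chi-square for a 2 x k ordered table.

    counts_a and counts_b hold the counts per ordered category for two groups.
    Returns (M2, 2-sided P value on 1 degree of freedom).
    """
    if len(counts_a) != len(counts_b):
        raise ValueError("both groups need the same number of categories")
    k = len(counts_a)
    if scores is None:
        scores = list(range(1, k + 1))  # assumed equally spaced scores
    n_b = sum(counts_b)
    n = sum(counts_a) + n_b
    # x is the group indicator (0 for group a, 1 for group b); y is the category score.
    sum_y = sum(s * (a + b) for s, a, b in zip(scores, counts_a, counts_b))
    sum_y2 = sum(s * s * (a + b) for s, a, b in zip(scores, counts_a, counts_b))
    sum_xy = sum(s * b for s, b in zip(scores, counts_b))
    s_xx = n_b - n_b ** 2 / n
    s_yy = sum_y2 - sum_y ** 2 / n
    s_xy = sum_xy - n_b * sum_y / n
    if s_xx == 0 or s_yy == 0:
        raise ValueError("no variation in group or category")
    r_squared = s_xy ** 2 / (s_xx * s_yy)
    m2 = (n - 1) * r_squared
    p_value = math.erfc(math.sqrt(m2 / 2))  # chi-square upper tail, 1 df
    return m2, p_value


# Counts inferred from the Table 3 percentages, assuming 75 patients per group.
residents = [22, 21, 22, 10]  # 0-10%, 11-25%, 25-50%, 50-100% of visit time
faculty = [31, 26, 12, 6]
m2, p_value = mantel_haenszel_trend(residents, faculty)
print(f"M2 = {m2:.2f}, P = {p_value:.3f}")
```

Under these assumptions the sketch yields a P value close to 0.03, in line with the value shown in the table for the patient-reported proportion of visit time.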

Discussion

There are theoretical reasons to suspect both positive and negative effects of the computer on the doctor–patient interaction and relationship. For example, more ready access to clinical data might allow the physician to devote more time to the interpersonal aspects of patient care. On the other hand, if significant time and effort are required for data entry, there may be less time for those same aspects of patient care. Experience with computers and EMR may significantly impact interpersonal aspects of care. In a VA setting where the computer is an essential part of nearly every doctor–patient interaction, we found that patients seeing trainees were much more likely than patients seeing faculty physicians to report potentially negative effects of computers on the clinical interaction. In addition, 8% of patients seen by faculty and resident physicians agreed that the exam room computer interfered with their relationship with the doctor.

Our data, and a review of the literature, permit no definitive conclusions about whether computers have a negative effect on the doctor–patient interaction overall. Makoul and colleagues found that the use of an exam room computer was associated with a more active (better) role in encouraging questions and clarifying information, but less attention to outlining the patient’s agenda and exploring psychosocial issues.25 Work by Frankel and colleagues suggests a similar tension. In their qualitative study of videotaped encounters, the computer seemed to amplify both poor and good organizational and behavioral skills (both verbal and nonverbal).22 Interestingly, they found that facility with the use of the computer and optimizing location of the computer could facilitate communication, suggesting a mechanism for our observation of a more favorable effect of the computer for more experienced users.22 Although other studies have reached more positive 26–31 or negative 32–34 conclusions about the effect of computers on communication, methodological differences preclude direct comparisons with our findings.21

Prior research about residents’ use of computers focused on physician acceptance, which typically was dominated by usability concerns. A 1995 Canadian study found that first-year family medicine residents had limited computer knowledge and skills.35 In a 2001 study, residents recognized the benefits of computers and EMR in outpatient clinics, but showed ambivalence and frustration toward using EMR.36 Embi and colleagues found that both faculty and resident physicians in a VA setting felt that EMR could negatively affect communication and patient care.37 Our study, which assessed actual patient perceptions, confirms that suspicion, and suggests that residents may be more at risk for these negative effects. In the same vein, we found residents were less satisfied than faculty with visit quality and their relationship with patients and perceived that they spent more visit time directly interacting with the computer. One possible explanation is facility with the software. Although resident physicians reported as much general computer knowledge as faculty, they had less familiarity with the use of EMR. Although it is likely that both residents and faculty have limited formal training in exam room computing, experience may be able to compensate for the lack of training.

Our study has several limitations. Most importantly, although exam room computing competence was the independent variable of interest, we did not measure it directly. Rather, we relied on comparison of resident versus faculty status. Because the patients of faculty physicians tended to rate their overall care more highly than those seeing residents, the association of physician experience with the effect of the computer may represent a halo effect in which patients rate faculty physicians more favorably in all areas, including use of the computer. Arguing against this conclusion is the fact that factor analyses confirmed that the patients’ answers to the computer questions and their ratings of quality and general satisfaction loaded on distinct factors.

Additionally, controlling for patient-rated quality of care or satisfaction in multivariable analyses did not attenuate the relationship between resident status and patient reports of negative effects of the computer. Finally, we note that faculty rated their knowledge of EMR and their medical computing skills more highly than residents, even though residents and faculty gave similar ratings to their own general computer skills. This supports our theoretical assumption that faculty status is a reasonable surrogate for skill in using an exam room computer. We used faculty status rather than self-reported knowledge of EMR because it is less susceptible to bias. However, we did note a relationship between self-reported knowledge of the EMR and patient responses to several of the questions about negative effects of the computer on doctor–patient interaction (data not shown).

A second limitation is the possibility that our results might reflect patients’ greater familiarity with faculty physicians because of their longer time at the VA. We found, however, that years at the VA and number of previous visits were unrelated to negative patient ratings of faculty. Unfortunately, the high correlation between these variables and faculty versus resident status precluded multivariable analysis.

Third, because we studied physicians at a single site during a 4-month window, we may have discovered some deficit in the orientation of residents to the computerized record at that site. However, all the residents had been using the computer for a year in the ambulatory setting and ward rotations and rated their familiarity with the software similarly to the faculty. This particular clinical site also has an overwhelmingly white, male, elderly patient population. We have no reason to believe that these findings are somehow unique to the demographic group we studied, although certainly replication in other settings is warranted. Similarly, our low response rate among both faculty (61%) and residents (38%) raises the possibility that this is a biased sample. Again, we acknowledge that replication of our results in larger, more representative samples is warranted. Finally, because our data are based on surveys, we cannot comment on the behaviors that led to the differences in perception. Certainly, the observation that residents spent a greater proportion of the encounter interacting with the computer suggests that paying attention to the computer rather than the patient may be part of the effect.

Despite these limitations, we think that our study raises key issues about doctor–patient communication in the presence of a computer and has implications for communication skills training when a computer is an integral part of the primary care encounter. Future research should attempt to analyze videotaped clinical encounters of physicians and patients to determine key human- and technology-related factors that can positively or negatively influence doctor–patient interaction. Structured interviews and focus group studies of residents, faculty physicians, and patients should also be conducted on this topic to highlight specific issues and concerns that may lead to the different perceptions of exam room computing among different groups. With this knowledge in hand, communication skills curricula can be developed and refined to include relationship building tasks and behaviors that optimize the doctor–patient relationship where a computer is actively used in the care process.

Acknowledgment

We thank Stewart Babbott, MD, for his editorial review of the manuscript. The corresponding author conducted the research during his general Internal Medicine fellowship, which was supported by a grant from the Health Resources and Services Administration. The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

Potential Financial Conflicts of Interest None disclosed.

References

- 1. Inui TS, Carter WB. Problems and prospects for health services research on provider–patient communication. Med Care. 1985;23(5):521–38.

- 2. Stewart MA. Effective physician–patient communication and health outcomes: a review. CMAJ. 1995;152:1423–33.

- 3. Hall JA, Horgan TJ, Stein TS, Roter DL. Liking in the physician–patient relationship. Patient Educ Couns. 2002 Sep;48(1):69–77.

- 4. Kaplan SH, Greenfield S, Ware JE Jr. Assessing the effects of physician–patient interactions on the outcomes of chronic disease. Med Care. 1989;27:S110–S127.

- 5. Schneider J, Kaplan SH, Greenfield S, Li W, Wilson IB. Better physician–patient relationships are associated with higher reported adherence to antiretroviral therapy in patients with HIV infection. J Gen Intern Med. 2004 Nov;19(11):1096–103.

- 6. Roter DL, Hall JA, Katz NR. Relations between physicians’ behaviors and analogue patients’ satisfaction, recall, and impressions. Med Care. 1987;25:437–51.

- 7. Beckman HB, Markakis KM, Suchman AL, Frankel RM. The doctor–patient relationship and malpractice. Lessons from plaintiff depositions. Arch Intern Med. 1994;154(12):1365–70.

- 8. Lester GW, Smith SG. Listening and talking to patients. A remedy for malpractice suits? West J Med. 1993;158:268–72.

- 9. Blendon RJ, Schoen C, Desroches C, Osborn R, Zapert K. Common concerns amid diverse systems: health care experiences in five countries. Health Aff (Millwood). 2003 May–Jun;22(3):106–21.

- 10. Woolf SH, Kuzel AJ, Dovey SM, Philips RL Jr. A string of mistakes: the importance of cascade analysis in describing, counting, and preventing medical errors. Ann Fam Med. 2004 Jul–Aug;2(4):292–93.

- 11. Greenfield S, Kaplan S, Ware JE Jr. Expanding patient involvement in care. Effects on patient outcomes. Ann Intern Med. 1985 Apr;102(4):520–28.

- 12. Hulka BS, Cassel JC, Kupper LL, Burdette JA. Communication, compliance, and concordance between physicians and patients with prescribed medications. Am J Public Health. 1976;66:847–53.

- 13. Bates DW, Teich JM, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999 Jul–Aug;6(4):313–21.

- 14. Versel N. One in five group practices now use EHRs. Health IT World News Newsletter 2. 1-25-2005. Health-IT World. 11-15-2005.

- 15. Institute of Medicine, Committee on Improving the Patient Record. The computer-based patient record: an essential technology for health care. Washington, DC: National Academy Press; 1997.

- 16. Rollman BL, Hanusa BH, Lowe HJ, Gilbert T, Kapoor WN, Schulberg HC. A randomized trial using computerized decision support to improve treatment of major depression in primary care. J Gen Intern Med. 2002;17:493–503.

- 17. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003;163:1409–16.

- 18. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280:1339–46.

- 19. Roter DL, Frankel RM, Hall JA, Sluyter D. The expression of emotion through nonverbal behavior in medical visits. Mechanisms and outcomes. J Gen Intern Med. 2006 Jan;21(Suppl 1):S28–S34.

- 20. Waitzkin H. Doctor–patient communication. Clinical implications of social scientific research. JAMA. 1984 Nov 2;252(17):2441–46.

- 21. Mitchell E, Sullivan F. A descriptive feast but an evaluative famine: systematic review of published articles on primary care computing during 1980–97. BMJ. 2001;322:279–82.

- 22. Frankel R, Altschuler A, George S, et al. Effects of exam-room computing on clinician–patient communication: a longitudinal qualitative study. J Gen Intern Med. 2005;20:677–82.

- 23. Rubin HR, Gandek B, Rogers WH, Kosinski M. Patients’ ratings of outpatient visits in different practice settings: results from the Medical Outcomes Study. JAMA. 1993;270:835–40.

- 24. Liang KY, Zeger SL. Longitudinal data analysis using generalized linear models. Biometrika. 1986;73:13–22.

- 25. Makoul G, Curry RH, Tang PC. The use of electronic medical records: communication patterns in outpatient encounters. J Am Med Inform Assoc. 2001;8:610–5.

- 26. Ridsdale L, Hudd S. Computers in the consultation: the patient’s view. Br J Gen Pract. 1994;44:367–9.

- 27. Solomon GL, Dechter M. Are patients pleased with computer use in the examination room? J Fam Pract. 1995;41:241–4.

- 28. Ornstein S, Bearden A. Patient perspectives on computer-based medical records. J Fam Pract. 1994;38:606–10.

- 29. Gadd CS, Penrod LE. Dichotomy between physicians’ and patients’ attitudes regarding EMR use during outpatient encounters. Proc AMIA Symp. 2000;275–9.

- 30. Legler JD, Oates R. Patients’ reactions to physician use of a computerized medical record system during clinical encounters. J Fam Pract. 1993;37:241–4.

- 31. Aydin CE, Rosen PN, Jewell SM, Felitti VJ. Computers in the examining room: the patient’s perspective. Proc Annu Symp Comput Appl Med Care. 1995;824–8.

- 32. Herzmark G, Brownbridge G, Fitter M, Evans A. Consultation use of a computer by general practitioners. J R Coll Gen Pract. 1984;34:649–54.

- 33. Warshawsky SS, Pliskin JS, Urkin J, et al. Physician use of a computerized medical record system during the patient encounter: a descriptive study. Comput Methods Programs Biomed. 1994;43:269–73.

- 34. Als AB. The desktop computer as a magic box: patterns of behaviour connected with the desktop computer; GPs’ and patients’ perceptions. Fam Pract. 1997;14:17–23.

- 35. Rowe BH, Ryan DT, Therrien S, Mulloy JV. First-year family medicine residents’ use of computers: knowledge, skills and attitudes. CMAJ. 1995;153:267–72.

- 36. Aaronson JW, Murphy-Cullen CL, Chop WM, Frey RD. Electronic medical records: the family practice resident perspective. Fam Med. 2001;33:128–32.

- 37. Embi PJ, Yackel TR, Logan JR, Bowen JL, Cooney TG, Gorman PN. Impacts of computerized physician documentation in a teaching hospital: perceptions of faculty and resident physicians. J Am Med Inform Assoc. 2004;11:300–9.