Abstract

Statistical reconstruction has become popular in emission computed tomography but suffers from slow convergence (to the MAP or ML solution). Methods proposed to address this problem include the fast but non-convergent OSEM and the convergent RAMLA [1] for the ML case, and the convergent BSREM [2], relaxed OS-SPS and modified BSREM [3] for the MAP case. The convergent algorithms require a user-determined relaxation schedule. We proposed fast convergent OS reconstruction algorithms for both ML and MAP cases, called COSEM (Complete-data OSEM), which avoid the use of a relaxation schedule while maintaining convergence. COSEM is a form of incremental EM algorithm. Here, we provide a derivation of our COSEM algorithms and demonstrate COSEM using simulations. At early iterations, COSEM-ML is typically slower than RAMLA, and COSEM-MAP is typically slower than optimized BSREM while remaining much faster than conventional EM-MAP. We discuss how COSEM may be modified to overcome these limitations.

1. Introduction

In emission tomography, the reconstruction of vast quantities of noisy, low-count data in practical times remains a challenge. The convenient filtered backprojection (FBP) reconstruction algorithm is widely used. However, FBP and its analytical relatives do not always lend themselves to specialized acquisition geometries. In addition, statistical reconstructions have been shown to outperform FBP [4].

Statistical reconstruction is useful in emission computed tomography because it can accurately model noise and imaging physics and can incorporate prior knowledge about the object. Statistical reconstruction approaches use iterative algorithms that optimize objective functions based on maximum likelihood (ML) or maximum a posteriori (MAP, a.k.a. penalized likelihood) principles. An additional advantage of MAP and ML solutions is that their statistical properties, such as covariance, mean and local point spread function, can be predicted using theoretical expressions [5]. A drawback of statistical reconstruction algorithms is that they are slow. It is a challenge to devise convergent ML and MAP algorithms that require few iterations to approach the fixed-point solution and that have few algorithm-specific parameters to be hand-tuned. Earlier attempts to address the speed problem included preconditioned conjugate-gradient [7] and coordinate descent methods [8], but each of these had practical drawbacks.

In [9], an ordered subsets expectation maximization (OSEM) algorithm achieved an order of magnitude speedup over conventional (non-OS) EM by using only a subset of the projection data per sub-iteration. The OSEM algorithm is fast, parallelizable and preserves positivity. However, these early OS methods did not converge to the ML fixed-point solution. An algorithm [1], termed row-action maximum likelihood algorithm (RAMLA), used a relaxation schedule, i.e. a relaxation parameter at each iteration of an OSEM-like update, to attain convergence. The relaxation schedule had to satisfy certain properties as a prerequisite for convergence. In practice, this relaxation schedule must be determined by trial and error to ensure good speed. More recently, RAMLA was extended to the MAP case in BSREM (Block Sequential Regularized EM) [2], modified BSREM [3], and relaxed OS-SPS (OS Separable Paraboloidal Surrogates) [3]. These MAP approaches also required a user-determined relaxation schedule. A practical solution to the problem of determining a relaxation schedule remains open.

To remove the need for relaxation schedules in these OS algorithms, we formulated relaxation-free COSEM (Complete-Data OSEM) algorithms [11,12]. COSEM, while derived in terms of an algebraic transformation [13], is a form of incremental EM algorithm [14]. We introduced COSEM-ML and COSEM-MAP in terms of algebraic transformations [11,12], but learned shortly afterward that a COSEM-ML algorithm for emission tomography had been independently derived [15] using incremental-EM approaches [14]. No experimental results were presented in [15]. We later extended COSEM-MAP to a list-mode version [16]. Despite the appeal of COSEM as a relaxation-free convergent algorithm, simulations show that it is not as fast as the aforementioned convergent relaxation-based approaches.

In Sections 2 and 3, we state the problem and derive the COSEM-ML and COSEM-MAP algorithms. In Section 4, we compare the speed of COSEM-MAP with that of competitors, and in Section 5, we discuss how COSEM can be sped up to close the speed gap with its competitors while maintaining its advantages.

2. Statement of the Problem

Define the object to be an N-dimensional lexicographically ordered vector f with elements fj, j = 1, …, N. We model image formation by a simple Poisson model g ~ Poisson(ℋf), where ℋ is the system matrix whose element ℋij indicates the probability of receiving a count in detector bin i from pixel j, and g is the integer-valued random data vector (sinogram) with elements gi, i = 1, …, M.

The ML problem is written as the minimization of an objective function

$$\hat{f} = \arg\min_{f \ge 0} E_{\text{inc-ML}}(f) \tag{1}$$

where the ML objective corresponding to the usual (incomplete data) Poisson likelihood is given by

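$$E_{\text{inc-ML}}(f) = \sum_{i=1}^{M} \left[ \sum_{j=1}^{N} \mathcal{H}_{ij} f_j - g_i \log \sum_{j=1}^{N} \mathcal{H}_{ij} f_j \right] \tag{2}$$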
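As a minimal sketch of the incomplete-data ML objective, the snippet below evaluates the Poisson negative log-likelihood with the f-independent log(gi!) term dropped, which is the usual form assumed here for Eq.(2). The tiny random system matrix `H`, the object `f_true`, and the function name `poisson_nll` are illustrative assumptions, not part of the original work.

```python
import numpy as np

def poisson_nll(f, H, g):
    """Poisson negative log-likelihood up to terms independent of f.

    Assumed form: sum_i [ (Hf)_i - g_i * log (Hf)_i ].
    """
    q = H @ f                       # expected counts in each detector bin
    return float(np.sum(q - g * np.log(q)))

# Hypothetical toy problem: M detector bins, N pixels.
rng = np.random.default_rng(0)
M, N = 12, 5
H = rng.uniform(0.1, 1.0, size=(M, N))       # stand-in for the system matrix
f_true = rng.uniform(1.0, 5.0, size=N)       # stand-in object
g = rng.poisson(H @ f_true).astype(float)    # simulated sinogram, g ~ Poisson(Hf)

E_true = poisson_nll(f_true, H, g)
E_flat = poisson_nll(np.ones(N), H, g)
print(E_true, E_flat)
```

Because the expected counts are linear in f and q − g log q is convex in q, this objective is convex in f, which is what makes a single ML fixed point meaningful.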

The MAP problem may be similarly stated as the minimization of the ML objective plus a prior objective Eprior. We consider quadratic priors of the form

| (3) |

with smoothing parameter β > 0, and a neighborhood system with weights wjj′. It will be convenient to define Einc–MAP ≡ Einc–ML + Eprior.

3. COSEM Objective and Algorithm

The direct minimization of Einc–ML is difficult. One way of carrying out the minimization is by the well-known ML-EM algorithm. The conventional EM derivation [6] is statistical in nature, but there exists an alternate means of deriving this algorithm via the minimization of a so-called complete data objective function. This objective function is derived from an algebraic transformation of Einc–ML(f) in Eq.(2), through the use of Jensen’s inequality [17]. We briefly summarize this step. From Jensen’s inequality and the convexity of − log(·), we have that

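$$-\log \sum_{j} \mathcal{H}_{ij} f_j \;\le\; -\sum_{j} \frac{C_{ij}}{g_i} \log \frac{g_i \mathcal{H}_{ij} f_j}{C_{ij}}$$

where the new "complete data" satisfy Cij ≥ 0, ∀ij and ∑jCij = gi, with equality occurring at Cij = gi ℋij fj / ∑j′ ℋij′ fj′. This allows us to transform the original incomplete-data objective function in Eq.(2) to a new objective, Ecomp(f, C), given by: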

| (4) |

Note that the sum over detector bins ∑i in Einc–ML in Eq.(2) is replaced in Eq.(4) by a sum over subsets ∑l∑i∈Sl, where l = 1, …, L indexes the subset number and Sl is the set of detector bins belonging to the lth subset. Here, Cij is the complete data, roughly analogous to the complete data as used in statistical derivations of EM-ML. From its Jensen’s inequality origin, we know that Cij is real and positive, and that it obeys the constraints ∑jCij = gi, which are imposed in Eq.(4) through a Lagrange parameter γi. Differentiating Eq.(4) w.r.t. C, setting the result to zero and solving for the Lagrange parameter vector γ by imposing the constraints ∑jCij = gi, we get

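$$C_{ij} = \frac{g_i \, \mathcal{H}_{ij} f_j}{\sum_{j'} \mathcal{H}_{ij'} f_{j'}}, \quad \forall i, j \tag{5}$$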

Inserting the solution Eq.(5) into Eq.(4), we can write the identity

| (6) |

where Einc–ML(f) is the desired objective function in Eq.(2). We have shown that minimizing only over C (while keeping f fixed) in Eq.(4) results in Eq.(2). By minimizing Ecomp(f, C) to obtain solutions f̂, Ĉ, we also obtain the solution f̂ of Einc–ML [17].
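The Jensen step can be checked numerically. The sketch below assumes one common way of writing the bound, namely that for any positive C with ∑jCij = gi the quantity ∑ij ℋij fj − ∑ij Cij log(gi ℋij fj / Cij) lies above the incomplete-data objective, with equality at Cij = gi ℋij fj / ∑j′ ℋij′ fj′. The exact constants in the authors' complete-data objective may differ; the function names and the toy data are hypothetical.

```python
import numpy as np

def e_inc(f, H, g):
    """Incomplete-data objective: sum_i [ (Hf)_i - g_i log (Hf)_i ]."""
    q = H @ f
    return float(np.sum(q - g * np.log(q)))

def jensen_bound(f, C, H, g):
    """Upper bound on e_inc, valid for C > 0 with each row of C summing to g_i."""
    ratio = g[:, None] * H * f[None, :] / C
    return float(np.sum(H @ f) - np.sum(C * np.log(ratio)))

def optimal_C(f, H, g):
    """Complete data at which the bound is tight."""
    Hf = H * f[None, :]
    return g[:, None] * Hf / Hf.sum(axis=1, keepdims=True)

rng = np.random.default_rng(1)
M, N = 8, 4
H = rng.uniform(0.1, 1.0, size=(M, N))
f = rng.uniform(0.5, 2.0, size=N)
g = rng.integers(1, 20, size=M).astype(float)   # strictly positive counts

# A random feasible C: positive entries, row i sums to g_i.
C_rand = g[:, None] * rng.dirichlet(2.0 * np.ones(N), size=M)
C_star = optimal_C(f, H, g)

gap_rand = jensen_bound(f, C_rand, H, g) - e_inc(f, H, g)
gap_star = jensen_bound(f, C_star, H, g) - e_inc(f, H, g)
print(gap_rand, gap_star)
```

The gap is nonnegative for the random feasible C and vanishes (to rounding) at the optimal C, which is the property that lets minimization over C recover the incomplete-data objective.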

One may minimize Ecomp(f, C) by a form of grouped coordinate descent on subsets of C and f, which leads to a convergent and fast OS algorithm. Above, we showed that by first minimizing Eq.(4) w.r.t. C, we obtained Eq.(2). We now show that minimizing Eq.(4) w.r.t. subsets of C results in a convergent ordered subsets EM algorithm (COSEM). First, differentiate Eq.(4) w.r.t. a subset of C and set the result to zero. Solve for the subset Sl of C in terms of f and the Lagrange multiplier γi, where i ∈ Sl. Then, as before, we can eliminate γi, but only for i ∈ Sl, by enforcing the constraints ∑jCij = gi, and obtain the update equation for the Sl subset of the complete data C. We then solve for f by setting the derivative of Eq.(4) w.r.t. f to zero. We then repeat the above procedure for the next subset and so on. One can obtain the final update for all subsets of C and f as shown below. The update, in fact, closely resembles that of OSEM itself, but the slight modifications introduced by COSEM-ML ensure convergence to the ML solution. It is worth examining the updates of both algorithms, and these are summarized below:

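$$C_{ij}^{(k,l)} = \frac{g_i \, \mathcal{H}_{ij} f_j^{(k,l-1)}}{\sum_{j'} \mathcal{H}_{ij'} f_{j'}^{(k,l-1)}}, \quad \forall i \in S_l, \ \forall j \tag{7}$$

$$C_{ij}^{(k,l)} = C_{ij}^{(k,l-1)}, \quad \forall i \notin S_l, \ \forall j \tag{8}$$

$$f_j^{(k,l)} = \frac{\sum_{i=1}^{M} C_{ij}^{(k,l)}}{\sum_{i=1}^{M} \mathcal{H}_{ij}}, \quad \forall j \tag{9}$$

$$f_j^{(k,l)} = \frac{\sum_{i \in S_l} C_{ij}^{(k,l)}}{\sum_{i \in S_l} \mathcal{H}_{ij}}, \quad \forall j \tag{10}$$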

The updates Eq.(7) and Eq.(10), when combined, form the familiar OSEM update, while the updates Eq.(7), Eq.(8) and Eq.(9) constitute COSEM-ML [11]. The notation fj(k,l) indicates the update of the jth voxel at the lth subiteration (corresponding to use of the lth subset) of iteration k. When all L subsets have been updated, k is incremented. A first look at the details of these updates appears to indicate that COSEM-ML involves many more steps per iteration k than does OSEM, but in [17], we show that the amount of computation is about the same. The updates for COSEM-ML preserve positivity.
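The difference between the two algorithms can be sketched in a few lines. The code below assumes the standard forms of the updates: the complete data for bins in the current subset are recomputed as Cij = gi ℋij fj / ∑j′ ℋij′ fj′, complete data for all other bins are kept from earlier subiterations, and f is either the ratio of full sums (COSEM-ML) or of subset sums (OSEM). The interleaved subset choice, the initialization of C from f0, the toy data, and all function names are assumptions made for illustration.

```python
import numpy as np

def poisson_nll(f, H, g):
    q = H @ f
    return float(np.sum(q - g * np.log(q)))

def subset_backprojection(Hs, gs, f):
    """sum over i in S_l of C_ij, with C_ij = g_i H_ij f_j / sum_j' H_ij' f_j'."""
    return f * (Hs.T @ (gs / (Hs @ f)))

def make_subsets(M, L):
    """Interleaved detector-bin subsets S_1..S_L (a hypothetical choice)."""
    return [np.arange(l, M, L) for l in range(L)]

def osem(H, g, f0, subsets, n_iter):
    f = f0.copy()
    for _ in range(n_iter):
        for S in subsets:
            # Only the current subset enters numerator and denominator.
            f = subset_backprojection(H[S], g[S], f) / H[S].sum(axis=0)
    return f

def cosem_ml(H, g, f0, subsets, n_iter):
    f = f0.copy()
    D = H.sum(axis=0)                      # D_j = sum over all bins of H_ij
    # A[l, j] = sum_{i in S_l} C_ij; storing these sums avoids storing C itself.
    A = np.stack([subset_backprojection(H[S], g[S], f) for S in subsets])
    for _ in range(n_iter):
        for l, S in enumerate(subsets):
            A[l] = subset_backprojection(H[S], g[S], f)   # refresh subset l only
            f = A.sum(axis=0) / D                         # other subsets keep stale C
    return f

rng = np.random.default_rng(0)
M, N, L = 32, 6, 4
H = rng.uniform(0.1, 1.0, size=(M, N))
f_true = rng.uniform(5.0, 20.0, size=N)
g = rng.poisson(H @ f_true).astype(float)
f0 = np.ones(N)
subsets = make_subsets(M, L)

f_cosem = cosem_ml(H, g, f0, subsets, n_iter=50)
f_osem = osem(H, g, f0, subsets, n_iter=50)
print(poisson_nll(f0, H, g), poisson_nll(f_osem, H, g), poisson_nll(f_cosem, H, g))
```

Keeping only the per-subset sums `A[l]` is one way to see why the cost per subiteration stays close to that of OSEM: each subiteration still needs one forward projection and one backprojection over the current subset. With a single subset, both routines reduce to the ordinary ML-EM update.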

We are primarily interested in the MAP case, and note that a COSEM-MAP version is obtainable by adding a prior Eprior(f) to Eq.(4). The updates for C in the COSEM-MAP algorithm remain the same as in Eq.(7) and Eq.(8). When we attempt to derive an update analogous to Eq.(9), the prior introduces a coupling between voxels, and this causes difficulty in deriving a closed-form and parallel update for f. To solve this, we use the method of separable surrogates [21] replacing Eq.(3) by

| (11) |

at iteration k. Since the resulting MAP objective function including Eq.(4) and Eq.(11) is a simple, convex 1-D objective w.r.t. fj, we can trivially find the fj that minimizes it. Setting the derivative of the separable-surrogate-enhanced complete data MAP objective, Eq.(4) plus Eq.(11), w.r.t. fj to zero, and defining vjj′ ≡ wjj′ + wj′j and Dj = ∑iℋij, we obtain the final COSEM-MAP update for f [12]:

| (12) |

where and

Note that the update preserves positivity and that the COSEM-MAP updates are parallel in the voxel space. The overall computation per (k, l) subiteration is close to that of OSEM.
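To illustrate how a separable surrogate yields a closed-form, positivity-preserving voxel update, the sketch below works through one plausible instance. It assumes a prior of the form β ∑j∑j′ wjj′ (fj − fj′)², and the De Pierro-style bound (fj − fj′)² ≤ ½(2fj − f̃j − f̃j′)² + ½(2fj′ − f̃j − f̃j′)², where f̃ is the current estimate. Under these assumptions each voxel solves a quadratic equation with one positive root. The constants may differ from those of the published update, and `B`, `f_old`, `W` and the function names are hypothetical.

```python
import numpy as np

def cosem_map_f_update(B, D, f_old, W, beta):
    """Positive root of the per-voxel surrogate stationarity condition.

    B[j] = sum_i C_ij, D[j] = sum_i H_ij, W[j, j'] = w_jj',
    f_old = estimate around which the separable surrogate is built.
    Solves a*f^2 + b*f - B = 0 for each voxel independently.
    """
    V = W + W.T                                   # v_jj' = w_jj' + w_j'j
    Vsum = V.sum(axis=1)
    a = 4.0 * beta * Vsum
    b = D - 2.0 * beta * (Vsum * f_old + V @ f_old)
    root = np.sqrt(b * b + 4.0 * a * B)
    # Algebraically equal to (-b + root) / (2a), but also valid when beta = 0.
    return 2.0 * B / (b + root)

def surrogate_gradient(f, B, D, f_old, W, beta):
    """Derivative of -B log f + D f + (beta/2) sum_j' v_jj' (2f - f_old_j - f_old_j')^2."""
    V = W + W.T
    Vsum = V.sum(axis=1)
    return -B / f + D + 2.0 * beta * (2.0 * Vsum * f - Vsum * f_old - V @ f_old)

# Hypothetical 1-D chain of N voxels, each neighboring pair counted once.
N = 6
W = np.zeros((N, N))
for j in range(N - 1):
    W[j, j + 1] = 1.0

rng = np.random.default_rng(2)
B = rng.uniform(5.0, 50.0, size=N)
D = rng.uniform(1.0, 3.0, size=N)
f_old = rng.uniform(1.0, 20.0, size=N)

f_new = cosem_map_f_update(B, D, f_old, W, beta=0.06)
f_ml = cosem_map_f_update(B, D, f_old, W, beta=0.0)
print(f_new, f_ml)
```

Every voxel is updated from quantities available before the update, so the computation is parallel in voxel space, and setting β = 0 recovers the ratio B/D used in the ML case.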

4. Simulation Results

To assess the speed of COSEM-MAP, we display the normalized objective difference (NOD) versus iteration number k, where NOD is defined as:

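$$\mathrm{NOD}(k) = \frac{E_{\text{inc-MAP}}(f^{k}) - E_{\text{inc-MAP}}(f^{*})}{E_{\text{inc-MAP}}(f^{0}) - E_{\text{inc-MAP}}(f^{*})} \tag{13}$$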

where fk ≡ f(k,0) and f0 is the initial estimate obtained via FBP. We define f* to be the true MAP solution at k = ∞, estimated from 5000 iterations of EM-MAP.
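A minimal sketch of how such a convergence curve might be computed is given below. It assumes the NOD is the objective gap to the converged solution, normalized by the gap at the initial estimate, so that it starts at 1 and tends to 0. The function name and the objective values are made up for illustration.

```python
import numpy as np

def normalized_objective_difference(E_iterates, E_star):
    """NOD(k) = (E(f^k) - E(f*)) / (E(f^0) - E(f*)), with E_iterates[0] = E(f^0)."""
    E = np.asarray(E_iterates, dtype=float)
    return (E - E_star) / (E[0] - E_star)

# Hypothetical objective values at k = 0, 1, 2, 3 and at the converged solution.
nod = normalized_objective_difference([10.0, 6.0, 4.5, 4.1], E_star=4.0)
print(nod)
```

Plotting NOD on a logarithmic axis against k makes early-iteration speed differences between algorithms easy to compare, since all curves start from the same value.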

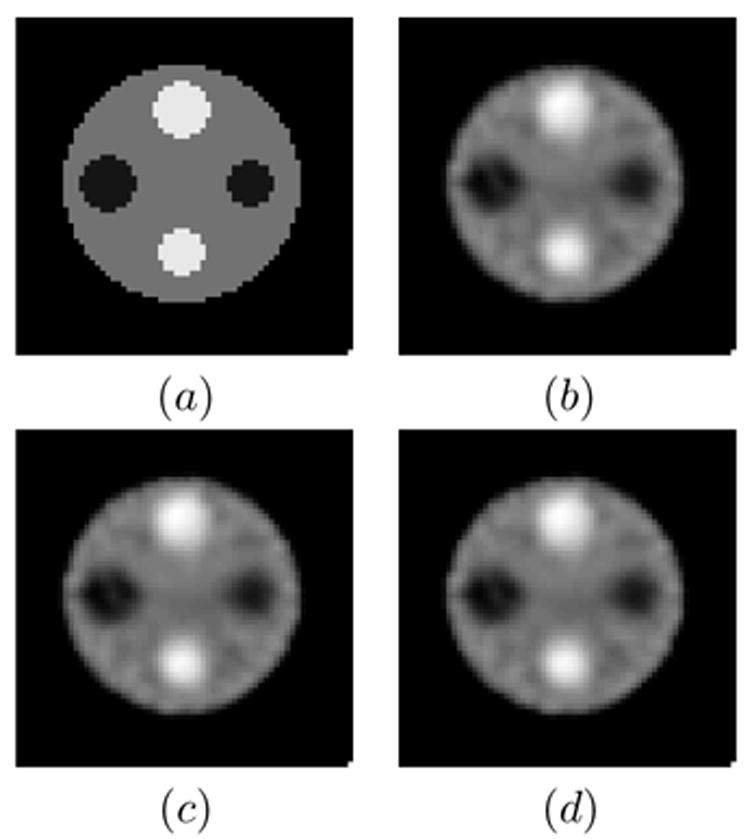

A sinogram (64 angles by 96 bins) with 300K counts was simulated using a 2D 64×64 phantom (pixel size = 5.6 mm), shown in Fig. 1(a). The phantom consists of a background and hot and cold lesions with a contrast ratio of 1:4:8 (cold:background:hot). Uniform attenuation (water at 140 keV) and depth-dependent blur for a parallel collimator were modeled.

Figure 1.

The 2D 64×64 phantom is shown in (a), while the anecdotal reconstructions are displayed in (b) EM-MAP, (c) COSEM-MAP, and (d) BSREM.

Reconstructions were performed using EM-MAP, BSREM [2] and COSEM-MAP. (Note that EM-MAP is simply COSEM-MAP at L = 1 subset). We chose L = 8 subsets for BSREM and COSEM-MAP, and for all reconstructions set β = 0.06. The relaxation schedule for BSREM was chosen as: with α0 = 3.2. This schedule obeys the constraints in [2]. The schedule was chosen to attain rapid convergence at early iterations. Anecdotal reconstructions for each algorithm are shown in Fig. 1 at iteration 30.

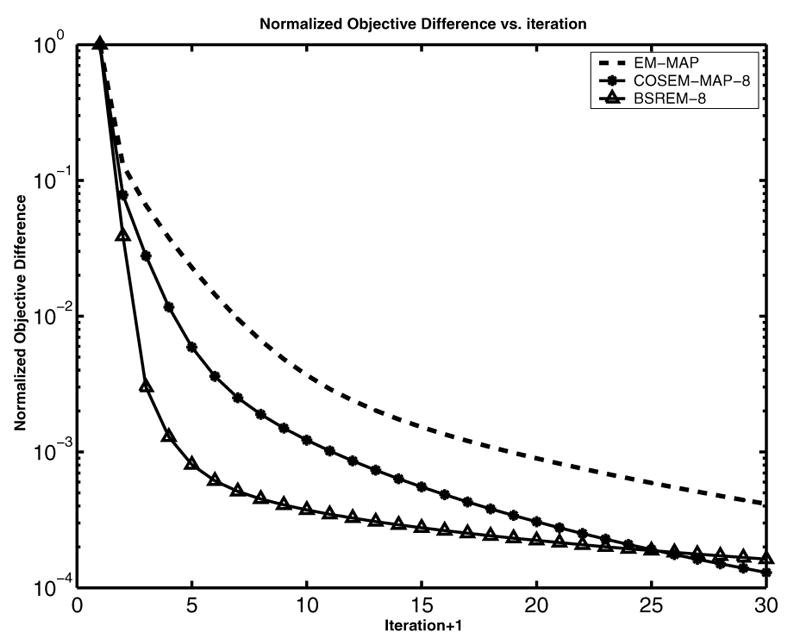

We plot NOD vs. k for each reconstruction in Fig. 2. At early iterations, the speed of COSEM-MAP lies between that of BSREM and EM-MAP, and by iteration 25, the NODs of COSEM-MAP and BSREM cross each other. We also plotted NOD(k) (not shown here) for COSEM-MAP with varying numbers of subsets (L = 4, 8, 16, 32, 64). After an initial speedup at L = 4, further speedup with increasing L is modest and is not as good as that observed with competing OS-type algorithms.

Figure 2.

Normalized objective difference for EM-MAP, COSEM-MAP, and BSREM at L=8.

5. Discussion

We have derived new convergent complete data ordered subsets algorithms for ML (COSEM-ML) and MAP (COSEM-MAP) reconstruction. It is straightforward to include randoms or scatter in the algorithms via an affine term s̄, corresponding to the model g ~ Poisson(ℋf + s̄). Detailed convergence proofs are presented in [17].

It is unknown whether COSEM converges monotonically. (By monotonic, we mean that the objective decreases at each outer loop iteration k.) In our many simulations with clinically realistic imaging parameters, we have not yet observed non-monotonicity.

Though the simulations in Sec. 4 are not extensive, the results are quite typical, and in our experience the speed gap relative to relaxation-based methods is not closed by simply choosing a different L or initial condition. One might consider speeding up COSEM while maintaining its desirable properties. In separate publications [22,18,23], we have demonstrated that this is possible with an “enhanced” COSEM (ECOSEM) algorithm for MAP and ML versions.

In ECOSEM, the basic strategy is to apply an automatically computed parameter that controls a trade-off between a COSEM update and a faster OSEM update at each sub-iteration, while maintaining convergence. Simulation results for ECOSEM-ML [18] show its speed to approach that of optimized RAMLA.

In sum, we have presented COSEM-ML and COSEM-MAP for emission tomography based on our notion of a complete data energy. Unlike their competitors, the COSEM algorithms need no user-specified relaxation schedule. While COSEM is slower than its competitors at early iterations, we are developing faster enhanced ECOSEM versions that are competitive in speed with RAMLA and BSREM.

Acknowledgments

This work was supported in part by NSC93-2320-B-182-028 NSC Taiwan, CMRPD32013 and CMRPD34005 from Chang Gung Research Fund, Taiwan, and by NIH-NIBIB RO1-EB02629.


References

- 1. Browne J, De Pierro A. IEEE Trans Med Imaging. 1996;15(5):687. doi: 10.1109/42.538946.

- 2. De Pierro A, Yamagishi M. IEEE Trans Med Imaging. 2001;20(4):280. doi: 10.1109/42.921477.

- 3. Ahn S, Fessler JA. IEEE Trans Med Imaging. 2003;22(5):613. doi: 10.1109/TMI.2003.812251.

- 4. Qi J, Huesman R. IEEE Trans Med Imaging. 2001;20(8):815. doi: 10.1109/42.938249.

- 5. Fessler JA. IEEE Trans Image Processing. 1996;5(3):493. doi: 10.1109/83.491322.

- 6. Shepp L, Vardi Y. IEEE Trans Med Imaging. 1982;MI-1:113. doi: 10.1109/TMI.1982.4307558.

- 7. Mumcuoglu EU, et al. IEEE Trans Med Imaging. 1994;13(4):687. doi: 10.1109/42.363099.

- 8. Bouman CA, Sauer K. IEEE Trans Image Processing. 1996;5(3):480. doi: 10.1109/83.491321.

- 9. Hudson HM, Larkin RS. IEEE Trans Med Imaging. 1994;13:601. doi: 10.1109/42.363108.

- 10. Tanaka E, Kudo H. Phys Med Biol. 2003;48(10):1405. doi: 10.1088/0031-9155/48/10/312.

- 11. Hsiao IT, et al. In: Proc SPIE. Sonka, Hanson, editors. Vol. 4684. 2002. p. 10.

- 12. Hsiao IT, et al. Proc IEEE Intl Symp on Biomedical Imaging. Washington DC: 2002. p. 409.

- 13. Mjolsness E, Garrett C. Neural Networks. 1990;3:651–669.

- 14. Neal R, Hinton GE. In: Learning in Graphical Models. Jordan MI, editor. Dordrecht: Kluwer; 1998. pp. 355–368.

- 15. Gunawardana AJR. PhD Thesis. Johns Hopkins University; 2001.

- 16. Khurd P, et al. IEEE Trans Nuc Sci. 2004;51:719.

- 17. Rangarajan A, et al. Tech Rep MIPL-03-1. SUNY Stony Brook, Dept Radiology, Medical Image Processing Laboratory; Dec, 2003.

- 18. Hsiao IT, et al. Phys Med Biol. 2004;49(11):2145. doi: 10.1088/0031-9155/49/11/002.

- 19. Lee M. PhD Thesis. Yale University; New Haven, CT: 1994.

- 20. Rangarajan A. Maximum Entropy and Bayesian Methods. Kluwer Academic Publishers; Dordrecht, Netherlands: 1996. p. 117.

- 21. De Pierro A. IEEE Trans Med Imaging. 1995;14(1):132. doi: 10.1109/42.370409.

- 22. Hsiao IT, et al. Proc VII Intl Conf. on Fully 3D Recon. in Rad. and Nucl. Med.; 2003.

- 23. Hsiao IT, et al. Proc 2004 IEEE Nuc. Sci. Sym. Med. Img. Conf., Rome, Italy; 2004.