Abstract

BACKGROUND

Internal medicine residents must be competent in advanced cardiac life support (ACLS) for board certification.

OBJECTIVE

To use a medical simulator to assess postgraduate year 2 (PGY-2) residents' baseline proficiency in ACLS scenarios and evaluate the impact of an educational intervention grounded in deliberate practice on skill development to mastery standards.

DESIGN

Pretest-posttest design without control group. After baseline evaluation, residents received 4, 2-hour ACLS education sessions using a medical simulator. Residents were then retested. Residents who did not achieve a research-derived minimum passing score (MPS) on each ACLS problem had more deliberate practice and were retested until the MPS was reached.

PARTICIPANTS

Forty-one PGY-2 internal medicine residents in a university-affiliated program.

MEASUREMENTS

Observational checklists based on American Heart Association (AHA) guidelines with interrater and internal consistency reliability estimates; deliberate practice time needed for residents to achieve minimum competency standards; demographics; United States Medical Licensing Examination Step 1 and Step 2 scores; and resident ratings of program quality and utility.

RESULTS

Performance improved significantly after simulator training. All residents met or exceeded the mastery competency standard. The amount of practice time needed to reach the MPS was a powerful (negative) predictor of posttest performance. The education program was rated highly.

CONCLUSIONS

A curriculum featuring deliberate practice dramatically increased the skills of residents in ACLS scenarios. Residents needed different amounts of training time to achieve minimum competency standards. Residents enjoy training, evaluation, and feedback in a simulated clinical environment. This mastery learning program and other competency-based efforts illustrate outcome-based medical education that is now prominent in accreditation reform of residency education.

Keywords: mastery learning, medical simulation, residency education

The American Board of Internal Medicine (ABIM) requires candidates for certification to be judged competent by their residency program director in 9 procedures including advanced cardiac life support (ACLS).1 Internal medicine residents frequently satisfy this requirement by completing an American Heart Association (AHA) ACLS provider course. These courses typically include 1 day of reading, lecture, and practical instruction about the recognition and treatment of ACLS events. Despite recommended ACLS renewal on a 2-year cycle,2 it has been shown that physicians, nurses, and laypersons display poor skill retention in shorter time periods.3–5 Several authors have argued that frequent refresher courses should be added to current training protocols to increase ACLS skill and knowledge retention.6,7

In addition to concerns about the adequacy of training in ACLS, another barrier to certifying residents as competent in these required procedures is the knowledge that in-hospital events prompting an ACLS response occur rarely. A recent review of 207 academic and community hospitals showed that the average number of annual events requiring an ACLS response was 54.1 per facility.8 Furthermore, data on in-hospital cardiac arrests from the University of Chicago demonstrated that the quality of resuscitation efforts varied and often did not meet published guidelines, even when performed by well-trained hospital personnel.9 Thus, internal medicine residents are expected to recognize and manage life-threatening events that occur infrequently, in which their performance is often not subject to audit or accountability assessment, and for which they may be poorly prepared and insufficiently practiced.

Medical education at all levels increasingly relies on simulation technology to provide a tool to increase learner knowledge, provide controlled, safe, and forgiving practice opportunities, and shape the acquisition of physicians' clinical skills.10–12 Simulations vary in fidelity from inert task trainers used to practice endotracheal intubation to standardized patients to sophisticated mannequins linked to computer systems that can mimic complex medical problems, display interacting physiologic and pharmacologic parameters, and present problems in “real time.”13 Combined with opportunities for controlled, deliberate practice with specific feedback,14,15 simulations are highly effective at promoting skill acquisition among medical learners15–17 and generalizing simulation-based learning into patient care settings.18,19 Gaining proficiency in clinical skills also gives rise to a sense of self-efficacy20 among medical learners, an affective outcome that accompanies mastery experiences.

Mastery learning,21 an especially stringent variety of competency-based education,22 means that learners acquire essential knowledge and skill, measured rigorously against fixed achievement standards, without regard to the time needed to reach the outcome. Mastery indicates a much higher level of performance than competence alone. In mastery learning, educational results are uniform, with little or no variation, while educational time varies among trainees. This approach to education has its origins in theory and data beginning at least 4 decades ago,23–28 and yet finds contemporary expression in statements about outcome-based medical education29 and calls for accreditation reform in internal medicine residency programs.30 To our knowledge, a genuine mastery learning model has never been used in U.S. medical education. This is the first empirical report of a mastery learning application in clinical medical education.

The study reported in this article amplifies a randomized-controlled trial (RCT) about ACLS skill acquisition among internal medicine residents reported previously by our research group.31 In the earlier RCT, we demonstrated a 38% improvement in ACLS skills after an 8-hour simulation-based educational intervention compared with clinical experience alone. This report presents original research data that extend the previous RCT in 2 ways: (a) it uses research-based mastery standards as floors for resident performance of 6 ACLS scenarios; and (b) unlike previous research where learning time was fixed and learning outcomes were varied, in this study each resident met or exceeded the minimum mastery standard for each procedure while learning time varied. The intent was to produce high achievement among internal medicine residents in ACLS procedures required for board certification with little or no outcome variation.

METHODS

Objectives and Design

The study used a pretest-posttest design without a control group32 to evaluate a simulation-based, mastery learning educational intervention designed to increase internal medicine residents' clinical skills in ACLS procedures. Primary measurements were obtained at baseline (pretest) and after the educational intervention (posttest).

Participants

Study participants were all 41 second-year residents at Northwestern University's Chicago campus internal medicine residency program from July 2004 to March 2005. The Northwestern University Feinberg School of Medicine Institutional Review Board approved the study. Participants provided informed consent before the baseline assessment.

The residency program is based at Northwestern Memorial Hospital and the Jesse Brown Veterans Affairs Medical Center. Resident teams respond to all cardiac arrests at both hospitals. Teams are composed of 2 to 3 internal medicine residents and representatives from the anesthesia, surgery, and nursing services. All residents complete an AHA-approved ACLS provider course at the beginning of residency training and again 2 years later. However, only second- and third-year residents are designated as code leaders. First-year residents respond to cardiac arrests but do not serve as code leaders.

Procedure

The study began in July 2004 at the mid-point of the residents' ACLS 2-year renewal cycle. All 41 residents were retained as an intact group throughout the 8-month study. The research procedure had 2 phases. First, all residents underwent baseline pretesting on a random sample of 3 of 6 ACLS scenarios. Second, the residents received a minimum of 4, 2-hour education sessions with deliberate practice of ACLS events and procedures using a medical simulator. After training, the residents were retested and expected to meet or exceed a minimum passing score (MPS) on each of 6 ACLS scenarios. Those who failed to meet the MPS on any ACLS scenario engaged in more deliberate skills practice until the mastery standard was reached. The amount of extra training time that these residents needed to achieve the MPSs was recorded.
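The retest-until-mastery logic described above can be sketched in a few lines. This is a minimal illustration, not the study's actual scheduling procedure: the function name `train_to_mastery`, the `take_test` callback, the 15-minute practice block, and the example scores are all hypothetical. Only the two MPS values are taken from Table 2.

```python
from typing import Callable, Dict


def train_to_mastery(
    take_test: Callable[[int], Dict[str, float]],
    mps: Dict[str, float],
    extra_block_minutes: int = 15,  # assumed size of one extra practice block
    max_rounds: int = 20,
) -> int:
    """Return the extra practice minutes used before every scenario meets its MPS.

    take_test(round_index) is a hypothetical callback that returns
    percent-correct scores per scenario (round 0 is the first posttest).
    """
    extra_minutes = 0
    for round_index in range(max_rounds):
        scores = take_test(round_index)
        failed = [name for name, cutoff in mps.items() if scores[name] < cutoff]
        if not failed:
            return extra_minutes  # mastery reached on every scenario
        extra_minutes += extra_block_minutes  # more deliberate practice, then retest
    raise RuntimeError("mastery not reached within max_rounds")


# Hypothetical resident: fails bradycardia once, then passes after one extra block.
mps = {"asystole": 74.3, "symptomatic bradycardia": 71.5}
attempts = [
    {"asystole": 80.0, "symptomatic bradycardia": 65.0},
    {"asystole": 82.0, "symptomatic bradycardia": 75.0},
]
extra = train_to_mastery(lambda i: attempts[i], mps)
```

The outcome (passing every scenario) is fixed, while the returned time varies by learner, which is the defining feature of the mastery model.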

Residents were scheduled according to their clinical responsibilities and the capacities of the simulator center. Efforts were made to standardize the schedule so that training sessions occurred weekly and testing occurred no more than 1 to 2 weeks after the last training session.

Educational Intervention

The intervention was designed to help residents acquire, shape, and reinforce the clinical skills needed to respond to ACLS situations. The intent was to engage the residents in deliberate practice involving high-fidelity simulations of clinical events. Deliberate practice involves (a) repetitive performance of psychomotor skills in a focused domain, coupled with (b) rigorous skills assessment that provides learners (c) specific, informative feedback, which results in increasingly (d) better skills performance in a controlled setting.14 Research on the acquisition of expertise consistently shows the importance of intense, deliberate practice in a focused domain, in contrast with so-called innate abilities (e.g., measured intelligence) for the acquisition, demonstration, and maintenance of mastery.14 Thus, practice, feedback, and correction in a supportive environment were the operational rules of the educational intervention.

The study was conducted in Northwestern Memorial Hospital's Patient Safety Simulation Center using the life-size Human Patient Simulator (HPS®) developed by Medical Education Technologies Inc., Sarasota, FL. Using computer software, the mannequin displays multiple physiologic and pharmacologic responses observed in ACLS situations. Features of the mannequin include responses of the respiratory system, pupils, and eyelids as well as heart sounds and peripheral pulses. Monitoring of noninvasive blood pressure, arterial oxygen saturation, electrocardiogram, and arterial blood pressure is available. Simulator personnel can also give the mannequin voice through a speaker in the occipital area by talking into a microphone in an adjacent control room.

Six case scenarios were developed to assess resident proficiency in ACLS techniques. The scenarios are based on case studies described in the ACLS Provider Manual used by the AHA as instructional materials for ACLS provider courses.33 The 6 scenarios (asystole, ventricular fibrillation, supraventricular tachycardia, ventricular tachycardia, symptomatic bradycardia, and pulseless electrical activity) were selected because they were the ones most commonly encountered by residents in actual ACLS situations during a 15-month monitoring period during 2003 to 2004. Scenarios began with a brief clinical history also based on content found in the AHA text.

Simulator sessions were standardized and labeled as teaching or testing sessions. Teaching sessions gave groups of 2 to 4 residents time to practice protocols and procedures and to receive structured education from simulator faculty. Debriefing allowed the residents to ask questions, review algorithms, and receive feedback. The 4 teaching sessions were presented in uniform order: (a) procedures—intubation, central line placement, pericardiocentesis, and needle decompression of tension pneumothorax; (b) pulseless arrhythmias—asystole, ventricular fibrillation, and pulseless electrical activity; (c) tachycardias—supraventricular and ventricular; and (d) bradycardias—second- and third-degree AV block.

Two residents were present at each baseline pretesting session. One resident and an experienced ACLS instructor were present for posttest measurement. While one resident directed resuscitation efforts, the other individual performed cardiopulmonary resuscitation (CPR) or other tasks but did not make management decisions or lead the arrest scenario. The presentation order of the ACLS scenarios was randomized within each testing session. As described in the ACLS guidelines, residents were expected to obtain a history, perform a physical examination, request noninvasive and invasive monitoring, order medications, procedures, and tests, and direct resuscitative efforts of other participants. Residents did not review the scenarios before the session and were not permitted to use written materials while directing the simulations.

Measurements

A checklist was developed for each of the 6 conditions from the ACLS algorithms using rigorous step-by-step procedures.34,35 Within the checklists, each patient assessment, clinical examination, medication, or other action was listed in the order recommended by the AHA. All items were given equal weight. Each item was scored dichotomously (0=not done or done incorrectly; 1=done correctly). Checklists were reviewed for completeness and accuracy by 3 of the authors (D.B.W., J.B., and V.J.S.), all of whom are ACLS providers. One author (V.J.S.) is an ACLS instructor.
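Because every item is dichotomous and equally weighted, a scenario score reduces to the percentage of items done correctly. A minimal sketch follows; the function name and the example responses are hypothetical, and the 17-item length matches the asystole checklist in Table 2.

```python
from typing import List


def checklist_percent(items: List[int]) -> float:
    """Percent correct for one checklist of equally weighted 0/1 items."""
    if not items:
        raise ValueError("checklist has no items")
    if any(value not in (0, 1) for value in items):
        raise ValueError("items must be scored 0 or 1")
    return 100.0 * sum(items) / len(items)


# Hypothetical resident: 13 of 17 asystole items done correctly.
asystole_items = [1] * 13 + [0] * 4
asystole_score = checklist_percent(asystole_items)
```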

The MPS for each ACLS procedure was determined in a previous research study involving 12 clinical experts using the Angoff and Hofstee standard setting methods.36 Each of the 6 MPSs is the average of the Angoff- and Hofstee-derived standards.
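As a rough illustration of how such a passing score can be assembled, the sketch below computes an Angoff-style standard in its common textbook form (each judge estimates, per item, the probability that a borderline examinee performs it correctly) and averages it with a Hofstee standard supplied as a number. The judge ratings and the Hofstee value are hypothetical; the exact procedure used by the expert panel is described in the cited standard-setting study, not here.

```python
from statistics import mean
from typing import List


def angoff_standard(judge_item_probs: List[List[float]]) -> float:
    """Percent-correct cut score: mean across judges of each judge's mean item probability."""
    per_judge = [100.0 * mean(probs) for probs in judge_item_probs]
    return mean(per_judge)


def combined_mps(angoff: float, hofstee: float) -> float:
    """Average of the two standards, as stated in the text."""
    return (angoff + hofstee) / 2.0


# Hypothetical ratings from 2 judges on a 4-item checklist.
judges = [[0.8, 0.7, 0.9, 0.6], [0.7, 0.7, 0.8, 0.6]]
angoff = angoff_standard(judges)           # about 72.5
mps_example = combined_mps(angoff, 76.0)   # 76.0 is an assumed Hofstee value
```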

Evaluations of each resident's adherence to the 6 ACLS protocols were recorded by 1 of the 2 faculty raters on the checklists during the testing sessions. A 20% random sample of the testing sessions was rescored by the other rater from videotapes to assess interrater reliability. The rescoring was blind to the results of the first checklist recording.

Demographic data were obtained from the participants, including age, gender, ethnicity, medical school, and scores on the United States Medical Licensing Examination (USMLE) Steps 1 and 2. Each resident's experience in managing patients with any of the 6 conditions was collected at each test occasion.

Primary outcome measures were posttest checklist scores. Secondary outcome measures were the total training time needed to reach the MPS for all 6 ACLS problems and a course evaluation questionnaire completed at the end of the study period.

Checklist length ranged from 16 to 31 items across the 6 ACLS simulations (Table 2).

Data Analysis

Checklist score reliability was estimated in 2 ways: (a) interrater reliability was calculated using the κ coefficient37 adjusted using the formula of Brennan and Prediger,38 while (b) internal consistency reliability was derived using Cronbach's α coefficient.39 Within-group differences from pretest (baseline) to posttest (outcome) were analyzed using paired t tests. The association of ACLS posttest performance with (a) pretest ACLS performance, (b) medical knowledge measured by USMLE Steps 1 and 2, and (c) whether additional training was needed to master the 6 ACLS procedures was assessed using multiple regression analysis.
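The two reliability estimates can be sketched as follows, using the standard formulas: the Brennan and Prediger coefficient fixes chance agreement at 1/q for q scoring categories, and Cronbach's α compares summed item variances with total-score variance. Function names and the example data are hypothetical, and sample variances are used throughout as an assumption about the computation.

```python
from statistics import variance
from typing import List, Sequence


def brennan_prediger_kappa(
    rater_a: Sequence[int], rater_b: Sequence[int], n_categories: int = 2
) -> float:
    """Chance-adjusted agreement with chance fixed at 1 / n_categories."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    p_obs = sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)
    p_chance = 1.0 / n_categories
    return (p_obs - p_chance) / (1.0 - p_chance)


def cronbach_alpha(scores: List[Sequence[int]]) -> float:
    """scores[r][i] is the 0/1 score of resident r on checklist item i."""
    k = len(scores[0])
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1.0 - sum(item_vars) / total_var)


# Hypothetical data: two raters agree on 9 of 10 items.
rater_1 = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
rater_2 = [1, 1, 0, 1, 1, 1, 1, 0, 1, 1]
kappa_n = brennan_prediger_kappa(rater_1, rater_2)

# Hypothetical data: 4 residents, 3 perfectly consistent items.
alpha = cronbach_alpha([[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]])
```

With two scoring categories the coefficient simplifies to 2 × (observed agreement) − 1, so 90% agreement gives 0.8.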

RESULTS

All residents consented to participate and completed the entire training protocol. The simulator operated without error or breakdown.

Table 1 presents demographic data about the 41 residents who participated in the study. These data provide a descriptive portrait of the physician trainees. A majority of the residents had little or no experience responding to actual ACLS situations during the first residency year.

Table 1.

Baseline Demographic Data

| Characteristic | PGY-2 Residents (n=41) |
|---|---|
| Age (y), mean (SD) | 27.39 (1.53) |
| Male | 24 (58.5%) |
| Female | 17 (41.5%) |
| White | 21 (51.2%) |
| Asian | 17 (41.5%) |
| Other | 3 (7.3%) |
| U.S. medical school graduate | 40 (97.6%) |
| Foreign medical school graduate | 1 (2.4%) |
| Actual cardiac arrests participated in during first year of training | |
| 0 to 5 | 10 (24.4%) |
| 5 to 10 | 21 (51.2%) |
| 10 to 15 | 7 (17.1%) |
| >15 | 3 (7.3%) |

PGY-2, postgraduate year 2

Table 2 reports descriptive statistics about resident performance on each of the 6 ACLS scenarios at the baseline pretest and at the posttest expressed in 2 ways. The Matched posttest presents resident performance on the same 3 ACLS problems that each resident received as a random sample at pretest. The Complete posttest displays resident performance on all 6 ACLS problems. The number of items on each checklist, interrater reliability coefficients expressed as the mean κ, Cronbach's α internal consistency reliability coefficients, and the MPS for each ACLS scenario are also given. Without exception, the reliabilities indicate a high degree of interrater agreement about resident scenario performance and good internal consistency.


Table 2.

Checklist Descriptive Statistics and Reliabilities*

| Scenario | Checklist items (n†) | Mean κn‡ | α§ | MPS | Pretest (3 stations, n=41), mean (SD) | Matched posttest (3 stations, n=41), mean (SD) | Complete posttest (6 stations, n=41), mean (SD) |
|---|---|---|---|---|---|---|---|
| Asystole | 17 | 0.82 | 0.53 | 74.3 | 72.3 (12.6) | 91.9 (6.3) | 92.4 (5.8) |
| Ventricular fibrillation | 31 | 0.86 | 0.82 | 76.4 | 75.4 (8.2) | 87.6 (6.5) | 86.0 (10.8) |
| Supraventricular tachycardia | 30 | 0.82 | 0.68 | 72.4 | 72.3 (11.2) | 89.0 (5.4) | 88.2 (6.0) |
| Ventricular tachycardia | 22 | 0.93 | 0.50 | 74.4 | 83.1 (8.8) | 92.9 (7.4) | 93.8 (6.7) |
| Symptomatic bradycardia | 19 | 0.79 | 0.32 | 71.5 | 59.7 (8.1) | 85.8 (8.6) | 84.0 (8.7) |
| Pulseless electrical activity | 16 | 0.84 | 0.43 | 76.6 | 75.6 (10.3) | 90.1 (5.9) | 89.1 (5.4) |

*Tabular entries=percentage correct.

†n=number of checklist items.

‡Mean κn=mean kappa interrater reliability coefficient.

§α=Cronbach's alpha internal consistency coefficient.

MPS, minimum passing score.
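As a quick check on Table 2, the group means can be compared with each scenario's MPS. The sketch below uses only values from the table; note that it compares group means, not individual residents' scores, and the variable names are illustrative.

```python
# scenario: (MPS, pretest mean, complete posttest mean), all from Table 2
table2 = {
    "asystole": (74.3, 72.3, 92.4),
    "ventricular fibrillation": (76.4, 75.4, 86.0),
    "supraventricular tachycardia": (72.4, 72.3, 88.2),
    "ventricular tachycardia": (74.4, 83.1, 93.8),
    "symptomatic bradycardia": (71.5, 59.7, 84.0),
    "pulseless electrical activity": (76.6, 75.6, 89.1),
}

# Scenarios whose group mean met or exceeded the MPS at each occasion.
met_at_pretest = [name for name, (mps, pre, _) in table2.items() if pre >= mps]
met_at_posttest = [name for name, (mps, _, post) in table2.items() if post >= mps]

pretest_share = 100.0 * len(met_at_pretest) / len(table2)
posttest_share = 100.0 * len(met_at_posttest) / len(table2)
```

At pretest only the ventricular tachycardia mean clears its MPS (1 of 6 scenarios, about 17%), whereas all 6 Complete posttest means do.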

Thirty-three of the 41 medicine residents (80.5%) achieved mastery within the standard 8-hour training and deliberate practice ACLS curriculum. The remaining 8 residents (19.5%) needed extra time to reach mastery ranging from 15 minutes to 1 hour. Only 5 residents needed a full extra hour of deliberate practice to reach all 6 ACLS scenario MPSs.

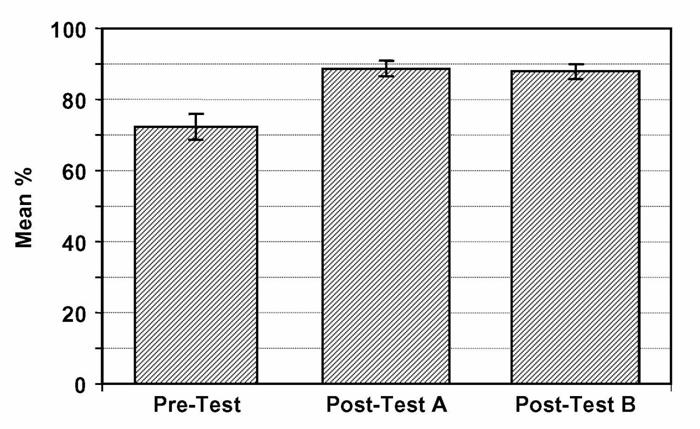

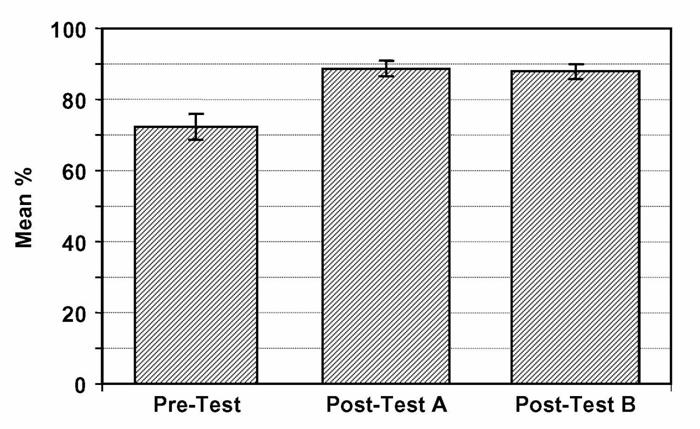

The aggregate research outcomes expressed as percentages31 for all 6 ACLS scenarios are reported as a figure in the Appendix (online). This figure shows that at the baseline pretest the residents' mean percent correct for the ACLS scenarios was 72.2% (95% confidence interval [CI], 71.0% to 73.4%). The Matched and Complete posttest scores were statistically indistinguishable (Matched=88.7%; 95% CI, 88.0% to 89.4% vs Complete=87.9%; 95% CI, 87.2% to 88.6%). The pretest-to-posttest contrast in overall ACLS performance represents a 24% improvement, a highly significant difference (P≤.0001). Results from the regression analysis indicate that neither pretest ACLS scores nor USMLE Step 1 and 2 scores were significant predictors of posttest performance. However, the need for additional deliberate practice among those who failed to reach the overall mastery standard on the posttest was a powerful negative predictor of posttest performance. For the Matched posttest, b=−7.1 (95% CI, −11.3 to −2.9; P=.002; r²=.27). For the Complete posttest, b=−8.2 (95% CI, −11.8 to −4.5; P<.0001; r²=.37). On average, the predicted posttest scores of residents who required additional practice to achieve mastery were 7.1 and 8.2 percentage points lower than those of their peers for the Matched and Complete posttests, respectively. Thus, in both cases the need for extra deliberate practice was associated with relatively lower posttest scores, even though all residents met or exceeded the rigorous ACLS minimum passing standards.
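The interpretation of b as a difference between groups follows from how least squares treats a 0/1 predictor: with a single binary predictor, the slope equals the difference in group means and the intercept equals the mean of the reference group. The sketch below illustrates this with made-up scores; it is a single-predictor simplification, whereas the study's model also included pretest and USMLE scores.

```python
from statistics import mean
from typing import Sequence, Tuple


def ols_slope_intercept(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical data: 1 = needed extra deliberate practice, 0 = did not.
needed_extra = [0, 0, 0, 0, 1, 1]
posttest = [90.0, 88.0, 92.0, 90.0, 82.0, 84.0]

b, intercept = ols_slope_intercept(needed_extra, posttest)
group_gap = mean(posttest[4:]) - mean(posttest[:4])  # 83.0 - 90.0
```

Here the slope and the gap in group means are both −7 percentage points, which is how a coefficient such as b=−7.1 can be read as a lower predicted posttest score for the extra-practice group.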

Follow-up data from 40 of the 41 residents on a course evaluation questionnaire about the ACLS training experience were uniformly positive. Responses were recorded on a Likert scale where 1=strongly disagree, 2=disagree, 3=uncertain, 4=agree, and 5=strongly agree (Table 3). The questionnaire data show that residents strongly agree that practice with the medical simulator boosts their clinical skill and self-confidence, that practice with the simulator should be a required component of residency education, and that the medical simulator prepared them to be a code leader better than the AHA ACLS provider course. The data also reveal resident uncertainty that practice with the medical simulator has more educational value than patient care. This underscores the recognition that deliberate practice with medical simulators complements but does not replace patient care in graduate medical education.10–19

Table 3.

ACLS Course Evaluation Sample Items

| Item | Mean | SD |
|---|---|---|
| All Residents (n=40) | | |
| 1. Practice with the medical simulator boosts my clinical skill | 4.6 | 0.8 |
| 2. It is ok to make clinical mistakes using the medical simulator | 4.1 | 1.3 |
| 3. I receive useful educational feedback from the medical simulator | 4.4 | 1.2 |
| 4. Practice with the medical simulator boosts my clinical self-confidence | 4.4 | 0.9 |
| 5. Practice with the medical simulator has more educational value than patient care | 3.1 | 1.6 |
| 6. The simulator center staff is competent | 4.5 | 0.9 |
| 7. Practice sessions using the medical simulator should be a required component of residency education | 4.4 | 1.3 |
| 8. Practice with the medical simulator has helped prepare me to be a code leader better than clinical experience alone | 4.4 | 0.9 |
| 9. The medical simulator has helped prepare me to be a code leader better than the ACLS course I took | 4.7 | 0.6 |
| Extra Practice Residents (n=8) | | |
| 1. The additional training I had was necessary | 3.9 | 1.1 |
| 2. My questions were answered sufficiently | 4.5 | 1.0 |
| 3. I was angry that I had to return for more training | 1.8 | 1.0 |
| 4. I was embarrassed that I had to return for more training | 2.1 | 1.5 |
| 5. I felt the additional training increased my ability to lead a hospital code | 4.2 | 1.3 |

Response scale: 1=strongly disagree, 2=disagree, 3=uncertain, 4=agree, 5=strongly agree.

ACLS, advanced cardiac life support.

The 8 residents who needed extra practice time to reach the mastery standard provided additional positive endorsement about the educational experience. They acknowledged that the additional training time was needed, were not angry or embarrassed about returning for more training, and believed that the extra practice increased their ability to lead a hospital code (Table 3).

COMMENT

This study illustrates the application of a mastery-learning model to skill acquisition in internal medicine residency training. Use of a computer-enhanced mannequin in a structured educational program that features a combination of clear learning objectives, opportunities for deliberate practice with feedback, rigorous outcome measurement, and high achievement standards yielded large and consistent improvements in residents' ACLS skills. As shown in Table 2, despite fulfillment of an AHA provider course and clinical experience, the residents met the MPS for only 1 of the 6 ACLS skills (17%) before the educational intervention. By contrast, after the mastery-learning program, all of the residents met or exceeded the MPS for 100% of the skills. Given recent concerns about the quality of resuscitation during in-hospital cardiac arrests and its adherence to published guidelines,9 programs such as this could be a useful adjunct to current ACLS certification procedures. As shown by our experience to date with several classes of residents (n=118), this model is effective, feasible, and practical for use in internal medicine residency training. In addition, the residents have consistently enjoyed participating in the mastery educational experience.

Our data also demonstrate that medical knowledge, measured by USMLE Step 1 and 2 scores, had no correlation with ACLS skill acquisition measured by checklists. This reinforces findings from our previous randomized trial of ACLS skill acquisition31 and supports the difference between professional and academic achievement described in earlier research.25,40 Pretest skill performance also had no correlation with posttest results. However, the amount of deliberate practice needed to reach the mastery standard was a powerful negative predictor of posttest scores. Even though all residents met or exceeded the 6 MPSs, the need for more deliberate practice is associated with a relatively lower posttest performance.

A key question about this and other simulation-based studies is whether performance in a highly controlled simulator environment will generalize to variable clinical practice settings. This is especially the case for critical yet infrequent clinical events like in-hospital ACLS events.8 Several studies have demonstrated a simulator to clinic relationship18,19; yet, the generalizability of simulator training to clinical practice warrants further study. Studies that aim to generalize skill acquisition in simulator environments to in vivo clinical practice should at minimum contain large and representative samples of medical trainees. However, such studies must also attend to such basic principles of causal generalization from laboratory to life as surface similarity, ruling out irrelevancies, making discriminations, interpolation and extrapolation, and causal explanation.32

This project is an operational expression of outcome-based education now at the heart of calls for reform of internal medicine residency program accreditation. It shows that shopworn expressions about medical skill acquisition such as “see one, do one, teach one” are inadequate and obsolete. Goroll et al.30 argue on behalf of the Residency Review Committee for Internal Medicine of the Accreditation Council for Graduate Medical Education for “… a new outcomes-based accreditation … [that] shifts residency program accreditation from external audit of educational process to continuous assessment and improvement of clinical competence” (p. 902). Our study is an objective, data-based demonstration of the proposed accreditation model. Further work at our institution is ongoing to expand the mastery model to include other required procedures, document compliance with published guidelines in actual ACLS events, and assess long-term retention of skill following initial simulator training.

This study has several limitations. It was conducted within one residency program at a single academic medical center. The sample size (n=41) was relatively small. The computer-enhanced simulation mannequin was used for both education and testing, potentially confounding the events. These limitations do not, however, diminish the pronounced impact that the simulation-based training produced among the medical residents.

This article also complies with recommendations for reporting nonrandomized evaluations of behavioral and public health interventions contained in the TREND statement.41

In conclusion, our study demonstrates the ability of deliberate practice using a medical simulator to produce mastery performance on ACLS scenarios at high achievement standards among internal medicine residents. The project was implemented successfully in a complex residency schedule, received high ratings from learners, and complies with new residency program accreditation requirements because it provides reliable assessments of residents' ACLS competence.

Acknowledgments

We thank the Northwestern University internal medicine residents for their dedication to patient care and education. We acknowledge Charles Watts, MD, and J. Larry Jameson, MD, PhD, for their support and encouragement of this work. We are indebted to S. Barry Issenberg, MD, and Emil R. Petrusa, PhD, for their editorial comments.

Financial Support: Excellence in Academic Medicine Act under the State of Illinois Department of Public Aid administered through Northwestern Memorial Hospital.

Supplementary Material

The following supplementary material is available for this article online:

REFERENCES

- 1.American Board of Internal Medicine. Requirements for certification in internal medicine. [July 7, 2005]; Available at http://www.abim.org/cert/policiesim.shtm#6.

- 2.Cummins RO, Sanders A, Mancini E, Hazinski MF. In-hospital resuscitation: a statement for healthcare professionals from the American Heart Association Emergency Cardiac Care Committee and the Advanced Cardiac Life Support, Basic Life Support, Pediatric Resuscitation, and Program Administration Subcommittees. Circulation. 1997;95:2211–2. doi: 10.1161/01.cir.95.8.2211. [DOI] [PubMed] [Google Scholar]

- 3.Kaye W, Mancini ME, Rallis SF. Advanced cardiac life support refresher course using standardized objective-based mega code testing. Crit Care Med. 1987;15:55–60. doi: 10.1097/00003246-198701000-00013. [DOI] [PubMed] [Google Scholar]

- 4.O'Steen DS, Kee CC, Minick MP. The retention of advanced cardiac life support knowledge among registered nurses. J Nurs Staff Dev. 1996;12:66–72. [PubMed] [Google Scholar]

- 5.Moser DK, Dracup K, Guzy PM, Taylor SE, Breu C. Cardiopulmonary resuscitation skills retention in family members of cardiac patients. Am J Emer Med. 1990;8:498–503. doi: 10.1016/0735-6757(90)90150-x. [DOI] [PubMed] [Google Scholar]

- 6.Makker R, Gray-Siracusa K, Evers M. Evaluation of advanced cardiac life support in a community teaching hospital by use of actual cardiac arrests. Heart Lung. 1995;24:116–20. doi: 10.1016/s0147-9563(05)80005-6. [DOI] [PubMed] [Google Scholar]

- 7.Kaye W. Research on ACLS training—which methods improve skill and knowledge retention? Respir Care. 1995;40:538–46. [PubMed] [Google Scholar]

- 8.Peberdy MA, Kaye W, Ornato JP, et al. Cardiopulmonary resuscitation of adults in the hospital: a report of 14,720 cardiac arrests from the National Registry of Cardiopulmonary Resuscitation. Resuscitation. 2003;58:297–308. doi: 10.1016/s0300-9572(03)00215-6. [DOI] [PubMed] [Google Scholar]

- 9.Abella BS, Alvarado JP, Myklebust H, et al. Quality of cardiopulmonary resuscitation during in-hospital cardiac arrest. JAMA. 2005;293:305–10. doi: 10.1001/jama.293.3.305. [DOI] [PubMed] [Google Scholar]

- 10.Issenberg SB, McGaghie WC, Hart IR, et al. Simulation technology for health care professional skills training and assessment. JAMA. 1999;282:861–6. doi: 10.1001/jama.282.9.861. [DOI] [PubMed] [Google Scholar]

- 11.Simulators in Critical Care Education and Beyond. Des Plaines, IL: Society of Critical Care Medicine; 2004. [Google Scholar]

- 12.Innovative Simulations for Assessing Professional Competence. Chicago: Department of Medical Education, University of Illinois College of Medicine; 1999. [Google Scholar]

- 13.Gaba DM. Human work environment and simulators. In: Miller RD, editor. Anesthesia. 5. Philadelphia: Churchill Livingstone; 2000. pp. 2613–68. [Google Scholar]

- 14.Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med. 2004;79(suppl):S70–81. doi: 10.1097/00001888-200410001-00022. [DOI] [PubMed] [Google Scholar]

- 15.Issenberg SB, McGaghie WC, Petrusa ER, Gordon DL, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27:10–28. doi: 10.1080/01421590500046924. [DOI] [PubMed] [Google Scholar]

- 16.Boulet JR, Murray D, Kras J, et al. Reliability and validity of a simulation-based acute care skills assessment for medical students and residents. Anesthesiology. 2003;99:1270–80. doi: 10.1097/00000542-200312000-00007.

- 17.Issenberg SB, McGaghie WC, Gordon DL, et al. Effectiveness of a cardiology review course for internal medicine residents using simulation technology and deliberate practice. Teach Learn Med. 2002;14:223–8. doi: 10.1207/S15328015TLM1404_4.

- 18.Ewy GA, Felner JM, Juul D, et al. Test of a cardiology patient simulator in fourth-year electives. J Med Educ. 1987;62:738–43. doi: 10.1097/00001888-198709000-00005.

- 19.Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance. Ann Surg. 2002;236:458–64. doi: 10.1097/00000658-200210000-00008.

- 20.Bandura A. Self-Efficacy: The Exercise of Control. New York: W.H. Freeman; 1997.

- 21.Mastery Learning: Theory and Practice. New York: Holt, Rinehart and Winston; 1971.

- 22.McGaghie WC, Miller GE, Sajid A, Telder TV. Competency-Based Curriculum Development in Medical Education. Public Health Paper No. 68. Geneva, Switzerland: World Health Organization; 1978.

- 23.Carroll JB. A model of school learning. Teach Coll Rec. 1963;64:723–33.

- 24.Keller FS. “Good-bye, teacher ….” J Appl Behav Anal. 1968;1:79–89. doi: 10.1901/jaba.1968.1-79.

- 25.McClelland DC. Testing for competence rather than for “intelligence.” Am Psychol. 1973;28:1–14. doi: 10.1037/h0034092.

- 26.Bloom BS. Time and learning. Am Psychol. 1974;29:682–8.

- 27.Bloom BS. Human Characteristics and School Learning. New York: McGraw-Hill; 1976.

- 28.Kulik JA, Kulik C-LC, Cohen PA. A meta-analysis of outcome studies of Keller's personalized system of instruction. Am Psychol. 1979;34:307–18.

- 29.Harden RM. Developments in outcome-based education. Med Teach. 2002;24:117–20. doi: 10.1080/01421590220120669.

- 30.Goroll AH, Sirio C, Duffy FD, et al. A new model for accreditation of residency programs in internal medicine. Ann Intern Med. 2004;140:902–9. doi: 10.7326/0003-4819-140-11-200406010-00012.

- 31.Wayne DB, Butter J, Siddall VJ, et al. Simulation-based training of internal medicine residents in advanced cardiac life support protocols: a randomized trial. Teach Learn Med. 2005;17:210–6. doi: 10.1207/s15328015tlm1703_3.

- 32.Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston: Houghton Mifflin; 2002.

- 33.ACLS Provider Manual. Dallas: American Heart Association; 2001.

- 34.McGaghie WC, Renner BR, Kowlowitz V, et al. Development and evaluation of musculoskeletal performance measures for an objective structured clinical examination. Teach Learn Med. 1994;6:59–63.

- 35.Stufflebeam DL. The Checklists Development Checklist. Western Michigan University Evaluation Center. July 2000. Available at http://www.wmich.edu/evalctr/checklists/cdc.htm. Accessed March 15, 2005.

- 36.Wayne DB, Fudala MJ, Butter J, et al. Comparison of two standard setting methods for advanced cardiac life support training. Acad Med. 2005;80(suppl):S63–S66. doi: 10.1097/00001888-200510001-00018.

- 37.Fleiss JL. Statistical Methods for Rates and Proportions. 2nd ed. New York: John Wiley & Sons; 1981.

- 38.Brennan RL, Prediger DJ. Coefficient kappa: some uses, misuses, and alternatives. Educ Psychol Meas. 1981;41:687–99.

- 39.Cortina JM. What is coefficient alpha? An examination of theory and applications. J Appl Psychol. 1993;78:93–104.

- 40.Samson GE, Graue ME, Weinstein T, Walberg HJ. Academic and occupational performance: a quantitative synthesis. Am Educ Res J. 1984;21:311–21.

- 41.Des Jarlais DC, Lyles C, Crepaz N, and the TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: the TREND statement. Am J Public Health. 2004;94:361–6. doi: 10.2105/ajph.94.3.361.

Associated Data

Supplementary Materials