Over the last decade, health literacy has become a vibrant area of research. Investigators have elucidated the prevalence of limited health literacy and the relationship of limited health literacy with patients' knowledge, health behaviors, health outcomes, and medical costs, as summarized in reports by several prominent organizations.1–4 This special issue of JGIM devoted to the topic of health literacy is further evidence of the wide and diverse audience interested in this field.

Ironically, as the field of health literacy has expanded in scope and depth, the term “health literacy” itself has come to mean different things to various audiences and has become a source of confusion and debate. In 1999, the American Medical Association's Ad Hoc Committee on Health Literacy defined health literacy as “the constellation of skills, including the ability to perform basic reading and numerical tasks required to function in the health care environment,” including “the ability to read and comprehend prescription bottles, appointment slips, and other essential health-related materials.”2 The definitions used by Healthy People 2010,5 and the Institute of Medicine (IOM)3 were similar: “The degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.” These definitions present health literacy as a set of individual capacities that allow the person to acquire and use new information. These capacities are relatively stable over time, although they may improve with educational programs or decline with aging or pathologic processes that impair cognitive function.6

Others have argued that if health literacy is the ability to function in the health care environment, it must depend upon characteristics of both the individual and the health care system. From this perspective, health literacy is a dynamic state of an individual during a health care encounter. An individual's health literacy may vary depending upon the medical problem being treated, the health care provider, and the system providing the care.

Some also view health knowledge as part of health literacy. For example, the IOM expert panel divided the domain of “health literacy” into (1) cultural and conceptual knowledge, (2) oral literacy, including speaking and listening skills, (3) print literacy, including writing and reading skills, and (4) numeracy. The American College of Physicians Foundation recently advertised a set of informational cards that physicians could give to their patients to “raise their level of health literacy.”7 From this perspective, health literacy is an achieved level of knowledge or proficiency that depends upon an individual's capacity (and motivation to learn) and the resources provided by the health care system.

All these perspectives represent reasoned ideas based on different orientations to the problem. Nevertheless, the lack of a shared meaning for the central term in a field is clearly problematic. For example, it has caused confusion and disagreement between the authors of research articles or grant applications and the reviewers who evaluate them. It is not surprising that experts disagree about how health literacy should be measured, because they may not be talking about the same underlying construct. If health literacy is a capacity of a person, measures of an individual's reading ability and vocabulary are appropriate. In contrast, if health literacy depends on the relationship between individual communication capacities, the health care system, and the broader society, measures at the individual level are clearly inadequate. If knowledge is part of the definition of health literacy, it too must be measured.

To address this problem, I will first present a conceptual model of the domains of health literacy and the relationship of health literacy with health outcomes, and suggest possible terms that may be used to describe these domains. This model is designed to supplement the models of health literacy presented within the IOM report to allow more specific and precise discussion of measures of health literacy. I hope this will serve as a first step in a longer process of achieving a shared terminology for researchers and other experts in the field, as recommended in the IOM report. I will then review available measures of these domains and discuss which measures may be most useful in research and clinical practice.

Conceptual Model

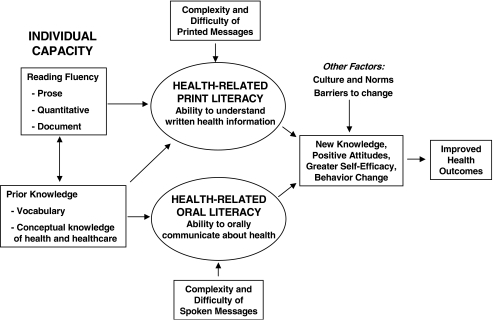

As illustrated in Figure 1, the first domain within the model is individual capacity: the set of resources that a person has to deal effectively with health information, health care personnel, and the health care system. For the purposes of this discussion, I focus on 2 subdomains of capacity: reading fluency and prior knowledge, which includes vocabulary and conceptual knowledge of health and health care.

FIGURE 1. Conceptual model of the relationship between individual capacities, health-related print and oral literacy, and health outcomes

Reading fluency is the ability to mentally process written materials and form new knowledge. The first National Adult Literacy Survey (NALS) divided reading fluency into 3 skill sets: (1) the ability to read and understand text (prose literacy), (2) the ability to locate and use information in documents (document literacy), and (3) the ability to apply arithmetic operations and use numerical information in printed materials (quantitative literacy).8 Other investigators have used the term “numeracy” for the latter skill.9–11 In the Test of Functional Health Literacy in Adults (TOFHLA), the “numeracy” items actually assess the NALS subdomains of quantitative and document literacy.12,13 Other “numeracy” tests focus on an individual's ability to understand probabilities and percentages.9

Prior knowledge (i.e., an individual's knowledge at the time before reading health-related materials or speaking to a health care professional) is composed of vocabulary (knowing what individual words mean) and conceptual knowledge (understanding aspects of the world, e.g., how different parts of the body work or what cancer is and how it injures the body). Vocabulary is distinct from reading fluency, although the 2 are very highly correlated because people acquire much of their vocabulary through reading. Although the more expansive definitions of health literacy discussed above (e.g., the IOM report) include conceptual knowledge as part of health literacy, this model views conceptual knowledge as a resource that a person has that facilitates health literacy but does not in itself constitute health literacy.

Reading fluency allows individuals to expand their vocabulary and gain conceptual knowledge. The converse is also true: vocabulary and background knowledge of the general topics covered in written materials improve an individual's comprehension of these materials, as shown by the double-headed arrow between the 2 individual capacity boxes in Figure 1. In other words, it is easier to read and comprehend materials that contain familiar vocabulary and concepts. Two individuals with similar general reading fluency may therefore differ in their ability to read and understand health-related material because of differences in their baseline knowledge of health vocabulary and concepts.

The notion that individuals will understand written and spoken communication better if they are familiar with the words and concepts presented makes sense. However, we do not know how much, on average, an individual's (1) general reading fluency, vocabulary, and knowledge differ from (2) the same person's health-related reading fluency, vocabulary, and knowledge. Nevertheless, the distinction between general reading fluency and health-related reading fluency is important for research because a measure of an individual's ability to read and understand health-related materials is likely to be more closely related to health outcomes than a measure of general literacy. Thus, a study that uses a measure of health-related reading fluency as a predictor variable should have greater power to detect associations with health outcomes than a study that uses a measure of general reading fluency.
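To make the power argument concrete, the sketch below uses the standard Fisher z approximation to estimate how many participants are needed to detect a correlation r between a literacy measure and a health outcome (two-sided α = 0.05, 80% power). The correlations of 0.2 and 0.3 are hypothetical values chosen only for illustration, not estimates from any study cited here, and the function name is likewise illustrative.

```python
import math
from statistics import NormalDist


def n_for_correlation(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size to detect a Pearson correlation r (two-sided test).

    Fisher z approximation: n = ((z_{1-alpha/2} + z_{power}) / atanh(r))**2 + 3.
    """
    if not 0 < abs(r) < 1:
        raise ValueError("r must be nonzero and strictly between -1 and 1")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_beta = z.inv_cdf(power)           # standard normal quantile for the desired power
    n = ((z_alpha + z_beta) / math.atanh(abs(r))) ** 2 + 3
    return math.ceil(n)


# Hypothetical correlations with a health outcome: a general reading measure (r = 0.2)
# versus a health-related reading measure (r = 0.3).
for label, r in [("general reading fluency", 0.2), ("health-related reading fluency", 0.3)]:
    print(f"{label}: r = {r}, n ≈ {n_for_correlation(r)}")
```

Under these assumptions, roughly 194 participants are needed when r = 0.2 but only about 85 when r = 0.3, which is the sense in which a predictor more closely tied to the outcome buys statistical power.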

The second major domain in the model is health literacy. The IOM report divided health literacy into health-related print literacy and health-related oral literacy, although it is unclear to what degree print and oral literacy are truly distinct. Health-related print and oral literacy depend upon an individual's health-related reading fluency, health-related vocabulary, familiarity with the health concepts presented in materials or discussed in conversation, and the complexity and difficulty of the printed and spoken messages that a person encounters in the health care environment. Thus, “health literacy” is determined by characteristics of both the individual and the health care system. Health literacy is one of many factors (e.g., culture and social norms, health care access) that lead to the acquisition of new knowledge, more positive attitudes, greater self-efficacy, positive health behaviors, and better health outcomes.

Ideal Measurement of Health Literacy

Because health literacy in this model is determined by characteristics of an individual and that individual's environment (i.e., public health messages and health care setting), it is easier to conceptualize than to measure directly. It would be theoretically possible to measure individuals' reading fluency, vocabulary, and health knowledge and simultaneously measure the difficulty of written health materials and the complexity of health professionals' speech that these individuals would be likely to encounter in their unique health care environments. The match (or more likely, the mismatch) between individuals' reading fluency, vocabulary, background knowledge, and their oral and written communication demands would then be a measure of each person's health literacy. However, this type of comprehensive direct measurement of health literacy is impractical for almost all projects.

Measuring Individual Capacity

Although it is difficult to measure reading demands comprehensively, there are several reasonably good measures of individual reading capacities. If all public health and health care systems place similarly high reading and oral communication demands on individuals, then a measure of an individual's capacity will accurately reflect the person's health literacy (i.e., his or her ability to understand and use the health-related materials that the person will probably encounter in the future). In other words, measures of individuals' capacities are probably reasonably accurate surrogates for their health-related print literacy.

The most widely used measures are the Rapid Estimate of Adult Literacy in Medicine (REALM)14 and the Test of Functional Health Literacy in Adults (TOFHLA).12,13 Neither test is a comprehensive assessment of an individual's capacities. Rather, the tests measure selected domains that are thought to be markers for an individual's overall capacity. The REALM is a 66-item word recognition and pronunciation test that measures the domain of vocabulary. The TOFHLA measures reading fluency. It consists of a reading comprehension section (a 50-item test using the modified Cloze procedure) to measure prose literacy and a “numeracy” section with 17 items assessing individuals' capacity to read and understand actual hospital documents and labeled prescription vials. Although the 2 tests measure different capacities, they are highly correlated (Spearman's correlation coefficient 0.80).15 Both tests are also highly correlated with a general vocabulary test, the Wide Range Achievement Test, Revised (WRAT-R).16
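The Spearman coefficient reported for the REALM and the TOFHLA is the Pearson correlation computed on ranks rather than on raw scores, which suits instruments whose scales are not directly comparable. A minimal pure-Python sketch follows, in which tied scores receive the average of the ranks they span; the function names and the six score pairs are hypothetical toy values, not data from the cited studies.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with the one at sorted position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Toy scores for six hypothetical respondents (REALM 0 to 66, TOFHLA 0 to 100).
realm = [20, 35, 44, 52, 60, 66]
tofhla = [30, 41, 70, 55, 88, 95]
print(round(spearman_rho(realm, tofhla), 2))  # prints 0.94
```

Because only the ordering matters, a single respondent whose TOFHLA score is out of step with his or her REALM score lowers rho only modestly, as in the toy example.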

Baseline conceptual knowledge is another resource an individual possesses when trying to understand new health information. Studies have shown that most people in the United States have a poor understanding of science.17 It would be very helpful to have a comprehensive test of the general public's conceptual knowledge about health and illness to help plan health education programs, public health messages, and patient education. However, measuring general health knowledge is obviously challenging, and no instrument has been widely used. It is not clear whether it would be valuable to measure individuals' general health knowledge in clinical research or for patient care. In some research studies and clinical settings, it may be helpful to measure specific aspects of baseline conceptual knowledge to understand a patient's learning needs before an educational program. For researchers, knowledge is more often viewed narrowly as an outcome (or intermediate outcome) that will be measured to see whether an intervention improved very specific knowledge, attitudes, and behaviors, as shown in the box on the right-hand side of Figure 1. To date, measures have focused on specific conditions such as asthma, hypertension, diabetes, and heart failure.18–21 These measures of disease-specific knowledge generally show a direct, linear correlation with measures of reading fluency.

Are More Comprehensive Measures of Capacity Needed?

It is essential that we learn more about how well Americans can read and comprehend health-related materials that they encounter in everyday life. We need to understand which written health materials need to be simplified (e.g., nutrition labels on foods) and which health concepts need to be taught more effectively in school (e.g., the basic concepts of cardiovascular anatomy and disease that individuals are likely to encounter during their lifetime). For this purpose, tests like the TOFHLA and the REALM are clearly inadequate, and more comprehensive measures are needed. The NALS contains some health-related questions, which have been compiled to create the Health Activities Literacy Scale (HALS).22 The HALS includes prose, quantitative, and document items in 5 health-related areas: health promotion, health protection, disease prevention, health care and maintenance, and systems navigation. Because the HALS is new, much is still unknown about its properties. However, additional items will likely need to be developed and incorporated into the HALS or into new instruments to fully cover all key areas for population health and medical care.

Despite its potential value for understanding health-related reading capacities at the population level, the length of the HALS will prohibit its use in most research studies. The full-length test yields a score from 0 to 500 in 5-point increments and takes approximately 1 hour to complete. The “locator” version of the HALS takes 30 to 40 minutes to complete and categorizes people into level 1 (lowest), 2, or 3 or higher without providing an actual score. This is substantially longer than either the REALM or the short version of the TOFHLA (S-TOFHLA). Moreover, we do not know whether increasing the comprehensiveness and length of tests to measure individual capacity will translate into greater predictive value and discriminatory ability. The REALM and the TOFHLA have been shown to predict knowledge, behaviors, and outcomes,2,3,23–28 and studies that use them should not be criticized because they are not comprehensive measures. Additional studies are needed to compare the REALM and the TOFHLA with more comprehensive tests such as the HALS to better understand their limitations for research.

Practical Tools for Clinical Settings: Short, Shorter, Shortest

While there are continued calls for more comprehensive measures of health literacy, there is just as much interest in short, practical measures that can identify patients with limited capacities in clinical settings.29 Investigators have adopted 2 approaches. The first is to develop shorter tests of reading capacities. A short version of the TOFHLA (the S-TOFHLA) is available that takes approximately 7 minutes to complete.12 Although the original REALM was already quite short, requiring less than 3 minutes to complete the 66 items, Bass et al.30 identified 8 REALM items that had a correlation of 0.64 with the WRAT and were reasonably accurate at identifying individuals with low reading capacities as defined by the WRAT. However, only 157 patients participated, and these findings have not been replicated.

Most recently, Weiss et al.31 developed the Newest Vital Sign (NVS), consisting of a nutrition label for ice cream with 6 questions about the information contained in the label. The NVS takes approximately 3 minutes to complete. The first 4 questions require document and quantitative skills, including the ability to calculate percentages. The sensitivity of a score of <2 for detecting patients with inadequate or marginal health literacy was 72% for the English version (NVS-E) and 77% for the Spanish version (NVS-S); the specificity was 87% and 57%, respectively. The NVS-E identified patients with low literacy markedly better than education or age alone (areas under the receiver operating characteristic [ROC] curve of 0.88, 0.72, and 0.71 for the NVS-E, education, and age, respectively); comparable data for the NVS-S were not reported. Thus, the NVS is fairly short, moderately accurate, and appears better than demographics alone for identifying individuals with limited reading capacities.
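Sensitivity and specificity at a cutoff come straight from the 2 × 2 table of screening result against the reference standard. The sketch below assumes, as in the NVS description, that a score below the cutoff counts as a positive screen; the function name and the 10 patients are hypothetical toy data, not results from Weiss et al.

```python
def sensitivity_specificity(scores, limited, cutoff):
    """Sensitivity and specificity of the rule 'score < cutoff' against a reference standard.

    scores  -- screening scores (e.g., number of items answered correctly)
    limited -- True if the reference standard classifies the person as having
               limited (inadequate or marginal) literacy
    cutoff  -- scores strictly below this value count as a positive screen
    """
    if len(scores) != len(limited):
        raise ValueError("scores and reference labels must have equal length")
    tp = fp = tn = fn = 0
    for score, has_limited in zip(scores, limited):
        positive = score < cutoff
        if has_limited and positive:
            tp += 1
        elif has_limited:
            fn += 1
        elif positive:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn)  # share of limited-literacy patients who are flagged
    specificity = tn / (tn + fp)  # share of adequate-literacy patients who are not flagged
    return sensitivity, specificity


# Toy data: 10 hypothetical patients scored 0 to 6; the first 4 have limited literacy.
scores = [0, 1, 1, 3, 2, 4, 5, 6, 3, 6]
limited = [True] * 4 + [False] * 6
for cutoff in (2, 4):
    sens, spec = sensitivity_specificity(scores, limited, cutoff)
    print(f"score < {cutoff}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

In this toy example, raising the cutoff from 2 to 4 raises sensitivity from 0.75 to 1.00 while specificity falls from 1.00 to 0.67, the usual trade-off when choosing a screening threshold.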

The second approach is to measure self-assessed health literacy based upon individuals' self-reported difficulty in understanding health care professionals and the written materials they encounter in their health care setting. This directly assesses the mismatch between an individual's capacities and his or her communication demands and provides important information from the patient's perspective. Williams et al.32 found that 3 questions, about the ability to read the newspaper, the ability to read forms and other written materials from hospitals, and the use of a “surrogate reader” to help understand health information, each had low sensitivity for detecting patients with “inadequate functional health literacy” as measured by the TOFHLA. Chew et al.33 assessed 16 questions with 5-point Likert-scale response options for their ability to identify patients with “inadequate health literacy” according to the S-TOFHLA. They found that 3 questions had reasonably high predictive value for identifying the 15 of 332 patients (4.5%) who had inadequate literacy: (1) “How often do you have someone help you read hospital materials?” (2) “How confident are you filling out medical forms by yourself?” and (3) “How often do you have problems learning about your medical condition because of difficulty understanding written information?” (areas under the ROC curve 0.87, 0.80, and 0.76, respectively). However, this study did not determine whether the predictive value of these questions was better than that of demographics alone (i.e., education, age, race/ethnicity) or whether the findings were replicable in a different population.

The study by Wallace et al.34 in this issue of JGIM advances our understanding of the value of these screening questions. The 3 questions described above were tested in 305 adults in a university-based primary care clinic. In contrast to Chew's findings, the question “How confident are you filling out medical forms by yourself?” had the best predictive value (area under the ROC curve of 0.82) for identifying individuals with a REALM score of ≤44 (≤6th grade). This was substantially better than the area under the ROC curve for a model that included age (<65 vs ≥65), race/ethnicity (white vs nonwhite), and education (<high school vs ≥high school).
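For readers less familiar with the REALM, raw scores are conventionally reported as grade-range estimates: 0 to 18 (3rd grade and below), 19 to 44 (4th to 6th grade), 45 to 60 (7th to 8th grade), and 61 to 66 (high school). The sketch below encodes that mapping together with the ≤44 reference threshold used by Wallace et al.; the names `realm_grade_range` and `below_reference_threshold` are hypothetical helpers, not part of any published scoring software.

```python
# Conventional REALM raw-score bands (score range 0 to 66).
REALM_GRADE_RANGES = [
    (0, 18, "3rd grade and below"),
    (19, 44, "4th to 6th grade"),
    (45, 60, "7th to 8th grade"),
    (61, 66, "high school"),
]


def realm_grade_range(score: int) -> str:
    """Map a REALM raw score to its estimated reading grade range."""
    for low, high, label in REALM_GRADE_RANGES:
        if low <= score <= high:
            return label
    raise ValueError("REALM raw scores range from 0 to 66")


def below_reference_threshold(score: int, threshold: int = 44) -> bool:
    """True if the score meets a '<= 44 (<= 6th grade)' reference definition."""
    realm_grade_range(score)  # reject out-of-range scores before comparing
    return score <= threshold


for s in (18, 44, 45, 66):
    print(s, realm_grade_range(s), below_reference_threshold(s))
```

Writing the reference definition down explicitly matters when comparing screening studies: Chew used the S-TOFHLA while Wallace used this REALM threshold, so their “gold standards” are not the same.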

While we may be moving closer toward the goal of having a practical method to identify individuals with special communication needs, we still do not know whether this would improve communication and outcomes. Seligman et al.29 measured reading fluency using the S-TOFHLA and randomized physicians either to receive or not to receive notification that their patients had limited health literacy skills. Intervention physicians were more likely than control physicians to use management strategies recommended for patients with limited health literacy, but they felt less satisfied with the visits (81% vs 93%, P=.01) and marginally less effective (38% vs 53%, P=.10). Postvisit self-efficacy scores were similar for intervention and control patients (12.6 vs 12.9, P=.6). Thus, the utility of screening will depend on what is done with the information gained and on the effectiveness of innovative communication strategies. Without better tools and provider training, screening alone is unlikely to be beneficial. However, if we can improve communication tools and training, it may become unnecessary to screen for health literacy. Instead of screening, it may be better to assume that all patients experience some degree of difficulty in understanding health information and to adopt the perspective of “universal precautions”: using plain language, communication tools (e.g., multimedia), and “teach back” (having an individual repeat back instructions to assess comprehension) with all patients.

How Should We Judge Screening Tests for Health Literacy?

Wallace's study provides additional evidence that it may actually be possible to develop 1 or more screening questions that can be used in a broad variety of settings to identify individuals who are likely to have special communication needs. However, the inconsistency between the findings of Chew and Wallace means that further studies are needed. How should the results of future studies be assessed?

All studies of screening questions or short tests have compared an instrument with a “gold standard,” such as the TOFHLA or the REALM. However, the more important question is whether the screening test predicts individual capacities above and beyond the powerful predictors of age, race/ethnicity, and years of school completed (as was done in the studies by Weiss and Wallace described above). For example, in a study of Medicare managed care enrollees, demographics predicted inadequate or marginal literacy (according to the S-TOFHLA) with an area under the receiver operating characteristic curve of 0.81 (unpublished data).35 Studies should always assess the change in the c-statistic when the screening question is added to a predictive model that already contains age, race/ethnicity, and education and determine whether the change is clinically important (vs statistically significant). Models should enter age and education as precisely as possible, either as continuous or polytomous variables (e.g., 0 to 8, 9 to 11, 12, and >12 years of school) instead of the more weakly predictive dichotomous variables used in the study by Wallace.
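The comparison recommended here can be sketched in a few lines, assuming that both logistic models (demographics alone, and demographics plus the screening question) have already been fitted in a statistics package and each patient's predicted probability exported. The c-statistic is computed as the share of (case, non-case) pairs in which the case has the higher predicted probability, with ties counting one half; the education coder uses the 4 categories named in the text. All function names and the 6 toy probabilities are hypothetical.

```python
from itertools import product

EDUCATION_LEVELS = ["0-8", "9-11", "12", ">12"]


def education_category(years: int) -> str:
    """Polytomous coding of years of school completed."""
    if years < 0:
        raise ValueError("years of school cannot be negative")
    if years <= 8:
        return "0-8"
    if years <= 11:
        return "9-11"
    if years == 12:
        return "12"
    return ">12"


def education_dummies(years: int, reference: str = ">12") -> dict:
    """Indicator variables for each non-reference education category."""
    category = education_category(years)
    return {f"educ_{lvl}": int(category == lvl) for lvl in EDUCATION_LEVELS if lvl != reference}


def c_statistic(probs, outcomes):
    """Area under the ROC curve: share of (case, non-case) pairs ranked correctly."""
    cases = [p for p, y in zip(probs, outcomes) if y]
    controls = [p for p, y in zip(probs, outcomes) if not y]
    if not cases or not controls:
        raise ValueError("need at least one case and one non-case")
    score = 0.0
    for pc, pn in product(cases, controls):
        if pc > pn:
            score += 1.0
        elif pc == pn:
            score += 0.5  # tied predictions count as half-concordant
    return score / (len(cases) * len(controls))


print(education_dummies(10))

# Toy predicted probabilities of limited literacy for 6 hypothetical patients.
outcome = [1, 1, 1, 0, 0, 0]
base_model = [0.70, 0.40, 0.30, 0.50, 0.20, 0.10]  # age, race/ethnicity, education
full_model = [0.80, 0.60, 0.35, 0.40, 0.15, 0.10]  # same model plus the screening question
c_base = c_statistic(base_model, outcome)
c_full = c_statistic(full_model, outcome)
print(f"c (demographics) = {c_base:.3f}; c (+ screener) = {c_full:.3f}; change = {c_full - c_base:+.3f}")
```

The code only reports the size of the change (here +0.111); whether that change is clinically important remains a judgment, and a formal comparison of 2 correlated c-statistics (for example, DeLong's method) would be needed to assess statistical significance.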

Finally, studies should assess the acceptability of the test to patients. Tests that use materials that patients are likely to encounter, such as the nutrition label used in the NVS, may feel more comfortable and natural for patients than word lists or other instruments that seem more like academic tests of reading ability.

Measuring Health-Related Oral Literacy

Comprehension of spoken instructions is critically important in health care, and the IOM identified health-related oral literacy as a separate domain from health-related print literacy. Although there is an extensive literature about processing of spoken information in the fields of education, communication studies, cognitive psychology, and gerontology, we know relatively little about individuals' ability to understand common spoken instructions and the relationship of this with reading fluency.

There is no established test to measure comprehension of spoken health-related information. Like health-related print literacy, health-related oral literacy depends on vocabulary and prior conceptual knowledge of the topic being discussed. While health-related oral literacy does not depend upon reading fluency, the cognitive processes necessary for understanding the spoken word and the printed word are deeply intertwined. For all of these reasons, individuals with limited health-related print literacy are likely to also have limited health-related oral literacy, and the 2 may really be part of a single latent variable. Thus, while health-related oral literacy seems to be an appealing, distinct concept, it may be hard to measure independently from print literacy.

Research is needed to understand these issues and to determine whether the ability to comprehend spoken language predicts knowledge, attitudes, behaviors, and health outcomes independently from or more strongly than health-related print literacy. However, studies of oral literacy should be interpreted cautiously. Comprehension of spoken information depends highly on cognitive abilities, such as memory and the ability to understand the relationships between multiple pieces of information. For example, 1 item from the Mini Mental State Examination,36 a screening test for cognitive impairment, asks patients to complete 3 tasks from memory according to the original sequence in which the tasks were presented. A similar question could theoretically be used to measure “health-related oral literacy.” Despite the challenge of interpreting what is really being measured with this type of question, it still may be helpful to conduct studies to determine what proportion of people do not understand common, complex oral instructions, such as “Take 1 to 2 tablets every 4 to 6 hours.” Such studies may be useful to encourage providers to use plain language in their speech in addition to simplifying print materials.
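Part of what makes an instruction such as “Take 1 to 2 tablets every 4 to 6 hours” hard to follow from memory is the arithmetic hidden inside it. Assuming, for illustration only, that doses are taken around the clock over 24 hours starting at hour 0 and that no separate daily maximum is stated, the sketch below computes the range of total daily tablets the instruction permits; the function name is hypothetical.

```python
def daily_tablet_range(min_tablets, max_tablets, min_interval_h, max_interval_h, hours=24):
    """Fewest and most tablets per day allowed by 'take a to b tablets every c to d hours'.

    Assumes dosing starts at hour 0 and continues around the clock, so doses fall
    at 0, interval, 2*interval, ... strictly before `hours`.
    """
    def doses(interval):
        return -(-hours // interval)  # ceiling division: number of dose times in [0, hours)

    fewest = min_tablets * doses(max_interval_h)  # smallest dose at the longest interval
    most = max_tablets * doses(min_interval_h)    # largest dose at the shortest interval
    return fewest, most


print(daily_tablet_range(1, 2, 4, 6))  # (4, 12)
```

Under these assumptions the instruction allows anywhere from 4 to 12 tablets a day, a threefold range that a listener must reconstruct from 2 nested ranges heard once.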

Conclusions

Health literacy is a complicated construct that depends on individual capacity to communicate and the demands posed by society and the health care system. More comprehensive tests are needed to understand the gap between capacities and current demands to help guide efforts to educate children and adults about health issues and to develop health-related information that more of the general public can understand. For research, new instruments are needed that will measure individuals' reading fluency more precisely without posing an undue response burden. Computer-assisted testing, which selects items from a bank of possible items according to a baseline-predicted reading ability and responses to previous questions, should allow more accurate measurement of individual capacity without increasing the time required to complete testing. It remains unclear whether it is possible to develop an accurate, practical “screening” test to identify individuals with limited health literacy. Even if this goal is achieved, it remains unclear whether it is better to screen patients or to adopt “universal precautions” to avoid miscommunication by using plain language in all oral and written communication and confirming understanding with all patients by having them repeat back their understanding of their diagnosis and treatment plan. George Bernard Shaw said, “The main problem with communication is the assumption that it has occurred.” This is a universal truth that transcends reading ability.
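Computer-assisted (adaptive) testing of this kind is usually built on item response theory. The sketch below is one minimal version, assuming a Rasch (1-parameter logistic) item bank with known difficulties, a normal prior centered on the baseline-predicted ability, expected a posteriori (EAP) scoring on a grid, and selection of the unused item whose difficulty is closest to the current estimate, which maximizes Fisher information under the Rasch model. It does not describe any existing health literacy instrument; the item bank, the function names, and the simulated respondent are hypothetical.

```python
import math


def p_correct(theta, difficulty):
    """Rasch model probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def eap_ability(responses, prior_mean=0.0, prior_sd=1.0):
    """Posterior mean ability on a grid from -4 to 4, given (difficulty, correct) pairs."""
    grid = [g / 10.0 for g in range(-40, 41)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * ((theta - prior_mean) / prior_sd) ** 2)  # unnormalized normal prior
        for difficulty, correct in responses:
            p = p_correct(theta, difficulty)
            w *= p if correct else 1.0 - p
        weights.append(w)
    total = sum(weights)
    return sum(t * w for t, w in zip(grid, weights)) / total


def adaptive_test(item_bank, answer, baseline_ability=0.0, n_items=8):
    """Administer up to n_items adaptively; answer(index) returns True if correct."""
    theta = baseline_ability
    used, responses = set(), []
    for _ in range(min(n_items, len(item_bank))):
        # Under the Rasch model, item information p(1 - p) peaks where difficulty == theta.
        index = min((i for i in range(len(item_bank)) if i not in used),
                    key=lambda i: abs(item_bank[i] - theta))
        used.add(index)
        responses.append((item_bank[index], answer(index)))
        theta = eap_ability(responses, prior_mean=baseline_ability)
    return theta, len(responses)


# Hypothetical bank of 20 items with difficulties from -2.85 to +2.85 logits.
bank = [-2.85 + 0.3 * k for k in range(20)]
# Hypothetical respondent who answers correctly whenever an item is easier than 0.5.
theta_hat, n = adaptive_test(bank, lambda i: bank[i] < 0.5, baseline_ability=-1.0)
print(round(theta_hat, 2), n)
```

Starting from `baseline_ability` (for example, a prediction from education and age) pitches the first items near the respondent's likely level, which is how adaptive tests keep the number of items low. A working instrument would also need calibrated item parameters, item-exposure control, and a stopping rule based on the posterior standard error.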

REFERENCES

- 1. Communicating with patients who have limited literacy skills. Report of the National Work Group on Literacy and Health. J Fam Pract. 1998;46:168–76.

- 2. Ad Hoc Committee on Health Literacy. Health literacy: report of the council on scientific affairs. JAMA. 1999;281:552–7.

- 3. Institute of Medicine. Health Literacy: A Prescription to End Confusion. Washington, DC: National Academies Press; 2004.

- 4. Berkman ND, DeWalt DA, Pignone MP, et al. Literacy and Health Outcomes. Evidence Report/Technology Assessment No. 87. Rockville, MD: Agency for Healthcare Research and Quality; 2004.

- 5. U.S. Department of Health and Human Services. Healthy People 2010: Understanding and Improving Health. 2nd ed. Washington, DC: U.S. Government Printing Office; 2000. Chapter 11.

- 6. Baker DW, Gazmararian JA, Sudano J, Patterson M. The association between age and health literacy among elderly persons. J Gerontol B Psychol Sci Soc Sci. 2000;55:S368–74. doi: 10.1093/geronb/55.6.s368.

- 7. American College of Physicians. Downloadable Patient Health Care Information. Available at: http://www.doctorsforadults.com/download.htm?dfa. Accessed April 1, 2006.

- 8. Kirsch I, Jungeblut A, Jenkins L, Kolstad A. Adult Literacy in America: A First Look at the Results of the National Adult Literacy Survey. Washington, DC: National Center for Education, U.S. Department of Education; 1993.

- 9. Schwartz LM, Woloshin S, Black WC, Welch HG. The role of numeracy in understanding the benefit of screening mammography. Ann Intern Med. 1997;127:966–72. doi: 10.7326/0003-4819-127-11-199712010-00003.

- 10. Lipkus IM, Samsa G, Rimer BK. General performance on a numeracy scale among highly educated samples. Med Decis Making. 2001;21:37–44. doi: 10.1177/0272989X0102100105.

- 11. Adelsward V, Sachs L. The meaning of 6.8: numeracy and normality in health information talks. Soc Sci Med. 1996;43:1179–87. doi: 10.1016/0277-9536(95)00366-5.

- 12. Baker DW, Williams MV, Parker RM, Gazmararian JA, Nurss J. Development of a brief test to measure functional health literacy. Patient Educ Couns. 1999;38:33–42. doi: 10.1016/s0738-3991(98)00116-5.

- 13. Parker RM, Baker DW, Williams MV, Nurss JR. The Test of Functional Health Literacy in Adults (TOFHLA): a new instrument for measuring patient's literacy skills. J Gen Intern Med. 1995;10:537–42. doi: 10.1007/BF02640361.

- 14. Davis TC, Long SW, Jackson RH, et al. Rapid estimate of adult literacy in medicine: a shortened screening instrument. Fam Med. 1993;25:391–5.

- 15. Fisher LD, van Belle G. Biostatistics: A Methodology for the Health Sciences. New York, NY: John Wiley and Sons; 1993.

- 16. Jastak S, Wilkinson GS. WRAT-R, Wide Range Achievement Test, Administration Manual, Revised Edition. Wilmington, DE: Jastak Assessment Systems; 1984.

- 17. Miller JD. The measurement of scientific literacy. Public Understand Sci. 1998;7:203–23.

- 18. Williams MV, Baker DW, Honig EG, Lee ML, Nowlan A. Inadequate literacy is a barrier to asthma knowledge and self-care. Chest. 1998;114:1008–15. doi: 10.1378/chest.114.4.1008.

- 19. Williams MV, Baker DW, Parker RM, Nurss JR. Relationship of functional health literacy to patients' knowledge of their chronic disease. A study of patients with hypertension and diabetes. Arch Intern Med. 1998;158:166–72. doi: 10.1001/archinte.158.2.166.

- 20. Gazmararian JA, Williams MV, Peel J, Baker DW. Health literacy and knowledge of chronic disease. Patient Educ Couns. 2003;51:267–75. doi: 10.1016/s0738-3991(02)00239-2.

- 21. Baker DW, Brown J, Chan KS, Dracup KA, Keeler EB. A telephone survey to measure communication, education, self-management, and health status for patients with heart failure: the Improving Chronic Illness Care Evaluation (ICICE). J Card Fail. 2005;11:36–42. doi: 10.1016/j.cardfail.2004.05.003.

- 22. Educational Testing Service. Test content for health activities literacy tests. Available at: http://www.ets.org/etsliteracy/. Accessed April 1, 2006.

- 23. Wolf MS, Gazmararian JA, Baker DW. Health literacy and functional health status among older adults. Arch Intern Med. 2005;165:1946–52. doi: 10.1001/archinte.165.17.1946.

- 24. Baker DW, Gazmararian JA, Williams MV, et al. Health literacy and use of outpatient physician services by medicare managed care enrollees. J Gen Intern Med. 2004;19:215–20. doi: 10.1111/j.1525-1497.2004.21130.x.

- 25. Wolf MS, Davis TC, Arozullah A, et al. Relation between literacy and HIV treatment knowledge among patients on HAART regimens. AIDS Care. 2005;17:863–73. doi: 10.1080/09540120500038660.

- 26. Dolan NC, Ferreira MR, Fitzgibbon ML, et al. Colorectal cancer screening among African-American and white male veterans. Am J Prev Med. 2005;28:479–82. doi: 10.1016/j.amepre.2005.02.002.

- 27. Wolf MS, Davis TC, Cross JT, Marin E, Green K, Bennett CL. Health literacy and patient knowledge in a Southern US HIV clinic. Int J STD AIDS. 2004;15:747–52. doi: 10.1258/0956462042395131.

- 28. Dolan NC, Ferreira MR, Davis TC, et al. Colorectal cancer screening knowledge, attitudes, and beliefs among veterans: does literacy make a difference? J Clin Oncol. 2004;22:2617–22. doi: 10.1200/JCO.2004.10.149.

- 29. Seligman HK, Wang FF, Palacios JL, et al. Physician notification of their diabetes patients' limited health literacy. A randomized, controlled trial. J Gen Intern Med. 2005;20:1001–7. doi: 10.1111/j.1525-1497.2005.00189.x.

- 30. Bass PF III, Wilson JF, Griffith CH. A shortened instrument for literacy screening. J Gen Intern Med. 2003;18:1036–8. doi: 10.1111/j.1525-1497.2003.10651.x.

- 31. Weiss BD, Mays MZ, Martz W, et al. Quick assessment of literacy in primary care: the newest vital sign. Ann Fam Med. 2005;3:514–22. doi: 10.1370/afm.405.

- 32. Williams MV, Parker RM, Baker DW, et al. Inadequate functional health literacy among patients at two public hospitals. JAMA. 1995;274:1677–82.

- 33. Chew LD, Bradley KA, Boyko EJ. Brief questions to identify patients with inadequate health literacy. Fam Med. 2004;36:588–94.

- 34. Wallace LS, Rogers ES, Roskos SE, Holiday DB, Weiss BD. Screening items to identify patients with limited health literacy skills. J Gen Intern Med. 2006;21:874–7. doi: 10.1111/j.1525-1497.2006.00532.x.

- 35. Gazmararian JA, Baker DW, Williams MV, et al. Health literacy among medicare enrollees in a managed care organization. JAMA. 1999;281:545–51. doi: 10.1001/jama.281.6.545.

- 36. Folstein MF, Folstein SE, McHugh PR. “Mini-mental State.” A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–98. doi: 10.1016/0022-3956(75)90026-6.