Abstract

The increasing complexity of decision-making has emerged as a risk factor in clinical medicine. The impact that decision task complexity has on the uptake and use of clinical decision support systems (DSS) is also not well understood. Antibiotic prescribing in critical care is a complex, cognitively demanding task, made under time pressure. A web-based experiment was conducted to explore the impact of decision complexity on DSS utilization, comparing utilization of antibiotic guidelines and an interactive probability calculator for ventilator-associated pneumonia (VAP) plus laboratory data. Decision support was found to be used more often for less complex decisions. Prescribing decisions of higher complexity were associated with a lower frequency of DSS use, but required the use of the more cognitively demanding situation assessment tool for infection risk along with pathology data. Decision complexity thus seems to affect the extent and type of information support that individuals use when making decisions.

Introduction

The increasing complexity of decision-making has recently emerged as a risk factor in clinical medicine.1,2 Task complexity affects information needs and the efficiency of decision-making, as more complex tasks require more cognitive effort for information processing.1,2,3,4 Task complexity has also been demonstrated to be one of the key characteristics that influences decision quality, the selection of communication channels and the adoption of new technologies.1,5,6 Computerized decision support systems (DSS) have been suggested as a strategy to maintain decision quality under conditions of reduced cognitive resource.7,8

Antibiotic prescribing in critical care has been reported as complex, cognitively demanding and multi-factorial, with potentially conflicting objectives.8,9,10 The pressures of information overload and reduced decision time in critical care add to the potential need for DSS in this setting. However, while many DSS studies examine their effectiveness in improving decisions, the impact of decision task complexity on the utilization, and therefore the ultimate impact, of clinical decision support is less well understood.8 This paper reports the results of an online experiment to explore the impact of decision complexity on DSS utilization for antibiotic prescribing in the intensive care unit (ICU).

Methods

Study participants

Forty board-certified specialist intensive care (ICP) and infectious disease (IDP) physicians working full-time in tertiary referral hospitals participated in the study, and thirty-one completed the experiment. Twenty-nine of the 31 (93.5%) held a professional college fellowship, accounting for 16.6% and 11.7%, respectively, of the total Australian IDP and ICP populations.

Case scenarios and their complexity assessment

Eight hypothetical cases reflecting current clinical practice were designed to cover a range of causes of pulmonary infiltrates and reviewed by an expert panel with consensus determining the optimal prescribing decision for each. Details can be found in [11].

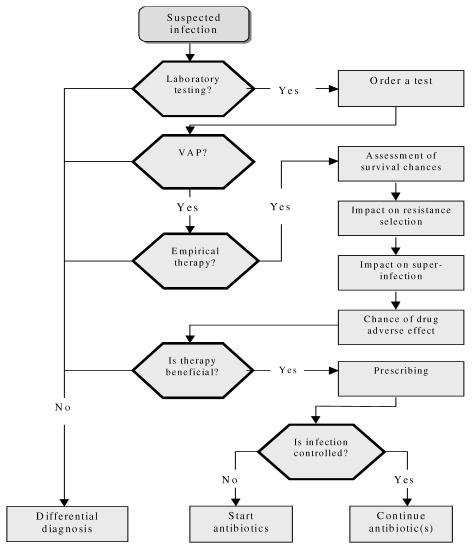

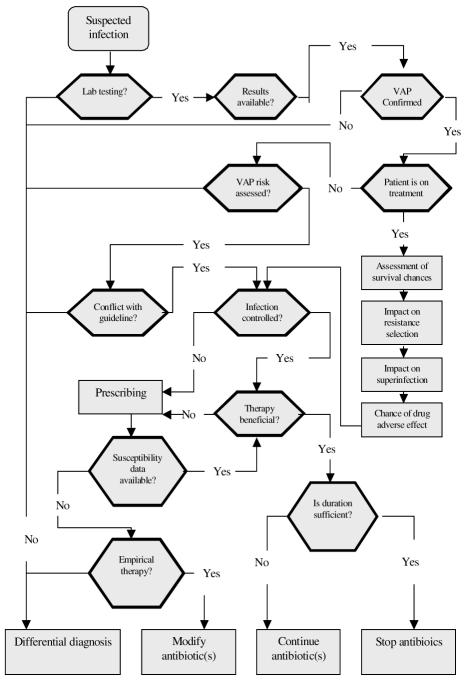

Prescribing tasks for the cases were represented as a decision tree or a clinical algorithm12 shown in Figures 1 and 2. The complexity of prescribing decisions for each case was estimated by three independent methods: (a) the sum of cognitive effort in processing each decision tree, calculated as the total elementary information processes4 (EIPs) needed to accomplish the decision task (e.g., comparing two values, reading a value, etc.), (b) Clinical Algorithm Structure Analysis (CASA)13, and (c) the minimum message length14 (MML, the minimum number of bits of information required to describe a decision tree). Three domain experts assigned all scores independently with equal weights, and discrepancies were resolved by consensus.
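As a rough illustration of method (a), the following Python sketch sums EIP counts over a decision tree. The `Node` class, the per-node `reads` and `compares` counts and the toy tree are hypothetical; they are not taken from Figures 1 and 2 or from the scores assigned in the study. The sketch also assumes that effort is summed over every node of the tree, which the text does not spell out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One step of a decision tree, annotated with hypothetical EIP counts."""
    label: str
    reads: int = 0      # number of values that must be read at this step
    compares: int = 0   # number of comparisons made at this step
    children: List["Node"] = field(default_factory=list)


def total_eips(node: Node) -> int:
    """Sum READ and COMPARE operations over the node and all of its descendants."""
    own = node.reads + node.compares
    return own + sum(total_eips(child) for child in node.children)


# Toy tree for illustration only; it does not reproduce any study case.
toy_tree = Node(
    "first check", reads=1, compares=1,
    children=[
        Node("action A"),
        Node(
            "second check", reads=2, compares=1,
            children=[Node("action B"), Node("action C")],
        ),
    ],
)

print(total_eips(toy_tree))  # 5
```

Under this assumption, a tree with more decision points, or with more values to read at each point, receives a proportionally higher effort score.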

Figure 1.

Clinical algorithm representation of the task to start or continue antibiotics – “Lower” complexity cases.

Figure 2.

Clinical algorithm representation of the task to modify or stop antibiotic therapy – “Higher” complexity cases.

Decision support

Two decision support tools were developed: (1) a web-accessible probability calculator for VAP with an evidence-based algorithm for VAP management14 and (2) laboratory reports for each case, presenting the results of bacterial cultures and antibiotic susceptibility testing, along with a DSS module that allowed a clinician to enter these data and calculate the patient’s probability of VAP using the Clinical Pulmonary Infection Score.15

Experimental design

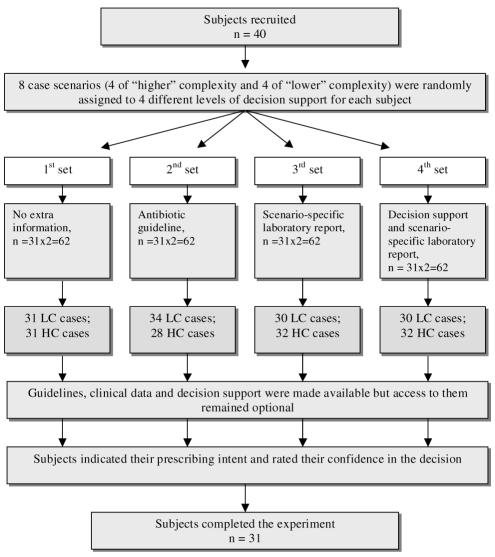

A cross-over design with intra-subject comparison was chosen to minimize the possible effects of variability between subjects.11 The design of the experiment is outlined in Figure 3.

Figure 3.

Online experiment design. LC – lower complexity; HC – higher complexity.

The participants were presented with a sequence of eight case scenarios of different complexity, each requiring a prescribing decision. For each scenario, one of three different levels of support was randomly offered: (a) antibiotic guidelines, (b) the case-specific microbiology laboratory report, and (c) DSS plus the microbiology report (Figure 3). For each subject, the case scenarios were randomly allocated across the different levels of decision support. However, the two initial cases presented to subjects always came with access to guidelines, the next two offered laboratory reports and no guidelines, and the following two offered laboratory reports and DSS.
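The per-subject random allocation of cases to support levels could be sketched as follows. This is a minimal illustration and not the software used in the experiment: the function name, the level labels and the use of a seeded generator are assumptions. The "unaided" level and the figure of two cases per level are inferred from the unaided control decisions and the 62 case scenarios per support type reported later in the paper.

```python
import random

# Assumed labels; "unaided" is inferred from the control set of unaided decisions.
SUPPORT_LEVELS = ["unaided", "guidelines", "lab_report", "dss_plus_lab_report"]
CASES_PER_LEVEL = 2


def allocate_cases(case_ids, rng):
    """Randomly assign each case to one support level, two cases per level."""
    cases = list(case_ids)
    if len(cases) != CASES_PER_LEVEL * len(SUPPORT_LEVELS):
        raise ValueError("expected exactly two cases per support level")
    rng.shuffle(cases)
    return {
        level: cases[i * CASES_PER_LEVEL:(i + 1) * CASES_PER_LEVEL]
        for i, level in enumerate(SUPPORT_LEVELS)
    }


# One hypothetical participant; the seed only makes the example repeatable.
allocation = allocate_cases(range(1, 9), random.Random(0))
for level, cases in allocation.items():
    print(level, cases)
```

Because every participant sees all eight cases, each subject serves as his or her own control, which is the point of the intra-subject comparison.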

Participants were asked to make a prescribing decision (start, stop or modify antibiotic therapy) using whatever available information they considered important, and to indicate on a five-point Likert scale their level of confidence in the diagnosis of VAP and in their prescribing decision for each case. There were no limits set on the time allowed for a decision. Pilot testing was done with five clinicians to determine the acceptability and clarity of the cases and questionnaire. The experiment was implemented as a series of static and dynamic web pages on the server of the University of NSW. Subjects could participate in the experiment at a location of their choosing.11

Outcome measures and analysis

The decision complexity for each prescribing case was estimated prior to analysis of the experimental data. The dependent variables of the analysis were decision accuracy and decision time. Participants’ decisions were compared with expert-consensus optimal decisions. Confidence in both the diagnosis of VAP and each individual prescribing decision was also determined on a five-point Likert scale. Using a log-file we monitored whether or not, and for how long, participants accessed each decision support feature offered.

Results

All doctors who participated in the study worked as consultants in tertiary referral hospitals. Seventy-three percent of ICP rated their computer skills as “good” or “excellent” compared with only 38% of IDP.

Complexity analysis using MML, CASA and EIP scores demonstrated that the four cases in which the correct decision was to modify or to stop antibiotic therapy had significantly higher scores (“higher complexity” decisions) than the four cases in which the correct decision was to start antibiotics (“lower complexity” decisions) (p<0.001).

Overall, the rate of decision support usage varied between 39% and 60% across the 62 case scenarios for which each type of support was provided. Specifically, antibiotic guidelines, microbiology reports and the DSS with microbiology reports were selected in 24 (39%), 36 (58%) and 37 (60%) cases, respectively. On average, it took 245 seconds to make a decision using the DSS features compared with 113 seconds for an unaided prescribing decision (p<0.001). However, a number of clinicians (n=8) chose not to use any of the DSS provided.

The ‘higher complexity’ cases were associated with a significantly lower quality of decision and a lower confidence in decision (Table 1). Participants achieved better agreement with the expert-derived optimal decisions for the ‘lower complexity’ cases. ‘Higher complexity’ cases were on average harder to accomplish and demanded more time to complete (156 vs. 125 seconds on average, p=0.01), suggesting higher cognitive loads.

Table 1.

Relationship between complexity of the prescribing decision and decision outcomes

| Measure | Lower complexity | Higher complexity | Statistical difference between groups | p value |
|---|---|---|---|---|
| Mean complexity scores | | | | |
| MML, bits | 35.8 | 67.7 | t = 34.75 | p<0.001 |
| CASA | 17 | 33 | t = 14.59 | p<0.001 |
| EIP | 164 | 388 | t = 22.74 | p<0.001 |
| Agreement with the expert panel, n (%) | 99/124 (80%) | 79/124 (64%) | Chi S = 7.96 | p=0.005 |
| Confident or highly confident of prescribing decision, n (%) | 97/124 (78%) | 79/124 (64%) | Chi S = 6.34 | p=0.012 |
| Time taken (seconds per case) | | | | |
| Mean | 125 | 156 | t = 2.58 | p=0.010 |
| Median | 91 | 120 | | |
| SD | 95 | 94 | | |

Chi S = Chi square; MML, minimum message length; CASA, clinical algorithm structure analysis; EIP, elementary information process.
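The test statistics for the outcome rows of Table 1 can be re-derived from the summary values in the table. The sketch below is an illustration only. The paper does not state which test variants were used, so the uncorrected Pearson chi-square and the unpaired two-sample t statistic are assumptions, chosen because they reproduce the reported values after rounding. The function names are hypothetical, and the p value for the t statistic uses a normal approximation rather than the t distribution.

```python
import math


def pearson_chi_square(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def chi_square_p_1df(chi2):
    """Upper-tail p value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


def two_sample_t(mean1, sd1, n1, mean2, sd2, n2):
    """Unpaired t statistic from summary data (unpooled standard error)."""
    return (mean2 - mean1) / math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)


N = 124  # decisions per complexity group (Table 1)

# Rows: lower vs. higher complexity; columns: yes vs. no.
agreement_chi2 = pearson_chi_square(99, N - 99, 79, N - 79)
confidence_chi2 = pearson_chi_square(97, N - 97, 79, N - 79)

# Decision time: mean and SD in seconds per case.
time_t = two_sample_t(125, 95, N, 156, 94, N)
# Two-sided p value via the normal approximation (reasonable for ~246 df).
time_p_approx = math.erfc(abs(time_t) / math.sqrt(2.0))

print(f"agreement:  chi2 = {agreement_chi2:.2f}, p = {chi_square_p_1df(agreement_chi2):.3f}")
print(f"confidence: chi2 = {confidence_chi2:.2f}, p = {chi_square_p_1df(confidence_chi2):.3f}")
print(f"time:       t = {time_t:.2f}, p ~ {time_p_approx:.3f}")
```

With the table's counts this gives chi-square values of 7.96 and 6.34 and a t statistic of 2.58, matching the reported figures.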

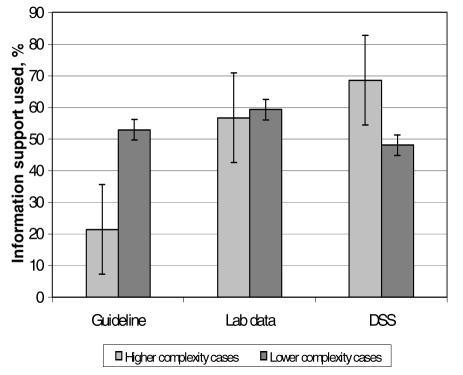

Decision support was used in 50% of the ‘higher complexity’ decisions and in 53% of the ‘lower complexity’ prescribing decisions (p=0.35). When a prescriber did choose to use decision support, the decision complexity may have influenced the choice of a specific type of information support. For decisions of ‘lower’ and ‘higher’ complexity, respectively, prescribing guidelines were chosen in 53% and 22% of cases, patient-specific laboratory data in 59% and 57%, and the VAP risk assessment tool in 48% and 69% (Figure 4).

Figure 4.

Uptake of different types of information support for cases with higher and lower complexity decisions.

Discussion

The limitations of this study were as follows. First, this experiment relied on expert physicians’ self-reported prescribing intentions. It is possible that our results reflect clinicians’ perceived need for guidance rather than the impact of decision complexity in the real world. However, because the information was presented in a standard way and in a clinically meaningful context, it is plausible that their responses reflected actual clinical decision-making better than would responses to direct questions about treatment strategies. Decision-making in complex domains can be considered to be a function of the decision task and the expertise of the decision-maker. By controlling for the level of expertise (all participants were experienced specialist-level clinicians practicing in acute care) the experiment was able to treat the decision task as the main variable. To ensure that observed differences in decision-making were due to the different information sources available and not to other factors, we used a repeated measures experiment with simulated cases rather than randomisation of participants. Second, we cannot exclude the possibility of sampling bias. However, the external validity of our study is supported by the observed frequency of ‘correct’ choices (65%) in the control set of unaided decisions, which is consistent with other studies of clinical decision-making.16

Task complexity seems to be an important feature that shapes the uptake and effectiveness of decision support in clinical decision-making. This study demonstrated the impact of prescribing complexity on the type of information support selected to aid individual decision-making. Specifically, prescribing guidelines were much more likely to be used for decisions of ‘lower’ complexity, while calculating infection risk with pathology data was preferred for prescribing decisions of ‘higher’ complexity. These findings support previous suggestions that less complex or better structured tasks require fact-oriented sources and rule-based processing.8 In contrast, more complex decision tasks appear to benefit from decision support that assists in problem structuring and understanding.

Decision complexity is also shaped by context, including the interactions between task attributes and the characteristics of the decision-maker. Information seeking by a decision-maker is connected to task complexity and structure. An individual’s information-seeking style thus probably integrates these different attributes and determines his or her information seeking. Although evidence to support this hypothesis is accumulating, more research is needed to explore the relationship between task complexity, decision support seeking and subsequent decision quality. This knowledge is critical for the development of successful interventions that change the behavior of health care practitioners.

There are several approaches to assessment of decision task complexity. A combination of approaches (e.g., MML, evaluation of cognitive effort, and CASA score) provided a concordant estimate of prescribing complexity for our purposes. Measuring decision complexity remains difficult outside of our experimental setting, given the potential impact of decision maker variables, which were controlled for here. Larger tasks than those studied here may decompose into a number of subtasks, inputs and products. Some decision elements may be probabilistic in behavior or evolve over time. Assigning a complexity measure in these cases is less straightforward.

In conclusion, decision complexity appeared to affect task performance and the extent and type of decision support used by individuals in decision-making. Computerized clinical guidelines are likely to be more suitable for less complex decision tasks, and more active computational tools seem better suited to more complex tasks. Studying the relationship between decision complexity and information seeking also opens up the possibility of helping predict the risk of human error when using decision support systems. Decision complexity may guide a designer’s choice of decision support allocation and functionality to different tasks. Measuring decision complexity also seems to help us understand how the adoption of DSS is related to complexity of decision process variables and which “form” of electronic decision support is most likely to be adopted by health care professionals for decisions of different complexity.

Acknowledgements

The authors acknowledge the cooperation of the volunteering participants without whom our experiment could not have been carried out. We are indebted to H. Garsden and A. Polyanovski for their input in the experiment’s software development. We also thank Drs J.R. Iredell and G.L. Gilbert for their expert advice. VS was supported by the National Institute of Clinical Studies.

References

- 1. Bystrom K, Jarvelin K. Task complexity affects information seeking and use. Inform Proc Manag. 1995;31:191–213.

- 2. Chinburapa V, Larson LN, Brucks M, Draugalis JL, Bootman JL, Puto CP. Physician prescribing decisions: The effects of situational involvement and task complexity on information acquisition and decision making. Soc Sci Med. 1993;36:1473–82. doi: 10.1016/0277-9536(93)90389-l.

- 3. Vakkari P. Task complexity, problem structure and information actions: Integrating studies on information seeking and retrieval. Inform Proc Manag. 1999;35:819–37.

- 4. Chu PC, Spires EE. The joint effect of effort and quality on decision strategy choice with computerised decision aids. Dec Sci. 2000;31:259–92.

- 5. Meyer MH, Curley KF. The impact of knowledge and technology complexity on information system development. Expert Syst Applic. 1995;8:111–34.

- 6. Wood RE. Task complexity: Definition of the construct. Org Behav Human Dec Process. 1986;37:60–82.

- 7. Patel V, Kaufman D. Medical informatics and the science of cognition. J Am Med Inform Assoc. 1998;5:493–502. doi: 10.1136/jamia.1998.0050493.

- 8. Patel VL, Arocha JF, Diermier M, How J, Mottur-Pilson C. Cognitive psychological studies of representation and use of clinical practice guidelines. Int J Med Inform. 2001;63:147–67. doi: 10.1016/s1386-5056(01)00165-4.

- 9. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer assisted clinical ordering. J Biomed Inform. 2003;36:4–22. doi: 10.1016/s1532-0464(03)00062-5.

- 10. Sintchenko V, Coiera E. Which clinical decisions benefit from automation? A task complexity approach. Int J Med Inform. 2003;70:309–16. doi: 10.1016/s1386-5056(03)00040-6.

- 11. Sintchenko V, Coiera EW, Iredell JR, Gilbert GL. Comparative impact of guidelines, clinical data and decision support on prescribing decisions: an interactive web experiment with simulated cases. J Am Med Inform Assoc. 2004;11:71–7. doi: 10.1197/jamia.M1166.

- 12. Margolis CZ, Sokol N, Susskind O, et al. Proposal for clinical algorithm standards. Med Dec Making. 1992;12:149–54.

- 13. Sitter H, Prunte H, Lorenz W. A new version of the program ALGO for clinical algorithms. In: Brender J, Christensen JP, Scherrer JR, McNair P, editors. Medical Informatics Europe 1996/ Studies in health technology and informatics. IOS Press; Amsterdam: 1996. pp. 654–7.

- 14. Wallace CS, Patrick JD. Coding decision trees. Machine Learn. 1993;11:7–22.

- 15. Singh N, Rogers P, Atwood CW, Wagener MM, Yu VL. Short-course empiric antibiotic therapy for patients with pulmonary infiltrates in the intensive care unit. A proposed solution for indiscriminate antibiotic prescription. Am J Resp Crit Care Med. 2000;162:505–11. doi: 10.1164/ajrccm.162.2.9909095.

- 16. Elting LS, Martin CG, Cantor SB, Rubinstein EB. Influence of data display formats on physician investigators’ decisions to stop clinical trials: prospective trial with repeated measures. Br Med J. 1999;318:1527–31. doi: 10.1136/bmj.318.7197.1527.