Abstract

Clinical research is vital to the translation of biomedical knowledge into standard clinical practice. Efforts are underway under the NIH Roadmap initiative to re-engineer the national research enterprise to sustain the rapid pace of innovation in the biomedical domain. As part of these efforts, we have embarked on an empirical evaluation of clinical research workflow in community practice settings. The reasons for this focus are three-fold. First, trial sponsors increasingly conduct clinical trials in community, rather than academic, settings. Second, understanding workflow is critical to developing re-engineering strategies. Third, the workflow associated with the conduct of clinical research in community practices has received virtually no attention in the scientific literature. In this paper, we describe a pilot study that used time-motion observations to determine the workflow of clinical research coordinators, the tools they use to conduct the constituent activities of those workflows, and the outcomes of those activities. The preliminary findings provide insight into clinical research workflow in community practice settings, knowledge that may significantly affect how information technology-based re-engineering can be deployed in such environments.

Introduction

Clinical research is a vital phase in the continuum from biomedical discovery to actual clinical practice.1,2 Since the first randomized controlled trial3, the number of clinical studies has increased exponentially, while the studies themselves have become more complex, involving multiple stakeholders and intricate processes. However, developments in the basic sciences, particularly genomics, have outpaced the capacity of the current clinical research infrastructure to move this knowledge into clinical practice. There is an urgent need to modernize the mostly paper-based clinical research enterprise. The lack of supporting computational systems has been identified as an important barrier that must be overcome to expand clinical research capacity.1 The National Institutes of Health (NIH) has embarked on the Roadmap initiative, one of whose goals is to increase clinical and translational research capacity in the United States.4 Such an increase in research capacity depends on significant re-engineering efforts involving a wide spectrum of organizations, including both academic medical centers and community practices. One aim of this re-engineering effort is to use information technology (IT) to increase efficiency, communication and collaboration among diverse and distributed research networks and patient communities. A critical step toward this aim is deploying IT in a manner consistent with, and complementary to, existing workflow.

Background

Information technology has greatly changed the way we work and collaborate. In healthcare, electronic medical records (EMRs) and computerized physician order entry (CPOE) have been recognized as essential to improving health outcomes, ensuring patient safety and decreasing costs.5,6 Similar technologies have also been proposed to accelerate the clinical research process.2 However, the healthcare industry has been slow to adopt IT. Several factors, including a lack of financial incentives, unifying data standards and adequate training, and in some cases a lack of user motivation, have led to frequent failures in the adoption and implementation of information systems. One oft-cited reason for poor IT adoption is the lack of a user-centered focus during system design. Such a disconnect between end users and developers results in systems that often do not integrate with the real-world workflow for which they are intended. Introducing a new system significantly affects workflow, and developers who are removed from the clinical setting often do not understand the consequences of such deployments. Kukafka et al. have defined a three-point framework for IT adoption that addresses this deficiency in the system design and implementation process by emphasizing that simply developing a system is not enough to ensure adoption.7 Under this premise, predisposing, enabling and reinforcing factors must be examined holistically to understand the entire ecosystem surrounding end users when considering an IT deployment. For example, even a well-designed system will not be adopted if there is inadequate user training (a reinforcing factor).

Against this background, and as part of the NIH Roadmap initiative, Columbia University’s InterTrial project (http://intertrial.columbia.edu) has taken a holistic, bottom-up and empirical approach to better understand the ‘what to’ and ‘how to’ questions essential to clinical research re-engineering. This approach is based on the premise that before imposing any intervention, including one involving IT, one must understand the dynamics of clinical research in community practice settings. Community practices are becoming increasingly important settings for clinical research because clinical trials are no longer conducted exclusively within academic medical centers.1 The primary purpose of InterTrial’s empirical approach is to answer some very basic questions, including:

How do research coordinators allocate their time during a typical workday?

What specific tasks/activities comprise the workflow associated with clinical research?

What tools are used to accomplish these tasks?

Our intention from the start has been to avoid the pitfall of presuming to know what the users of re-engineered solutions want, rather than hearing their concerns and observing them in their environment. Central to our enquiry has been the clinical research coordinator, a primary stakeholder in clinical research who bears the burden of performing day-to-day research tasks. Surprisingly, despite a voluminous literature on clinical trials, very few studies have explored the activities of clinical research coordinators. Rico-Villademoros et al.8 surveyed oncology trial coordinators to determine what activities they perform but did not provide information about the duration of those activities or the tools used to accomplish them. In this study, our goal was to create a workflow model for clinical research coordinators. The Workflow Management Coalition defines workflow as ‘the automation of a business process, in whole or part, during which documents, information or tasks are passed from one participant to another for action, according to a set of procedural rules’.9 For the purposes of this study, we have limited the definition of workflow to how coordinators spend their time, the tools they use to carry out clinical research-related tasks, and the outcomes of their actions. These determinations focus on measuring the work activities of one role-player in a complex, multi-stakeholder enterprise. To accomplish our objective, we conducted time-motion (TM) studies using PDA-based software. Although this paper reports our findings from the TM studies, we have supplemented the TM data with other qualitative investigations, including surveys, interviews and focus groups. This triangulation of methods and the combined analyses will eventually inform the re-engineering efforts of Columbia’s InterTrial project.

Methods

Time-motion Study

Numerous methods have been developed to study how people use their time, of which time-motion (TM) studies are often considered the gold standard.10,11 A typical TM study involves an investigator following a subject and recording the temporal aspects of the events (e.g. tasks) under evaluation. Such data were previously recorded with a stopwatch and paper, which limited the scope of these studies; TM software that runs on PDAs has greatly increased the resolution and speed of data recording. This study used such a software tool, running on PalmOS™ PDAs.10 TM studies have been used occasionally in healthcare research, focusing on providers in settings such as emergency departments or on providers using information systems.10,12 To our knowledge, this is the first study to use this technique to measure the workflow of clinical research coordinators in community practice settings.
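The core idea of PDA-based TM recording can be sketched as continuous timestamping: each time the observer selects a new event, the previous event ends. The Python sketch below assumes that model; the function name `to_intervals` and the input format are hypothetical and are not the interface of the tool in reference 10. It converts a sequence of timestamped event switches into durations.

```python
from typing import List, Tuple


def to_intervals(
    switches: List[Tuple[float, str]], end_time: float
) -> List[Tuple[str, float, float]]:
    """Turn timestamped event switches into (label, start, duration) tuples.

    Assumes continuous observation: each event lasts until the next switch,
    and the final event lasts until end_time (the end of the observation).
    """
    intervals = []
    for i, (start, label) in enumerate(switches):
        stop = switches[i + 1][0] if i + 1 < len(switches) else end_time
        if stop < start:
            raise ValueError("timestamps must be non-decreasing")
        intervals.append((label, start, stop - start))
    return intervals


# Hypothetical log: seconds since the start of one observation period.
log = [(0.0, "documentation"), (95.0, "recruitment"), (310.0, "documentation")]
print(to_intervals(log, end_time=400.0))
# [('documentation', 0.0, 95.0), ('recruitment', 95.0, 215.0), ('documentation', 310.0, 90.0)]
```

Recording only switch times keeps data entry to a single tap per event, which is one reason PDA-based tools can reach a resolution of seconds.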

Defining the Workflow Model and Vocabulary

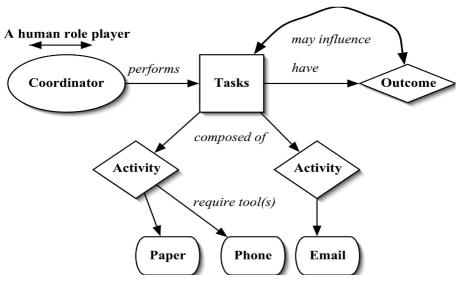

We conducted preliminary interviews and observations with research coordinators at four Columbia University Medical Center clinics to develop the workflow model and to define the TM observation criteria (e.g. observation units). From these interviews and observations, we defined a variety of tasks (Figure 1), each composed of concrete, observable activities or actions. These activities were in turn performed using a tool or a combination of tools. Each task had an observable or otherwise quantifiable outcome. To illustrate, a coordinator could be performing the task of documentation, in which the specific activity is filling out a case report form (CRF) using a paper form, leading to the outcome ‘completed and resolved’.

Figure 1.

Clinical Research Coordinator Workflow Model.

The granularity of activities can vary substantially. For example, filling out a case report form could be subdivided into even smaller acts, such as picking up the form, holding a pen and then writing. However, we chose not to subdivide activities further, because the marginal value of the additional information would be low compared with the added complexity of data capture and analysis. Our method for capturing workflow focuses on the individual and is similar to models used in comparable information systems research. For example, Marshak13 describes work as composed of action structures (sequences of actions or activities), actors, tools and information sources. Our model also parallels Norman’s14 seven-stage theory of action, which has been widely used to define human-computer interaction models. To distinguish between a task and an activity, we defined tasks as high-level descriptors of work that could be expressed as a noun phrase (e.g. documentation). Activities, in turn, were defined as smaller, atomic units of observable action that could be expressed as a verb phrase (e.g. filling a case report form). Identifying and naming tasks and activities was difficult and somewhat subjective because there is no well-accepted terminology for clinical trials workflow. In addition to the four descriptors of work (task, activity, tool, outcome), we added a fifth element, mode, to express the mode of any observed dialogue, for example, whether a coordinator was receiving or responding to an information request.
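As a concrete illustration, one coded observation in this five-element model could be represented as an immutable record. The Python sketch below is an assumption for illustration only: the class name `Observation`, the `start`/`end` fields and the timings are hypothetical, and `mode` is optional because it describes only observed dialogue.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Observation:
    """One coded observation in the five-element workflow model (hypothetical).

    start/end are seconds from the start of the observation period.
    mode is optional because it only applies to observed dialogue.
    """
    task: str        # high-level noun phrase, e.g. "documentation"
    activity: str    # observable action, e.g. "filling a case report form"
    tool: str        # e.g. "paper"
    outcome: str     # e.g. "completed and resolved"
    start: float
    end: float
    mode: Optional[str] = None  # e.g. "ask" or "respond" for a dialogue

    def __post_init__(self) -> None:
        if self.end < self.start:
            raise ValueError("an observation cannot end before it starts")

    @property
    def duration(self) -> float:
        return self.end - self.start


# The worked example from the text, with hypothetical timings.
obs = Observation(
    task="documentation",
    activity="filling a case report form",
    tool="paper",
    outcome="completed and resolved",
    start=120.0,
    end=185.0,
)
print(obs.duration)  # 65.0
```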

Once the workflow model was established, a vocabulary was required to describe observed components of that model. Vocabulary terms were drawn from a previous study8 and from the pre-study clinic observations and interviews described earlier. Vocabulary terms were organized into sets based upon the workflow elements they were intended to describe (Table 1).

Table 1.

Sets and Example Terms included in the Vocabulary used to Define Workflow

| Set Name | Example Terms |
|---|---|
| Tasks | Documentation, recruitment, patient care |
| Tools | Paper, artifacts, computers, email, phone, fax, printer |
| Activities | Identify eligible patients, filling a CRF, dispense medication |
| Outcomes | Completed and resolved, incomplete, new, old, spawn, interrupted |
| Mode | Ask, take, respond, give |

Data Collection

We observed three clinical research coordinators in three different community practices during this pilot study. The study protocol was approved by the Columbia University IRB and by the principal investigators at each site. The purpose of the study and the role of the PDA-based TM tool were explained to each coordinator to allay fears that the study might be used to evaluate their job performance. Observational data were entered directly into a PDA running the TM software.10 For data entry, the tool supported up to five mutually exclusive sets of descriptors for an observation, with the items in each set displayed as a drop-down list.10 These exclusion sets required that only one term from each set be used to document an observation. This data entry schema fit our workflow model well, since the model defined its entries as five nested exclusion sets (outcomes, tasks, activities, tools and mode).
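The exclusion-set rule (at most one term per set, each drawn from that set's drop-down list) can be sketched as a simple validation step. This Python sketch is illustrative only: `VOCABULARY` holds just the example terms from Table 1, and the function `validate` and its error messages are hypothetical rather than part of the TM software.

```python
from typing import Dict, List

# Example terms from Table 1 only; the study's full vocabulary was larger.
VOCABULARY: Dict[str, set] = {
    "task": {"documentation", "recruitment", "patient care"},
    "activity": {"identify eligible patients", "filling a CRF", "dispense medication"},
    "tool": {"paper", "artifacts", "computers", "email", "phone", "fax", "printer"},
    "outcome": {"completed and resolved", "incomplete", "new", "old", "spawn", "interrupted"},
    "mode": {"ask", "take", "respond", "give"},
}


def validate(entry: Dict[str, object]) -> List[str]:
    """Check one coded observation against the exclusion sets.

    Each set may contribute only one term, and that term must come from the
    set's drop-down list. Returns a list of problems (empty if valid).
    """
    problems = []
    for set_name, term in entry.items():
        if set_name not in VOCABULARY:
            problems.append(f"unknown set: {set_name}")
        elif not isinstance(term, str):
            problems.append(f"{set_name}: only one term allowed, got {term!r}")
        elif term not in VOCABULARY[set_name]:
            problems.append(f"{set_name}: {term!r} is not in the vocabulary")
    return problems


ok = {"task": "documentation", "activity": "filling a CRF",
      "tool": "paper", "outcome": "completed and resolved"}
two_tools = {"task": "documentation", "tool": ["paper", "phone"]}
print(validate(ok))         # []
print(validate(two_tools))  # ["tool: only one term allowed, got ['paper', 'phone']"]
```

Restricting each set to a single choice is what lets every observation be stored as one row with one value per column, which simplifies later aggregation.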

Data Analysis

After observation and data entry were complete, the raw data were processed using study-specific Perl scripts, which organized the data into a relational model. Each observation episode (the data from one site) and each observation period (one observation sequence within an episode), along with the corresponding tasks, activities and tools, was stored in a separate data structure. The scripts supported analysis of single observation periods, whole observation episodes, or combinations of these, and also contained analytical functions (for example, to rank a set of activities by frequency of occurrence). Statistical analysis was performed by exporting the output of the scripts into Microsoft Excel™.
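The kind of aggregation these scripts performed can be sketched as follows. The study used Perl; this Python version is only an illustration under assumed inputs, and the function names `summarize` and `rank` and the sample data are hypothetical. The example shows that ranking by total time and ranking by frequency can produce different orders, which is why the ranking criterion matters when reporting results.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def summarize(intervals: Iterable[Tuple[str, float]]) -> Dict[str, Tuple[float, int]]:
    """Map each label to (total seconds, number of occurrences)."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for label, seconds in intervals:
        totals[label] += seconds
        counts[label] += 1
    return {label: (totals[label], counts[label]) for label in totals}


def rank(summary: Dict[str, Tuple[float, int]], by: str = "time") -> List[str]:
    """Rank labels in descending order of total time or of frequency."""
    if by not in ("time", "frequency"):
        raise ValueError("by must be 'time' or 'frequency'")
    index = 0 if by == "time" else 1
    return sorted(summary, key=lambda label: summary[label][index], reverse=True)


# Hypothetical (activity, seconds) pairs from one observation period.
data = [("filling a CRF", 300.0), ("phone call", 20.0), ("filling a CRF", 150.0),
        ("phone call", 25.0), ("phone call", 30.0)]
summary = summarize(data)
print(summary)                     # {'filling a CRF': (450.0, 2), 'phone call': (75.0, 3)}
print(rank(summary))               # ['filling a CRF', 'phone call']
print(rank(summary, "frequency"))  # ['phone call', 'filling a CRF']
```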

Results

In total, approximately 20,000 seconds (about 5.5 hours) of observation data were recorded in the three observation episodes (one from each site). The sites chosen provided routine clinical care and also participated in several clinical trials. The data contained 105 tasks comprising 125 activities and 210 instances of tool use. Because an exhaustive description of the data analysis is beyond the scope of this paper, we summarize selected statistical results. Across the entire data set, the time spent on a task, an activity or a tool varied substantially, with some tasks or activities performed more frequently and for longer durations than others (Table 2).

Table 2.

Time Spent and Frequency Ranges

| Set | Time Range (s) | Frequency Range (occurrences) |
|---|---|---|
| Tasks | 25 – 4,787 | 1 – 22 |
| Tools | 4 – 4,638 | 1 – 48 |
| Activities | 23 – 3,093 | 2 – 20 |

Similarly, we identified the tasks, activities and tools that accounted for the most observation time, ranking them by total time spent on each element rather than by frequency. Overall, the three tasks that consumed the most time were documentation (24%), administrative work (20%) and recruitment (16%). Filling out a case report form and seeking trial-related information were the two most time-consuming activities (Table 3).

Table 3.

Five Topmost Activities by Time Spent

| Activity | Total Time Spent (s) | % of Total Observation |
|---|---|---|
| Filling Case Report Form | 2,375 | 12% |
| Seeking Trial Information | 2,082 | 11% |
| Computerized Data Entry | 2,031 | 10% |
| Seeking General Information | 1,976 | 10% |
| Finding Eligible Patients | 1,803 | 9% |

Paper-based tools (e.g. forms) were the most commonly used tools, accounting for 24% of the total observed time (Table 4). With regard to outcomes, only 42 of the 105 tasks (40%) were new (started voluntarily by the coordinator); the remaining 63 were spawned (15%), were continuations of old tasks (26%) or resulted from interruptions (18%). Only 47 tasks (45%) were completed and resolved (that is, successfully finished), 6 (5%) were completed but unresolved, and most of the rest were incomplete (33%).

Table 4.

Five Most Commonly Used Tools

| Tool | Total Time Spent (s) | % of Total Observation |
|---|---|---|
| Paper-based tools | 4,638 | 24% |
| Verbal Person Communication | 3,548 | 18% |
| Information System | 2,594 | 13% |
| Manual work | 2,366 | 12% |
| Phone | 1,408 | 7% |

Further analysis revealed several other notable results. First, activities were generally performed using a combination of tools, with the coordinator switching between them rapidly, at times every few seconds. For example, in one instance of scheduling a patient visit, the coordinator used a paper tool, verbal person communication (e.g. talking) and the phone. Second, the data indicate that coordinators work in an interrupt-driven environment: tasks are suspended and new ones started in response to interruptions. In total, 19 tasks (18%) were interrupted, usually because a colleague stopped the coordinator to discuss a matter or because of an incoming phone call. Third, for each activity we calculated the time spent per occurrence by dividing the total time spent on the activity by its frequency. By this measure, finding eligible patients took the longest, at 901 seconds (about 15 minutes) per occurrence.
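The per-occurrence measure is total time divided by frequency, and it can rank activities differently from total time alone. The Python sketch below uses hypothetical numbers, not the study's data, and the function name `longest_per_occurrence` is an assumption: here filling a CRF has the larger total, yet finding eligible patients has the larger time per occurrence.

```python
from typing import Dict, Tuple


def longest_per_occurrence(summary: Dict[str, Tuple[float, int]]) -> Tuple[str, float]:
    """Return the activity with the highest mean time per occurrence.

    summary maps activity -> (total seconds, number of occurrences);
    activities with zero occurrences are ignored.
    """
    means = {label: total / count
             for label, (total, count) in summary.items() if count > 0}
    if not means:
        raise ValueError("no activities with at least one occurrence")
    best = max(means, key=means.get)
    return best, means[best]


# Hypothetical totals: a frequent activity with a large total, a rare long one.
summary = {
    "filling a CRF": (1200.0, 6),             # 200 s per occurrence
    "finding eligible patients": (900.0, 2),  # 450 s per occurrence
    "answering the phone": (240.0, 8),        # 30 s per occurrence
}
print(longest_per_occurrence(summary))  # ('finding eligible patients', 450.0)
```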

Discussion

This study demonstrates that TM analysis provides a rich data set for understanding the work of clinical research coordinators. Activities such as filling out case report forms and finding eligible patients for a trial, which other studies have reported to be cumbersome and time-consuming, were also time-intensive in our observations. Our results also suggest that the burden of documentation, the most time-consuming task, stems largely from reliance on inherently inefficient paper-based processes, which also reduce efficiency in information retrieval tasks (e.g. finding trial information). Simplifying or digitizing these activities could potentially increase efficiency. Similarly, the use of multiple tools to accomplish a single activity is consistent with distributed cognition, a theory which emphasizes that human interaction with a computer system can depend heavily on other artifacts in the user’s environment.15 This finding could be important for system developers. For example, the data suggest that artifacts (e.g. sticky notes) serve as important external memory or visual aids during such activities. Any IT solution introduced to help coordinators perform their work would have to provide functions that replicate the usefulness of such artifacts. Also, the reliance on multiple tools and the frequency of interruptions suggest that IT system design must preserve the execution state of an activity, so that as coordinators move from one activity or tool to another, the system can return them to the state in which they left it.
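One way to support this requirement is to save an activity's state whenever the user switches away and restore it on return, keeping interrupted activities on a stack. The Python sketch below is a hypothetical design illustration, not a system built in this study; the class `ActivityWorkspace`, its methods and the state dictionaries are assumptions.

```python
from typing import Any, Dict, List, Optional


class ActivityWorkspace:
    """Hypothetical design sketch: remember where each activity was left so an
    interrupted activity can be resumed in its last state."""

    def __init__(self) -> None:
        self._saved: Dict[str, Dict[str, Any]] = {}  # last state per activity
        self._suspended: List[str] = []              # stack of interrupted activities
        self.current: Optional[str] = None

    def switch_to(self, activity: str,
                  state_of_current: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        """Suspend the current activity, saving its state, and open another.

        Returns the last saved state of the activity being opened ({} if new).
        """
        if self.current is not None:
            self._saved[self.current] = dict(state_of_current or {})
            self._suspended.append(self.current)
        if activity in self._suspended:
            self._suspended.remove(activity)  # it is now active, not suspended
        self.current = activity
        return dict(self._saved.get(activity, {}))

    def resume_previous(self,
                        state_of_current: Optional[Dict[str, Any]] = None
                        ) -> Optional[Dict[str, Any]]:
        """Leave the current activity and return to the most recently
        interrupted one, returning its saved state (None if nothing is waiting)."""
        if self.current is not None:
            self._saved[self.current] = dict(state_of_current or {})
        if not self._suspended:
            self.current = None
            return None
        self.current = self._suspended.pop()
        return dict(self._saved.get(self.current, {}))


ws = ActivityWorkspace()
ws.switch_to("filling a CRF")
# A phone call interrupts; the CRF state is saved on the way out.
ws.switch_to("answering the phone", {"form": "visit 3", "field": 12})
restored = ws.resume_previous()
print(ws.current, restored)  # filling a CRF {'form': 'visit 3', 'field': 12}
```

A stack matches the interrupt-driven pattern observed here: the most recent interruption is usually the first one the coordinator returns from.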

Our study also has limitations. First, our observation dataset is small, and given the variability of clinical trials, many additional observations will be needed before firm conclusions can be drawn. Second, because our work has focused on only one stakeholder (the coordinator) within the clinical research enterprise, the generalizability of our results is further limited. Third, we need to ensure that the work vocabulary used to code the observed events is sufficiently expressive, objective and interoperable with other similar terminologies.

Further time-motion studies may help us develop and test several hypotheses about workflow in clinical research as conducted in community practice settings. For example, it would be interesting to study whether activities differ between trials in different therapeutic areas, whether work patterns change with the time of day, and whether they differ across coordinators at different sites conducting the same trials. It may also be useful to explore audio-video recordings to gain a more detailed understanding of clinical trials workflow. Our future work will include refining the PDA tool used in this study by adding needed features, such as the ability to load different observation schemas (the definitions of tasks and activities used to encode observation data) and the ability to pause the elapsed time of an observed activity that has to be stopped because of an unrelated interruption. We also plan to develop a novel visualization tool to assist in synthesizing and evaluating the results of our ongoing TM studies in this and other similar domains. The tool will make it possible to load an observation data file, compress the observation timescale and then view the events as an animated display.
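The planned pause feature amounts to an elapsed-time counter that banks the time accumulated so far when paused and stops counting until resumed. The Python sketch below is a hypothetical illustration, not the PDA tool's implementation; the class `PausableTimer` is an assumption, and timestamps are passed in explicitly so the behavior is deterministic.

```python
from typing import Optional


class PausableTimer:
    """Hypothetical sketch: elapsed time for one observed activity that can be
    paused during an unrelated interruption. Timestamps (seconds) are passed
    in explicitly, which keeps the sketch deterministic."""

    def __init__(self, start: float) -> None:
        self._accumulated = 0.0                       # time banked before the last pause
        self._running_since: Optional[float] = start  # None while paused

    def pause(self, now: float) -> None:
        if self._running_since is not None:
            self._accumulated += now - self._running_since
            self._running_since = None

    def resume(self, now: float) -> None:
        if self._running_since is None:
            self._running_since = now

    def elapsed(self, now: float) -> float:
        running = 0.0 if self._running_since is None else now - self._running_since
        return self._accumulated + running


timer = PausableTimer(start=0.0)
timer.pause(now=120.0)   # unrelated interruption begins
timer.resume(now=300.0)  # observation of the activity resumes
print(timer.elapsed(now=360.0))  # 180.0
```

The 180 seconds of the interruption are excluded, so the activity is credited with only the 120 s before and the 60 s after it.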

Conclusion

Clinical research is a vital enterprise that needs fundamental re-engineering to ensure its future sustainability. Using several techniques, one of which we have described in detail here, we are trying to understand the nature of workflow associated with clinical research in community practices in a bottom-up and empirical manner. More research is required before a detailed workflow model and the determinants of IT adoption can be elicited. However, our initial results are encouraging and, we believe, illustrate the types of knowledge necessary to ensure the successful deployment of information technology in community-based clinical research.

Acknowledgements

This work was supported by NHLBI contract HHSN268200455208C, for which Stephen Johnson is the principal investigator. The authors would also like to thank the community practices, and especially the coordinators, for contributing their time to this study.

References

1. Sung NS, Crowley WF Jr, Genel M, et al. Central challenges facing the national clinical research enterprise. JAMA. 2003;289:1278–87. doi: 10.1001/jama.289.10.1278.

2. Payne PR, Johnson SB, Starren JB, Tilson HH, Dowdy D. Breaking the translational barriers: the value of integrating biomedical informatics and translational research. J Investig Med. 2005 May;53(4):192–200. doi: 10.2310/6650.2005.00402.

3. Ferguson RG, Simes AB. BCG vaccination of Indian infants in Saskatchewan. Tubercle. 1949;30:5–11. doi: 10.1016/s0041-3879(49)80055-9.

4. Zerhouni E. The NIH roadmap. Science. 2003 Oct 3;302(5642):63–72. doi: 10.1126/science.1091867.

5. Institute of Medicine. The computer-based patient record: an essential technology for health care. Revised edition. 1997.

6. Sittig DF, Stead WW. Computer-based physician order entry: the state of the art. J Am Med Inform Assoc. 1994;1(2):108–23. doi: 10.1136/jamia.1994.95236142.

7. Kukafka R, Johnson SB, Linfante A, Allegrante JP. Grounding a new information technology implementation framework in behavioral science: a systematic analysis of the literature on IT use. J Biomed Inform. 2003;36(3):218–27. doi: 10.1016/j.jbi.2003.09.002.

8. Rico-Villademoros F, Hernando T, Sanz JL, et al. The role of the clinical research coordinator--data manager--in oncology clinical trials. BMC Med Res Methodol. 2004 Mar 25;4:6. doi: 10.1186/1471-2288-4-6.

9. Workflow Management Coalition. The Workflow Management Coalition terminology and glossary. http://www.wfmc.org/standards/docs/TC-1011_term_glossary_v3.pdf.

10. Starren J, Chan S, Tahil F, White T. When seconds are counted: tools for mobile, high-resolution time-motion studies. Proc AMIA Symp. 2000:833–7.

11. Finkler SA, Knickman JR, Hendrickson G, Lipkin M Jr, Thompson WG. A comparison of work-sampling and time-and-motion techniques for studies in health services research. Health Serv Res. 1993;28(5):577–97.

12. Asaro PV. Synchronized time-motion study in the emergency department using a handheld computer application. Medinfo. 2004;11(Pt 1):701–5.

13. Marshak RT. Workflow: applying automation to group processes. In: Coleman D, editor. Groupware: collaborative strategies for corporate LANs and intranets. Prentice Hall PTR; 1997. pp. 143–81.

14. Norman DA. Cognitive engineering. In: Norman DA, Draper SW, editors. User centered system design: new perspectives on human-computer interaction. Hillsdale, NJ; 1986. pp. 31–61.

15. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer-assisted clinical ordering. J Biomed Inform. 2003 Feb–Apr;36(1–2):4–22. doi: 10.1016/s1532-0464(03)00062-5.