Abstract

Background

The costs and limitations of clinical encounter documentation using dictation/transcription have provided impetus for increased use of computerized structured data entry to enforce standardization and improve quality. The purpose of the present study is to compare exam report quality of Veterans Affairs (VA) disability exams documented by computerized protocol-guided templates with exams documented in the usual fashion (dictation).

Methods

Exam report quality for 17,490 VA compensation and pension (C&P) disability exams reviewed in 2005 was compared between exam reports completed by template and exam reports completed in routine fashion (dictation). An additional set of 2,903 exams reviewed for quality in the last three months of 2004 was used for baseline comparison.

Results

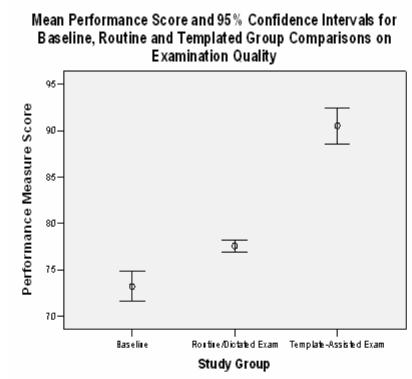

Mean template quality scores of 91 (95% CI 89, 92) showed significant improvement over routine exams conducted during the study period, 78 (95% CI 77, 78), and at baseline, 73 (95% CI 72, 75). The quality difference among examination types is presented.

Discussion

The results of the present study suggest that use of the standardized, guided documentation templates in VA disability exams produces significant improvement in quality compared with routinely completed exams (dictation). The templates demonstrate the opportunity and capacity for informatics tools to enhance delivery of care when operating in a health system with a sophisticated electronic medical record.

INTRODUCTION

Healthcare providers documenting clinical encounters have routinely relied on handwriting or dictation with transcription, despite widespread recognition of the limitations of these techniques.(1) Documentation by dictation is uncontrolled and unstructured, making re-use of the data difficult and leaving open the potential of reduced quality due to incompleteness or inaccuracy.(2, 3) To overcome these and other limitations, clinicians increasingly are using computerized structured data entry systems that are designed to enhance the process of clinical documentation. Computerized systems typically employ a graphical user interface (GUI) to facilitate flexible data input and to structure data gathering. The structured nature of these systems enforces standardization and may assure documentation completeness, which in turn may improve quality.

Structured input and reporting in clinical documentation is a core competency in the field of informatics. System exemplars include Stead’s TMR,(4) Barnett’s COSTAR,(5) Weed’s PROMIS,(6) Johnson’s Clictate,(7) Shortliffe’s ONCOCIN,(8) and Musen’s T-HELPER,(9) among others. The VA’s Computerized Patient Record System (CPRS) offers structured documentation in note templates and in reminder and ordering dialogs.(10) Yet, despite these groundbreaking efforts, there remain significant challenges to large-scale adoption of computer-assisted clinical documentation tools.(11)

The Veterans Health Administration (VHA) has been recognized for its successful use of information technology for healthcare and the resultant improvements in quality, patient safety, and cost reduction.(12) As part of its continuing quality improvement efforts, the VA recently developed and deployed within the Veterans Health Information Systems and Technology Architecture (VistA) system a computerized GUI protocol-based template system called the Compensation and Pension Record Interchange (CAPRI) Compensation and Pension Worksheet Module (CPWM) for VA disability exams. Through collaboration between the VA Compensation and Pension Examination Program (CPEP) and the VHA Office of Information, the CAPRI CPWM system of templates was designed to implement all VA disability protocols in an electronic point-and-click format.

The CAPRI CPWM has been implemented at all VA medical centers and in 128 VistA systems over the last 18 months. In most instances the tool has been offered to clinicians for voluntary use. Concurrently, CPEP has been monitoring disability exams for national quality improvement purposes.(13) The purpose of the present study is to compare quality of exam reports generated by computerized CAPRI CPWM templates with reports created by dictation.

METHODS

Study Design

The study design was a naturalistic, observational study of template use in a real-world application.

Exam Sampling

All 17,490 C&P disability exams randomly selected for CPEP quality review between January and December 2005 were included in the present study. These exams were separated into two groups: exam reports completed by template and exam reports completed in routine fashion (dictation). For comparison, all 2,903 CPEP quality-reviewed exams for the preceding three-month period were selected as a baseline.

Examination Quality Measurement

CPEP review utilizes quality indicators to measure exam report quality for the ten most frequently requested C&P exam types. Quality indicators were constructed, validated, and vetted in a psychometrically rigorous fashion by two expert panels. An example quality indicator from a Joint exam is “Does the report provide the active range of motion in degrees?”

Exam Quality Scoring

Each C&P examination was assigned to two reviewers who independently completed quality assessments online using established quality indicators. If the scoring on the paired assessments revealed a disagreement, the examination was assigned to a third reviewer for an independent, tiebreaking review for each disagreement.
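The paired-review procedure with a tiebreaking third reviewer can be sketched in a few lines of Python. This is only an illustration: the function names, the indicator names, and the rule that an exam score is the percentage of indicators met are assumptions made for the example, not details reported by CPEP.

```python
from typing import Dict, Optional


def adjudicate(first: bool, second: bool, tiebreak: Optional[bool] = None) -> bool:
    """Return the final answer for one quality indicator.

    If the two independent reviewers agree, their answer stands.
    If they disagree, the third reviewer's answer decides.
    """
    if first == second:
        return first
    if tiebreak is None:
        raise ValueError("reviewers disagree; a third review is required")
    return tiebreak


def exam_score(
    review_a: Dict[str, bool],
    review_b: Dict[str, bool],
    review_c: Optional[Dict[str, bool]] = None,
) -> float:
    """Percentage of indicators met after adjudication (assumed scoring rule)."""
    review_c = review_c or {}
    final = {
        name: adjudicate(review_a[name], review_b[name], review_c.get(name))
        for name in review_a
    }
    return 100.0 * sum(final.values()) / len(final)


# Hypothetical indicators for a Joints exam; only the disputed one needs a third review.
reviewer_a = {"rom_in_degrees": True, "pain_on_motion": True,
              "flare_ups": False, "repetitive_use": True}
reviewer_b = {"rom_in_degrees": True, "pain_on_motion": False,
              "flare_ups": False, "repetitive_use": True}
reviewer_c = {"pain_on_motion": True}

score = exam_score(reviewer_a, reviewer_b, reviewer_c)
print(score)  # 75.0
```

Only the indicators on which the first two reviewers disagree are passed to the third reviewer, which mirrors the per-disagreement tiebreak described above.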

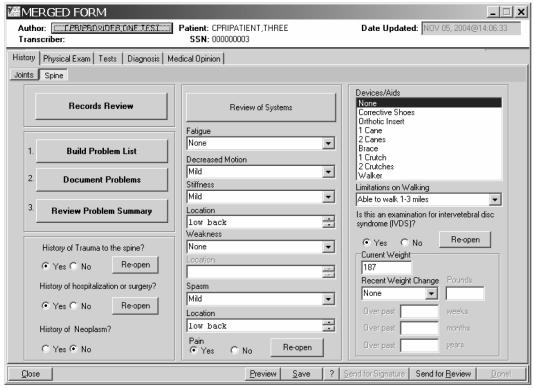

CAPRI CPWM Templates

Clinicians at each VA medical center were provided access to the CAPRI CPWM template system. Templates could be for a single exam, or for multiple exams as a merged template that combines the requirements of all selected templates into one. Figure 1 shows a merged template for an examination combining both the Joints and Spine exams. If two or more templates have been merged, answers to questions that exist on more than one of the merged templates will carry across to the other templates.

Figure 1.

Example of a merged CAPRI CPWM Template combining Joints and Spine Exams
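The merging behavior described in this section, where a question shared by several templates is asked once and its answer carries across, can be illustrated with a minimal Python sketch. The data structures, function names, and question identifiers below are hypothetical and do not reflect the actual CAPRI CPWM implementation.

```python
from typing import Dict, List


def merge_templates(templates: Dict[str, List[str]]) -> List[str]:
    """Combine the questions of all selected templates into one list.

    Questions that appear on more than one template are kept only once,
    in the order they are first encountered.
    """
    merged: List[str] = []
    for questions in templates.values():
        for question in questions:
            if question not in merged:
                merged.append(question)
    return merged


def carry_across(
    templates: Dict[str, List[str]], answers: Dict[str, str]
) -> Dict[str, Dict[str, str]]:
    """Copy each answer into every template that contains its question."""
    return {
        name: {q: answers[q] for q in questions if q in answers}
        for name, questions in templates.items()
    }


# Hypothetical question identifiers for two exam templates.
templates = {
    "Joints": ["history_of_flare_ups", "knee_active_rom_degrees"],
    "Spine": ["history_of_flare_ups", "lumbar_active_rom_degrees"],
}

merged = merge_templates(templates)
print(len(merged))  # 3

answers = {
    "history_of_flare_ups": "weekly",
    "knee_active_rom_degrees": "0-110",
    "lumbar_active_rom_degrees": "0-70",
}
reports = carry_across(templates, answers)
print(reports["Spine"]["history_of_flare_ups"])  # weekly
```

The shared question is answered once in the merged form, yet it appears in both the Joints and the Spine reports.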

RESULTS

Frequency of Template Use

Of the 17,490 CPEP reviewed exams randomly selected from 802,476 total VA disability exams done in 2005, 875 (5%) exams were completed by template. These 875 templated exams were conducted at 80 different VA medical center and VA clinic sites, yielding a mean of 10.8 (SD 13.3) template exams reviewed per site.

Quality Scores of Template vs. Routine Exams

The mean quality score for routine exams conducted at baseline was 73 (95% CI 72, 75), as shown in Figure 2. Quality scores for routine exams conducted during the study period showed a significant (p < .05) improvement to 78 (95% CI 77, 78). Mean template quality scores of 91 (95% CI 89, 92) were significantly higher than quality scores for dictated exam reports conducted either at baseline or during the study period.

Figure 2.

Secular Trend and Computerized Template Improvement

Template vs. Routine Exam Quality Change Over Time

A comparison of the exam quality scores for C&P template exams vs. routine exams over the 12-month period of 2005 using two-way analysis of variance (ANOVA) revealed a significant main effect for template use (F(1,23) = 59.5, p<.01), with no main effect for time (F(11,23) = 1.15, p=.32) and no template × time interaction (F(11,23) = 1.2, p=.31). Thus, the greatest difference in quality was between the template and routine exams rather than across months, indicating a major enhancement in performance when the exams are template-assisted.

Template vs. Routine Exam Quality by Exam Type

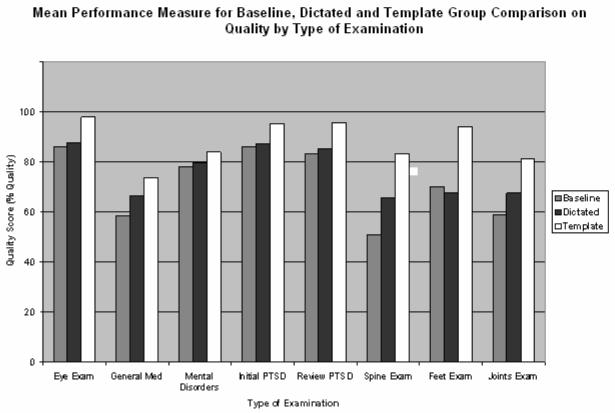

To determine whether quality scores of template vs. routine exams differ by type of exam, a series of t-test comparisons was conducted. As may be seen in Figure 3, quality scores of template exams were significantly higher than those of routine exams on 5 of the 10 exam types: Eye Exam (98 vs 88, p<.01), Review PTSD (96 vs 85, p<.02), Spine Exam (83 vs 65, p<.01), Feet Exam (94 vs 68, p<.01), and Joints Exam (81 vs 68, p<.01). Skin exams had too few template exams (n = 2) to conduct an adequate statistical test, and Audio Exam (98 vs 99, p=.11) showed a ceiling effect. Of the remaining 3 exam types, Initial PTSD (95 vs 87, p<.07) showed a non-significant trend, and General Medical Exam (74 vs 67, p=.16) and Mental Disorder Exam (84 vs 80, p=.33) were not statistically different.

Figure 3.

Secondary Analysis on Examination Type
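As a quick arithmetic check, the template-versus-routine gaps for the five exam types with significant differences can be recomputed from the mean scores reported above. The short Python sketch below uses only those reported means; the variable names are illustrative.

```python
# Reported mean quality scores: (template, routine) for the five
# exam types with statistically significant differences.
reported = {
    "Eye": (98, 88),
    "Review PTSD": (96, 85),
    "Spine": (83, 65),
    "Feet": (94, 68),
    "Joints": (81, 68),
}

# Difference in score points, template minus routine.
differences = {exam: template - routine
               for exam, (template, routine) in reported.items()}

smallest = min(differences, key=differences.get)
largest = max(differences, key=differences.get)

print(differences)
# {'Eye': 10, 'Review PTSD': 11, 'Spine': 18, 'Feet': 26, 'Joints': 13}
print(smallest, differences[smallest])  # Eye 10
print(largest, differences[largest])    # Feet 26
```

The gaps range from 10 points (Eye Exam) to 26 points (Feet Exam).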

DISCUSSION

The results of the present study suggest that use of the CAPRI CPWM standardized, guided documentation templates in compensation and pension exams produces significant improvement in quality compared with routinely completed exams. While a secular trend in quality improvement is apparent between the baseline and study period for routine exams, the leap in quality was dramatic among exams conducted using templates. A further analysis showed that the template performance enhancement was driven by five of the ten exam types. Among the remaining five exam types, one had insufficient data for analysis, one showed a ceiling effect, one showed a non-significant trend, and two did not differ significantly. Among the five exam types with significant differences, template quality scores exceeded routine quality scores by a minimum of 10 points (Eye Exam) and a maximum of 26 points (Feet Exam). These findings indicate that the use of documentation templates improves exam report quality across a range of examination types.

The present study used a convenience sample of reports from clinicians who voluntarily adopted or did not adopt the use of the templates. As such, this was a naturalistic study of template use in a real world application. A study limitation is the potential for selection bias where early adopters of a new technology may have characteristics that contribute to superior quality exams independent of their use of templates. A follow-up study that uses randomized assignment would be important to minimize these potential sources of variation and to further validate the potential beneficial effect of template use on exam report quality found in the present study. This study addresses only quality of resulting reports. Further evaluation is needed to assess other potential benefits and costs of using templates instead of dictation. Of prime importance is to compare data entry costs between dictation and template use.

Several possible reasons may explain the superiority of template-guided examinations to routinely completed exams. The template provides real-time assistance and guidance in the conduct of an examination. This may help assure that the exam covers all relevant information. Template-guided exams help assure that all relevant data gathered during an exam are contained in the exam report, and not inadvertently omitted in dictation. C&P exams are quasi-legal examinations. Templates provide data entry screens and offer prompts to collect information at the level of detail required to meet legal guidelines, which an examiner may not have fully recognized or may have underestimated. For example, in musculoskeletal exams a court case, DeLuca v. Brown (14), required that functional limitations be portrayed in terms of the degree of additional range of motion lost due to pain on repetitive use or during flare-ups. Template guidance directs the examination to cover both a detailed assessment of range of motion and any relevant history of limitations on range of motion on repetitive use or during flare-ups. The template assures coverage of the correct level of examination detail as mandated under established rules and law.

The potential broader implications of computerized templates include the ability to provide structured documentation that is customized “on-the-fly”, accounting for both individual exam responses and overall clinical exam requirements. Templates demonstrate the opportunity and capacity for informatics tools to enhance delivery of care when operating in a health system with a sophisticated electronic medical record.

References

- 1. Lawler FH, Scheid DC, Viviani NJ. The cost of medical dictation transcription at an academic family practice center. Arch Fam Med. 1998;7(3):269–72. doi: 10.1001/archfami.7.3.269.

- 2. Logan JR, Gorman PN, Middleton B. Measuring the quality of medical records: a method for comparing completeness and correctness of clinical encounter data. Proc AMIA Symp. 2001:408–12.

- 3. Rose EA, Deshikachar AM, Schwartz KL, Severson RK. Use of a template to improve documentation and coding. Fam Med. 2001;33(7):516–21.

- 4. Stead WW, Heyman A, Thompson HK, Hammond WE. Computer-assisted interview of patients with functional headache. Arch Intern Med. 1972;129(6):950–5.

- 5. Barnett GO, Winickoff R, Dorsey JL, Morgan MM, Lurie RS. Quality assurance through automated monitoring and concurrent feedback using a computer-based medical information system. Med Care. 1978;16(11):962–70. doi: 10.1097/00005650-197811000-00007.

- 6. Weed LL. Medical records that guide and teach. N Engl J Med. 1968;278(12):652–7 concl. doi: 10.1056/NEJM196803212781204.

- 7. Johnson KB, Cowan J. Clictate: a computer-based documentation tool for guideline-based care. J Med Syst. 2002;26(1):47–60. doi: 10.1023/a:1013042920905.

- 8. Shortliffe EH. Update on ONCOCIN: a chemotherapy advisor for clinical oncology. Med Inform (Lond). 1986;11(1):19–21. doi: 10.3109/14639238608994970.

- 9. Musen MA, Carlson RW, Fagan LM, Deresinski SC, Shortliffe EH. T-HELPER: automated support for community-based clinical research. Proc Annu Symp Comput Appl Med Care. 1992:719–23.

- 10. Payne TH, Hoey PJ, Nichol P, Lovis C. Preparation and use of preconstructed orders, order sets, and order menus in a computerized provider order entry system. J Am Med Inform Assoc. 2003;10(4):322–9. doi: 10.1197/jamia.M1090.

- 11. McDonald CJ. The barriers to electronic medical record systems and how to overcome them. J Am Med Inform Assoc. 1997;4(3):213–21. doi: 10.1136/jamia.1997.0040213.

- 12. Brown SH, Lincoln MJ, Groen PJ, Kolodner RM. VistA--U.S. Department of Veterans Affairs national-scale HIS. Int J Med Inform. 2003;69(2–3):135–56. doi: 10.1016/s1386-5056(02)00131-4.

- 13. Weeks WB, Mills PD, Waldron J, Brown SH, Speroff T, Coulson LR. A model for improving the quality and timeliness of compensation and pension examinations in VA facilities. J Healthc Manag. 2003;48(4):252–61; discussion 262.

- 14. DeLuca v. Brown, 8 Vet. App. 202, 206 (1995).