Abstract

Objective

To describe the use of student self-assessments as a measure of the effectiveness of a drug information advanced pharmacy practice experience (APPE) and to determine whether other APPEs reinforced information-related skills.

Design

Students taking a drug information APPE completed a self-assessment survey instrument focusing on key information-related skills on the first day and again on the last day of that APPE. Findings were used to determine the effect of this and other APPEs on perceived information skills. Student ratings were compared with faculty ratings for items with similar wording.

Assessment

Student self-ratings improved after completing the drug information APPE. Other APPEs, gender, and course grade did not significantly impact student perceptions of their information-related knowledge and skills. Student and faculty ratings were similar, although individual variability occurred.

Conclusion

Student self-assessments, along with other direct and indirect data, can provide useful information needed to assess and change aspects of the experiential program and curriculum.

Keywords: self-assessment, assessment, advanced pharmacy practice experience, drug information

INTRODUCTION

The Accreditation Council for Pharmacy Education's new Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree, “Standards 2007,” clearly states the necessity of assessing and evaluating student learning and curricular effectiveness and using the analyses for continuous curricular improvement.1 Key aspects of their Standard No. 15 (Assessment and Evaluation of Student Learning and Curricular Effectiveness) include the need to use a variety of assessments, follow a plan, demonstrate and document competencies, incorporate formative and summative assessments, promote learning and skills beyond memorization, include self-assessments, and promote consistency and reliability of assessments.1 To accomplish these tasks, appropriate student learning outcome statements must first be developed,2 followed by a systematic plan for assessing these outcomes. This plan should address who (faculty members, students, other stakeholders) will be involved in the gathering, analysis, evaluation, reporting, and use of assessment data; what data will be obtained by what formats/methods and for which outcomes; when and where in the curriculum the various parts of the assessment plan and specific assessment methods will be implemented; and how the various aspects of the plan, including the use of results and implementation of curricular changes to correct problems identified, will be undertaken.3 Although this latter step is critical, it can be difficult to accomplish. Important considerations when implementing curricular changes include: why certain students are more successful than others in achieving desired learning outcomes; what criteria for success should be used (eg, benchmarks); how, when and where changes should be made; and the extent to which each potentially diverse advanced practice experience contributes to achievement of a specific outcome.

To minimize the resource requirements associated with assessment plans, course-embedded assessment can be useful. Course-embedded assessment is the use of existing exercises or assignments from within courses for program-learning outcomes assessment data.4 This would include the use of data obtained from APPE activities. However, the data from such embedded assessments must be gathered in a format that allows the desired aspects of specific program outcomes to be assessed.4

Direct measures (eg, examinations, essays, faculty observation and rating of student performance) are important for assessing actual student learning. In addition, indirect measures of student learning, ie, perceptions or opinions about the types and extent of learning that has occurred, can be valuable assessment tools. Indirect measures can add multiple perspectives and insight into student learning and the assessment process (ie, completeness) and can help explain or determine the extent of convergence (ie, agreement) among the direct measures.3,5 Indirect measures of student learning include surveys, student reflections, alumni feedback, and student self-assessments. Guideline 11.1 in Standards 2007 states that “…students should be encouraged to assume and assisted in assuming responsibility for their own learning (including assessment of their learning needs; development of personal learning plans, and self-assessment of their acquisition of knowledge, skills, attitudes, and values and their achievement of desired competencies and outcomes).”1 Measurement of student self-efficacy, defined as the personal confidence of students in their ability to perform specified tasks and achieve an outcome,6,7 has potential benefit for assessing pharmacy curricular changes and innovations, new courses, and specific APPEs.6

Using self-assessment data to measure students' skills or initiate curricular changes must be tempered by a potential lack of validity, defined as the agreement between student self-assessments and performance based upon observed or objective measurements. Weak, variable, or nonsignificant correlations between student self-assessment or self-efficacy determinations and their performance on examinations, clerkships, or an objective structured clinical examination (OSCE) have been reported.7-13 These correlations did not necessarily improve as students advanced in their program.8,9 With conventional education, training, and feedback, excellent students might still rank their performance less highly and poor students might persist in overestimating their performance. Studies of medical students found that individuals in the lower 25% to 33% of their classes were more likely to overestimate their grades or rotation-related knowledge and skills than students in the top one fourth to one third of their classes.13,14 Possible reasons for these findings include self-ratings based on potential ability and not actuality, “defense mechanisms” of lower performing students, the existence of already established internal assessments that are difficult to change, the application of more stringent standards by higher performing students, or regression to the mean.13,15 Despite varying correlations between self-assessments and other evaluation methods, self-assessments can be used as a tool to help identify curricular areas to enhance, particularly when combined with other data.

The advanced pharmacy practice experience (APPE) at the West Virginia University (WVU) School of Pharmacy currently consists of 11 four-week blocks. The West Virginia Center for Drug and Health Information (WVCDHI), a statewide center based at the School, provides an elective APPE and typically precepts 2 fourth-professional year students during each of the 11 blocks. Drug information is not an APPE requirement at WVU. Although a few additional sites also offer a drug information experience, about two thirds of students do not receive formal drug information practice exposure. In the didactic curriculum, students learn about tertiary references and MEDLINE searching primarily during their first-professional year, with reinforcement of these skills during their second- and third-professional years through assignments that require literature use. Students learn critical literature evaluation skills during a required 2-credit hour course in the second-professional year. However, questions arose as to whether sufficient practice opportunities are provided during APPEs in the fourth-professional year to allow all students to become proficient at using appropriate information resources, evaluating literature, and formulating complete, accurate responses to questions.

The WVCDHI preceptors use an outcomes-based form to assess experiential student performance. While this provides a direct measure of student knowledge and skills, student self-assessments could provide greater insight into ways to enhance the APPE. Perceived drug information abilities during the initial 4 months and the final 4 months of the APPE, ie, early or late in the APPE, could also be compared to help determine the effect of other practice experiences on drug information-related skills. This manuscript describes the use of student self-assessments as a measure of an advanced drug information experience's effectiveness and to help determine whether other APPEs reinforced the development of information-related skills.

DESIGN

Appropriate exemption was obtained from the Institutional Review Board (IRB) for use of the data in this report. The drug information APPE is designed to emphasize skills needed to be a thorough, efficient, and effective provider of drug information, regardless of the area in which the student ultimately intends to practice. During this APPE, the student gains hands-on experience using a wide variety of tertiary and secondary information resources, including the Internet, IPA and MEDLINE among others. The drug information APPE also focuses on the knowledge and skills needed to critically evaluate primary literature. At the start of the APPE, students are given a test covering statistics and their application. Incorrect answers must be reviewed and corrected by the students until a perfect score is obtained. The experiential student is an integral part of the WVCDHI and answers inquiries received from health care providers throughout the state. The WVCDHI staff works closely with the students as they respond to questions, and a staff member reviews and signs off on each completed response before the student provides the information to the requestor. Other student activities include providing 2 journal club presentations to the WVCDHI staff, working with the service-related projects of the WVCDHI, and writing a drug monograph and journal article abstracts.

The items for the self-assessment tool were developed from the drug information APPE objectives, the learning outcomes on the student evaluation form used by the preceptors, and the skills that students have traditionally had more difficulty mastering (eg, critically evaluating published studies, searching MEDLINE, appropriately using Internet information resources). The items were prepared and reviewed by the Director and Assistant Director of the WVCDHI for face validity. Minor revisions to improve readability were made after the survey instrument was pilot-tested, resulting in the final Drug Information Skills Self-Assessment Survey instrument (available from authors).

The self-assessment survey instrument has been in use since May 2003. All students on the WVCDHI APPE complete the survey instrument on the first day. Responses are reviewed and the faculty preceptors keep the completed survey instruments. In addition to rating their abilities using a Likert-type scale (5 = excellent, 4 = very good, 3 = good, 2 = fair, 1 = poor), students are asked to indicate the 2 most important things they want to learn from the APPE and the 2 things they are most afraid of during the APPE; this information is used by the preceptors to address student concerns and optimize individual learning experiences. The survey instrument is administered again on the last day of the drug information APPE, with an additional question asking students to indicate the 2 most important things they learned from the APPE. The preceptors did not review the final survey instrument prior to preparing their performance evaluation of the student. The student received 1 evaluation, jointly completed by the Assistant Director and Director of the WVCDHI. The only significant change that occurred in the WVCDHI APPE with the potential to affect a few of the student self-ratings (items 12 and 13) was the addition of an assignment designed to teach students how to locate and evaluate patient-oriented information on the Internet, ie, Patient Internet Assignment (PIA). The PIA was added in January 2005 because an Internet evaluation assignment had been removed from the Medical Literature Evaluation course due to time constraints. In addition, students had indicated on the self-assessment survey instrument a perceived weakness in their ability to evaluate Internet information. For the PIA, students are asked to locate 5 different web sites each for 2 medical conditions and to evaluate the quality of that information using a specially designed checklist.

The self-assessment survey items were organized into 5 related skill areas: communications, resource use, critical evaluation, questions/answers, and other. Wilcoxon signed-rank tests were used to compare the students' initial and end ratings for each survey item. To determine whether other APPEs influenced perceived drug information skills, the first day mean scores obtained during the first third of the fourth-professional year APPEs (blocks 1 through 4) were compared to the first day mean scores obtained during the final third of the fourth-professional year APPEs (blocks 8 through 11) by the Mann-Whitney U test. The effect of gender on the self-ratings was determined using the Mann-Whitney U test. Critical literature evaluation skills as well as Micromedex and International Pharmaceutical Abstracts database searching skills are primarily learned in the required Medical Literature Evaluation course during the second-professional year. The Mann-Whitney U test was also used to determine whether receipt of an “A” grade vs. a “B” or lower grade in the required Medical Literature Evaluation course affected student self-ratings. To determine whether addition of the PIA affected self-rating means for knowledge of medical Internet sites and critically assessing this information, the Mann-Whitney U test was used to compare the initial and final self-ratings for these items before and after use of the PIA. Five items on the self-assessment survey had very similar wording to outcome statements used by the drug information faculty to evaluate the students. The student means for these items were compared with the faculty mean ratings using the Wilcoxon signed-rank test. The percentages of faculty ratings that were the same, higher, or lower than the student ratings were also determined.
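The paired first-day versus last-day comparison described above can be illustrated with a minimal sketch of a two-sided Wilcoxon signed-rank test. This is not the authors' analysis code, and the software they used is not stated. The sketch assumes a normal approximation with a tie correction, which can differ from exact P values in small samples. The function names and the ratings shown are hypothetical.

```python
from collections import Counter
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start+1..end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def wilcoxon_signed_rank(first_day, last_day):
    """Two-sided Wilcoxon signed-rank test (normal approximation, tie-corrected)."""
    # Pairs with no change carry no sign information and are dropped.
    diffs = [after - before for before, after in zip(first_day, last_day) if after != before]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    magnitudes = [abs(d) for d in diffs]
    ranks = average_ranks(magnitudes)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)  # rank sum of increases
    mean_w = n * (n + 1) / 4
    tie_term = sum(t ** 3 - t for t in Counter(magnitudes).values())
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / sqrt(var_w)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, z, p_value


# Hypothetical 5-point ratings for one survey item (not data from the study).
first_day = [2, 2, 3, 1, 2, 3, 2, 2]
last_day = [4, 3, 4, 3, 4, 4, 3, 4]
w_plus, z, p_value = wilcoxon_signed_rank(first_day, last_day)
print(f"W+ = {w_plus}, z = {z:.2f}, two-sided P = {p_value:.4f}")
```

A paired test fits this comparison because each student supplies both a first-day and a last-day rating for the same item.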

RESULTS

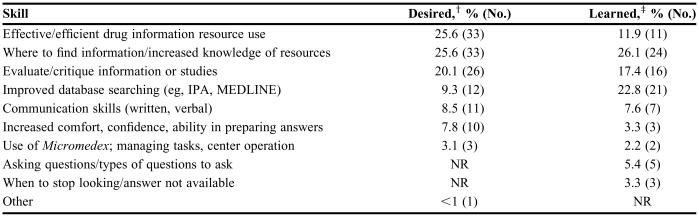

Survey data from all 56 fourth-professional year students (35 female, 21 male) who completed the WVCDHI APPE from May 2003 through January 2006 were compiled and analyzed. Table 1 summarizes the most important skills that students wished to learn from the APPE. Over half of the responses involved wanting to learn more about available resources, to effectively and efficiently use drug information resources, and where to best look to find needed information. Typical comments included, “I want to improve my ability to locate drug information in a timely manner,” “I would like to learn how to retrieve information more efficiently,” “I would like to know more than just one reference to find certain types of information,” and “I would like to learn what the ‘most’ appropriate reference books are to answer specific questions.” The next most frequent skill the students wanted to learn involved evaluating and critiquing information or studies, with specific responses including “How to evaluate a study to determine its strengths and weaknesses and its usefulness in answering questions,” and “To gain confidence in my ability to look at a source of information and determine its strengths and weaknesses, to allow me to make an accurate and informed decision.”

Table 1.

Pharmacy Students' Two Most Important Skills Desired and Learned in a Drug Information Advanced Pharmacy Practice Experience (N = 56)*

*% based on total responses (Number of responses)

†Total number of responses = 129 (some students listed more than 2 skills)

‡Total number of responses = 92 (some students listed 1 or 2 similar skills)

NR = not reported

Table 1 also shows the most important skills that students believed they learned from the drug information APPE upon its completion. Increased knowledge of resources, where to find information, and improved database searching accounted for almost 50% of the responses. Interestingly, about 5% of the responses involved asking questions and learning about the types of questions to ask requestors, which were not initially mentioned by the students. Representative comments included “I have learned to pull information together from multiple sources in order to answer a question,” “I've learned that there are many ways to do a PubMed search and find different answers each time,” and “You don't always find the answers you are looking for.”

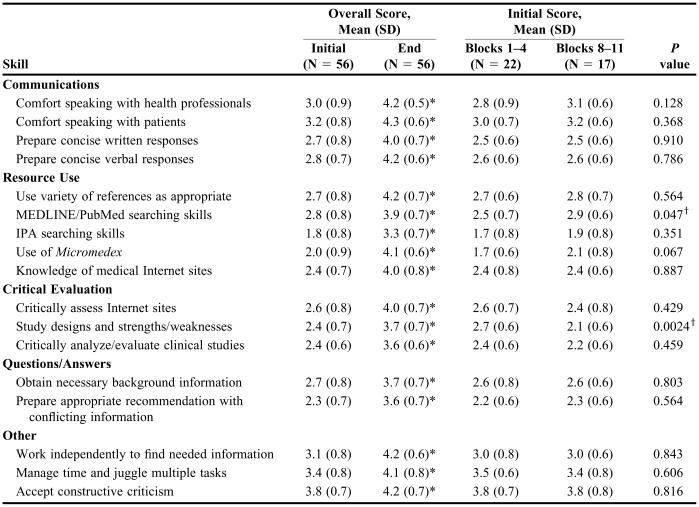

Upon beginning the APPE at WVCDHI, students rated their skills in searching International Pharmaceutical Abstracts lowest (1.8 ± 0.8) followed by use of Micromedex (2.0 ± 0.9) (Table 2). This was anticipated since the pharmacy students' only formal exposure to these systems occurred during their Medical Literature Evaluation course and a laboratory session. Ability to accept constructive criticism was rated the highest (3.8 ± 0.7), followed by ability to manage time and juggle multiple tasks (3.4 ± 0.8). Mean student ratings for each of the 17 items in the self-assessment survey instruments significantly increased (P < 0.0001) from the first to the last day of the APPE. The greatest rating change occurred for Micromedex use, with an increase in the mean value of 2.1 points.

Table 2.

Pharmacy Students' Self-Assessment of Skills on the First and Last Day of a Drug Information Advanced Pharmacy Practice Experience

*P < 0.0001 compared to Day 1; Wilcoxon signed-rank

†P < 0.05 Blocks 1-4 compared to Blocks 8-11; Mann-Whitney U

Most initial self-ratings did not change significantly for students completing the drug information APPE during the first 4 blocks of the APPE compared to those completing it during the last 4 APPE blocks (Table 2). However, 2 significant differences were found: MEDLINE/PubMed searching ability ratings increased (P = 0.047) and ratings for knowledge of study designs and their strengths/weaknesses decreased (P = 0.0024) during the last 4 APPE blocks. Ratings for the other critical evaluation abilities (ie, critical analysis/evaluation of clinical studies and critical assessment of Internet sites) also slightly decreased (0.2 points) during the later APPE blocks, but the change was not statistically significant. Small, statistically nonsignificant increases (0.1 to 0.4 points) in the ratings for several items were also noted. No significant differences were present in any of the last day self-ratings between students completing the drug information APPE during the first 4 blocks and those completing it during the last 4 blocks.
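The early-block versus late-block comparison involves two independent groups of students, which is why a Mann-Whitney U test applies rather than a paired test. The following is a minimal sketch, assuming a normal approximation with a tie correction and no continuity correction. It is not the authors' code, and the function names and ratings are hypothetical.

```python
from collections import Counter
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start+1..end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected)."""
    n1, n2 = len(group_a), len(group_b)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one rating")
    combined = list(group_a) + list(group_b)
    ranks = average_ranks(combined)
    rank_sum_a = sum(ranks[:n1])
    u_a = rank_sum_a - n1 * (n1 + 1) / 2  # U statistic for group_a
    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        raise ValueError("all ratings are identical")
    z = (u_a - n1 * n2 / 2) / sqrt(var_u)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return u_a, z, p_value


# Hypothetical first-day ratings for one item (not data from the study).
early_blocks = [1, 2, 2, 3]
late_blocks = [3, 4, 4, 5]
u_early, z, p_value = mann_whitney_u(early_blocks, late_blocks)
print(f"U = {u_early}, z = {z:.2f}, two-sided P = {p_value:.4f}")
```

Because ratings on a 5-point scale produce many ties, the tie correction matters more here than it would for continuous measurements.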

On the initial survey instrument, male students' ratings were significantly higher than female students' ratings on ability to work independently (3.4 ± 0.7 vs. 2.9 ± 0.7, respectively; P = 0.035) and IPA searching skills (2.2 ± 0.9 vs. 1.6 ± 0.7, respectively; P = 0.015). All other initial self-ratings were not significantly different. There were also no significant differences between male and female students' ratings on the end of APPE self-assessments (P > 0.2 for all comparisons). Only 2 self-ratings, both on the initial survey, were found to significantly differ between students who had received an “A” grade in the Medical Literature Evaluation course (n = 22) and those who did not (n = 34). Students who received an “A” grade had higher self-ratings on knowledge of study designs (2.6 ± 0.7 vs. 2.2 ± 0.6, respectively; P = 0.02), but lower self-ratings in their ability to accept constructive criticism (3.5 ± 0.7 vs. 4.0 ± 0.7, respectively; P = 0.002).

There were no significant differences in self-ratings at the end of the APPE for knowledge of medical Internet sites or ability to critically assess Internet sites by students who did (n = 23) or did not (n = 33) complete the PIA as part of the APPE (P > 0.50 for both). However, students who did not complete the PIA had significantly higher initial self-ratings for their ability to critically assess Internet sites compared to those who did complete that assignment (2.9 ± 0.7 vs. 2.2 ± 0.7, respectively; P = 0.0005). When the differences in mean rating scores between the first day and last day of the APPE were determined, students who completed the assignment had a significantly greater increase in their scores (about a 1-point increase for those who did not complete the assignment vs. an almost 2-point increase for those who completed the assignment, P = 0.003).

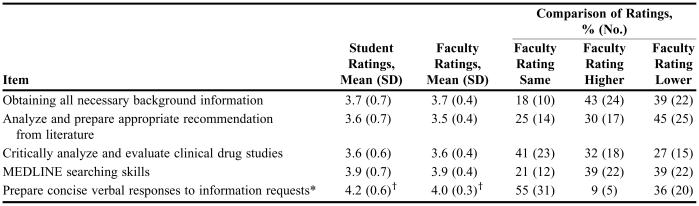

Table 3 shows the student and faculty ratings for similarly worded items. Faculty scores were the same as the student self-ratings 18% to 55% of the time, higher than the student self-ratings 9% to 43% of the time, and lower than the student self-ratings 27% to 45% of the time. However, most of the differences (63%) between faculty and student scores were 0.5 points or less and 23% were 1-point differences.
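The proportions of faculty ratings that were the same as, higher than, or lower than the paired student ratings can be tallied as in the sketch below. The function name and the ratings are hypothetical and serve only to show the bookkeeping for one survey item; they are not the study data.

```python
def compare_ratings(student, faculty):
    """Percent of faculty ratings equal to, above, or below the paired student rating."""
    if len(student) != len(faculty) or not student:
        raise ValueError("ratings must be paired and non-empty")
    n = len(student)
    same = sum(1 for s, f in zip(student, faculty) if f == s)
    higher = sum(1 for s, f in zip(student, faculty) if f > s)
    lower = n - same - higher
    return {
        "same": 100 * same / n,
        "higher": 100 * higher / n,
        "lower": 100 * lower / n,
    }


# Hypothetical paired ratings for one similarly worded item.
student_ratings = [3, 4, 4, 5, 3]
faculty_ratings = [3, 4.5, 3, 5, 2.5]
percentages = compare_ratings(student_ratings, faculty_ratings)
print(percentages)
```

Reporting these proportions alongside the mean comparison shows individual disagreement that similar group means can hide.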

Table 3.

Comparison of Pharmacy Student and Faculty Ratings on Similar Items on a Survey on Drug Information Skills

*Faculty-rated outcome wording also included providing recommendations that were systematic, logical, and secured consensus

†P = 0.01; Wilcoxon signed-rank

DISCUSSION

Administering a self-assessment survey instrument prior to the start of individual APPEs can provide valuable assessment data for both that experience and program, as long as the potential limitations are considered. First, a self-assessment survey instrument can be used to determine changes in students' confidence in the skills needed for a specific APPE. The improved ratings on each survey item from the first day to the end of the drug information APPE indicate a positive effect of the APPE on students' perceptions of their drug information abilities. Preceptors can also review the self-assessments with students on the first day of an APPE and work with them to improve areas of student concern. However, students' perceptions of certain abilities might be higher than their actual skill level. For example, students often believe their MEDLINE searching skills are fairly strong upon beginning the drug information experience, even though faculty members have consistently identified problems with students' use of medical subject headings and search terms. This lack of recognition by students of searching-skill deficits was corroborated by the finding that “improved database searching” was mentioned only 9% of the time as one of the most important skills students wanted to learn from the APPE, but was listed as one of the most important skills actually learned 23% of the time (Table 1).

Perceived difficulties identified from the self-assessment survey instruments can be used to make changes in an individual practice experience or elsewhere in the curriculum to improve learning, particularly when used in conjunction with other data. For example, students indicated a desire to learn how to critique information or studies during the drug information experience, and it was the area they were most concerned about regardless of when they completed that APPE. In addition, faculty members consistently found that students had difficulty during the APPE with their required journal club presentations. Combined with a lack of emphasis of this skill during the third-professional year of the didactic curriculum, the drug information faculty recently added a required journal article critique assignment for the third-year students. Whether this curricular change will result in improved APPE performance on journal article critiques will be determined. Other planned changes resulting from the survey findings include adding structured IPA practice searches to the drug information APPE and adding completion of the online Micromedex tutorial (accompanied by focused questions) to the curriculum for the second-professional year.

Self-assessments can also be used at the start of each individual APPE to determine the impact of the entire APPE on the development of important knowledge and skills. Students' perceptions should gradually improve over time if each APPE provides sufficient practice opportunities to learn the desired skills. This was found to be true for medical students' self-assessments of skills as they progressed through clerkships.13 However, a concern identified from the present study is that almost all of the initial mean self-ratings did not significantly change from the early to late APPEs, with 35% of the item means being identical; 35% showing small, nonsignificant increases; 18% showing small, nonsignificant decreases; and 2 (12%) showing statistically significant changes. Mean MEDLINE searching ratings increased 0.4 points and mean ratings of knowledge of study design strengths/weaknesses decreased 0.6 points. A possible reason for the absence of consistent improvement in the initial survey item ratings is progressive underestimation by students of their abilities over time. Some studies have shown that medical students tend to underestimate or produce more conservative self-assessments of knowledge or performance when assessments are repeated over time,16,17 with this phenomenon being more pronounced among higher achievers.17 However, since all self-assessment ratings improved significantly from the first day to the last day of the drug information APPE, and no significant differences were observed in the end ratings based upon when the drug information APPE was completed, this explanation is unlikely. Also, almost all self-ratings were similar between students who received an “A” in the Medical Literature Evaluation course and those who received a lower grade.

Another possible explanation for the findings from the early to late APPEs is that higher achieving students might have completed the drug information APPE earlier in the fourth-professional year than poorer performing students. However, the distribution of these students in the first and last part of the APPE was comparable.

It is possible that information-related knowledge and skills are difficult for students to learn and require a specialized APPE with focus on these abilities to achieve competence. Many students have difficulty preparing concise responses to information requests, obtaining necessary background information, preparing appropriate recommendations in the face of conflicting information, and appropriately using critical literature evaluation skills. However, since these important abilities are used frequently in most if not all practice settings, the APPE should provide students with ongoing practice opportunities that allow for gradual skill development over time. At our institution, students are required on each APPE (except non-patient-care electives) to complete 1 written journal article summary and 1 written drug information question-and-answer that are reviewed by their preceptors. Inadequate student performance expectations or insufficient feedback about deficiencies may be at least partial explanations for the lack of self-assessment differences between the early and late APPEs. Students indicate they often rely upon standard references such as Drug Facts and Comparisons and the Drug Information Handbook to locate drug information throughout their other APPEs. Students have generally used MEDLINE in a variety of APPEs, consistent with their increased self-assessment of MEDLINE searching skills later in the APPE. However, the extent to which all APPE preceptors review students' search strategies and provide feedback regarding areas for improvement is unknown. Although several APPEs require journal club presentations from students, a consistent format is not used. The statistics test given to all students at the beginning of the drug information APPE uniformly results in scores less than 50%, providing additional evidence that these skills are not adequately stressed throughout the APPE. 
Since a recent survey found that only 73% of responding schools offered a drug information APPE and it was required at just 22% of the responding institutions,18 other schools may need to examine their students' proficiency in key information-related skills.

Self-assessment ratings have not shown consistent correlations with objective or faculty assessments.7,8,10-14,16,17 There are several reasons for the unpredictable findings from self-assessment studies, including variability in their methods. The 3 primary methodological approaches used in studies of self-ratings involve analyzing the correlations between self-assessment scores and an external measure, determining the proportion of self-ratings that correspond to expert ratings, and comparing the actual values, such as mean scores, between self-ratings and an external measure.15 All 3 of these methods have potential problems and limitations. The present study used the latter 2 approaches to compare student and faculty ratings. Despite the similarity of mean item scores between the groups, considerable variability existed among the individual comparisons. Part of this variability could be explained by some wording differences in items. In addition, individual students may differ greatly in their ability to accurately self-assess, and faculty members and students might place different emphasis on cognitive vs. noncognitive abilities.15 Although approaches can be taken to help improve self-assessment validity, student ratings should not be relied upon as a sufficient measure of learning outcome achievement.

Most self-rating scores between male and female students in the present study were not significantly different. Other studies of medical students or interns have not found gender differences in performance self-assessments,10,19 although one study found that male students were slightly more likely (OR = 1.7) to overestimate their performance than female students.14 In a study of undergraduate psychology students, male students exhibited significantly greater self-confidence in their responses on cognitive ability tests than female students.20

There are some limitations of the findings in this report. Although both the student self-assessment survey and the faculty assessment of student performance used a 5-point scale, the numerical definitions differed somewhat. The faculty member definition also incorporated the extent to which intervention was required to help the student perform that skill. This could help explain the variability in the rating comparisons between students and faculty members, although the mean scores did not differ significantly for 4 of the 5 similarly worded items. The power to detect a difference of 0.6 points in the initial survey ratings between students completing the drug information APPE early and those completing it late, using a standard deviation of 0.7 and an alpha of 0.05, was approximately 76%. Although this power is lower than desired, the actual differences in ratings were small and not likely to be relevant. Also, there were too few drug information APPE students with a grade of “C” or less in the Medical Literature Evaluation course to determine whether their self-ratings would differ from those who received an “A” grade. Finally, whether the male students' slightly higher perceived ability to work independently and self-rated IPA searching skills represented a true gender difference, an anomaly, or a Type I error due to the number of comparisons performed would require further study.
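The kind of power calculation mentioned above can be sketched as follows. The report does not state the group sizes or the method used, so this sketch makes two assumptions: a two-sided, two-sample normal approximation for a difference in means, and a hypothetical 20 students per group. The function name is also hypothetical. A calculation specific to a rank-based test would give a somewhat different value.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_power(delta, sd, n1, n2, alpha=0.05):
    """Approximate two-sided power to detect a mean difference `delta`.

    Assumes both groups share the standard deviation `sd` and that the
    test statistic is approximately normal.
    """
    norm = NormalDist()
    standard_error = sd * sqrt(1 / n1 + 1 / n2)
    z_crit = norm.inv_cdf(1 - alpha / 2)
    shift = delta / standard_error
    # Probability of landing in either rejection region under the alternative.
    return norm.cdf(shift - z_crit) + norm.cdf(-shift - z_crit)


# delta, sd, and alpha are taken from the text; the group sizes are assumed.
power = two_sample_power(delta=0.6, sd=0.7, n1=20, n2=20)
print(f"Approximate power: {power:.2f}")
```

Varying `n1` and `n2` shows how quickly power falls when only a few students complete the APPE in the early or late blocks.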

CONCLUSION

The drug information APPE was found to significantly increase student self-ratings of their abilities. However, the initial self-assessments were not significantly different for most skills regardless of whether the drug information experience was completed during the early or later months of the APPE. Schools, particularly those that do not require a drug information APPE, should examine the extent to which students can master important information-related skills.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Chicago, Ill: Accreditation Council for Pharmacy Education; 2006.

- 2. Anderson HM, Moore DL, Anaya G, Bird E. Student learning outcomes assessment: a component of program assessment. Am J Pharm Educ. 2005;69(2):Article 39.

- 3. Abate MA, Stamatakis MK, Haggett RR. Excellence in curriculum development and assessment. Am J Pharm Educ. 2003;67(3):Article 89.

- 4. Palomba CA, Banta TW. Assessment essentials. San Francisco: Jossey-Bass Publishers; 1999.

- 5. Miller PJ. The effect of scoring criteria specificity on peer and self-assessment. Assess Eval Higher Educ. 2003;28:383–94.

- 6. Plaza CM, Draugalis JR, Retterer J, Herrier RN. Curricular evaluation using self-efficacy measurements. Am J Pharm Educ. 2002;66:51–4.

- 7. Mavis B. Self-efficacy and OSCE performance among second year medical students. Adv Health Sci Educ. 2001;6:93–102. doi: 10.1023/a:1011404132508.

- 8. Gordon MJ. A review of the validity and accuracy of self-assessments in health professions training. Acad Med. 1991;66:762–9. doi: 10.1097/00001888-199112000-00012.

- 9. Lynn DJ, Holzer C, O'Neill P. Relationships between self-assessment skills, test performance, and demographic variables in psychiatry residents. Adv Health Sci Educ. 2006;11:51–60. doi: 10.1007/s10459-005-5473-4.

- 10. Mattheos N, Nattestad A, Falk-Nilsson E, Attstrom R. The interactive examination: assessing students' self-assessment ability. Med Educ. 2004;38:378–89. doi: 10.1046/j.1365-2923.2004.01788.x.

- 11. Eva KW, Cunnington JPW, Reiter HI, Keane DR, Norman GR. How can I know what I don't know? Poor self assessment in a well-defined domain. Adv Health Sci Educ. 2004;9:211–24. doi: 10.1023/B:AHSE.0000038209.65714.d4.

- 12. Weiss PM, Koller CA, Hess LW, Wasser T. How do medical student self-assessments compare with their final clerkship grades? Med Teach. 2005;27:445–9. doi: 10.1080/01421590500046999.

- 13. Woolliscroft JO, TenHaken J, Smith J, Calhoun JG. Medical students' clinical self-assessments: comparisons with external measures of performance and the students' self-assessments of overall performance and effort. Acad Med. 1993;68:285–94. doi: 10.1097/00001888-199304000-00016.

- 14. Edwards RK, Kellner KR, Sistrom CL, Magyari EJ. Medical student self-assessment of performance on an obstetrics and gynecology clerkship. Am J Obstet Gynecol. 2003;188:1078–82. doi: 10.1067/mob.2003.249.

- 15. Ward M, Gruppen L, Regehr G. Measuring self-assessment: current state of the art. Adv Health Sci Educ. 2002;7:63–80. doi: 10.1023/a:1014585522084.

- 16. Frye AW, Richards BF, Bradley EW, Philp JR. The consistency of students' self-assessments in short-essay subject matter examinations. Med Educ. 1992;26:513. doi: 10.1111/j.1365-2923.1992.tb00174.x.

- 17. Arnold L, Willoughby TL, Calkins EV. Self-evaluation in undergraduate medical education: a longitudinal perspective. J Med Educ. 1985;60:21–8. doi: 10.1097/00001888-198501000-00004.

- 18. Kendrach MG, Weedman VK, Lauderdale SA, Kelly-Freeman M. Drug information advanced practice experiences in United States pharmacy schools [abstract]. ASHP Midyear Clinical Meeting. 2005;40:PI-75.

- 19. Zonia SC, Stommel M. Interns' self-evaluations compared with their faculty's evaluations. Acad Med. 2000;75:742. doi: 10.1097/00001888-200007000-00020.

- 20. Pallier G. Gender differences in the self-assessment of accuracy on cognitive tasks. Sex Roles. 2003;48:265–76.