Few issues in contemporary risk policy are as momentous or contentious as the precautionary principle. Since it first emerged in German environmental policy, it has been championed by environmentalists and consumer protection groups, and resisted by the industries they oppose (Raffensperger & Tickner, 1999). Various versions of the principle now proliferate across different national and international jurisdictions and policy areas (Fisher, 2002). From a guiding theme in European Commission (EC) environmental policy, it has become a general principle of EC law (CEC, 2000; Vos & Wendler, 2006). Its influence has extended from the regulation of environmental, technological and health risks to the wider governance of science, innovation and trade (O'Riordan & Cameron, 1994).

An early classic formulation neatly encapsulates its key features. According to Principle 15 of the Rio Declaration on Environment and Development: “In order to protect the environment, the precautionary approach shall be widely applied by States according to their capabilities. Where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation” (UN, 1992). This injunction has given rise to a wide range of criticisms: sound scientific techniques of risk assessment already offer a comprehensive and rational set of ‘decision rules' for use in policy (Byrd & Cothern, 2000); these science-based approaches yield a robust and practically operational basis for decision-making under uncertainty (Morris, 2000); the precautionary principle fails as a basis for any similar operational type of decision rule in its own right (Peterson, 2006); the precautionary principle is of practical relevance only in risk management, and not in risk assessment (CEC, 2000); and, if applied to assessment, the precautionary principle threatens a rejection of useful and well-established risk assessment techniques (Woteki, 2000).

Each of these involves strong assumptions about the nature and standing of scientific rationality and rigour, the scope and character of uncertainty, the applicability and limits of risk assessment, and the particular implications of precaution. I hope to contribute to a more measured debate on these matters, and will briefly review each of these arguments in turn. In the process, I will explore more constructive ways to satisfy imperatives for robustness, rationality, rigour and precaution.

Most criticism of the precautionary principle is based on unfavourable comparisons with established ‘sound scientific' methods in the governance of risk. These include a range of quantitative and/or expert-based risk-assessment techniques, involving various forms of scientific experimentation and modelling, probability and statistical theory, cost–benefit and decision analysis, and Bayesian and Monte Carlo methods. These conventional methods are assumed—often implicitly—to offer a comprehensively rigorous basis for informing decision-making (Byrd & Cothern, 2000). In particular, they are held to provide decision rules that are applicable, appropriate and complete (Peterson, 2006). Therefore, when considering the relative strengths and weaknesses of the precautionary principle, we must also give equal attention to these conventional approaches to risk assessment.

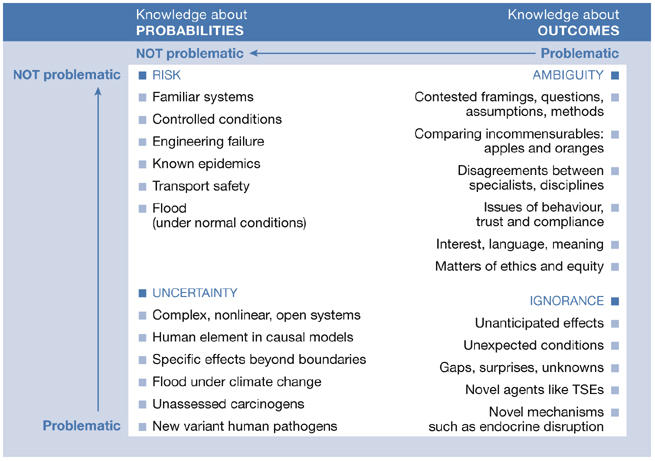

All scientific approaches are based on the articulation of two fundamental parameters, which are then reduced to an aggregated concept of risk. First are things that might happen: hazards, possibilities or outcomes. Second is the likelihood or probability associated with each. Either of these parameters might be subject to variously complete or problematic knowledge, in ways that are outlined below. This yields four logical permutations of possible states of incomplete knowledge—of course, these are neither discrete nor mutually exclusive and typically occur together in varying degrees in the real world (Fig 1; Stirling, 1999). Conventionally, risk assessment addresses each of these states essentially by applying the same battery of techniques: quantifying and aggregating different outcomes and multiplying by their respective probabilities to yield a single reductive picture of ‘risk'.
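The reductive aggregation described above can be sketched in a few lines. This is a minimal illustration only: the function name `aggregate_risk` and the numbers are hypothetical and are not drawn from any of the studies cited.

```python
from typing import Sequence, Tuple


def aggregate_risk(outcomes: Sequence[Tuple[float, float]]) -> float:
    """Sum probability * magnitude over (probability, magnitude) pairs."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * m for p, m in outcomes)


# Hypothetical outcomes: (probability, magnitude of harm in arbitrary units).
example = [(0.90, 0.0), (0.09, 10.0), (0.01, 1000.0)]
risk = aggregate_risk(example)
```

The single number returned conceals the fact that most of it comes from one rare, severe outcome. It is also only meaningful if both the list of outcomes and the probabilities attached to them are well founded.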

Figure 1. Contrasting states of incomplete knowledge, with schematic examples. TSE, transmissible spongiform encephalopathy.

Fig 1 also provides examples of areas in which these possible states of knowledge might come to the fore in policy-making. In the top left of the matrix are the many fields in which past experience or scientific models are judged to foster high confidence in both the possible outcomes and their respective probabilities. In the strict sense of the term, this is the formal condition of risk, and it is under these conditions that the conventional techniques of risk assessment offer a scientifically rigorous approach. However, this formal definition of risk also implies circumstances of uncertainty, ambiguity and ignorance, under which the reductive techniques of risk assessment are not applicable.

Under the condition of uncertainty (Fig 1), we can characterize possible outcomes, but the available information or analytical models do not present a definitive basis for assigning probabilities. It is under these conditions that “probability does not exist” (de Finetti, 1974). Of course, we can still exercise subjective judgements and treat these as a basis for systematic analysis. However, the challenge of uncertainty is that such judgements might take several different—yet equally plausible—forms. Rather than reducing these to a single value or recommendation, the scientifically rigorous approach is therefore to acknowledge various possible interpretations. The point remains that, under uncertainty, attempts to assert a single aggregated picture of risk are neither rational nor ‘science-based'.
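To make this point concrete, the following sketch compares two options under two probability judgements that are assumed to be equally plausible. All names and numbers (`MAGNITUDES`, `JUDGEMENTS`, `expert_1`, `option_a` and so on) are hypothetical. Rather than collapsing the judgements into one value, the sketch reports the range of expected harm for each option.

```python
from typing import Dict, List, Tuple

# Hypothetical magnitudes of harm for three agreed outcomes (arbitrary units).
MAGNITUDES: List[float] = [0.0, 10.0, 100.0]

# Hypothetical, equally plausible probability judgements over those outcomes.
JUDGEMENTS: Dict[str, Dict[str, List[float]]] = {
    "expert_1": {"option_a": [0.90, 0.09, 0.01], "option_b": [0.80, 0.20, 0.00]},
    "expert_2": {"option_a": [0.80, 0.10, 0.10], "option_b": [0.85, 0.10, 0.05]},
}


def expected_harm(probabilities: List[float]) -> float:
    """Probability-weighted harm for one option under one judgement."""
    return sum(p * m for p, m in zip(probabilities, MAGNITUDES))


def preferred(judgement: Dict[str, List[float]]) -> str:
    """Option with the lowest expected harm under a single judgement."""
    return min(judgement, key=lambda option: expected_harm(judgement[option]))


def harm_ranges(
    judgements: Dict[str, Dict[str, List[float]]]
) -> Dict[str, Tuple[float, float]]:
    """Map each option to (lowest, highest) expected harm across judgements."""
    ranges: Dict[str, Tuple[float, float]] = {}
    for per_option in judgements.values():
        for option, probabilities in per_option.items():
            value = expected_harm(probabilities)
            low, high = ranges.get(option, (value, value))
            ranges[option] = (min(low, value), max(high, value))
    return ranges


ranges = harm_ranges(JUDGEMENTS)
```

With these illustrative inputs, the first judgement favours `option_a` and the second favours `option_b`, and the two ranges overlap. Averaging the judgements would hide exactly the divergence that a rigorous analysis should report.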

Under the condition of ambiguity, it is not the probabilities but the possible outcomes themselves that are problematic. This might be the case even for events that are certain or have occurred already (Wynne, 2002; Stirling, 2003). For example, in the regulation of genetically modified (GM) food, such ambiguities arise over ecological, agronomic, safety, economic or social criteria of harm (Stirling & Mayer, 1999). When faced with such questions over “contradictory certainties” (Thompson & Warburton, 1985), rational choice theory has shown that analysis alone is unable to guarantee definitive answers (Arrow, 1963). Where there is ambiguity, reduction to a single ‘sound scientific' picture of risk is also neither rigorous nor rational.

Finally, there is the condition of ignorance. Here, neither probabilities nor outcomes can be fully characterized (Collingridge, 1980). Ignorance differs from uncertainty, which focuses on agreed, known parameters such as carcinogenicity or flood damage. It also differs from ambiguity in that the parameters are not only contestable but also—at least in part—unknown. Some of the most important environmental issues were—at their outset—of this kind (Funtowicz & Ravetz, 1990). In the early histories of stratospheric ozone depletion, bovine spongiform encephalopathy and endocrine-disrupting chemicals, for example, the initial problem was not so much divergent expert views or mistakes over probability, but straightforward ignorance about the possibilities themselves. Again, it is irrational to represent ignorance as risk.
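The four states can be summarized as a simple two-way classification. The sketch below is a deliberate simplification: it treats knowledge about outcomes and about probabilities as yes-or-no judgements, whereas the states described here typically occur together in varying degrees. The names `Incertitude` and `classify` are illustrative assumptions.

```python
from enum import Enum


class Incertitude(Enum):
    RISK = "risk"
    UNCERTAINTY = "uncertainty"
    AMBIGUITY = "ambiguity"
    IGNORANCE = "ignorance"


def classify(outcomes_problematic: bool, probabilities_problematic: bool) -> Incertitude:
    """Map the two dimensions of incomplete knowledge to one of four states."""
    if outcomes_problematic and probabilities_problematic:
        return Incertitude.IGNORANCE
    if outcomes_problematic:
        return Incertitude.AMBIGUITY
    if probabilities_problematic:
        return Incertitude.UNCERTAINTY
    return Incertitude.RISK
```

Only the last branch corresponds to the strict state of risk, under which probability-weighted aggregation is properly applicable.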

The picture summarized in Fig 1 is intrinsic to the scientific definition of risk itself and is therefore difficult to refute in these terms. Risk assessment offers a powerful suite of methods under a strict state of risk. However, these are not applicable under conditions of uncertainty, ambiguity and ignorance. Contrary to the impression given in calls for ‘science-based' risk assessment, persistent adherence to these reductive methods under conditions other than the strict state of risk is irrational, unscientific and potentially misleading.

From these fundamental issues of scientific rigour follow implications for the practical robustness of conventional, reductive risk assessment in decision-making. In political terms, a quantitative expression of risk or a definitive expert judgement on safety is typically of great instrumental value; however, these have little to do with scientific rationality. Any robust policy must go beyond short-term institutional issues and address the efficacy of policy outcomes. As such, robustness is a function of the accuracy of assessment results, not of their professed precision. This question of accuracy is more difficult to establish, but some impression can be obtained by looking across a range of comparable studies. Here, a rather striking picture emerges that underscores and compounds the theoretical challenges discussed above.

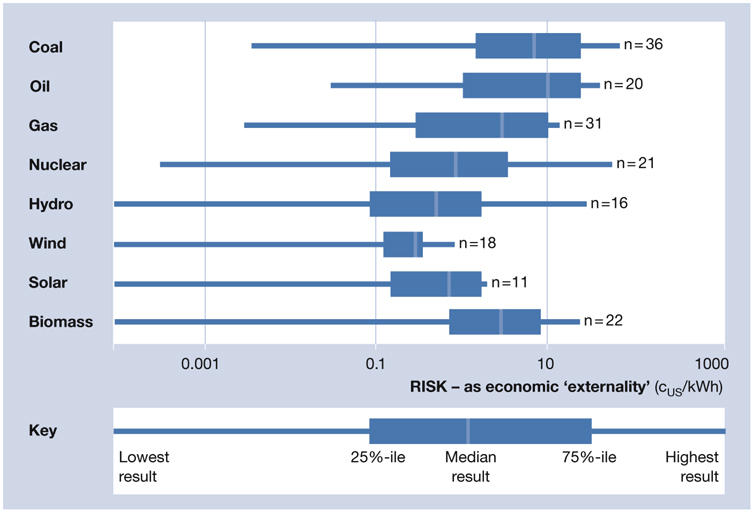

Nowhere are reductive, science-based approaches to risk more mature, sophisticated and elaborate than in energy policy. It is here that the greatest efforts have been expended over long periods to conduct comprehensive comparative assessments across a full range of policy options. These have influenced areas of policy-making such as climate change, nuclear power and nuclear waste. However, the apparently precise findings of specific studies typically understate the enormous variability inherent in the literature as a whole (Fig 2; Sundqvist et al, 2004).

Figure 2. Practical limits to robustness in risk assessment. Results were obtained from 63 detailed risk–benefit and cost–benefit comparative studies of electricity supply. Based on data from Sundqvist et al, 2004.

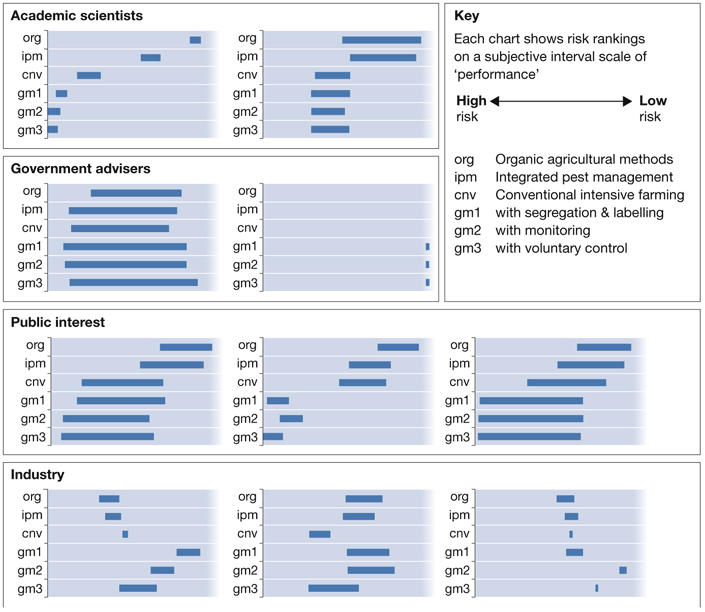

This understatement of variability and uncertainty is not restricted to formal quantitative analysis. Fig 3 shows various judgements from experts who advised the UK government on the regulation of GM technology in the late 1990s. By using a method called multi-criteria mapping, individual respondents express their judgements in quasi-quantitative graphical terms (Stirling & Mayer, 1999). The results reveal starkly contrasting understandings of the relative merits of GM when compared with other agricultural strategies. Despite the fact that the government advisory committees typically represented their collective judgements as precise prescriptive recommendations, it is clear that the underlying individual expert perspectives display significantly greater diversity.

Figure 3. Divergent specialist judgements on risk. Figure adapted from Stirling & Gee, 2002.

The reason that these ‘sound scientific' procedures yield such contrasting pictures of risk is that the answers delivered in risk assessment typically depend on how the analysis is ‘framed'. Many factors can influence the framing of science for policy, which can lead to radically divergent answers to apparently straightforward questions (Table 1). The point is not that scientific discipline carries no value. For any particular framing conditions, scientific procedures offer important ways to make analysis more systematic, transparent, accountable and reproducible. The issue is not that ‘anything goes', but rather that, in complex areas, science-based techniques rarely deliver a single robust set of findings. To paraphrase an apocryphal remark by Winston Churchill, the message is that science is essential, but that it should remain “on tap, not on top” (Lindsay, 1995).

Table 1. A selection of factors influencing the framing of scientific risk assessment

| Framing factor |
| --- |
| Setting agendas |
| Defining problems |
| Characterizing options |
| Posing questions |
| Prioritizing issues |
| Formulating criteria |
| Deciding context |
| Setting baselines |
| Drawing boundaries |
| Discounting time |
| Choosing methods |
| Including disciplines |
| Handling uncertainties |
| Recruiting expertise |
| Commissioning research |
| Constituting ‘proof' |
| Exploring sensitivities |
| Interpreting results |

So what does this mean for the precautionary principle? As already mentioned, criticisms of it are typically founded on unfavourable comparisons with ‘sound scientific' methods of risk assessment. The preceding discussion has shown that, for all their strengths under strict conditions of ‘risk', these techniques are neither rational and rigorous nor practically robust under conditions of uncertainty, ambiguity and ignorance. It is on this basis that we might already see the value of the precautionary principle as a salutary spur to greater humility. However, there remain some significant questions. Does precaution offer greater or lesser rigour when formulating decision rules under uncertainty? In what ways and to what extent might these be considered more or less robust than conventional methods of risk assessment?

The precautionary principle is not—and cannot properly claim to be—a complete decision rule at all. Unlike many of the techniques with which it is compared, it is, as its name suggests, more a general principle than a specific methodology. In other words, it does not of itself purport to provide a detailed protocol for deriving a precise understanding of relative risks and uncertainties, much less justify particular detailed decisions. Instead, it provides a general normative guide to the effect that policy-making under uncertainty, ambiguity and ignorance should give the benefit of the doubt to the protection of human health and the environment, rather than to competing organizational or economic interests. This, in turn, holds important implications for the level of proof required to sustain an argument, the placing of the burden of persuasion and the allocation of responsibility for resourcing the gathering of evidence, and the performance of analysis. This is useful because none of these are matters on which there can be a uniquely firm ‘sound scientific' position.

Beyond this broad normative guidance, however, the procedural implications of the precautionary principle certainly do not compare with the detailed specifications of reductive methodologies. Instead, the precautionary principle is now more comparable with the general principles of rational choice that underlie these particular methods. Interestingly, these underlying principles of ‘sound science' are rarely explicitly enunciated, but instead implicitly assumed to be intrinsic to rationality. Examples include the quantification of likelihood using probabilities, the assumption of multiplicative relationships between probability and magnitude, an insistence on the universality of trade-offs, and an imperative to aggregate social preferences. Although not exposed to the same policy scrutiny as precaution, each of these is—as we have seen—contestable. Indeed, under conditions of uncertainty, ambiguity or ignorance, none is applicable.

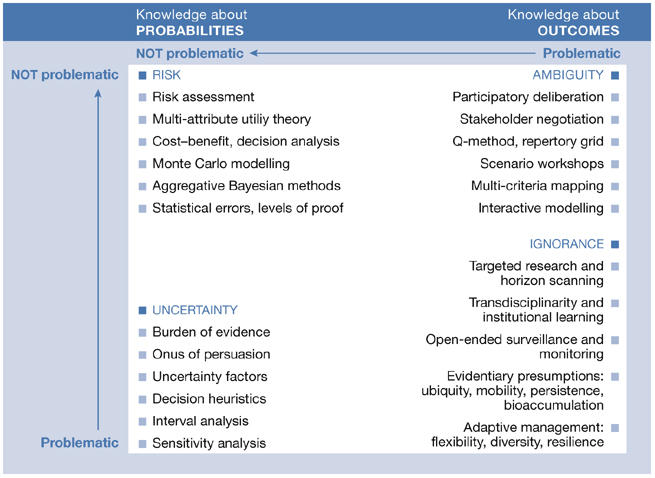

It is under these more intractable states of incertitude that the precautionary principle comes into its own (Stirling, 2003). The value here is not as a tightly prescriptive decision rule—by definition, that is not scientifically possible under these conditions. Instead, the precautionary principle draws attention to a broader range of non-reductive methods, which avoid spurious promises to determine ‘science-based' policy (Fig 4; Stirling, 2006). The intention is not to imply a neat one-to-one mapping of specific methods to individual states of knowledge, but rather to illustrate the rich variety of alternatives that exist if risk assessment is not properly applicable.

Figure 4. Methodological responses to different forms of incertitude.

In this light, we can appreciate that the real failure as a decision rule is not that of the precautionary principle but the aspiration to a reductive, ‘science-based' risk assessment beyond the narrow confines of risk itself. If we seek simple rules to remove the need for subjectivity, argument, deliberation and politics, then precaution offers no such promise. Instead, it points to a rich array of methods that reveal the intrinsically normative and contestable basis for decisions, and the different ways in which our knowledge is incomplete. This is as good a ‘rule' for decision-making as we can reasonably get.

What is interesting about these implications of the precautionary principle is that they refute the often-repeated injunction—even at the highest levels of policy-making (CEC, 2000)—that precaution is relevant to risk management but not risk assessment. Various methodological responses to uncertainty, ambiguity and ignorance present alternative approaches (Fig 4). Of course, each might be seen as a complement to risk assessment, rather than as a potential substitute. The point is that insisting that precaution relates only to risk management entirely misses its real value in highlighting more diverse ways to gather relevant knowledge.

Policy understandings of precaution are now moving away from rigid ideas of a decision rule that is applicable only in risk management, towards broader processes of social appraisal (Table 2; ESTO, 1999; Gee et al, 2001). In many ways, these qualities are simply common sense. In an ideal world, they would and could apply equally to the application of risk assessment. However, the incorporation of all these qualities as routine features would be prohibitively demanding of evidence, analysis, time and money. The question therefore arises as to how to identify those cases in which it is justifiable to adopt these approaches.

Table 2. Key features of a precautionary appraisal process (after Gee et al, 2001)

| Precaution ‘broadens out' the inputs to appraisal beyond the scope that is typical in conventional regulatory risk assessment, in order to provide for the following points. |
| --- |
| (i) Independence from vested institutional, disciplinary, economic and political interests |
| (ii) Examination of a greater range of uncertainties, sensitivities and possible scenarios |
| (iii) Deliberate search for ‘blind spots', gaps in knowledge and divergent scientific views |
| (iv) Attention to proxies for possible harm, such as mobility, bioaccumulation and persistence |
| (v) Contemplation of full life cycles and resource chains as they occur in the real world |
| (vi) Consideration of indirect effects, such as additivity, synergy and accumulation |
| (vii) Inclusion of industrial trends, institutional behaviour and issues of non-compliance |
| (viii) Explicit discussion over appropriate burdens of proof, persuasion, evidence and analysis |
| (ix) Comparison of a series of technology and policy options and potential substitutes |
| (x) Deliberation over justifications and possible wider benefits, as well as risks and costs |
| (xi) Drawing on relevant knowledge and experience arising beyond specialist disciplines |
| (xii) Engagement with the values and interests of all stakeholders who stand to be affected |
| (xiii) General citizen participation in order to provide independent validation of framing |
| (xiv) A shift from theoretical modelling towards systematic monitoring and surveillance |
| (xv) A greater priority on targeted scientific research to address unresolved questions |
| (xvi) Initiation at the earliest stages ‘upstream' in an innovation, strategy or policy process |
| (xvii) Emphasis on strategic qualities such as reversibility, flexibility, diversity and resilience |

The answer to this question is clearly stated in the precautionary principle itself. Since its canonical formulation in the Rio Declaration, precaution has been identified specifically as a response to ‘lack of scientific certainty', when there is a threat of serious or irreversible harm. As we have seen, this undifferentiated idea of incertitude might be further partitioned more accurately into strict uncertainty, ambiguity and ignorance. Either way, the practical implications are clear. In calling for more rigorous approaches to these states of incertitude, precaution need in no sense be seen as a blanket rejection of risk assessment. Under conditions in which uncertainty, ambiguity and ignorance are judged not to present significant challenges, the elegant reductive methods of risk assessment are powerful tools to inform decision-making.

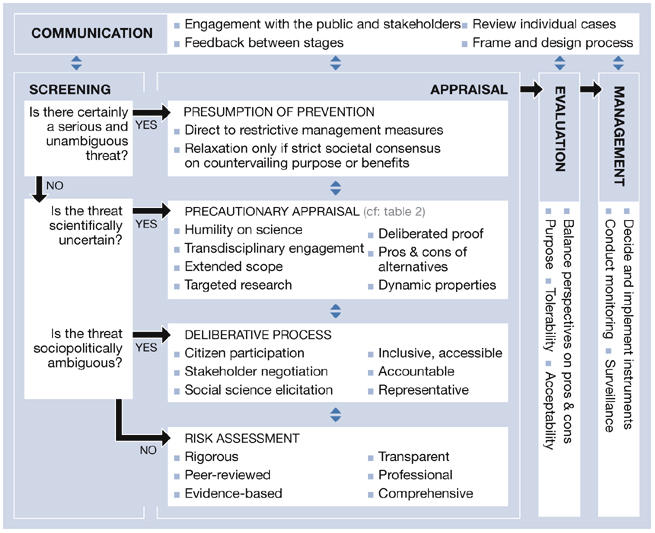

Fig 5 provides a general framework for the effective articulation of conventional risk assessment with the broader qualities and associated methods of the precautionary principle (Klinke & Renn, 2002; Renn et al, 2003; Stirling et al, 2006; Klinke et al, 2006). Just as current risk assessment is preceded by hazard characterization, so this framework uses a criteria-based screening process to identify crucial attributes of scientific uncertainty, or social or political ambiguity (Table 3). When none of these criteria is triggered, then the case in question is subject to conventional risk assessment. Only when there is uncertainty or ambiguity does the process initiate a more elaborate precautionary appraisal or deliberative process. Current work is refining the specific methodological, institutional and legal implications in the area of food safety (Stirling et al, 2006).

Figure 5. A framework for articulating precaution and risk assessment. Figure adapted from Stirling et al, 2006.

Table 3. Illustrative criteria of seriousness, uncertainty and ambiguity (after Stirling et al, 2006)

| Category | Criterion |
| --- | --- |
| Criteria of seriousness | Clear evidence of carcinogenicity, mutagenicity, or reprotoxicity in components/residues |
| Criteria of seriousness | Clear evidence of virulent pathogens |
| Criteria of seriousness | Clear violation of risk-based concentration thresholds or standards |
| Criteria of uncertainty and ignorance | Scientifically founded doubts on theory |
| Criteria of uncertainty and ignorance | Scientific doubts on model sufficiency or applicability |
| Criteria of uncertainty and ignorance | Scientific doubts on data quality or applicability |
| Criteria of uncertainty and ignorance | Novel, unprecedented features of the product |
| Criteria of sociopolitical ambiguity | Divergent individual perceptions of risk |
| Criteria of sociopolitical ambiguity | Institutional conflict between different agencies |
| Criteria of sociopolitical ambiguity | Amplification effects in news media |
| Criteria of sociopolitical ambiguity | Social/ethical concerns, distributional issues or political mobilization |

Of course, from any point of view, the devil will always be in the detail. However, the main points to make here are the following. First, the framework as a whole is itself precautionary, in that attention is given to ambiguity, uncertainty and ignorance. The default response to a certain, unambiguously serious threat is the immediate presumption of preventive measures. Second, the framework does not necessarily imply that the adoption of precaution will automatically entail any particular measure, such as bans or phase-outs. Third, the framework shows how adoption of the precautionary principle need not imply a blanket rejection of risk assessment, still less of science itself. Instead, it involves a carefully measured and targeted treatment of different states of knowledge. In this sense, this precautionary framework might be seen as more rigorous and rational—and potentially more robust—than the indiscriminate use of often-inapplicable methods.
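The routing logic of such a screening step might be sketched as follows. This is an illustration under stated assumptions, not the framework of Fig 5 itself: the names `Screening` and `route` are hypothetical, each group of criteria in Table 3 is reduced to a single yes-or-no flag, and the pairing of uncertainty with precautionary appraisal and of ambiguity with a deliberative process is one reading of the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Screening:
    """Outcome of criteria-based screening; each flag is an assumed simplification."""
    serious: bool    # any criterion of seriousness triggered
    uncertain: bool  # any criterion of uncertainty or ignorance triggered
    ambiguous: bool  # any criterion of sociopolitical ambiguity triggered


def route(screening: Screening) -> List[str]:
    """Return the appraisal steps suggested by the screening result."""
    steps: List[str] = []
    if screening.uncertain:
        steps.append("precautionary appraisal")
    if screening.ambiguous:
        steps.append("deliberative process")
    if steps:
        return steps
    if screening.serious:
        # A certain, unambiguously serious threat.
        return ["presumption of preventive measures"]
    return ["conventional risk assessment"]
```

For example, `route(Screening(serious=False, uncertain=False, ambiguous=False))` returns `["conventional risk assessment"]`, which reflects the point that precaution does not displace risk assessment where no criterion is triggered. No branch prescribes a ban or phase-out; the output is a type of appraisal, not a decision.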

This article began with a series of concerns about the precautionary principle. Taking each in turn, I have shown that the ‘sound scientific' methods of risk assessment—with which precaution is often compared—do not offer an unqualified rational, rigorous or robust basis for decision-making under uncertainty, ambiguity or ignorance. It is true that the precautionary principle also fails as a source of complete prescriptive ‘decision rules' under these challenging conditions. However, this failure is less acute in the case of precaution because prescriptive ‘decision rules' are neither the aim nor the claim of this general guidance.

Although falling short of prescriptive decision rules, the precautionary principle does suggest a range of more modest, open-ended, but nonetheless highly effective methodologies and general qualities, which offer ways to complement and improve on conventional risk assessment. As such, it is clear that—contrary to common assertions—the precautionary principle is of practical relevance as much to risk assessment as to risk management. Precaution does not automatically entail bans and phase-outs, but instead calls for deliberate and comprehensive attention to contending policy or technology pathways. Far from being in tension with science, precaution offers a way to be more measured and rational about uncertainty, ambiguity and ignorance.

Of course, there remain unresolved issues. Precautionary appraisal is inherently comparative; therefore it is as likely to spur favoured directions for innovation as to inhibit those that are disfavoured. Here, we can expect—and should welcome—continuing criticism, concern and debate through open policy discourse and democratic accountability. What is not tenable is that these inherently political issues be concealed behind opaque, deterministic ideas of the role of science. In prompting more rational, balanced and measured understandings of ‘sound science' rhetorics on uncertainty, precaution has arguably made its greatest contribution.

References

- Arrow KJ (1963) Social Choice and Individual Values. New Haven, CT, USA: Yale University Press

- Byrd DM, Cothern CR (2000) Introduction to Risk Analysis: A Systematic Approach to Science-Based Decision Making. Rockville, MD, USA: Government Institutes

- CEC (2000) Communication from the Commission on the Precautionary Principle. COM (2000)1 final. Brussels, Belgium: Commission of the European Communities

- Collingridge D (1980) The Social Control of Technology. Milton Keynes, UK: Open University Press

- de Finetti B (1974) Theory of Probability. New York, NY, USA: Wiley

- ESTO (1999) On Science and Precaution in the Management of Technological Risk. Brussels, Belgium: European Science and Technology Observatory

- Fisher E (2002) Precaution, precaution everywhere: developing a ‘common understanding' of the precautionary principle in the European Community. Maastricht J Eur Comp Law 9: 7–28

- Funtowicz SO, Ravetz JR (1990) Uncertainty and Quality in Science for Policy. Dordrecht, The Netherlands: Kluwer Academic

- Gee D, Harremoes P, Keys J, MacGarvin M, Stirling A, Vaz S, Wynne B (2001) Late Lessons from Early Warnings: The Precautionary Principle 1896–2000. Copenhagen, Denmark: European Environment Agency

- Klinke A, Renn O (2002) A new approach to risk evaluation and management: risk-based, precaution-based, and discourse-based strategies. Risk Anal 22: 1071–1094

- Klinke A, Dreyer M, Renn O, Stirling A, van Zwanenberg P (2006) Precautionary risk regulation in European governance. J Risk Res 9: 373–392

- Lindsay R (1995) Galloping Gertie and the precautionary principle: How is environmental impact assessment assessed? In Science for the Earth, T Wakeford, M Walters (eds), pp 44–57. London, UK: Wiley

- Morris J (ed; 2000) Rethinking Risk and the Precautionary Principle. Oxford, UK: Butterworth-Heinemann

- O'Riordan T, Cameron J (1994) Interpreting the Precautionary Principle. London, UK: Earthscan Publications

- Peterson M (2006) The precautionary principle is incoherent. Risk Anal 26: 595–601

- Raffensperger C, Tickner J (eds; 1999) Protecting Public Health and the Environment: Implementing the Precautionary Principle. Washington, DC, USA: Island

- Renn O, Dreyer M, Klinke A, Losert C, Stirling A, van Zwanenberg P, Muller-Herold U, Morosini M, Fisher E (2003) The application of the precautionary principle in the European Union. Final document of the EU project ‘Regulatory Strategies and Research Needs to Compose and Specify a European Policy on the Application of the Precautionary Principle' (PrecauPri). Stuttgart, Germany: Centre of Technology Assessment in Baden-Wuerttemberg

- Stirling A (1999) Risk at a turning point? J Env Med 1: 119–126

- Stirling A (2003) Risk, uncertainty and precaution: some instrumental implications from the social sciences. In Negotiating Environmental Change, F Berkhout, M Leach, I Scoones (eds), pp 33–76. Cheltenham, UK: Edward Elgar

- Stirling A (2006) Uncertainty, precaution and sustainability: towards more reflective governance of technology. In Reflexive Governance for Sustainable Development, JP Voss, D Bauknecht, R Kemp (eds), pp 225–272. Cheltenham, UK: Edward Elgar

- Stirling A, Gee D (2002) Science, precaution, and practice. Public Health Rep 117: 521–533

- Stirling A, Mayer S (1999) Rethinking Risk: a Pilot Multi-criteria Mapping of a Genetically Modified Crop in Agricultural Systems in the UK. Sussex, UK: Science Policy Research Unit, University of Sussex

- Stirling A, Ely A, Renn O, Dreyer M, Borkhart K, Vos E, Wendler F (2006) A General Framework for the Precautionary and Inclusive Governance of Food Safety: Accounting for Risks, Uncertainties and Ambiguities in the Appraisal and Management of Food Safety Threats. Stuttgart, Germany: University of Stuttgart

- Sundqvist T, Soderholm P, Stirling A (2004) Electric power generation: valuation of environmental costs. In Encyclopedia of Energy, CJ Cleveland (ed), pp 229–243. Boston, MA, USA: Elsevier Academic

- Thompson M, Warburton M (1985) Decision making under contradictory certainties: how to save the Himalayas when you can't find what's wrong with them. J Appl Sys Anal 12: 3–33

- UN (1992) Report on the United Nations Conference on Environment and Development. A/CONF.151/26 (Vol 1). New York, NY, USA: United Nations

- Vos E, Wendler F (eds; 2006) Food Safety Regulation in Europe: A Comparative Institutional Analysis. Mortsel, Belgium: Intersentia

- Woteki C (2000) The Role of Precaution in Food Safety Decisions. Washington, DC, USA: US Department of Agriculture

- Wynne B (2002) Risk and environment as legitimatory discourses of technology: reflexivity inside out? Curr Sociol 50: 459–477