Published scholarly articles commonly contain imperfections: punctuation errors, imprecise wording and occasionally more substantial flaws in scientific methodology, such as mistakes in experimental design, execution errors and even misconduct (Martinson et al, 2005). These imperfections resemble manufacturing defects in machines: most are harmless, but a small minority can cause a disaster (Wohn & Normile, 2006; Stewart & Feder, 1987). Retracting a published scientific article is the academic counterpart of recalling a flawed industrial product (Budd et al, 1998).

However, not every article that should be retracted is retracted, because whether a flaw is found depends, among other things, on the effort and time invested in quality control. Mechanical micro-fractures are detected more readily in turbojet components than in sculptures, because airplane parts are typically tested far more rigorously for mechanical integrity. Similarly, articles published in more prominent scientific journals attract more attention and, with it, more scrutiny. This raises the question of how many articles would have to be retracted if the highest standards of screening were applied to all journals.

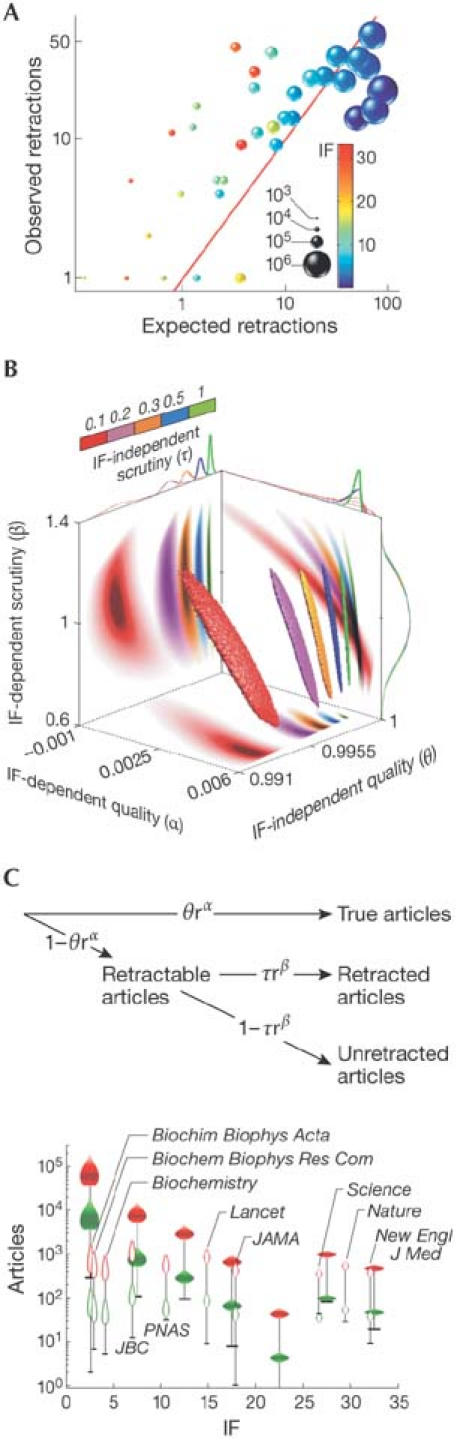

PubMed provides a ‘paleontological’ record of articles published in 4,348 journals with a known impact factor (IF). Of the 9,398,715 articles published in these journals between 1950 and 2004, 596 were retracted. Retractions hit high-impact journals significantly harder than lower-impact journals (Fig 1A), suggesting that high-impact journals either are more prone to publishing flawed manuscripts or are scrutinized much more rigorously than lower-impact journals.
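The ‘expected’ counts in Fig 1A come from a uniform baseline in which every article is equally likely to be retracted, whatever journal it appeared in. Under that assumption, the expected number of retractions for any set of articles is simply its size multiplied by the overall retraction rate, 596 / 9,398,715 (about 6.3 × 10⁻⁵ per article). The minimal sketch below makes that arithmetic explicit; the function names and the 100,000-article example bin are hypothetical and for illustration only.

```python
# Uniform baseline: every article is equally likely to be retracted,
# regardless of the journal in which it was published.
TOTAL_ARTICLES = 9_398_715  # PubMed articles with known-IF journals, 1950-2004 (from the text)
TOTAL_RETRACTED = 596       # retracted among them (from the text)

BASE_RATE = TOTAL_RETRACTED / TOTAL_ARTICLES  # retractions per article


def expected_retractions(n_articles: int) -> float:
    """Expected retractions for a set of n_articles under the uniform baseline."""
    return n_articles * BASE_RATE


def observed_to_expected(n_articles: int, n_retracted: int) -> float:
    """Ratio > 1: more retractions than the baseline predicts; < 1: fewer."""
    return n_retracted / expected_retractions(n_articles)


if __name__ == "__main__":
    print(f"baseline rate per article: {BASE_RATE:.3e}")
    # Hypothetical IF bin of 100,000 articles with 20 retractions (illustrative only).
    print(f"expected retractions: {expected_retractions(100_000):.2f}")
    print(f"observed/expected: {observed_to_expected(100_000, 20):.2f}")
```

For the illustrative bin, the baseline predicts about 6.3 retractions, so 20 observed retractions would be roughly three times the expectation, the kind of excess Fig 1A reports for high-IF bins.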

Figure 1.

Dataset, model and estimation of the number of flawed articles in scientific literature. (A) Higher- and lower-impact factor (IF) journals have significantly more than expected and less than expected retracted articles, respectively. Each sphere represents the set of articles within the same IF range; the volume of the sphere is proportional to the set size and its colour represents the middle-of-the-bin IF value. The expected number of retractions is calculated under the assumption that all retractions are uniformly distributed among articles and journals. The red line indicates a hypothetical ideal correlation between the observed and the expected numbers of retractions. (B) and (C) explain the four-parameter graphical model describing our hypothetical stochastic publication–retraction process. (B) Estimated posterior distribution of parameter values for several values of impact-independent scrutiny. (C) Outline of the stochastic graphical model (top) and the posterior mean estimates of the number of articles that should be retracted (with 95% credible interval) plotted against different values of IF. Posterior distributions of estimated number of retractable articles: red- and green-coloured distributions correspond to τ = 0.1 and τ = 1, respectively; horizontal black solid lines indicate the actual number of retracted articles for individual IF bins and journals. The contour distributions represent individual journals, whereas the solid distributions correspond to the whole PubMed corpus binned by the IF value.

Here, we introduce a four-parameter stochastic model of the publication process (Fig 1C) that allows us to investigate both possibilities (see Supplementary Information for full details). The model describes both sides of the publication–retraction process: the rigour with which a journal keeps flawed manuscripts out of print (quality parameters α and θ), and post-publication scrutiny by the scientific community (scrutiny parameters β and τ). Four parameters are needed to allow for both IF-independent and IF-dependent variation in quality and scrutiny (Fig 1C, top). For each journal we compute a normalized impact r (the journal's IF divided by the maximum IF in our collection of journals) and use it to define the strength of IF-dependent quality ($r^{\alpha}$) and IF-dependent scrutiny ($r^{\beta}$).
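A minimal sketch of how the normalized impact r and the two IF-dependent terms could be computed, assuming those terms are the powers $r^{\alpha}$ and $r^{\beta}$ (consistent with the statement that β = 1 yields a linear dependence on IF). How these terms enter the flaw and detection probabilities is specified in the Supplementary Information and is not reproduced here. `MAX_IF` is a hypothetical placeholder, because the maximum IF of the collection is not stated in the text; the three IFs are those quoted for Nature, Science and Biochemistry.

```python
from __future__ import annotations


def normalized_impact(impact_factor: float, max_impact_factor: float) -> float:
    """Normalized impact r: a journal's IF divided by the largest IF in the collection."""
    if not 0 < impact_factor <= max_impact_factor:
        raise ValueError("expected 0 < impact_factor <= max_impact_factor")
    return impact_factor / max_impact_factor


def if_dependent_strengths(r: float, alpha: float, beta: float) -> tuple[float, float]:
    """Return (r**alpha, r**beta), read here as IF-dependent quality and scrutiny."""
    return r ** alpha, r ** beta


if __name__ == "__main__":
    # Hypothetical: the maximum IF among the 4,348 journals is not given in the text.
    MAX_IF = 50.0
    journals = {"Nature": 29.5, "Science": 26.7, "Biochemistry": 4.1}  # IFs from the text
    for name, impact in journals.items():
        r = normalized_impact(impact, MAX_IF)
        # alpha = 0 and beta = 1 mirror the posterior modes reported in the text.
        quality, scrutiny = if_dependent_strengths(r, alpha=0.0, beta=1.0)
        print(f"{name:<12} r={r:.3f}  r^alpha={quality:.3f}  r^beta={scrutiny:.3f}")
```

With α = 0 the quality term equals 1 for every journal, whereas with β = 1 the scrutiny term is r itself, so it grows in direct proportion to IF.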

This probabilistic description allows us to distinguish between the two explanations proposed above for the higher incidence of retractions in high-impact journals. For all values of τ tested, the posterior mode of the IF-dependent quality parameter α is close to 0 (Fig 1B), indicating nearly uniform, IF-independent rigour in pre-publication quality control. Conversely, the posterior mode of the IF-dependent scrutiny parameter β is essentially 1 (Fig 1B), which translates into a linear dependence between a journal's IF and the rigour of post-publication scrutiny. Our data therefore suggest that high-impact journals resemble their lower-impact peers in pre-publication scrutiny but are examined much more meticulously after publication.
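Because r divides every journal's IF by the same maximum, the ratio of two journals' IF-dependent scrutiny terms does not depend on that maximum. Taking the scrutiny term to be $r^{\beta}$ (an assumption consistent with β = 1 giving a linear dependence), a worked comparison using the IFs given in the text for Nature (29.5) and Biochemistry (4.1):

$$
\frac{r_{\mathrm{Nature}}^{\beta}}{r_{\mathrm{Biochem}}^{\beta}}
= \left(\frac{\mathrm{IF}_{\mathrm{Nature}}/\mathrm{IF}_{\max}}{\mathrm{IF}_{\mathrm{Biochem}}/\mathrm{IF}_{\max}}\right)^{\beta}
= \left(\frac{29.5}{4.1}\right)^{\beta}
\approx 7.2^{\beta}
$$

With β = 1 the IF-dependent scrutiny term is about 7.2 times larger for Nature than for Biochemistry, while with α close to 0 the corresponding quality ratio $(29.5/4.1)^{\alpha}$ stays close to 1.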

Our model also allows us to estimate the number of papers that should have been retracted under all plausible explanations of the data permitted by the model (Fig 1C). We estimate that more than 100,000 articles published between 1950 and 2004 ought to be retracted under the more pessimistic scenario (τ = 0.1; red, Fig 1C), and more than 10,000 under the more optimistic one (τ = 1; green, Fig 1C). The gap between retractable and retracted papers is much wider for lower-impact journals (Fig 1C). For example, for Nature (1999–2004 average IF = 29.5) with τ = 1 (optimistic), we estimate that 45–67 articles should have been retracted, whereas only 30 actually were. Science (IF = 26.7) and Biochemistry (IF = 4.1) published nearly identical numbers of papers, yet 45 papers were retracted from Science and only 5 from Biochemistry.
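These estimates also bound how much of the problem retractions actually catch. The short sketch below works through that arithmetic using only the figures quoted in this paragraph and the 596 total retractions, assuming the retracted papers are among the retractable ones. Because the corpus-wide estimates are lower bounds (‘more than’), dividing by them gives an upper bound on the share of retractable papers that were retracted. The helper names are illustrative.

```python
from __future__ import annotations

TOTAL_RETRACTED = 596  # actual retractions in PubMed, 1950-2004 (from the text)


def max_detected_share(retracted: int, retractable_lower_bound: int) -> float:
    """Upper bound on the share of retractable papers that were retracted.

    The retractable count is a lower bound ("more than N"), so retracted / N
    can only overstate the share actually caught.
    """
    return retracted / retractable_lower_bound


def detected_share_interval(retracted: int, low: int, high: int) -> tuple[float, float]:
    """Share retracted when the number of retractable papers lies in [low, high]."""
    return retracted / high, retracted / low


if __name__ == "__main__":
    scenarios = [("tau = 1 (optimistic)", 10_000), ("tau = 0.1 (pessimistic)", 100_000)]
    for label, bound in scenarios:
        share = max_detected_share(TOTAL_RETRACTED, bound)
        print(f"{label}: at most {share:.2%} of retractable papers retracted")
    # Nature, tau = 1: 30 retracted out of an estimated 45-67 retractable (from the text).
    low, high = detected_share_interval(30, 45, 67)
    print(f"Nature: {low:.0%} to {high:.0%} of retractable papers retracted")
```

This gives at most about 6% (τ = 1) or 0.6% (τ = 0.1) for the whole corpus, compared with 45% to 67% for Nature, which illustrates how much wider the gap is outside the most prominent journals.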

Our analysis indicates that although high-impact journals tend to have fewer undetected flawed articles than their lower-impact peers, even the most vigilant journals may host papers that should be retracted. However, the positive relationship between the visibility of research and post-publication scrutiny suggests that technical and sociological progress in information dissemination (the internet, ubiquitous electronic publishing and the open access initiative) inadvertently improves the self-correction of science by making scientific publications more visible and accessible.

Supplementary information is available at EMBO reports online (http://www.emboreports.org).

References

- Budd JM, Sievert M, Schultz TR (1998) Phenomena of retraction: reasons for retraction and citations to the publications. JAMA 280: 296–297

- Martinson BC, Anderson MS, de Vries R (2005) Scientists behaving badly. Nature 435: 737–738

- Stewart WW, Feder N (1987) The integrity of the scientific literature. Nature 325: 207–214

- Wohn DY, Normile D (2006) Korean cloning scandal. Science 312: 980–981
