Abstract

Aims

This study describes the development and field testing of a set of indicators for drug and therapeutics committees (DTCs) in hospitals. It was intended that these indicators should be accessible, useful and relevant in the Australian setting.

Methods

Candidate indicators were written following consultation and data collection. A framework of outcome, impact and process indicators was based on DTC goals, objectives and strategies. The candidate indicators were field tested over a 2-month period in teaching, city non-teaching, rural and private hospitals. The field tests provided response data for each indicator and evaluation of the indicators against criteria for accessibility, relevance, usefulness, clarity and resource utilisation. Consensus on which indicators to accept, modify or reject was reached at a workshop of stakeholders and experts, taking account of the field test results.

Results

Thirty-five candidate indicators were tested in 16 hospitals. Twenty-two had a response from >80% of sites, 23 had a mean relevance rating >3.5, 19 had a mean usefulness rating >3.5, 27 were correctly interpreted by >90% of sites and 25 could be collected in an acceptable time. The most acceptable indicators required the least data collection or provided data deemed useful for purposes other than the field test. At the consensus workshop, 13 indicators were accepted with no or minor change, nine were accepted after major modification and eight were discarded. It was recommended that a further five indicators should be merged or subsumed into one indicator.

Conclusions

This study has developed and field tested a set of indicators for DTCs in Australia. The indicators have been taken up enthusiastically as a first attempt to monitor DTC performance but require ongoing validation and development to ensure continuing relevance and usefulness.

Keywords: Pharmacy and Therapeutics Committees, indicators

Introduction

Drug and Therapeutics Committees (DTCs) have an established place in hospitals, and many consider them pivotal to rational drug use. The proportion of hospitals in Australia with a DTC has risen in recent years: 94% in 1995 [1] compared with 70% in 1982 [2]. The activities of DTCs can be grouped into policy, regulatory and educational activities, with the best mix depending on the problems confronted and the organisational structure in which the committee operates. A survey of DTC activities found that these were related to the type and size of the hospital, but that there was considerable variation even between hospitals of the same type [1]. Moreover, the level of activity fell short of that expected by stakeholders such as clinicians, administrators and patients.

Several authors [3–5] have discussed the requirements for a successful DTC based on the experience of DTC members. There has been little empirical work, however, to measure the performance of DTCs in an objective way. DTC members attending a seminar in 1994 [6] cited a need for measures to monitor performance and the effect of reforms or educational initiatives. Indicators were identified as a useful tool in this context. This paper reports a study to develop and field test indicators for DTCs that are accessible, useful and relevant in the Australian hospital setting.

Methods

A steering committee oversaw the study. Its membership included clinicians, quality improvement experts, DTC members, pharmacists and consumer representatives.

Candidate indicators

The goals, objectives and strategies necessary for effective DTC operation were identified by reference to previous data [1], published literature and consultation with experts on hospital-based drug use. The appropriateness of the goals, objectives and strategies was subsequently confirmed with the DTC in each field test site.

Candidate indicators were written using the framework established in the National Manual of Indicators for Quality Use of Medicines [7] using process, impact and outcome classifications.

The candidate indicators were drafted by the study team, reviewed by the steering committee and pilot tested by 10 Directors of Pharmacy from a range of teaching, non-teaching, city and rural hospitals.

Field tests

Hospitals were invited to be field test sites for the project. The invitation to participate was made specifically to the DTC, most commonly through the Chairman. The selected field test sites included a mix of hospital types (teaching, non-teaching, public and private), settings (city, regional and rural) and geography (four Australian States). Most important, however, was the willingness of sites to participate in the field tests, which required a significant time commitment from DTC members.

A project officer visited each site and met with, typically, two to four DTC members to explain the data collection and evaluation requirements of the field test, including the use of standardised data collection forms that recorded raw data. The DTC at each site was asked to provide responses to as many indicators as possible for the previous 12-month period (January–December 1994). For indicators requiring survey data, it was suggested that the DTC use any relevant data it had or otherwise indicate, in principle, whether the data could be collected over a 12-month period.

At the end of the 2-month field test period, the project officer revisited the sites and, using a semi-structured interview, recorded the response to each indicator question, the accuracy of interpretation and the data collection time. Participants were asked to score the relevance of each indicator for the hospital or health system, and its usefulness as a management or reform instrument for the DTC. Scores were recorded on visual analogue scales from 0 (of no value) to 5 (essential).

Evaluation

The evaluation criteria required that: the data were accessible (assessed as a response from >80% of sites); the indicator was useful for management and reform of the DTC (assessed as a mean usefulness score of >3.5); the indicator was relevant to the hospital or health system (assessed as a mean relevance score of >3.5); the indicator was unambiguous (assessed as correctly interpreted by >90% of sites); and the data collection time was acceptable (assessed as acceptable by >90% of sites). Failure to meet these criteria was a signal to the consensus workshop to modify or discard an indicator.
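The five criteria amount to a simple screening rule applied to each indicator's aggregate field-test data. The sketch below expresses them in Python as a minimal illustration only, assuming that every proportion is taken over the sites that attempted the indicator and that an indicator is flagged for the workshop if it fails any single criterion. The names `IndicatorFieldTest`, `evaluate` and `flag_for_workshop`, and the example indicator `EX1` with its numbers, are hypothetical and are not taken from Table 2.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class IndicatorFieldTest:
    """Hypothetical aggregate field-test summary for one indicator."""
    code: str
    n_sites: int              # assumption: sites that attempted the indicator
    n_responded: int          # sites that answered the indicator question
    usefulness: list[float]   # visual analogue scores, 0 (of no value) to 5 (essential)
    relevance: list[float]    # visual analogue scores, 0 (of no value) to 5 (essential)
    n_correct: int            # sites that interpreted the indicator correctly
    n_time_ok: int            # sites that judged data collection time acceptable


def evaluate(ind: IndicatorFieldTest) -> dict[str, bool]:
    """Apply the five criteria; True means the criterion was met (strict '>' as stated)."""
    return {
        "accessible": ind.n_responded / ind.n_sites > 0.80,
        "useful": mean(ind.usefulness) > 3.5,
        "relevant": mean(ind.relevance) > 3.5,
        "unambiguous": ind.n_correct / ind.n_sites > 0.90,
        "acceptable_time": ind.n_time_ok / ind.n_sites > 0.90,
    }


def flag_for_workshop(ind: IndicatorFieldTest) -> list[str]:
    """Return the criteria an indicator failed (assumption: any failure triggers review)."""
    return [name for name, met in evaluate(ind).items() if not met]


# Hypothetical indicator, not one of the study's codes.
example = IndicatorFieldTest(
    code="EX1",
    n_sites=16,
    n_responded=12,           # 12/16 = 75%, below the 80% threshold
    usefulness=[4.0] * 16,
    relevance=[3.0] * 16,
    n_correct=15,             # 15/16 = 93.75%
    n_time_ok=14,             # 14/16 = 87.5%
)
print(flag_for_workshop(example))  # ['accessible', 'relevant', 'acceptable_time']
```

The strict inequalities follow the wording of the criteria, so, for example, a mean usefulness score of exactly 3.5 does not pass.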

Consensus workshop

The field test results were considered by a consensus workshop, which was charged with accepting, modifying or rejecting each indicator. The workshop categorised each indicator as core (suitable for interhospital comparison) or complementary (suitable for intrahospital comparison over time). Participation at the workshop was by invitation and included representatives from the field test sites, clinical pharmacologists, DTC members, pharmacists, consumer representatives, insurers, administrators and government representatives.

Results

Candidate indicators

Thirty-five candidate indicators were developed. These comprised four outcome, seven impact and 24 process indicators based on the DTC goals, objectives and strategies below:

Goal

- To improve the health and economic outcomes of hospital care resulting from drug use.

Objectives

- To ensure availability of safe, efficacious and cost-effective medicines.
- To ensure affordability of medicines to the hospital.
- To ensure quality (judicious, appropriate and safe) use of medicines.

Strategies

- Policies for availability, affordability and quality use of medicines.
- The Committee and its decisions:
  - an active, credible and sustainable committee;
  - sound and transparent decision-making processes;
  - efficient management of resources.
- Promotion of quality therapeutics through:
  - implementation of standards of care;
  - educational and behavioural interventions.

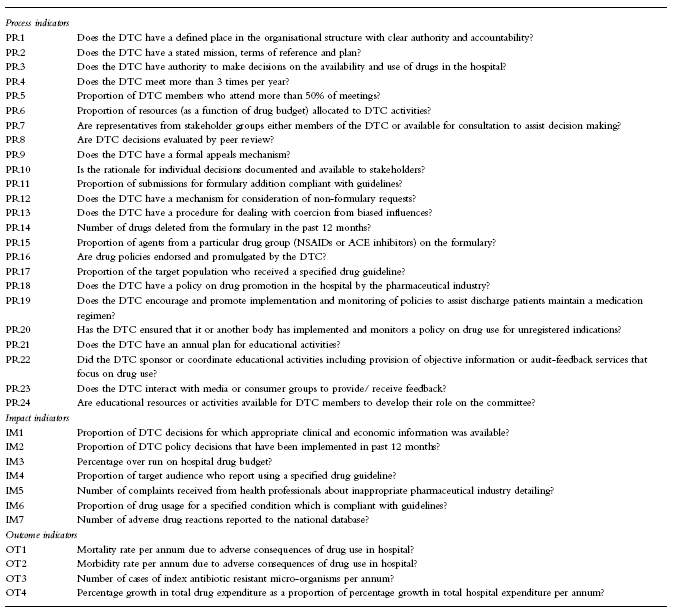

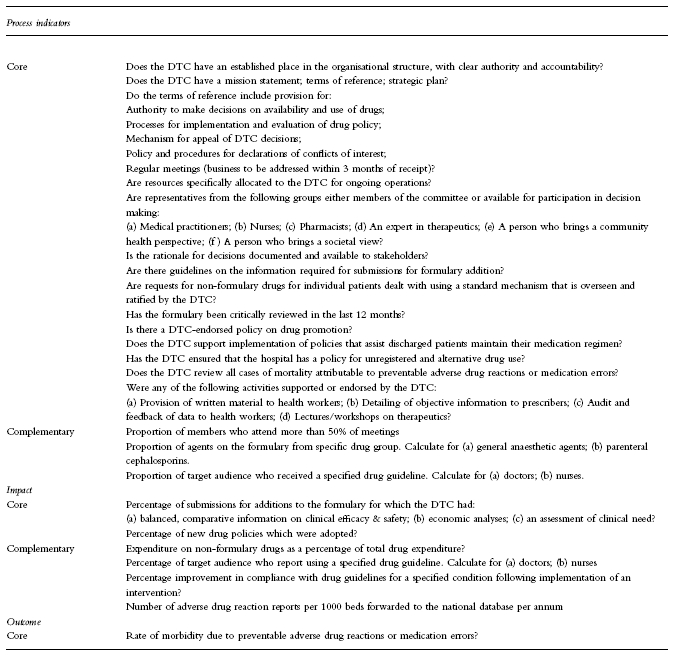

The candidate indicators (Table 1) were presented in standard format, stating the indicator question, response type (e.g., percentage), definitions, purpose, scope, source and method for data collection and limitations of the indicator.

Table 1.

Candidate indicators used in field tests.

Field tests

Indicators were field tested in 16 hospitals: five teaching, three city non-teaching, four regional or rural and four private hospitals. Fifteen hospitals attempted all indicators and one private hospital attempted only a self-selected sample.

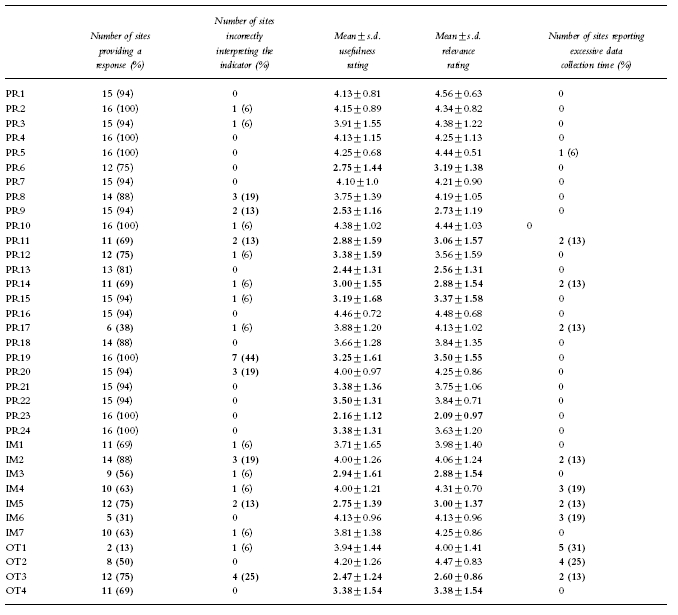

Of the 35 indicators, 22 had a response from >80% of sites. The indicators with a poor response rate all required some data collection, although two (OT1, OT2) should have been available through automatic data capture. The most common reasons for not providing a response were that the activity had not been undertaken in 1996 or a lack of time during the field test period. Sites that did not provide a response to an indicator were still asked to provide usefulness and relevance ratings (Table 2).

Table 2.

Aggregate responses from the field test sites (n = 16).

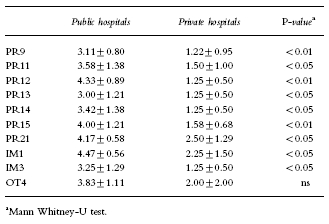

Nineteen of the indicators had a mean usefulness rating >3.5 and 23 had a mean relevance rating >3.5. Reasons for low ratings included: the indicator was perceived as a clumsy or inaccurate measure (PR6, PR14, PR15, IM5, OT3); the indicator measured a low-priority activity (PR19, PR23, IM3); or the activity, while potentially desirable, was not part of current practice (PR9, PR11, PR13). The latter group of indicators scored significantly worse in private hospitals (Table 3).

Table 3.

Mean scores (±s.d.) for public and private hospitals for indicators that demonstrated a significant difference in relevance ratings.

Twenty-seven of the indicators were correctly interpreted by >90% of sites and 25 could be collected in a time frame acceptable to >90% of test sites.

Post hoc analysis revealed that private hospitals often responded differently from public hospitals. Indicators considered irrelevant by all private hospitals (mean relevance score ≤2.0) included PR9, PR11, PR12, PR13, PR14, PR15, PR23, IM1, IM3 and OT4. The mean relevance scores for indicators relating to formulary management and cost containment were significantly lower in private hospitals (Table 3).

Some of the indicators (PR17, IM4, IM6, OT1, OT2) had low response rates because of the complexity of data collection. Their high usefulness and relevance ratings were consistent with comments from sites that they would have provided data if the test period had been longer.

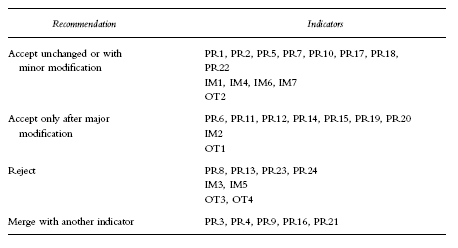

Consensus workshop

Workshop participants recommended that 13 indicators should be accepted essentially unchanged, nine should be accepted after substantial changes and eight should be rejected (Table 4). They also recommended that five indicators should be merged as a discrete sub-question or subsumed into a single question (Table 5).

Table 4.

Recommendations of the consensus workshop.

Table 5.

Final set of indicators for drug and therapeutics committees.

Discussion

This study reports the development and field testing of a set of indicators for drug and therapeutics committees. A collaborative approach was taken to encourage ‘grass roots’ participation in design and selection of indicators. This emphasis was considered essential to the success of the project which required substantial time commitments from those involved.

The design of the indicators has been based on goals, objectives and strategies identified as important to the effective operation of DTCs. Their usefulness for management and reform, relevance to the health system and clarity have been tested. This permits confidence in the face and concurrent validity of the indicators, but does not address the more critical issue of whether compliance with the indicators correlates with quality drug use or, indeed, desirable patient outcomes. Such a validation was beyond the scope of this study but remains an important subject for future work. This set of indicators could therefore be used to monitor DTC performance, assuming the current consensus on best practice is well founded, but would be unlikely to drive a shift to a more effective paradigm.

The indicators found to be most acceptable to field testers were those requiring the least data collection or providing information that was valuable for other management tasks. This is consistent with other work on implementation of performance indicators. A shortcoming, however, is that such indicators are more likely to monitor strategies than the attainment of objectives or goals. They typically identify whether processes and policies are in place, but not whether they influence practice or outcomes. Subsequent work should move toward more impact and outcome indicators, but this will require better-integrated information systems.

There is potential for selection bias in this study. The selected sites agreed to undertake substantial data collection, so only those that believed indicators might have some value were likely to take part. However, of the 18 sites invited to participate in the study, only two declined.

Anecdotally, we have observed changes in several DTCs since field testing. These have included introduction of drug utilisation evaluation, inclusion of consumer representation on the committee and introduction of strategic planning to DTC work.

Other authors have identified several strategies as necessary for DTC effectiveness. Bochner et al. [8] commented that formulary management and drug use evaluation were important for DTCs dealing with capped budgets. Jarry & Fish [9] supported the involvement of practising physicians in DTC decision making to promote acceptance of decisions and, consequently, effective DTC operation. These authors also emphasised that the goal of DTCs should be concerned with total patient care and the total cost of care. Summers & Szeinbach [10] suggested that an effective DTC had educational, communication and advisory roles and was composed of physicians, pharmacists and nursing representatives.

Mannebach et al. [11] have reported a study that focused on the structural and organisational factors affecting DTCs. They identified performance measures such as average length of stay, number of drugs added to and deleted from the formulary, percentage of nonformulary requests approved, efficiency index and casemix index. Some of these indicators were investigated in our study. Field testing found the number of drugs deleted from the formulary to be a fairly meaningless indicator when used in isolation: several test sites commented that variations over time were confounded by the cycle of formulary review, which may be longer than a year. One of the principal findings of Mannebach et al. was that the type and number of drugs on the hospital formulary was a significant factor for all performance measures, which led the authors to conclude that formulary decisions were important determinants of DTC and institutional performance [11]. This finding is supported by our results in public hospitals, where indicators concerning decision making and formulary management were highly scored. However, we did not find the same in private hospitals, which were subject to a different funding system. This highlights the importance of understanding the environment in which a DTC operates before implementing performance measures.

In conclusion, this study has developed and field tested indicators for DTCs in Australia. These indicators have been taken up enthusiastically as a first attempt to monitor performance. Further validation and ongoing development will be essential to ensure the indicators remain relevant and useful to decision makers on DTCs.

Acknowledgments

The authors wish to acknowledge the major contributors to this study: members of the Steering Committee, personnel at the field test sites and participants in the consensus workshop. The study was funded by a grant from the Pharmaceutical Education Program of the Department of Health and Family Services of the Commonwealth of Australia. The authors thank Professor Andrea Mant for her advice in reviewing this manuscript.

References

- 1. Weekes LM, Brooks C. Drug and Therapeutics Committees in Australia: expected and actual performance. Br J Clin Pharmacol. 1996;42:551–557. doi: 10.1111/j.1365-2125.1996.tb00048.x.

- 2. Miller BR, Plumridge RJ. Drug and therapeutics committees in Australian hospitals: an assessment of effectiveness. Aust J Hosp Pharm. 1983;13:61–64.

- 3. Anon. Editors’ roundtable: current formulas for P&T committee success. Hosp Formul. 1987;22(Part 1):288–291.

- 4. Anon. Editors’ roundtable: current formulas for P&T committee success. Hosp Formul. 1987;22(Part 2):373–375.

- 5. Abramowitz PW, Godwin HN, Latiolais CJ, McDougal TR, Ravin RL, et al. Developing an effective P&T committee. Hosp Formul. 1985;6(Part 1):841–843.

- 6. Harvey R. Outcome measures and accountability. Aust Pres. 1995;18(suppl 1):20.

- 7. National Manual of Indicators to measure the effect of initiatives under the Quality Use of Medicine arm of the National Medicinal Drug Policy. Canberra: Commonwealth Department of Human Services and Health, Australian Government Publishing Service; 1994.

- 8. Bochner F, Martin ED, Burgess NG, Somogyi AA, Misan GMH. Drug rationing in a teaching hospital: a method to assign priorities. Br Med J. 1994;308:901. doi: 10.1136/bmj.308.6933.901.

- 9. Jarry PD, Fish L. Insights on outpatient formulary management in a vertically integrated health care system. Formulary. 1997;32:500–514.

- 10. Summers KH, Szeinbach SL. Formularies: The role of pharmacy and therapeutics (P&T) committees. Clin Ther. 1993;15:433–441.

- 11. Mannebach MA, Ascione FJ, Christian R. Measuring performance of pharmacy and therapeutics committees. Proc Am Pharm Assoc Ann Meet. 1996;143:10.