Abstract

The histories of selected public and environmental hazards, from the first scientifically based early warnings about potential harm to the subsequent precautionary and preventive measures, have been reviewed by the European Environment Agency. This article relates the “late lessons” from these early warnings to the current debates on the application of the precautionary principle to the hazards posed by endocrine-disrupting substances (EDSs). Here, I summarize some of the definitional and interpretative issues that arise. These issues include the contingent nature of knowledge; the definitions of precaution, prevention, risk, uncertainty, and ignorance; the use of differential levels of proof; and the nature and main direction of the methodological and cultural biases within the environmental health sciences. It is argued that scientific methods need to reflect better the realities of multicausality, mixtures, timing of dose, and system dynamics, which characterize the exposures and impacts of EDSs. This improved science could provide a more robust basis for the wider and wise use of the precautionary principle in the assessment and management of the threats posed by EDSs. The evaluation of such scientific evidence requires assessments that also account for multicausal reality. Two of the often used, and sometimes misused, Bradford Hill “criteria,” consistency and temporality, are critically reviewed in light of multicausality, thereby illustrating the need to review all of the criteria in light of 40 years of progress in science and policymaking.

Keywords: causality, early warnings, endocrine-disrupting substances, false negatives, ignorance, uncertainty, prevention, proof, surprises

In this article I identify some of the science/policy interface issues relevant to the EDS debate, drawing on several case studies about some relatively well-known hazards of the last 150 years or so. I deal briefly with the contingent nature of scientific knowledge, with uncertainty and ignorance, and with some of the conceptual and definitional issues that arise from the wider and wise application of the precautionary principle to the management of EDSs. As Christoforou (2002) has pointed out, “discussing the precautionary principle is probably the best analytical tool we have to comprehend the complex relationship that exists between science and risk regulation.”

Much of the argument in this article is taken from the report by the European Environment Agency (EEA), “Late Lessons from Early Warnings: the Precautionary Principle 1896–2000” (EEA 2001). I supplement the narrative with observations on several broader but related issues that have dominated discussions on the practical application of the precautionary principle since publication of the EEA report.

Early Use of Precaution

The Vorsorgeprinzip, or “foresight” principle (Kriebel and Tickner 2001; Tickner 2002), emerged as a specific policy tool only during the German debates on the possible role of air pollution as a cause of “forest death” in the 1970s and 1980s (Boehmer-Christiansen 1994; Kriebel and Tickner 2001). John Graham, one of President Bush’s science policy advisors and an otherwise trenchant critic of the precautionary principle, has noted that

Precaution, whether or not described as a formal principle, has served mankind well in the past and the history of public health instructs us to keep the spirit of precaution alive and well. (Graham 2002)

Graham might have been thinking of the Broad Street pump episode of 1854, when precaution did indeed serve the people of London well. At that time, John Snow, a London physician, used the foresight principle to restrict access to polluted water coming from the Broad Street pump, because he suspected that it was the cause of the cholera outbreaks that were plaguing such urban centers in those days (Snow 1936).

Snow’s views on cholera causation were not shared by The Royal College of Physicians, who considered Snow’s thesis and rejected it as untenable (Barau and Greenbough 1992). They and other “authorities” of the day believed that cholera was caused by airborne contamination. This particular scientific “certainty” soon turned out to be certainly mistaken, with the last remaining doubt being removed when Koch in Germany isolated the cholera vibrio (Vibrio cholerae) from water in 1883 (Brock 1998).

It took 30 years of further scientific inquiry to move from the association between cholera and exposure to water polluted with human feces, observed by Snow in 1854, to Koch’s discovery of the mechanism of action. Such a long time lag between acknowledging compelling associations and understanding their mechanisms of action is a common feature of scientific inquiry, as illustrated by the unfolding of the role of endocrine disruption in the histories of tributyltin (TBT), polychlorinated biphenyls (PCBs), diethylstilbestrol (DES), the Great Lakes pollution, and of beef hormones (EEA 2001).

For example, by the 1930s, evidence already existed, albeit at a low level of proof, that PCBs could poison people. This information was not widely circulated among policymakers or other stakeholders until 30 years later when there was a higher level of proof that PCBs could cause serious harm to human health and could accumulate in the Baltic food chain. Had precautionary action at a level of proof less than “beyond reasonable doubt” been acceptable to and applied by policymakers of that era, many years of PCB use would have been avoided. It was not until the 1970s, however, that the first regulatory actions were taken by Sweden to ban these chemicals. The European Union (EU) Directive 96/58/EC to eliminate PCBs was not implemented until 1996, with a total phaseout planned by 2010 (Koppe and Keys 2001). Similarly, it is only after more than half a century of human and wildlife exposures to organochlorine compounds from the Great Lakes that scientists are beginning to comprehend the scale of damage that occurred during that period. This damage happened despite the publication of the book Silent Spring (Carson 1962), which warned us about the potential effects of organochlorine pesticides on wildlife and humans (Gilbertson 2001). A final (but by no means the only) example is that of the history of the anti-miscarriage drug DES, first synthesized in 1938 and identified as an animal carcinogen in the same year. Despite having been found to be ineffective in preventing miscarriage in 1953, DES was widely marketed for the next 17 years, until 1970, when the first evidence of reproductive cancer in humans who had been exposed to the drug in utero was reported. Had DES been withdrawn for use during pregnancy in 1953, the unnecessary and tragic exposure of millions of mothers, sons, and daughters could have been avoided (Ibarreta and Swan 2001).

There are, however, historical examples of chemical contamination in which the precautionary principle has been applied to EDSs. In Arcachon, France, for example, the precautionary principle was applied in the early 1980s when the collapse of the valuable oyster beds was associated with the increased use of boat paint containing the antifoulant TBT (Gibbs 1993). These observations predated analytical techniques sensitive enough to detect the environmental distributions of TBT (Alzieu et al. 1986). However, the association between the explosive increase in the use of TBT, the collapse of the oyster beds, and the known acute toxicity of this compound was sufficient to result in action. The later discovery of widespread imposex in dog-whelks, which was also strongly associated with TBT pollution, gradually led to further national and international actions to ban TBT from the paints used on both inshore and oceangoing vessels (Santillo et al. 2001).

Knowledge and Ignorance Require both Prevention and Precaution

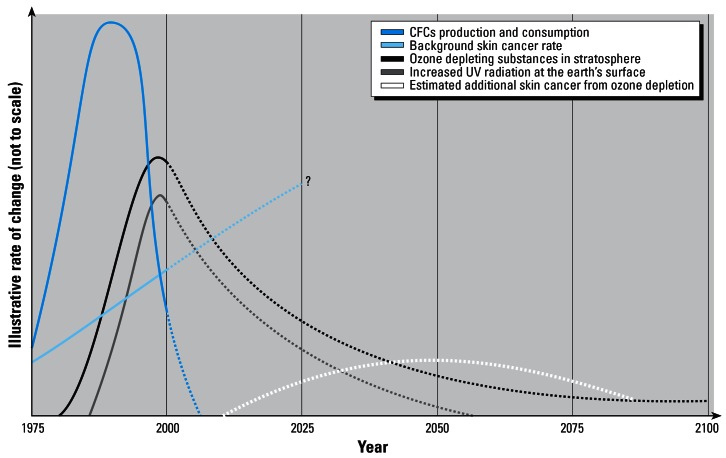

The examples of the Broad Street pump, TBT, DES, PCBs, and Great Lakes pollution described here also illustrate the contingent nature of knowledge. Today’s scientific certainties can be tomorrow’s mistakes, and today’s research can both reduce and increase scientific uncertainties, as the boundaries of the known and the unknown expand. Waiting for the results of more research before taking action to reduce threatening exposures may take decades. Moreover, the new knowledge may identify previously unknown sources of both uncertainty and ignorance, as awareness of what we do not know expands (Figure 1), thereby supplying further reasons for inaction.

Figure 1.

Knowing and not knowing: a dynamic expansion.

“The more we know, the more we realize what we don’t know” is not an uncommon scientific experience. Socrates observed some time ago: “I am the wisest man alive, for I know one thing, and that is that I know nothing” (Helm 1997).

This observation was an early lesson that has been forgotten lately by many scientists and politicians, who often put misplaced certainty in today’s scientific knowledge or assume that uncertainty can only be reduced and not increased by further research.

The distinction between uncertainty and ignorance is important. Ignorance is not knowing what we do not know and is the source of scientific surprises. It is distinct from uncertainties that arise from gaps in knowledge and from variances in sampling and monitoring, parameter variability, model assumptions, and in the other attempts to approximate reality.

Foreseeing and preventing hazards in the context of ignorance presents particular challenges to decision makers. At first sight it seems impossible to do anything to avoid or mitigate surprises such as the appearance of the ozone hole, or of imposex in sea snails, when we have no idea that they are going to occur. Ignorance ensures that there will always be surprises. Notwithstanding this, there are some measures that could help limit the consequences of ignorance and the impacts of surprises:

Using intrinsic properties as generic predictors for unknown but possible impacts, for example, the persistence, bioaccumulation, and spatial range potential of chemical substances (Stroebe et al. 2004).

Reducing specific exposures to potentially harmful agents on the basis of credible early warnings of initial harmful impacts, thus limiting the size of any other surprise impacts from the same agent, such as the asbestos cancers that followed asbestosis; and the PCB neurotoxicological effects that followed its wildlife impacts.

Promoting a diversity of robust and adaptable technological and social options to meet needs, which limits technological monopolies (such as asbestos, chlorofluorocarbons (CFCs), PCBs) and therefore reduces the scale of any surprise from any one option.

Using scenarios and long-term research and monitoring of surprise-sensitive sentinels, such as, possibly, amphibians (Hayes et al. 2006a) to anticipate surprises more readily.

The distinction between prevention and precaution is also important. Preventing hazards from known risks is relatively easy and does not require precaution. Banning smoking, or asbestos, today requires only acts of prevention to avoid the well-known risks. However, it would have required precaution (or foresight, based on sufficient evidence) to have justified acts to avoid exposure to the then uncertain hazards of asbestos in the 1930s–1950s, or to those of tobacco smoke in the 1960s. Such precautionary acts at those times, if implemented successfully, would have saved many more lives in Europe than today’s acts of prevention are doing.

There is much discussion generated by the many different interpretations of concepts such as prevention, precaution, risk, uncertainty, and ignorance in debates on the precautionary principle. In Table 1 I provide information to clarify these and other key terms to help reduce unnecessary arguing about their meanings.

Table 1.

Toward a clarification of key terms.

| Situation | State and dates of knowledge | Examples of action |
|---|---|---|
| Risk | Known impacts; known probabilities, e.g., asbestos | Prevention: action taken to reduce known hazards, e.g., eliminate exposure to asbestos dust |
| Uncertainty | Known impacts; unknown probabilities, e.g., antibiotics in animal feed and associated human resistance to those antibiotics | Precautionary prevention: action taken to reduce exposure to potential hazards |
| Ignorance | Unknown impacts and therefore unknown probabilities, e.g., the surprise of chlorofluorocarbons (CFCs), pre-1974 | Precaution: action taken to anticipate, identify, and reduce the impact of surprises |

From “Late Lessons” (EEA 2001).
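The distinctions in Table 1 can be read as a small decision rule. The following is a minimal sketch of that reading, not part of the EEA report: the names `Situation`, `classify`, and `suggested_action` are hypothetical, and the two boolean inputs are a simplification of what are, in practice, matters of judgment and degree.

```python
from enum import Enum


class Situation(Enum):
    RISK = "risk"
    UNCERTAINTY = "uncertainty"
    IGNORANCE = "ignorance"


# Action labels follow Table 1; the mapping structure itself is illustrative.
ACTIONS = {
    Situation.RISK: "prevention",
    Situation.UNCERTAINTY: "precautionary prevention",
    Situation.IGNORANCE: "precaution",
}


def classify(impacts_known: bool, probabilities_known: bool) -> Situation:
    """Classify a state of knowledge using the two distinctions in Table 1."""
    if not impacts_known:
        # Unknown impacts imply unknown probabilities, whatever the second flag says.
        return Situation.IGNORANCE
    if probabilities_known:
        return Situation.RISK
    return Situation.UNCERTAINTY


def suggested_action(impacts_known: bool, probabilities_known: bool) -> str:
    """Return the Table 1 type of action for a given state of knowledge."""
    return ACTIONS[classify(impacts_known, probabilities_known)]
```

For instance, `suggested_action(True, False)` corresponds to the antibiotics-in-animal-feed row of Table 1, where the impact is known but its probability is not.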

The relatively rare but successful acts of “precautionary prevention” in 1854 regarding cholera in England and in the 1980s regarding TBT in France, together with many examples of failure to use the precautionary principle in other case studies (EEA 2001), illustrate the need for appropriate precautionary action to avoid serious threats to health or environments. Such precautionary action needs to be justified by an appropriate level of scientific evidence regarding the association between hazardous exposures and potential ill health (or environmental damage) without waiting for the certainty of causation, or for the knowledge about mechanisms of action to appear, which can take many decades of further research. As Hegel observed, “the owl of Minerva spreads its wings only with the falling of the dusk” (Hegel 2001). Waiting for the “dusk” of certain knowledge before taking action to reduce exposures, especially when there is a long time between such exposures and serious impacts, would often be too late to avoid costly damage. Precautionary action therefore may need to be taken while the “owl” is still sleeping.

The Precautionary Principle: Some Definitions and Interpretations

The debates on the Vorsorgeprinzip shifted from the national level in Germany to the international level in the 1980s and 1990s, initially in the field of conservation (United Nations 1982), but then particularly in marine pollution, where an overload of data accompanied an insufficiency of knowledge. This situation generated the need to act with precaution to reduce the large amounts of chemical pollution entering the North Sea (Sheppard 1997). Since then, many international treaties have included reference to the precautionary principle, or, as they refer to it in the United States, the precautionary approach. In Appendix 1 I provide examples, including the often-cited version from the Third North Sea Ministerial Conference held 7–8 March 1990 in The Hague. This conference called for action to avoid potentially damaging impacts of substances, even where there is no scientific evidence to prove a causal link between emissions and effects.

This North Sea definition has often and sometimes mischievously been used to deride the precautionary principle by claims that it appears to justify action even when there is no scientific evidence that associates exposures with effects. However, the North Sea conference text on the precautionary principle clearly links the words “no scientific evidence” with the words “to prove a causal link.” We have already seen with the Broad Street pump and TBT examples that a significant difference exists between evidence about an association and evidence that is robust enough to establish a causal link (Bradford Hill 1965). All official definitions require some scientific evidence of an actual or potential association between exposures and current, or potential, impacts. This linkage is made clearer in the widely quoted Wingspread statement on the precautionary principle, which stated the following:

When an activity raises threats of harm to human health or the environment, precautionary measures should be taken, even if some cause-and-effect relationships are not fully established scientifically. In this context the proponent of an activity, rather than the public, should bear the burden of proof. The process of applying the Precautionary Principle must be open, informed and democratic, and must include potentially affected parties. It must also involve an examination of the full range of alternatives, including no action. (Raffensperger and Tickner 1999)

The later international treaties that cite the precautionary principle, such as the Stockholm Convention on Persistent Organic Pollutants (2005), also tend to encourage public participation and transparency.

However, there is still much disagreement and discussion about the interpretation and practical application of the precautionary principle, in part because of this lack of clarity over its definition, and particularly over the sufficiency of good scientific evidence needed to justify public policy action. For example, most definitions use a double negative to define the precautionary principle; that is, they identify reasons that cannot be used to justify not acting. They also fail to identify that a sufficiency of evidence is needed to justify the case-specific action required to avoid serious hazards.

The communication from the EU on the precautionary principle (European Commission 2000) does specify that “reasonable grounds for concern” are needed to justify action under the precautionary principle, but it neither makes explicit that these grounds will be case-specific nor does it explicitly distinguish between risk, uncertainty, and ignorance.

The European Commission communication has been a very useful input to the debates on the precautionary principle, and it has helped initiate what the Commission stressed was “not the last word” but was, rather, the point of departure for a broader study of the conditions in which risks should be assessed, appraised, managed, and communicated. Since the EC Communication, both EU case law and the regulation establishing the new European Food Safety Authority (EFSA; EU 2002) have further clarified the circumstances of use and application of the precautionary principle.

EEA experience over the last 5 years is that much unnecessary debate still arises from the lack of a clearer definition of the precautionary principle itself, as opposed to the clarifications on its application that have emerged from EU case law and secondary legislation. The working EEA definition used in the “Late Lessons” report (EEA 2001) has therefore been improved in light of subsequent discussions and legal developments, and it is provided below in the hope that it will facilitate constructive debate on its interpretation and application.

The Precautionary Principle provides justification for public policy actions in situations of scientific complexity, uncertainty and ignorance, where there may be a need to act in order to avoid, or reduce, potentially serious or irreversible threats to health or the environment, using an appropriate level of scientific evidence, and taking into account the likely pros and cons of action and inaction.

The EEA definition explicitly specifies complexity, uncertainty, and ignorance as contexts for applying the precautionary principle. It also makes clear that a case-specific sufficiency of scientific evidence is needed to justify public policy action to avoid or reduce hazards. The definition is explicit about the tradeoff between action and inaction, and it widens the conventionally narrow, and usually quantifiable, interpretation of costs and benefits to embrace the wider and sometimes unquantifiable pros and cons. Some of these wider issues, such as loss of the ozone layer or of public trust in science, are unquantifiable, but they can sometimes be more damaging to society than the quantifiable impacts. They therefore need to be included in comprehensive risk assessments.

Different Levels of Proof for Different Purposes

The level of proof (or strength of scientific evidence) that would be appropriate to justify public action in each case varies with the pros and cons of action or inaction. These factors include the nature and distribution of potential harm, the benefits of the agent or activity under suspicion, the availability of feasible alternatives, and the overall goals of public policy. Such policy goals can include the achievement of the high levels of protection of public health, of consumer safety, and of the environment.

The use of different levels of proof is not a new idea; societies often use different levels of proof for different purposes. For example, a high level of proof (or strength of evidence) such as beyond all reasonable doubt is used to achieve good science where A is seen to cause B only when the evidence is very strong. Such a high level of proof is also used to minimize the costs of being wrong in the criminal trial of a suspected murderer, where it is usually regarded as better to let several guilty men go free than it is to wrongly convict an innocent man. However, in a civil trial setting where, for example, a citizen seeks compensation for neglectful treatment at work, which has resulted in an accident or ill health, the court often uses a lower level of proof commensurate with the costs of being wrong in this different situation. In compensation cases, an already injured party is usually given the benefit of the doubt by the use of a medium level of proof, such as balance of evidence or probability. It is seen as being less damaging (or less costly in the wider sense) to give compensation to someone who was not treated negligently than it is not to provide compensation to someone who was treated negligently. The “broad shoulders” of insurance companies are seen as able to bear the costs of mistaken judgments rather better than the much narrower shoulders of an injured citizen. In each of these two illustrations, it is the nature and distribution of the costs of being wrong that determines the level of proof (or strength of evidence) that is appropriate to the particular case.
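One way to make the link between the costs of being wrong and the level of proof concrete is a simple expected-cost rule. The sketch below is an illustrative assumption rather than a method proposed in this article: it supposes that the two kinds of error can each be given a single relative cost, which, as noted above for wider and unquantifiable pros and cons, is often not the case. The function name `proof_threshold` and the example cost ratios are hypothetical.

```python
def proof_threshold(cost_false_positive: float, cost_false_negative: float) -> float:
    """Probability of harm above which acting has the lower expected cost.

    cost_false_positive: cost of acting when the suspicion turns out to be wrong.
    cost_false_negative: cost of not acting when the suspicion turns out to be right.

    Acting is preferred when p * cost_false_negative > (1 - p) * cost_false_positive,
    which rearranges to p > cost_false_positive / (cost_false_positive + cost_false_negative).
    """
    if cost_false_positive < 0 or cost_false_negative < 0:
        raise ValueError("costs must be non-negative")
    total = cost_false_positive + cost_false_negative
    if total == 0:
        raise ValueError("at least one cost must be positive")
    return cost_false_positive / total


# Hypothetical ratios, chosen only to mirror the two illustrations in the text.
criminal_trial = proof_threshold(cost_false_positive=9, cost_false_negative=1)
civil_claim = proof_threshold(cost_false_positive=1, cost_false_negative=1)
```

Under these assumed ratios, a wrongful conviction that is treated as nine times as costly as a wrongful acquittal yields a high threshold, whereas equal costs yield a threshold at the balance of probabilities. When the cost of failing to act dominates, the threshold falls well below one-half.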

Identifying an appropriate level of proof has also been an important issue in the climate change debates. The Intergovernmental Panel on Climate Change (IPCC) discussed this issue at length before formulating their 1995 conclusion (IPCC 1995) that “on the balance of evidence,” mankind is disturbing the global climate. They further elaborated on this issue in their 2001 report (IPCC 2001), where they identified seven levels of proof (or strengths of evidence) that can be used to characterize the scientific evidence for a particular climate change hypothesis. Table 2 provides the middle five of these levels of proof and illustrates their practical application to a variety of different societal purposes.

Table 2.

Different levels of proof for different purposes: some examples and illustrations.

| Probability (%) | Quantitative descriptor<sup>a</sup> | Qualitative descriptor | Illustrations |
|---|---|---|---|
| 100 | Very likely (90–99%) | Statistical significance | Part of strong scientific evidence for causation |
| | | Beyond all reasonable doubt | Most criminal law and the Swedish Chemical Law 1973 (McCormick 2001), for evidence of safety of substances under suspicion; burden of proof on manufacturers |
| | Likely (66–90%) | Reasonable certainty | Food Quality Protection Act (1996) |
| | | Sufficient scientific evidence | To justify a trade restriction designed to protect human, animal, or plant health under World Trade Organisation (WTO) Sanitary and Phytosanitary (SPS) Agreement, Article 2.2, (WTO 1994a) |
| | Medium likelihood (33–66%) | Balance of evidence | IPCC (1995, 2001) |
| | | Balance of probabilities | Much civil and some administrative law |
| | | Reasonable grounds for concern | European Commission communication on the precautionary principle (European Commission 2000) |
| | | Strong possibility | British Nuclear Fuels occupational radiation compensation scheme (20–50% probabilities triggering different awards ≥50%, which then triggers full compensation) (Mummery and Alderson 1989) |
| | Low likelihood (10–33%) | Scientific suspicion of risk | Swedish Chemical Law 1973 (McCormick 2001), for sufficient evidence to take precautionary action on potential harm from substances; burden of proof on regulators |
| | | Available pertinent information | To justify a provisional trade restriction under WTO SPS Agreement, Article 5.7, where scientific information is insufficient (WTO 1994b) |
| | Very unlikely (1–10%) | Low risk | Household fire insurance |
| 0 | | Negligible and insignificant | Food Quality Protection Act (1996) |

<sup>a</sup>Probability bands based on IPCC (2001).
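The probability bands in Table 2 can be expressed as a simple lookup. The following is a minimal sketch under stated assumptions: the function name `descriptor` is hypothetical, and because adjacent bands in the table share their boundary values (for example, 66% appears in two bands), the code assumes that a boundary value belongs to the higher band.

```python
from typing import Optional

# (lower bound %, upper bound %, quantitative descriptor), as listed in Table 2,
# ordered from the highest band to the lowest.
BANDS = [
    (90, 99, "Very likely"),
    (66, 90, "Likely"),
    (33, 66, "Medium likelihood"),
    (10, 33, "Low likelihood"),
    (1, 10, "Very unlikely"),
]


def descriptor(probability_pct: float) -> Optional[str]:
    """Return the Table 2 quantitative descriptor for a probability in percent.

    Assumption: a value on a shared boundary is assigned to the higher band,
    because the bands are checked from highest to lowest.
    Values above 99% or below 1% fall in the two outer IPCC levels that
    Table 2 does not list, so None is returned for them.
    """
    for lower, upper, label in BANDS:
        if lower <= probability_pct <= upper:
            return label
    return None
```

A probability judged to be about 50%, for example, falls in the "Medium likelihood" band, which Table 2 associates with qualitative descriptors such as "balance of evidence."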

Bradford Hill was very concerned about the social responsibility of scientists, and he concluded his classic 1965 paper on causation with a “call for action” in which he also proposed the concept of case-specific and differential levels of proof. His three examples ranged from “relatively slight” to “very strong” evidence, depending on the nature of the potential impacts and of the pros and cons in each specific case. These examples included “possibly teratogenic medicine for pregnant women,” probable carcinogen in the workplace, and restrictions on public smoking or diets, respectively (Bradford Hill 1965).

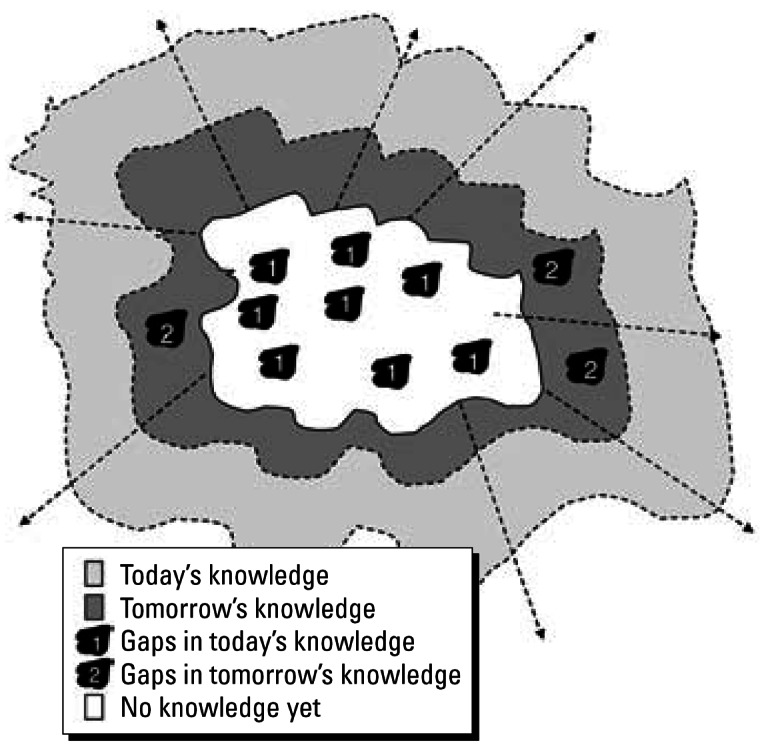

Public Participation in Risk Analysis

Choosing an appropriate level of proof for a particular case is clearly based on value judgments about the acceptability of the costs, and of their distribution, of being wrong in both directions, namely, of acting or not acting to reduce threatening exposures. Therefore, it is necessary to involve the public in decisions about serious hazards and their avoidance, and to do so for all stages of the risk analysis process, as Figure 2 illustrates.

Figure 2.

A framework for risk analysis and hazard control.

The EEA report on the precautionary principle (EEA 2001) concludes with 12 late lessons. These lessons represent an attempt to synthesize the 14 different experiences from the very different case study chapters into generic knowledge that can help inform policymaking for regulating new technologies, including genetically modified organisms (GMOs), nanotechnologies, and mobile phones. The lessons can also provide guidance for regulating EDSs such as phthalates, atrazine, and bisphenol A, for which the luxury of hindsight is not yet available.

The idea of the 12 late lessons is to make the most of past experience to help anticipate future surprises while recognizing that history never exactly repeats itself. When adopted along with the best available science, the lessons will help minimize hazards without compromising innovation. The lessons are listed in Table 3.

Table 3.

Twelve late lessons.

| Identify/clarify the framing and assumptions |
|---|
| 1. Manage risk, uncertainty, and ignorance |
| 2. Identify/reduce “blind spots” in the science |
| 3. Assess/account for all pros and cons of action/inaction |
| 4. Analyze/evaluate alternative options |
| 5. Take account of stakeholder values |
| 6. Avoid “paralysis by analysis” by acting to reduce hazards via the precautionary principle |
| Broaden assessment information |
| 7. Identify/reduce interdisciplinary obstacles to learning |
| 8. Identify/reduce institutional obstacles to learning |
| 9. Use lay, local, and specialist knowledge |
| 10. Identify/anticipate real world conditions |
| 11. Ensure regulatory and informational independence |
| 12. Use more long-term (i.e., decades long) monitoring and research |

Three of the twelve late lessons (numbers 5, 9, and 10) explicitly invite early involvement of the public and other stakeholders at all stages of risk analysis. This approach has been actively encouraged in many other influential reports during the last decade (National Research Council 1994; WBGU 2000; Royal Commission on Environmental Pollution 1998; U.S. Presidential/Congressional Commission on Risk Assessment and Risk Management 1997).

The best available science is therefore a necessary but insufficient condition for sound public policymaking on potential threats to health and the environment. Where there is scientific uncertainty and ignorance, “it is primarily the task of the risk managers to provide risk assessors with guidance on the science policy to apply in their risk assessments” (Christoforou 2003). The content of this science policy advice, as well as the nature and scope of the questions to be addressed by the risk assessors, need to be formulated by the risk managers and relevant stakeholders at the initial stages of the risk analysis, as illustrated in Figure 2. Involving the public not only in all stages of risk analysis but also in helping to set research agendas and technological trajectories (Wilsdon and Willis 2004) is not easy. Many experiments, in both Europe and the United States, with focus groups, deliberative polling, citizen juries, and extended peer review (Funtovicz and Ravetz 1990, 1992), are exploring appropriate ways forward.

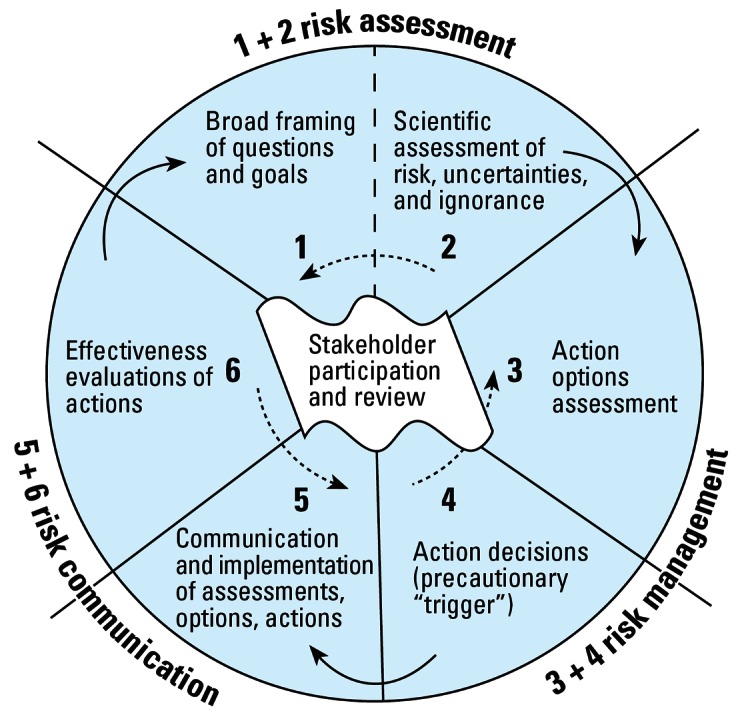

The issue of time is also critical for risk analysis and application of the precautionary principle. For example, the time from the first scientifically based early warnings (1896 for medical X rays, 1897 for benzene, 1898 for asbestos) to the time of policy action that effectively reduced damage, was often 30–100 years. Some consequences of the failures to act in good time (e.g., on CFCs or asbestos) continue to cause damage over even longer time periods. Figure 3 illustrates, with the CFCs example, the importance of such time lags and associated “long tail” consequences. The ozone hole will cause many thousands of extra skin cancers in today’s children but the cancers will only peak around the middle of this century because of the long latent period between exposure and effect.

Figure 3.

CFCs: skin cancer and time lags (EEA 2001).

EDSs also have multigenerational impacts that invite serious consideration of the time lag implications of exposures. Such long-term but foreseeable impacts raise liability and compensation issues, including appropriate discount rates (if any) on future costs and benefits, which, as value-laden choices, also need to be discussed by stakeholder groups. Again, experience with these long-term issues in the climate change field may be helpful in managing them in the EDS field.

Some Early Warnings

The main issues discussed so far, such as the contingency of knowledge, ignorance and surprises, appropriate levels of evidence for policy actions, and public participation in risk analysis, are critical to the successful application of both scientific knowledge and the precautionary principle to public policymaking. They are therefore relevant to discussions about the potentially new hazards that are now emerging, for example, from nanotechnology (Royal Society 2003), from nonionizing radiation arising from the use of mobile phones (Stewart Reports 2004), and from EDSs [World Health Organization (WHO) 2002].

With such newly emerging hazards, it can be helpful to use historical examples to illustrate what a scientifically based early warning looks like because it is often difficult to recognize such warnings properly when they occur. A good example is that provided by the U.K. Medical Research Council’s Swann Committee in 1969. They were asked to assess the evidence for risks of resistance to antibiotics in humans following the prolonged ingestion of trace amounts of antibiotics arising from their use as growth promoters in animal feed (Edqvist and Pedersen 2001). They concluded that

Despite the gaps in our knowledge . . . we believe . . . on the basis of evidence presented to us, that this assessment is a sufficiently sound basis for action. . . . The cry for more research should not be allowed to hold up our recommendations . . . sales/use of AFA should be strictly controlled via tight criteria, despite not knowing mechanisms of action, nor foreseeing all effects. (Swann 1969)

The Swann Committee also concluded that it would be more rewarding and innovative to improve animal husbandry as a means of encouraging disease-free animal growth rather than to employ the cruder approach of feeding the animals diets containing antimicrobials.

Despite the gaps in knowledge, the need for much more research, and considerable ignorance about the mechanisms of action, sufficient evidence was identified and described by the Swann Report to justify the need for public authorities to restrict the possibility of exposures to antibiotics from animal growth promoters.

This early warning was initially heeded, but it was then progressively ignored by the pharmaceutical companies and regulatory authorities, who wanted more scientific justification for restricting antimicrobial growth promoters. However, in 1985 in Sweden, and then in the EU in 1999, the use of antibiotics as growth promoters was finally banned.

Pfizer, the main supplier of such antibiotics in Europe, appealed against the European Commission banning decision, pleading an insufficiency of scientific evidence. They lost this case at the European Court of Justice (2002a, 2002b), and the case further clarified the proper use and application of the precautionary principle in circumstances of scientific uncertainty and of widespread, if low, public exposures to a potentially serious threat.

An example in the United States of an early warning is from the lead in gasoline story. This warning was largely ignored for over 50 years, resulting in much damage to the intelligence and behavior of children in America, Europe, and the rest of the motorized world. Yandell Henderson, Chair of the Medical Research Board, U.S. Aviation Service, who had been asked to look at the scientific evidence on the possible hazards of tetraethyl lead during the temporary ban on lead in gasoline, concluded in 1925 that

It seems likely that the development of lead poisoning will come on so insidiously that leaded gasoline will be in nearly universal use . . . before the public and the government awakens to the situation. (Rosner and Markowitz 2002)

Motorized societies would have gained much in dollars, brainpower, and social cohesion had they heeded this foresight.

An early warning from the EDS field in the United States came in 1966 when Hickey and co-workers documented the effects of DDT and dieldrin on the reproductive health of Lake Michigan herring gulls (Gilbertson 2001; Hickey et al. 1966). A simultaneous EDS early warning in Europe also came from 1966 to 1969, when Jensen observed PCBs in Baltic sea eagles (Koppe and Keys 2001).

False Negatives and False Positives

The 14 case studies in the “Late Lessons” Report (EEA 2001) include several chemicals (TBT, benzene, PCBs, CFCs, methyl tertiary butyl ether, sulfur dioxide, and other Great Lakes pollutants); two pharmaceuticals (DES and beef hormones); two physical agents (asbestos and medical X rays); one pathogen (bovine spongiform encephalopathy); and contaminated fisheries. These case studies are all examples of false negatives because the agents or activities were regarded as not harmful for some time before evidence showed that they were indeed hazardous. We tried to include a false positive case study in the report (i.e., where actions to reduce potential hazards were unnecessary), but we failed to find either authors or sufficiently robust examples to use. Providing evidence of false positives is more difficult than with false negatives (Mazur 2004). How robust should the evidence be and over what periods of time should the evidence be obtained on the absence of harm before investigators conclude that a restricted substance or activity is without significant risk?

Volume 2 of “Late Lessons,” which the EEA intends to publish in partnership with Collegium Ramazzini in 2006, will include a chapter exploring the issues raised by false positives. This chapter will include lessons to be learned from such apparent examples as the EU ban on food irradiation and hazard labeling on saccharin in the United States. The Y2K computer bug story may also carry some interesting lessons. The remaining chapters in Volume 2 will describe false negatives such as those associated with the Aral Sea disaster, bis(chloromethyl)ether, mercury, climate change, and vinyl chloride.

Why are there so many false negatives to write about, and is this relevant to EDSs? Conclusions based on the first “Late Lessons” volume of case studies point in two primary directions. First, there is a bias within the health and environmental sciences toward avoiding false positives, thereby generating more false negatives. Second, there is a dominance among decision makers of short-term, specific, economic and political interests over the longer term, diffuse, and overall interests and welfare of society. The latter point needs to be further explored, particularly within the political sciences. Researchers could examine the ways in which society’s long-term interests can be promoted more effectively within political and institutional arrangements that have, or could have, an explicit mandate to look after the longer-term welfare of society. Such efforts could thereby better resist the short-term pressures of particular economic or political interests. The judiciary in democracies can play part of this role, as can long-running advisory bodies such as the Royal Commission on Environmental Pollution (U.K.) or the German Advisory Council on Global Change (WBGU).

The current and increasing dominance of the short term in markets and in parliamentary democracies makes this kind of proactive foresight an important issue. The experiments we are conducting with planet earth and its ecosystems require more long-term monitoring of surprise-sensitive parameters that could, we hope, give us early warnings of impending harm. Such long-term monitoring requires long-term funding from appropriately designed institutions, and such funding and institutions are in short supply. The case studies in Volume 1 of “Late Lessons” (EEA 2001) illustrate both the great value of long-term monitoring (e.g., in the TBT, DES, Great Lakes, and CFCs stories) and its relative paucity. Such monitoring can contribute to the “patient science” that slowly evolving natural systems require in order that they may be understood better.

Since the publication of “Late Lessons,” we have explored further the first cause of false negatives, namely, the issue of bias within the health and environmental sciences. Table 4 lists 16 features of methods and culture in the environmental and health sciences and shows their main directions of error. Of these, only 3 features tend toward generating false positives, whereas 12 tend toward generating false negatives. This proportion produces robust science, based on strong foundations of knowledge, but it can thereby encourage poor public policy regarding hazard prevention. The goals of science and public policymaking for health and environmental hazards are different. Science places greater priority on avoiding false positives by accepting only very high levels of proof of causality, whereas public policy tries to prioritize the avoidance of false negatives on the basis of sufficient evidence of potential harm.

Table 4.

On being wrong: environmental and health sciences and their directions of error.

| Scientific studies | Some methodological features | Main directions of error^a (increases chances of detecting) |
|---|---|---|
| Experimental studies (animal laboratory) | High doses | False positive |
| | Short (in biological terms) range of doses | False negative |
| | Low genetic variability | False negative |
| | Few exposures to mixtures | False negative |
| | Few fetal–lifetime exposures | False negative |
| | High fertility strains | False negative (developmental/reproductive end points) |
| Observational studies (wildlife and humans) | Confounders | False positive |
| | Inappropriate controls | False positive/negative |
| | Nondifferential exposure misclassification | False negative |
| | Inadequate followup | False negative |
| | Lost cases | False negative |
| | Simple models that do not reflect complexity | False negative |
| Both experimental and observational studies | Publication bias toward positives | False positive |
| | Scientific/cultural pressure to avoid false positives | False negative |
| | Low statistical power (e.g., from small studies) | False negative |
| | Use of 5% probability level to minimize chances of false positives | False negative |

^a Some features can go either way (e.g., inappropriate controls), but most of the features err mainly in the direction shown in this table.

Table 4 is derived from papers presented on the precautionary principle to a conference organized by the Collegium Ramazzini, the EEA, the WHO, and the National Institute of Environmental Health Sciences in 2002 (Grandjean et al. 2003). This table provides a first and tentative step in trying to capture and communicate the main directions of this bias within the environmental and health sciences, a bias of which decision makers and the public should be aware.
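The rows of Table 4 on low statistical power and on the 5% probability level can be made concrete with a short power calculation. The following is only an illustrative sketch: it assumes a two-group comparison of means with a known common standard deviation, a two-sided z-test, and a hypothetical standardized effect size of 0.2. The function names and all numbers are assumptions chosen for illustration and are not taken from any study discussed here.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def power_two_group(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided z-test comparing two group means.

    effect_size is the true difference in means divided by the common
    standard deviation (a hypothetical value, chosen for illustration).
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * (n_per_group / 2) ** 0.5
    # Probability that the test statistic lands in either rejection region.
    return _STD_NORMAL.cdf(shift - z_crit) + _STD_NORMAL.cdf(-shift - z_crit)


def false_negative_rate(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Chance of missing a real effect of the given size."""
    return 1 - power_two_group(effect_size, n_per_group, alpha)


if __name__ == "__main__":
    for n in (20, 500):
        for alpha in (0.05, 0.01):
            rate = false_negative_rate(0.2, n, alpha)
            print(f"n per group={n}, alpha={alpha}: false negative rate={rate:.2f}")
```

Under these assumptions, a small study misses a real but modest effect most of the time, and tightening the probability level to guard further against false positives raises the false negative rate again. This is the trade-off that the table summarizes qualitatively.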

Toward Greater Realism in the Science of EDSs?

The appropriate balance between false negatives and positives was addressed at a Joint Research Council/EEA workshop on the precautionary principle and scientific uncertainty. This workshop was held during the “Bridging the Gap” Conference, 2001, organized by the Swedish Presidency of the EU, in partnership with the EEA and the European Commission Directorate General of the Environment. It drew the following conclusion:

Improved scientific methods to achieve a more ethically acceptable and economically efficient balance between the generation of “false negatives” and “false positives” are needed. (Swedish Environmental Protection Agency 2001)

How this goal can be achieved without compromising science remains to be explored (Grandjean 2004; Grandjean et al. 2004). However, it is clearly necessary, particularly when dealing with EDSs, for scientific methods not only to take into account the false negative/positive bias in methodologies but also to reflect other realities more clearly, including multicausality, thresholds, timing of dose, and mixtures. For example, Hayes et al. (2006a, 2006b) have shown that synergistic effects of atrazine in mixtures of other pesticides at ecologically relevant concentrations can have delayed negative impacts.

Hayes et al. (2006a) also found that pesticide exposures have even more pronounced adverse effects when combined with other environmental stressors, including decreased water level, parasites, and increased population density. Also, Relyea (2003) found that some pesticides were detrimental at much lower concentrations when combined with predator stress.

The case studies on TBT and DES illustrate the relevance of these real-life complexities to the wider EDS issue. For example, the unfolding of the TBT story was accompanied by an increased appreciation of scientific complexity arising from the discoveries that adverse impacts were caused by very low doses (i.e., in parts per trillion); that high exposure concentrations were found in unexpected places (e.g., in the marine microlayer); and that bioaccumulation in higher marine animals, including seafood for human consumption, was greater than expected. The early actions on exposure reduction in France and the United Kingdom from 1982 to 1985 were based on a strength of evidence for the association only; knowledge about causality, mechanisms of action and other complexities came much later.

More recently, pesticides such as atrazine are repeating some of the lessons of TBT. For example, Hayes et al. (2006a, 2006b) report that atrazine can cause hermaphroditism and multiple testes in male amphibians at doses as low as 0.1 ppb, which is 30 times lower than the current U.S. Environmental Protection Agency drinking water standard (U.S. EPA 2006). Moreover, chemical castration of amphibians by atrazine was increased when it was combined with other pesticides typically used on cornfields in Nebraska (Hayes et al. 2006a).

We were lucky in some ways with the TBT story. A highly specific, initially uncommon impact (imposex) was quickly linked to one chemical—TBT. This relatively easily identified linkage is not likely for the more common and multicausal impacts on development and reproduction in animals or in humans, where poor sperm quality, infertility, and breast cancer are the impacts under suspicion.

Key lessons from the DES story are also instructive because it provides the clearest example of endocrine disruption in humans. These lessons include the absence of visible and immediate teratogenic effects (which was wrongly considered robust evidence for the absence of reproductive toxicity) and the timing of the dose determining the poison [in contrast to the well-known “dose determines the poison” dictum of Paracelsus (Ottoboni 1997)]. Timing is also relevant to other biological end points. For example, “the time of life when exposures take place may be critical in defining dose–response relationships of EDSs for breast cancer as well as for other health effects” (WHO 2002). Although the exposure levels were higher than the usual environmental levels of other EDSs, the DES story provides a clear warning about the potential dangers of perturbing the endocrine system with synthetic chemicals.

With over 20,000 publications, DES is a well-studied compound, yet many doubts persist about its mechanisms of action. Because no dose–effect relationship has been found in humans, it cannot be ruled out that DES could have been toxic at low doses, and that other less potent xenoestrogens could have similar effects.

If we still have few certainties about DES after so much time and research, what should our attitude be toward other EDSs, about which we know much less? Many EDSs have either not been studied or have often been studied in isolation in particular strains of laboratory animals that sometimes may be the least susceptible strains.

It is clear from the EDS case studies in the EEA report (2001) and from the science now being reported in this monograph that estrogens and antiandrogens have many effects. These effects can be dose related, dose independent, or even reversed according to the dose, its timing, and the genetic, sexual, and other differences in the host.

It is clear that multiple complexities of reality must be considered in any reasonable assessment of the potential impact of a chemical on the environment. These complexities can include concomitant exposures to natural and synthetic EDSs, as well as to mixtures; hormonal imprinting; cross-talk among endocrine systems; generational impacts; the difficulty of differentiating between benign and adverse impacts; and the effects arising from the disturbance to balances between opposing elements in complex systems. When considering these complications, it is apparent that we are going to be unpleasantly surprised by future EDS stories. We are not capable of avoiding many surprises, but we need much more realistic science and wider use of precaution if we are to minimize their impacts.

The reality of mixtures particularly needs to be considered, as Hayes et al. (2006a) point out. Boone and James (2003) have similarly pointed out that

if we fail to test multifactor hypotheses, we risk proposing solutions that are too simplistic, thus failing to solve environmental problems at the cost of population and species extinction. Single-factor explanations simply may not be sufficient to explain widespread phenomena such as amphibian declines.

The science of EDSs requires more than such realism about mixtures and multicausality. EDS science also needs to consider the asymmetry of measurement precision between gene typing and environmental exposure assessment. As Vineis (2004) has observed, this asymmetry is likely to lead to an underestimation of the effects of the environment and an overestimation of the effects of genes within their interactions.
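The underestimation half of this asymmetry can be illustrated with a small simulation. The sketch below is a toy model, not a reconstruction of any analysis by Vineis: it assumes a hypothetical outcome that depends equally on a gene variable and an environmental exposure, with the gene measured exactly and the exposure observed with random error. All names, coefficients, and sample sizes are assumptions made for illustration.

```python
import random


def slope(x, y):
    """Ordinary least-squares slope of y on a single predictor x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    return cov_xy / var_x


def simulate(n=20000, exposure_error_sd=1.0, seed=0):
    """Return estimated (gene_slope, environment_slope).

    Hypothetical model: outcome = 1.0 * gene + 1.0 * exposure + noise,
    with gene and exposure independent. The gene is measured exactly;
    the exposure is observed with additive random measurement error.
    """
    rng = random.Random(seed)
    gene = [rng.gauss(0, 1) for _ in range(n)]
    exposure = [rng.gauss(0, 1) for _ in range(n)]
    outcome = [g + e + rng.gauss(0, 1) for g, e in zip(gene, exposure)]
    exposure_observed = [e + rng.gauss(0, exposure_error_sd) for e in exposure]
    return slope(gene, outcome), slope(exposure_observed, outcome)


if __name__ == "__main__":
    gene_slope, env_slope = simulate()
    print(f"gene effect estimate:        {gene_slope:.2f}")
    print(f"environment effect estimate: {env_slope:.2f}")
```

In this toy model both true effects are equal, yet the estimate for the imprecisely measured exposure is pulled toward zero while the gene estimate is not. The sketch covers only this attenuation of the environmental effect; it does not model gene-environment interactions.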

The implications of multicausality and of interactions among genes, the host condition, and environmental stressors for research seem not to have been fully recognized elsewhere in the environmental and health science literature. Sing (2004) has noted that

neither genes nor their environments, but their interactions are causations. . . . pretending that the aetiology of common diseases like CVD, cancer, diabetes, and psychiatric disorders are caused by the independent actions of multiple agents is deterring progress.

He calls for “research that reflects the reality of the problem” and notes that

a reductionist approach that has no interest in complexity discourages imaginative solutions. . . . we need an academic environment that puts greater value on how the parts are put together.

The implications of complexity and multicausality for evaluating evidence also need to be addressed. Since 1965 overall evaluations of scientific evidence for policymaking on health hazards have often been based, implicitly or explicitly, on the nine “Bradford Hill Criteria” (which Bradford Hill actually called “features” of evidence rather than “criteria”) that were produced in response to the smoking and health controversy of the 1960s (U.S. Surgeon General 1964; Bradford Hill 1965). However, the criteria have often been misused in debates on EDSs to show that there is little evidence of an association between exposures and harm, when the evidence actually suggests that there may be such a link (Ashby et al. 1997; WHO 2002).

Consistency and temporality, two of the apparently more robust of the nine criteria, may not be so robust in the context of multicausality. For example, consistency of study findings is not always to be expected. Needleman et al. (1979) provided the first of what could be called the second generation of early warnings on lead in gasoline and observed that

Consistency in nature does not require that all or even a majority of studies find the same effect. If all studies of lead showed the same relationship between variables, one would be startled, perhaps justifiably suspicious.

A similar conclusion is valid for EDSs because of multicausality and variable exposure conditions as Oehlmann points out (Oehlmann et al. 2006).

It follows that consistency of results between studies on the same hazard can provide robust evidence for a causal link, but the absence of such consistency may not provide very robust evidence for the absence of a real association. In other words, the criterion of consistency is asymmetrical, like most of the other Bradford Hill criteria.
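A simple calculation shows why inconsistent findings are compatible with a real effect under multicausality. The sketch below assumes a hypothetical agent that raises risk only in individuals who also carry a co-factor (for example, a second exposure or a susceptible genotype); the risk values and prevalences are invented for illustration and do not describe any actual EDS.

```python
def expected_risk_difference(cofactor_prevalence, baseline_risk=0.05, joint_risk=0.15):
    """Expected excess risk among the exposed in one study population.

    Hypothetical model: exposure raises risk from baseline_risk to
    joint_risk only when the co-factor is also present; without the
    co-factor, exposed individuals stay at baseline_risk.
    """
    exposed_risk = (cofactor_prevalence * joint_risk
                    + (1 - cofactor_prevalence) * baseline_risk)
    return exposed_risk - baseline_risk


if __name__ == "__main__":
    # Three imaginary study populations that differ only in co-factor prevalence.
    for prevalence in (0.0, 0.1, 0.8):
        excess = expected_risk_difference(prevalence)
        print(f"co-factor prevalence {prevalence:.1f}: excess risk {excess:.3f}")
```

The same causal agent yields no association, a weak one, or a strong one depending on the population studied, so a set of well-conducted studies can disagree without any of them being wrong.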

Similarly, the criterion of temporality, which says that the putative cause X of harm Y must come before Y appears, is robust in a simple, unicausal world. In a multicausal, complex world of common biological end points that have several chains of causation, this condition of robustness may not necessarily be so. For example, falling sperm counts can have multiple, co-causal factors, some of which may have been effective at increasing the incidence of the biological end point in question in advance of the stressors in focus, thereby confounding the analysis of temporality. It follows that chlorine-based chemicals cannot be dismissed on temporality grounds as a possible causal factor in falling sperm counts just because sperm counts started to fall in some regions before chlorine chemical production took off, as has been argued previously (WHO 2002). Other causal factors responsible for the earlier fall in sperm counts could have been later joined by chlorinated chemicals, whose new, additional effects on sperm counts could have been combined with the impacts of the other, and differentially changing, co-causal factors.

The resulting overall sperm count trends could then be rising, falling, or static, depending on the combined direction and strengths of the co-causal factors and the time lags of their impacts. Chlorine chemicals may or may not be co-causal factors in falling sperm counts, but the use of the temporality argument by the WHO does not provide robust evidence that they are not causally involved.
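The temporality argument can be sketched as a toy time series. The model below is purely hypothetical: it treats each co-causal factor as a linear contribution that starts at an onset year, uses arbitrary time units and rates, and is not fitted to any sperm count data. The names `index`, `EARLIER`, `LATER`, and `WANING_EARLIER` are introduced here only for illustration.

```python
def index(year, factors, baseline=100.0):
    """Value of an outcome index under several co-causal factors.

    Each factor is an (onset_year, rate) pair: it contributes nothing
    before onset_year and lowers the index by `rate` per year afterward.
    A negative rate represents a harm that is receding.
    """
    return baseline - sum(max(0, year - onset) * rate for onset, rate in factors)


# Scenario 1: an earlier factor starts the decline; a later factor adds to it.
EARLIER = [(10, 0.5)]
LATER = [(30, 0.5)]

# Scenario 2: the earlier factor's harm grows until year 30, then recedes.
WANING_EARLIER = [(10, 0.5), (30, -1.0)]

if __name__ == "__main__":
    for year in (0, 20, 30, 50):
        print(year,
              index(year, EARLIER + LATER),
              index(year, WANING_EARLIER + LATER),
              index(year, WANING_EARLIER))
```

In the first scenario the decline begins before the later factor exists, yet that factor accounts for part of the eventual fall. In the second, the overall trend is flat after year 30 even though the later factor is doing harm, because the earlier factor is receding at the same rate. In neither case does the timing of the overall trend show that the later factor is harmless.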

The presence of temporality, like that of consistency, may be robust evidence for an association being causal, but its absence may not provide robust evidence against an association. Bradford Hill was well aware of the asymmetrical nature of his criteria; however, his followers have not always been so aware.

The capacity of Homo sapiens (who should perhaps be called, with less hubris, “Homo stultus” because few, if any, other species consciously destroy their habitats) to foresee and forestall disasters is limited. Armed with greater humility, less hubris, and a wider and wise application of the precautionary principle, we could use the best of more realistic systems and science to foresee and forestall hazards. In doing so, it is hoped, we will have more success than we have had in the last 100 years, while stimulating innovation through the encouragement of diverse and robust technologies.

Appendix 1. The precautionary principle in some international treaties and agreements

Montreal Protocol on Substances that Deplete the Ozone Layer, 1987

“Parties to this protocol... determined to protect the ozone layer by taking precautionary measures to control equitably total global emissions of substances that deplete it. . . .” (United Nations Environment Programme 2000)

Third North Sea Conference, 1990

“The participants . . . will continue to apply the precautionary principle, that is to take action to avoid potentially damaging impacts of substances that are persistent, toxic, and liable to bioaccumulate even where there is no scientific evidence to prove a causal link between emissions and effects.” (Final Declaration of the Third International Conference on Protection of the North Sea 1990)

The Rio Declaration on Environment and Development, 1992

“In order to protect the environment the Precautionary Approach shall be widely applied by states according to their capabilities. Where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation.” (United Nations Environment Programme 1992)

Framework Convention on Climate Change, 1992

“The Parties should take precautionary measures to anticipate, prevent, or minimize the causes of climate change and mitigate its adverse effects. Where there are threats of serious or irreversible damage, lack of full scientific certainty should not be used as a reason for postponing such measures, taking into account that policies and measures to deal with climate change should be cost-effective so as to ensure global benefits at the lowest possible cost.” (UNFCCC 1992)

Treaty of the European Union (Maastricht Treaty), 1992

“Community policy on the environment . . . shall be based on the precautionary principle and on the principles that preventive actions should be taken, that the environmental damage should as a priority be rectified at source, and that the polluter should pay.” (Treaty of the European Union 1992)

Cartagena Protocol on Biosafety, 2000

“In accordance with the precautionary approach the objective of this Protocol is to contribute to ensuring an adequate level of protection in the field of the safe transfer, handling and use of living modified organisms resulting from modern biotechnology that may have adverse effects on the conservation and sustainable use of biological diversity, taking also into account risks to human health, and specifically focusing on transboundary movements.” (Cartagena Protocol on Biosafety 2000)

Stockholm Convention on Persistent Organic Pollutants (POPs), 2001

Precaution, including transparency and public participation, is operationalized throughout the treaty, with explicit references in the preamble, objectives, provisions for adding POPs and determination of best available technologies. The objective states: “Mindful of the Precautionary Approach as set forth in Principle 15 of the Rio Declaration on Environment and Development, the objective of this Convention is to protect human health and the environment from persistent organic pollutants.” (Stockholm Convention on Persistent Organic Pollutants 2001)

References

- Cartagena Protocol on Biosafety. 2000. Article 1, Objective. Montreal, Quebec, CN:Convention on Biological Diversity. Available: http://www.biodiv.org/biosafety/default.aspx [accessed 11 April 2006].

- Final Declaration of the Third International Conference on Protection of the North Sea. Third International Conference on Protection of the North Sea, 7–8 March 1990, The Hague. 1 YB Intl Environ Law. 1990;658:662–673.

- United Nations Environment Programme. 2000. Montreal Protocol on Substances that Deplete the Ozone Layer, 1987. Geneva:United Nations Environment Programme. Available: http://www.unep.org/ozone/pdfs/Montreal-Protocol2000.pdf [accessed April 2006].

- United Nations Environment Programme. 1992. Rio Declaration on Environment and Development. Report of the United Nations Conference on Environment and Development, 1992. Principle 15. Geneva:United Nations Environment Programme. Available: http://www.unep.org/Documents.multilingual/Default.asp?DocumentID=78&ArticleID=1163 [accessed 11 April 2006].

- UNFCCC. 1992. United Nations Framework Convention on Climate Change, 1992. Geneva:Climate Change Secretariat. Available: http://unfccc.int/2860.php [accessed 14 April 2006].

- Stockholm Convention on Persistent Organic Pollutants. 2001. Home Page. Geneva:Stockholm Convention on Persistent Organic Pollutants. Available: http://www.pops.int/documents/convtext/convtext_en.pdf [accessed 11 April 2006].

- Treaty of the European Union. 1992. Eurotreaties: Maastricht Treaty. Gloucestershire, UK:British Management Data Foundation. Available: http://www.eurotreaties.com/maastrichtext.html [accessed 11 April 2006].

Footnotes

This article is part of the monograph “The Ecological Relevance of Chemically Induced Endocrine Disruption in Wildlife.”

The author thanks the European Environment Agency for the opportunity and time to develop the ideas expressed in this article, J. Bailar for his help in producing Table 4, and G. Boschetto and B. Nielsen for helping with the preparation of the article.

Responsibility for the contents of the article lies solely with the author, and the views expressed are his and not those of the EEA or its Management Board.

References

- Alzieu C, Sanjuan J, Deltriel JP, Borel M. Tin contamination in Arcachon Bay: effects on oyster shell anomalies. Mar Poll Bull. 1986;17:494–498.

- Ashby J, Houthoff E, Kennedy SJ, Stevens J, Bars R, Jekat FW, et al. The challenge posed by endocrine-disrupting chemicals. Environ Health Perspect. 1997;105:164–169. doi: 10.1289/ehp.97105164.

- Barua D, Greenhough WB III, eds. 1992. Cholera. New York: Plenum.

- Boehmer-Christiansen S. 1994. The precautionary principle in Germany: enabling government. In: Interpreting the Precautionary Principle (O’Riordan T, Cameron J, eds). London:Cameron and May, 31–68.

- Boone M, James S. Interactions of an insecticide, herbicide, and natural stressors in amphibian community mesocosms. Ecol Appl. 2003;13:829–841.

- Bradford Hill A. The environment and disease: association or causation? Proc Royal Soc Med. 1965;58:295–300. doi: 10.1177/003591576505800503.

- Brock TD (ed/transl). 1998. In Milestones in Microbiology: 1556 to 1940. Washington, DC:ASM Press.

- Carson R. 1962. Silent Spring. Boston:Houghton Mifflin.

- Christoforou T. 2002. Science, law, and precaution in dispute resolution on health and environmental protection: what role for scientific experts? In: Le commerce international des organisms genetiquement modifies. Marseilles:Centre d’Etudes et de Recherches Internationales et Communautaires, Universite d’Aix-Marseille, 111.

- Christoforou T. The precautionary principle and democratising expertise: a European legal perspective. Science Public Policy. 2003;30:205–221.

- Edqvist L, Pedersen KB. 2001. Antimicrobials as growth promoters: resistance to common sense. In: Late Lessons from Early Warnings: The Precautionary Principle 1896–2000 (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Environment Issue Report, no. 22. Copenhagen:European Environment Agency, 64–72.

- EEA. 2001. Late Lessons from Early Warnings: The Precautionary Principle 1896–2000. Environment Issue Report, no. 22. (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Copenhagen:European Environment Agency.

- EU (European Union). General Food Law Regulation. EC no. 178/2002. Off J EU L31:0.02.2002.

- European Commission. 2000. Communication from the Commission on the Precautionary Principle. COM (2000) 1 Brussels:European Commission.

- European Court of Justice. 2002a. Case T-13/99, Pfizer. ECR II-3305, 11 September 2002.

- European Court of Justice. 2002b. Case T-70/99, Alpharma. ECR II-3495, 11 September 2002.

- Food Quality Protection Act. 1996. Public Law 104–17.

- Funtowicz S, Ravetz J. 1990. Uncertainty and Quality in Science for Policy. Amsterdam:Kluwer.

- Funtowicz S, Ravetz J. 1992. Three types of risk assessment and the emergence of post-normal science. In: Social Theories of Risk (Krimsky S, Golding D, eds). Westport, CT:Praeger, 251–273.

- Gibbs PE. A male genital defect in the dog-whelk, Nucella lapillus (Neogastropoda), favouring survival in a TBT-polluted area. J Mar Biol Assoc UK. 1993;73:667–678.

- Gilbertson M. 2001. The precautionary principle and early warnings of chemical contamination of the Great Lakes. In: Late Lessons from Early Warnings: The Precautionary Principle 1896–2000 (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Environment Issue Report, no. 22. Copenhagen:European Environment Agency, 126–134.

- Graham J. Europe’s precautionary principles: promise and pitfalls. J Risk Res. 2002;5(4):371–390.

- Grandjean P. Implications of the precautionary principle for primary prevention and research. Annu Rev Public Health. 2004;25:199–223. doi: 10.1146/annurev.publhealth.25.050503.153941.

- Grandjean P, Soffritti M, Minardi F, Brazier JV, eds. 2003. The Precautionary Principle: Implications of Research and Prevention in Environmental and Occupational Health. An International Conference. Vol 2. Bologna, Italy:Ramazzini Foundation Library. Available: http://www.collegiumra-mazzini.org/links/PPcontentspage.htm [accessed 12 April 2006].

- Grandjean P, Bailar JC, Gee D, Needleman HL, Ozonoff DM, Richter E, et al. Implications of the precautionary principle in research and policy making. Am J Ind Med. 2004;45(4):382–385. doi: 10.1002/ajim.10361.

- Hayes TB, Case P, Chui S, Chung D, Haeffele C, Haston K, et al. Pesticide mixtures, endocrine disruption, and amphibian declines: are we underestimating the impact? Environ Health Perspect. 2006a;114(suppl 1):40–50. doi: 10.1289/ehp.8051.

- Hayes TB, Haston K, Tsui M, Hoang A, Haeffele C, Vonk A. Atrazine-induced hermaphroditism at 0.1 ppb in American leopard frogs (Rana pipiens): laboratory and field evidence. Environ Health Perspect. 2003;111:568–575. doi: 10.1289/ehp.5932.

- Hayes TB, Stuart AA, Mendoza M, Collins A, Noriega N, Vonk A, et al. Characterization of atrazine-induced gonadal malformations in African clawed frogs (Xenopus laevis) and comparisons with effects of an androgen antagonist (cyproterone acetate) and exogenous estrogen (17β-estradiol): support for the demasculinization/feminization hypothesis. Environ Health Perspect. 2006b;114(suppl 1):134–141. doi: 10.1289/ehp.8067.

- Hegel GWF. 2001. Philosophy of Right. Ontario, Canada:Batoche Books. Available: http://socserv2.mcmaster.ca/~econ/ugcm/3ll3/hegel/history.pdf [accessed 5 August 2005].

- Helm JJ, ed. 1997. Plato’s Apology. Revised ed. Wauconda, IL:Bolchazy-Carducci Publishers. Available: http://www.literaturepage.com/read/plato-apology.html [accessed 8 August 2005].

- Hickey JJ, Keith JA, Coon FB. An exploration of pesticides in a Lake Michigan ecosystem. Pesticides in the environment and their effects on wildlife. J Appl Ecol. 1966;3(suppl):141–154.

- Ibarreta D, Swan SH. 2001. The DES story: long-term consequences of prenatal exposure. In: Late Lessons from Early Warnings: The Precautionary Principle 1896–2000 (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Environment Issue Report, no. 22. Copenhagen:European Environment Agency, 84–92.

- IPCC. 1995. Second Assessment Report—Climate Change 1995. Intergovernmental Panel on Climate Change. Available: http://www.ipcc.ch/pub/reports.htm [accessed 11 August 2005].

- IPCC. 2001. Third Assessment Report—Climate Change 2001. Intergovernmental Panel on Climate Change. Available: http://www.ipcc.ch/pub/reports.htm [accessed 11 August 2005].

- Koppe JG, Keys J. 2001. PCBs and the precautionary principle. In: Late Lessons from Early Warnings: The Precautionary Principle 1896–2000 (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Environment Issue Report, no. 22. Copenhagen:European Environment Agency, 64–72.

- Kriebel D, Tickner J. Reenergizing public health through precaution. Am J Public Health. 2001;91(9):1351–1355. doi: 10.2105/ajph.91.9.1351.

- Mazur A. 2004. True Warnings and False Alarms—Evaluating Fears about the Health Risks of Technology, 1948–1971. Washington, DC:Resources for the Future.

- McCormick J. 2001. Swedish Environmental Law. 1973. In: Environmental Policy in the European Union. The European Union Series (McCormick J, ed). New York:Palgrave MacMillan, 12–19.

- Mummery PW, Alderson BA. The BNFL Compensation Agreement for Radiation Linked Disease. J Radiol Prot. 1989;9:179–184.

- National Research Council. 1994. Science and Judgment in Risk Assessment. Washington, DC:National Academy Press.

- Needleman HL, Gunnoe C, Leviton A, Peresie H, Maher C, Barret P. Deficits in psychological and classroom performance of children with elevated dentine lead levels. N Engl J Med. 1979;300:689–695. doi: 10.1056/NEJM197903293001301.

- Oehlmann J, Schulte-Oehlmann U, Bachmann J, Oetken M, Lutz I, Kloas W, et al. Bisphenol A induces superfeminization in the ramshorn snail Marisa cornuarietis (Gastropoda: Prosobranchia) at environmentally relevant concentrations. Environ Health Perspect. 2006;114(suppl 1):127–133. doi: 10.1289/ehp.8065.

- Ottoboni MA. 1997. The Dose Makes the Poison: A Plain-Language Guide to Toxicology, 2nd ed. New York:John Wiley & Sons.

- Raffensperger C, Tickner JA. 1999. Protecting Public Health and the Environment: Implementing the Precautionary Principle. Washington, DC:Island Press.

- Relyea R. Predator cues and pesticides: a double dose of danger for amphibians. Ecol Applic. 2003;13:1515–1521.

- Rosner D, Markowitz G. 2002. Deceit and Denial—The Deadly Politics of Industrial Pollution. Berkeley:University of California Press.

- Royal Commission on Environmental Pollution. 1998. Environmental Standards. London:Royal Commission on Environmental Pollution.

- Royal Society. 2003. Nanoscience and Nanotechnologies: Opportunities and Uncertainties. London:Royal Society. Available: http://www.nanotec.org.uk/finalReport.htm [accessed 5 August 2005].

- Santillo D, Johnston P, Langston WJ. 2001. Tributyltin (TBT) antifoulants: a tale of ships, snails and imposex. In: Late Lessons from Early Warnings: The Precautionary Principle 1896–2000 (Harremoes P, Gee D, MacGarvin M, Stirling A, Keys J, Wynne B, et al., eds). Environment Issue Report, no. 22. Copenhagen:European Environment Agency, 135–148.

- Sheppard C. Biodiversity, productivity and system integrity. Mar Poll Bull. 1997;34(9):680–681.

- Sing CF, Stengard JH, Kardia SLR. Dynamic relationships between the genome and exposures to environments as causes of common human disease. World Rev Nutr Diet. 2004;93:77–91. doi: 10.1159/000081252.

- Snow J. 1936. On the Mode of Communication of Cholera. 2nd ed. Reprinted from 1855. New York:Commonwealth Fund.

- Stewart Report. 2004. Mobile Phones and Health. Independent Expert Group on Mobile Phones Reports. Chilton, UK:National Radiological Protection Board. Available: http://www.iegmp.org.uk/report/text.htm [accessed 8 September 2005].

- Stockholm Convention on Persistent Organic Pollutants. 2005. Home Page. Available: http://www.pops.int/ [accessed August 2005].

- Stroebe M, Scheringer M, Hungerbuhler K. Measures of overall persistence and the temporal remote state. Environ Sci Technol. 2004;38:5665–5673. doi: 10.1021/es035443s.

- Swann MM. 1969. Report of Joint Committee on the Use of Antibiotics in Animal Husbandry and Veterinary Medicine. London:Her Majesty’s Stationery Office.

- Swedish Environmental Protection Agency. 2001. Bridging Sustainability Research and Sectoral Integration. Stockholm: Swedish Environmental Protection Agency. Available: http://www.naturvardsverket.se/dokument/omverket/forskn/fokonf/dokument/bridging_arkiv/index.htm [accessed 30 August 2005].

- Tickner JA. Precautionary principle encourages policies that protect human health and the environment in the face of uncertain risks. Public Health Rep. 2002;117(6):493–497. doi: 10.1093/phr/117.6.493.

- United Nations. 1982. World Charter for Nature. UN General Assembly, 37th Session (UN/GA/RES/37/7). New York:United Nations.

- U.S. EPA. Ground Water & Drinking Water. Consumer Factsheet on: ATRAZINE. Washington, DC:U.S. Environmental Protection Agency. Available: http://www.epa.gov/safewater/dwh/c-soc/atrazine.html [accessed 15 April 2006].

- U.S. Presidential/Congressional Commission on Risk Assessment and Risk Management. 1997. Framework for Environmental Health Risk Management. Final Report. Vol 1. Available: http://www.riskworld.com/Nreports/1997/risk-rpt/pdf/EPAJAN.PDF [accessed 7 September 2005].

- U.S. Surgeon General. 1964. Smoking and Health. Washington, DC:Department of Health and Human Services.

- Vineis P. A self-fulfilling prophecy: are we underestimating the role of the environment in gene-environment interaction research? Int J Epidemiol. 2004;33:945–946. doi: 10.1093/ije/dyh277.

- WBGU (Welt im Wandel). 2000. Handlungsstrategien zur Bewältigung globaler Umweltrisiken. Jahresgutachten 1998 [World in Transition: Strategies for Managing Global Environmental Risks. Annual Report 1998]. Berlin:Springer.

- WHO. 2002. Global Assessment of the State-of-the-Science of Endocrine Disruptors. Geneva:World Health Organization. Available: http://www.who.int/ipcs/publications/new_issues/endocrine_disruptors/en/print.html [accessed 13 September 2005].

- WTO. 1994a. Sanitary and Phytosanitary Agreement. Article 2.2. Marrakesh Agreement Establishing the World Trade Organization. Annex 1A. Geneva:World Trade Organization. Available: http://www.wto.org/english/docs_e/legal_e/legal [accessed 5 August 2005].

- WTO. 1994b. Sanitary and Phytosanitary Agreement. Article 5.7. Marrakesh Agreement Establishing the World Trade Organization. Annex 1A. Geneva:World Trade Organization. Available: http://www.wto.org/english/docs_e/legal_e/legal [accessed 5 August 2005].