Abstract

The current study used fMRI to explore the extent to which neural activation patterns in the processing of speech are driven by the quality of a speech sound as a member of its phonetic category, that is, its category typicality, or by the competition inherent in resolving the category membership of stimuli which are similar to other possible speech sounds. Subjects performed a phonetic categorization task on synthetic stimuli ranging along a voice-onset time continuum from [da] to [ta]. The stimulus set included sounds at the extreme ends of the voicing continuum which were poor phonetic category exemplars, but which were minimally competitive, stimuli near the phonetic category boundary, which were both poor exemplars of their phonetic category and maximally competitive, and stimuli in the middle of the range which were good exemplars of their phonetic category. Results revealed greater activation in bilateral inferior frontal areas for stimuli with the greatest degree of competition, consistent with the view that these areas are involved in selection between competing alternatives. In contrast, greater activation was observed in bilateral superior temporal gyri for the least prototypical phonetic category exemplars, irrespective of competition, consistent with the view that these areas process the acoustic-phonetic details of speech to resolve a token’s category membership. Taken together, these results implicate separable neural regions in two different aspects of phonetic categorization.

Keywords: Neuroimaging, phonetic processing, phonetic category structure, superior temporal gyrus, inferior frontal gyrus

Introduction

The grouping of perceptual objects into categories is an important component of human cognition. Complicating the process of categorization is the fact that categories do not appear to be uniform in their structure. Even in formally defined categories, a given token may be considered to be a better or poorer exemplar of its category depending on the typicality of its features. For instance, a bed is rated as a poorer exemplar of the ‘furniture’ category than is a chair (Rosch, 1975). Additionally, boundaries between categories which exist in close proximity to each other in perceptual space appear to be fuzzy. For instance, the categories of ‘cups’ and ‘bowls’ share a number of physical attributes, such that the boundary between a cup and bowl may vary depending on the physical dimensions of the stimulus, whether or not it has a handle, or the context in which it is displayed (Labov, 1973). As a consequence, the ease or difficulty with which an incoming exemplar is categorized depends both on the typicality of the exemplar as a member of its category and on the degree to which that exemplar is similar to items in a competing category. Speech is a domain in which both of these factors exert an influence on the categorization of speech sounds.

The categorization of an incoming speech sound is contingent on both the quality of the speech sound as a member of its phonetic category as well as the similarity of the sound to other speech sounds in the listener’s inventory. In an interactive spreading activation model of language processing such as TRACE (McClelland & Elman, 1986), the phonetic categories of speech form a network of connections such that the activation of one phonetic category may compete with or influence the activation of another phonetic category. In such a model, a poorer, less prototypical exemplar of the phonetic category activates its category representation more weakly than a prototypical exemplar. This weak activation results in greater time for the activated phonetic category to reach a decision threshold, and should therefore increase the difficulty of the phonetic categorization decision. Thus the ease with which the system resolves phonetic category membership depends on the token’s distance in acoustic-phonetic space from the best or prototypical exemplars of that phonetic category. In addition, the activated phonetic category competes with other phonetic categories for selection. Although any phonetic category may in theory compete with any other phonetic category, more competition is expected when the input is closer in acoustic space and hence is more similar to other categories in the language inventory - for instance if the stimulus falls near a phonetic category boundary.

Behavioral data are consistent with this model. Increased reaction times are observed when subjects are asked to identify tokens which fall near a phonetic category boundary (Pisoni & Tash, 1974). Additionally, reaction-time latencies increase (Kessinger, 1998) and goodness judgments decrease when within-category stimuli are poorer exemplars of their phonetic category (Miller & Volaitis, 1989). Therefore, the degree of competition of a speech sound as a function of its similarity to other phonetic categories and the typicality of a speech sound as a member of its phonetic category are both intrinsic parts of the phonetic categorization process.

As yet, the neural substrates underlying phonetic competition and category typicality are unclear. Earlier work has investigated the neural substrates of phonetic category structure in the context of the perception of voicing in stop consonants (Blumstein, Myers, & Rissman, 2005), and the perception of prototypical and non-prototypical vowels (Guenther, Nieto-Castanon, Ghosh, & Tourville, 2004). However, in both of these studies, the tokens which were poorer exemplars of their phonetic category were those near the phonetic category boundary, and were thus also subject to a greater degree of phonetic competition. Thus, it is not clear whether the activation patterns observed in these studies for near-boundary tokens are driven by the typicality of a speech sound as a member of its phonetic category or by the competition inherent in resolving category membership of stimuli with similar acoustic-phonetic structure. The goal of the present study is to dissociate the effects of competition and category typicality, and to investigate the relative sensitivity of anterior and posterior brain structures to these two aspects of phonetic processing. To this end, it is necessary to identify a speech parameter that allows for a continuum of sounds for which category typicality and competition do not co-vary. As discussed below, a voice-onset continuum distinguishing voiced and voiceless stop consonants provides such a set. We will examine activation patterns for stimuli which are acoustically very far from the phonetic boundary. Although such stimuli are less-typical exemplars of their phonetic category, they are minimally influenced by competition from the contrasting phonetic category. These activation patterns will be compared to stimuli near the voiced-voiceless phonetic boundary. 
These stimuli are also less typical of their phonetic category but, in contrast to the stimuli which are acoustically distant from the phonetic boundary, they manifest a high degree of acoustic-phonetic competition. Thus, it should be possible to separately assess the effects of competition and category typicality on phonetic categorization.

Internal structure of voiceless and voiced stop categories

Structure exists within a phonetic category, with some members of that category serving as better exemplars of that category than others (Kuhl, 1991; Miller & Volaitis, 1989). This is apparent despite the fact that subjects are often poor at discriminating poor and good exemplars within the same category (Liberman, Harris, Hoffman, & Griffith, 1957). For instance, subjects show increased reaction times to stimuli along a voice-onset time (VOT) continuum as tokens approach the category boundary (Pisoni & Tash, 1974). It is also the case that stimuli which are unequivocal in their phonetic category membership may also be poorer members of a phonetic category. Volaitis and Miller showed that voiceless tokens with very long VOTs of 165 msec and greater were judged as poorer phonetic category members than those with shorter VOTs (Miller & Volaitis, 1989; Volaitis & Miller, 1992). Kessinger and Blumstein (manuscript) also showed that stimuli beginning with voiceless stops whose VOT was increased by 4/3 (mean VOT=180 msec, range: 136–225 msec) were rated as poorer exemplars than either unaltered stimuli or those that were increased by 2/3 (mean VOT=129 msec, range: 103–154 msec). It appears, therefore, that phonetic category goodness for voiceless stops begins to decrease for tokens with VOTs of approximately 165–180 msec or greater.

Less is known about the structure of the voiced end of the phonetic category in English speakers. Voiced stops typically have VOTs from 0 to about 30 msec, but many speakers of English also produce pre-voiced stops, where the onset of voicing precedes the burst. Because VOT is defined as the latency between the burst and the onset of voicing, prevoiced stops have negative VOT values. A study by Ryalls et al. (Ryalls, Zipprer, & Baldauff, 1997) which investigated VOT production as a function of race and gender found that the mean VOT for voiced stops ranged from −46 msec to +12 msec VOT depending on the place of articulation of the stop consonant (labial, alveolar, or velar), race and gender. More specifically, VOTs for [d] had the most prevoicing (average VOT ranged from −46 to −7 msec depending on the race and gender of the speaker). Thus, it is likely that tokens with prevoicing of at least −46 msec are still good exemplars of the [d] phonetic category. To date, no studies have investigated the perceptual effects of extreme amounts of prevoicing in English. It is hypothesized that stops in English with very long prevoicing will, like those with very long VOTs, be perceived as poorer members of their phonetic category, and hence will show slower reaction times in identification tasks.

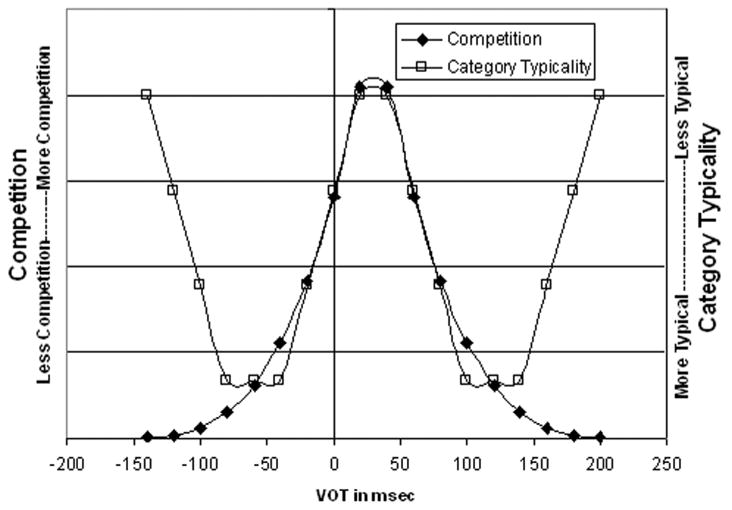

Both phonetic competition and category typicality are expected to vary as a function of internal phonetic category structure. Competition is expected to increase as stimuli become more similar acoustically to the contrasting phonetic category, and as such, competition should be maximal near the category boundary. In contrast, it is expected that the most prototypical exemplars should be near the center of the phonetic category, and that tokens should become less prototypical further from that center, be they near the boundary, or furthest away from the boundary and hence not competing with the contrasting phonetic category (see Figure 1 for a schematic of the hypothesized effects of competition and category typicality along a VOT continuum).

Figure 1.

Hypothesized pattern of competition and category typicality along a voicing continuum. Y-axis scale is in arbitrary units.

In this study, subjects performed a phonetic categorization task (i.e. [da] or [ta]) on stimuli from a VOT continuum. Activation patterns for tokens both near the category boundary (Near-Boundary) and at the ends of the continuum (Extreme) were contrasted with ‘good’ tokens (Exemplar) in order to isolate the effects of phonetic competition from phonetic category typicality. Likewise, activation patterns to tokens near the phonetic boundary (Near-Boundary) were contrasted with tokens further away from the phonetic boundary (Extreme and Exemplar), in order to isolate the effects of competition on activation levels. It is hypothesized that greater activation should be seen in frontal areas for Near-Boundary stimuli due to the increased competition of these tokens to the contrasting (voiced) phonetic category. In contrast, activation for Near-Boundary and Extreme tokens should be greater than that for Exemplar tokens in the STG if this area is, as hypothesized, sensitive to category typicality.

Methods & Materials

Stimuli

Stimuli were generated using the KlattWorks interface (McMurray, in preparation) to the Klatt synthesizer, which combines cascade and parallel methods of synthesis (Klatt, 1980). First, a four-formant pattern with a VOT of 0 msec was generated. Formant values were patterned after the values given for [da] by Stevens & Blumstein (1978). Onset values for F1, F2 and F3 were 200, 1700, and 3300 Hz followed by a piece-wise linear transition of 40 msec to steady state values of 720, 1240, and 2500 Hz. F4 remained steady at 3300 Hz throughout the stimulus. An initial burst was synthesized by manipulating the amplitude of frication, and aspiration noise was added by manipulating the amplitude of aspiration. For the 0 msec token, the aspiration noise was present during the burst alone. The amplitude of voicing started at the onset of the stimulus at a value of 57 dB, transitioned to 60 dB over the next 5 msec, and remained at 60 dB until 30 msec from the end of the stimulus, at which point it dropped piece-wise linearly to 30 dB over those 30 msec. Stimuli with increasing VOTs were generated from this token by delaying the onset of voicing and increasing the duration of the aspiration noise in 10 msec increments. In this way, stimuli were generated varying in 10 msec steps from 0 msec to 190 msec VOT. Total stimulus duration was kept constant at 330 msec by removing 10 msec increments from the steady state portion of the middle of the vowel.

Prevoiced stimuli were also generated from the 0 msec VOT token by using the amplitude of voicing parameter from the parallel synthesizer set at 50 dB beginning before the burst in successive 10 msec intervals. At the same time, a portion of the midpoint of the vowel which was of the same duration as the added prevoicing was removed in order to preserve the stimulus length at 330 msec. Prevoiced stimuli ranging from 0 msec to −190 msec VOT were generated using this method.
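The layout of the continuum described above can be tabulated in a short sketch. The helper name `vot_continuum` and its dictionary fields are illustrative and are not part of the synthesis software; the sketch only lists each token's VOT and the amount of vowel steady state that would be removed to hold duration at 330 msec, and it does not synthesize any audio.

```python
TOTAL_DURATION_MS = 330   # constant stimulus length reported in the text
STEP_MS = 10              # VOT step size
MAX_VOT_MS = 190          # continuum runs from -190 to +190 msec


def vot_continuum():
    """Tabulate the VOT continuum (hypothetical helper, for illustration only)."""
    tokens = []
    for vot in range(-MAX_VOT_MS, MAX_VOT_MS + STEP_MS, STEP_MS):
        # Added aspiration (positive VOT) or prevoicing (negative VOT) is offset
        # by removing the same amount from the vowel steady state.
        tokens.append({
            "vot_ms": vot,
            "prevoiced": vot < 0,
            "vowel_removed_ms": abs(vot),
            "duration_ms": TOTAL_DURATION_MS,
        })
    return tokens


continuum = vot_continuum()
print(len(continuum), "tokens from",
      continuum[0]["vot_ms"], "to", continuum[-1]["vot_ms"], "msec")
```

Because the 0 msec token is shared by the voiced and prevoiced series, this tabulation yields one entry per distinct VOT value.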

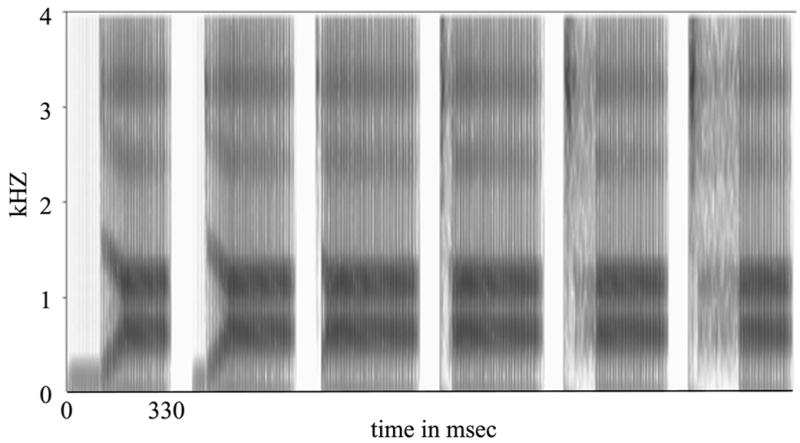

For the stimulus with VOT of 0 msec, F0 was 104 Hz at the onset of the stimulus (coincident with the burst), increased to 125 Hz at 75 msec after the burst, and then fell piece-wise linearly to 97 Hz at the end of the stimulus. For stimuli with a VOT greater than or equal to 0 msec, this pitch contour was kept constant. For prevoiced stimuli, F0 was 104 Hz during the prevoicing period, then followed the same contour as for stimuli with VOTs of 0 msec or greater. Spectrograms of representative stimuli are shown in Figure 2.

Figure 2.

Spectrogram of stimuli used. From left, stimuli have VOTs of −100, −40, 20, 40, 100, and 160 msec.

Based on a series of pilot experiments, six stimuli were selected for subsequent use in the pilot and fMRI experiments. Tokens were chosen which had equal spacing in VOT between members of the same phonetic category, and in which reaction times in pilot studies were not significantly different between those near the phonetic category boundary and those at the extreme ends of the continuum. The stimuli were equated for reaction-time latencies so that potential differences in activation between these two categories cannot be attributed to differences in general difficulty. These were tokens at −100, −40, 20, 40, 100, and 160 msec VOT. Stimuli from the ends of the continuum (−100 and 160 msec VOT) were referred to as Extreme stimuli, those from the middle of the continuum (−40 and 100 msec VOT) were referred to as Exemplar stimuli, and those close to the voiced-voiceless phonetic category boundary (20 and 40 msec VOT) were referred to as Near-Boundary stimuli.
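As a quick check on the stimulus selection, the following sketch records the category assigned to each of the six tokens and confirms the equal VOT spacing within each phonetic category. The names `CATEGORY`, `VOICED`, `VOICELESS`, and `spacing` are illustrative; the grouping of tokens into [da] and [ta] follows the categorization reported in the pilot results.

```python
# VOT (msec) -> stimulus category, as listed in the text
CATEGORY = {
    -100: "Extreme", 160: "Extreme",
    -40: "Exemplar", 100: "Exemplar",
    20: "Near-Boundary", 40: "Near-Boundary",
}
VOICED = [-100, -40, 20]      # tokens categorized as [da]
VOICELESS = [40, 100, 160]    # tokens categorized as [ta]


def spacing(vots):
    """Differences between neighbouring VOT values within one phonetic category."""
    ordered = sorted(vots)
    return [b - a for a, b in zip(ordered, ordered[1:])]


print(spacing(VOICED), spacing(VOICELESS))   # [60, 60] [60, 60]
```

Both categories are spaced in 60 msec steps, whereas the two Near-Boundary tokens are only 20 msec apart across the boundary.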

For the fMRI experiment, two sine wave tones were generated at 720 Hz (“Low” tone) and 1250 Hz (“High” tone), which corresponded to the steady-state frequencies of F1 and F2 respectively for the syllable stimuli. These tones were equal in length (330 msec) to the syllable stimuli.

Participants

Nine subjects participated in the behavioral pilot experiment in the lab. Fifteen subjects who did not participate in the pilot experiment (7 males) participated in the MRI experiment. MR subjects ranged in age from 18 to 27 (mean age = 23.2 ± 5.00). All subjects were native speakers of English. MR subjects were strongly right-handed, as confirmed with an adapted version of the Oldfield Handedness Inventory (Oldfield, 1971). MR subjects reported no known hearing loss or neurological impairment, and were screened for MR compatibility before entering the scanner. Subjects in both the pilot and MRI experiments gave informed consent in accordance with the Human Subjects policies of Brown University and Memorial Hospital of Rhode Island, and received modest monetary compensation for their participation.

Behavioral Procedure and Apparatus

Pilot experiment

In the pilot experiment, subjects were asked to listen to each stimulus and decide whether it began with a [t] or a [d] sound, and to press a corresponding button with their dominant hand. Subjects were instructed to respond as quickly and accurately as possible. They were presented with 20 repetitions of each of six stimuli, presented in a random order.

MR experiment

The MR experiment consisted of four runs: two phonetic categorization runs and two tone categorization runs. During each of the phonetic categorization runs, subjects heard 20 repetitions of each VOT stimulus, for 40 total repetitions of each stimulus in the entire experiment. Likewise, during each tone categorization run, subjects heard 20 repetitions of each tone stimulus, for 40 total repetitions of each tone stimulus. Stimuli within each run were presented in a fixed, pseudo-randomized order. Stimuli were presented via noise-attenuating headphones (Resonance Technologies) at a comfortable volume. Responses were registered via a button box placed next to the subject’s right hand, and RT and accuracy data were collected. Subjects received alternating phonetic and tone categorization runs, starting with a phonetic categorization run.

The instructions to the subjects for the phonetic categorization task were the same as for the pilot experiment. For the tone task, subjects were asked to indicate whether the tone they heard was the Low or High tone, and to press a corresponding button. In addition, subjects were instructed to keep their eyes closed and to remain as still as possible during functional scanning. Subjects were familiarized with all stimuli during the anatomical scan, and were given 10 practice trials of each task type.

MR Imaging

Scanning was performed using a 1.5 T Symphony Magnetom MR system (Siemens Medical Systems, Erlangen, Germany). Participants’ heads were aligned to the center of the magnetic field, and a 3D T1-weighted magnetization prepared rapid acquisition gradient echo (MPRAGE) sequence was acquired for anatomical co-registration (TR=1900 msec, TE=4.15 msec, TI=1100 msec, 1 mm³ isotropic voxel size, 256 x 256 matrix). Functional scans were acquired using a multi-slice, ascending interleaved echo-planar imaging (EPI) sequence (15 slices, 5 mm thick, 3 mm² axial in-plane resolution, 64 x 64 matrix, 192 mm³ FOV, FA=90°, TE=38 msec, TR=2000 msec). The center of the imaged slab was aligned to the center of the thalamus, which allowed for imaging of the bilateral peri-sylvian cortex, including at least the entire IFG, STG, and posterior two-thirds of the MTG, in all subjects. Each functional volume acquisition lasted 1200 msec, followed by 20 msec of scanner-added time, plus 780 msec of added silence which was programmed into the acquisition sequence. The result was a clustered design (Blumstein et al., 2005; Edmister, Talavage, Ledden, & Weisskoff, 1999) in which each TR consisted of 1200 msec of scanning, followed by 800 msec of silence, for a total TR of 2 seconds.

Auditory stimulus presentation was timed such that each stimulus fell in the center of the silent period between scans, starting 235 msec into the silent period. Stimuli were distributed into trial onset asynchrony (TOA) bins of 2, 4, 6, 8, 10 and 12 seconds using a Latin Squares design. The average TOA was 7 seconds. Each syllable run lasted 14 minutes, 8 seconds (422 EP volumes collected), and each tone run lasted 4 minutes and 48 seconds (142 EP volumes collected).
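The timing figures above follow from simple arithmetic, sketched here. The constant names are illustrative, and the mean TOA calculation assumes that the six bins were used equally often, as a Latin Squares design would imply.

```python
SCAN_MS = 1200        # volume acquisition
SILENCE_MS = 800      # 20 msec scanner-added time + 780 msec programmed silence
STIMULUS_MS = 330     # syllable and tone duration
TOA_BINS_S = [2, 4, 6, 8, 10, 12]

tr_ms = SCAN_MS + SILENCE_MS
# Centering the stimulus leaves equal silence before and after it
onset_in_silence_ms = (SILENCE_MS - STIMULUS_MS) / 2
# Assumes each TOA bin is used equally often
mean_toa_s = sum(TOA_BINS_S) / len(TOA_BINS_S)

print(tr_ms, onset_in_silence_ms, mean_toa_s)   # 2000 235.0 7.0
```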

In addition, two functional volumes were automatically added to the beginning of each run to account for T1 saturation effects. These volumes were not saved by the scanner. Stimulus presentation began four total volumes after the onset of each run to avoid saturation effects.
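The reported run lengths and volume counts can be reproduced under one reading of the timing: each run consists of four lead-in volumes followed by one mean TOA (7 seconds) per trial, and two of the acquired volumes are discarded by the scanner. This reading is an assumption, since the sequence logic is not spelled out in the text, and the helper `run_summary` is illustrative.

```python
TR_S = 2
MEAN_TOA_S = 7
LEAD_IN_VOLUMES = 4      # stimulus presentation began four volumes into the run
UNSAVED_VOLUMES = 2      # automatically added volumes that were not saved


def run_summary(n_trials):
    """Return (duration in seconds, saved volumes) for a run with n_trials stimuli."""
    duration_s = LEAD_IN_VOLUMES * TR_S + n_trials * MEAN_TOA_S
    saved_volumes = duration_s // TR_S - UNSAVED_VOLUMES
    return duration_s, saved_volumes


syllable = run_summary(6 * 20)   # six VOT tokens x 20 repetitions per run
tone = run_summary(2 * 20)       # two tones x 20 repetitions per run
print(syllable, tone)            # (848, 422) (288, 142)
```

Under these assumptions a syllable run lasts 848 seconds (14 minutes, 8 seconds) with 422 saved volumes, and a tone run lasts 288 seconds (4 minutes, 48 seconds) with 142 saved volumes.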

Data Analysis

Behavioral analysis: Pilot and MR Experiments

Reaction time means and categorization data were collected and binned into the stimulus categories: Extreme, Exemplar, and Near-Boundary. Repeated-measures within-subjects ANOVAs were carried out on the RT and categorization variables for syllables in these three categories.
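For readers who want to see the computation, here is a minimal sketch of a one-way repeated-measures ANOVA on per-subject category means. The function `rm_anova` and the demonstration values are hypothetical; this is not the software used in the study, and it returns only the F ratio, degrees of freedom, and error mean square, without a p-value.

```python
def rm_anova(scores):
    """One-way repeated-measures ANOVA.

    scores: list of per-subject rows, one value per condition.
    Returns (F, df_condition, df_error, MSE).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Subject variability is removed from the error term
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    mse = ss_error / df_error
    return (ss_cond / df_cond) / mse, df_cond, df_error, mse


# Hypothetical mean RTs (msec) for three subjects: Extreme, Exemplar, Near-Boundary
demo = [[640, 600, 660], [700, 655, 730], [590, 570, 615]]
F, df1, df2, mse = rm_anova(demo)
print(f"F({df1},{df2}) = {F:.2f}, MSE = {mse:.1f}")
```

With three categories, nine subjects give degrees of freedom of (2, 16) and fifteen subjects give (2, 28), which is the form of the F tests reported in the Results.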

MR analysis

Image Preprocessing

Functional datasets were co-registered to the anatomical dataset using parameters from the scanner. Functional datasets were corrected for slice acquisition time (Van de Moortele et al., 1997) on each run separately, using the slice acquisition times given by the scanning protocol. Runs were concatenated, and motion-corrected using a six-parameter rigid-body transform (Cox & Jesmanowicz, 1999). Functional datasets were resampled to 3 mm isotropic voxels, transformed to Talairach and Tournoux space through the selection of landmarks in the anatomical dataset, and spatially smoothed with a 6 mm full-width at half-maximum Gaussian kernel.
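To give a sense of the scale of the smoothing step, the sketch below converts the 6 mm kernel width at half-maximum to a Gaussian standard deviation, in millimeters and in units of the 3 mm resampled voxels. It uses the standard relation between the full width at half maximum and sigma; the variable names are illustrative.

```python
import math

FWHM_MM = 6.0     # smoothing kernel width reported in the text
VOXEL_MM = 3.0    # resampled isotropic voxel size

# For a Gaussian, FWHM = 2 * sqrt(2 * ln 2) * sigma
sigma_mm = FWHM_MM / (2 * math.sqrt(2 * math.log(2)))
sigma_vox = sigma_mm / VOXEL_MM

print(f"sigma = {sigma_mm:.3f} mm = {sigma_vox:.3f} voxels")
```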

Statistical Analysis

Each subject’s preprocessed functional data were submitted to a regression analysis using AFNI’s 3dDeconvolve. For this analysis a vector was created for each stimulus type (one for each of the six VOT stimuli, one for each of the two tone stimuli) which indicated the start time of each stimulus. These functions were convolved with a stereotypic gamma-variate function (Cohen, 1997) in order to model the predicted time course of activation across the experiment. These eight reference functions were included as regressors in the analysis. The six parameters output by the motion-correction process (roll, pitch, and yaw, plus x, y, and z translation) were also included as regressors in order to remove motion artifacts, and mean and linear trends were also removed from the data. The 3dDeconvolve analysis returned by-voxel fit coefficients for each condition and each subject’s data. Voxels which were not sampled for all fifteen subjects were masked from the final results. The raw fit coefficients for each subject and condition were then converted to percent change for each subject by dividing each voxel-wise fit coefficient for each condition by the experiment-wise mean for that voxel.
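The construction of a reference function and the percent-change conversion can be illustrated with a small sketch. It is not AFNI's implementation: the gamma-variate parameters `b=8.6` and `c=0.547` are assumed values rather than values stated in the text, onsets are snapped to the TR grid for simplicity, the scaling by 100 is an assumption about how percent change was expressed, and all function names are hypothetical.

```python
import math

TR_S = 2.0


def gamma_variate(t, b=8.6, c=0.547):
    """t**b * exp(-t/c), scaled so the peak (at t = b*c) equals 1.

    The values of b and c are assumptions, not taken from the text.
    """
    if t <= 0:
        return 0.0
    peak = (b * c) ** b * math.exp(-b)
    return t ** b * math.exp(-t / c) / peak


def reference_function(onsets_s, n_volumes, kernel_s=16.0):
    """Convolve stimulus onsets (a stick function) with the gamma-variate kernel."""
    kernel = [gamma_variate(i * TR_S) for i in range(int(kernel_s / TR_S))]
    predicted = [0.0] * n_volumes
    for onset in onsets_s:
        start = int(round(onset / TR_S))   # simplification: snap onsets to the TR grid
        for lag, weight in enumerate(kernel):
            if start + lag < n_volumes:
                predicted[start + lag] += weight
    return predicted


def percent_change(fit_coefficient, voxel_mean):
    """Fit coefficient expressed relative to the voxel's experiment-wise mean.

    Multiplying by 100 is an assumption about how 'percent' was scaled.
    """
    return 100.0 * fit_coefficient / voxel_mean


# Hypothetical onsets (seconds) for one stimulus type in a 30-volume run
regressor = reference_function(onsets_s=[4, 16, 30], n_volumes=30)
print(percent_change(fit_coefficient=5.0, voxel_mean=1000.0))   # 0.5
```

In the full analysis, one such reference function per stimulus type would enter the regression alongside the motion parameters and the mean and linear trend terms.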

A mixed-factor ANOVA was carried out on the percent signal change maps with subjects as a random factor and stimulus condition as a fixed factor. The planned comparisons for this study were Exemplar vs. Tones, Near-Boundary vs. Extreme, Near-Boundary vs. Exemplar, and Extreme vs. Exemplar. Statistical maps for each comparison were submitted to a clustering analysis which removed all activation which was not part of a cluster of at least 63 contiguous voxels activated at a voxel-wise level of p<0.025, which, based on Monte Carlo simulations, yielded a corrected cluster-wise threshold of p<0.05.
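The cluster-extent criterion can be sketched as follows. This is an illustration rather than the analysis software's implementation: it treats voxels as contiguous when they share a face, which is an assumption because the text does not define contiguity, and it does not reproduce the Monte Carlo simulations that produced the 63-voxel extent. The function `cluster_threshold` and the demonstration map are hypothetical.

```python
from collections import deque

# Face-adjacent neighbours; the text does not say how contiguity was defined.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_threshold(p_map, p_voxel=0.025, min_voxels=63):
    """Return voxels with p < p_voxel that belong to a large-enough cluster.

    p_map: dict mapping (x, y, z) voxel indices to voxel-wise p-values.
    """
    candidates = {v for v, p in p_map.items() if p < p_voxel}
    surviving, seen = set(), set()
    for seed in candidates:
        if seed in seen:
            continue
        # Breadth-first search collects one connected cluster
        cluster, queue = {seed}, deque([seed])
        seen.add(seed)
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in candidates and nb not in seen:
                    seen.add(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_voxels:
            surviving |= cluster
    return surviving


# Hypothetical map: a 4 x 4 x 4 block (64 voxels) plus one isolated voxel
p_map = {(x, y, z): 0.01 for x in range(4) for y in range(4) for z in range(4)}
p_map[(10, 10, 10)] = 0.001
print(len(cluster_threshold(p_map)))   # 64
```

The isolated voxel is removed even though its p-value is lower than that of the block, because it is not part of a cluster that meets the extent criterion.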

In order to explore patterns of activation within these functionally defined clusters, mean percent signal change was extracted for each syllable condition and each subject, and those means were entered into a within-subjects ANOVA with Syllable Condition as the fixed factor. This was done for those clusters which fell within areas which were hypothesized on the basis of previous research to play a role in phonetic competition or category typicality, namely the bilateral STG, the bilateral IFG, and cingulate gyrus.

Results

Pilot Experiment

Because the behavioral responses of English-speaking participants to extremely prevoiced tokens have, to our knowledge, not been tested, a pilot experiment was run in the lab in which participants performed a phonetic categorization task on [da]-[ta] tokens varying in VOT. Based on preliminary pilot data, six stimuli were chosen: three of these (−100, −40, and 20 msec VOT) fell into the [da] category, and three (40, 100, and 160 msec VOT) fell into the [ta] category. Stimuli from the ends of the continuum (−100 and 160 msec VOT) were referred to as Extreme stimuli, those from the centers of their respective phonetic categories (−40 and 100 msec VOT) were referred to as Exemplar stimuli, and those close to the voiced-voiceless phonetic category boundary (20 and 40 msec VOT) were referred to as Near-Boundary stimuli. Subjects heard 20 repetitions of each stimulus, and were asked to decide for each stimulus presentation whether they heard [ta] or [da].

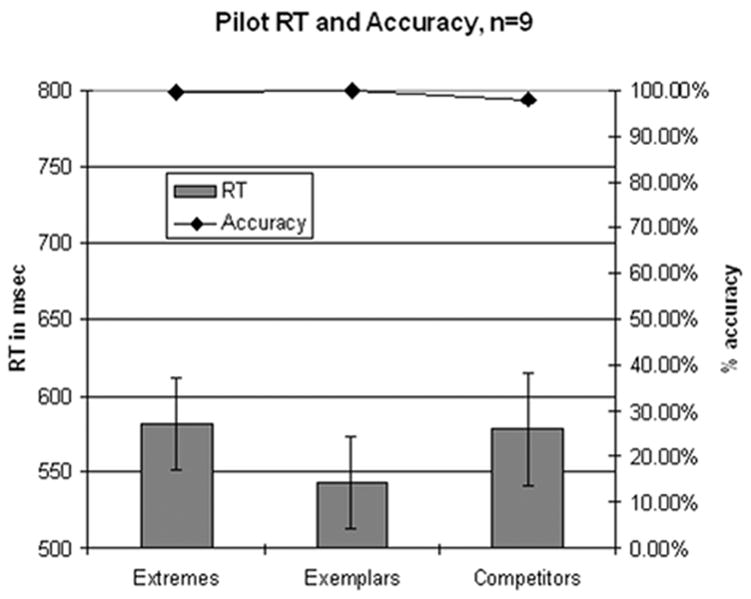

Reaction time and percent [da] responses are displayed grouped by stimulus category in Figure 3. As expected, subjects showed near-perfect consistency in identification of all stimuli, with categorizations of 96% or better. Reaction times increased both for the Extreme stimuli as well as the Near-Boundary stimuli. A within-subject repeated measures ANOVA was performed on the mean RTs for the Extreme, Exemplar, and Near-Boundary categories. There was a main effect of VOT category (F(2,16)=3.087, MSE=1304.7, p<0.073) which approached significance. In post-hoc tests, a significant difference was found between Extreme and Exemplar stimuli, but no other significant differences emerged. These six stimuli became the stimulus set for the subsequent fMRI experiment.

Figure 3.

Reaction time and identification results from the pilot experiment (n=9). Results shown grouped by stimulus category, error bars indicate standard error of the mean.

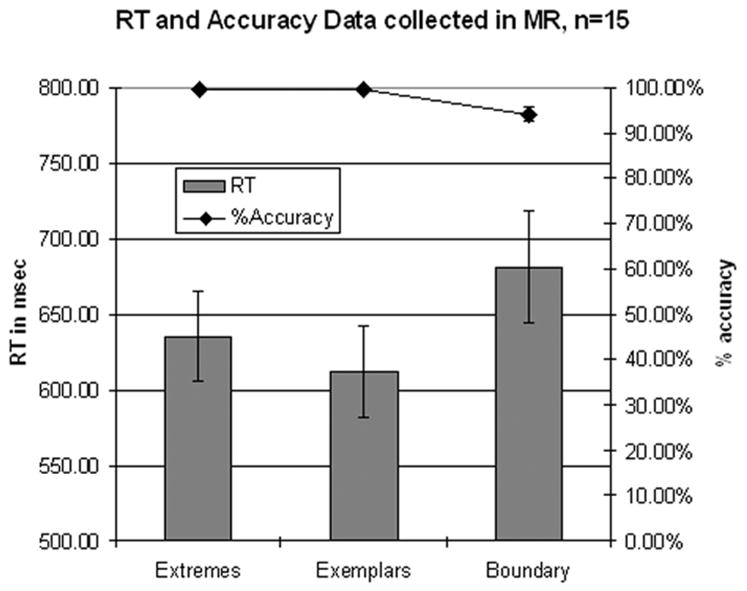

Behavioral results in the scanner

Categorization and reaction time data for all subjects run in the scanner are shown as a function of VOT category in Figure 4. The categorization data showed that subjects perceived stimuli categorically with near-perfect consistency on all tokens except the 20 msec VOT token, which was identified as a [d] 83% of the time. Results of an ANOVA performed on the categorization data for stimuli binned into Extreme, Exemplar, and Near-Boundary categories revealed a main effect of VOT category on accuracy (F(2,28)=12.084, MSE=0.001, p<0.001). Post-hoc Newman-Keuls tests revealed significant differences between the Near-Boundary stimuli and the other categories, but no difference between the Extreme and Exemplar categories.

Figure 4.

Behavioral data collected in the scanner (n=15). Error bars indicate standard error of the mean. Data grouped by stimulus category.

Reaction time data showed the expected pattern, with slower reaction times for stimuli near the voiced-voiceless boundary (20 and 40 msec VOT), and at the ends of the continuum (−100 and 160 msec) than those closer to the center of the phonetic category (−40 and 100 msec VOT). An ANOVA performed on the RT means for the Extreme, Exemplar, and Near-Boundary categories revealed a significant effect of VOT category on RT (F(2,28)=15.535, MSE=1196.3, p<0.001). Post-hoc tests showed that this effect was due to a significant difference between Near-Boundary stimuli and both Extreme and Exemplar categories. No difference was observed between Extreme and Exemplar categories.

Statistical Comparisons

Statistical maps were generated for all of the planned comparisons, namely Near-Boundary vs. Extreme, Near-Boundary vs. Exemplar, Extreme vs. Exemplar, and Tone vs. Exemplar. The Tone vs. Exemplar comparison is not discussed here (although see Table 1 for the results of this analysis), as it does not bring evidence to bear on the distinction between category typicality and competition processes in phonetic categorization. These maps were corrected for multiple comparisons at a cluster-level significance of p<0.05 (63 contiguous voxels activated at p<0.025 or greater) and are shown in Table 1.

Table 1.

Areas of activation revealed in planned comparisons, thresholded at a voxel-level threshold of p<0.025, cluster-level threshold of p<0.05 (63 contiguous voxels). Coordinates indicate the location of the maximum intensity voxel for each cluster in Talairach and Tournoux (1988) space, and the t-value is the value at that voxel.

| Anatomical Region | Maximum intensity x | Maximum intensity y | Maximum intensity z | Number of activated voxels | t-value |
|---|---|---|---|---|---|
| Near-Boundary > Extreme | | | | | |
| L Inferior Frontal Gyrus | −50 | 20 | −1 | 672 | 3.053 |
| R Inferior Frontal Gyrus | 47 | 17 | −4 | 365 | 2.577 |
| L Cingulate Cortex | −2 | 23 | 39 | 297 | 4.264 |
| R Superior Parietal Lobule | 41 | −59 | 51 | 146 | 3.585 |
| Near-Boundary > Exemplar | | | | | |
| L Inferior Frontal Gyrus | −53 | 14 | −4 | 949 | 4.939 |
| L Cingulate Cortex | −2 | 17 | 42 | 583 | 6.191 |
| R Inferior Frontal Gyrus | 47 | 17 | −4 | 518 | 4.429 |
| R Cuneus | 2 | −92 | 27 | 214 | 3.554 |
| R Superior Parietal Lobule | 26 | −68 | 54 | 201 | 4.270 |
| L Superior Parietal Lobule | −32 | −65 | 54 | 194 | 3.241 |
| L Middle Temporal Gyrus | −65 | −32 | 3 | 191 | 3.490 |
| R Middle Temporal Gyrus | 62 | −38 | 3 | 114 | 3.041 |
| Extreme > Exemplar | | | | | |
| R Middle Temporal Gyrus | 65 | −26 | −1 | 119 | 3.055 |
| L Superior Temporal Gyrus | −62 | −11 | 9 | 78 | 2.409 |
| L Posterior Cingulate | −2 | −44 | 15 | 63 | 2.422 |
| Tones > Exemplar | | | | | |
| R Inferior Frontal Gyrus | 47 | 17 | −4 | 351 | 2.929 |
| L Precentral Gyrus | −59 | −14 | 36 | 243 | 3.739 |
| L Superior Temporal Gyrus | −59 | −2 | 9 | 125 | 2.406 |
| R Precentral Gyrus | 59 | −20 | 21 | 122 | 3.238 |
| L Inferior Parietal Lobule | −38 | −41 | 45 | 78 | 4.082 |
| L Superior Temporal Gyrus | −47 | −11 | 3 | 63 | 3.571 |

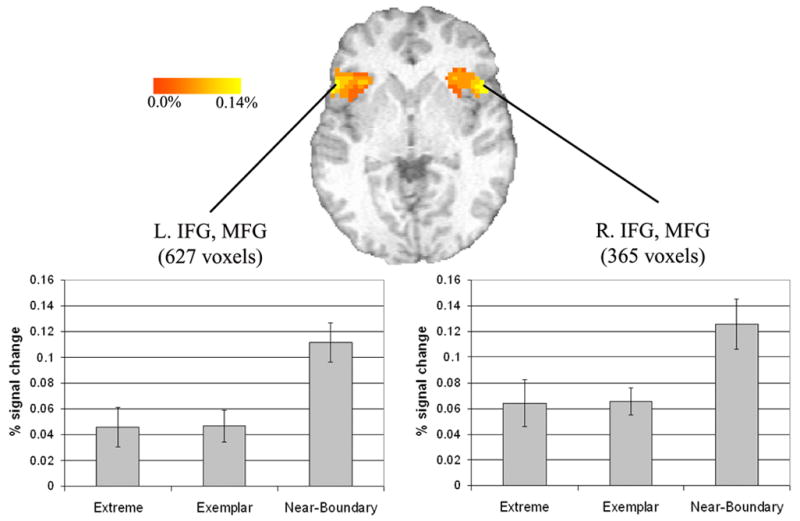

Near-Boundary vs. Extreme

This comparison was designed to isolate the effects of competition from the effects of category typicality with near-boundary stimuli expected to show increased frontal activation compared to the extreme stimuli owing to increased phonetic competition. All clusters active in this comparison were more active for the Near-Boundary than the Extreme condition. There were two large clusters in the bilateral inferior frontal gyri, in both cases extending dorsally into the middle frontal gyri (Figure 5). Post-hoc Newman-Keuls tests on mean percent signal change data extracted from the clusters (Figure 5, bottom) revealed that in both areas, the Near-Boundary stimuli had significantly greater activation than the other two categories (p<0.01), but there was no significant difference between Exemplar and Extreme categories.

Figure 5.

Left and right inferior frontal clusters active in the Near-Boundary vs. Extreme contrast. Axial slice shown at z=0, clusters active at a corrected threshold of p<0.05. Bar graphs show the extracted mean percent signal change across all voxels in the pictured cluster. Error bars indicate standard error of the mean.

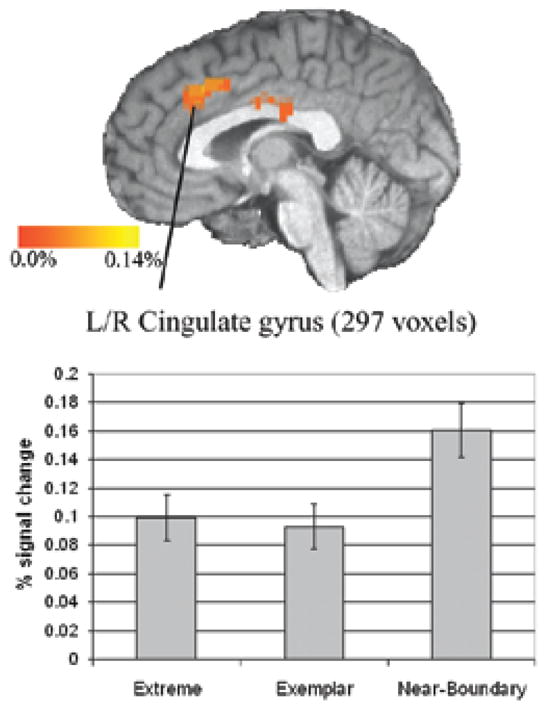

In addition to the bilateral inferior frontal gyrus clusters, there was a large midline cluster activated which was centered in the cingulate gyrus and extended into the anterior cingulate (Figure 6). Similar to the inferior frontal clusters, post hoc tests revealed that this cluster showed significantly greater activation for Near-Boundary stimuli than the other two categories, and no difference between Extreme and Exemplar categories. Finally, there was a cluster in the right superior parietal lobule which extended into the IPL.

Figure 6.

Cluster in the cingulate gyrus active for the Near-Boundary vs. Extreme comparison. Sagittal slice shown at x=0, clusters active at a corrected threshold of p<0.05. Bar graphs show the extracted mean percent signal change across all voxels in the pictured cluster. Error bars indicate standard error of the mean.

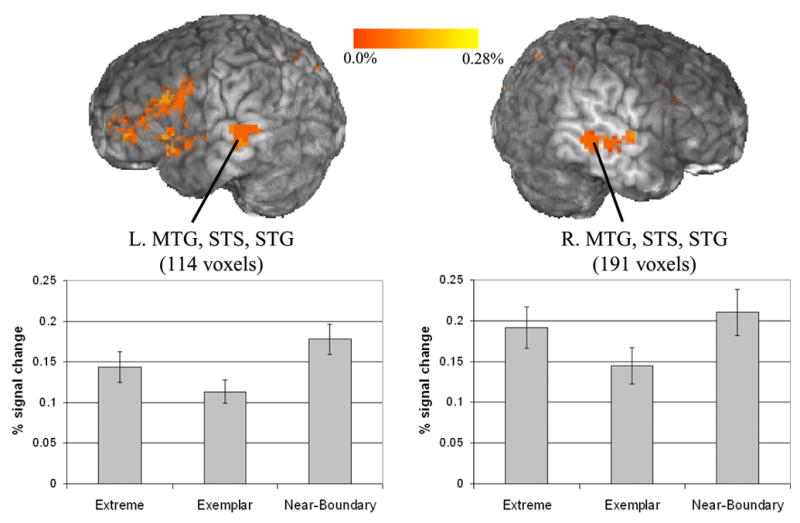

Near-Boundary vs. Exemplar

This comparison was designed to test the effects of both competition and typicality on activation levels with increased activation for the near-boundary stimuli expected in frontal areas owing to increased competition and increased activation expected in temporal areas owing to typicality. A number of clusters were activated for the comparison between Near-Boundary and Exemplar stimuli. As expected, all clusters showed more activation for Near-Boundary stimuli than Exemplar stimuli. Four of these clusters, including the left and right IFG, cingulate, and right superior parietal lobule, were nearly identical in placement and extent to those activated in the Near-Boundary vs. Extreme comparison.

Of interest, a cluster emerged which was centered in the left medial MTG and which extended into the left superior temporal sulcus (STS) and then into the left STG. A symmetric cluster was observed in the right MTG, STS, and STG (Figure 7). Mean percent signal change data extracted from both clusters revealed greater activation for both Extreme and Near-Boundary stimuli than for Exemplar stimuli. Post hoc tests on mean percent signal change values in the left MTG cluster revealed significant differences between all three stimulus types. In the right MTG cluster, the Exemplar stimuli showed significantly less activation than both the Near-Boundary and Extreme stimuli, but no difference emerged between the Near-Boundary and Extreme categories.

Figure 7.

Left and right superior temporal clusters active in the Near-Boundary vs. Exemplar contrast. Clusters active at a corrected threshold of p<0.05. Bar graphs show the extracted mean percent signal change across all voxels in the pictured cluster. Error bars indicate standard error of the mean.

Significant activation also emerged in a midline cluster centered in the cuneus and extending into the posterior cingulate, and in the left superior parietal lobule, extending into the left IPL.

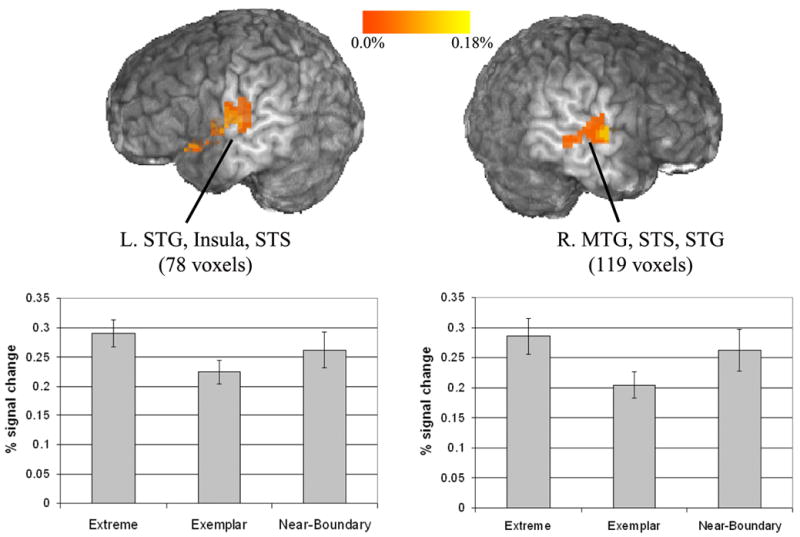

Extreme vs. Exemplar

The comparison between Extreme and Exemplar stimuli was designed to isolate the effects of typicality from the effects of phonetic competition, with increased activation expected in temporal areas owing to the poor category goodness of the Extreme stimuli. Two temporal clusters were activated, both with more activation for the Extreme than for the Exemplar tokens. The right temporal cluster was very similar to the right MTG cluster defined in the Near-Boundary vs. Exemplar contrast. The left hemisphere cluster was centered in the left STG and extended into the superior temporal sulcus and the left insula, but did not reach the left MTG (Figure 8).

Figure 8.

Left and right superior temporal clusters active in the Extreme vs. Exemplar contrast. Clusters active at a corrected threshold of p<0.05. Bar graphs show the extracted mean percent signal change across all voxels in the pictured cluster. Error bars indicate standard error of the mean.

Post hoc Newman-Keuls tests on the mean percent signal change extracted from the left temporal cluster revealed that the difference between Exemplar and Near-Boundary conditions was significant (p<0.01), while the difference between Exemplar and Extreme tokens approached significance. There was no significant difference between Extreme and Near-Boundary conditions. Likewise, post hoc tests in the right temporal cluster showed that Exemplar stimuli had significantly less activation than either Extreme or Near-Boundary stimuli, while there was no difference between Extreme and Near-Boundary stimuli.

General Discussion & Conclusion

The goal of the present study was to decouple the relative effects of phonetic category prototypicality and phonetic competition on activation in anterior and posterior peri-sylvian areas as subjects performed a phonetic categorization task. Results were consistent with the hypothesis that inferior frontal areas are maximally sensitive to the competition between phonetic categories which arises especially when stimuli are near the phonetic category boundary. In contrast, superior temporal areas were shown to be sensitive to the category typicality of stimuli, with more activation for less-prototypical exemplars, regardless of whether they were close to the phonetic boundary (Near-Boundary) or far from that boundary (Extreme).

Behavioral Results

The pattern of behavioral results for subjects run in the scanner showed increased reaction times as stimuli neared the phonetic category boundary. Such an increase may arise because these tokens were less-prototypical exemplars of their phonetic category, because of the competition created by their proximity to the phonetic category boundary, or both. Reaction times also increased for those stimuli which were less-prototypical exemplars of the phonetic category but which minimized competition by having extreme VOT values. These results indicate that subjects were sensitive to the typicality of a token as a member of its phonetic category, irrespective of the extent of phonetic competition. In contrast to the pilot experiment, however, which showed significantly longer RTs only to Extreme stimuli, reaction times for subjects run in the scanner were significantly longer for stimuli near the category boundary than for those at the extreme ends of the continuum. This result appears to be due in large part to the effect of the 20 msec VOT token, which showed much longer reaction times than any of the other tokens. Reaction-time latencies to the 40 msec near-boundary stimulus and the extreme 160 msec voiceless stimulus were very similar (638 msec ±35.0 vs. 618 msec ±30.0, respectively).

fMRI Results

Competition and the inferior frontal gyri

Similar to data reported in Blumstein et al. (2005), activation emerged in the left inferior frontal gyrus as a function of the degree of competition expected to arise from the contrasting phonetic category. Namely, there was increased activation for the Near-Boundary stimuli compared to either the Exemplar or Extreme stimuli. Unlike in the Blumstein et al. study, however, this activation emerged not only in the left but also in the right inferior frontal gyrus. Importantly, increased activation did not emerge in frontal areas for less-prototypical exemplars which were not near the phonetic category boundary when compared to prototypical exemplars (Extreme vs. Exemplar). These results suggest a role for the bilateral inferior frontal gyri in resolving competition, and also indicate that these areas are not generally sensitive to phonetic category typicality. These results also provide evidence that activation in the inferior frontal gyrus is not strictly coupled to the general difficulty of the phonetic decision as measured by reaction time (Binder, Liebenthal, Possing, Medler, & Ward, 2004; Blumstein et al., 2005). Extreme stimuli were poor exemplars of their phonetic category, and as such showed increased reaction times when compared to Exemplar stimuli, but no concomitant increase in activation in the inferior frontal lobes was observed for the Extreme vs. Exemplar comparison.

Activation in the left inferior frontal gyrus often emerges when subjects are required to make an executive decision about a phonetic or phonological property of the incoming stimulus (see Poeppel, 1996 for review), and when subjects are required to make decisions about linguistic stimuli generally. Typically, increased activation is shown for the more difficult condition or task. Thompson-Schill and colleagues (Kan & Thompson-Schill, 2004a; Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997; Thompson-Schill, D’Esposito, & Kan, 1999) refocused this view and posited that the role of the left inferior frontal gyrus is in selecting between competing alternatives. The pattern of activation observed in the present study supports the view that the left inferior frontal gyrus is involved not simply in selecting between different semantic representations, but also in resolving competition between phonetic categories.

Of interest, competition-modulated activity emerged bilaterally in this study, namely in the right inferior frontal gyrus and surrounding areas, as well as in the more expected left-hemisphere region. Modulation of activation in the right inferior frontal gyrus is reported less often in language tasks than modulation in its left-hemisphere homologue, and its role in language processing is as yet unclear. Poldrack and colleagues (Poldrack et al., 2001) showed increasing activation in the bilateral IFG as auditory stimuli were compressed in time, presumably reflecting the increasing processing requirements necessary to understand the speeded speech. However, activation levels fell off when stimuli were so speeded as to be incomprehensible, which additionally suggests that the right IFG is sensitive to speech intelligibility, not simply to speech rate. A recent study in our lab investigating the discrimination of voice-onset time showed a compatible pattern of results (Hutchison, Blumstein, & Myers, submitted). Subjects performed a phonetic discrimination task on tokens which either fell within a phonetic category or between two categories. Activation in the right inferior frontal gyrus was greater for discrimination of pairs which were acoustically similar than for pairs which were more different, but only when those stimulus pairs could be reliably discriminated by subjects. Like the Poldrack study, these results suggest that the right IFG has a role in mediating the computational resources required for speech processing, but only when the task is one which subjects can perform consistently.

Bilateral dorsolateral prefrontal cortex, of which the inferior and middle frontal gyri are subsections, has also been implicated in perceptual and conceptual selection in non-linguistic as well as linguistic tasks (see Kan & Thompson-Schill, 2004b, and Miller & Cohen, 2001, for reviews). Given that the phonetic categorization task used in this study may involve competition at the perceptual (i.e., acoustic-phonetic) level as well as the conceptual (i.e., phonetic category) level, it is difficult with the present data to determine whether the activation observed in this study reflects the more domain-general conceptual selection role proposed for the bilateral frontal cortices, or whether it specifically reflects competition between items which exist close to each other in perceptual space.

Results from this study clearly suggest that competition, and not phonetic category typicality in general, drives activation in the inferior frontal lobes. What remains unresolved is precisely what aspect of competition is responsible for increased reaction times to stimuli close to the phonetic boundary. In the phonetic categorization task, competition is inherent in several stages of processing. When subjects hear a token which falls near a phonetic boundary, they must resolve the competition between the presented category and the competing phonetic category. This translates into increased competition in selecting between the two possible responses, [da] and [ta], and may in turn affect the difficulty of the mapping between the chosen response and the motor response selected to carry out the button press. Thus, in the processing of near-boundary stimuli, there is increased competition inherent in the acoustic-phonetic stage, the decision stage, and the motor-planning stage of the task. If, as proposed, the inferior frontal gyri are involved in competition at the phonetic level of processing, competition-driven activation should be evident even when no overt response is required. In contrast, if the inferior frontal lobes are involved specifically in executive aspects of language processing, activation for near-boundary stimuli might not be expected in tasks which require no overt response, for instance, in a passive listening task. Further research is necessary to delineate the influence of competition at these various stages of processing.

Evidence does exist suggesting that the left inferior frontal gyrus is at least implicated in a variety of phonetic-phonological tasks which do require an overt response. The left inferior frontal lobe has been implicated in phonetic-phonological processing in a number of neuroimaging studies (see Burton, 2001 for review; Joanisse & Gati, 2003; Poldrack et al., 2001; Poldrack et al., 1999). Perhaps more importantly, Broca’s aphasics with lesions involving frontal structures including the inferior frontal gyrus often show deficits in tasks which require explicit access to phonetic categories, with abnormal performance on both identification and discrimination tasks (see Blumstein, 1995 for review; Blumstein, Baker, & Goodglass, 1977; Blumstein, Cooper, Zurif, & Caramazza, 1977). Additionally, Broca’s aphasics show loss of semantic priming when the prime word (‘pear’) has an initial VOT which is shortened so that it is closer to the phonetic boundary, and when that word also has a voicing competitor (‘bear’) (Utman, Blumstein, & Sullivan, 2001). Importantly, the loss of priming emerges only when phonetic-phonological competition (i.e., a voiceless prime with a reduced VOT) co-varies with lexical competition (i.e., ‘pear’ vs. ‘bear’). Taken together, these results suggest a role for the left inferior frontal gyrus in resolving competition at multiple levels of linguistic processing.

Phonetic category typicality and the superior temporal lobes

Increased activation was seen in medial portions of the bilateral STG and MTG for stimuli which were less-prototypical exemplars of their phonetic category (Extreme, Near-Boundary) compared to those which were more prototypical exemplars (Exemplar). These results support the hypothesis that the superior temporal gyri are involved in the acoustic-phonetic processing necessary to map a stimulus to its phonetic category.

A previous study (Blumstein et al., 2005) showed that, in a regression analysis, activation in the bilateral superior temporal lobes correlated with the typicality of a stimulus as a member of its phonetic category, but not with the overall difficulty of the phonetic decision as measured by reaction time. As mentioned previously, similar results were reported by Binder and colleagues (Binder et al., 2004), who found that activation in the anterior superior temporal lobes was correlated with accuracy scores on a phonetic discrimination task. These accuracy scores were presumed to reflect the ‘perceptibility’ of stimuli presented in noise. In that study, accuracy and perceptibility were confounded, raising the possibility that the temporal activation observed was the result not of the increased processing required as the signal-to-noise ratio decreased, but of factors related to accuracy, for instance, response certainty or degree of attentional engagement. In the present study, all stimuli with the exception of one token were perceived with near-ceiling consistency. These results therefore support Binder et al.’s claim that activation in the temporal lobes is related to perceptual processing rather than to other factors.

Curiously, the direction of correlation between activation levels and perceptual measures in the present study was opposite to that found in the Binder et al. (2004) study. That is, Binder and colleagues showed increasing activation to tokens which were presented with a higher signal-to-noise ratio, and which would presumably require less processing to resolve their phonetic identity. In contrast, the results of the present study showed increasing activation to poorer tokens in a phonetic category, which should require more processing to resolve their phonetic identity. This difference in results cannot be resolved on neuroanatomical grounds: although the clusters reported in the Binder et al. study are anterior to those reported in the current study, there appears to be a large degree of overlap between the clusters reported in the two studies.

One possible explanation for the difference between the two studies lies in the stimuli used. The Binder et al. study used two synthesized speech tokens, ‘ba’ and ‘da’, which were both presumably good exemplars of their phonetic category. These tokens were presented in pairs at a variety of signal-to-noise ratios. Thus, the difficulty of the task varied as a function of the perceptibility of the stimuli in noise, not as a function of the processing resources needed for resolving variations within a phonetic category. Given that the superior temporal lobes have been shown to be sensitive to the acoustic properties characteristic of human speech (Belin, Zatorre, & Ahad, 2002; Belin, Zatorre, Lafaille, Ahad, & Pike, 2000; Zatorre, Belin, & Penhune, 2002), an increase in activation in the Binder et al. (2004) study may simply reflect the perceptibility of the stimuli as speech. In contrast, in the current study, all stimuli were equally perceptible as speech tokens. As such, the activation patterns in the current study may reflect the temporal lobes’ responsiveness to human speech, modulated by the degree of fit of each token to its phonetic category. In sum, the superior temporal lobes seem to show sensitivity to tokens which deviate from the expected or prototypical stimulus, whether this deviation is the result of global changes in perceptibility due to presentation in noise (Binder et al., 2004) or of the graded structure of phonetic categories (Blumstein et al., 2005; Guenther et al., 2004).

Callan and colleagues (Callan, Callan, Tajima, & Akahane-Yamada, 2006; Callan, Jones, Callan, & Akahane-Yamada, 2004) have shown that similar clusters in the STG emerge when subjects identify non-phonemic speech contrasts, such as the r/l distinction in Japanese. This evidence is in line with the notion that the STG are involved in processing the fine acoustic detail necessary to map a speech sound to a phonetic category label. The mapping process should increase in difficulty for sounds which are not contrastive in one’s native language, and may therefore require finer discrimination of acoustic detail.

The current findings provide strong evidence for the role of anterior and posterior brain structures in mediating two aspects of the phonetic categorization process. Results indicate that both anterior and posterior structures are sensitive to phonetic category structure. Specifically, results support the conclusion that frontal structures such as the left and right IFG are involved in mediating competition between phonetic categories. The bilateral temporal lobes, in contrast, are sensitive to the typicality of a stimulus as a member of its phonetic category, and as such, have a role in resolving phonetic category membership, irrespective of the effects of competition.

Acknowledgments

This work was supported by NIH grants F31 DC006520 and DC006220 to Brown University and by the Ittleson Foundation. I thank Sheila Blumstein for invaluable guidance in all phases of this project. Thanks are also due to Emmette Hutchison for assistance during data collection and analysis.

References

- Belin P, Zatorre RJ, Ahad P. Human temporal-lobe response to vocal sounds. Cognitive Brain Research. 2002;13(1):17–26. doi: 10.1016/s0926-6410(01)00084-2.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–312. doi: 10.1038/35002078.

- Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD. Neural correlates of sensory and decision processes in auditory object identification. Nature Neuroscience. 2004;7(3):295–301. doi: 10.1038/nn1198.

- Blumstein SE. The Neurobiology of the Sound Structure of Language. In: Gazzaniga MS, editor. The Cognitive Neurosciences. Cambridge: MIT Press; 1995. pp. 915–930.

- Blumstein SE, Baker E, Goodglass H. Phonological factors in auditory comprehension in aphasia. Neuropsychologia. 1977;15(1):19–30. doi: 10.1016/0028-3932(77)90111-7.

- Blumstein SE, Cooper WE, Zurif EB, Caramazza A. The perception and production of voice-onset time in aphasia. Neuropsychologia. 1977;15(3):371–383. doi: 10.1016/0028-3932(77)90089-6.

- Blumstein SE, Myers EB, Rissman J. The perception of voice onset time: an fMRI investigation of phonetic category structure. Journal of Cognitive Neuroscience. 2005;17(9):1353–1366. doi: 10.1162/0898929054985473.

- Burton MW. The role of inferior frontal cortex in phonological processing. Cognitive Science. 2001;25(5):695–709.

- Callan AM, Callan DE, Tajima K, Akahane-Yamada R. Neural processes involved with perception of non-native durational contrasts. Neuroreport. 2006;17(12):1353–1357. doi: 10.1097/01.wnr.0000224774.66904.29.

- Callan DE, Jones JA, Callan AM, Akahane-Yamada R. Phonetic perceptual identification by native- and second-language speakers differentially activates brain regions involved with acoustic phonetic processing and those involved with articulatory-auditory/orosensory internal models. Neuroimage. 2004;22(3):1182–1194. doi: 10.1016/j.neuroimage.2004.03.006.

- Cohen MS. Parametric analysis of fMRI data using linear systems methods. Neuroimage. 1997;6(2):93–103. doi: 10.1006/nimg.1997.0278.

- Cox RW, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magnetic Resonance in Medicine. 1999;42(6):1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- Edmister WB, Talavage TM, Ledden PJ, Weisskoff RM. Improved auditory cortex imaging using clustered volume acquisitions. Human Brain Mapping. 1999;7(2):89–97. doi: 10.1002/(SICI)1097-0193(1999)7:2<89::AID-HBM2>3.0.CO;2-N.

- Guenther FH, Nieto-Castanon A, Ghosh SS, Tourville JA. Representation of sound categories in auditory cortical maps. Journal of Speech Language and Hearing Research. 2004;47(1):46–57. doi: 10.1044/1092-4388(2004/005).

- Hutchison E, Blumstein SE, Myers EB. An event-related fMRI investigation of voice-onset time discrimination. (submitted)

- Joanisse MF, Gati JS. Overlapping neural regions for processing rapid temporal cues in speech and nonspeech signals. Neuroimage. 2003;19(1):64–79. doi: 10.1016/s1053-8119(03)00046-6.

- Kan IP, Thompson-Schill SL. Effect of name agreement on prefrontal activity during overt and covert picture naming. Cognitive, Affective, and Behavioral Neuroscience. 2004a;4(1):43–57. doi: 10.3758/cabn.4.1.43.

- Kan IP, Thompson-Schill SL. Selection from perceptual and conceptual representations. Cognitive, Affective, and Behavioral Neuroscience. 2004b;4(4):466–482. doi: 10.3758/cabn.4.4.466.

- Kessinger RH. The mapping from sound structure to the lexicon: A study of normal and aphasic subjects. Unpublished doctoral dissertation; Brown University: 1998.

- Kessinger RH, Blumstein SE. The influence of phonetic category structure on lexical access. manuscript.

- Klatt DH. Software for a cascade/parallel formant synthesizer. Journal of the Acoustical Society of America. 1980;67(3):971–995.

- Kuhl PK. Human adults and human infants show a “perceptual magnet effect” for the prototypes of speech categories, monkeys do not. Perception and Psychophysics. 1991;50(2):93–107. doi: 10.3758/bf03212211.

- Labov W. The boundaries of words and their meanings. In: Bailey CJN, Shuy R, editors. New Ways of Analyzing Variation in English. Washington, D.C: Georgetown University Press; 1973.

- Liberman AM, Harris KS, Hoffman HS, Griffith BC. The discrimination of speech sounds within and across phoneme boundaries. Journal of Experimental Psychology. 1957. doi: 10.1037/h0044417.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cognitive Psychology. 1986;18(1):1–86. doi: 10.1016/0010-0285(86)90015-0.

- McMurray B. A [somewhat] new systematic approach to formant-based speech synthesis for empirical research. in preparation.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Miller JL, Volaitis LE. Effect of speaking rate on the perceptual structure of a phonetic category. Perception and Psychophysics. 1989;46(6):505–512. doi: 10.3758/bf03208147.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pisoni DB, Tash J. Reaction times to comparisons within and across phonetic categories. Perception and Psychophysics. 1974;15:289–290. doi: 10.3758/bf03213946.

- Poeppel D. A critical review of PET studies of phonological processing. Brain and Language. 1996;55(3):317–351. doi: 10.1006/brln.1996.0108.

- Poldrack RA, Temple E, Protopapas A, Nagarajan S, Tallal P, Merzenich M, et al. Relations between the neural bases of dynamic auditory processing and phonological processing: Evidence from fMRI. Journal of Cognitive Neuroscience. 2001;13(5):687–697. doi: 10.1162/089892901750363235.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JDE. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10(1):15–35. doi: 10.1006/nimg.1999.0441.

- Rosch E. Cognitive Representation of Semantic Categories. Journal of Experimental Psychology: Human Perception and Performance. 1975;104:192–233.

- Ryalls J, Zipprer A, Baldauff P. A preliminary investigation of the effects of gender and race on voice onset time. Journal of Speech and Hearing Research. 1997;40(3):642–645. doi: 10.1044/jslhr.4003.642.

- Stevens KN, Blumstein SE. Invariant cues for place of articulation in stop consonants. Journal of the Acoustical Society of America. 1978;64(5):1358–1368. doi: 10.1121/1.382102.

- Talairach J, Tournoux P. A co-planar stereotaxic atlas of a human brain. Stuttgart; Thieme: 1988.

- Thompson-Schill SL, D’Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proceedings of the National Academy of Sciences. 1997;94(26):14792–14797. doi: 10.1073/pnas.94.26.14792.

- Thompson-Schill SL, D’Esposito M, Kan IP. Effects of repetition and competition on activity in left prefrontal cortex during word generation. Neuron. 1999;23(3):513–522. doi: 10.1016/s0896-6273(00)80804-1.

- Utman JA, Blumstein SE, Sullivan K. Mapping from sound to meaning: Reduced lexical activation in Broca’s aphasics. Brain and Language. 2001;79(3):444–472. doi: 10.1006/brln.2001.2500.

- Van de Moortele PF, Cerf B, Lobel E, Paradis AL, Faurion A, Le Bihan D. Latencies in fMRI time-series: effect of slice acquisition order and perception. NMR in Biomedicine. 1997;10(4–5):230–236. doi: 10.1002/(sici)1099-1492(199706/08)10:4/5<230::aid-nbm470>3.0.co;2-w.

- Volaitis LE, Miller JL. Phonetic prototypes: Influence of place of articulation and speaking rate on the internal structure of voicing categories. Journal of the Acoustical Society of America. 1992;92(2 Pt 1):723–735. doi: 10.1121/1.403997.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends in Cognitive Sciences. 2002;6(1):37–46. doi: 10.1016/s1364-6613(00)01816-7.