Abstract

The Mismatch Negativity (MMN) component of the auditory event-related brain potentials can be used as a probe to study the representation of sounds in Auditory Sensory Memory (ASM). Yet, it has been shown that an auditory MMN can also be elicited by an illusory auditory deviance induced by visual changes. This suggests that some visual information may be encoded in ASM and is accessible to the auditory MMN process. However, it is not known whether visual information influences ASM representation for any audiovisual event or whether this phenomenon is limited to specific domains in which strong audiovisual illusions occur. To address this issue, we compared the topographies of MMNs elicited by non-speech audiovisual stimuli deviating from audiovisual standards on the visual, the auditory or both dimensions. Contrary to what occurs with audiovisual illusions, each unimodal deviant elicited a sensory-specific MMN, and the MMN to audiovisual deviants included both sensory components. The visual MMN was, however, different from a genuine visual MMN obtained in a visual-only control oddball paradigm, suggesting that auditory and visual information interact before the MMN process occurs. Furthermore, the MMN to audiovisual deviants was significantly different from the sum of the two sensory-specific MMNs, showing that the processes of visual and auditory change detection are not completely independent.

Keywords: Acoustic Stimulation; Adult; Attention; physiology; Auditory Perception; physiology; Electroencephalography; Evoked Potentials, Auditory; physiology; Evoked Potentials, Visual; physiology; Female; Humans; Male; Memory; physiology; Photic Stimulation; Visual Perception; physiology

Keywords: Mismatch Negativity, Multisensory Integration, Sensory Memory

Introduction

The most counter-intuitive effect of audiovisual interactions in the brain is perhaps the fact that sensory-specific cortices (e.g. the auditory cortex) appear to be sensitive to information from other modalities, even in primary cortices (Bental et al. 1968) and at very early stages of sensory processing (Fort and Giard 2004).

The Mismatch Negativity (MMN) component of the Event-Related Potentials (ERPs) is elicited in the auditory cortex when incoming sounds are detected as deviating from a neural representation of acoustic regularities and is computed by subtracting the responses to frequent standard sounds from those to infrequent deviant sounds. MMN implies the existence of an Auditory Sensory Memory (ASM) that stores a neural representation of the standard against which any incoming auditory input is compared (Ritter et al. 1995). It is mainly generated in the auditory cortex (Kropotov et al. 1995; Alain et al. 1998) and has long been considered as specific to the auditory modality (Nyman et al. 1990; Näätänen 1992).

However, it has recently turned out that the MMN is not completely impervious to crossmodal influences. For example, in bimodal speech processing, an MMN has been shown to be elicited by deviant syllables differing from the standards only on their visual dimension. In this so-called McGurk illusion (McGurk and MacDonald 1976), the same physical sound is therefore perceived and processed differently in ASM, depending on the lip movements that are simultaneously seen (Sams et al. 1991; Möttönen et al. 2002; Colin et al. 2002b; Colin et al. 2004). To remain in line with the auditory-specificity assumption, several non-exclusive explanations related to the special status of speech have been proposed. Either there exists a phonetic MMN process that is sensitive to the phonetic nature of articulatory movements (Colin et al. 2002b), or visual speech cues have a specific access to the MMN generators in auditory cortex because, like auditory speech, they carry time-varying information (Möttönen et al. 2002).

Nonetheless, generation of an MMN by visual-only deviants is not restricted to the speech domain, since it can also be observed with the ventriloquist illusion, in which the perceived location of a sound is shifted by a spatially disparate visual stimulus (Stekelenburg et al. 2004; see also Colin et al. 2002a). Rather, what these two phenomena have in common is that they give rise to irrepressible audiovisual illusions that seem to occur at a sensory level of representation (McGurk effect: Soto-Faraco et al. 2004; ventriloquist effect: Bertelson and Aschersleben 1998; Vroomen et al. 2001).

The question therefore arises whether any visual change of an audiovisual event, even in the absence of perceived audiovisual illusion, is likely to access the ASM indexed by the MMN. In other words, does the ASM encode more than the auditory part of an audiovisual event?

When replacing articulatory lip movements by non-speech visual stimuli in a McGurk MMN paradigm, Sams et al. (1991) found no evidence of an auditory MMN elicited by visual variations alone of the audiovisual event. However, it is quite possible that, in the absence of a strong illusion, the effect is of much smaller amplitude. Moreover, the influence of visual deviance on the MMN process could occur only in a suitable auditory-deviance context: in that case, the MMN elicited by combined auditory and visual deviances of an audiovisual event should differ from the MMN elicited by auditory deviances alone, while a visual deviance alone would not be detected by the auditory system.

We therefore conducted an audiovisual oddball paradigm in which audiovisual deviants differed from audiovisual standards (AV) either on the visual dimension (AV’), on the auditory dimension (A’V) or on both dimensions (A’V’), with the following hypothesis: If visual information is represented in ASM, AV’ deviants should elicit an auditory MMN, or A’V’ deviants should at least elicit an MMN different from those elicited by A’V deviants.

This would be the whole story if there were not a spoilsport: the visual Mismatch Negativity (vMMN). Several studies have recently shown that visual stimuli deviating from repetitive visual standards can elicit a visual analogue of the MMN in the same latency range (review in Pazo-Alvarez et al. 2003). This vMMN seems to be mainly generated in occipital areas (Berti and Schröger 2004) with possibly a more anterior component (Heslenfeld 2003; Czigler et al. 2002), to be independent of attention (Heslenfeld 2003) and also to rely on memory processes (Stagg et al. 2004; Czigler et al. 2002; see however Kenemans et al. 2003). However, it seems that a greater amount of deviance is necessary to evoke a vMMN than an auditory MMN (Pazo-Alvarez et al. 2003).

If visual-specific components are evoked by visual deviances, then it is necessary in our audiovisual paradigm to separate them from the influence of visual information on the auditory-specific MMN process. To disentangle the contributions of each unisensory process (vMMN and auditory MMN) and isolate the influence of visual information on the auditory MMN, we therefore (i) conducted an additional visual oddball paradigm (see Footnotes) using the same visual inputs as in our main experiment, so as to elicit a genuine visual MMN (V’MMN), and (ii) analyzed the voltage and scalp current density (SCD) distributions of that V’MMN relative to the AV’ MMN elicited in the audiovisual paradigm. Conversely, if the two unisensory MMN processes do not interact in any way, then the two unisensory MMNs should be strictly additive.

Methods

Participants

Fifteen right-handed adults (eight female, ages 20–25 years, mean age 23.1 years) were paid to participate in the study, for which they gave written informed consent in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki). All subjects were free of neurological disease and had normal hearing and normal or corrected-to-normal vision.

Stimuli

The stimuli were inspired by those previously used by our group in various experimental paradigms, which have revealed a variety of crossmodal interactions in the first 200 ms of processing (Giard and Peronnet 1999; Fort et al. 2002a; Fort et al. 2002b; Fort and Giard 2004).

Visual stimuli consisted of the deformation of a circle into an ellipse either in the horizontal or in the vertical direction (see Giard and Peronnet 1999). The basic circle had a diameter of 4.55 cm and was displayed on a video screen placed 130 cm in front of the subjects’ eyes, subtending a visual angle of 2°. The amount of deformation in either direction relative to the diameter of the circle was 33% and lasted 140 ms. Between deformations, the circle remained present on the screen; a cross at its centre served as the fixation point.
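The reported visual angle can be checked from the circle diameter and the viewing distance. The short sketch below is only an illustration of that arithmetic; the function name `visual_angle_deg` is ours and is not part of the original stimulation software.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (in degrees) subtended by an object of a given size
    viewed from a given distance, both expressed in the same unit."""
    return math.degrees(2.0 * math.atan((size_cm / 2.0) / distance_cm))


# Values taken from the text: 4.55 cm circle viewed at 130 cm.
circle_angle = visual_angle_deg(4.55, 130.0)
print(f"{circle_angle:.2f} degrees")  # close to the 2 degrees reported
```
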

Auditory stimuli were rich tones (the fundamental, the second and the fourth harmonics) shifting linearly in frequency (fundamental) either from 500 Hz to 540 Hz or from 500 Hz to 600 Hz. Their duration was also 140 ms, including 14 ms rise/fall time.
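As an illustration of how such a gliding rich tone could be synthesised, the sketch below integrates a linearly changing fundamental frequency into a phase and adds the harmonics as multiples of that phase. Several details are assumptions and are not given in the text: the 44.1 kHz sampling rate, equal amplitudes for the three components, harmonic multiples of 1, 2 and 4, linear onset and offset ramps of 14 ms each, and the function name `glide_tone`.

```python
import math


def glide_tone(f_start, f_end, dur_s=0.140, ramp_s=0.014, sr=44100,
               harmonics=(1, 2, 4)):
    """Return the samples of a rich tone whose fundamental glides linearly
    from f_start to f_end (Hz). All defaults except the duration and ramp
    length are illustrative assumptions."""
    n_samples = round(dur_s * sr)
    samples = []
    for i in range(n_samples):
        t = i / sr
        # Phase of a linear glide: 2*pi times the integral of f(t).
        phase = 2.0 * math.pi * (f_start * t
                                 + (f_end - f_start) * t * t / (2.0 * dur_s))
        value = sum(math.sin(h * phase) for h in harmonics) / len(harmonics)
        # Linear rise at onset and linear fall at offset.
        envelope = min(1.0, t / ramp_s, (dur_s - t) / ramp_s)
        samples.append(envelope * value)
    return samples


tone_540 = glide_tone(500.0, 540.0)  # 500 Hz -> 540 Hz glide
tone_600 = glide_tone(500.0, 600.0)  # 500 Hz -> 600 Hz glide
```
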

All stimuli consisted of the synchronous presentation of a visual and an auditory feature. One association (for example an elongation in the horizontal direction and a frequency shift from 500 to 540 Hz) was delivered in 76% of the trials (AV standard). Each remaining association was presented in 8% of the trials: the A’V deviant had the same visual feature as the standard but a different auditory feature, the AV’ deviant had the same auditory but a different visual feature, and the A’V’ deviant differed from the AV standard on both dimensions. To ensure that the obtained MMNs could not be attributed to physical differences between the standard and deviants, the features of the standard and the A’V’ deviant were exchanged in half of the experimental blocks (and so were the features of the A’V and AV’ deviants).

Distractive task

An important characteristic of the auditory (and visual) MMN is that it is automatic and pre-attentive. A “pure” MMN (that is, one not contaminated by attentional processes) is elicited by stimuli that are irrelevant to the subject. This is a more difficult constraint for visual or audiovisual oddball paradigms, because visual stimuli have to be presented in the visual field of the subjects, but outside their attentional focus. We therefore required a task on the fixation cross. From time to time (13% of the trials) the fixation cross disappeared for 120 ms. This disappearance occurred unpredictably within a standard trial, but it was desynchronised relative to the trial’s onset and could not occur in a standard preceding a deviant trial. Subjects had to stare at the fixation cross and click a button as quickly and accurately as possible when the cross disappeared.

Visual control

To control for the existence of a vMMN and to study its topography, a visual oddball paradigm was conducted. The experimental parameters were those used in the audiovisual paradigm with the sound off: standard visual stimuli occurred in 84% of the trials (V standards) and the other visual feature occurred in 16% of the trials (V’ deviants). Standards and deviants were exchanged in half of the experimental blocks.

Procedure

After the ERP recording apparatus had been set up, subjects were seated in a dark, sound-attenuating room and were given instructions describing the distractive task along with an audiovisual practice block of 267 trials. They were told to stare at the fixation cross at the centre of the screen, to respond as accurately and as quickly as possible to the disappearance of the cross and not to pay attention to the circle or the tones. In the audiovisual paradigm, 256 deviant trials of each type (AV’, A’V and A’V’) were randomly delivered among 2432 AV-standard trials, over 12 blocks of 267 trials each (except the last block, which included 263 trials) at a fixed ISI of 560 ms, with the constraint that two deviants could not occur in a row. In the visual oddball paradigm, 256 deviant trials were randomly delivered among 1344 V-standard trials, over 6 blocks of 267 trials each, except the last block, which included 265 trials (same ISI). The 12 audiovisual and the 6 visual blocks were presented to the subjects in random order.
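The trial counts above can be cross-checked against the stimulus probabilities and block sizes given in the Stimuli and Visual control sections. The sketch below does this bookkeeping and also shows one possible way of drawing a random sequence in which two deviants never follow each other. The randomisation procedure actually used is not described in the text, so `make_sequence` is a hypothetical procedure, and it ignores block boundaries for simplicity.

```python
import random

# Trial counts reported in the Procedure section.
AV_COUNTS = {"AV": 2432, "AV'": 256, "A'V": 256, "A'V'": 256}
V_COUNTS = {"V": 1344, "V'": 256}


def proportions(counts):
    """Share of each stimulus type among all trials."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def block_sizes(n_blocks, full_size, last_size):
    """All blocks contain full_size trials except the last one."""
    return [full_size] * (n_blocks - 1) + [last_size]


def make_sequence(counts, standard, seed=0):
    """Random trial order in which two deviants never occur in a row.

    Hypothetical procedure: each deviant is placed in a distinct gap
    between (or around) the standards, so that at least one standard
    always separates two deviants.
    """
    rng = random.Random(seed)
    deviants = [name for name, n in counts.items() if name != standard
                for _ in range(n)]
    rng.shuffle(deviants)
    n_std = counts[standard]
    gaps = set(rng.sample(range(n_std + 1), len(deviants)))
    remaining = iter(deviants)
    sequence = []
    for gap in range(n_std + 1):
        if gap in gaps:
            sequence.append(next(remaining))
        if gap < n_std:
            sequence.append(standard)
    return sequence


av_sequence = make_sequence(AV_COUNTS, standard="AV")
print(proportions(AV_COUNTS))
print(sum(block_sizes(12, 267, 263)), sum(block_sizes(6, 267, 265)))
```

With the reported numbers, the audiovisual session totals 3200 trials (76% standards, 8% for each deviant type) and the visual session totals 1600 trials (84% standards, 16% deviants), which agrees with the proportions stated earlier.
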

EEG recording

EEG was continuously recorded via a Neuroscan Compumedics system through Synamps AC-coupled amplifiers (0.1 to 200 Hz analogue bandwidth; sampling rate: 1 kHz) from 36 Ag-AgCl scalp electrodes referenced to the nose and placed according to the International 10–20 System: Fz, Cz, Pz, POz, Iz; Fp1, F3, F7, FT3, FC1, T3, C3, TP3, CP1, T7, P3, P7, PO3, O1, and their counterparts on the right hemiscalp; Ma1 and Ma2 (left and right mastoids, respectively); IMa and IMb (midway between Iz-Ma1 and Iz-Ma2, respectively). Electrode impedances were kept below 5 kΩ. Horizontal eye movements were recorded from the outer canthus of the right eye; eye blinks and vertical eye movements were measured in channels Fp1 and Fp2.

Data analysis

EEG analysis was undertaken with the ELAN Pack software developed at the INSERM U280 laboratory (Lyon, France). Trials with signal amplitudes exceeding 100 μV at any electrode from 300 ms before time zero to 500 ms after were automatically rejected to discard the responses contaminated by eye movements or muscular activities.
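The rejection rule can be sketched as follows. This is not the ELAN implementation; it is a minimal illustration that assumes the criterion applies to the absolute amplitude (rather than to a peak-to-peak range) and that each epoch has already been cut to the −300 to +500 ms window. The function names and the toy data are ours.

```python
def exceeds_threshold(epoch, threshold_uv=100.0):
    """Return True if any sample at any electrode exceeds the threshold.

    epoch: dict mapping an electrode name to a list of samples in
    microvolts. Assumption: the criterion is on absolute amplitude.
    """
    return any(abs(sample) > threshold_uv
               for samples in epoch.values()
               for sample in samples)


def keep_clean(epochs, threshold_uv=100.0):
    """Keep only the epochs that pass the amplitude criterion."""
    return [e for e in epochs if not exceeds_threshold(e, threshold_uv)]


# Toy data: the second epoch contains a blink-like excursion on Fp1.
clean = {"Fz": [1.5, -3.0, 2.0], "Fp1": [4.0, 10.0, -8.0]}
blink = {"Fz": [2.0, 5.0, 1.0], "Fp1": [20.0, 150.0, 60.0]}
kept = keep_clean([clean, blink])
```
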

ERPs to audiovisual stimuli were averaged offline separately for the six different stimulus types (AV and V standards, A’V, AV’, A’V’ and V’ deviants), over a time period of 800 ms including 300 ms pre-stimulus. Trials including disappearance of the fixation cross were not taken into account when averaging. The mean numbers of averaged trials (by subject) were 1299, 649 and 204 for AV-standard, V-standard and deviants of each type respectively (about 20.4% of the trials were discarded due to eye movements).

ERPs were finally digitally filtered (bandwidth: 1.5–30 Hz, slope: 24 dB/octave). The mean amplitude over the [−100 to 0 ms] pre-stimulus period was taken as the baseline for all amplitude measurements.
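Baseline correction amounts to subtracting the mean pre-stimulus amplitude from every sample of the averaged waveform. The sketch below assumes a 1 kHz sampling rate and an epoch starting 300 ms before stimulus onset, as reported above; whether the sample at 0 ms belongs to the baseline is not specified, and here it is excluded. The digital filtering step is not reproduced.

```python
def baseline_correct(erp, epoch_start_ms=-300, base_start_ms=-100,
                     base_end_ms=0, sr_hz=1000):
    """Subtract the mean of the baseline interval from a single-channel ERP.

    erp: list of amplitudes in microvolts, first sample at epoch_start_ms.
    The baseline interval is [base_start_ms, base_end_ms), an assumption.
    """
    def to_index(ms):
        return round((ms - epoch_start_ms) * sr_hz / 1000)

    i0, i1 = to_index(base_start_ms), to_index(base_end_ms)
    baseline = sum(erp[i0:i1]) / (i1 - i0)
    return [value - baseline for value in erp]


# Toy waveform: constant 2 µV offset before onset, 7 µV afterwards.
toy_erp = [2.0] * 300 + [7.0] * 500
corrected = baseline_correct(toy_erp)
```
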

Topographic Analysis

To facilitate the interpretation of the voltage values recorded at multiple electrodes over the scalp surface, we analyzed the topographic distributions of the potentials and the associated scalp current densities. Scalp potential maps were generated using two-dimensional spherical spline interpolation and radial projection from T3, T4 or Oz (left, right and back views, respectively), which respects the length of the meridian arcs. SCDs were obtained by computing the second spatial derivative of the spline functions used in interpolation (Perrin et al. 1989; Perrin et al. 1987). SCDs do not depend on any assumption about the brain generators or the properties of deeper media, and they are reference free. In addition, SCDs reduce the spatial smearing of the potential fields due to the volume conduction of the different anatomical structures, and thus enhance the contribution of local intracranial sources (Pernier et al. 1988).

Statistical Analysis

MMNs were statistically assessed by t-tests comparing the mean amplitude of the deviant minus standard difference waveform to zero in the 40 ms time-window around the latency of the peak in the grand-average responses. Results are displayed as statistical probability maps associated with the t-tests at each electrode.
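For one electrode, this test can be sketched as below: the difference waveform is computed per subject, averaged over the 40 ms window, and the resulting values are compared to zero with a one-sample t statistic. The function names, the inclusive window boundaries and the synthetic data are illustrative assumptions; the significance level and any correction for multiple electrodes are not stated in the text. For reference, with 15 subjects (14 degrees of freedom) the two-tailed critical value at α = 0.05 is about 2.145.

```python
import math
import statistics


def window_mean(wave, center_ms, width_ms=40, epoch_start_ms=-300, sr_hz=1000):
    """Mean amplitude in a window centred on center_ms (bounds inclusive)."""
    half = width_ms / 2
    i0 = round((center_ms - half - epoch_start_ms) * sr_hz / 1000)
    i1 = round((center_ms + half - epoch_start_ms) * sr_hz / 1000)
    return statistics.fmean(wave[i0:i1 + 1])


def one_sample_t(values, mu=0.0):
    """Student t statistic of the sample mean against mu (df = n - 1)."""
    n = len(values)
    return (statistics.fmean(values) - mu) / (statistics.stdev(values) / math.sqrt(n))


def mmn_t(deviant_erps, standard_erps, peak_ms):
    """t statistic of the deviant-minus-standard amplitude at one electrode.

    deviant_erps, standard_erps: one waveform (list of µV) per subject.
    """
    diffs = [window_mean([d - s for d, s in zip(dev, std)], peak_ms)
             for dev, std in zip(deviant_erps, standard_erps)]
    return one_sample_t(diffs)


# Synthetic example: 15 subjects, flat standards, deviants more negative.
standards = [[0.0] * 800 for _ in range(15)]
deviants = [[-1.0 - 0.1 * k] * 800 for k in range(15)]
t_value = mmn_t(deviants, standards, peak_ms=199)
```
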

Results

Behavioral measures

Mean reaction time to the disappearance of the fixation cross was 404 ms (SD = 51 ms) in the audiovisual oddball paradigm and 409 ms (SD = 52 ms) in the visual paradigm. The mean error rates were 3.51% (SD = 3.13%) and 3.24% (SD = 3.11%), respectively. Neither the reaction times nor the error rates differed significantly between the two paradigms.

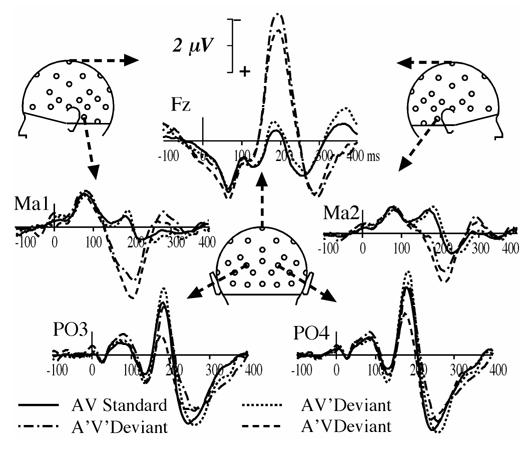

A’V MMN

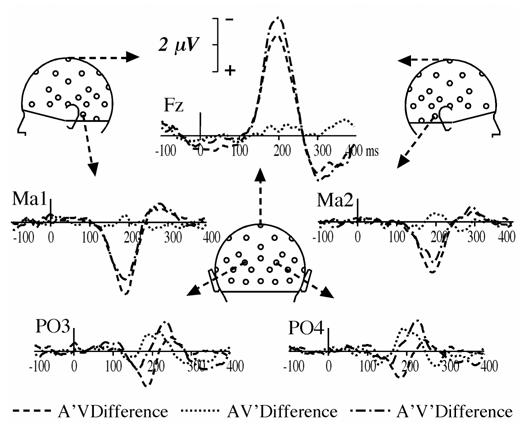

The response to A’V deviants in the audiovisual paradigm began to differ from AV standards at about 120 ms of processing, being more negative at Fz, and more positive at mastoid sites (Ma1 and Ma2) until about 250 ms (Fig. 1). As can be seen on difference waveforms (Fig. 2), the MMN elicited by A’V deviants was maximum at 199 ms at Fz (−3.75 μV) and at 190 ms around the mastoid sites (2.77 μV at Ma1 and 2.14 μV at IMb).

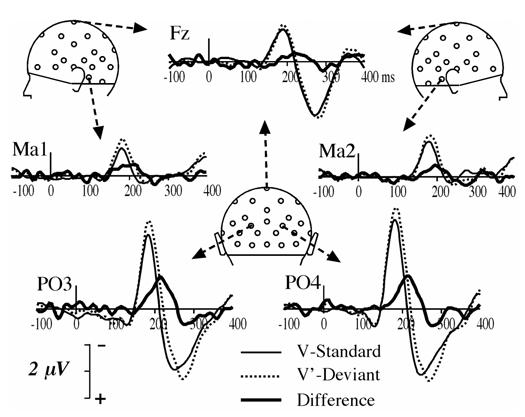

Fig. 1.

Potential waveforms elicited by AV standards, AV’, A’V and A’V’ deviants at a subset of 5 electrodes (Fz, Ma1, Ma2, PO3 and PO4) from 100 ms pre-stimulus to 400 ms post-stimulus. Negative values are plotted upward.

Fig. 2.

Deviant minus AV standard difference waveforms for each AV’, A’V and A’V’ deviant type in the audiovisual oddball paradigm at the same subset of electrodes as in Fig. 1.

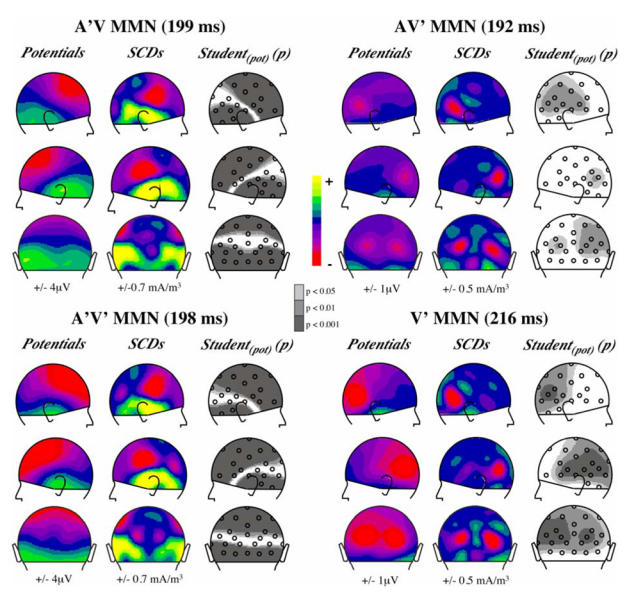

Scalp potential and current density topographies (Fig. 3, upper left panel) display a clear-cut polarity reversal around the supra-temporal plane, as expected from an auditory MMN generated in the auditory cortex (Giard et al. 1990). Student t-tests on the MMN amplitude around its peak latency (199 ms) are significant at most electrodes around the reversal plane.

Fig. 3.

Topographies of the MMNs elicited by each deviant type in the audiovisual oddball paradigm and by the visual deviant in the visual oddball paradigm (lower right panel). Scalp potentials (1st column of each panel), scalp current densities (2nd column) and probability maps associated to Student t-tests (3rd column) are presented in right, left and back views at the latency of the MMN peak indicated above each panel. In potential and SCD maps, half the range of the colour scale is given below each column. In Student t-maps grey areas include electrodes where the averaged potential in a 40 ms time-window around the indicated latency significantly differs from zero.

AV’ MMN

Responses to AV’ deviants and to AV standards are hardly different (Fig. 1). However, the deviant minus standard difference curves (Fig. 2) reveal an occipital deflection that peaks bilaterally at a latency of 192 ms (−0.87 μV at PO4 and −0.63 μV at PO3), with a second peak around 215 ms (−0.72 μV at PO3 and PO4). Fig. 3 (upper right panel) illustrates the bilateral occipital topography of the AV’ MMN at the latency of its largest peak and the statistical significance of its amplitude on the scalp around its peak latency.

A’V’ MMN

Although the responses elicited by A’V’ deviants most resemble those elicited by A’V deviants at fronto-central and mastoid sites (Fig. 1 and Fig. 2), they tend to come near the curves elicited by AV’ deviants at occipital sites (Fig. 2). Fig. 3 (lower left panel) displays the SCD distribution of A’V’ MMN at the latency of its peak in ERPs (198 ms). It clearly shows that it consists of the SCD patterns observed in both the auditory MMN component and the component elicited by AV’ deviants at occipital sites.

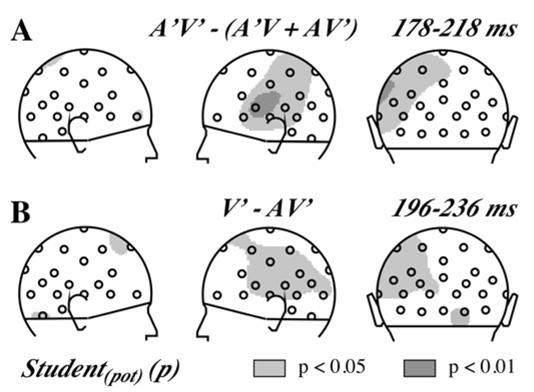

Additivity of the MMNs

The additivity of the MMNs elicited by each deviant type was tested by Student t-tests comparing the A’V’ MMN to the sum of the AV’ and A’V MMNs, averaged in the 178–218 ms latency window (around the peak latency of both the A’V and the A’V’ MMNs at Fz). Fig. 4A shows that additivity is violated at several left parieto-temporal electrodes.
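The logic of the additivity test at a single electrode can be sketched as follows: for each subject, the residual between the A’V’ MMN and the sum of the A’V and AV’ MMNs is computed from window-averaged amplitudes, and the residuals are compared to zero. The text does not say whether the test was paired; a within-subject (paired) comparison is assumed here, and the function names and synthetic values are ours.

```python
import math
import statistics


def one_sample_t(values, mu=0.0):
    """Student t statistic of the sample mean against mu (df = n - 1)."""
    n = len(values)
    return (statistics.fmean(values) - mu) / (statistics.stdev(values) / math.sqrt(n))


def additivity_t(mmn_both, mmn_aud, mmn_vis):
    """t statistic testing A'V' MMN against (A'V MMN + AV' MMN).

    Each argument holds one mean amplitude (µV, 178-218 ms window) per
    subject at a given electrode. Under strict additivity the residuals
    have a mean of zero.
    """
    residuals = [both - (aud + vis)
                 for both, aud, vis in zip(mmn_both, mmn_aud, mmn_vis)]
    return one_sample_t(residuals)


# Synthetic example with 15 subjects (df = 14, critical |t| about 2.145).
aud = [-3.0 - 0.1 * k for k in range(15)]
vis = [-0.5 for _ in range(15)]
# Case 1: residuals scatter around zero, compatible with additivity.
additive = [a + v + (0.1 if k % 2 == 0 else -0.1)
            for k, (a, v) in enumerate(zip(aud, vis))]
# Case 2: systematic positive residual, additivity violated.
non_additive = [a + v + 0.2 + 0.02 * (k % 3)
                for k, (a, v) in enumerate(zip(aud, vis))]
t_additive = additivity_t(additive, aud, vis)
t_violated = additivity_t(non_additive, aud, vis)
```
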

Fig. 4.

A. Test of the additivity of the three MMNs elicited in the audiovisual oddball paradigm presented as probability maps associated to Student t-tests in right, left and back views. Grey areas include electrodes where the averaged potential in the indicated time-window significantly differs between the MMN elicited by A’V’ deviants and the sum of the MMNs elicited by A’V and AV’ deviants.

B. Comparison of the MMNs elicited in the audiovisual and the visual oddball paradigm presented as probability maps associated to Student t-tests in right, left and back views. Grey areas include electrodes where the averaged potential in the indicated time-window significantly differs between the AV’ MMN and V’ MMN.

Visual oddball paradigm

Fig. 5 displays the ERPs elicited by V standards and V’ deviants in the visual oddball paradigm, as well as the deviant minus standard difference curve. As in the audiovisual paradigm, the V’ deviants elicited a bilateral occipital component; it peaked at a latency of 215 ms (that is, at the latency of the second peak of the AV’ MMN), with a larger amplitude (−1.19 μV at PO3 and −1.21 μV at PO4). Fig. 3 (lower right panel) displays the topography of the vMMN and the scalp areas where its amplitude is statistically significant. There was no hint of the anterior component found in other studies investigating the visual MMN.

Fig. 5.

ERPs elicited by V standards and V’ deviants and deviant minus standard difference waveforms at a subset of 5 electrodes (Fz, Ma1, Ma2, PO3 and PO4) from 100 ms pre-stimulus to 400 ms post-stimulus.

We further compared the vMMN elicited in the visual paradigm (V’ deviants) and the audiovisual paradigm (AV’ deviants) with Student t-tests in the 40 ms time-window around the peak latency that was common to both vMMNs (216 ms). As shown in Fig. 4B, tests were significant at several electrodes over the left hemiscalp.

Discussion

Auditory deviance of an audiovisual event elicited a classical MMN with a topography typical of activities in the auditory cortex. Visual deviance of an audiovisual object elicited a bilateral occipital component in the same latency range as the auditory MMN. This component was very similar to that found in the visual oddball paradigm. The spatio-temporal characteristics of these two components are consistent with previous reports of an analogue of the MMN in the visual modality (vMMN), and especially with the study by Berti and Schröger (2004), who reported a vMMN with a bilateral occipital topography at a latency of 240 ms.

Thus in our data, the visual variation of an audiovisual event does not appear to elicit an auditory MMN, unlike what has been observed in McGurk and ventriloquist illusions (e.g. Möttönen et al. 2002; Stekelenburg et al. 2004), suggesting that real or illusory perception of an auditory change is necessary to elicit a clear MMN response in the auditory cortex.

In addition, we found that the MMN to deviance on both the auditory and visual dimensions of a bimodal event includes both supratemporal and occipital components, suggesting that the deviance detection processes operate separately in each modality.

However, the vMMNs elicited in the visual (V’ MMN) and the audiovisual (AV’ MMN) oddball paradigms were found to differ significantly, while the only difference between these paradigms was the presence or absence of the same sound that was constantly associated with the visual standards and deviants. Two mutually nonexclusive explanations could account for this finding: (1) either an auditory MMN of small amplitude, induced by the visual change of the audiovisual event through the same phenomenon as in audiovisual illusions, is superimposed on the vMMN and alters its topography; (2) or, if the vMMN reflects a memory-based process (Czigler et al. 2002), an audiovisual event would be encoded differently from a visual-only event in that memory. The first possibility receives little support from the topographies displayed in Fig. 3, and the scalp distribution of the significant differences between the V’ MMN and the AV’ MMN is difficult to interpret under either hypothesis.

Whichever hypothesis is correct, the difference between V’ MMN and AV’ MMN implies that the auditory and visual features of the bimodal input have already been partly combined in the afferent sensory systems before the MMN process occurs. This assumption fits with several recent observations that the construction of an integrated percept from bimodal inputs begins at very early stages of sensory analysis, well before the latency of the MMN processes (e.g. Giard and Peronnet 1999; Fort et al. 2002a; Molholm et al. 2002; Lebib et al. 2003; Besle et al. 2004). In addition, the fact that the MMN is sensitive to the perceptual dimension of the stimulus (here its multimodal status) rather than to its physical dimension has been well documented in the auditory modality (review in Näätänen and Winkler 1999) and can also explain the existence of an auditory MMN in the McGurk illusion.

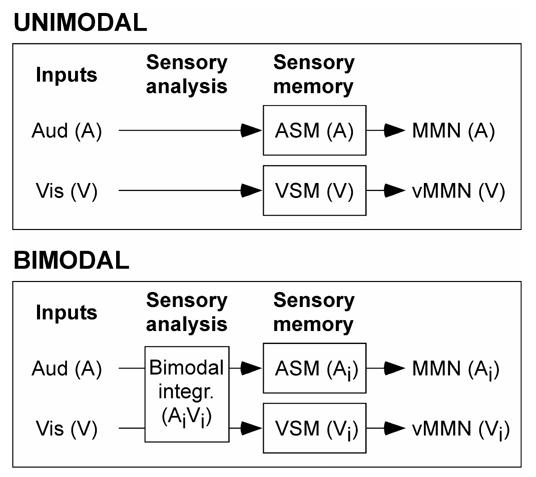

However, neither the auditory nor the visual sensory memory appears to encode an integrated trace of the audiovisual deviants. Indeed, if that were the case, and assuming that the source of an MMN is likely to be close to or at the location of the memory upon which that MMN is based, the three kinds of deviants (A’V, AV’, A’V’) should have elicited MMNs with similar topographies, since they would all be based on the same integrated memory representation. Rather, the three MMNs were found to have clearly different topographies with components typical of activities in the respective sensory-specific cortices, indicating that the MMN processes operate mostly separately in each modality on the different sensory components of the multimodal representation under construction (Fig. 6).

Fig. 6.

A schematic model of the MMN processes for unimodal and bimodal inputs. In unimodal conditions, the model refers to that proposed by Näätänen (1992) for auditory MMN. When auditory (A) and visual (V) inputs are synchronously presented, crossmodal interactions underlying the construction of a multimodal percept can start at early stages of analysis in the afferent sensory systems and modify the input signals before they are encoded in the respective sensory memories (ASM and VSM). The auditory and visual MMN processes would operate on these new sensory signals (Ai and Vi). Note that, although the stage of crossmodal interactions is outlined exclusively before the MMN processes in this figure for simplification, these interactions may persist for several hundred milliseconds.

Nonetheless, the hypothesis of complete independence of auditory and visual MMN processes is unlikely, because the MMN to the double deviants in the audiovisual paradigm departs slightly from the mere addition of its unisensory components. (For the sake of simplicity, this interpretation has not been illustrated in Fig. 6.) Further experiments should be conducted to assess the relationships between the auditory and visual sensory memories and MMN processes.

Footnotes

As, on the one hand, the topography of the auditory MMN is well known and on the other hand, it would have needlessly lengthened the recording session, we chose not to conduct an auditory oddball paradigm.

References

- Alain C, Woods DL, Knight RT. A distributed cortical network for auditory sensory memory in humans. Brain Res. 1998;812:23–37. doi: 10.1016/s0006-8993(98)00851-8.

- Bental E, Dafny N, Feldman S. Convergence of auditory and visual stimuli on single cells in the primary visual cortex of unanesthetized unrestrained cats. Exp Neurol. 1968;20:341–351. doi: 10.1016/0014-4886(68)90077-0.

- Bertelson P, Aschersleben G. Automatic visual bias of perceived auditory location. Psychon Bull Rev. 1998;5:482–489.

- Berti S, Schröger E. Distraction effects in vision: behavioral and event-related potential indices. Neuroreport. 2004;15:665–669. doi: 10.1097/00001756-200403220-00018.

- Besle J, Fort A, Delpuech C, Giard M-H. Bimodal speech: Early suppressive visual effects in the human auditory cortex. Eur J Neurosci. 2004;20:2225–2234. doi: 10.1111/j.1460-9568.2004.03670.x.

- Bruneau N, Roux S, Garreau B, Martineau J, Lelord G. Cortical Evoked Potentials as Indicators of Auditory-Visual Cross-Modal Association in Young Adults. The Pavlovian Journal of Biological Science. 1990;25:189–204. doi: 10.1007/BF02900703.

- Cahill L, Ohl F, Scheich H. Alteration of auditory cortex activity with a visual stimulus through conditioning: a 2-deoxyglucose analysis. Neurobiol Learn Mem. 1996;65:213–222. doi: 10.1006/nlme.1996.0026.

- Colin C, Radeau M, Soquet A, Dachy B, Deltenre P. Electrophysiology of spatial scene analysis: the mismatch negativity (MMN) is sensitive to the ventriloquism illusion. Clin Neurophysiol. 2002a;113:507–518. doi: 10.1016/s1388-2457(02)00028-7.

- Colin C, Radeau M, Soquet A, Deltenre P. Generalization of the generation of an MMN by illusory McGurk percepts: voiceless consonants. Clin Neurophysiol. 2004.

- Colin C, Radeau M, Soquet A, Demolin D, Colin F, Deltenre P. Mismatch negativity evoked by the McGurk-MacDonald effect: a phonetic representation within short-term memory. Clin Neurophysiol. 2002b;113:495–506. doi: 10.1016/s1388-2457(02)00024-x.

- Czigler I, Balazs L, Winkler I. Memory-based detection of task-irrelevant visual changes. Psychophysiology. 2002;39:869–873. doi: 10.1111/1469-8986.3960869.

- Fort A, Delpuech C, Pernier J, Giard MH. Dynamics of cortico-subcortical crossmodal operations involved in audio-visual object detection in humans. Cereb Cortex. 2002a;12:1031–1039. doi: 10.1093/cercor/12.10.1031.

- Fort A, Delpuech C, Pernier J, Giard MH. Early auditory-visual interactions in human cortex during nonredundant target identification. Brain Res Cogn Brain Res. 2002b;14:20–30. doi: 10.1016/s0926-6410(02)00058-7.

- Fort A, Giard M-H. Multiple electrophysiological mechanisms of audio-visual integration in human perception. In: Calvert G, Spence C, Stein B, editors. The Handbook of Multisensory Processes. MIT Press; Cambridge: 2004.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Giard MH, Perrin F, Pernier J. Brain generators implicated in processing of auditory stimulus deviance. A topographic ERP study. Psychophysiology. 1990;27:627–640. doi: 10.1111/j.1469-8986.1990.tb03184.x.

- Heslenfeld DJ. Visual mismatch negativity. In: Polich J, editor. Detection of change: event-related potential and fMRI findings. Kluwer Academic Publishers; Dordrecht: 2003. pp. 41–60.

- Kenemans JL, Jong TG, Verbaten MN. Detection of visual change: mismatch or rareness? Neuroreport. 2003;14:1239–1242. doi: 10.1097/00001756-200307010-00010.

- Kropotov JD, Näätänen R, Sevostianov AV, Alho K, Reinikainen K, Kropotova OV. Mismatch negativity to auditory stimulus change recorded directly from the human temporal cortex. Psychophysiology. 1995;32:418–422. doi: 10.1111/j.1469-8986.1995.tb01226.x.

- Lebib R, Papo D, de Bode S, Baudonniere PM. Evidence of a visual-to-auditory cross-modal sensory gating phenomenon as reflected by the human P50 event-related brain potential modulation. Neurosci Lett. 2003;341:185–188. doi: 10.1016/s0304-3940(03)00131-9.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Möttönen R, Krause CM, Tiippana K, Sams M. Processing of changes in visual speech in the human auditory cortex. Brain Res Cogn Brain Res. 2002;13:417–425. doi: 10.1016/s0926-6410(02)00053-8.

- Näätänen R. Attention and Brain Function. Hillsdale, NJ: 1992.

- Näätänen R, Winkler I. The concept of auditory stimulus representation in cognitive neuroscience. Psychol Bull. 1999;125:826–859. doi: 10.1037/0033-2909.125.6.826.

- Nyman G, Alho K, Laurinen P, Paavilainen P, Radil T, Reinikainen K, Sams M, Näätänen R. Mismatch negativity (MMN) for sequences of auditory and visual stimuli: evidence for a mechanism specific to the auditory modality. Electroencephalogr Clin Neurophysiol. 1990;77:436–444. doi: 10.1016/0168-5597(90)90004-w.

- Pazo-Alvarez P, Cadaveira F, Amenedo E. MMN in the visual modality: a review. Biol Psychol. 2003;63:199–236. doi: 10.1016/s0301-0511(03)00049-8.

- Pernier J, Perrin F, Bertrand O. Scalp current density fields: concept and properties. Electroencephalogr Clin Neurophysiol. 1988;69:385–389. doi: 10.1016/0013-4694(88)90009-0.

- Perrin F, Pernier J, Bertrand O, Echallier JF. Spherical splines for scalp potential and current density mapping. Electroencephalogr Clin Neurophysiol. 1989;72:184–187. doi: 10.1016/0013-4694(89)90180-6.

- Perrin F, Pernier J, Bertrand O, Giard M-H. Mapping of scalp potentials by surface spline interpolation. Electroencephalogr Clin Neurophysiol. 1987;66:75–81. doi: 10.1016/0013-4694(87)90141-6.

- Ritter W, Deacon D, Gomes H, Javitt DC, Vaughan HG Jr. The mismatch negativity of event-related potentials as a probe of transient auditory memory: a review. Ear Hear. 1995;16:52–67. doi: 10.1097/00003446-199502000-00005.

- Rosenblum LD, Fowler CA. Audiovisual investigation of the loudness-effort effect for speech and nonspeech events. J Exp Psychol Hum Percept Perform. 1991;17:976–985. doi: 10.1037//0096-1523.17.4.976.

- Saldana HM, Rosenblum LD. Visual influences on auditory pluck and bow judgments. Percept Psychophys. 1993;54:406–416. doi: 10.3758/bf03205276.

- Sams M, Aulanko R, Hamalainen H, Hari R, Lounasmaa OV, Lu ST, Simola J. Seeing speech: Visual information from lip movements modifies activity in the human auditory cortex. Neurosci Lett. 1991;127:141–145. doi: 10.1016/0304-3940(91)90914-f.

- Soto-Faraco S, Navarra J, Alsius A. Assessing automaticity in audiovisual speech integration: evidence from the speeded classification task. Cognition. 2004;92:B13–23. doi: 10.1016/j.cognition.2003.10.005.

- Stagg C, Hindley P, Tales A, Butler S. Visual mismatch negativity: the detection of stimulus change. Neuroreport. 2004;15:659–663. doi: 10.1097/00001756-200403220-00017.

- Stekelenburg JJ, Vroomen J, de Gelder B. Illusory sound shifts induced by the ventriloquist illusion evoke the mismatch negativity. Neurosci Lett. 2004;357:163–166. doi: 10.1016/j.neulet.2003.12.085.

- Vroomen J, Bertelson P, de Gelder B. The ventriloquist effect does not depend on the direction of automatic visual attention. Percept Psychophys. 2001;63:651–659. doi: 10.3758/bf03194427.