Abstract

This study explores how the lack of first-hand experience with color, as a result of congenital blindness, affects implicit judgments about “higher-order” concepts, such as “fruits and vegetables” (FV), but not others, such as “household items” (HHI). We demonstrate how the differential diagnosticity of color across our test categories interacts with visual experience to produce, in effect, a category-specific difference in implicit similarity. Implicit pair-wise similarity judgments were collected by using an odd-man-out triad task. Pair-wise similarities for both FV and for HHI were derived from this task and were compared by using cluster analysis and regression analyses. Color was found to be a significant component in the structure of implicit similarity for FV for sighted participants but not for blind participants; and this pattern remained even when the analysis was restricted to blind participants who had good explicit color knowledge of the stimulus items. There was also no evidence that either subject group used color knowledge in making decisions about HHI, nor was there an indication of any qualitative differences between blind and sighted subjects' judgments on HHI.

Keywords: additive clustering, color knowledge, similarity

The example of color knowledge in the blind has long served as a paradigm of rhetorical evidence for concept empiricism [for examples, see Locke (1) and Hume (2)]; however, research suggests that much more knowledge about color may be available to the blind than these commentators might have assumed. For example, Landau and Gleitman (3) documented how a blind child as young as 5 years had acquired the common color terms and had learned the semantic generalization that these terms can apply only to objects with spatiotemporal extent, e.g., cars and dogs, but not ideas or stories. Perhaps more remarkably, Marmor (4) found that similarity judgments about color terms made by 16 congenitally blind college students produced an approximation of Newton's (5) color wheel when subjected to multidimensional scaling, similar to patterns found in sighted participants (e.g., ref. 6, although see ref. 7). It has been proposed that language provides a rich source of information about color terms that forms a basis for such accurate judgments in the blind. Thus, it may become clear from usage that some kinds of categories are marked for color, or that some colors are marked as “warm” or “cool” (e.g., “red hot” vs. “icy blue”). However, the usefulness and generality of such knowledge remain an open question. The present study explores the limits of color knowledge that is merely stipulated through language, unaccompanied by the qualia associated with sensory experience. In particular, we highlight how the lack of first-hand experience with color affects implicit judgments about some “higher-order” concepts, such as “fruits and vegetables” (FV), but not others, such as “household items” (HHI); in this regard, we demonstrate the potential of a new line of research on the representation of categories and concepts.

The information approach to explaining conceptual representation, the idea that categories of object concepts can be distinguished from one another by systematic differences in information structure across categories, has gained currency in recent years, especially in the cognitive neuroscience literature. It draws primarily on evidence of category-specific deficits due to focal brain damage (e.g., refs. 8–16; for reviews, see refs. 17–19) and category-specific brain activation patterns observed through functional neuroimaging (refs. 20–28; for a review, see ref. 29). According to a rather stark version of this view (e.g., ref. 9), the broad dissociation of living things from man-made objects is explained by a differential reliance on sensory information (weighted more heavily for living things) and functional information (weighted more heavily for man-made objects). Although this dichotomy is surely too sweeping to account for all of the extant data, as some commentators have pointed out (e.g., refs. 16 and 30), the information approach provides a useful framework for uncovering meaningful differences in representation and/or discovery procedures across categories. For example, it is likely that the purely visual property of color is more diagnostic (31–33) for the category of FV than for certain other categories. By “diagnostic” we mean that a property is used critically in identifying exemplars of a category (i.e., in assigning them as members of the category). For instance, although both a banana and a telephone may be yellow, the banana is yellow because it is a banana, whereas the telephone is not yellow because it is a telephone. Relatedly, one is more inclined to eat a yellow banana than, say, a green or black one, whereas one's inclination to call one's grandmother is unlikely to change if the telephone goes from green to black. This does not imply that color is among the primary diagnostic properties for FV, but rather that color is more diagnostic for these categories than for others such as HHI or “vehicles,” and that this observation provides a useful wedge for investigating the underlying structure of categories in the mind and brain. Finally, diagnosticity is not a definitional or constitutive relationship; as implied above, there are atypical cases of nonyellow fruits that are bananas all the same.

In the experiment reported below, we used the information approach to demonstrate how the differential diagnosticity of color across our test categories interacts with the visual experience of our subjects (blind and sighted) to produce, in effect, a category-specific difference in implicit similarity. More specifically, we tested the hypothesis that there would be differences between blind and sighted participants' similarity judgments for the color-implicating category of FV, especially along the color dimension, but that no noticeable differences would arise between these groups for the category of HHI for which color does not play a conspicuous role.

Implicit pair-wise similarity judgments for all pairs of items within each of our test categories were derived from the results of an odd-man-out triad task, in which, on each trial, participants chose the semantic outlier among three items. This method, although time consuming, is preferable to direct pair-wise similarity ratings because each pair is presented many times in several different contexts (i.e., with a different third item), producing implicit judgments of similarity as opposed to the explicit judgments required by a pair-wise task. This matters because subjective similarity is highly context dependent. Consider how different Bill Clinton and George W. Bush seem in the context of Ronald Reagan, and how similar they become when compared with Winston Churchill (31, 34). Because they are established over many different contexts, similarities based on triad decisions provide a good implicit measure that allows similarity along multiple dimensions to be naturally incorporated into a single measure (35, 36). The derived similarities for FV and for HHI, for both blind and sighted participants, were compared by using cluster analysis and regression analyses. In addition, a separate set of sighted participants was instructed to make their odd-man-out decisions based solely on color, for both FV and HHI. We used these latter similarities as a regressor on the “naïve” blind and sighted subjects' data to further assess the extent to which color played a role in shaping their judgments.

Results and Discussion

Correlations Between Similarity Measures.

We calculated correlations to assess how well the similarity scores produced by sighted participants for each pair of stimulus items (n = 276) predicted those produced by blind participants. The overall correlations are high for both FV (Pearson r = 0.93) and HHI (Pearson r = 0.97) (Table 1), although significantly lower for FV (z = 3.36; P < 0.01). To determine whether this difference reflects a group difference or a difference between the categories, it was necessary to establish the baseline level of agreement in similarity scores one could expect for any given set of items. To do this, we calculated the correlations between the similarities produced by each pair of “super-subjects”¶. Table 1 summarizes these comparisons. This analysis reveals significantly higher agreement among sighted participants for HHI (Pearson r = 0.96) than for FV (Pearson r = 0.93; z = 3.35; P < 0.01). This pattern parallels the overall correlations between blind and sighted participants, with comparable r values, indicating that the category difference reflects a difference in the baseline level of agreement to be expected for each stimulus category. There is also significantly less shared variance among blind participants than among sighted participants for both stimulus categories (HHI: r = 0.92 vs. r = 0.96, z = 4.15, P < 0.01; FV: r = 0.89 vs. r = 0.93, z = 2.75, P < 0.01), indicating more variability in general in the judgments produced by blind participants. Thus, this analysis reveals a main effect of category, with higher overall agreement in similarity scores for HHI than for FV, and a main effect of participant group, in that for both categories the similarity scores produced by blind participants are more variable than those produced by sighted participants. Furthermore, because the blind super-subjects' data correlated with the sighted super-subjects' data as well as, or better than, they did with each other [i.e., blind (B1) vs. blind (B2); see Table 1], there is no evidence of a category by group interaction.
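A minimal sketch of the super-subject comparison, assuming each super-subject's data are stored as a 24 × 24 NumPy array of pair-wise similarity scores. The function names are hypothetical, and the matrices in the usage lines are random placeholders rather than the study's data; the point is only that each entry of Table 1 is a Pearson r computed over the 276 item pairs in the upper triangle of two similarity matrices.

```python
import numpy as np


def upper_triangle(sim: np.ndarray) -> np.ndarray:
    """Return the entries above the diagonal: one value per unordered item pair."""
    i, j = np.triu_indices(sim.shape[0], k=1)
    return sim[i, j]


def super_subject_correlations(matrices: list) -> np.ndarray:
    """Pearson r between every pair of super-subject similarity matrices."""
    vectors = np.vstack([upper_triangle(m) for m in matrices])
    return np.corrcoef(vectors)  # each row (one super-subject) is treated as a variable


# Usage with placeholder data: four noisy copies of one shared similarity structure,
# standing in for B1, B2, S1, and S2.
rng = np.random.default_rng(0)
shared = rng.random((24, 24))
mats = [shared + rng.normal(0.0, 0.1, size=(24, 24)) for _ in range(4)]
r = super_subject_correlations(mats)
print(upper_triangle(shared).size)  # 276 item pairs
```

Lower off-diagonal values in the resulting 4 × 4 matrix would indicate lower agreement between the corresponding super-subjects.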

Table 1.

Correlation matrices (Pearson r) for similarity scores

| Higher-order concept | Super-subject | B1 | B2 | S1 |
|---|---|---|---|---|
| Household items* | B2 | 0.92 | | |
| | S1 | 0.93 | 0.94 | |
| | S2 | 0.94 | 0.95 | 0.96 |
| Fruits and vegetables† | B2 | 0.89 | | |
| | S1 | 0.89 | 0.88 | |
| | S2 | 0.90 | 0.89 | 0.93 |

B, blind; S, sighted; B1, blind super-subject 1; B2, blind super-subject 2; S1, sighted super-subject 1; S2, sighted super-subject 2.

*Overall blind vs. sighted correlation (B–S) = 0.97.

†Overall blind vs. sighted correlation (B–S) = 0.93.

This would seem to contradict the hypothesis that differences between blind and sighted participants would emerge for visually diagnostic categories (i.e., FV); however, a more fine-grained analysis, to which we now turn, reveals reliable differences between the similarities produced by blind and sighted participants that are restricted to color dimensions and to the category FV.

Analysis of the Structure of Similarity, Using the INDCLUS Model.

In an attempt to reveal the structure present in our similarity data, and to compare directly the similarities produced by blind and sighted participants, we analyzed the similarity data using the INDCLUS model (37), an individual-differences generalization of the ADCLUS additive clustering model (38). This nonhierarchical, overlapping clustering technique fits a model in which the similarity between any two items in the dataset is predicted by the sum of the weights of their shared clusters. The weight on a cluster reflects the relative importance of that cluster in partitioning the similarity data. Both the clusters and the weights are estimated by the INDCLUS algorithm from pair-wise proximity data (i.e., similarities or distances). Individual differences are represented in the model by assigning different weights to the same clusters for different data sources (e.g., individual subjects or separate groups of subjects; in our case, blind vs. sighted). Additive clustering can be viewed as a discrete counterpart to multidimensional scaling (see refs. 39 and 40) and is held to be better suited to datasets in which the stimuli are conceptual rather than perceptual in nature (38, 41). In the present study, clusters can be explicitly construed as sorting criteria. More abstractly, the clusters can be interpreted as reflecting common salient properties of the stimuli, where the properties may be taxonomic categories, functional properties, or perceptual properties, among other possibilities.
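In symbols, the model just described can be written as below. The notation is ours rather than that of refs. 37 and 38: the predicted similarity of two items for one data source (blind or sighted) is the sum of that source's weights over the clusters containing both items, plus that source's additive constant (the "Additive constants" rows of Tables 2 and 3). The worked example applies the Table 3 weights to the pair apple–strawberry, which share exactly three clusters (fruits, red things, and fruit-2).

```latex
% Additive clustering with individual differences (notation ours):
%   \hat{s}_{ij}^{(k)} : predicted similarity of items i and j for data source k
%   p_{im}             : 1 if item i belongs to cluster m, else 0
%   w_{km}             : weight of cluster m for data source k
%   c_k                : additive constant for data source k
\hat{s}_{ij}^{(k)} = \sum_{m=1}^{M} w_{km}\, p_{im}\, p_{jm} + c_k

% Worked example (Table 3 weights; apple and strawberry share fruits, red things, fruit-2):
\hat{s}_{\text{apple,strawberry}}^{(\text{sighted})} = 0.520 + 0.223 + 0.113 + 0.044 = 0.900
\hat{s}_{\text{apple,strawberry}}^{(\text{blind})}   = 0.482 + 0.091 + 0.091 + 0.081 = 0.745
```

Most of the gap between the two predictions comes from the red things weight, the cluster on which blind and sighted participants differ significantly.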

The results of the INDCLUS analyses for each stimulus category are summarized in Table 2 (HHI) and Table 3 (FV). Note that several variables important to these analyses, including the number of clusters, are under the control of the experimenter. Deciding how many clusters to include in a given solution is a heuristic judgment that balances maximizing the variance accounted for (VAF), maximizing the plausibility of interpretation of the clusters, and minimizing redundancy across clusters.
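The prediction rule and one common definition of VAF (1 minus the residual sum of squares divided by the total sum of squares about the mean, computed over item pairs) can be sketched in Python as follows. This is an illustration under that assumed definition, not the INDCLUS program itself, and the function names are hypothetical. The toy example uses three FV items and the four Table 3 clusters to which they belong, with the sighted weights and additive constant; because none of the three items shares any other cluster, the predictions for these three pairs coincide with those of the full eight-cluster sighted solution.

```python
import numpy as np


def predicted_similarity(P: np.ndarray, w: np.ndarray, c: float) -> np.ndarray:
    """Sum of the weights of shared clusters plus an additive constant.

    P: (n_items, n_clusters) 0/1 membership matrix
    w: (n_clusters,) cluster weights for one data source (e.g., sighted)
    c: additive constant for that data source
    """
    return P @ np.diag(w) @ P.T + c  # entry (i, j) = sum_m w[m] * P[i, m] * P[j, m] + c


def vaf(observed: np.ndarray, predicted: np.ndarray) -> float:
    """1 - SSE / SST over item pairs (upper triangle); diagonal entries are ignored."""
    i, j = np.triu_indices(observed.shape[0], k=1)
    s, s_hat = observed[i, j], predicted[i, j]
    sse = np.sum((s - s_hat) ** 2)
    sst = np.sum((s - s.mean()) ** 2)
    return float(1.0 - sse / sst)


# Toy example: rows = apple, strawberry, lemon;
# columns = fruits, red things, fruit-2, citrus (sighted weights from Table 3).
P = np.array([[1, 1, 1, 0],
              [1, 1, 1, 0],
              [1, 0, 0, 1]], dtype=float)
w_sighted = np.array([0.520, 0.223, 0.113, 0.263])
S_hat = predicted_similarity(P, w_sighted, c=0.044)
print(round(S_hat[0, 1], 3), round(S_hat[0, 2], 3))  # prints: 0.9 0.564
```

In practice `observed` would be a group's similarity matrix and `predicted` the fitted model, so adding clusters can only raise VAF; the interpretability and redundancy criteria above are what keep the solution small.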

Table 2.

INDCLUS solution for HHI

| Blind weights | Sighted weights | z score of difference | P | Elements of subset | Interpretation |
|---|---|---|---|---|---|
| 0.492 | 0.521 | −0.68 | 0.25 | Air conditioner, clock, clothes dryer, coffeemaker, dishwasher, fridge, lamp, microwave, radio, stove, television, toaster | Appliances |
| 0.321 | 0.410 | −1.35 | 0.09 | Comb, fork, knife, ladle, nail file, razor, scissors, spatula, spoon, tongs, toothbrush, tweezers | Implements |
| 0.392 | 0.364 | 0.61 | 0.27 | Comb, nail file, razor, scissors, toothbrush, tweezers | Personal hygiene items |
| 0.258 | 0.247 | 0.36 | 0.36 | Coffeemaker, dishwasher, fork, fridge, knife, ladle, microwave, spatula, spoon, stove, toaster, tongs | Kitchen items |
| 0.201 | 0.245 | −0.58 | 0.28 | Clock, lamp, radio, television | Living-space items |
| 0.189 | 0.137 | 1.74 | 0.08 | Fork, knife, ladle, scissors, spatula, spoon, tongs, tweezers | Kitchen/metal implements |
| 0.040 | 0.020 | | | | Additive constant |
| 93.0% | 92.8% | | | | VAF within group (overall VAF = 92.9%) |

z scores are for the difference between blind and sighted cluster weights (positive values: blind weight > sighted weight), evaluated against the distribution of differences from the permutation analysis.

Table 3.

INDCLUS solution for FV

| Blind weights | Sighted weights | z score of difference | P | Elements of subset | Interpretation |
|---|---|---|---|---|---|
| 0.482 | 0.520 | 0.93 | 0.18 | Apple, apricot, banana, cantaloupe, cherry, grapefruit, lemon, lime, pineapple, strawberry, tangerine | Fruits |
| 0.380 | 0.436 | 1.43 | 0.08 | Asparagus, avocado, beet, broccoli, carrot, corn, cucumber, olive, pumpkin, radish, squash, tomato, yam | Vegetables |
| 0.221 | 0.265 | 0.41 | 0.34 | Pumpkin, squash, yam | Winter vegetable |
| 0.298 | 0.263 | −0.69 | 0.25 | Grapefruit, lemon, lime, tangerine | Citrus |
| 0.091 | 0.223 | 2.12 | 0.03* | Apple, cherry, strawberry, tomato | Red things |
| 0.220 | 0.211 | −0.24 | 0.41 | Asparagus, beet, broccoli, carrot, corn, cucumber, radish, squash, yam | Vegetables-2 |
| 0.026 | 0.118 | 2.02 | 0.04* | Asparagus, avocado, broccoli, cucumber, lemon, lime, olive | Green things |
| 0.091 | 0.113 | 1.20 | 0.12 | Apple, apricot, avocado, banana, cantaloupe, cherry, grapefruit, pineapple, pumpkin, strawberry, tangerine, tomato | Fruit-2 |
| 0.081 | 0.044 | | | | Additive constant |
| 91.1% | 93.5% | | | | VAF within group (overall VAF = 92.4%) |

z scores are for the difference between sighted and blind cluster weights (positive values: sighted weight > blind weight), evaluated against the distribution of differences from the permutation analysis.

*P < 0.05.

The six-cluster solution for HHI (Table 2) accounts for 92.9% of the overall variance in the data, accounts for nearly identical amounts of variance in the blind (VAF = 93.0%) and sighted (VAF = 92.8%) data, and assigns comparable weights to blind and sighted for all of the clusters.

The eight-cluster solution for FV (Table 3) accounts for 92.4% of the overall variance in the data, accounts for slightly less of the variance in the blind data (VAF = 91.1%) than in the sighted data (VAF = 93.5%; although not significantly less: z = 1.31, P = 0.19, two-tailed), and assigns comparable weights for blind and sighted to all of the clusters with the exception of the clusters that have been assigned the interpretations “red things” and “green things” (see below).

Testing for significant differences between blind and sighted subjects in the cluster weights was accomplished by using a Monte Carlo method to determine critical values. We produced randomizations of the data by randomly assigning all participants, blind and sighted, to artificial blind and sighted groups. We produced 100 randomizations for each stimulus category, yielding 100 pairs of similarity matrices per category. We then analyzed these data with INDCLUS, but instead of having INDCLUS derive the clusters, we fixed the clusters to be the same as those reported in Tables 2 and 3. The distribution of weight differences (blind minus sighted) produced by the randomizations (100 differences for each cluster) was then used to perform two-tailed z tests of whether any of the differences between the weights assigned to the true blind and sighted groups exceeded what chance variation would explain. Of all of the clusters for both HHI and FV, only the red things (z = 2.12; P < 0.05) and green things (z = 2.02; P < 0.05) clusters produced significant differences in weights between blind and sighted.
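The logic of this Monte Carlo test can be sketched as follows, under several stated assumptions: each participant's data are a square matrix of similarity counts, a group's similarities are the sum of its members' matrices, and, because re-running INDCLUS is beyond a short example, the weights for the fixed clusters are estimated by ordinary least squares as a stand-in for INDCLUS's own weight-estimation step. The z score is taken relative to the mean and standard deviation of the permutation differences, and the two-tailed P value uses the normal approximation. All function names are hypothetical, and the usage lines use simulated data.

```python
import math

import numpy as np


def pair_design(P: np.ndarray) -> np.ndarray:
    """One row per item pair: shared-cluster indicators plus a column of ones (additive constant)."""
    i, j = np.triu_indices(P.shape[0], k=1)
    return np.column_stack([P[i] * P[j], np.ones(len(i))])


def fit_weights(S: np.ndarray, P: np.ndarray) -> np.ndarray:
    """Least-squares weights for fixed clusters (OLS stand-in for INDCLUS weight estimation)."""
    i, j = np.triu_indices(S.shape[0], k=1)
    coef, *_ = np.linalg.lstsq(pair_design(P), S[i, j], rcond=None)
    return coef[:-1]  # drop the additive constant


def permutation_z(blind, sighted, P, n_perm=100, seed=0):
    """Observed blind-minus-sighted weight differences, their z scores, and two-tailed P values."""
    rng = np.random.default_rng(seed)
    pooled = list(blind) + list(sighted)
    n_blind = len(blind)

    def weight_diff(group_b, group_s):
        return fit_weights(sum(group_b), P) - fit_weights(sum(group_s), P)

    observed = weight_diff(blind, sighted)
    null = np.empty((n_perm, P.shape[1]))
    for r in range(n_perm):
        order = rng.permutation(len(pooled))  # random relabeling of all participants
        null[r] = weight_diff([pooled[k] for k in order[:n_blind]],
                              [pooled[k] for k in order[n_blind:]])
    z = (observed - null.mean(axis=0)) / null.std(axis=0, ddof=1)
    p = np.array([math.erfc(abs(v) / math.sqrt(2.0)) for v in z])  # 2 * (1 - Phi(|z|))
    return observed, z, p


# Usage with simulated data (not the study's data): both groups share one structure.
rng = np.random.default_rng(1)
P = np.zeros((24, 4))
P[:12, 0] = 1.0
P[12:, 1] = 1.0
P[:6, 2] = 1.0
P[6:18, 3] = 1.0
base = P @ np.diag([0.5, 0.4, 0.2, 0.1]) @ P.T
blind = [base + rng.normal(0.0, 0.2, size=(24, 24)) for _ in range(16)]
sighted = [base + rng.normal(0.0, 0.2, size=(24, 24)) for _ in range(16)]
observed, z, p = permutation_z(blind, sighted, P)
```

Because the simulated groups are drawn from the same structure, large |z| values here would be chance; in the real data only the red things and green things clusters cleared the threshold.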

We conclude that these differences reflect how color information is used by blind and sighted participants in making similarity judgments on FV: sighted participants sometimes sort FV by color, but blind participants do not. We now report further evidence supporting this claim.

Self-Reported Strategies.

The first source of corroborating evidence that sighted but not blind participants used color information in making triad decisions comes from postexperiment self-reports of the strategies that participants used during the triad task. Whereas 14 of 16 sighted participants reported using color when making triad decisions for FV, only 3 of 16 blind participants reported doing so. This was by far the largest discrepancy in self-reported strategies between the two groups (Table 4). Notably, color was the second most frequently reported strategy among sighted participants, after fruit vs. vegetable. No participants, blind or sighted, reported using color as a criterion for making judgments about HHI.

Table 4.

Subject-reported strategies for odd-man-out triad task

| Strategies reported | Blind participants reporting strategy, no. | Sighted participants reporting strategy, no. |
|---|---|---|
| HHI task | | |
| Purpose | 9 | 11 |
| Kitchen/cooking | 10 | 8 |
| Used electricity | 9 | 7 |
| Location in house | 7 | 7 |
| Personal hygiene | 5 | 7 |
| Manipulated temp. | 3 | 7 |
| Size | 3 | 7 |
| Appliances | 7 | 2 |
| How it is used | 3 | 5 |
| Whether sharp | 6 | 1 |
| Appearance | 0 | 5 |
| FV task | | |
| Fruit vs. vegetable | 13 | 16 |
| Color | 3 | 14 |
| Size | 7 | 9 |
| Shape | 8 | 7 |
| How it grows | 4 | 6 |
| Eaten together | 7 | 4 |
| Citrus | 5 | 6 |
| Taste | 3 | 4 |

Comparison with Color-Based Similarities.

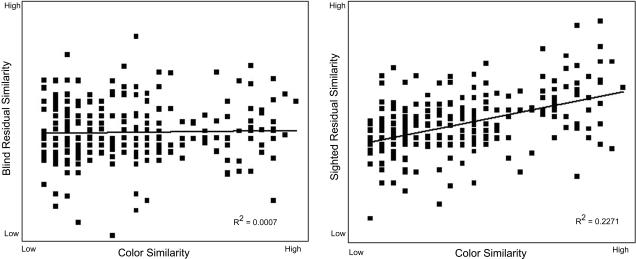

To better estimate the amount of variance in the similarity data attributable to color, and to corroborate the outcome of the INDCLUS analysis, we compared the blind and sighted subjects' similarity data with independently derived color-based similarities for each set of stimuli. These additional similarities were produced by 16 sighted participants (eight per stimulus category) who had not participated previously and who performed the triad task with the explicit instruction to make decisions “based solely on the colors of the items” in the stimulus set. (Blind participants were not given these instructions.) The resulting color-based similarities were then used as a regressor on the residual variance in the FV data after partialling out the variance accounted for by the INDCLUS clusters, excluding the red and green clusters. (The multiple linear regression that included the INDCLUS clusters minus red and green as predictor variables accounted for 91.2% of the total variance in the sighted data and 90.4% of the total variance in the blind data.) The color similarities accounted for 23% (R² = 0.23; see Fig. 1) of the residual variance in the sighted data and none of the residual variance in the blind data (R² = 0.0007). A parallel analysis was performed for the HHI data; however, multiple linear regression revealed that the color similarities for HHI were collinear with four of the binary INDCLUS variables (appliances, implements, kitchen items, and kitchen/metal implements), which complicates the interpretation of the result. This analysis revealed no differences between the sighted and blind subjects' similarities, and there was no indication that either group used color in making judgments about HHI.
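A minimal sketch of this two-step regression, assuming similarities are stored as square NumPy arrays and the INDCLUS clusters other than red and green are given as a 0/1 membership matrix. The design matrix follows the additive clustering model (one shared-membership column per cluster plus a constant); the function name is hypothetical, and the usage lines use simulated data. Because the second step is a simple regression with an intercept, its R² equals the squared Pearson correlation between the color similarities and the residuals.

```python
import numpy as np


def residual_color_r2(sim, P_no_color, color_sim):
    """Partial out the non-color clusters from `sim`, then return the R^2 of the
    residuals regressed on the color-based similarities (simple regression, so R^2 = r^2)."""
    i, j = np.triu_indices(sim.shape[0], k=1)                 # one entry per item pair
    X = np.column_stack([P_no_color[i] * P_no_color[j], np.ones(len(i))])
    y = sim[i, j]
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta                                  # similarity left unexplained by the clusters
    r = np.corrcoef(color_sim[i, j], residuals)[0, 1]
    return float(r ** 2)


# Usage with simulated data (not the study's data).
rng = np.random.default_rng(2)
P = np.zeros((24, 3))
P[:12, 0] = 1.0
P[12:, 1] = 1.0
P[6:18, 2] = 1.0
clusters_only = P @ np.diag([0.5, 0.3, 0.2]) @ P.T
color = rng.random((24, 24))
noise = rng.normal(0.0, 0.1, size=(24, 24))
r2_with_color = residual_color_r2(clusters_only + color + noise, P, color)  # large
r2_without_color = residual_color_r2(clusters_only + noise, P, color)      # near zero
```

The two simulated cases mirror the reported contrast: a group whose judgments contain a color component leaves residuals that track the color similarities, whereas a group without one does not.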

Fig. 1.

Scatter diagrams of the fit between the pair-wise similarities produced by the color-instructed group (x axes) and the residual similarity in the blind (Left) and sighted (Right) similarities for FV after partialling out VAF by the INDCLUS model minus the “red” and “green” clusters. The color similarities accounted for 23% of the residual variance in the sighted data and none in the blind data. Each point represents one pair of items, e.g., pineapple–banana.

Color Was Not Used Even by Blind Participants with Good Explicit Color Knowledge.

The lack of an effect of color in the similarity scores of the blind participants would be less interesting if our blind participants simply did not know the colors of the items in the stimulus set. We therefore repeated the color-based similarity analysis for FV on a subset of the data for which our blind participants had good explicit knowledge of the items' colors. We excluded blind participants who performed poorly on an explicit color knowledge test administered in a follow-up interview, and we also excluded items for which accuracy across subjects was poor. Four of the 16 blind participants were unavailable for the follow-up interview, and their data were excluded from the analysis. The 12 remaining participants were asked to provide the colors commonly associated with each stimulus item in the study. The task was straightforward: the experimenter said the name of the test item (e.g., “apple”), and the subject responded by saying the color most likely associated with the item (e.g., “red”). We calculated the accuracy across participants for naming the correct color for each of the FV stimuli. Participants' answers were scored as correct (1.0) if they produced the canonical color associated with the item (e.g., red for apple), incorrect (0.0) if they produced an aberrant color (e.g., purple for apple), and with an intermediate value (0.5) if they produced an acceptable but noncanonical color (e.g., green for apple)‖. These scores were averaged across participants, and items with an average accuracy of <0.67 were excluded from further analysis. The excluded items (with their accuracy scores) were APRICOT (0.42), AVOCADO (0.17), CANTALOUPE (0.0), CARROT (0.08), CUCUMBER (0.58), GRAPEFRUIT (0.29), PINEAPPLE (0.33), PUMPKIN (0.25), RADISH (0.50), and YAM (0.33). After removing these items, we excluded individual participants whose accuracy on the remaining 14 items was <0.67. This resulted in the exclusion of two participants, leaving 10 in the final analysis. The remaining 14 items were APPLE (0.95), ASPARAGUS (0.90), BANANA (0.75), BEET (0.90), BROCCOLI (0.90), CHERRY (1.0), CORN (0.80), LEMON (0.85), LIME (0.80), OLIVE (1.0), SQUASH (0.80), STRAWBERRY (0.90), TANGERINE (0.70), and TOMATO (0.95). The overall mean accuracy was 87% correct; the modal accuracy for participants was 86% correct (min = 71%; max = 100%).
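The two-stage exclusion just described (items first, then participants on the retained items, each at a 0.67 threshold) can be sketched as follows, assuming scores are arranged in a participants × items array coded 1.0, 0.5, or 0.0 as above. The function name is hypothetical; the final lines only recompute the reported 87% mean from the 14 item accuracies listed above.

```python
import numpy as np


def exclusion_masks(acc: np.ndarray, threshold: float = 0.67):
    """acc: (n_participants, n_items) scores of 1.0 (canonical), 0.5 (acceptable), 0.0 (aberrant).

    Returns boolean masks (keep_items, keep_participants).
    """
    keep_items = acc.mean(axis=0) >= threshold                        # step 1: item accuracy across participants
    keep_participants = acc[:, keep_items].mean(axis=1) >= threshold  # step 2: participant accuracy on kept items
    return keep_items, keep_participants


# Item accuracies for the 14 retained items, as reported in the text.
retained = {"APPLE": 0.95, "ASPARAGUS": 0.90, "BANANA": 0.75, "BEET": 0.90,
            "BROCCOLI": 0.90, "CHERRY": 1.0, "CORN": 0.80, "LEMON": 0.85,
            "LIME": 0.80, "OLIVE": 1.0, "SQUASH": 0.80, "STRAWBERRY": 0.90,
            "TANGERINE": 0.70, "TOMATO": 0.95}
print(f"{np.mean(list(retained.values())):.0%}")  # prints: 87%
```

Ordering matters: participant accuracy is computed only over items that survive step 1, so a participant is not penalized for items that most blind participants found difficult.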

Similarity scores were recalculated using data from these 10 participants and only those triads composed of the 14 remaining items. For comparison, the sighted similarities were recalculated using data from 10 corresponding sighted participants and only the same 14 items. After accounting for the INDCLUS clusters minus red and green, the color similarities accounted for 16% of the residual variance in the sighted data and a negligible amount (R² = 0.012) in the blind data, a pattern consistent with the result reported above.

Summary and Conclusions.

In summary, we have demonstrated that color was a significant component in the structure of implicit similarity for FV for sighted participants but not for blind participants, and this pattern remained even when the analysis was restricted to blind participants who had good explicit color knowledge of the stimulus items. There was also no evidence that either subject group used color knowledge in making decisions about HHI, nor was there any indication of qualitative differences between blind and sighted subjects' judgments on HHI. These results are in line with claims that the category of FV is unique in part because color attributes are weighted more heavily, in terms of salience and diagnosticity, for this category than for other categories such as HHI. Silver may be a very reliable color for utensils, perhaps as reliable as redness for apples; yet color has minimal conceptual status in the case of utensils. Here, we have two visible categories with fairly reliable color features, and yet color is a factor for the sighted in one category and not the other; as a corollary, the similarity spaces of blind and sighted participants differ more in the one category than in the other. These findings thus represent a category-specific difference in implicit similarity that emerges from the interaction of lifelong visual experience (or lack thereof) with the differential diagnosticity of color information across object categories. How this difference may be reflected in the neural structure of semantic memory in the blind remains a question for future research (for a recent review of plasticity of function in the blind, see ref. 42).

Our results do not indicate that there is no appreciation of concepts such as color in the absence of visual experience. However, they reveal a fundamental difference in the status of color knowledge between the sighted and the blind, and they show how this difference affects the representation of higher-order concepts like FV, inasmuch as the structure of implicit similarity can be construed to reflect conceptual representation. In sighted individuals, knowledge of the colors of things is an immediate, possibly automatic, form of knowledge, at least for categories like FV; we take as evidence for this the fact that sighted participants used color spontaneously in the triad task. In contrast, in blind individuals, color knowledge is merely stipulated. Even if strawberries are known to be red, nothing follows in terms of the usefulness of this fact in reasoning about strawberries. We assume that color knowledge in the blind comes primarily from how color terms are used by the sighted community in everyday linguistic reference and in common expressions (one is more likely to hear “reddish brown” than “reddish green”). Although such knowledge may accurately reflect some of the better-known (and more widely discussed) color associations among objects, as our data from the explicit color knowledge test show, there are limits to its usefulness and generality, as suggested by the fact that color did not play a role for blind participants in organizing similarity relations among the concepts represented in our stimuli.

Finally, we hope that emphasizing the differences between our subject populations does not obscure or diminish the relevant and interesting similarities. Perhaps the primary fact to notice is the equivalence in the organization of these concepts in the blind and the sighted, which is remarkable in light of the consistent differences in their perceptual access to them. The difference arises only where color (a dimension open to “one sense only,” in Locke's words) is relevantly involved, and even here it is small, accounting for only ≈2% of the overall variance, whereas >90% of the variance was shared between blind and sighted for both FV and HHI. This holds despite what must be pervasive differences in “the world” as confronted by blind and sighted populations.

Materials and Methods

Participants.

Sixteen congenitally blind individuals (seven males, nine females; age range: 27–61 years, mean = 49 years) from the Philadelphia area participated in the study. Eleven reported being totally blind (no light perception); of these, three reported having had some light perception as a child, which was subsequently lost. The other five reported having some light perception (light and shadow) but no perception of form or color. For the majority of participants (11), the cause of blindness was retinopathy of prematurity. Other causes were chorioretinitis, congenital cataracts, congenital optic atrophy, hereditary glaucoma, and microphthalmia. Six participants held master's degrees, five held bachelor's degrees, and five were high school graduates. Blind participants were paid $15 an hour, and each took part in two testing sessions, one for FV and one for HHI. A total of 48 sighted participants recruited from the student population of the University of Pennsylvania participated in various control conditions. Thirty-two sighted subjects participated in the naïve version of the triad task (16 in the FV condition and 16 in the HHI condition), and 16 sighted subjects participated in the color-instruction version of the triad task (eight in the FV condition and eight in the HHI condition). Sighted participants were paid $10 per hour or were given class credit for a psychology course.

Stimuli.

The stimuli consisted of the names of 24 common FV and 24 HHI. Fruits and vegetables were included in roughly equal numbers and were chosen so that there were six items from each of the color categories red, yellow, orange, and green, which cross-cut the fruit–vegetable distinction. The HHI consisted of half appliances and half implements (hand-held devices) of various types. (See Tables 2 and 3 for stimuli.) The names of all items were recorded as digital sound files and presented to participants as triads, using a computer program generated with E-Prime software (Psychology Software Tools, Pittsburgh, PA). All unique triples (not controlling for order) were generated for both sets of stimuli (FV and HHI), yielding 2,024 (24 choose 3) triples for each. Although only one permutation of each unique triad was used, across all of the triads each word appeared a nearly equal number of times in the first, second, and third positions. During a single experimental session, participants made decisions (see below) on a randomized eighth of the triads (253), which took ≈1 hour to complete. We define a super-subject as a group of eight subjects who together completed the entire set of 2,024 triads. FV and HHI triads were tested in separate experimental sessions: during a single session, participants made decisions about only one of the categories (FV or HHI), and there were no mixed trials (e.g., no trials like “apple, strawberry, toothbrush”).
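The counting behind this design can be checked with a short sketch: 24 items give 2,024 unordered triads, which divide into eight sessions of 253, and every item pair occurs in 22 triads (once with each of the other 22 items). The placeholder item names, the seeded shuffle, and the function names are illustrative assumptions; the counterbalancing of serial positions described above is not reproduced.

```python
import random
from collections import Counter
from itertools import combinations


def make_triads(items):
    """All unordered triples of distinct items (one ordering per triple)."""
    return list(combinations(items, 3))


def split_into_sessions(triads, n_sessions=8, seed=0):
    """Shuffle the triads and cut them into equal-sized session lists."""
    shuffled = triads[:]
    random.Random(seed).shuffle(shuffled)
    size = len(shuffled) // n_sessions
    return [shuffled[k * size:(k + 1) * size] for k in range(n_sessions)]


items = [f"item{k:02d}" for k in range(24)]  # placeholder names for the 24 stimuli
triads = make_triads(items)
sessions = split_into_sessions(triads)
# combinations() preserves input order, so each pair is always counted in the same orientation.
pair_counts = Counter(pair for t in triads for pair in combinations(t, 2))
print(len(triads), len(sessions[0]), set(pair_counts.values()))  # prints: 2024 253 {22}
```

One super-subject (eight participants, one session each) therefore sees every triad exactly once, and every pair 22 times.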

Odd-Man-Out Task.

Participants were instructed in the odd-man-out task, in which they were to choose the odd one out in a triad of words. Participants heard three words in succession and indicated the odd-man-out by pressing a button: if a participant heard “apple, strawberry, banana” and chose “banana” as the odd-man-out, they would press button 3, corresponding to the serial position of the odd word. Participants were instructed to base their decisions on the meanings of the words, not on how the words sounded or how they were spelled. All blind participants and 32 sighted participants (16 for FV and 16 for HHI) were given these naïve instructions. Sixteen additional sighted participants (eight for FV and eight for HHI) were instructed to base their decisions solely on the colors of the items referred to by the words. Each sighted participant took part in only one experimental session. Because the blind participants took part in two experimental sessions, session order was counterbalanced: half heard FV in their first session and HHI in their second, and the other half heard the reverse order.

Computing Similarity Scores.

Pair-wise similarity scores were derived from the odd-man-out task as follows: each time an odd-man-out was chosen, the similarity score for the remaining pair in the triad was increased by one. For example, if a participant heard “apple, strawberry, banana” and chose “banana” as the odd-man-out, the similarity score for the apple–strawberry pair was increased by one. Each pair of items occurs in 22 of the 2,024 triads (once with each of the other 22 items), and each group of 16 participants (two super-subjects) completed the full set of triads twice, so raw similarity scores ranged from 0 to 44, where 44 is the total number of times a single pair was presented to the 16 participants. Higher scores therefore indicate greater similarity for a pair of items.
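
The scoring rule can be expressed as a short Python function. This is a sketch under stated assumptions: each trial is represented as a hypothetical `(triad, button)` pair, where `button` is the serial position (1, 2, or 3) of the word chosen as the odd-man-out, scores are accumulated over unordered pairs, and the item labels in the bound check are placeholders.

```python
from collections import Counter
from itertools import combinations


def similarity_scores(trials):
    """Tally pair-wise similarity from odd-man-out choices.

    `trials` is an iterable of (triad, button) pairs: `triad` holds three
    words in presentation order and `button` (1, 2, or 3) is the serial
    position of the word chosen as the odd-man-out. Each trial adds one to
    the score of the pair left over once the odd-man-out is removed.
    """
    scores = Counter()
    for triad, button in trials:
        if button not in (1, 2, 3):
            raise ValueError(f"invalid button press: {button!r}")
        remaining = [w for pos, w in enumerate(triad, start=1) if pos != button]
        scores[frozenset(remaining)] += 1  # unordered pair as the key
    return scores


# The example from the text: "banana" (button 3) is the odd-man-out.
example = similarity_scores([(("apple", "strawberry", "banana"), 3)])
print(example[frozenset({"apple", "strawberry"})])  # 1

# Upper bound: a hypothetical responder who always keeps the pair
# (item00, item01) whenever it appears. Each pair occurs in 22 of the
# 2,024 triads, so two super-subjects (the full set twice) yield 44.
items = [f"item{i:02d}" for i in range(24)]
target = {"item00", "item01"}
trials = []
for triad in combinations(items, 3):
    # Discard the first word outside the target pair (every triad has one).
    odd = next(pos for pos, w in enumerate(triad, start=1) if w not in target)
    trials.append((triad, odd))
scores = similarity_scores(trials * 2)
print(scores[frozenset(target)])  # 44
```

Keying the counter on a `frozenset` makes apple–strawberry and strawberry–apple the same pair, which matches the order-free similarity scores described above.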

Self-Report of Strategies for Triad Task.

After completing the triad task, participants were asked to list all of the criteria they used in making their decisions. For example, participants reported making their decisions based on fruit vs. vegetable, color, shape, etc.

Test of Explicit Color Knowledge.

After completing both triad sessions, blind participants were asked to report the colors associated with all of the test items presented as a single randomized list.

Acknowledgments

We thank Henry Gleitman, John Trueswell, and the members of the CHEESE seminar and the S.L.T.-S. laboratory for suggestions. We thank Barbara Landau and Edward Smith for their helpful comments in reviewing the manuscript, Frank Havemann for his help with programming and data collection, the Associated Services for the Blind of Philadelphia, and the members of the blind community who participated in the study for making it possible. This study was supported by National Institutes of Health Grant R01 MH67008 (to S.L.T.-S.), the University of Pennsylvania Research Foundation, and University of Pennsylvania School of Arts and Sciences Dissertation Fellowship (to A.C.C.).

Abbreviations

- FV: fruits and vegetables
- HHI: household items
- VAF: variance accounted for

Footnotes

The authors declare no conflict of interest.

There were four super-subjects per stimulus category, two blind and two sighted. For further explanation of super-subject, see Materials and Methods.

Where possible, these scores were validated against the feature norms made available by Ken McRae (30). In deciding how to score “green” for TOMATO, for example, we assigned 0.5 because green appears on the list for TOMATO with a frequency of 12 (meaning that 12 of 30 subjects produced the feature), whereas red has a frequency of 28. In the case of OLIVE, we scored both green and black as correct, because they appear on the McRae list for OLIVE with nearly equal frequency (27 and 26, respectively). Such validation was not always possible, however, because not all of our stimuli are represented in the McRae norms.

References

- 1. Locke J. An Essay Concerning Human Understanding. Nidditch PH, editor. Oxford: Oxford Univ Press; 1979.

- 2. Hume D. A Treatise of Human Nature. Baltimore: Pelican Books; 1969.

- 3. Landau B, Gleitman LR. Language and Experience: Evidence from the Blind Child. Cambridge: Harvard Univ Press; 1985.

- 4. Marmor GS. J Exp Child Psychol. 1978;25:267–278. doi: 10.1016/0022-0965(78)90082-6.

- 5. Newton I. Opticks, Book 3. London: Smith and Walford; 1704.

- 6. Izmailov CA, Sokolov EN. Psychol Sci. 1992;3(2):105–110.

- 7. Shepard RN, Cooper LA. Psychol Sci. 1992;3(2):97–104.

- 8. Warrington EK, Shallice T. Brain. 1984;107:829–854. doi: 10.1093/brain/107.3.829.

- 9. Farah MJ, McClelland JL. J Exp Psychol Gen. 1991;120:339–357.

- 10. Warrington EK, McCarthy R. Brain. 1983;106:859–878. doi: 10.1093/brain/106.4.859.

- 11. Warrington EK, McCarthy R. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- 12. Silveri MC, Gainotti G. Cognit Neuropsychol. 1988;5:677–709.

- 13. Sacchett C, Humphreys GW. Cognit Neuropsychol. 1992;9:73–86.

- 14. Sheridan J, Humphreys GW. Cognit Neuropsychol. 1993;10:143–184.

- 15. Caramazza A, Shelton JR. J Cognit Neurosci. 1998;10:1–34. doi: 10.1162/089892998563752.

- 16. Crutch SJ, Warrington EK. Cognit Neuropsychol. 2003;20:355–372. doi: 10.1080/02643290244000220.

- 17. Saffran EM, Schwartz MF. In: Attention and Performance XV: Conscious and Nonconscious Information Processing. Umilta C, Moscovitch M, editors. Cambridge, MA: MIT Press; 1994. pp. 507–536.

- 18. Forde EME, Humphreys GW. Aphasiology. 1999;13:169–193.

- 19. Capitani E, Laiacona M, Mahon B, Caramazza A. Cognit Neuropsychol. 2003;20:213–261. doi: 10.1080/02643290244000266.

- 20. Perani D, Cappa SF, Bettinardi V, Bressi S, Gorno-Tempini M, Matarrese M, Fazio F. NeuroReport. 1995;6:1637–1641. doi: 10.1097/00001756-199508000-00012.

- 21. Spitzer M, Kwong KK, Kennedy W, Rosen BR, Belliveau JW. NeuroReport. 1995;6:2109–2112. doi: 10.1097/00001756-199511000-00003.

- 22. Damasio H, Grabowski TJ, Tranel D, Hichwa RD, Damasio AR. Nature. 1996;380:499–505. doi: 10.1038/380499a0.

- 23. Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- 24. Mummery CJ, Patterson K, Hodges JR, Wise RJ. Proc R Soc London Ser B. 1996;263:989–995. doi: 10.1098/rspb.1996.0146.

- 25. Grabowski TJ, Damasio H, Damasio AR. NeuroImage. 1998;7:232–243. doi: 10.1006/nimg.1998.0324.

- 26. Spitzer M, Kischka U, Guckel F, Bellemann ME, Kammer T, Seyyedi S, Weisbrod M, Schwartz A, Brix G. Cognit Brain Res. 1998;6:309–319. doi: 10.1016/s0926-6410(97)00020-7.

- 27. Perani D, Schnur T, Tettamanti M, Gorno-Tempini M, Cappa SF, Fazio F. Neuropsychologia. 1999;37:293–306. doi: 10.1016/s0028-3932(98)00073-6.

- 28. Thompson-Schill SL, Aguirre GK, D'Esposito M, Farah MJ. Neuropsychologia. 1999;37:671–676. doi: 10.1016/s0028-3932(98)00126-2.

- 29. Thompson-Schill SL, Kan IP, Oliver RT. In: The Handbook of Functional Neuroimaging of Cognition. Cabeza R, Kingstone A, editors. Cambridge, MA: MIT Press; 2006. pp. 149–190.

- 30. Cree GS, McRae K. J Exp Psychol Gen. 2003;132(2):163–201. doi: 10.1037/0096-3445.132.2.163.

- 31. Tversky A. Psychol Rev. 1977;84:327–352.

- 32. Smith EE, Osherson DN, Rips LJ, Keane M. Cognit Sci. 1988;12:485–527.

- 33. McRae K, Cree GS. In: Category-Specificity in Brain and Mind. Forde EME, Humphreys GW, editors. East Sussex, England: Psychology Press; 2002.

- 34. Gleitman LR, Gleitman H, Miller C, Ostrin R. Cognition. 1996;58:321–376. doi: 10.1016/0010-0277(95)00686-9.

- 35. Romney AK, D'Andrade RG. Am Anthropol. 1964;66:146–170.

- 36. Fisher C, Gleitman H, Gleitman LR. Cognit Psychol. 1991;23:331–392. doi: 10.1016/0010-0285(91)90013-e.

- 37. Carroll JD, Arabie P. Psychometrika. 1983;48(2):157–169.

- 38. Shepard RN, Arabie P. Psychol Rev. 1979;86:87–123.

- 39. Kruskal JB, Wish M. Multidimensional Scaling. Beverly Hills, CA: Sage Publications; 1978.

- 40. Carroll JD, Chang JJ. Psychometrika. 1970;35:283–319.

- 41. Pruzansky S, Tversky A, Carroll JD. Psychometrika. 1982;47:3–24.

- 42. Amedi A, Merabet LB, Bermpohl F, Pascual-Leone A. Curr Dir Psychol Sci. 2005;14:306–311.