Abstract

The Network for the Improvement of Addiction Treatment (NIATx) teaches participating treatment centers to use process improvement strategies. A cross-site evaluation monitored impacts on days between first contact and first treatment and percent of patients who started treatment and completed two, three and four units of care (i.e., one outpatient session, one day of intensive outpatient care, and one week of residential treatment). The analysis included 13 agencies that began participation in August 2003, submitted 10 to 15 months of data, and attempted improvements in outpatient (n = 7), intensive outpatient (n = 4) or residential treatment services (n = 4) (two agencies provided data for two levels of care). Days to treatment declined 37% (from 19.6 to 12.4 days) across levels of care; the change was significant overall and for outpatient and intensive outpatient services. Significant overall improvement in retention in care was observed for the second unit of care (72% to 85%; 18% increase) and the third unit of care (62% to 73%; 17% increase); when level of care was assessed, a significant gain was found only for intensive outpatient services. Small incremental changes in treatment processes can lead to significant reductions in days to treatment and consistent gains in retention.

Keywords: quality improvement, addiction treatment, access to care, retention in care

1. Introduction

Isolated efforts, small sample sizes and interventions with limited duration hamper research on quality improvement for treatment of alcohol and drug disorders. Practitioners struggle to implement and sustain quality improvements and investigators grapple with the challenge of measuring and monitoring quality.

The Crossing the Quality Chasm reports from the Institute of Medicine challenged health care systems in the United States to monitor and reduce errors in medical care in order to improve health outcomes; they recommended that health care systems prioritize patient needs, implement evidence-based decision making, and promote efforts to improve outcomes and reduce inefficiency (Institute of Medicine, 2000; 2001). The strategic emphasis on effective, efficient, consumer-oriented care draws heavily on continuous improvement models initially developed to reduce variation in manufacturing processes (e.g., Deming, 1986; Imai, 1986; Juran, 1988) and extended to enhance management of many business processes and relationships (Barney & McCarty, 2003; Eckes, 2001). These approaches reflect the adoption and evolution of the quality improvement concept—continuous improvement in product and process quality by reducing variation and error and meeting customer needs. Applications to business management and health care processes document the potential to enhance operational efficiency (Kaynak, 2003) and strengthen patient outcomes (O’Connor et al., 1996; Pearson et al., 2005).

A 2006 Institute of Medicine report concludes that the Crossing the Quality Chasm framework for improving health care processes is applicable to the treatment of alcohol, drug and mental health disorders and recommends efforts to a) promote patient-centered care, b) foster the adoption of evidence-based practices, c) develop and use process and outcome measures to enhance quality of care, and d) mandate the use of quality improvement measures (Institute of Medicine, 2006). Attention to errors in the delivery of treatment could foster improvements in the effectiveness of services for alcohol and drug dependence.

The challenge for behavioral health care is to define error in a way that leads to improved patient outcomes. Treatment services with high rates of missed appointments, for example, might consider missed appointments as errors in “engagement” and “retention in care” processes and seek to improve intake processes and reduce the “errors”. A common programmatic response to excess demand is to construct wait lists. In practice, however, wait lists lead to high rates of missed appointments, poor use of limited staff time and patient frustration. An analysis of appointment delays in a mental health clinic found that longer delays increased missed appointments – 23% for next day appointments versus 42% for appointments scheduled 7 days away; each day of delay increased the odds of a cancellation or no-show 12% (Gallucci, Swartz, & Hackerman, 2005). A better solution may be to review system processes and revise care delivery, eliminating steps and processes that contribute to retention errors (e.g., waits for intake and subsequent appointments). Instead of attributing low retention rates to patient motivation, the program modifies intake and treatment processes so that patients enter and remain in care despite ambivalence.

Efforts to apply quality improvement strategies to substance abuse treatment in the U.S. have yet to achieve substantive impact (Fishbein & McCarty, 1997; Institute of Medicine, 1997). The Washington Circle developed measures to assess initiation and engagement in alcohol and drug treatment (Garnick et al., 2002) and the measures are being used in the Health Plan Employer Data and Information Set (HEDIS) to monitor health plan performance (National Committee for Quality Assurance, 2006). Similarly, the Center for Substance Abuse Treatment (CSAT) is promoting the use of state data systems for performance management (Brolin, Seaver, & Nalty, 2005). Demonstrations of improved system performance, however, have yet to be published. The Change Book was developed to facilitate organizational change and the adoption of evidence-based practices (Addiction Technology Transfer Centers, 2000; 2004) and tools have been prepared to assess organizational readiness to change and to support implementation of new clinical practices (Lehman, Greener, & Simpson, 2002; Simpson, 2002). Observers, however, suggest that the addiction treatment infrastructure may be too weak to respond to demands for quality care (McLellan, Carise, & Kleber, 2003).

There are obvious opportunities to apply process control and process improvement technologies to addiction treatment services. Prior efforts at quality improvement have tended to emphasize quality assurance (inspection of individual case records to meet documentation standards) and counselor skills (manualizing interventions to control inputs and foster replicability). System redesign can be more comprehensive; it facilitates process changes and removes barriers inhibiting more effective care.

The Robert Wood Johnson Foundation (RWJF) and CSAT collaborated to test the application of process improvement strategies to treatment services for alcohol and drug disorders and sponsored the Network for the Improvement of Addiction Treatment (NIATx). NIATx is a learning community of alcohol and drug abuse treatment programs in the U.S. Participating programs learn to use process improvement strategies and make organizational changes that reduce days to first treatment, increase admissions, enhance retention in care, and reduce no-show rates. This paper presents quantitative data from the cross-site evaluation of the first 18 months of NIATx operation.

Process improvement emphasizes change in organizational processes to enhance customer services. NIATx uses a Plan-Do-Study-Act (PDSA) cycle to identify the problems and generate solutions (Plan), implement new processes (Do), measure and assess the outcomes (Study), and institutionalize the change or make additional changes (Act) (Gitlow et al., 1989; Shewhart, 1939). Five principles guide NIATx’s selection of problems and the implementation of change: 1) understand and involve the customer, 2) fix key problems (the ones that the Chief Executive worries about), 3) pick a powerful change leader, 4) get ideas from outside the organization, and 5) use rapid cycle testing (Gustafson & Hundt, 1995). Programs conduct a “walk-through” to gain customer insights and involve customers through focus groups, satisfaction ratings and advisory boards – agency leadership experiences the patient intake process and gains insight into needed changes in organizational procedures. Key problems are often associated with repetitive and burdensome intake procedures including paperwork, impersonal client interactions, and an inability to schedule intakes in a timely manner. Change leaders lead time-limited change cycles and must have the authority and skill to move the cycle from the beginning to the end. Ideas from transportation, fast-food restaurants, and manufacturing help NIATx members see new ways to approach persistent problems.

NIATx emphasizes rapid cycles. Changes are piloted with limited staff and patients to assess feasibility and initial effects. The pilots allow brief tests and frequent changes until strategies that work are identified or the change cycle is abandoned. Participating agencies strive to remove inefficiencies that delay admissions and contribute to missed appointments and early dropout. Successful strategies are adopted and sustained and cumulatively lead to larger scale improvements in organizational functioning.

RWJF made 10 awards to nonprofit community treatment programs in August 2003 and 15 more awards in January 2005 (each award is for 18 months; one additional program participates without RWJF resources); CSAT announced 13 grantees in September 2003 for 36 months of support. In addition, five states (Delaware, Iowa, North Carolina, Oklahoma, and Texas) began participating in January 2005. The Center for Health Systems Research and Analysis at the University of Wisconsin directs project management and implementation; the Center for Substance Abuse Research and Policy at Oregon Health & Science University coordinates evaluation activities. The current analysis is limited to the 23 agencies funded in 2003.

2. Methods

2.1 Selection of NIATx Members

The Robert Wood Johnson Foundation’s application process began with one-day introductions to process improvement in Chicago and Portland, Oregon (January 2003); over 800 individuals registered. The symposium modeled features for efficient admission (self-registration and meeting materials at each seat) with customer attention (greeters at the hotel entrance and meeting rooms). The characteristics of effective change leaders were described and the need to reduce waiting was discussed. The session ended with a discussion of the call for proposals.

Eligibility was limited to nonprofit substance abuse treatment organizations with at least 100 admissions per year and the ability to control a continuum of care either within the corporation or through networking arrangements. Applicant organizations submitted 327 six-page letters of intent in March 2003. The brief applications outlined the agency’s view of process improvement, expected accomplishments, leadership capacity and change leader qualifications. Applicants conducted a “walk-through” exercise and described the results. A review of the letters of intent led to invitations to 46 applicants to submit full proposals describing the agency support for process improvement, strategies for implementation, and plans for dissemination. Applicants discussed how they made a simple change based on their walk-through exercise, described the change implementation, noted barriers, and outlined the data that provided evidence of change. Sixteen applicants received site visits and 10 awards were made in August 2003 ($150,000 per award over an 18-month period for staff time, travel to national meetings, upgrades to information systems, and weekly calls with process improvement coaches). Selection criteria included potential community impact, evidence of a leadership commitment, ability to move patients between levels of care, systems to collect and report data, suitable financial health, perceived strength of the project team, and a willingness to participate in a learning community.

The Center for Substance Abuse Treatment issued a separate request for applications in February 2003. Eligibility was limited to nonprofit corporations serving at least 100 individuals each year. Applicants documented their capacity to collect and analyze data on access and retention and described two evidence-based practices they anticipated using to facilitate improvements in access and early retention. Applications were received from 88 programs and 13 awards were made in September 2003. The three-year awards were for approximately $300,000 and supported staff time, travel to national meetings, process improvement coaches, information infrastructure, training, evaluation and dissemination.

Differences between the two programs may promote variation in project impacts. CSAT grantees were relatively uninformed about process improvement when the project began; RWJF grantees piloted process improvements for their application. CSAT awards were for 36 months (versus 18 months for RWJF) and allow an assessment of the value of longer versus shorter project periods. CSAT awards included local evaluation staff. Financial stability was not a selection variable for CSAT awards. All sites received similar coaching and training and participated in annual learning sessions.

2.2 Process Improvement Coaches and Change Projects

Each site received weekly technical assistance telephone calls and quarterly site visits from a Process Improvement Coach – individuals trained in process improvement (most had little experience with alcohol and drug treatment processes); coaches helped sites learn and apply the PDSA cycle. Restricted access areas on the project website facilitated communication among grantees and collected core evaluation measures. Programs learned to implement rapid cycle improvements, monitor impacts, and modify the intervention until achieving access and retention goals. NIATx emphasizes time to treatment and early retention because many programs have wait lists and high initial dropout rates. Successes in these areas are expected to help agencies learn process improvement and to generalize its application.

Change teams designed Change Projects to improve one NIATx Aim (e.g., days to treatment) for one level of care at one program site for a specific patient population (e.g., adolescents, court-mandated individuals). A PDSA cycle tested the impact of a small change, and multiple change cycles within a Change Project could be conducted successively to improve processes related to the targeted aim. Sites submitted Change Project Reports to the NIATx website monthly. The reports provide an indication of the types of PDSA change cycles that were implemented.

2.3 National Evaluation Design

The evaluation employed a mixed-method (qualitative and quantitative) design to assess change over time. Monthly client-level data on admissions and services monitored and assessed impacts on overall clinic operations. Programs provided data on all patients assessed for a specified level of care. Grantees began baseline data collection in October 2003. The evaluation team also conducted annual site visits and interviews with agency staff (e.g., executive sponsor, change leader, members of the change team and other staff) and quarterly telephone interviews with change leaders. Data systems were modified in January 2005 for a new cohort of RWJF awards. The current report, therefore, is limited to data collected between October 2003 and December 2004 with the first 23 members and examines the quantitative data. The Institutional Review Board at Oregon Health & Science University reviewed and approved evaluation procedures.

An “intent to treat” design tested for the effects of NIATx participation on reduced days to admission and increased retention in care over time. The analysis began with the first month of formal data collection (October 2003). Sites with RWJF support, however, began making changes with the brief letter of intent (8 months prior to the start of data collection); these changes precluded valid pre-change baseline data. A post-only evaluation design, therefore, assessed change using monthly admission data across levels of care. Secondary analyses assessed the influence of level of care.

2.4 Measures

Participating sites extracted patient data from administrative records and completed a spreadsheet of monthly admissions data that recorded date of first contact (i.e., a telephone or in-person request for service), date of the clinical assessment, and dates for the first treatment unit and attendance at the next three units of care. The definition of units of care varied by level of care: one visit for outpatient, one day for intensive outpatient and one week for residential treatment. Descriptive data (e.g., gender, age, race, drug of choice, and criminal justice involvement) were also recorded. Individuals who requested treatment from targeted levels of care were included in the monthly report (agencies with large volumes of assessments were allowed to provide a sample of client data). Client identifiers were deleted and spreadsheets were sent electronically to the national evaluation office. Training was provided on spreadsheet completion; the national evaluation office and coaches from the national program office offered additional clarification and instruction as requested. Site visits and quarterly telephone calls assessed data quality and compliance with reporting expectations. Despite these efforts, programs varied in their compliance with reporting expectations and the quality of the data submitted.
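The access and retention measures described above can be illustrated with a minimal sketch. The record layout, field names, and dates below are hypothetical and are not taken from the evaluation spreadsheets; the sketch only shows how days to treatment and retention at a given unit of care might be derived from the recorded dates.

```python
from datetime import date
from statistics import mean

# Hypothetical de-identified records; field names and dates are illustrative only.
records = [
    {"first_contact": date(2003, 10, 1), "assessment": date(2003, 10, 8),
     "treatment_units": [date(2003, 10, 20), date(2003, 10, 27), date(2003, 11, 3)]},
    {"first_contact": date(2003, 10, 3), "assessment": date(2003, 10, 6),
     "treatment_units": [date(2003, 10, 13)]},
    {"first_contact": date(2003, 10, 10), "assessment": date(2003, 10, 15),
     "treatment_units": []},  # assessed but never started treatment
]

def access_days(rec):
    """Days from first contact and from assessment to the first treatment unit."""
    if not rec["treatment_units"]:
        return None  # no first treatment unit, so no wait can be computed
    first = rec["treatment_units"][0]
    return (first - rec["first_contact"]).days, (first - rec["assessment"]).days

def retention_rate(recs, unit):
    """Percent of patients with a first treatment unit who completed unit number `unit`."""
    started = [r for r in recs if r["treatment_units"]]
    if not started:
        return None
    retained = sum(1 for r in started if len(r["treatment_units"]) >= unit)
    return 100.0 * retained / len(started)

waits = [w for w in map(access_days, records) if w is not None]
mean_contact_to_tx = mean(w[0] for w in waits)  # first contact to first treatment
mean_assess_to_tx = mean(w[1] for w in waits)   # assessment to first treatment
```

In this sketch the retention denominator is limited to patients who received a first treatment unit, which follows the definition of retention used in the monthly admissions data.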

Participants also completed Change Project Reports and submitted the reports on the project website. These reports recorded specific change initiatives and included the change target, the level of care, the location, the population addressed, and results for each PDSA rapid cycle. The reports captured site level results and counted the number and type of change projects. Because change cycles were of limited duration and results were based on small samples, results may not be consistent with the monthly admissions data, which reflect overall program operations within a specified level of care.

2.5 Analyses

Three levels of care (outpatient, intensive outpatient and residential services) were included in the analysis of monthly admissions data if at least 10 months of data were available. The 23 members included one site that did not provide data and one site that did not complete substantive process changes during the study period. Data from methadone and detoxification services were not included because of small numbers (one site each). Six programs did not provide sufficient data (2 outpatient services, 1 intensive outpatient program and 3 residential programs). The analytic sample, therefore, included 13 programs (23 – 2 – 2 – 6) that provided 10 months or more of data. These programs contributed 15 services (7 outpatient services, 4 intensive outpatient programs, and 4 residential services) because two members provided usable data for two levels of care.

The analysis calculated mean monthly access and retention rates for each site (i.e., one data point per site per month per measure). Polynomial regression models tested for trends within each site in the monthly rate data in conjunction with ARIMA (Autoregressive Integrated Moving Average) models that can accommodate potential serial correlations. ARIMA models allow a correlation structure that can vary depending on time lags between observations within a time series and generate more complex temporal dependence patterns than fixed or random effect models (Box, Jenkins & Reinsel, 1994; Reinsel, 2003; Shumway & Stoffer, 2006; Weatherhead et al., 1998). The analysis generated linear or quadratic trend regression models for each monthly rate time series and residuals were assessed for temporal correlations. In most cases, neither the autocorrelation function (ACF) nor the partial autocorrelation function (PACF) was significant. Trend patterns within sites were diverse but, in most instances, neither the serial correlations within a site nor cross correlations in residuals across sites were significant. Because the ACFs and PACFs were generally not significant, monthly rates were treated as independent observations and were averaged across the 15 services providing usable data (each data point was the average of observations from the 15 services). Larger and smaller programs were weighted equally because the primary interest was program change over time and, if weighted by number of observations, larger programs would dominate the analysis. A cubic smoothing spline function was fit to the monthly means for days from first contact to the first treatment, days from assessment to first treatment, and the percent of treated patients retained for two, three and four additional units of care to confirm that the trend patterns were similar to those found with the polynomial regression models. Spline functions and linear regressions with ARIMA were calculated with the R statistical language (R Development Core Team, 2005).
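The trend-fitting and residual screening steps can be sketched as follows. This is an illustration in Python with NumPy rather than the R code used in the evaluation, the monthly series is synthetic, and the function names are hypothetical. It fits a linear or quadratic trend by least squares, computes the overall regression F statistic with its degrees of freedom, and checks the lag-1 autocorrelation of the residuals against the approximate 95% white-noise band of ±1.96/√n. It does not fit an ARIMA model or a smoothing spline.

```python
import numpy as np

def trend_fit(y, degree):
    """Least-squares polynomial trend over month index 0..n-1 with overall F statistic."""
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n, dtype=float)
    coefs = np.polyfit(t, y, degree)        # coefficients, highest power first
    resid = y - np.polyval(coefs, t)
    sse = float(np.sum(resid ** 2))         # residual sum of squares
    sst = float(np.sum((y - y.mean()) ** 2))  # total sum of squares
    df_model, df_resid = degree, n - degree - 1
    f_stat = ((sst - sse) / df_model) / (sse / df_resid)
    return coefs, resid, f_stat, (df_model, df_resid)

def lag1_autocorr(resid):
    """Sample autocorrelation of the residuals at lag 1."""
    r = resid - resid.mean()
    return float(np.sum(r[1:] * r[:-1]) / np.sum(r * r))

# Synthetic 15-month series (assumption: NOT study data), declining 0.5 days/month.
rng = np.random.default_rng(0)
months = np.arange(15)
y = 20.0 - 0.5 * months + rng.normal(0.0, 0.5, size=15)

coefs, resid, f_stat, dfs = trend_fit(y, degree=1)
r1 = lag1_autocorr(resid)
bound = 1.96 / np.sqrt(len(y))              # approximate 95% band for white noise
print(f"slope={coefs[0]:.2f}, F{dfs}={f_stat:.2f}, lag-1 r={r1:.2f}, band=+/-{bound:.2f}")
```

With 15 monthly observations, the linear model has 1 and 13 degrees of freedom and the quadratic model has 2 and 12. A lag-1 autocorrelation inside the band is consistent with treating the monthly rates as independent, although a full assessment would inspect the ACF and PACF at several lags.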

3. Results

Change Project Reports summarized the change cycle efforts and recorded immediate project impacts for the clients treated during the change cycle (typically fewer than 40 individuals over a one-week to one-month period). Monthly admissions data monitored longer-term impacts on access and retention measures.

3.1 Change Project Reports

Agency self-reports (Change Project Reports) summarized change cycles, recorded baseline data, and noted rates of improvement at the end of each change cycle. Strategies to reduce days to treatment included on-demand scheduling and next-day admissions. Other admission improvements included simplification of intake procedures and assessment processes, expanded hours of operation, elimination of redundant paperwork, cross-training, and enhanced telephone responsiveness. Reminder calls, changes in appointment times, staff training to use Motivational Interviewing, and introductions to counselors prior to the first treatment session were among the changes used to enhance retention in care. Programs varied in the number of change projects and change cycles within a change project.

According to the Change Project Reports, 17 of the 23 programs reported 30 different change projects to reduce days to admission. Across levels of care and across interventions, days to admission declined an average of 43% during the change projects. Twenty of 23 members attempted change projects to enhance retention and reported a 25% average improvement (before versus after the change project). Change project results, however, were based on small samples (i.e., fewer than 40 individuals) and were of limited duration (i.e., one to four weeks). Change projects are feasibility demonstrations and do not capture institutionalization of the changes or the scaling up of a change to larger cohorts of admissions.

3.2 Monthly Admissions Data

Monthly admissions data assessed institutionalization, provided a broad assessment of process improvement impacts, and tracked change over time. Time to treatment was monitored using a) the days between the first client request for service (usually by telephone) and a first face to face session and, b) days between the first face to face session and a first treatment session (most agencies used the first face to face session for assessment and intake processes). Both time periods were examined to assure that waits were reduced and not shifted. Retention in care (percent of clients who received a first treatment session and completed two, three, and four sessions of care) was also assessed. Over the 15 month study period and three levels of care, data were available from 6,016 patients (intensive outpatient = 1,417; residential = 1,686; outpatient = 2,913).

Days to treatment

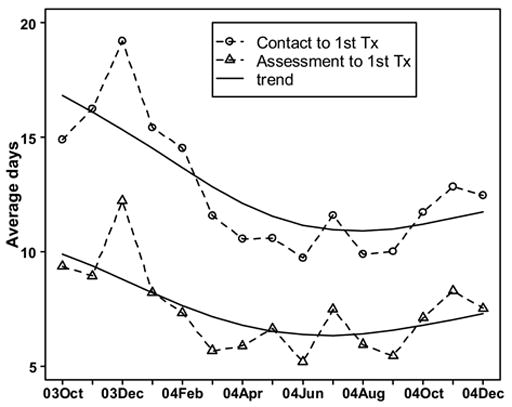

Significant reductions in days to treatment were observed among the programs that attempted changes in residential, intensive outpatient or outpatient care. Table 1 presents summary data and statistical tests. At baseline (October 2003) the mean days to first treatment was 19.6 days; in December 2004 the estimated days to first treatment had declined 37% to 12.4 days. A significant quadratic effect suggested that after achieving initial gains there was erosion back toward the baseline levels. Significant declines (33%) were also observed between assessment and first treatment. See Figure 1 for the trend over time. The smoothing spline function is plotted on top of the unadjusted data points. Improvements varied by level of care.

Table 1.

Changes in days to treatment by level of care

| Level of Care | Improvement (days/month) | F | p-value | Estimated baseline (days) | Estimated end (days) | % change | Model |
|---|---|---|---|---|---|---|---|
| First contact to first treatment | | | | | | | |
| Overall | −0.48 | 11.64 | 0.00 | 19.56 | 12.41 | −37% | Q |
| Outpatient | −0.82 | 30.43 | 0.00 | 32.06 | 19.76 | −38% | Q |
| Intensive Outpatient | −0.28 | 5.97 | 0.03 | 11.67 | 7.40 | −37% | L |
| Residential | −0.24 | 1.34 | 0.28 | 11.48 | 7.86 | −32% | L |
| Assessment to first treatment | | | | | | | |
| Overall | −0.26 | 7.49 | 0.01 | 11.79 | 7.93 | −33% | Q |
| Outpatient | −0.27 | 17.78 | 0.00 | 17.10 | 13.04 | −24% | Q |
| Intensive Outpatient | −0.22 | 3.47 | 0.09 | 7.53 | 4.24 | −44% | L |
| Residential | −0.21 | 1.63 | 0.22 | 7.32 | 4.23 | −42% | L |

In the Model column, Q denotes a quadratic trend model and L a linear trend model. P-values are based on F statistics for regression models with 1 and 13 degrees of freedom for the linear trend model and 2 and 12 degrees of freedom for the quadratic trend model. Baseline was estimated for October 1, 2003; the end date was December 31, 2004.
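As a quick arithmetic check, the % change column can be reproduced from the estimated baseline and end values. The sketch below uses the overall and residential rows of Table 1; the helper name `pct_change` is hypothetical, and the assumption is that the percentages were rounded to the nearest whole number.

```python
def pct_change(baseline, end):
    """Relative change from baseline to end, in percent."""
    return 100.0 * (end - baseline) / baseline

# (row label, estimated baseline, estimated end, % change reported in Table 1)
rows = [
    ("First contact to first treatment, overall", 19.56, 12.41, -37),
    ("First contact to first treatment, residential", 11.48, 7.86, -32),
    ("Assessment to first treatment, overall", 11.79, 7.93, -33),
    ("Assessment to first treatment, residential", 7.32, 4.23, -42),
]

computed = [round(pct_change(b, e)) for _, b, e, _ in rows]
for (label, b, e, reported), value in zip(rows, computed):
    print(f"{label}: {b} -> {e} days, computed {value}%, reported {reported}%")
```

For example, the overall decline from 19.56 to 12.41 days is a change of −7.15 days, or about −36.6% of baseline, which rounds to the reported −37%.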

Figure 1.

Mean days between contact and first treatment session and between assessment and first treatment session by month of admission.

The estimated days between first contact and first treatment for the seven outpatient programs averaged 32 days in October 2003. Days to treatment declined gradually through April 2004, leveled and ended at about 20 days in December 2004. Regression models found significant linear and quadratic trends (See Table 1) for days from first contact to first treatment and for days from assessment to first treatment. The overall reduction of 38% included a linear decline of 3.3 days per month and a quadratic leveling of 0.16 days per month.

A linear trend was observed for the four intensive outpatient (IOP) services. Days from first request to first IOP treatment declined from almost 12 days in November/December 2003, leveled in February 2004, and ended at about 7 days in December 2004, a 37% decline. A significant quadratic influence was not observed in the overall decline of 0.28 days per month in the time from first contact to first treatment. The decline in days between assessment and first treatment was consistent with the overall reduction in days to admission but did not reach statistical significance.

Significant reductions in days to admission were not observed in the four residential programs. Days between first contact and admission dropped 32% from about 11 days in October 2003 to about 8 days in November/December 2004.

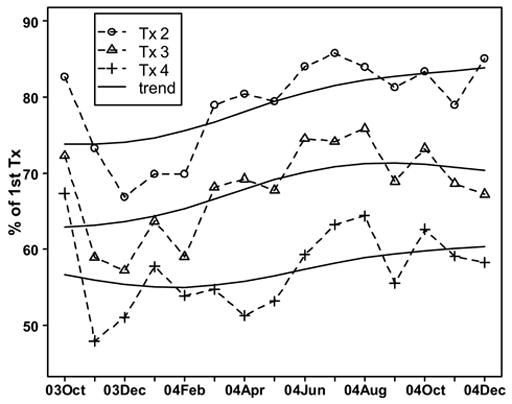

Early Retention

Retention in care increased significantly. See Table 2 for summary data and statistics. At baseline (October 2003), approximately 72% of the patients returned for a second visit (outpatient and intensive outpatient) or second week (residential). The retention rate increased to almost 85% by December 2004. Significant gains were also observed at the third unit of care and retention increased from 62% to nearly 73%. The gains in retention at the fourth unit of care (from 54% to 60%) did not reach statistical significance. Figure 2 plots the changes over time in retention.

Table 2.

Changes in retention by level of care

| Level of Care | Improvement (%/month) | F | p-value | Estimated baseline (%) | Estimated end (%) | % change | Model |
|---|---|---|---|---|---|---|---|
| % continuation from session 1 to session 2 | | | | | | | |
| Overall | 0.85 | 8.34 | 0.01 | 72.09 | 84.84 | 18% | L |
| Outpatient | 0.74 | 3.64 | 0.08 | 63.08 | 74.12 | 17% | L |
| Intensive Outpatient | 0.92 | 5.01 | 0.04 | 78.37 | 92.24 | 18% | L |
| Residential | 1.00 | 2.39 | 0.11 | 74.40 | 89.34 | 20% | L |
| % continuation from session 1 to session 3 | | | | | | | |
| Overall | 0.69 | 4.77 | 0.05 | 62.43 | 72.78 | 17% | L |
| Outpatient | 0.54 | 6.69 | 0.20 | 46.20 | 54.28 | 17% | L |
| Intensive Outpatient | 0.52 | 1.22 | 0.29 | 74.14 | 82.01 | 11% | L |
| Residential | 1.07 | 2.06 | 0.14 | 66.58 | 82.71 | 24% | L |
| % continuation from session 1 to session 4 | | | | | | | |
| Overall | 0.39 | 1.44 | 0.25 | 54.17 | 60.02 | 11% | L |
| Outpatient | −0.43 | 0.95 | 0.35 | 35.30 | 28.85 | −18% | L |
| Intensive Outpatient | 0.34 | 5.36 | 0.02 | 80.79 | 85.88 | 6% | Q |
| Residential | 1.00 | 1.83 | 0.16 | 61.54 | 76.47 | 24% | L |

In the Model column, Q denotes a quadratic trend model and L a linear trend model. P-values are based on F statistics for regression models with 1 and 13 degrees of freedom for the linear trend model and 2 and 12 degrees of freedom for the quadratic trend model. Baseline was estimated for October 1, 2003; the end date was December 31, 2004.
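Because retention is itself a percentage, the % change column in Table 2 is a relative change and differs from the change in percentage points. The following sketch, using the overall rows of Table 2 and a hypothetical `summary` structure, shows both quantities side by side under the assumption that the reported values were rounded to whole percents.

```python
# Overall rows of Table 2: unit of care -> (estimated baseline %, estimated end %)
overall = {2: (72.09, 84.84), 3: (62.43, 72.78), 4: (54.17, 60.02)}

summary = {}
for unit, (baseline, end) in overall.items():
    points = round(end - baseline, 2)                     # percentage-point gain
    relative = round(100.0 * (end - baseline) / baseline)  # relative gain in percent
    summary[unit] = (points, relative)
    print(f"unit {unit}: +{points} points, {relative}% relative increase")
```

Retention at the second unit of care, for instance, rose by 12.75 percentage points (72.09% to 84.84%), which corresponds to the reported 18% relative increase.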

Figure 2.

Percent returning from first treatment session across levels of care by treatment session and month.

Variation was found by level of care. The assessment of improvements in retention in care suggested that the percent of patients retained in care between first treatment and second treatment improved significantly for individuals enrolled in intensive outpatient services; improvements for outpatient (p < .08) and residential services (p < .11) were in the expected direction but did not reach statistical significance. See Table 2. Retention in intensive outpatient services improved 18% from 78% returning for session 2 in October 2003 to a 92% continuation rate in December 2004. Similar but statistically non-significant improvements were observed for retention in outpatient (from 63% to 74%) and residential services (74% to 89%).

4. Discussion

Process modifications led to reductions in days to treatment and gains in retention in care. Change project reports suggest that alcohol and drug treatment agencies can make substantial improvements in client access and retention for small cohorts of patients during relatively brief rapid cycles. Analysis of admissions data, moreover, found the reductions in days to admission were robust and observable in large cohorts of patients over longer durations of time. Significant improvements in retention in care were observed at the second and third unit of care when data were aggregated across levels of care. Overall these results support the feasibility of using process improvement and organizational change to reduce days to admission, enhance retention in care and strengthen the quality of treatment for alcohol and drug disorders.

Declines in days to admission may be greater than the 37% reduction recorded in the aggregate analysis. Comparison of the CSAT and RWJF supported sites found much more dramatic improvements in CSAT programs. RWJF sites made substantial changes in admission practices during their application for NIATx participation and gains prior to October 2003 were not captured in the data system. The analysis of the CSAT sites suggested that outpatient and intensive outpatient programs can achieve a 50% to 60% reduction in days to treatment.

The trend estimates, of course, are only a first-order approximation of the complex changes over the study period and should not be used to extrapolate additional gains at NIATx sites. The evaluation will continue to monitor days to admission and retention in care for 18 months beyond the end of RWJF and CSAT support to assess long-term gains and sustainability.

Sustainability of the reduction in days to care, in fact, appears to be an on-going challenge. The improvements in days to care for outpatient included a significant quadratic trend – a decrement in improvement and an increase in days to admission. The pattern may reflect seasonal cycles and continued data collection will test for seasonal effects. More likely, the quadratic trend, although modest, reflects a need to continually monitor and maintain system change and improvements. Thus, staff (both experienced individuals and new replacements) may benefit from continuing supervision and coaching to ensure use of the revised processes. Qualitative interviews suggested that training and monitoring are ongoing issues but informants also attributed reductions in staff turnover to patient-friendly procedures that enhanced client cooperation and simplified counselor workloads.

Improvements in retention in care seem more difficult to achieve. As days to admission decline, programs are likely to increase patient heterogeneity. Retention rates, moreover, are likely to be a function of multiple variables. Change efforts, therefore, probably need to address multiple facets before overall improvement is observed. The initial improvements in retention are thus both encouraging (significant effects were observed) and challenging (stronger effects are desired).

Process changes are not without cost. Changes in hours of operation may lead to increased payroll costs, and increased admissions may mean higher clinical caseloads and more staff turnover, whether because of increased workload or simply because of the stress of working in an environment that continues to change. Effective process improvements, however, should benefit all stakeholders: the client, the staff and the corporation. Treatment centers are documenting the business case for process improvements; several have increased revenues and attribute the improvement to changes in treatment processes (i.e., treating more patients at less cost). Programs that eliminated scheduled appointments for assessments, moreover, reported dramatic reductions in no-show rates and increases in counselor productivity. Qualitative analyses will examine these issues more closely.

4.1 Limitations

The gains in retention and timeliness were most apparent in the aggregated data. At the program level, there was month-to-month variability in the measures, especially in programs that reported fewer than 15 to 20 admissions per month. Small programs with relatively few admissions per month are likely to have more difficulty observing stable gains. Estimates are more consistent and stable in larger treatment units. Nonetheless, the inclusion of both larger and smaller programs in NIATx suggests that programs of all sizes can benefit from the application of process improvements.
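The instability seen in small programs is consistent with ordinary sampling variability. As a rough sketch, assuming each admission is an independent yes/no outcome with the same underlying retention probability (a simplification, not the model used in the evaluation), the standard error of a monthly retention rate shrinks with the square root of the number of admissions. The function name and the example caseloads are illustrative assumptions; the 72% rate is the baseline second-unit retention reported in the abstract.

```python
import math


def retention_standard_error(rate, admissions):
    """Standard error of an observed retention proportion (binomial assumption)."""
    return math.sqrt(rate * (1 - rate) / admissions)


BASELINE_RATE = 0.72  # baseline retention to the second unit of care (from the abstract)

# Illustrative monthly admission counts, from small to large programs.
for admissions in (15, 20, 100, 400):
    se = retention_standard_error(BASELINE_RATE, admissions)
    print(f"{admissions:3d} admissions/month: standard error = {se:.3f}")
```

Under these assumptions, a program with 15 admissions per month would see its monthly retention rate fluctuate far more from chance alone than a program with several hundred, which makes real gains harder to distinguish from noise.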

A second limitation is that programs applied to participate and received awards to support participation. The application process selected for agencies that had strong leadership and a commitment to process improvement. Results, therefore, may not generalize to less receptive program environments. Participating programs, moreover, received resources to support staff time for process improvement activity. The ability to make these changes without external support is unclear. The evaluation will continue to monitor program performance for 18 months following the termination of external support to assess long-term sustainability.

Despite access to coaching and funding to support data extraction, some programs struggled with the evaluation and its participation burden; one site provided no data and six provided incomplete data of variable quality. Not every program will be able to fully implement a data-driven process improvement initiative. Participating programs had to learn how to use data to guide decisions (Wisdom et al., 2006).

A post-only evaluation design is a weak evaluation design. Although the results are encouraging, other factors may have contributed to the observed effects. The lack of randomization and the lack of comparison clinics that did not attempt process improvements limit confidence in study findings. A randomized clinical trial would provide additional documentation that the observed effects are due to process improvements and not to other cyclical trends. Moreover, programs made many changes and it is not clear which changes were most responsible for the observed improvements – did all changes have a cumulative effect or were one or two changes most influential? The qualitative interviews will provide more insight into the change efforts and will add texture to detailed project analyses. Analysis of data from the second round of applicants will also provide better baseline information and assess the ability to replicate results in a different cohort of treatment centers.

4.2 Implications

NIATx results suggest that it is feasible to apply process improvement strategies to the delivery of care for alcohol and drug disorders. The reductions in days to admission and the improvements in retention rates document the potential for applying these strategies to other facets of the treatment system. Already the agencies responsible for funding alcohol and drug treatment services in Colorado, Delaware, Iowa, North Carolina, and Oklahoma are promoting the use of process improvement and scaling up statewide applications.

Incremental improvements may seem modest, but when aggregated over time and across sites they can lead to substantive reductions in days to treatment and to consistent gains in retention in care for thousands of patients. If 1,000 outpatient clinics averaged a 12-day reduction in days to admission, for example, and each served 100 patients per year, there would be about 1.2 million fewer days waiting to enter outpatient care (12 days × 1,000 clinics × 100 patients). The potential gain is stunning. Small wins are an important strategy for making large social problems more manageable (Weick, 1984). The application of process improvement helped treatment programs make organizational changes that reduced days to treatment and enhanced retention in care. NIATx demonstrates that process improvement strategies in receptive treatment centers can contribute to enhanced quality of care for alcohol and drug disorders.
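The aggregate figure in the example above is simple multiplication, and a short sketch makes it easy to rerun with other inputs. The function name is an assumption introduced for illustration; the inputs are the hypothetical values from the text.

```python
def waiting_days_avoided(clinics, patients_per_clinic, days_saved_per_patient):
    """Total patient-days of waiting avoided per year across all clinics."""
    return clinics * patients_per_clinic * days_saved_per_patient


# Values from the example in the text: 1,000 clinics, 100 patients each, 12 days saved.
total = waiting_days_avoided(clinics=1_000, patients_per_clinic=100,
                             days_saved_per_patient=12)
print(f"{total:,} fewer days waiting per year")
```

Changing any one input scales the total proportionally, so a 6-day average reduction across the same clinics would halve the estimate.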

Acknowledgments

The Network for the Improvement of Addiction Treatment (NIATx) was supported through grants from the Robert Wood Johnson Foundation and cooperative agreements from the Substance Abuse and Mental Health Services Administration, Center for Substance Abuse Treatment. The National Evaluation Team at Oregon Health and Science University was supported through awards from the Robert Wood Johnson Foundation (46876 and 50165), the Center for Substance Abuse Treatment (through subcontracts from Northrop Grumman Corporation -- PIC-STAR-SC-03-044, SAMHSA SC-05-110), and the National Institute on Drug Abuse (R01 DA018282). National Program Office activities at the University of Wisconsin were supported through awards from the Robert Wood Johnson Foundation (48364), and the Center for Substance Abuse Treatment (through a subcontract from Northrop Grumman Corporation -- PIC-STAR-SC-04-035). We are especially grateful for the cooperation and collaboration from the 39 members of NIATx.

Footnotes

Supplementary materials can be found by accessing the online version of this paper at http://dx.doi.org by entering 10.1016/j.drugalcdep.2006.10.009

See Supplementary Table A for more detail on participating agencies and their annual admissions, number of staff, percent of patients who are women and minorities, and levels of care. This can be found by accessing the online version of this paper at http://dx.doi.org by entering 10.1016/j.drugalcdep.2006.10.009.

Case studies and detailed descriptions of change cycles and change projects are available as Supplementary Documents B and C and on the project web site (www.niatx.net) (also see Capoccia et al., 2006). The Supplementary Documents can be found by accessing the online version of this paper at http://dx.doi.org by entering 10.1016/j.drugalcdep.2006.10.009.

See Supplementary Figure D and Table E for these analyses. They can be found by accessing the online version of this paper at http://dx.doi.org by entering 10.1016/j.drugalcdep.2006.10.009.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Addiction Technology Transfer Centers. The Change Book: A Blueprint for Technology Transfer. Addiction Technology Transfer Center National Office; Kansas City, MO: 2000.

- Addiction Technology Transfer Centers. The Change Book: A Blueprint for Technology Transfer. 2. Addiction Technology Transfer Center National Office; Kansas City, MO: 2004.

- Barney M, McCarty T. The New Six Sigma: A Leader’s Guide to Achieving Rapid Business Improvement and Sustainable Results. Prentice Hall PTR; Upper Saddle River, NJ: 2003.

- Box G, Jenkins GM, Reinsel GC. Time Series Analysis: Forecasting and Control. 3. Prentice Hall; Englewood Cliffs, NJ: 1994.

- Brolin M, Seaver C, Nalty D. Performance Management: Improving State Systems Through Information-Based Decisionmaking. Center for Substance Abuse Treatment, Substance Abuse and Mental Health Services Administration; Rockville, MD: 2005.

- Capoccia VA, Cotter F, Gustafson DH, Cassidy E, Ford J, Madden L, Owens B, Farnum SO, McCarty D, Molfenter T. Making “stone soup”: How process improvement is changing the addiction treatment field. Jt Comm J Qual Patient Saf in press.

- Deming WE. Out of the Crisis. MIT-CAES; Cambridge, MA: 1986.

- Eckes G. The Six Sigma Revolution: How General Electric and Others Turned Process Into Profits. John Wiley & Sons, Inc.; New York: 2001.

- Fishbein R, McCarty D. Quality improvement for publicly-funded substance abuse treatment services. In: Gibelman M, Demone HW, editors. Private Solutions to Public Problems. 2. Springer Publishing; New York: 1997. pp. 39–57.

- Gallucci G, Swartz W, Hackerman F. Impact of the wait for an initial appointment on the rate of kept appointments at a mental health center. Psychiatr Serv. 2005;56:344–346. doi: 10.1176/appi.ps.56.3.344.

- Garnick DW, Lee MT, Chalk M, Gastfriend DR, Horgan CM, McCorry F, McLellan AT, Merrick EL. Establishing the feasibility of performance measures for alcohol and other drugs. J Subst Abuse Treat. 2002;23:375–385. doi: 10.1016/s0740-5472(02)00303-3.

- Gitlow H, Gitlow S, Oppenheim A, Oppenheim R. Tools and Methods for the Improvement of Quality. Irwin; Homewood, IL: 1989.

- Gustafson DH, Hundt SA. Findings of innovation research applied to quality management principles for health care. Health Edu Q. 1995;20(2):16–33.

- Imai M. Kaizen: The Key to Japan’s Competitive Success. McGraw-Hill Publishing Company; New York: 1986.

- Institute of Medicine. Managing Managed Care: Quality Improvement in Behavioral Health. National Academy Press; Washington, DC: 1997.

- Institute of Medicine. To Err is Human: Building a Safer Health System. National Academy Press; Washington, DC: 2000.

- Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academy Press; Washington, DC: 2001.

- Institute of Medicine. Improving the Quality of Health Care for Mental and Substance-Use Disorders: Quality Chasm Series. National Academy Press; Washington, DC: 2006.

- Juran JM. Juran’s Quality Control Handbook. McGraw-Hill Publishing Company; New York: 1988.

- Kaynak H. The relationship between total quality management practices and their effects on firm performance. J Oper Manage. 2003;21:405–435.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. J Subst Abuse Treat. 2002;22:197–209. doi: 10.1016/s0740-5472(02)00233-7.

- McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public’s demand for quality care? J Subst Abuse Treat. 2003;25:117–121.

- National Committee for Quality Assurance. The State of Health Care Quality: Industry Trends and Analysis. National Committee on Quality Assurance; Washington, DC: 2006.

- O’Connor GT, Plume SK, Olmstead EM, Morton JR, Maloney CT, Nugent WC, Hernandez F, Jr, Clough R, Leavitt BJ, Coffin LH, Marrin CA, Wennberg D, Birkmeyer JD, Charlesworth DC, Malenka DJ, Quinton HB, Kasper JF. A regional intervention to improve the hospital mortality associated with coronary artery bypass graft surgery. The Northern New England Cardiovascular Disease Study Group. JAMA. 1996;275:841–846.

- Pearson ML, Wu S, Schaefer J, Bonomi AE, Shortell SM, Mendel PJ, Marsteller A, Lauis TA, Rosen M, Keeler EB. Assessing the implementation of the chronic care model in quality improvement collaboratives. Health Serv Res. 2005;40:987–996. doi: 10.1111/j.1475-6773.2005.00397.x.

- R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2005.

- Reinsel GC. Elements of Multivariate Time Series Analysis. Springer Publishing; New York: 2003.

- Shewhart WA. Statistical Method from the Viewpoint of Quality Control. Lancaster Press; Lancaster, PA: 1939.

- Shumway RH, Stoffer DS. Time Series Analysis and Its Applications. Springer Publishing; New York: 2006.

- Simpson DD. A conceptual framework for transferring research to practice. J Subst Abuse Treat. 2002;22:171–182. doi: 10.1016/s0740-5472(02)00231-3.

- Weatherhead E, Reinsel GC, Tiao GC, Meng X, Choi D, Cheang W, Keller T, DeLuisi J, Wuebbles D, Kerr J, Miller AJ, Oltmans S, Frederick J. Factors affecting the detection of trends: Statistical considerations and applications to environmental data. J Geophys Res. 1998;103:17149–17161.

- Weick KE. Small wins: Redefining the scale of social problems. Am Psychol. 1984;39:40–49.

- Wisdom JP, Ford J, Hayes RA, Edmundson E, Hoffman K, McCarty D. Addiction treatment agencies’ use of data: A qualitative assessment. J Behav Health Serv Res. doi: 10.1007/s11414-006-9039-x. in press.
