Abstract

This event-related fMRI study examined the impact of processing load on the BOLD response to emotional expressions. Participants were presented with composite stimuli consisting of neutral and fearful faces upon which semi-transparent words were superimposed. This manipulation held stimulus-driven features constant across multiple levels of processing load. On each trial, participants made one of three judgments: (1) a gender discrimination based on the face; (2) a case judgment based on the word; or (3) a syllable number judgment based on the word. A significant main effect of processing load was revealed in prefrontal cortex, parietal cortex, visual processing areas, and amygdala. Critically, enhanced activity in the amygdala and medial prefrontal cortex seen during gender discriminations was significantly reduced during the linguistic task conditions. A connectivity analysis conducted to investigate theories of cognitive modulation of emotion showed that activity in dorsolateral prefrontal cortex was inversely related to activity in ventromedial prefrontal cortex. Together, the data suggest that the processing of task-irrelevant emotional information, like that of neutral information, is subject to the effects of processing load and is under top-down control.

Introduction

Considerable benefits are afforded to an organism that preferentially and rapidly processes motivationally relevant stimuli appearing outside the focus of attention. The amygdala is thought to play a critical role in this process (Anderson & Phelps, 2001; LeDoux, 1998; Vuilleumier et al., 2001). Imaging studies have shown increased correlated activity between the amygdala and visual cortical areas (Morris, Ohman, & Dolan, 1999), including the middle occipital areas, fusiform gyri, and superior temporal sulci (Pessoa, McKenna, Gutierrez, & Ungerleider, 2002). In the auditory domain, enhanced activity in right amygdala and auditory cortex to angry vocalizations has been reported (Sander et al., 2005). Patients with amygdala lesions fail to show enhanced attention to emotional stimuli presented in a rapid serial visual presentation task (Anderson & Phelps, 2001). Furthermore, amygdala lesion severity is inversely related to ipsilateral fusiform activity to fearful faces (Vuilleumier, Richardson, Armony, Driver, & Dolan, 2004). Together, these data support the idea that the amygdala interacts with sensory representation areas, increasing the “salience” of emotion-relevant representations (Anderson & Phelps, 2001; Pessoa, 2005; Vuilleumier, 2005). However, the extent to which activation of the amygdala itself occurs independently of the availability of attentional resources (i.e., the degree to which this activation is “automatic”) remains debated (Pessoa, 2005). It is also unclear what factors might constrain emotional responding during attentionally demanding tasks. Reduced emotional responding may arise not only from inherent limitations in perceptual and cognitive processing capacity (i.e., emotional stimuli might not be perceived because processing resources are unavailable), but also from executive attention mechanisms that bias attention towards non-emotional stimulus representations. 
To address these questions, the present study presented overlapping, competing stimuli in one face-attended condition and two face-unattended conditions that differed in processing load.

At present, there are two main views concerning the automaticity of emotional processing. One perspective holds that the amygdala supports the “automatic” processing of emotional stimuli regardless of the availability of attentional resources (Dolan & Vuilleumier, 2003). In support of this position, imaging work shows significant amygdala activation in response to unattended stimuli (Vuilleumier et al., 2001; Williams, McGlone, Abbott, & Mattingly, 2005). Studies have also shown that the amygdala responds to stimuli presented outside of awareness (Morris et al., 1998; Morris et al., 1999; Whalen et al., 1998; but see also Pessoa, Japee, & Ungerleider, 2005; Pessoa, Japee, Sturman, & Ungerleider, 2006). Support for automaticity has also been inferred from lesion studies (Dolan & Vuilleumier, 2003). For example, patients with lesions to primary visual cortex show increased amygdala activity to emotional facial expressions (Morris et al., 2001; Pegna, Khateb, Lazeyras, & Seghier, 2004). At the neural level, automatic emotional processing is thought to be subserved by a direct subcortical route through the superior colliculus and pulvinar to the amygdala (Morris, Ohman, & Dolan, 1998; Morris et al., 2001).

A second position suggests that processing emotional stimuli, like processing neutral stimuli, requires the availability of attentional resources (Pessoa et al., 2002; Pessoa, Padmala, & Morland, 2005). This position draws on the biased competition model of attention (Desimone & Duncan, 1995) and Lavie's perceptual load hypothesis (Lavie, 1995). According to the biased competition model, attention is the product of competition for neural representation. This competition is biased both by bottom-up, sensory-driven mechanisms (e.g., visual salience) and by top-down influences generated outside of visual cortex (Desimone & Duncan, 1995). Perceptual load determines the extent to which irrelevant distracters are processed (Lavie, 1995), and the degree to which representational features of a visual stimulus require augmentation by top-down influences for successful performance. As perceptual load increases, priming of the representational features of the target stimulus will increase and, because of stimulus competition, will lead to greater suppression of competing representations (cf. Desimone & Duncan, 1995; Lavie, 1995). In the context of distracting emotional material, greater executive control over behavior should augment task-relevant sensory representations and thereby reduce the impact of distracters on behavior; conversely, reduced efficacy of executive control, or increased salience of emotional distracters, will result in stronger representation of distracting material at the expense of task-relevant representations and performance (Mitchell, Richell, Leonard, & Blair, 2006). According to Pessoa and colleagues (2002; 2005), emotional expressions are not a “privileged” category of object immune to the effects of attention, but rather a class of stimuli that must compete for neural representation. 
In line with this view, it has been shown that amygdala activation to emotional material is depleted if sufficient demands are placed on attention by a cognitive task (Pessoa et al., 2002; Pessoa et al., 2005).

The apparently contradictory findings in support of automaticity and competition may be due to differences in methodology (Pessoa, 2005). For example, using spatially contiguous competing stimuli, Anderson and colleagues (2003) showed amygdala activation to unattended fearful and disgusted facial expressions. However, their unattended condition involved only one level of difficulty, which raises the possibility that task demands did not deplete attention (Pessoa et al., 2005). To date, studies that have used multiple levels of processing load during unattended conditions support the view that emotional processing depends on the availability of attentional resources (Pessoa et al., 2002; 2005). However, these studies have also differed in the spatial relationship between target stimuli and distracters. Targets and distracters have appeared separately at fixation and in the periphery (Pessoa et al., 2002), separately and away from fixation (Vuilleumier et al., 2001), overlapping and at fixation (Anderson et al., 2003), or overlapping and in the periphery (Williams et al., 2005). The spatial location of competing stimuli is important given data suggesting that spatially contiguous distracting features can generate greater interference (MacLeod, 1991).

In the current study, we investigate whether automatic amygdala processing of fearful faces occurs by using overlapping competing stimuli across three levels of processing load. Participants were presented with composite stimuli consisting of neutral or fearful faces upon which semi-transparent words were superimposed. On each trial, participants determined either the gender of the face, the case of the word, or the number of syllables in the word. The use of a face-attended condition and two face-unattended conditions that differ in difficulty allowed us to directly test the prediction that, if amygdala activity is subject to cognitive modulation, it should be affected by attention or processing load (Pessoa et al., 2005). If, instead, the amygdala responds automatically to emotional stimuli, similar activation should be observed regardless of processing load. Furthermore, we used spatially contiguous distracting features, as such formats have been shown to generate greater interference (MacLeod, 1991). We predicted that increased processing load would be associated with increased activity in regions previously implicated in attentional selection, including left dorsolateral prefrontal cortex (Bishop et al., 2004; Botvinick, Cohen, & Carter, 2004; Liu et al., 2004; MacDonald, Cohen, Stenger, & Carter, 2000) and parietal cortex (Behrmann, Geng, & Shomstein, 2004; Friedman-Hill et al., 2003; Nobre et al., 1997; Posner & Petersen, 1990). Following Pessoa and colleagues (Pessoa et al., 2002; Pessoa et al., 2005), we predicted that increased processing load would be associated with decreased activity in regions associated with emotional responding, particularly the amygdala and medial frontal cortex. A connectivity analysis was performed to examine whether activity in regions implicated in cognitive control correlated with activity in regions related to emotional responding. 
We predicted that activity in dorsolateral prefrontal cortex related to attentional control would be inversely related to regions associated with emotional processing.

Methods

Subjects

Seventeen participants completed the study. Data from two were discarded because of movement during the task (defined as movement of more than 4 mm during acquisition), leaving fMRI data from 15 participants (9 women) aged between 23 and 38 years (mean, 26.1; standard deviation, 4.32) in the analysis. All subjects gave informed consent, were in good health, and had no history of psychiatric or neurological disease as determined by a medical examination performed by a licensed physician. The study was approved by the National Institute of Mental Health Institutional Review Board.

MRI data acquisition

Subjects were scanned during task performance using a 1.5 Tesla GE Signa scanner. Functional images were obtained with a gradient echo echo-planar imaging (EPI) sequence (repetition time = 3000 ms, echo time = 40 ms, 64 × 64 matrix, flip angle 90°, FOV 24 cm). Whole-brain coverage was obtained with 29 axial slices (thickness, 4 mm; in-plane resolution, 3.75 × 3.75 mm). A high-resolution anatomical scan (three-dimensional Spoiled GRASS; repetition time = 8.1 ms, echo time = 3.2 ms; field of view = 24 cm; flip angle = 20°; 124 axial slices; thickness = 1.0 mm; 256 × 256 matrix) in register with the EPI dataset was obtained covering the whole brain.

Experimental procedure

In the experimental task, participants viewed composite grey-scale stimuli consisting of a facial expression overlaid with a semi-transparent word. For each presentation, participants were instructed to judge either the gender of the face (male/female), the case of the word (upper/lower case), or the number of syllables in the word (one or two). Each trial began with a cue (1000 ms) indicating which task would follow (gender/case/syllable), followed by a fixation point (500 ms), the composite stimulus (250 ms), and finally a blank screen (1250 ms) separating each trial from the next. Stimuli were presented in random order in this event-related design. The attentional capture task consisted of 72 semi-transparent words individually superimposed on 36 neutral faces and the 36 corresponding fearful faces of the same actors, taken from the MacBrain Face Stimulus Set (NimStim) and the Averaged Karolinska Directed Emotional Faces (Lundqvist & Litton, 1998). In addition to the task trials, 72 fixation trials (24 in each run) consisted of only a fixation point lasting one TR (3000 ms). Word stimuli were neutral words (mean valence rating 4.8; range 3.05 to 6.00) taken from the Affective Norms for English Words (ANEW; Bradley & Lang, 1999). The composite stimuli were constructed in Adobe Photoshop by systematically adjusting the opacity of the word; fearful and neutral stimuli were matched for luminosity and RGB colour composition. The task consisted of 3 runs presented in counterbalanced order. The task associated with each composite stimulus changed in each run, so that participants saw each composite stimulus 3 times (once for each task). A second version of the task reversed the word-valence pairings so that each word was counterbalanced across subjects for valence. There were 6 conditions in the task, corresponding to a 2 (emotion: fear, neutral) by 3 (attention: gender, case, syllable) factorial design. 
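The timing and run structure described above can be checked with a short Python sketch. The timing constants and trial counts come from the text; the cyclic rule that rotates each composite through the three tasks across runs, and the assignment of emotion by stimulus index, are hypothetical choices made only for illustration, since the text states only that each composite was judged once under each task and that run order was counterbalanced.

```python
import random
from collections import Counter

# Trial timing from the text (ms): cue, fixation, composite stimulus, blank screen.
CUE_MS, FIXATION_MS, STIMULUS_MS, BLANK_MS = 1000, 500, 250, 1250
TR_MS = 3000
TASKS = ("gender", "case", "syllable")
N_COMPOSITES = 72          # 36 neutral + 36 fearful faces, each with a word
N_FIXATION_PER_RUN = 24    # one-TR fixation-only trials
N_RUNS = 3


def trial_duration_ms():
    """A task trial spans cue + fixation + stimulus + blank."""
    return CUE_MS + FIXATION_MS + STIMULUS_MS + BLANK_MS


def build_runs(seed=0):
    """Hypothetical run builder: composite i is fearful for i < 36 and neutral
    otherwise, and receives task TASKS[(i + run) % 3], so that across the three
    runs every composite is judged once under each task."""
    rng = random.Random(seed)
    runs = []
    for run in range(N_RUNS):
        trials = [("fear" if i < 36 else "neutral", TASKS[(i + run) % 3], i)
                  for i in range(N_COMPOSITES)]
        trials += [("fixation", None, None)] * N_FIXATION_PER_RUN
        rng.shuffle(trials)  # random trial order within each event-related run
        runs.append(trials)
    return runs


def condition_counts(run):
    """Number of task trials in each emotion x task cell of one run."""
    return Counter((emotion, task) for emotion, task, _ in run if emotion != "fixation")
```

Under this assumed rotation, each run holds 12 trials in every cell of the 2 × 3 design plus 24 fixation trials, that is 96 trials of one TR each, or 288 s of task per run; a single task trial (1000 + 500 + 250 + 1250 ms) fills exactly one 3000 ms TR.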
Stimuli were presented from a Dell Inspiron laptop computer via a data projector onto a screen that subjects viewed through mirrors positioned above the head coil of the MRI scanner. Subjects responded with right and left button presses. Responses made outside the 1250 ms response window were not recorded and were excluded from analysis, as were errors. Subjects were placed in a light head restraint within the scanner to limit head movement. Before entering the scanner, subjects performed two 24-trial training runs consisting of 8 stimuli from two actors (1 male and 1 female) depicting an example of each of the conditions. The tasks were programmed using E-Prime software (Schneider, 2002). Figure 1 depicts a sample trial and the temporal sequence of events.

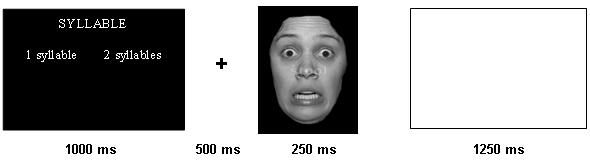

Figure 1.

A trial schematic displaying the time-course of each event. In this example, the instruction screen indicates that syllable discrimination is to be conducted (high processing load).

fMRI Analysis

Data were analyzed within the framework of the general linear model using Analysis of Functional NeuroImages (AFNI; Cox, 1996). Both individual and group-level analyses were conducted. The first six volumes in each scan series, collected before equilibrium magnetization was reached, were discarded. Motion correction was performed by registering all volumes in the EPI dataset to a volume collected shortly before acquisition of the high-resolution anatomical dataset. Each subject's EPI datasets were spatially smoothed (isotropic 6 mm Gaussian kernel) to reduce the influence of anatomical variability among individual maps when generating group maps. Next, the time series data were normalized by dividing the signal intensity of each voxel at each time point by the mean signal intensity of that voxel for each run and multiplying the result by 100, so that the resulting regression coefficients represent percent signal change from the mean. Regressors modeling each of the six trial types, and a seventh modeling errors, were then created by convolving the train of stimulus events with a gamma-variate hemodynamic response function to account for the slow hemodynamic response (Cohen, 1997). Linear regression was performed by fitting the BOLD signal to the seven regressors, with a baseline plus linear drift and quadratic trend modeled in each voxel's time series to control for voxel-wise correlated drifting. This produced a beta coefficient and associated t-statistic for each voxel and each regressor. For voxel-wise group analyses, single-subject beta coefficients were transformed into the standard coordinate space of Talairach and Tournoux (1988).
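The single-subject model described above can be sketched in Python with NumPy for one voxel. This is not AFNI's implementation: the gamma-variate parameters (b = 8.6, c = 0.547 s, in the peak-normalized form (t/(bc))^b exp(b − t/c)), the 0.1 s convolution grid, the assumption that events start on scan boundaries, and all function names are illustrative assumptions. The 3 s TR, percent-signal-change scaling, and baseline, linear, and quadratic drift terms follow the text.

```python
import numpy as np

TR = 3.0  # repetition time in seconds, from the acquisition parameters


def gamma_variate(t, b=8.6, c=0.547):
    """Peak-normalized gamma-variate HRF, (t/(b*c))**b * exp(b - t/c), zero for t <= 0.
    The b and c defaults are assumed values, not taken from the text."""
    t = np.clip(np.asarray(t, dtype=float), 0.0, None)
    return (t / (b * c)) ** b * np.exp(b - t / c)


def percent_signal_change(run_ts):
    """Divide each time point by the run mean and multiply by 100."""
    run_ts = np.asarray(run_ts, dtype=float)
    return 100.0 * run_ts / run_ts.mean()


def make_regressor(onset_scans, n_scans, dt=0.1):
    """Convolve a stick train of event onsets (in scan units) with the HRF on a
    fine time grid, then sample the result once per TR."""
    step = int(round(TR / dt))
    sticks = np.zeros(n_scans * step)
    for s in onset_scans:
        sticks[int(s) * step] = 1.0
    hrf = gamma_variate(np.arange(0.0, 30.0, dt))
    return np.convolve(sticks, hrf)[: n_scans * step : step]


def fit_glm(y, regressors):
    """OLS fit of task regressors plus baseline, linear and quadratic drift.
    Returns the task betas and their t-statistics."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    x = np.linspace(-1.0, 1.0, n)
    X = np.column_stack(list(regressors) + [np.ones(n), x, x ** 2])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = n - X.shape[1]
    sigma2 = resid @ resid / dof
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    k = len(regressors)
    return beta[:k], beta[:k] / se[:k]
```

Because the time series is scaled to percent of its run mean before fitting, each returned beta reads directly as percent signal change per unit of the peak-normalized regressor. In the study there would be seven such regressors (six conditions plus errors) per voxel.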

Three analyses were conducted on the functional data:

(1) Region of Interest Analysis of the Amygdala. Following a previous study investigating emotional attention (Anderson et al., 2003), we contrasted fearful versus neutral faces in the gender condition within an anatomically specified ROI of the amygdala (taken from the AFNI anatomical ROI database). Voxels significantly activated by this contrast (p < 0.05) formed a functional ROI, and the percent signal change within this ROI was interrogated with a series of paired t-tests. To test whether clusters selectively active to fearful faces were modulated by processing load, we performed paired t-tests on the mean percent signal change within this ROI to fearful versus neutral faces during case discriminations and during syllable discriminations.

(2) Whole Brain Analysis. We followed this contrast with our primary analysis, a whole-brain, voxel-wise 3 (Processing load) by 2 (Emotion) repeated-measures ANOVA using the beta weights of the six regressors of interest as the dependent measures. This analysis was performed on the AFNI three-dimensional data, with subject entered as a random factor and processing load (gender, case, syllable) and emotion (fearful, neutral) as fixed factors. It identifies clusters showing a significant main effect of processing load, a significant main effect of emotion, or a significant processing load by emotion interaction. Using the resulting significant clusters as ROIs allows any brain region modulated by the task conditions to be defined functionally and independently. The voxel-wise threshold was set at p < 0.001. To correct for multiple comparisons, we performed a spatial clustering operation using AlphaSim (Ward, 2000) with 1,000 Monte Carlo simulations over the entire EPI matrix. All reported areas of activation are significant at p < 0.01, corrected for multiple comparisons.

For each significant cluster yielded by the ANOVA, we conducted follow-up analyses, using pair-wise comparisons to characterize the main effects and paired t-tests to delineate the nature of the interaction. For the main effect of emotion, we compared percent signal change to fearful versus neutral faces collapsed across processing load conditions. For the main effect of processing load, we compared percent signal change for each task with each other task (gender versus case; gender versus syllable; case versus syllable) collapsed across emotions. To delineate the processing load by emotion interaction, we conducted three paired t-tests examining the effect of fearful versus neutral faces during gender, case, and syllable discriminations.

(3) Connectivity Analysis. We measured functional connectivity by examining covariation across the whole brain with activity in a functionally defined ROI. Each subject's time series was converted to common Talairach space using that subject's structural dataset. Within our primary ROI, left dorsolateral prefrontal cortex, the voxel with the peak signal change for the main effect of processing load across subjects was identified. This voxel served as the “seed,” and its time series was extracted. A baseline plus linear drift and quadratic trend were modeled in each voxel's time series to control for voxel-wise correlated drifting. To control for global drifting, the average signal across the whole brain (global signal) was used as a covariate in the correlation analysis. A voxel-wise correlation analysis was then conducted between each voxel's time series and that of the seed. The proportion of signal variation explained by the seed was determined by squaring the resulting correlation coefficient. Correlation coefficients were converted to a Gaussian variable using the Fisher transformation to reduce skew and normalize the sampling distribution. To identify regions significantly positively or negatively correlated with the seed voxel at the group level, a one-sample t-test was performed on the transformed correlation coefficients.
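The seed-based connectivity steps above can be sketched with NumPy. The sketch assumes that "modeling" the drift terms and using the global signal as a covariate means regressing them out of both the seed and voxel time series before correlating (a partial correlation); that reading, and the function names, are assumptions rather than the exact AFNI procedure.

```python
import numpy as np


def residualize(ts, covariates):
    """Remove an intercept plus the given covariates from a time series by OLS."""
    ts = np.asarray(ts, dtype=float)
    X = np.column_stack([np.ones(len(ts))] + [np.asarray(c, dtype=float) for c in covariates])
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X @ beta


def seed_correlation(seed_ts, voxel_ts, global_ts):
    """Correlation of seed and voxel after removing baseline, linear and quadratic
    drift, and the global signal from both (an assumed reading of the text)."""
    n = len(seed_ts)
    x = np.linspace(-1.0, 1.0, n)
    covariates = [x, x ** 2, global_ts]
    s = residualize(seed_ts, covariates)
    v = residualize(voxel_ts, covariates)
    return float(np.corrcoef(s, v)[0, 1])


def fisher_z(r):
    """Fisher r-to-z transform, z = arctanh(r)."""
    return np.arctanh(r)


def group_t(z_values):
    """One-sample t statistic of per-subject Fisher z values against zero."""
    z = np.asarray(z_values, dtype=float)
    return z.mean() / (z.std(ddof=1) / np.sqrt(len(z)))
```

Squaring the output of `seed_correlation` gives the proportion of variance explained; for the group map, each subject's r at a voxel would be passed through `fisher_z` and the resulting values tested with `group_t` (df = number of subjects − 1).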

Results

Behavioral Results

A 3 (Processing load: gender, case, syllable) by 2 (Emotion: fearful, neutral) repeated-measures ANOVA was conducted on the reaction time data; mean reaction times and error rates are displayed in Table 1. This analysis revealed a main effect of processing load (F(2,28) = 41.72; p < 0.0005), but no significant main effect of emotion (F(1,14) = 1.03; ns) or processing load by emotion interaction (F(2,28) = 1.90; ns). Planned pair-wise comparisons revealed that participants responded significantly more slowly during case discriminations than during gender discriminations (p < 0.05), and during syllable discriminations than during case discriminations (p < 0.0005). The same ANOVA conducted on error rates revealed a main effect of processing load (F(2,28) = 8.18; p < 0.005) and a main effect of emotion (F(1,14) = 8.45; p < 0.05), but no processing load by emotion interaction (F(2,28) = 0.70; ns). Planned pair-wise comparisons revealed that participants made significantly more errors during syllable discriminations than during either other task (p < 0.05), and significantly more errors during gender than during case discriminations (p < 0.05). The main effect of emotion reflected significantly more errors in the presence of fearful than neutral faces (p < 0.05).
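In a fully within-subject 3 × 2 design, each effect is tested against its own effect-by-subject interaction, which is why 15 participants yield F(2,28) for processing load and for the interaction, and F(1,14) for emotion. A NumPy sketch of that computation follows. It is illustrative rather than the software actually used, applies no sphericity correction (the text does not mention one), and leaves p-values to the F distribution (for example, `scipy.stats.f.sf(F, df1, df2)`).

```python
import numpy as np


def rm_anova_two_way(y):
    """Two-way fully within-subject ANOVA.

    y has shape (subjects, load levels, emotion levels). Each effect is tested
    against its own effect-by-subject interaction. Returns {effect: (F, df, df_error)}.
    """
    y = np.asarray(y, dtype=float)
    n, a, b = y.shape
    gm = y.mean()
    m_s = y.mean(axis=(1, 2))   # subject means
    m_a = y.mean(axis=(0, 2))   # load means
    m_b = y.mean(axis=(0, 1))   # emotion means
    m_sa = y.mean(axis=2)       # subject x load cell means
    m_sb = y.mean(axis=1)       # subject x emotion cell means
    m_ab = y.mean(axis=0)       # load x emotion cell means

    ss_a = n * b * np.sum((m_a - gm) ** 2)
    ss_as = b * np.sum((m_sa - m_s[:, None] - m_a[None, :] + gm) ** 2)
    ss_b = n * a * np.sum((m_b - gm) ** 2)
    ss_bs = a * np.sum((m_sb - m_s[:, None] - m_b[None, :] + gm) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + gm) ** 2)
    resid = (y - m_sa[:, :, None] - m_sb[:, None, :] - m_ab[None, :, :]
             + m_s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - gm)
    ss_abs = np.sum(resid ** 2)

    out = {}
    for name, ss, df, ss_err in (("load", ss_a, a - 1, ss_as),
                                 ("emotion", ss_b, b - 1, ss_bs),
                                 ("load x emotion", ss_ab, (a - 1) * (b - 1), ss_abs)):
        df_err = df * (n - 1)
        out[name] = ((ss / df) / (ss_err / df_err), df, df_err)
    return out
```

As a check, for a two-level factor such as emotion, F equals the square of a paired t-test on each subject's means collapsed across the other factor.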

Table 1.

Behavioral Results

| Condition | Reaction Time | Error Rate |
|---|---|---|
| Gender discrimination | | |
| Neutral | 773.62 ms (120.93) | 8.2% (3.5) |
| Fear | 766.58 ms (122.34) | 9.1% (5.2) |
| Case discrimination | | |
| Neutral | 808.33 ms (150.37) | 3.9% (3.9) |
| Fear | 831.32 ms (138.32) | 6.9% (4.8) |
| Syllable discrimination | | |
| Neutral | 952.88 ms (154.40) | 14.1% (11.3) |
| Fear | 953.15 ms (132.00) | 16.7% (11.1) |

Standard deviations in parentheses.

Amygdala Region of Interest Analysis

Our first analysis followed a previous study investigating emotional attention (Anderson et al., 2003) and involved a contrast of fearful versus neutral faces in the gender condition within an anatomically specified ROI of the amygdala (taken from the AFNI anatomical ROI database). This yielded significant amygdala activity (p < 0.05, uncorrected). Planned paired t-tests conducted on the resulting ROI showed that right amygdala activity was significantly greater for fearful than neutral faces during gender judgments (p = 0.01), but not during case or syllable discriminations (ns; Figure 2).

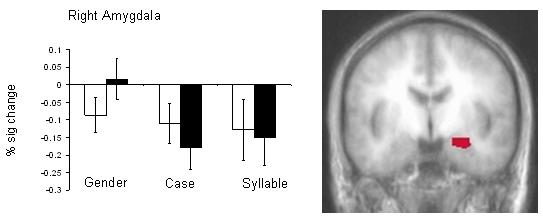

Figure 2.

Significantly active voxels within right amygdala (p < 0.05) are shown (right), and percent signal change within this ROI is plotted across conditions. White bars depict activity for neutral faces and shaded bars represent activity to fearful faces. Activity within this region that distinguished between fearful and neutral faces during gender discriminations did not do so during case or syllable discrimination conditions (p > 0.10).
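The ROI procedure described in the Methods (an anatomical amygdala mask intersected with voxels passing p < 0.05 on the gender-task fearful versus neutral contrast, followed by paired t-tests on per-subject ROI means) can be sketched in plain Python. The flat voxel lists and function names are hypothetical; a two-tailed p-value for the resulting t would come from the t distribution with n − 1 degrees of freedom (for example, `scipy.stats.ttest_rel` performs the whole paired test).

```python
import math
from statistics import mean, stdev


def define_roi(anatomical_mask, p_values, alpha=0.05):
    """Functional ROI: voxels inside the anatomical amygdala mask whose
    fearful-versus-neutral (gender task) contrast passes p < alpha."""
    return [bool(a) and p < alpha for a, p in zip(anatomical_mask, p_values)]


def roi_mean(voxel_values, roi):
    """Mean percent signal change across the voxels included in the ROI."""
    return mean(v for v, keep in zip(voxel_values, roi) if keep)


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched per-subject values."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    return mean(d) / (stdev(d) / math.sqrt(n)), n - 1
```

In use, `roi_mean` would be computed for each subject and condition, and `paired_t` applied to the fearful and neutral ROI means within each task.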

Whole brain 3 × 2 ANOVA

Our primary analysis involved a 3 (Processing load: gender, case, syllable) by 2 (Emotion: fearful, neutral) ANOVA. This analysis revealed significant main effects of processing load and emotion, as well as a processing load by emotion interaction (see Table 2).
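The corrected threshold applied to this ANOVA rests on AlphaSim's Monte Carlo estimate of how large a cluster of suprathreshold voxels pure noise tends to produce. The NumPy sketch below illustrates that idea under simplifying assumptions that are ours rather than AlphaSim's: Gaussian noise smoothed to a known isotropic FWHM (in voxels), a one-sided z threshold, face-connected (6-neighbour) clusters, and no brain mask. It returns the smallest cluster size whose chance of appearing anywhere in a null volume falls below alpha.

```python
from collections import deque

import numpy as np

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def gaussian_kernel(fwhm_vox):
    """1-D Gaussian kernel with the given full width at half maximum (voxels)."""
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    half = int(np.ceil(3 * sigma))
    x = np.arange(-half, half + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def smooth(vol, fwhm_vox):
    """Separable 3-D Gaussian smoothing by convolving along each axis in turn."""
    k = gaussian_kernel(fwhm_vox)
    for axis in range(vol.ndim):
        vol = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), axis, vol)
    return vol


def max_cluster_size(mask):
    """Size of the largest face-connected cluster of True voxels in a 3-D mask."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:
            i, j, k = queue.popleft()
            size += 1
            for di, dj, dk in NEIGHBOURS:
                nb = (i + di, j + dj, k + dk)
                inside = all(0 <= c < dim for c, dim in zip(nb, mask.shape))
                if inside and mask[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        best = max(best, size)
    return best


def cluster_threshold(shape, fwhm_vox, z_thresh, alpha=0.01, n_iter=1000, seed=0):
    """Smallest cluster size k with P(largest null cluster >= k) < alpha."""
    rng = np.random.default_rng(seed)
    maxima = np.empty(n_iter, dtype=int)
    for it in range(n_iter):
        vol = smooth(rng.standard_normal(shape), fwhm_vox)
        vol = (vol - vol.mean()) / vol.std()  # restore unit variance after smoothing
        maxima[it] = max_cluster_size(vol > z_thresh)
    for k in range(1, maxima.max() + 2):
        if np.mean(maxima >= k) < alpha:
            return k
```

With the voxel-wise threshold of p < 0.001 reported in the Methods, `z_thresh` would be about 3.09 (one-sided), and the 6 mm kernel on 3.75 × 3.75 × 4 mm voxels corresponds to roughly 1.5 to 1.6 voxels FWHM. AlphaSim's own options (such as how connectivity and masking are specified) are not reproduced here.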

Table 2.

Main Effect of Processing Load

| Anatomical Location | L/R | BA | x | y | z | F |
|---|---|---|---|---|---|---|
| Syllable > Case > Gender* | | | | | | |
| Inferior frontal gyrus | L | 9 | −42 | 3 | 22 | 46.55 |
| Middle frontal gyrus | R | 6 | 23 | −4 | 62 | 14.01 |
| Inferior parietal lobule | R | 40 | 44 | −45 | 49 | 16.05 |
| Inferior parietal lobule | L | 40 | −38 | −45 | 53 | 22.68 |
| Middle occipital gyrus | L | 19 | −47.5 | −59.4 | −11.8 | 17.00 |
| Syllable > Case & Gender* | | | | | | |
| Declive | R | - | 31 | −68 | −30 | 11.59 |
| Declive | R | - | 12 | −72 | −21 | 14.05 |
| Caudate | R | - | 14 | −3 | 12 | 15.78 |
| Thalamus | L | - | −6 | −19 | 15 | 10.95 |
| Thalamus | L | - | −14 | −8 | 9 | 17.63 |
| Gender > Case > Syllable* | | | | | | |
| Middle frontal gyrus† | L | 8 | −38 | 12 | 52 | 15.73 |
| Medial PFC | R | 32 | 5 | 47 | 4 | 13.75 |
| Medial PFC/ACC | R | 24 | 2 | 32 | 13 | 14.84 |
| Superior temporal gyrus | R | 22 | 53 | −62 | 14 | 19.42 |
| Superior temporal gyrus | L | 39 | −49 | −66 | 19 | 29.94 |
| Middle temporal gyrus | R | 21 | 69 | −16 | −9 | 14.44 |
| Precuneus | R | 31 | 3 | −64 | 23 | 22.84 |
| Gender > Case & Syllable* | | | | | | |
| Superior frontal gyrus | R | 9 | −1 | 50 | 35 | 13.13 |
| Amygdala | R | - | 25 | −7 | −12 | 27.31 |
| Amygdala | L | - | −17 | −7 | −11 | 16.61 |
| Middle temporal gyrus | L | 21 | −67 | −16 | −11 | 18.64 |
| Gender & Syllable > Case* | | | | | | |
| Inferior frontal gyrus / Insula | R | 13 | 37 | 26 | 13 | 15.6 |
| Inferior frontal gyrus / Insula | L | 47 | −47 | 17 | −6 | 13.6 |
| Cuneus◆ | L | 18 | −5 | −89 | 8 | 13.72 |

Main effect of processing load, p < 0.01 corrected for multiple comparisons.

\* Significant at p < 0.05.

† Case > syllable, p < 0.10.

◆ Syllable > gender > case, p < 0.05.

Main Effect of Processing Load

We first examined activity that was differentially modulated by the processing load manipulation. A main effect of processing load was revealed in a distributed network including areas implicated in social or emotional processing, namely the amygdala, medial prefrontal cortex, and superior temporal sulcus, and areas previously implicated in attention, namely the dorsolateral prefrontal cortex (Botvinick et al., 2004; Liu et al., 2004; MacDonald et al., 2000) and parietal cortex (Behrmann, Geng, & Shomstein, 2004; Friedman-Hill et al., 2003; Nobre et al., 1997; Posner & Petersen, 1990). Table 2 shows the regions that were significantly modulated by processing load.

Regions showing reduced activity as processing load increased

Two distinct clusters, encompassing portions of the left and right amygdala respectively, each exhibited a main effect of processing load (Figure 3c and 3d). Pair-wise comparisons revealed that, in each case, faces elicited significantly greater activity during the gender discrimination task than during the case or syllable discrimination tasks (p < 0.05), whereas activity during case and syllable discriminations did not differ significantly (p > 0.10). In contrast, activation within right ACC/medial prefrontal cortex (BA 24) declined in a graded fashion as processing load increased (Gender > Case > Syllable; p < 0.01). A similar graded decline was found in right and left superior temporal gyri (BA 22 and BA 39, respectively; Gender > Case > Syllable; p < 0.01 bilaterally).

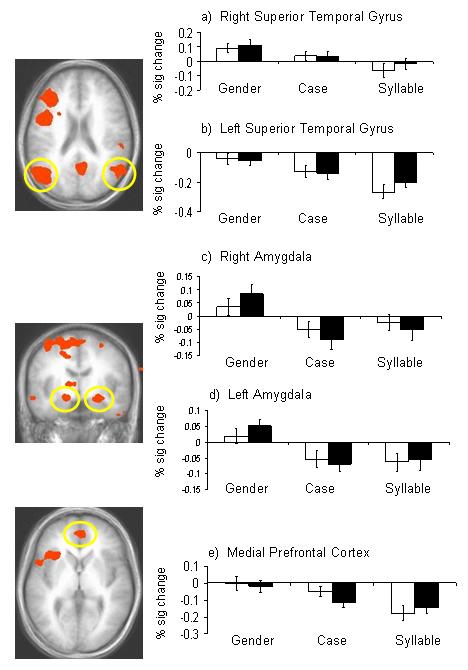

Figure 3.

Images depicting activity derived from the main effect of processing load. These regions were used as functionally defined ROIs, and the percent signal change within each was plotted for each condition: a) right superior temporal gyrus; b) left superior temporal gyrus; c) right amygdala; d) left amygdala; e) medial prefrontal cortex. White bars depict activity for neutral faces and shaded bars represent activity to fearful faces. In each case, the observed BOLD response was significantly reduced at higher levels of processing load.

Regions showing increased activity as processing load increased

The mean percent signal change was examined within functionally defined regions of bilateral parietal cortex and left dorsolateral prefrontal cortex (BA 9). In both right and left parietal regions, case discriminations elicited significantly greater activation than gender discriminations, and syllable discriminations elicited significantly greater activation than case discriminations (gender < case < syllable; p < 0.05). Similarly, dorsolateral prefrontal cortex activation increased with processing load (a trend for gender < case, p < 0.10; significant for case < syllable, p < 0.001; Figure 4). These results indicate that the processing load manipulation effectively increased demands on attention, in line with our hypotheses.

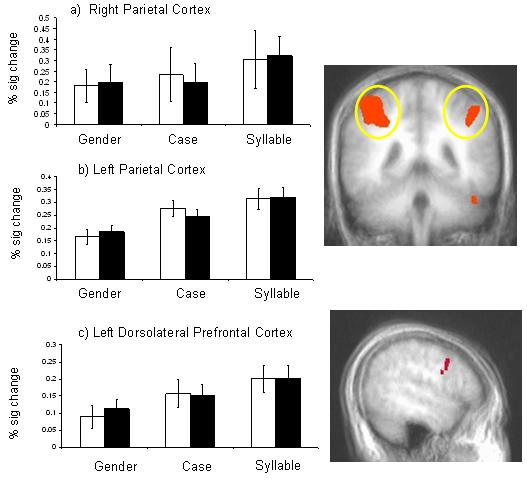

Figure 4.

A main effect of processing load reveals that activity in bilateral parietal cortex (a and b) and left dorsolateral prefrontal cortex (c) increased with task demands. White bars depict activity for neutral faces and shaded bars represent activity to fearful faces.

Main Effect of Emotion

Significant modulation of activity by emotion was seen only in right middle occipital gyrus (BA 19; Table 3). A pairwise comparison revealed significantly greater activity for fearful than neutral faces (p < 0.001).

Table 3.

Main Effect of Emotion and Processing Load by Emotion Interaction

| Anatomical Location | L/R | BA | x | y | z | F-value |
|---|---|---|---|---|---|---|
| Main Effect of Emotion | | | | | | |
| Middle Occipital Gyrus | R | 19 | 46 | −83 | 8 | 26.42 (Fear > Neutral) |
| Processing Load by Emotion Interaction | | | | | | |
| Ventrolateral Prefrontal Cortex | L | 11 | −30 | 39 | −6 | 14.71 |
| Anterior Cingulate | R | 24 | 15 | 27 | 24 | 17.5 |

p < 0.01 corrected for multiple comparisons.

Task by Emotion Interaction

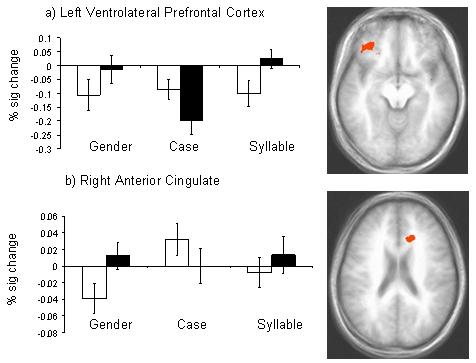

A significant Processing Load by Emotion interaction was revealed in left ventrolateral prefrontal cortex (BA 11) and right anterior cingulate cortex (BA24; p < 0.001; Figure 5). To delineate the nature of this interaction, we conducted a series of paired t-tests. Within the left ventrolateral prefrontal cortex, paired t-tests revealed greater activity to fearful faces than neutral faces during gender and syllable discriminations (p < 0.01), but greater activity to neutral than fearful faces during case discriminations (p < 0.01).

Figure 5.

A significant processing load by emotion interaction was observed in left ventrolateral prefrontal cortex and right anterior cingulate cortex. White bars depict activity for neutral faces and shaded bars represent activity to fearful faces.

Within the right anterior cingulate cortex, paired t-tests revealed significantly greater activity to fearful than neutral faces during gender discriminations (p < 0.01) and a trend in the same direction during syllable discriminations (p = 0.06). During case discriminations, activity was significantly greater to neutral than fearful faces (p < 0.05). Figure 5 depicts the areas showing a processing load by emotion interaction and plots percent signal change to reveal the nature of the interaction.
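The follow-up tests used throughout these results (pair-wise comparisons collapsed across emotion for the main effect of processing load, fearful versus neutral collapsed across tasks for the main effect of emotion, and fearful versus neutral within each task for the interaction) can be sketched in plain Python. The data layout, in which `psc[subject][(task, emotion)]` holds one cluster's mean percent signal change, and the function names are hypothetical.

```python
import math
from itertools import combinations
from statistics import mean, stdev

TASKS = ("gender", "case", "syllable")
EMOTIONS = ("fear", "neutral")


def paired_t(a, b):
    """Paired t statistic for matched per-subject values (df = n - 1)."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))


def load_comparisons(psc):
    """Main effect of load: collapse across emotion, then compare each pair of tasks."""
    collapsed = {t: [mean(s[(t, e)] for e in EMOTIONS) for s in psc] for t in TASKS}
    return {(t1, t2): paired_t(collapsed[t1], collapsed[t2])
            for t1, t2 in combinations(TASKS, 2)}


def emotion_main(psc):
    """Main effect of emotion: collapse across tasks, then fearful versus neutral."""
    fear = [mean(s[(t, "fear")] for t in TASKS) for s in psc]
    neutral = [mean(s[(t, "neutral")] for t in TASKS) for s in psc]
    return paired_t(fear, neutral)


def emotion_within_task(psc):
    """Interaction follow-up: fearful versus neutral faces within each task."""
    return {t: paired_t([s[(t, "fear")] for s in psc], [s[(t, "neutral")] for s in psc])
            for t in TASKS}
```

`combinations(TASKS, 2)` yields the three contrasts in the order used in the Methods (gender versus case, gender versus syllable, case versus syllable); a positive t means the first-named condition had the higher mean.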

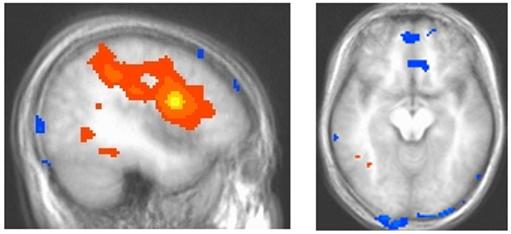

Functional Connectivity

Functional connectivity, as measured here, indexes the correlation between activity in a seed region or voxel and activity throughout the rest of the brain. Because the analysis examines correlated activity across the entire task irrespective of condition, it provides a test, complementary to the condition-based ANOVA, of whether regions implicated in cognitive control covary with regions implicated in emotional processing. Based on data implicating the left dorsolateral prefrontal cortex in attentional selection or “cognitive control” (Bishop et al., 2004; Botvinick, Cohen, & Carter, 2004; Liu et al., 2004; MacDonald et al., 2000), we chose the voxel with peak intensity within this region as our “seed.” Activity in left dorsolateral prefrontal cortex was positively correlated with a distributed network of activity in ventrolateral prefrontal, temporal, and parietal cortices, and negatively correlated with activity in ventromedial prefrontal cortex (Figure 6 and Table 4).

Figure 6.

Connectivity analysis shows the “seed” voxel within dorsolateral prefrontal cortex (yellow) and correlated activity extending to parietal cortex, superior temporal regions, occipital cortex and ventromedial prefrontal cortex. Areas in shades of orange and yellow correlate positively with left dorsolateral prefrontal cortex activity whereas regions in blue (ventromedial prefrontal cortex) correlate negatively with dorsolateral prefrontal cortex responding.

Table 4.

Connectivity Analyses

| Anatomical Location | L/R | BA | x | y | z | t-value* |
|---|---|---|---|---|---|---|
| Regions Showing Positive Connectivity with Left Dorsolateral Prefrontal Cortex | | | | | | |
| Dorsolateral prefrontal cortex extending to parietal cortex | L/R | | −39 | 2 | 22 | 27.63 |
| Inferior frontal gyrus | R | 9 | 52 | 3 | 26 | 7.55 |
| Middle frontal gyrus | R | 9 | 39 | 28 | 34 | 5.82 |
| Insula | R | 13 | 27 | −27 | 25 | 4.38 |
| Fusiform gyrus | L | 19 | −49 | −46 | −19 | 6.06 |
| Fusiform gyrus | L | 19 | −39 | −61 | −8 | 5.26 |
| Culmen | R | - | 43 | −39 | −33 | 5.96 |
| Regions Showing Negative Connectivity with Left Dorsolateral Prefrontal Cortex | | | | | | |
| Medial frontal gyrus | L | 10 | −3 | 51 | −2 | −7.42 |
| ACC | R | 24 | 5 | 24 | −1 | −6.11 |
| Inferior temporal gyrus | R | 20 | 60 | −14 | −27 | −6.58 |
| Cuneus | L | 18 | −3 | −102 | 3 | −5.18 |
| Declive | R | - | 57.6 | −70 | −23 | −5.99 |
| | L | - | −48 | −70 | −26 | −4.76 |

*p < 0.001, uncorrected.

Discussion

This event-related fMRI study investigated the impact of processing load on the neural response to social-emotional stimuli in healthy adults. The significantly enhanced amygdala activity to fearful relative to neutral facial expressions seen during gender judgments was absent in the unattended (linguistic) conditions, which imposed greater processing load. The ANOVA also revealed a significant main effect of processing load within the amygdala: contrary to theories of automaticity, amygdala activity diminished as processing load increased. Similarly, BOLD responses in medial prefrontal cortex and in sensory representation areas of temporal cortex decreased as processing load increased. Conversely, increased processing load was associated with increased activity in dorsolateral prefrontal and parietal cortices. Consistent with this, a connectivity analysis measuring correlated activity across all task conditions revealed robust positive correlations between dorsolateral prefrontal cortex and parietal and ventrolateral prefrontal cortices, and a negative correlation between dorsolateral and ventromedial prefrontal cortex. The data are consistent with the notion that the processing of emotional information, like that of neutral information, is governed by top-down processes involved in selective attention.
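
The main effect of processing load referred to above comes from a repeated-measures ANOVA. As a minimal sketch, assuming one value per participant per load level (for example, amygdala percent signal change averaged over emotion), the F ratio for a one-way repeated-measures design can be computed by partitioning the sums of squares. For a balanced design this F equals the load main-effect F of a load × emotion repeated-measures ANOVA that uses the load × subject interaction as its error term. The function name and data below are hypothetical illustrations, not the study's analysis or results.

```python
from statistics import mean


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of rows, one per participant; each row holds that
    participant's value at every level of the within-subject factor.
    Returns (F, df_effect, df_error).
    """
    n = len(data)     # participants
    k = len(data[0])  # levels of the factor (e.g., gender, case, syllable)
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete participants x levels table, both >= 2")
    grand = mean(v for row in data for v in row)
    level_means = [mean(row[j] for row in data) for j in range(k)]
    subject_means = [mean(row) for row in data]

    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_levels = n * sum((m - grand) ** 2 for m in level_means)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    # The remainder is the level x subject interaction, used as the error term.
    ss_error = ss_total - ss_levels - ss_subjects

    df_effect = k - 1
    df_error = (k - 1) * (n - 1)
    f = (ss_levels / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error


# Hypothetical values for three participants at three load levels.
scores = [
    [3.0, 2.0, 1.0],
    [4.0, 3.0, 1.0],
    [5.0, 3.0, 2.0],
]
f, df1, df2 = rm_anova_oneway(scores)
print(f"F({df1}, {df2}) = {f:.1f}")
```

With these made-up values the script prints `F(2, 4) = 32.0`. A p-value would come from the F distribution with (k − 1, (k − 1)(n − 1)) degrees of freedom; a real voxelwise analysis would also need to address sphericity and multiple comparisons, which this sketch ignores.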

We observed amygdala activity in response to the composite stimuli during gender discriminations, but deactivation to the same stimuli when processing load increased. Theories of automaticity predict that the amygdala responds to emotional stimuli independent of attention, perhaps through a subcortical route (Morris, Ohman, & Dolan, 1998; Morris et al., 2001). However, the current data support the view of Pessoa and colleagues (2002; 2005) that emotional expressions are not a "privileged" category of object immune to the effects of attention. The reduction in neural activity associated with social and emotional stimuli under increased processing load supports the notion that facial stimuli must also compete for neural representation. This pattern was observed not only in the amygdala but also in medial prefrontal cortex and superior temporal sulcus, both of which are implicated in social processing. Regions of medial prefrontal cortex have been implicated in emotional processing (Damasio, Tranel, & Damasio, 1990; Rolls, 1996), perhaps representing reinforcement information provided by the amygdala (Blair, 2004; Kosson et al., 2006). The superior temporal sulcus has been implicated in face processing (Chao et al., 1999; Haxby et al., 2000). Together, our results suggest that the response of the amygdala and medial prefrontal cortex to social-emotional stimuli is subject to top-down control related to attentional selection.

A previous study showed that processing load can be successfully modulated by task demands when participants make case and syllable discriminations of linguistic stimuli (Rees, Frith, & Lavie, 1997). In our study, increased processing load was associated with increased activity in parietal cortex and in a large region of prefrontal cortex extending from dorsolateral prefrontal cortex to parietal cortex. These regions are implicated in selective attention, particularly posterior parietal cortex (Behrmann, Geng, & Shomstein, 2004; Nobre et al., 1997; Nobre et al., 2004; Posner & Petersen, 1990) and dorsolateral prefrontal cortex (Dias et al., 1996; Liu et al., 2004).

In the current study, greater activity to fearful than neutral faces was observed in middle occipital gyrus across conditions, suggesting that some form of emotional processing occurred in all task conditions. In addition, the error data (though not the reaction time data) showed a similar main effect of emotion. However, amygdala activity was reduced at high levels of processing load. One possibility is that a weaker amygdala signal was present at higher levels of processing load but was not detectable in the BOLD response because of susceptibility-related signal drop-out. Another possibility is that the signal went undetected because it was transient. A recent event-related potential study showed that activity evident while attending to emotional facial expressions was also evident during inattention; however, during inattention the response was transient and was extinguished within 220 ms (Holmes, Kiss, & Eimer, 2006). In addition to the restrictions imposed by limited perceptual processing capacity, stimuli may be further selected through executive attention (i.e., goal-directed) mechanisms that augment the strength of target stimuli at the expense of irrelevant ones (Desimone & Duncan, 1995). This raises the possibility that, in the current study, rapid emotional responses may have occurred even at high levels of processing load but were subject to cognitive modulation through executive attention mechanisms. For example, very early fear-differentiating amygdala activity may occur across task conditions via a proposed "automatic" subcortical route (LeDoux, 1998; Morris et al., 1999; Whalen et al., 1998). Although sufficient to influence activity in middle occipital gyrus, this early differentiated amygdala signal might be disrupted by the competing cortical representation of the target stimuli during case and syllable discriminations.
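
The transient-signal possibility raised above can be made concrete with a linear model of the BOLD response, in the spirit of the linear systems approach of Cohen (1997). The sketch below convolves a brief neural event and a longer one with a gamma-variate hemodynamic response and compares the predicted peak amplitudes. It assumes a linear, time-invariant system, a unit-amplitude boxcar of neural activity, and the gamma-variate form (t/(bc))^b · exp(b − t/c) with b = 8.6 and c = 0.547 s (the defaults of AFNI's `GAM` basis function). The 220 ms duration echoes Holmes, Kiss, and Eimer (2006); the 2 s comparison duration is an arbitrary, hypothetical choice.

```python
import math


def gamma_hrf(t, b=8.6, c=0.547):
    """Gamma-variate hemodynamic response, scaled to peak at 1.0 when t = b * c."""
    if t <= 0:
        return 0.0
    return (t / (b * c)) ** b * math.exp(b - t / c)


def predicted_bold_peak(duration_s, dt=0.01, window_s=30.0):
    """Peak of a unit-amplitude boxcar of neural activity convolved with the HRF.

    duration_s: how long the (assumed) neural response lasts, in seconds.
    dt: sampling step in seconds; window_s: length of the simulated time axis.
    """
    n = int(round(window_s / dt))
    n_on = int(round(duration_s / dt))
    hrf = [gamma_hrf(i * dt) for i in range(n)]
    peak = 0.0
    for i in range(n):
        # Discrete convolution: the boxcar equals 1 for samples 0 .. n_on - 1.
        response = sum(hrf[i - j] for j in range(min(n_on, i + 1))) * dt
        peak = max(peak, response)
    return peak


brief = predicted_bold_peak(0.22)     # transient response, about 220 ms
sustained = predicted_bold_peak(2.0)  # hypothetical longer response
print(f"brief: {brief:.3f}, sustained: {sustained:.3f}, ratio: {sustained / brief:.1f}")
```

Under these assumptions the brief event yields a much smaller predicted peak than the longer one, which is one way a genuine but transient amygdala response could fall below the detection threshold; the sketch does not show that this is what happened in the present data.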
A recent MEG study suggests that the amygdala distinguishes fearful from neutral faces by 30 ms after stimulus onset (Luo et al., in press). At least some animal data support the existence of such conduction speeds (e.g., Quirk et al., 1995). It should be noted, however, that other work using intracranial recordings in humans and more complex pictorial stimuli (scenes rather than faces) has suggested that amygdala activity distinguishes pleasant from unpleasant images between 50 and 150 ms (Oya et al., 2002). Additional studies using methods with high temporal resolution will help address these questions concerning the speed of amygdala activation and its susceptibility to regulation.

A significant processing load by emotion interaction was observed in anterior cingulate cortex and ventrolateral prefrontal cortex. Both regions showed increased activity to fearful stimuli during the attended condition (gender discrimination) and the high processing load condition (syllable discrimination), but increased activity to neutral relative to fearful faces during the low processing load condition (case discrimination). This effect is difficult to interpret with respect to valence, given that the amygdala, the emotion-processing structure often considered most robust to attentional manipulations (e.g., Anderson et al., 2003), was not significantly active at higher levels of processing load, and given that activity in these regions was greater to neutral than fearful faces at the low level of processing load in the unattended conditions. Notably, the two regions identified by the interaction are frequently implicated in response conflict and cognitive control (see Blair, 2004; Botvinick et al., 2004; Casey et al., 2001; Luo et al., 2006). However, it is unclear why these two regions should show increased activity to fearful relative to neutral expressions in the gender and syllable discrimination conditions but the inverse in the case discrimination condition.

According to current models of conflict monitoring, cognitive ("attentional") control is enacted in prefrontal cortex, particularly left dorsolateral prefrontal cortex (MacDonald et al., 2000), in situations of increased conflict (Botvinick, Cohen, & Carter, 2004) and in the presence of threatening distracters (Bishop et al., 2004). Within this framework, regulation of the emotional response to facial stimuli may have occurred through at least two pathways. First, under conditions of greater conflict, regions of prefrontal cortex, particularly medial prefrontal cortex, may eliminate the competitive advantage afforded to emotional stimuli through direct modulatory connections with the amygdala. This functional relationship has been proposed on the basis of animal data (Quirk & Gehlert, 2003) and imaging studies (Pezawas et al., 2005). Second, regions of prefrontal cortex, particularly lateral prefrontal cortex, may manipulate representations of task-relevant stimuli in temporal regions at the expense of distracters (Botvinick, Cohen, & Carter, 2004). For example, modulation of the strength of auditory representations is thought to result from regulatory projections from dorsolateral prefrontal cortex to auditory processing regions in temporal cortex (Barbas et al., 2005; Chao & Knight, 1997). A similar mechanism may exist for object and face representation areas of the temporal lobe, either through direct projections between these regions and prefrontal cortex or indirectly through parietal cortex. Thus, in addition to interactions between amygdala and prefrontal cortex, emotional regulation may also be achieved through mechanisms of attentional selection that manipulate the strength of object representations (Mitchell et al., 2006). Both processes may have been active in the current study. To further investigate the potential contribution of cognitive control over object representations, we conducted a connectivity analysis.
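
The second pathway, in which lateral prefrontal cortex strengthens task-relevant representations at the expense of distracters, is often described as biased competition (Desimone & Duncan, 1995). The toy sketch below assumes a divisive-normalization form of that competition, an illustrative choice rather than a model fitted in this study: each representation's output is its gain-weighted input divided by the pooled activity of all competitors, so raising the top-down gain on the word representation lowers the fearful face's share even when the face has a stronger bottom-up drive. The input values, gains, and the constant `sigma` are hypothetical.

```python
def normalized_responses(drives, gains, sigma=1.0):
    """Divisive-normalization toy model of competing stimulus representations.

    drives: bottom-up input strength of each representation.
    gains: top-down multiplicative bias applied to each representation.
    sigma: semi-saturation constant that keeps the denominator positive.
    """
    if len(drives) != len(gains):
        raise ValueError("drives and gains must have the same length")
    weighted = [g * d for g, d in zip(gains, drives)]
    pool = sigma + sum(weighted)
    return [w / pool for w in weighted]


# Hypothetical inputs: index 0 = word (task target), index 1 = fearful face.
drives = [1.0, 1.5]  # the face is given a stronger bottom-up drive
unbiased = normalized_responses(drives, gains=[1.0, 1.0])
biased = normalized_responses(drives, gains=[3.0, 1.0])  # top-down bias toward the word
print(f"face response: unbiased {unbiased[1]:.3f}, biased {biased[1]:.3f}")
```

With these made-up values the face response falls from 1.5/3.5 to 1.5/5.5 when the word receives the top-down bias, although the face's own input is unchanged.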

Given considerable data implicating dorsolateral prefrontal cortex in enacting attentional control over competing stimulus representations (Botvinick, Cohen, & Carter, 2004; Liu et al., 2004; MacDonald et al., 2000), we selected the area of peak intensity within this region as our "seed" for the connectivity analysis. In line with our predictions, dorsolateral prefrontal cortex activity was positively correlated with activity in ventrolateral prefrontal cortex and superior parietal regions, and negatively correlated with activity in ventromedial prefrontal cortex. Interestingly, this network corresponds closely to regions observed in studies of emotional regulation in which participants are asked to augment or reduce their emotional response to positive and negative pictorial stimuli (Ochsner & Gross, 2005). We suggest that, as in our study, emotional regulation may be facilitated by activity in prefrontal and parietal regions that manipulates the salience of task-relevant or "non-emotional" stimulus features in temporal cortex at the expense of representations of emotional information.

An important caveat concerns the nature of the stimuli used in the present task. Although the linguistic and facial stimuli were spatially overlapping, processing load may be confounded with the spatial extent of attentional focus: the attended region was smaller in the word-related tasks than in the gender discrimination task. Thus, some critical emotional cues, particularly the mouth, might have fallen outside the focus of attention. As a consequence, reduced amygdala activation in the linguistic conditions might be due not to higher processing load but to a narrower spatial focus of attention. In the study by Anderson et al. (2003), in which amygdala responses to attended and unattended fearful faces were equivalent, the spatial extent of the overlapping objects was kept constant. However, the main conclusions of our study are not affected by this potential confound: activity in neural regions associated with emotional or social processing (medial PFC and superior temporal gyrus) diminished with increasing processing load.

This event-related fMRI study provides further evidence for the notion that a significant amygdala BOLD response to behaviorally peripheral social or emotional stimuli requires attention. Amygdala activity, like activity in medial prefrontal cortex and superior temporal sulcus, diminished when processing load increased. In contrast, activity in attention-related regions increased with processing load. Collectively, the data suggest that the processing of emotional information, like that of neutral information, is subject to top-down control. The results also have implications for models of emotional regulation (Ochsner & Gross, 2005). We suggest that regions including the dorsolateral prefrontal cortex and parietal cortex may contribute to emotional regulation by manipulating the salience of task-relevant stimuli at the expense of emotional stimuli. This function could operate in parallel with the regulatory influence that medial prefrontal cortex is thought to exert on the amygdala (Quirk & Gehlert, 2003; Pezawas et al., 2005). Future work on the impact of emotional stimuli on goal-directed behavior will help establish the relative importance of each mechanism and the parameters that govern it.

Acknowledgements

This research was supported by the Intramural Research Program of the NIH:NIMH.

The authors would like to extend special thanks to Gang Chen and Ziad Saad for assistance with the fMRI connectivity analysis.


References

- Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JDE. Neural correlates of the automatic processing of threat facial signals. The Journal of Neuroscience. 2003;23(13):5627–5633. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- Barbas H, Medalla M, Alade O, Suski J, Zikopoulos B, Lera P. Relationship of prefrontal connections to inhibitory systems in superior temporal areas in the rhesus monkey. Cereb Cortex. 2005;15(9):1356–1370. doi: 10.1093/cercor/bhi018.

- Behrmann M, Geng JJ, Shomstein S. Parietal cortex and attention. Curr Opin Neurobiol. 2004;14(2):212–217. doi: 10.1016/j.conb.2004.03.012.

- Bishop S, Duncan J, Brett M, Lawrence AD. Prefrontal cortical function and anxiety: controlling attention to threat-related stimuli. Nat Neurosci. 2004;7(2):184–188. doi: 10.1038/nn1173.

- Blair RJR. The roles of orbitofrontal cortex in the modulation of antisocial behavior. Brain and Cognition. 2004;55(1):198–208. doi: 10.1016/S0278-2626(03)00276-8.

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn Sci. 2004;8(12):539–546. doi: 10.1016/j.tics.2004.10.003.

- Bradley MM, Lang PJ. Affective norms for English words (ANEW): Stimuli, instruction manual and affective ratings. Technical report C-1. The Center for Research in Psychophysiology, University of Florida; Gainesville, FL: 1999.

- Casey BJ, Forman SD, Franzen P, Berkowitz A, Braver TS, Nystrom LE, Thomas KM, Noll DC. Sensitivity of prefrontal cortex to changes in target probability: A functional MRI study. Human Brain Mapping. 2001;13:26–33. doi: 10.1002/hbm.1022.

- Chao LL, Knight RT. Prefrontal deficits in attention and inhibitory control with aging. Cereb Cortex. 1997;7(1):63–69. doi: 10.1093/cercor/7.1.63.

- Chao LL, Martin A, Haxby JV. Are face-responsive regions selective only for faces? Neuroreport. 1999;10:2945–2950. doi: 10.1097/00001756-199909290-00013.

- Cohen MS. Parametric analysis of fMRI data using linear systems methods. Neuroimage. 1997;6(2):93–103. doi: 10.1006/nimg.1997.0278.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29(3):162–173. doi: 10.1006/cbmr.1996.0014.

- Damasio AR, Tranel D, Damasio H. Individuals with sociopathic behaviour caused by frontal damage fail to respond autonomically to social stimuli. Behavioural Brain Research. 1990;41:81–94. doi: 10.1016/0166-4328(90)90144-4.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0.

- Dolan RJ, Vuilleumier P. Amygdala automaticity in emotional processing. Ann N Y Acad Sci. 2003;985:348–355. doi: 10.1111/j.1749-6632.2003.tb07093.x.

- Friedman-Hill SR, Robertson LC, Desimone R, Ungerleider LG. Posterior parietal cortex and the filtering of distractors. Proc Natl Acad Sci U S A. 2003;100(7):4263–4268. doi: 10.1073/pnas.0730772100.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Holmes A, Kiss M, Eimer M. Attention modulates the processing of emotional expression triggered by foveal faces. Neurosci Lett. 2006;394(1):48–52. doi: 10.1016/j.neulet.2005.10.002.

- Kosson DS, Budhani S, Nakic M, Chen G, Saad ZS, Vythilingam M, et al. The role of the amygdala and rostral anterior cingulate in encoding expected outcomes during learning. NeuroImage. 2006;29(4):1161–1172. doi: 10.1016/j.neuroimage.2005.07.060.

- Lavie N. Perceptual load as a necessary condition for selective attention. J Exp Psychol Hum Percept Perform. 1995;21(3):451–468. doi: 10.1037//0096-1523.21.3.451.

- Lavie N. Distracted and confused?: selective attention under load. Trends Cogn Sci. 2005;9(2):75–82. doi: 10.1016/j.tics.2004.12.004.

- Lavie N, Hirst A, de Fockert JW, Viding E. Load theory of selective attention and cognitive control. J Exp Psychol Gen. 2004;133(3):339–354. doi: 10.1037/0096-3445.133.3.339.

- LeDoux J. The Emotional Brain. Weidenfeld & Nicolson; New York: 1998.

- Liu X, Banich MT, Jacobson BL, Tanabe JL. Common and distinct neural substrates of attentional control in an integrated Simon and spatial Stroop task as assessed by event-related fMRI. Neuroimage. 2004;22(3):1097–1106. doi: 10.1016/j.neuroimage.2004.02.033.

- Lundqvist D, Flykt A, Ohman A. The Averaged Karolinska Directed Emotional Faces. Stockholm: 1998.

- Luo Q, Nakic M, Wheatley T, Richell R, Martin A, Blair RJR. The neural basis of implicit moral attitude: An IAT study using event-related fMRI. NeuroImage. 2006;30(4):1449–1457. doi: 10.1016/j.neuroimage.2005.11.005.

- Luo Q, Holroyd T, Jones M, Hendler T, Blair RJR. Neural dynamics for facial threat processing as revealed by gamma band synchronization using MEG. NeuroImage. 2006; in press. doi: 10.1016/j.neuroimage.2006.09.023.

- MacDonald AW 3rd, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288(5472):1835–1838. doi: 10.1126/science.288.5472.1835.

- MacLeod CM. Half a century of research on the Stroop effect: an integrative review. Psychol Bull. 1991;109(2):163–203. doi: 10.1037/0033-2909.109.2.163.

- Mitchell DGV, Richell RA, Leonard A, Blair RJR. Emotion at the expense of cognition: Psychopathic individuals outperform controls on an operant response task. Journal of Abnormal Psychology. In press. doi: 10.1037/0021-843X.115.3.559.

- Morris JS, DeGelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124(Pt 6):1241–1252. doi: 10.1093/brain/124.6.1241.

- Morris JS, Ohman A, Dolan RJ. A subcortical pathway to the right amygdala mediating "unseen" fear. Proceedings of the National Academy of Sciences USA. 1999;96:1680–1685. doi: 10.1073/pnas.96.4.1680.

- Morris JS, Ohman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393:467–470. doi: 10.1038/30976.

- Nobre AC, Coull JT, Maquet P, Frith CD, Vandenberghe R, Mesulam MM. Orienting attention to locations in perceptual versus mental representations. J Cogn Neurosci. 2004;16(3):363–373. doi: 10.1162/089892904322926700.

- Nobre AC, Sebestyen GN, Gitelman DR, Mesulam MM, Frackowiak RS, Frith CD. Functional localization of the system for visuospatial attention using positron emission tomography. Brain. 1997;120(Pt 3):515–533. doi: 10.1093/brain/120.3.515.

- Ochsner KN, Gross JJ. The cognitive control of emotion. Trends Cogn Sci. 2005;9(5):242–249. doi: 10.1016/j.tics.2005.03.010.

- Oya H, Kawasaki H, Howard MA III, Adolphs R. Electrophysiological responses in the human amygdala discriminate emotion categories of complex visual stimuli. Journal of Neuroscience. 2002;22:9502–9512. doi: 10.1523/JNEUROSCI.22-21-09502.2002.

- Pegna AJ, Khateb A, Lazeyras F, Seghier ML. Discriminating emotional faces without primary visual cortices involves the right amygdala. Nat Neurosci. 2004;8(1):24–25. doi: 10.1038/nn1364.

- Pessoa L. To what extent are emotional visual stimuli processed without attention and awareness? Curr Opin Neurobiol. 2005;15(2):188–196. doi: 10.1016/j.conb.2005.03.002.

- Pessoa L, Japee S, Sturman D, Ungerleider LG. Target visibility and visual awareness modulate amygdala responses to fearful faces. Cereb Cortex. 2006;16(3):366–375. doi: 10.1093/cercor/bhi115.

- Pessoa L, Japee S, Ungerleider LG. Visual awareness and the detection of fearful faces. Emotion. 2005;5(2):243–247. doi: 10.1037/1528-3542.5.2.243.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proc Natl Acad Sci U S A. 2002;99(17):11458–11463. doi: 10.1073/pnas.172403899.

- Pessoa L, Padmala S, Morland T. Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. NeuroImage. 2005;28(1):249–255. doi: 10.1016/j.neuroimage.2005.05.048.

- Pezawas L, Meyer-Lindenberg A, Drabant EM, Verchinski BA, Munoz KE, Kolachana BS, et al. 5-HTTLPR polymorphism impacts human cingulate-amygdala interactions: a genetic susceptibility mechanism for depression. Nat Neurosci. 2005;8(6):828–834. doi: 10.1038/nn1463.

- Posner MI, Petersen SE. The attention system of the human brain. Annu Rev Neurosci. 1990;13:25–42. doi: 10.1146/annurev.ne.13.030190.000325.

- Quirk GJ, Gehlert DR. Inhibition of the amygdala: key to pathological states? Ann N Y Acad Sci. 2003;985:263–272. doi: 10.1111/j.1749-6632.2003.tb07087.x.

- Quirk GJ, Repa C, LeDoux JE. Fear conditioning enhances short-latency auditory responses of lateral amygdala neurons: parallel recordings in the freely behaving rat. Neuron. 1995;15:1029–1039. doi: 10.1016/0896-6273(95)90092-6.

- Rees G, Frith CD, Lavie N. Modulating irrelevant motion perception by varying attentional load in an unrelated task. Science. 1997;278:1616–1619. doi: 10.1126/science.278.5343.1616.

- Rolls ET. The orbitofrontal cortex. Philos Trans R Soc Lond B Biol Sci. 1996;351:1433–1443. doi: 10.1098/rstb.1996.0128.

- Sander D, Grandjean D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, et al. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. NeuroImage. 2005;28(4):848–858. doi: 10.1016/j.neuroimage.2005.06.023.

- Schneider W, Eschman A, Zuccolotto A. E-Prime User's Guide Version 1. Psychology Software Tools Inc.; Pittsburgh: 2002.

- Talairach J, Tournoux P. Co-planar Stereotaxic Atlas of the Human Brain. Thieme; Stuttgart: 1988.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci. 2005;9(12):585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30(3):829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci. 2004;7(11):1271–1278. doi: 10.1038/nn1341.

- Ward D. Simultaneous Inference for fMRI Data. 2000. Available from: http://afni.nimh.nih.gov/pub/dist/doc/manuals/AlphaSim.pdf.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. The Journal of Neuroscience. 1998;18:411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Williams MA, McGlone F, Abbott DF, Mattingley JB. Differential amygdala responses to happy and fearful facial expressions depend on selective attention. NeuroImage. 2005;24(2):417–425. doi: 10.1016/j.neuroimage.2004.08.017.