Abstract

Objective

To determine faculty perceptions about an evidence-based peer teaching assessment system.

Methods

Faculty members who served as instructors and assessors completed questionnaires after year 1 (2002-2003) and year 4 (2005-2006) of the peer assessment program. Factors were evaluated using a Likert scale (1 = strongly disagree; 5 = strongly agree) and included logistics, time, fostering quality teaching, diversifying teaching portfolios, faculty mentoring, and value of structured discussion of teaching among faculty members. Mean responses from instructors and assessors were compared using Student t tests.

Results

Twenty-seven assessors and 52 instructors completed survey instruments. Assessors and instructors had positive perceptions of the process as indicated by the following mean (SD) scores: logistics = 4.0 (1.0), time = 3.6 (1.1), quality teaching = 4.0 (0.9), diversifying teaching portfolios = 3.6 (1.2), faculty mentoring = 3.9 (0.9), and structured discussion of teaching = 4.2 (0.8). Assessors agreed more strongly than instructors that the feedback provided would improve the quality of lecturing (4.5 vs. 3.9, p < 0.01) and course materials (4.3 vs. 3.6, p < 0.01).

Conclusion

This peer assessment process was well-accepted and provided a positive experience for the participants. Faculty members perceived that the quality of their teaching would improve and enjoyed the opportunity to have structured discussions about teaching.

Keywords: teaching, assessment, peer assessment, faculty development, academic training

INTRODUCTION

Within the university community, faculty members and administrators are responsible for assessing teaching, both to ensure that it meets the requirements of governing boards and accrediting agencies and to improve its quality. The Accreditation Council for Pharmacy Education includes a specific guideline about peer teaching assessment (guideline 26.2).1 Accordingly, peer assessment of teaching has been an integral component of regular faculty evaluations for many years.

Peer teaching assessments are encouraged at many institutions but, to the best of our knowledge, are not based on validated teaching models that include both objective and subjective evidence of teaching quality as well as faculty mentoring.2,3 In 2002, the assessment committee at the University of Colorado at Denver and Health Sciences Center (UCDHSC) School of Pharmacy developed a structured peer assessment process based on 3 validated teaching models: Mastery Teaching, Clinical Supervision, and Cognitive Coaching.4-7 The goal of this process was to develop an evidence-based peer teaching assessment system that supported faculty mentoring, fostered quality teaching, and diversified teaching portfolios.

Validated Teaching Models

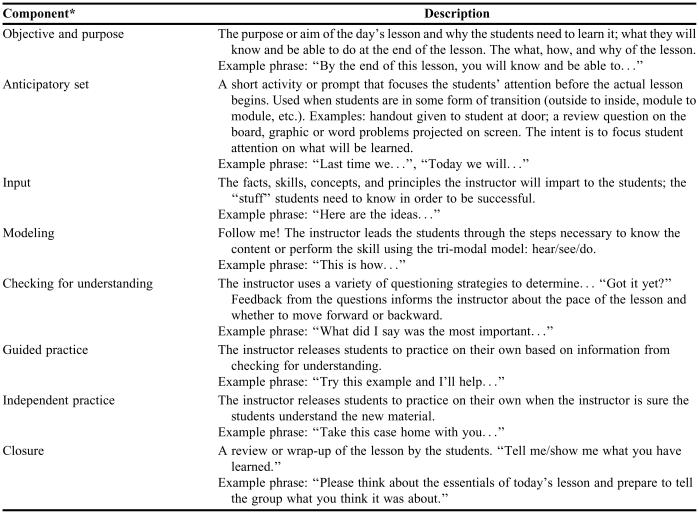

Madeline Hunter developed the mastery teaching model, which holds that effective teachers follow a deliberate methodology when planning and presenting a lesson.4 Specifically, a properly designed and taught lesson contains some or all of the following 8 elements that enhance and maximize learning: (1) objective and purpose, (2) anticipatory set, (3) input, (4) modeling, (5) checking for understanding, (6) guided practice, (7) independent practice, and (8) closure (Table 1). The model follows the philosophy of “tell them what you are going to say, say it, then tell them what you said,” delivering information within a lesson structure that encourages students to attain the stated outcomes or objectives deemed relevant for mastery.

Table 1.

Description of Components of the Hunter Mastery Teaching Model4

*Components do not have to occur in a particular order, nor do all components have to be present, for a lesson to be effective.

The clinical supervision model for developing the instructional skills of teachers was introduced by Robert Goldhammer in 1969 and then revised by Morris Cogan in 1973.5,6 The basic format of clinical supervision includes 3 parts: (1) a conference with the instructor to preview objectives and the lesson plan, (2) direct observation of instruction, and (3) a follow-up conference with the instructor for feedback on strengths and areas for potential improvement. This model is designed to facilitate teachers' professional growth by systematically helping them build on strengths while eliminating counterproductive behaviors, and it acknowledges that each teacher and teaching situation is different. This model complements Hunter's mastery teaching model by providing a framework for discussing and observing the implementation of the 8 elements.

Lastly, cognitive coaching is based on the idea that metacognition (being aware of one's own thinking processes) fosters independence in learning.7 The 3 stages of metacognition important to cognitive coaching are (1) developing a plan of action (eg, “What should I do first?”), (2) maintaining and monitoring action (eg, “How am I doing?”), and (3) evaluating action (eg, “How well did I do?”). These stages encourage self-reflection and self-management before, during, and after an action. Coaches (ie, assessors) act as facilitators, using questioning strategies to help the person being coached (ie, the instructor) reflect on performance and generate new ideas and behaviors for the future. Questions are open-ended, encourage reflective thinking about the instructor's own behavior, and promote improved decision making. The coach is not an expert providing solutions but uses dialogue to help instructors become aware of their thinking and learning. This model was incorporated in both the pre- and post-observation meetings between the assessors and the instructor through trigger questions provided for discussion. Cognitive coaching complements clinical supervision because it supports dialogue between peers, improving the assessor's and instructor's ability to engage in conversations about teaching.

Peer Assessment Process

A peer assessment tool (available on request from the author) was developed based on these 3 models and designed for use in various teaching modalities (eg, didactic courses, professional skills laboratories). At the beginning of the peer assessment process, instructors were provided with the assessment instrument (with a glossary of terms) along with other resources, including Bloom's Taxonomy, Mager's Tips on Learning Objectives, Miller's “Framework for Assessment,” and Diamond's Syllabus Checklist.8-11 These additional resources were provided to support faculty members in writing syllabi and learning objectives and to help them develop activities at the appropriate level for the lesson taught (eg, demonstration/modeling for a skills-based course; input and knowledge for a didactic course). All of the materials served as a guide to the peer assessment process for both assessors and instructors.

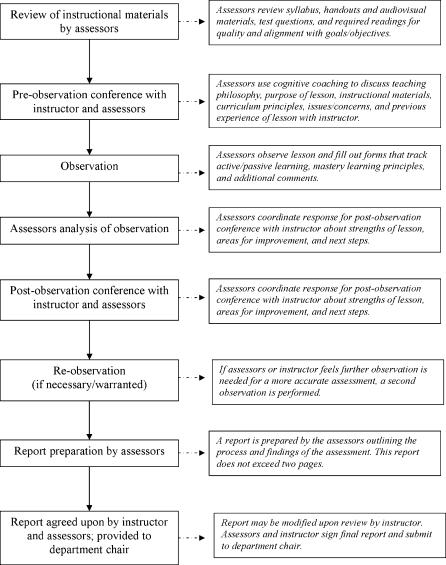

The peer assessment instrument integrated clinical supervision and cognitive coaching to provide the framework for the 7 steps of the peer teaching assessment process: instructional materials review, pre-observation conference with the instructor, observation of the instructor, assessor conference, post-observation conference with the instructor, repeat observation if necessary, and summary report. This process is described in detail in Figure 1. The pre- and post-observation conferences typically took 1 hour each. The observation step lasted 1 to 2 hours, during which the assessors used the instrument to record their observations (subjective and objective) based on the Hunter mastery teaching model. The information collected during the observation step served as the basis for the co-assessors' conference and the post-observation conference with the instructor. The formative summary report was prepared using all information collected in steps 1 through 6. Because the peer assessment did not focus on content, instructors were given the opportunity to request a content expert; however, this opportunity was rarely used. All faculty members were required to undergo peer teaching assessments in prepromotion years 1, 3, and 6, and every 5 years following promotion. Assessments could be completed more often at the request of the instructor or department chair.

Figure 1.

Description of steps involved in the peer teaching assessment process.

All assessors were required to participate in training sessions. For program year 1, all peer assessors were trained by the assessment specialist, and 2 assessors were assigned to each instructor who was assessed. In program years 2 through 4, faculty members who were new to the assessment process were trained by faculty members with peer assessment experience. The training took approximately 1 to 2 hours and included a review of the various assessment models and peer assessment materials. Also, because time concerns arose when both assessors served in equal capacity (as in year 1), peer assessors were subsequently designated as either the primary or the secondary assessor for each assessment. Primary assessors were responsible for leading the process: handling all logistics, preparing written materials including the summary report, and training new secondary assessors. Secondary assessors participated in all activities in a supporting rather than a leading role, with a reduced workload. Assessors came from both pharmacy practice and pharmaceutical sciences and were assigned for a 1-year term by the Associate Dean of Academic Affairs in conjunction with each department chair. This mandatory responsibility was considered part of the assessor's professional service and was a component of the annual evaluation portfolio. In most instances, an assessor served as a secondary assessor for 1 semester and as a primary assessor the next. Although all assessors were also instructors, no assessor assessed his or her own lesson.

The primary objective of the current study was to determine how this innovative and evidence-based peer teaching assessment process was perceived among faculty members serving as assessors and instructors.

METHODS

A 14-item survey instrument was developed to evaluate 6 factors of the peer assessment process: logistics, time, fostering teaching quality, diversifying teaching portfolios, faculty mentoring, and value of a structured discussion of teaching. These factors were included as a result of recommendations from the assessment specialist and addressed faculty members' concerns as well as potential benefits of the peer assessment process. The survey instrument used a Likert scale from 1 = strongly disagree to 5 = strongly agree to measure faculty perceptions. A score above 3 indicated a positive perception; a score of 3 was neutral; and a score below 3 indicated a negative perception. Additionally, faculty members were encouraged to write constructive comments about the peer assessment process.
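The interpretation rule above can be expressed as a small function. This is a minimal sketch, assuming the rule is applied to mean scores as the Results section does; the function name `classify` and the use of Python are illustrative choices, and the factor means are the pooled values reported in the Results, not new data.

```python
"""Sketch: map 1-5 Likert scores to the perception categories described in the Methods."""

NEUTRAL = 3.0  # midpoint of the 1 (strongly disagree) to 5 (strongly agree) scale

# Pooled mean scores reported in the Results (assessors and instructors, both survey rounds)
FACTOR_MEANS = {
    "logistics": 4.0,
    "time": 3.6,
    "quality teaching": 4.0,
    "diversifying teaching portfolios": 3.6,
    "faculty mentoring": 3.9,
    "structured discussion of teaching": 4.2,
}


def classify(score: float) -> str:
    """Return 'positive' (above 3), 'neutral' (exactly 3), or 'negative' (below 3)."""
    if not 1.0 <= score <= 5.0:
        raise ValueError(f"score {score} is outside the 1-5 Likert range")
    if score > NEUTRAL:
        return "positive"
    if score < NEUTRAL:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for factor, mean in FACTOR_MEANS.items():
        print(f"{factor}: {mean:.1f} -> {classify(mean)}")
```

Run as a script, every reported factor mean maps to "positive", consistent with the favorable acceptance described in the Results.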

The survey instruments were administered anonymously to all instructors and assessors who had participated in 19 peer assessments at the end of academic year 2002-2003 (following year 1 of the peer assessment process) and again to all instructors and assessors who participated in 38 assessments at the end of academic year 2005-2006 (following year 4 of the peer assessment process). Programmatic changes after assessments in year 1 included implementation of primary and secondary assessor roles as discussed above. Other changes included minor revisions of the forms to facilitate their use (eg, dropdown boxes).

Instructors and assessors surveyed in 2002-2003 who were also involved in the process in 2005-2006 completed another survey instrument in 2005-2006. Mean responses from instructors and assessors were compared using Student t tests. Both department chairs were also asked to provide feedback on the program as end users of the reports it generated. This study was approved by the Colorado Multiple Institutional Review Board at UCDHSC.
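For readers less familiar with the comparison, the Student (pooled-variance) two-sample t test divides the difference between group means by a pooled standard error, t = (x̄_A − x̄_B) / (s_p √(1/n_A + 1/n_B)), with n_A + n_B − 2 degrees of freedom. The sketch below assumes assessor and instructor responses to an item were treated as independent samples with equal variances; the function name `student_t` and the example responses are hypothetical, not the study's data. In practice, `scipy.stats.ttest_ind` (whose default `equal_var=True` gives the same statistic) also returns the p value.

```python
"""Sketch of a Student (pooled-variance) two-sample t statistic."""
import math
from statistics import mean, variance


def student_t(group_a: list[float], group_b: list[float]) -> tuple[float, int]:
    """Return (t, degrees of freedom) for two independent samples.

    Assumes equal population variances, as in the classic Student t test.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least 2 responses")
    # Pooled variance: weighted average of the two sample variances (n - 1 denominators)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, n_a + n_b - 2


if __name__ == "__main__":
    # Hypothetical Likert responses to a single item (not data from this study)
    assessors = [5, 4, 5, 4, 5]
    instructors = [4, 3, 4, 4, 3]
    t, df = student_t(assessors, instructors)
    print(f"t = {t:.3f}, df = {df}")  # t = 2.887, df = 8
```

The resulting t is then compared with the t distribution on the stated degrees of freedom to obtain a p value such as the p < 0.01 thresholds reported in the Results.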

RESULTS

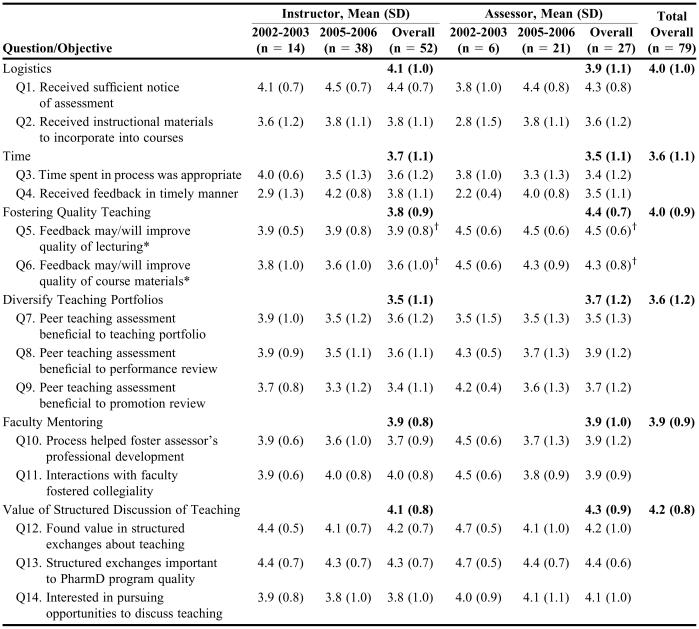

Survey instruments were completed by 6 of 6 assessors and 14 of 19 instructors in academic year 2002-2003 and by 21 of 21 assessors and 38 of 38 instructors in academic year 2005-2006. Quantitative results (means and standard deviations) from the assessor and instructor survey instruments are presented in Table 2. Mean (SD) Likert scale responses from assessors and instructors across both rounds of the survey indicated favorable acceptance: logistics = 4.0 (1.0), time = 3.6 (1.1), quality teaching = 4.0 (0.9), diversifying teaching portfolios = 3.6 (1.2), faculty mentoring = 3.9 (0.9), and structured discussion of teaching = 4.2 (0.8). Responses to only 2 of the 14 questions differed significantly between assessors and instructors: compared with instructors, assessors agreed more strongly that the feedback provided would improve the quality of lecturing (Question 5: 4.5 vs. 3.9, p < 0.01) and course materials (Question 6: 4.3 vs. 3.6, p < 0.01).
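The per-year counts above reconcile with the totals given in the Abstract. Because faculty members involved in both years were surveyed twice, these totals count completed survey instruments rather than necessarily distinct individuals.

$$
\begin{aligned}
\text{Assessor instruments} &= 6 + 21 = 27 \quad (27/27 = 100\%)\\
\text{Instructor instruments} &= 14 + 38 = 52 \quad (52/57 \approx 91.2\%)\\
\text{Year 1 instructor response rate} &= 14/19 \approx 73.7\%
\end{aligned}
$$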

Table 2.

Instructor and Assessor Responses to a Survey Evaluating the Effectiveness of an Evidence-based Peer Teaching Assessment Program

Responses were based on a Likert scale ranging from 1 = strongly disagree to 5 = strongly agree

*For Q5 and Q6, assessors were asked if feedback may improve the quality of lecturing/course materials; instructors were asked if feedback will improve the quality of lecturing/course materials

†Differences significant, p < 0.01

Qualitative analysis of comments showed that most faculty members perceived the peer teaching assessment process as a positive experience that fostered collegiality, sparked creativity, and improved teaching. Specifically, faculty members noted that they were able to get to know each other better, and instructors indicated they would implement changes recommended by the assessors. Concerns expressed in faculty comments included the lack of a designated content expert (although faculty members could request one, as discussed previously), a perceived lack of appreciation for individual teaching styles, uncertainty about administrative issues (eg, how these formative assessments, which focused on improving teaching, would contribute to a summative or judgmental evaluation), and the perceived excessive time the process required.

The assessors commented on the change in format from 2 assessors to a primary and secondary assessor. They indicated that the quality of the process was maintained while the total time commitment decreased because secondary assessor responsibilities required less time than those of primary assessors. For each assessment, an instructor spent approximately 3 hours (pre-observation meeting, observation, post-observation meeting), the primary assessor spent 8 hours (material distribution and comments, pre-observation meeting, observation, post-observation meeting, written summaries, and final report), and the secondary assessor spent 4 hours (pre-observation meeting, observation, post-observation meeting, support for written summaries, and final report) with efficiency improving over time. Both assessors and instructors noted that the mentoring process allowed them to share thoughts and philosophies on teaching in a structured format.
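Summing the approximate per-role times above gives the faculty effort for a single assessment under the primary/secondary format. These are the approximate values reported; as noted, efficiency improved over time.

$$
T_{\text{assessment}} \approx \underbrace{3}_{\text{instructor}} + \underbrace{8}_{\text{primary assessor}} + \underbrace{4}_{\text{secondary assessor}} = 15 \text{ faculty hours},
$$

of which 8 + 4 = 12 hours fall to the 2 assessors.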

Instructors also indicated that having a primary and a secondary assessor, rather than 2 assessors serving in equal capacity, did not affect the quality of the feedback they received. Instructors valued input from their peers and thought that assessors could provide insights that might not be captured in student evaluations. One instructor requested a content expert for an assessment. Comments from department chairs indicated that the new program provided useful synopses of teaching, but the chairs did not indicate how these assessments were used to evaluate teaching.

DISCUSSION

Faculty members who served as assessors and/or instructors accepted this peer teaching assessment system favorably. Some opinions of the process changed from academic year 2002-2003 to 2005-2006. For example, Likert scores improved for logistics involved in material distribution, timely feedback, and the ability of instructors to improve the quality of their teaching. Logistics and timely feedback most likely improved because of the change from 2 assessors investing equal time and effort to designated primary and secondary assessor roles. Scores for the ability to improve the quality of teaching most likely increased because faculty members who had been assessed indicated they were interested in implementing the recommended changes. Assessors agreed more strongly than instructors that the feedback provided would improve the quality of lecturing and course materials. Two points should be considered in light of this finding. First, the wording of the survey instruments differed slightly: assessors were asked about the statement “the feedback I offered may improve the quality of lecturing and course materials,” while instructors were asked about “the feedback I received will improve the quality of lecturing and course materials.” The subtle yet distinct difference in meaning between “may” and “will” may explain the difference in results. Second, assessors may have had more confidence than instructors in the ability of the feedback to improve the quality of teaching and course materials, possibly because of the training they had received and their greater experience with the program.

Areas whose Likert scores declined notably included diversifying teaching portfolios and faculty mentoring/fostering professional development. There are several possible explanations for the decline regarding teaching portfolios. Comments indicated that some faculty members do not fully appreciate what constitutes a comprehensive or diverse teaching portfolio. Additionally, it was unclear to faculty members how department chairs used these assessments in summative evaluations for annual reviews or promotion/tenure decisions. The primary intention of this peer teaching assessment was to serve as one of several sources of information that help individual faculty members understand their strengths and discover areas where their teaching could be improved. A secondary intention was to provide administrators with a more comprehensive teaching portfolio (including peer teaching assessment together with student and self-evaluations) for the evaluation process. Feedback from department chairs indicated that they found the summary statements useful, but it is not known what role peer teaching assessment summaries played in the promotion and tenure process. Faculty members are seeking clarification about how these assessments are used for evaluation purposes (eg, annual and promotion evaluations).

Faculty mentoring and professional development may not have been fostered sufficiently because each instructor is assessed only in prepromotion years 1, 3, and 6 and every 5 years after promotion. Annual assessment, although time-consuming, might better foster faculty mentoring and professional development. Alternatively, because the assessment focused on the teaching observation, faculty members may not have had the time, or made the effort, to explore their professional development needs or desires further.

Faculty members will work to improve this process further through more effective communication. Specifically, assessors will be trained about the peer teaching assessment process in a more structured format. Although a video describing the peer assessment process is available, most training is currently done one-on-one by one of several experienced peer assessors, which may introduce variability into the process. One or 2 designated faculty members who have trained with the assessment specialist and have peer assessment experience will train new assessors to improve consistency and accuracy in applying the process and completing the forms. Also, faculty development seminars/meetings will be used to engage faculty members in discussions about diverse teaching portfolios (which include a variety of teaching assessments) to foster better understanding.

A limitation of this study was the inability to link the results to the broader outcome of student learning. While it is hoped that assessments focused on improving teaching will lead to better student learning, this methodology cannot demonstrate that effect. In addition, the ability to track changes in teaching over time is limited because very few instructors have been assessed twice. Whether changes in teaching have been implemented, and with what results, is an area for future study.

CONCLUSION

Survey results and comments regarding this peer teaching assessment process were positive overall. Two benefits noted by faculty members were a perceived improvement in the quality of their teaching (although this was not measured empirically) and the opportunity to engage in structured/facilitated discussions about teaching. Faculty members found value in this structured process for peer teaching assessment and appreciated the efforts made throughout the process to improve logistics and the timeliness of feedback. Areas for improvement include diversifying teaching portfolios and fostering professional development.

This process is designed to meet University requirements and the Accreditation Council for Pharmacy Education guideline regarding peer teaching assessment (guideline 26.2), provides structured one-on-one faculty mentoring, provides assessment tools based on models of teaching excellence, and gives objective and subjective evidence that can be used to improve instructional techniques.1 The information gained from this peer teaching assessment can be combined with other teaching activities and evaluations to build a complete teaching portfolio.

ACKNOWLEDGEMENTS

We would like to acknowledge Tonya Martin, PharmD, for her work during the initial development stages of this peer assessment process while a faculty member at UCDHSC.

This work was presented in part at the 2003 Annual Meeting of the American Association of Colleges of Pharmacy, Minneapolis, Minn, and in full at the 2006 Annual Meeting of the American Association of Colleges of Pharmacy, San Diego, Calif.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Available at: http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.pdf. Accessed August 14, 2006.

- 2. Barnett CW, Matthews HW. Current procedures used to evaluate teaching in schools of pharmacy. Am J Pharm Educ. 1998;62:388–91.

- 3. Schultz KK, Latif D. The planning and implementation of a faculty peer review teaching project. Am J Pharm Educ. 2006;70:Article 32. doi: 10.5688/aj700232.

- 4. Hunter M. Mastery Teaching: Increasing Instructional Effectiveness in Elementary and Secondary Schools, Colleges, and Universities. Los Angeles, Calif: Corwin Press; 1994.

- 5. Goldhammer R. Clinical Supervision. New York: Holt, Rinehart, and Winston; 1969.

- 6. Cogan M. Clinical Supervision. New York: Houghton Mifflin; 1973.

- 7. Costa AL, Garmston RJ. Cognitive Coaching. Norwood, Mass: Christopher-Gordon; 1994.

- 8. Bloom BS, editor. Taxonomy of Educational Objectives: The Classification of Educational Goals: Handbook I, Cognitive Domain. New York: McKay; 1956.

- 9. Mager RF. Preparing Instructional Objectives. 2nd ed. Belmont, Calif: David S. Lake; 1984.

- 10. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 Suppl):S63–7. doi: 10.1097/00001888-199009000-00045.

- 11. Diamond RM. Designing and Assessing Courses and Curricula: A Practical Guide. Revised ed. San Francisco, Calif: Jossey-Bass; 1998.