Abstract

When behavior suggests that the value of a reinforcer depends inversely on the value of the events that precede or follow it, the behavior has been described as a contrast effect. Three major forms of contrast have been studied: incentive contrast, in which a downward (or upward) shift in the magnitude of reinforcement produces a relatively stronger downward (or upward) shift in the vigor of a response; anticipatory contrast, in which a forthcoming improvement in reinforcement results in a relative reduction in consummatory response; and behavioral contrast, in which a decrease in the probability of reinforcement in one component of a multiple schedule results in an increase in responding in an unchanged component of the schedule. Here we discuss a possible fourth kind of contrast that we call within-trial contrast because within a discrete trial, the relative value of an event has an inverse effect on the relative value of the reinforcer that follows. We show that greater effort, longer delay to reinforcement, or the absence of food all result in an increase in the preference for positive discriminative stimuli that follow (relative to less effort, shorter delay, or the presence of food). We further distinguish this within-trial contrast effect from the effects of delay reduction. A general model of this form of contrast is proposed in which the value of a primary or conditioned reinforcer depends on the change in value from the value of the event that precedes it.

Keywords: within-trial contrast, behavioral contrast, anticipatory contrast, incentive contrast, delay reduction, justification of effort, cognitive dissonance

To understand how behavior changes, behavior analysts have tended to focus on the consequences of behavior. Generally, reinforcers that follow behavior strengthen it, whereas punishers weaken it. Furthermore, stimuli associated with less effort (the law of least effort), a shorter delay to reinforcement (delay reduction), and larger reinforcers are preferred over stimuli associated with greater effort, a longer time to reinforcement, and smaller reinforcers.

According to this view, the role played by antecedents is largely to identify the occasions on which a response will lead to a consequence. Thus, a discriminative stimulus identifies the occasions on which a response will be reinforced. However, there is some evidence that reinforcers that precede stimuli can elicit responding appropriate to the reinforcer (backward associative conditioning; see Hearst, 1989; Spetch, Wilkie, & Pinel, 1981).

If a discriminative stimulus that predicts reinforcement is preceded by either an appetitive or aversive event, it is possible that the value of (or preference for) that stimulus would be affected by the value of the preceding event although it is not obvious what that effect should be. On the one hand, the value of the prior event could generalize to the discriminative stimulus (induction), in which case the presentation of a more preferred event prior to a discriminative stimulus should enhance the value of the discriminative stimulus. On the other hand, the difference in value between the prior event and the reinforcer signaled by the discriminative stimulus might result in contrast, in which case the presentation of a less preferred event prior to a discriminative stimulus would enhance the value of the following discriminative stimulus. A third possibility is that antecedent events do not affect the value of a subsequent discriminative stimulus. The purpose of the line of research we have been conducting for several years is to examine the preference for a discriminative stimulus as a function of a preceding event that varies in aversiveness.

The Effect of Prior Effort on the Value of the Reinforcer that Follows

Because a similar design was used in many of these experiments, some details of the procedure may be useful. The research was conducted in a typical three-response-key operant chamber. All trials began with the illumination of the center response key. In an unpublished preliminary experiment, a circle was projected on the center response key and, on some trials, a single peck was required to turn off the key and replace it with a red hue. Five pecks to the red hue resulted in reinforcement (2-s access to mixed grain in the centrally mounted grain feeder). On the remaining trials, 20 pecks to the circle on the center key were required to turn it off and replace it with a green hue. Five pecks to green then resulted in reinforcement. (In all experiments, the color associated with the different response requirements was counterbalanced.) After several sessions of training, test trials were introduced in which pigeons were given a choice between red and green with either choice randomly reinforced 50% of the time. In this preliminary experiment, all of the pigeons showed strong position biases and indifference to the two colors. This may have occurred because the test trials were the first choice trials that the pigeons had experienced.

The Basic Finding

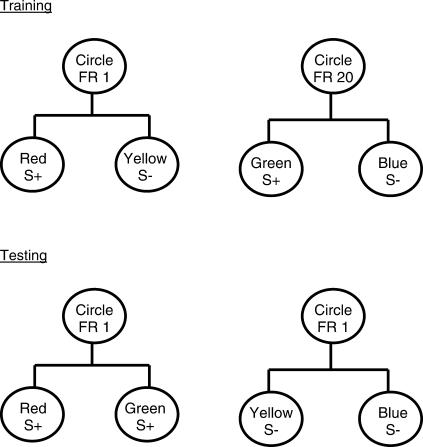

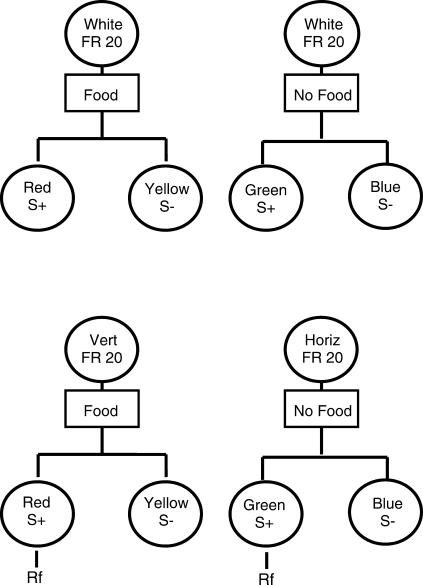

In a follow-up study (Clement, Feltus, Kaiser, & Zentall, 2000), in initial training, each of the two response requirements, 20 pecks (high effort) and 1 peck (low effort), was followed by a simple simultaneous discrimination that appeared on the left and right response keys. For example, one discrimination consisted of a choice between a red S+ and a yellow S– and the other consisted of a choice between a green S+ and a blue S–. Giving the pigeons these simple simultaneous discriminations in training forced them to choose between two stimuli on each trial. The design of this experiment is presented in Figure 1. On half of the test trials the pigeons were given a choice between the two positive (S+) stimuli; on the remaining test trials they were given a choice between the two negative (S–) stimuli (bottom of Figure 1). For each kind of test trial, the choice was preceded equally often (on one third of the trials each) by a 1-peck requirement, a 20-peck requirement, or no peck requirement (i.e., the choice stimuli appeared immediately after the intertrial interval).

Fig 1. Design of experiment by Clement, Feltus, Kaiser, & Zentall (2000) in which one pair of discriminative stimuli followed 20 pecks and the other pair of discriminative stimuli followed 1 peck during training (top).

Example of two test trials (bottom) involving choice between the two S+ stimuli (left) and choice between the two S– stimuli (right). Other trials (not shown) involved no initial response requirement or a 20-peck response requirement.

We considered the possibility of obtaining one of four outcomes. (1) If preference for the discriminative stimulus depends solely on its consequences, no preference should be exhibited. (2) If the pigeons associate the discriminative stimulus with whether the trial was a low-effort response trial or a high-effort response trial, they may prefer the discriminative stimulus that followed low effort over the discriminative stimulus that followed high effort. (3) If the pigeons experience a form of contrast between the response ratio and the appearance of the discriminative stimulus, they might actually prefer the discriminative stimulus that followed high effort over the discriminative stimulus that followed low effort. (4) Finally, the response ratio may have acted as a conditional stimulus for choice in training so preference would depend on the response requirement preceding the choice stimuli on each test trial. If so, then if the response required on a test trial was 1 peck, the pigeons should choose the S+ stimulus that in training had followed the 1-peck requirement; however, if the response required on a test trial was 20 pecks, the pigeons should choose the S+ stimulus that in training had followed the 20-peck requirement.

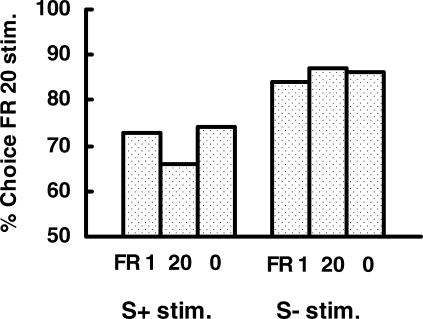

In this experiment, we found that on test trials involving a choice between the two S+ stimuli, the pigeons showed a strong tendency (69%) to peck the S+ that in training had been preceded by 20 pecks over the S+ that in training had been preceded by only 1 peck (see Figure 2). Interestingly, when the pigeons were given a choice between the two S– stimuli, they showed an even stronger tendency (84%) to peck the S– that in training had been preceded by 20 pecks over the S– that in training had been preceded by only 1 peck.

Fig 2. Results obtained by Clement, Feltus, Kaiser, & Zentall (2000).

Pigeons preferred the S+ and the S– that in training followed 20 pecks over the S+ and S– that followed 1 peck. FR 1, 20, and 0 indicate the response requirement that preceded choice between the S+ stimuli from training or between the S– stimuli from training.

We also found that the number of pecks (0, 1, or 20) that preceded choice between the two S+ or between the two S– stimuli had no significant effect on preference (see Figure 2). Thus, the pigeons did not learn to use the response requirement as a conditional cue for choice in training. Instead, it appeared that the colors that had followed the greater effort in training had taken on added value, relative to the colors that had followed less effort.

In a variation on this procedure, Kacelnik and Marsh (2002) trained European starlings to fly between a pair of perches several times to obtain a lighted key. Pecking the key produced food. If 16 flights were required (high effort), the key turned one color. If only four flights were required (low effort), the key turned a different color. On test trials, when the starlings were given a choice between the two colors, they preferred the color associated with high effort.

A Variation on the Basic Finding

The reason that we used discriminative stimuli following the different response requirements was to give us a way to distinguish between the two identical reinforcers. The assumption was that the reinforcers obtained after high effort would be preferred over the reinforcers obtained after low effort but because the reinforcers were identical, it was not possible to distinguish between them. In a more recent experiment, we used a more direct measure of reinforcer preference—the location of food. We asked if the location of food that followed high effort would be preferred over a different location of the same food that followed low effort. To answer this question we used two feeders, one that provided food on trials in which 30 pecks were required to the center response key, the other that provided the same quality and duration of access to food on trials in which a single peck was required to the center response key (Friedrich & Zentall, 2004). Prior to the start of training, we obtained a baseline feeder preference score for each pigeon. These scores were obtained by providing forced- and free-choice trials. On half of the forced trials, the left key was illuminated and pecks to the left key raised the left feeder. On the remaining forced trials, the right key was illuminated and pecks to the right key raised the right feeder. On interspersed choice trials, both the left and right keys were lit and the pigeons could choose which feeder would be raised.

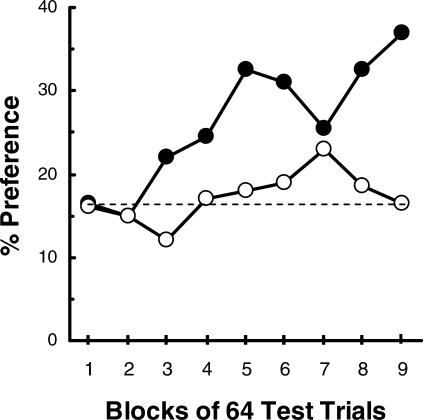

On training trials following feeder preference testing, the center key was illuminated yellow and either 1 peck or 30 pecks were required to turn off the center key and raise one of the two feeders. For each pigeon, the high-effort response raised the less preferred feeder and the low-effort response raised the more preferred feeder. Forced- and free-choice feeder trials continued throughout training to monitor any changes in feeder preference. On those free-choice trials, we found that there was a significant increase (20%) in preference for the originally nonpreferred feeder (the feeder associated with the high-effort response; see Figure 3, filled circles). A control group was included to assess changes in feeder preference that might occur simply with the added sessions of experience. For this group, each of the two response requirements was equally often followed by presentation of food in each feeder. The control group showed no systematic increase in preference for the nonpreferred feeder as a function of training (see Figure 3, open circles). Thus, it appears that the value of the location of food can be enhanced when preceded by a high-effort response.

Fig 3. Results obtained by Friedrich and Zentall (2004).

Graph shows the increase in preference for the originally nonpreferred feeder as a function of its association with the high-effort (30 peck) response (filled circles). The preferred feeder was associated with the low-effort (1 peck) response. For the control group (open circles), both feeders were associated equally often with the high-effort and low-effort response. The dashed line indicates the baseline preference for the originally nonpreferred feeder.

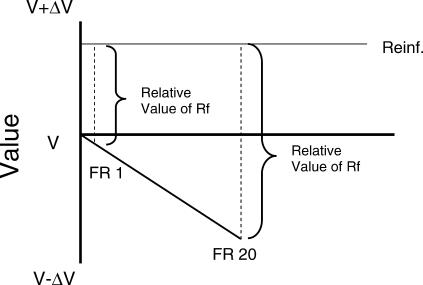

A Model of Within-Trial Contrast

The within-trial contrast effect we found can be modeled as follows (see Figure 4): First, the value of the experimental context at the start of each trial is set to V. Next, it is assumed that key pecking (or the time required to make those pecks) is a relatively aversive event that results in a negative change in value. It also is assumed that obtaining the reinforcer results in a positive shift in the value of the context (relative to the value at the start of the trial). The final assumption is that the value of the reinforcer depends on the relative change in value (i.e., the change in value from the end of the response requirement to the appearance of the reinforcer or the appearance of the stimulus that signals reinforcement). Thus, because the change in value following a high-effort response is presumed to be larger than the change in value following a low-effort response, the relative value of the reinforcer following a high-effort response should be greater than that following a low-effort response.

Fig 4. A model of change in relative value to account for within-trial contrast effects.

According to the model, key pecking results in a negative change in value, V – ΔV1 for FR 1 and V – ΔV20 for FR 20, whereas obtaining a reinforcer results in a positive change in value, V + ΔVRf. The net change in value within a trial depends on the difference between V + ΔVRf and V – ΔV1 on an FR 1 trial, and between V + ΔVRf and V – ΔV20 on an FR 20 trial.
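The bookkeeping in this caption can be written out explicitly. What follows is a minimal sketch, assuming (as the model does) that the aversive change grows with the response requirement, so that ΔV20 > ΔV1 > 0. The symbol C for the net within-trial change is introduced here only for illustration and is not part of the original model.

```latex
\begin{align*}
C_{1}  &= (V + \Delta V_{Rf}) - (V - \Delta V_{1})  = \Delta V_{Rf} + \Delta V_{1},\\
C_{20} &= (V + \Delta V_{Rf}) - (V - \Delta V_{20}) = \Delta V_{Rf} + \Delta V_{20},\\
C_{20} - C_{1} &= \Delta V_{20} - \Delta V_{1} > 0 .
\end{align*}
```

On this reading, V and ΔVRf cancel in the comparison, so the predicted preference for the stimulus that follows FR 20 depends only on the difference in aversiveness between the two prior events.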

Relative Aversiveness of the Prior Event

Delay to Reinforcement as an Aversive Event

If the model presented in Figure 4 is correct, then any prior event that is relatively aversive (compared with the alternative event on other trials) should result in a similar enhanced preference for the stimuli that follow. For example, given that pigeons prefer a shorter over a longer delay to reinforcement (e.g., Chung & Herrnstein, 1967), our theory predicts that they also should prefer discriminative stimuli that follow a long delay over those that follow a short delay.

To test this hypothesis, we trained pigeons to peck the center response key (20 times on all trials) to produce a pair of discriminative stimuli (as in Clement et al., 2000). On some trials, pecking the response key was followed immediately by offset of the center response key and onset of one pair of discriminative stimuli (i.e., with no delay), whereas on the remaining trials pecking the response key was immediately followed by offset of the center response key and then onset of a different pair of discriminative stimuli, but only after a delay of 6 s (see top panel of Figure 5). On test trials, the pigeons were given a choice between the S+ stimuli, but unlike in the previous experiments, no preference was found (DiGian, Friedrich, & Zentall, 2004, Unsignaled Delay Condition).

Fig 5. Design of experiments by DiGian, Friedrich, & Zentall (2004) in which one pair of discriminative stimuli followed a delay and the other pair of discriminative stimuli followed the absence of a delay.

Top: The delay and absence of delay were unsignaled. Bottom: The delay and absence of delay were signaled.

However, there was an important difference between the manipulation of effort used in the first two experiments (Clement et al., 2000; Friedrich & Zentall, 2004) and the manipulation of delay used by DiGian et al. (2004). In the first two experiments, once the pigeon had made a single peck to the initial stimulus and the discriminative stimuli failed to appear, the pigeon could anticipate that 19 additional pecks would be required. Thus, whatever emotional state (e.g., frustration) might be produced by encountering a high-effort trial would be experienced in the context of having to make additional responses. That is, the prolonged presence of the center response key had likely become a conditioned aversive stimulus. For the delay manipulation, however, this was not the case. That is, the pigeon could not anticipate whether or not a delay would occur and, when a delay did occur, no further responding was required. Would the results be different if the pigeons were required to peck in the presence of a differentially aversive conditioned stimulus?

To test this hypothesis, the delay-to-reinforcement manipulation was repeated, but this time an initial stimulus was correlated with the delay (DiGian et al., 2004, Signaled Delay Condition). Thus, on half of the trials, a vertical line appeared on the response key and pecking resulted in the immediate appearance of one pair of discriminative stimuli (e.g., red and yellow). On the remaining trials, a horizontal line appeared on the response key and pecking resulted in the appearance of the other pair of discriminative stimuli (e.g., green and blue), but only after a 6-s delay (see bottom panel of Figure 5). On these latter trials, then, pecking was required in the presence of a presumably conditioned aversive stimulus, the horizontal line. When pigeons trained in this fashion were tested, they showed a significant preference (65%) for the S+ that in training had followed the more aversive event, in this case a 6-s delay. Once again, the experience of an aversive event produced an increase in the value of the positive discriminative stimulus that followed. Furthermore, the results of this experiment indicated that for contrast to occur, it may be necessary for the aversive event to be preceded by a stimulus that signals it (i.e., a conditioned aversive stimulus) and to which responding is required.

The Absence of Reinforcement as an Aversive Event

The absence of reinforcement in the context of reinforcement on other trials also may serve as a relatively aversive event (Amsel, 1958). If the model presented in Figure 4 is correct, then there should be a preference for discriminative stimuli that follow the absence of reinforcement. To test this hypothesis, pigeons once again were trained to peck a response key to produce a pair of discriminative stimuli. On some trials, pecking the response key was followed immediately by 2-s access to food and then by the presentation of one pair of discriminative stimuli. On the remaining trials, pecking the response key was followed by the absence of food (for 2 s) and then by the presentation of a different pair of discriminative stimuli (see top panel of Figure 6). On test trials, the pigeons were given a choice between the two S+ stimuli, but as in the unsignaled delay manipulation, they showed no preference (Friedrich, Clement, & Zentall, 2005).

Fig 6. Design of experiments by Friedrich, Clement, & Zentall (2005) in which one pair of discriminative stimuli followed reinforcement and the other pair of discriminative stimuli followed the absence of reinforcement.

Top: Reinforcement and absence of reinforcement were unsignaled. Bottom: Reinforcement and absence of reinforcement were signaled.

However, when an initial stimulus was correlated with the presence versus absence of food (see bottom panel of Figure 6), pigeons showed a significant preference (67%) for the S+ that in training had followed no food. Thus, once again, the experience of a relatively aversive event produced an increase in the value of the S+ stimulus that followed provided this relatively aversive event was preceded by a stimulus that signaled it (i.e., a conditioned aversive stimulus).

Hunger as the Aversive State

In an interesting variation on the manipulated aversiveness of a prior event, Marsh, Schuck-Paim, and Kacelnik (2004) trained European starlings to peck a lit response key that was one color (e.g., red) on trials when they were pre-fed and another color (e.g., green) on trials when they were not pre-fed. On test trials, they were given a choice between red and green and they showed a significant preference for the color that in training was associated with the absence of prefeeding (viz., hunger). Furthermore, this preference was unaffected by whether they were pre-fed or not at the time of testing (see also Pompilio, Kacelnik, & Behmer, 2006; Revusky, 1967). One might argue that when the starlings were hungry, conditioning was, in some sense, better than when they were not. However, it is to be noted that if rate of discrimination acquisition is an indication of conditioning, we have never found differences in discrimination acquisition following each of the two differentially aversive events in our experiments involving the manipulation of response requirement, delay, and the absence of food.

A finding that appears to be inconsistent with preference for a discriminative stimulus associated with greater food deprivation was reported by Capaldi and Myers (1982). They found that a flavor given to rats when under low deprivation was preferred over a different flavor given to them under high deprivation. However, this preference was found only when the flavors were provided in a solution that was nonnutritive. When the flavors were provided in a nutritive solution they were accompanied by reinforcement, and results consistent with contrast were found (but see Capaldi, Myers, Campbell, & Sheffer, 1983).

A Signal of Possible Effort as the Aversive Event

Can signaled effort that does not actually occur serve as the aversive event that increases the value of an S+ that follows? This question addresses the issue of whether the contrast between the initial aversive event and the discriminative stimulus depends on actually experiencing the relatively aversive event prior to presentation of the discriminative stimuli.

One account of the added value that accrues to stimuli that follow greater effort is that during training, the greater effort expended (or delay or absence of food) produces a heightened state of arousal, and in that heightened state of arousal, the pigeons learn more about the discriminative stimuli that follow high effort than about the discriminative stimuli experienced in the lower state of arousal produced by low effort. If a heightened state of arousal leads to better learning about discriminative stimuli that follow it, one might expect the pigeons to acquire that discrimination faster or better. However, as already noted, in all of the experiments we have conducted, examination of the acquisition functions for the two simultaneous discriminations offered no support for this hypothesis. Of course, small differences in the rate of acquisition and/or terminal levels of accuracy may be masked by individual differences or may not be apparent because of a performance ceiling.

Those results notwithstanding, the purpose of the next experiment was to ask if we could obtain a preference for the discriminative stimuli that followed a signal that high effort might be, but was not actually, required on that trial (Clement & Zentall, 2002). More specifically, on half of the training trials, pigeons were initially presented with a vertical line on the center response key which signaled that low effort might later be required. On half of the vertical-line trials, pecking the vertical line replaced it with a white key and a single peck to the white key resulted in reinforcement. On the remaining vertical-line trials, pecking the vertical line replaced it with a simultaneous discrimination (S+FR1 vs. S–FR1) on the left and right response keys with five pecks to the S+FR1 reinforced. A schematic representation of the design of this experiment appears in Figure 7.

Fig 7. Design of experiment by Clement and Zentall (2002, Experiment 1) in which the effect of a signal for potential (rather than actual) effort on preference for the discriminative stimuli that followed was studied.

On the remaining training trials, pigeons were presented with a horizontal line on the center response key that signaled that greater effort might later be required. On half of the horizontal-line trials, pecking the horizontal line replaced it with a white key and 30 pecks to the white key resulted in reinforcement. On the remaining horizontal-line trials, pecking the horizontal line replaced it with a different simultaneous discrimination (S+FR30 vs. S–FR30) on the left and right response keys with five pecks to the S+FR30 reinforced.

On test trials, when the pigeons were given a choice between the S+FR1 and the S+FR30, they once again showed a significant preference (66%) for the S+FR30. Thus, the presence of stimuli (the line orientations) that were sometimes followed by differential pecking was sufficient to produce a preference for the stimuli that followed on other trials.

In this experiment, it is important to note that the events that occurred in training on trials involving each pair of discriminative stimuli involved essentially the same sequence of events (the same number of pecks to the center key followed by choice of one of the two side keys). It was only on the other half of the trials, those trials in which the discriminative stimuli did not appear, that differential responding was required. These results extend the findings of the earlier research and suggest that differential effort-producing arousal was not the basis for within-trial contrast. They also show that the presentation of a conditioned aversive stimulus (or the anticipation of a possible aversive event) is sufficient to produce the within-trial contrast effect.

The Signaled Possible Absence of Reinforcement as the Aversive Event

If signaled effort can function as a conditioned aversive event, can the signaled absence of reinforcement serve the same function? Using a design similar to that used to examine differential signaled effort, we evaluated the effect of differential signaled reinforcement (Clement & Zentall, 2002, Experiment 2). A schematic representation of the design of this experiment appears in Figure 8. On half of the vertical-line trials, pecking the vertical line was followed by immediate reinforcement. On the remaining vertical-line trials, pecking the vertical line replaced it with a simultaneous discrimination (S+Rf vs. S–Rf) on the left and right response keys with five pecks to the S+Rf reinforced 50% of the time. On half of the horizontal-line trials, pecking the horizontal line ended with no reinforcement. On the remaining horizontal-line trials, pecking the horizontal line replaced it with a different simultaneous discrimination (S+NRf vs. S–NRf) on the left and right response keys with five pecks to the S+NRf reinforced 50% of the time.

Fig 8. Design of experiment by Clement and Zentall (2002, Experiment 2) in which the effect of a signal for potential (rather than actual) reinforcement on preference for the discriminative stimuli that followed was studied.

Consistent with the test-trial results of the earlier research, when the pigeons were given a choice between an S+ signaled by a stimulus that on other trials was associated with reinforcement (S+Rf) and an S+ signaled by a stimulus that on other trials was associated with the absence of reinforcement (S+NRf), they showed a significant preference (67%) for S+NRf. Thus, a signal for the absence of food produced a preference for the S+ that followed it, an effect similar to that obtained with a signal for a high-effort response.

In a follow-up experiment (Clement & Zentall, 2002, Experiment 3), we examined whether preference for the discriminative stimuli associated with the absence of food was produced by positive contrast between the certain absence of food and a 50% chance of food (on discriminative stimulus trials) or negative contrast between the certain presence of food and a 50% chance of food (on the other set of discriminative stimulus trials). A schematic representation of this design appears in Figure 9. To accomplish this manipulation, on vertical-line trials, the conditions of reinforcement were essentially nondifferential for Group Positive (i.e., reinforcement always followed vertical-line trials whether the discriminative stimuli were presented or not). On half of these trials, reinforcement was presented immediately for responding to the vertical line. On the remaining trials, reinforcement occurred for responding to the S+ in the simultaneous discrimination. Thus, there should have been little contrast established between these two trial types.

Fig 9. Design of experiment by Clement and Zentall (2002, Experiment 3), which examined whether the effect of a signal for potential (rather than actual) reinforcement on preference for the discriminative stimuli that followed was the result of positive contrast, negative contrast, or both.

On horizontal-line trials, however, responding to the horizontal line ended with no reinforcement on half of the trials. On the remaining trials, reinforcement occurred for responding to the S+ in the other simultaneous discrimination. Thus, on horizontal-line trials for Group Positive, there was the opportunity for positive contrast to develop on discriminative stimulus trials (i.e., a signal that reinforcement might not occur may produce positive contrast when reinforcement does occur; see top right panel of Figure 9).

For Group Negative, the conditions of reinforcement were essentially nondifferential on all horizontal-line trials (i.e., the probability of reinforcement on these trials was always 50%, whether or not the trials involved discriminative stimuli). Thus, on half of the horizontal-line trials, reinforcement was provided immediately with a probability of .50 for responding to the horizontal line. On the remaining horizontal-line trials, reinforcement was provided for choice of the S+, but only on half of the trials. Thus, there should have been little contrast established between these two kinds of horizontal-line trials (see the bottom right panel of Figure 9).

On vertical-line trials, however, reinforcement for Group Negative was presented immediately (with a probability of 1.00) for responding to the vertical line on half of the trials. On the remaining vertical-line trials, reinforcement was provided for choice of the S+ with a probability of .50. Thus, on vertical-line trials for Group Negative, there was the opportunity for negative contrast to develop on discriminative stimulus trials (i.e., a signal that reinforcement is quite likely may produce negative contrast when reinforcement does not occur; see bottom left panel of Figure 9).

When given a choice between the S+ stimuli on the test trials, Group Positive showed a significant preference (60%) for the S+ that in training was preceded by a horizontal line (the initial stimulus that on other trials ended with no reinforcement). Thus, Group Positive showed evidence of positive contrast.

When pigeons in Group Negative were given a choice between the S+ stimuli, they, too, showed a preference (58%) for the positive discriminative stimulus that in training was preceded by a horizontal line (the initial stimulus that on other trials was followed by a lower probability of reinforcement than on comparable trials involving the vertical line). Thus, Group Negative showed evidence of negative contrast. In this case, the contrast should be described as a reduced preference for the S+ preceded by the vertical line which, on other trials, was associated with a higher probability of reinforcement (1.00). In this experiment, then, although the effects were somewhat reduced in magnitude because the positive and negative contrast effects were isolated, evidence was found for both types of contrast.

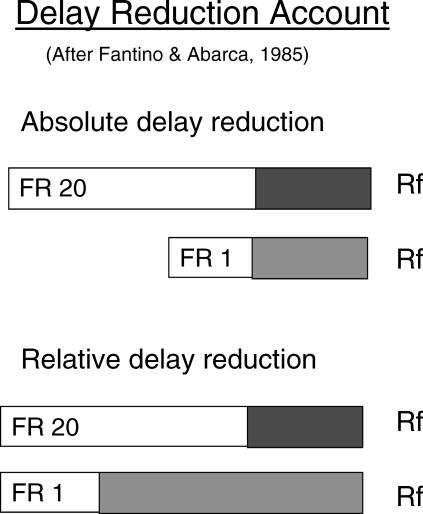

Contrast or Relative Delay Reduction?

To this point, we have described the stimulus (and location) preference in terms of a contrast effect. However, it also is possible to interpret this effect in terms of the delay-reduction hypothesis (Fantino & Abarca, 1985). According to the delay-reduction hypothesis, a stimulus that predicts reinforcement sooner in its presence than in its absence will become a conditioned reinforcer. In the present experiments, the time between the onset of each S+ and the reinforcers was the same (see Figure 10, top). Thus, one could argue that the S+ stimuli do not differentially reduce the delay to reinforcement. But the delay-reduction hypothesis also can apply to the relative reduction in delay to reinforcement. That is, one can consider the temporal proximity of the discriminative stimuli relative to the total duration of the trial. If one considers delay reduction in terms of its duration relative to the duration of the entire trial, then the delay reduction hypothesis may be relevant to the present designs (see Figure 10, bottom). Consider, for example, the case of the differential-effort manipulation (Clement et al., 2000). Because it takes longer to produce 20 responses than 1 response, 20-response trials would be longer than 1-response trials. As a consequence, the discriminative stimuli appear relatively later in a 20-response trial than in a 1-response trial. The later in a trial that the discriminative stimuli appear, the closer in time their onset would be to reinforcement relative to the start of the trial and, thus, their appearance would be associated with a greater relative reduction in delay to reinforcement. (The overall length of the bars at the bottom of Figure 10 is not meant to suggest equal trial durations but, instead, to emphasize the difference in the proportion of each trial type taken up by the discriminative stimuli.) 
Using the same logic, a trial with a delay is longer than a trial without a delay (DiGian et al., 2004), so stimuli that appear after a delay occur relatively later in the trial than stimuli that appear on no-delay trials.

Fig 10. Schematic representations of the relation between the discriminative stimuli and reinforcement as a function of the absolute reduction in delay to reinforcement (top) and the relative reduction in delay to reinforcement (bottom) signaled by the discriminative stimuli.

The time needed to make the required number of initial pecks is indicated by the white bars. The time during which the simultaneous discrimination is presented is indicated by the shaded bars. Notice that the absolute time from the presentation of the simultaneous discrimination to reinforcement is the same for both trial types (top) but the relative time (bottom) is longer on trials when fewer responses are required.
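
The relative-delay-reduction argument can be made concrete with a small calculation. The following is only a sketch: it assumes that relative delay reduction is the proportion of the total trial duration that has already elapsed when the discriminative stimuli appear, and the durations used (1 s to complete 1 peck, 10 s to complete 20 pecks, 5 s from stimulus onset to reinforcement) are hypothetical values chosen for illustration, not values reported in the experiments.

```python
def relative_delay_reduction(initial_link_s: float, stimulus_to_food_s: float) -> float:
    """Proportion of the trial's total delay to reinforcement that has
    already elapsed when the discriminative stimuli appear."""
    total_s = initial_link_s + stimulus_to_food_s
    if total_s <= 0:
        raise ValueError("trial duration must be positive")
    return (total_s - stimulus_to_food_s) / total_s


# Hypothetical durations (assumptions for illustration only).
STIMULUS_TO_FOOD_S = 5.0   # same absolute delay on both trial types
ONE_PECK_S = 1.0           # assumed time to complete 1 response
TWENTY_PECKS_S = 10.0      # assumed time to complete 20 responses

low_effort = relative_delay_reduction(ONE_PECK_S, STIMULUS_TO_FOOD_S)
high_effort = relative_delay_reduction(TWENTY_PECKS_S, STIMULUS_TO_FOOD_S)

print(f"1-response trial:  relative delay reduction = {low_effort:.2f}")
print(f"20-response trial: relative delay reduction = {high_effort:.2f}")
```

With these assumed durations the absolute delay signaled by the S+ is identical on the two trial types, but the S+ that follows 20 responses is associated with the larger relative reduction, which is the pattern that the relative version of the hypothesis needs in order to predict the observed preference.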

The delay-reduction hypothesis also can account for the effects of reinforcement versus no reinforcement. Although trial durations with and without reinforcement are the same prior to the appearance of the discriminative stimuli, the delay-reduction hypothesis considers the critical time to be the interval between reinforcements. Thus, on trials in which the discriminative stimuli are preceded by reinforcement, the time between reinforcements would be short, so the discriminative stimuli should be associated with little reduction in delay to reinforcement. On trials in which the discriminative stimuli are preceded by the absence of reinforcement, however, the time between reinforcements would be long (viz., the time between reinforcement on the preceding trial and reinforcement on the current trial), so the discriminative stimuli on the current trial should be associated with a relatively large reduction in delay to reinforcement.

Nevertheless, something more than relative delay reduction appears to be required to account for the effects of differential effort on trials other than those on which the discriminative stimuli appear (Clement & Zentall, 2002, Experiment 1) because discriminative-stimulus trials were not differentiated by number of responses, delay, or reinforcement (and, thus, should have been of comparable duration). The same is true for the effects of differential anticipated reinforcement (Clement & Zentall, 2002, Experiments 2 and 3) because that manipulation likewise occurred on separate trials.

It is possible for the delay-reduction hypothesis to account for the results of these latter experiments, however, if one assumes that the pigeons associated an average delay to reinforcement with each initial stimulus. By this assumption, reinforcement in the simultaneous discrimination link would occur after a shorter delay than the average delay associated with the initial stimulus that otherwise signaled a large number of pecks (or the absence of food) and it would occur after a longer delay than the average delay associated with the initial stimulus that otherwise signaled only one peck (or the presence of food).
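
The averaging assumption can be illustrated in the same way. This sketch assumes that each initial stimulus is followed equally often by its effort (or food) trials and by discrimination trials, and that the pigeon associates the simple mean of those delays with the initial stimulus. All durations are hypothetical; the function name `delay_change_vs_average` is ours and is introduced only for the example.

```python
from statistics import mean


def delay_change_vs_average(delays_s, discrimination_delay_s):
    """Average delay associated with an initial stimulus minus the delay
    experienced on discrimination trials. Positive values mean that food
    arrives sooner than average on discrimination trials."""
    return mean(delays_s) - discrimination_delay_s


# Hypothetical delays to reinforcement from trial onset (assumptions).
DISCRIMINATION_DELAY_S = 5.0   # discrimination trials, both initial stimuli
HIGH_EFFORT_DELAY_S = 10.0     # other trials with the many-peck stimulus
LOW_EFFORT_DELAY_S = 1.0       # other trials with the one-peck stimulus

after_high = delay_change_vs_average(
    [HIGH_EFFORT_DELAY_S, DISCRIMINATION_DELAY_S], DISCRIMINATION_DELAY_S
)
after_low = delay_change_vs_average(
    [LOW_EFFORT_DELAY_S, DISCRIMINATION_DELAY_S], DISCRIMINATION_DELAY_S
)

print(f"Stimulus that otherwise signals many pecks: {after_high:+.1f} s")
print(f"Stimulus that otherwise signals one peck:   {after_low:+.1f} s")
```

Under these assumptions, reinforcement on discrimination trials arrives sooner than the average associated with the high-effort stimulus and later than the average associated with the low-effort stimulus, so a delay-reduction account could in principle predict the preference for the S+ that follows the high-effort stimulus.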

Greater difficulty for the delay-reduction hypothesis comes from the different results found with signaled versus unsignaled delay (DiGian et al., 2004) and signaled versus unsignaled absence of food (Friedrich et al., 2005). According to the delay-reduction hypothesis, it should not matter whether the delay or the absence of food is signaled; comparable preferences should be found. Contrary to this prediction, however, preferences for stimuli following a delay or the absence of food were found only when those events were signaled. Thus, something more than delay reduction is needed to account for these results.

Recently, we have tested the delay-reduction hypothesis more directly by training pigeons with two schedules matched for trial duration (Singer, Berry, & Zentall, 2007). One was a differential-reinforcement-of-other-behavior (DRO) schedule that required the pigeons to refrain from responding for 20 s to obtain food. The other schedule was a variation of a fixed-interval (FI) schedule that matched the duration of the previous DRO schedule. Following the first couple of training sessions, all trials were approximately 20 s long. Thus, the time spent in the presence of each schedule was the same as was the distribution of those times throughout training. Each DRO trial began with the left response key lit; a peck to that key turned on vertical lines on the center key which signaled that the DRO schedule was in effect. Each FI trial began with the right response key lit; a peck to that key turned on horizontal lines on the center key which signaled that the FI schedule was in effect. (The side key associated with each schedule was counterbalanced.)

The initial question was which schedule the pigeons would prefer. The delay-reduction hypothesis predicts no schedule preference because trial durations were equated and the signals for reinforcement occurred at the same time. More important, delay reduction predicts that there should be no differential preference for discriminative stimuli that follow. The contrast account also makes no prediction about schedule preference but it makes a clear prediction that if one schedule is preferred, then there should also be a preference for the discriminative stimuli that follow the other (less preferred) schedule.

To determine schedule preference, pigeons were given a choice between the left and right response keys on selected probe trials. Once schedule preference had stabilized, we reversed the side-key schedule contingency to determine if preferences would reverse as well. If the side-key preference did not reverse, we scored that pigeon as having a side preference rather than a schedule preference. Of the 7 pigeons tested, 1 showed a strong preference for the FI schedule, 2 showed a strong preference for the DRO schedule, and 4 showed no consistent preference for either schedule but showed a consistent side-key preference. Thus, although the absence of an overall schedule preference would appear to support the delay-reduction hypothesis, the strong individual preferences (for a schedule or for a side key) shown by all of the pigeons suggest that other factors may be involved.

Of more importance, following the tests for schedule preference, each schedule was then followed by a simultaneous color discrimination (S+DRO vs. S–DRO and S+FI vs. S–FI). After acquiring the two simultaneous discriminations, the pigeons were given a test session with probe trials intermixed with continued training trials, followed by 10 more training sessions and another test session. This procedure continued until the pigeons had received four test sessions. On probe trials, the pigeons were given a choice of the two S+ stimuli or the two S– stimuli to determine their preference.

The delay-reduction hypothesis predicts no preference because the trials were equated for duration and the relative delay reduction provided by the discriminative stimuli was the same. The contrast account, however, predicts an S+ preference based on each pigeon's schedule or side-key preference. In fact, pigeons showed a 63% preference, on average, for the S+ associated with their less preferred schedule or side key. Furthermore, the pigeons' degree of S+ preference was highly (negatively) correlated with their degree of side-key preference (r = -.79). Thus, the contrast account appears to offer a better account of the data than the delay-reduction account.

Failures to Obtain Contrast

A number of attempts to obtain the original effect reported by Clement et al. (2000) have not proved successful. One of these studies (Armus, 1999) involved rats running up inclined ramps (high effort) versus level ramps (low effort). Given the willingness of rats to work for access to a running wheel in the absence of any other reinforcer, we suspect that the inclined ramp does not provide a sufficiently aversive experience for contrast effects to be found.

Two other studies that have failed to find a contrast effect involved rats pressing levers in which the effort required to press the lever was manipulated (Armus, 2001; Jellison, 2003). In both studies, different flavored pellets followed low and high effort and the rats were later tested for their acquired flavor preference in a Y or T maze (one flavor was placed in each arm). Although Armus found no differential preference, the choice apparatus may not be particularly sensitive to detect modest preferences because in such contexts, rats are known to spontaneously alternate (Dember & Fowler, 1958). Furthermore, any acquired flavor preference during training may have been reduced because on the test trials the rats had to learn where the two flavors were.

Jellison (2003) found that over 70% of the rats (12 of 17) that showed a preference preferred the flavor associated with the high-effort response. This effect was not statistically reliable, however, and this number included a group of rats that did not have the effort manipulation but rather had a successive light discrimination (presumed to be more difficult than no discrimination). Furthermore, once again, the choice apparatus used on test trials may have encouraged spontaneous alternation, which would have reduced any evidence of a preference.
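
To see why 12 of 17 rats falls short of statistical reliability, one can compute an exact binomial (sign) test against a chance level of .50. This is our own illustrative check, not necessarily the analysis that Jellison (2003) used; the function name `binomial_upper_tail` is introduced only for the example.

```python
from math import comb


def binomial_upper_tail(successes: int, n: int, p: float = 0.5) -> float:
    """Exact probability of observing `successes` or more out of `n`
    when each outcome occurs independently with probability `p`."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(successes, n + 1)
    )


N_RATS = 17         # rats that showed a preference
N_HIGH_EFFORT = 12  # rats preferring the high-effort flavor

p_one_sided = binomial_upper_tail(N_HIGH_EFFORT, N_RATS)
p_two_sided = min(1.0, 2 * p_one_sided)

print(f"Proportion preferring high-effort flavor: {N_HIGH_EFFORT / N_RATS:.1%}")
print(f"One-sided exact binomial p = {p_one_sided:.3f}")
print(f"Two-sided exact binomial p = {p_two_sided:.3f}")
```

Both p values exceed the conventional .05 criterion, so a 12-to-5 split among 17 animals is compatible with chance, even though its direction is consistent with a contrast effect.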

Other failed attempts to replicate the Clement et al. (2000) results are of more concern because the subjects and procedures were quite similar to those of the original experiment (Vasconcelos, Urcuioli, & Lionello-DeNolf, 2007, as well as unpublished data from our own lab). When we first started to examine this contrast effect we recognized that we had no means of assessing the association that was developing between the simultaneous discriminative stimuli and the preceding event that was being manipulated. As a result, we provided what we thought would be sufficient overtraining (20 sessions) to ensure a strong association. In later research, however, we began to monitor the development of the contrast effect (see, e.g., Friedrich & Zentall, 2004) and discovered that contrast was slower to develop than we had thought. In that study, reliable contrast effects developed only after 60 training sessions. Given the rapid acquisition of the simultaneous discriminations, this was quite a bit more than the 20 overtraining sessions we had used in the original study.

More recently, we have used a procedure in which pigeons are tested for contrast immediately following acquisition of the simultaneous discriminations and then once every 10 sessions thereafter (Singer et al., 2007). In that experiment, a contrast effect began to emerge after 20 sessions of overtraining but it was statistically reliable only after 30 overtraining sessions. Furthermore, in an unpublished experiment we recently conducted, although contrast effects began to emerge after 40 sessions of overtraining, it was not until after the pigeons had undergone 50 overtraining sessions that a statistically reliable contrast effect was found.

Within-Trial Contrast: What Kind of Contrast is it?

The within-trial contrast effect described in this article appears to be somewhat different from other forms of contrast that have been studied. To better appreciate the differences among the various forms of contrast, it would be useful to characterize each of them.

Incentive Contrast

In incentive contrast, the magnitude of reinforcement that has been experienced for many trials suddenly changes, and the change in behavior that follows is compared with the behavior of a comparison group that has experienced the final magnitude of reinforcement from the start. An early example of incentive contrast was reported by Tinklepaugh (1928), who found that if monkeys were trained for a number of trials with a preferred food (e.g., fruit), when they then encountered a less preferred food (e.g., lettuce, a reinforcer for which they would normally work) they often would refuse to eat it.

Incentive contrast was studied more systematically by Crespi (1942; see also Mellgren, 1972). Rats trained to run for a large amount of food and shifted to a small amount typically ran slower than rats trained to run for the smaller amount of food from the start (negative incentive contrast). Conversely, rats trained to run for a small amount of food and shifted to a large amount were found to run faster than rats trained to run for the larger amount of food from the start (positive incentive contrast). By its nature, incentive contrast must be assessed following the shift in reinforcer magnitude rather than in anticipation of the change because, usually, only a single shift is experienced.

Several accounts of incentive contrast have been proposed. For example, Capaldi (1972) proposed that the behavioral effect of downward shifts in reinforcer magnitude could be attributed to generalization decrement. According to this account, any change in context should lead to a performance decrement, and a change in the magnitude of reinforcement can be thought of as a change in context. The appeal of the generalization decrement account is its simplicity. However, the supporting evidence for it has not been strong. For example, although this account implies that contrast should depend on the novelty of the shift in reinforcer magnitude, incentive contrast effects have been found even with repeated shifts between large and small reinforcers (Maxwell, Calef, Murry, Shepard, & Norville, 1976; Shanab, Domino, & Ralph, 1978).

Furthermore, generalization decrement has difficulty explaining positive incentive contrast. Indeed, it predicts an effect in the opposite direction. That is, when animals are shifted from a low magnitude of reinforcement to a high magnitude of reinforcement, the generalization decrement account predicts slower running than by animals that ran to the higher reinforcer magnitude from the start. Although positive incentive contrast has not been as easy to obtain as negative incentive contrast (due perhaps to ceiling effects, see Franchina & Brown, 1971), there is sufficient evidence for its existence (Crespi, 1942, 1944; Zeaman, 1949) to question any theory that predicts a decrement in running speed with any change in the magnitude of reinforcement.

Amsel's (1958) frustration theory also has been applied to incentive contrast effects (see also Gray, 1987). According to frustration theory, withdrawal of reinforcement (or, in the case of incentive contrast, a reduction of reinforcement) causes frustration which competes with responding. Although frustration theory does not address itself to findings of positive incentive contrast, one can posit, as Crespi (1942) did, an opposite kind of emotional response, ‘elation,’ to account for faster running when the reinforcer shifts from a lower magnitude to a higher magnitude.

Anticipatory Contrast

A second form of contrast, anticipatory contrast, involves repeated experiences with the incentive shift, and the dependent measure involves behavior that occurs prior to the anticipated change in reinforcer value. Furthermore, the behavior assessed is typically consummatory rather than nonconsummatory (like running). This research has found that rats typically drink less of a weak saccharin solution if it is followed by a strong sucrose solution, relative to a control group for which saccharin is followed by an identical concentration of saccharin (Flaherty, 1982). The fact that the measure of contrast involves differential rates of the consumption of a reinforcer makes this form of contrast quite different from the others.

The most obvious account of negative anticipatory contrast is that the initial saccharin solution was devalued by its association with the following preferred strong sucrose solution (Flaherty & Checke, 1982). However, tests of this theory have not provided support for it. For example, in a within-subject design, Flaherty, Coppotelli, Grigson, Mitchell, and Flaherty (1995) trained rats with an initial saccharin solution that was followed by a sucrose solution with one flavor or odor cue (S1) and another saccharin solution that was followed by a similar saccharin solution but with a different flavor or odor cue (S2). Although negative anticipatory contrast was found (the rats drank less of the saccharin solution that was followed by a sucrose solution than the one followed by another saccharin solution), there was no evidence of a preference for the flavor or odor associated with the more consumed solution (S2). Thus, the reinforcer associated with the two distinctive cues appeared to have similar value.

An alternative theory proposed by Flaherty (1996) to account for negative anticipatory contrast is response competition. According to this account, when the sucrose solution appears at a location that is different from the saccharin solution, animals anticipate the appearance of the sucrose solution and leave the saccharin solution early. However, research has shown that when the two solutions are presented at the same location, a significant negative anticipatory contrast effect is still found (Flaherty, Grigson, Coppotelli, & Mitchell, 1996).

A third theory, also proposed by Flaherty (1996), is that anticipation of the sucrose solution actively inhibits drinking of the saccharin solution in preparation for additional drinking of the sucrose solution. This theory is supported by the negative correlation between the amount of suppression of saccharin drinking and the amount of sucrose drinking (Flaherty, Turovsky, & Krauss, 1994). If inhibition of drinking the first solution is responsible for anticipatory contrast, then it should also be possible to eliminate the contrast by administering a disinhibitory drug such as chlordiazepoxide. However, Flaherty and Rowan (1988) found that this drug had little effect on the anticipatory contrast effect. Thus, the mechanism underlying anticipatory contrast is still not well understood.

Differential or Behavioral Contrast

A third form of contrast involves signals for two different outcomes. When used in a discrete-trial procedure with rats, the procedure has been referred to as simultaneous incentive contrast. Bower (1961), for example, reported that rats trained to run to both large and small magnitudes of reinforcement that were signaled by the brightness of the alley ran slower to the small magnitude of reinforcement than rats that ran only to the small magnitude of reinforcement.

The more often studied, free-operant analog of this task results in what has been called behavioral contrast. To observe behavioral contrast, pigeons, for example, are trained on an operant task involving a multiple schedule of reinforcement in which two (or more) schedules, each signaled by a distinctive stimulus, are randomly alternated. Positive behavioral contrast can be demonstrated by training pigeons initially with equal-probability-of-reinforcement schedules (e.g., two variable-interval 60-s schedules) and then reducing the probability of reinforcement in one schedule (e.g., from variable-interval 60 s to extinction) and noting an increase in the response rate in the other, unaltered schedule (Halliday & Boakes, 1971; Reynolds, 1961).

It is difficult to classify behavioral contrast according to whether it involves a response to entering the richer schedule (as with incentive contrast) or the anticipation of entering the poorer schedule (as with anticipatory contrast) because during each session, there are multiple transitions from the richer to the poorer schedule and vice versa. Are the pigeons reacting to the current schedule in the context of the poorer preceding schedule, in anticipation that the next schedule may be poorer, or both?

Williams (1981) attempted to distinguish among these alternatives by presenting pigeons with triplets of signaled trials in an ABA design (with the richer schedule designated as A) and comparing their behavior to that of pigeons trained with an AAA design. Each triplet was followed by a 30-s intertrial interval to separate its effects from the preceding and following triplet. Williams found very different kinds of contrast in the first and last A components of the ABA sequence. In the first A component, he typically found a higher relative level of responding that was maintained over training sessions (see also Williams, 1983). In the last A component, however, he found a higher relative level of responding primarily at the start of the component (an effect known as local contrast; see Terrace, 1966) which was not maintained over training sessions (see also Cleary, 1992; Malone, 1976; but see Green & Holt, 2003). Thus, there is evidence that behavioral contrast may be attributable primarily to the higher rate of responding in anticipation of the poorer schedule, rather than in response to the appearance of the richer schedule (Williams, 1981; see also Williams & Wixted, 1986).

It typically is accepted that the higher rate of responding to the stimulus associated with the richer schedule of reinforcement occurs because, in the context of the poorer schedule, that stimulus is a better predictor of reinforcement (Keller, 1974). There is evidence, however, that it is not that the richer schedule appears better, but that the richer schedule will soon get worse. Williams (1992) found that although pigeons peck at a higher rate to stimuli that predict a worsening schedule of reinforcement, when given a choice between two similar schedules, one that occurs prior to a worsening schedule of reinforcement and the other that occurs prior to a nonworsening schedule of reinforcement, they prefer the one that occurs prior to a nonworsening schedule.

The implication of this finding is that the increased responding associated with the richer schedule does not reflect its greater value to the pigeon but, rather, its function as a signal that conditions will soon get worse (i.e., that the opportunity to obtain reinforcement will soon diminish). This analysis suggests that a compensatory or learned response is likely to be responsible for anticipatory contrast (Flaherty, 1982) and, in the case of behavioral contrast, responding in anticipation of a worsening schedule (Williams, 1981). In this sense, these two forms of contrast are probably quite different from the perceptual-like detection process involved in incentive contrast.

Recently, Williams and McDevitt (2001) have tried to identify the mechanisms responsible for the dissociation of response rate and choice. They attempted to separate the Pavlovian function of stimuli that signaled the upcoming schedule of reinforcement from their instrumental function by including an initial link during which reinforcement could not be obtained (the Pavlovian function), followed by a link during which reinforcement could be obtained (the instrumental function), followed by the terminal link that involved the manipulated schedule. They found a higher response rate and greater preference for stimuli in the initial (presumably Pavlovian) link when the terminal link was richer (induction) but a higher response rate and greater preference for stimuli in the following (presumably instrumental) link when the terminal link was poorer (contrast).

Within-Trial Contrast

The within-trial contrast effect reported in the present article cannot neatly be adapted to any of the aforementioned contrast effects. For example, with incentive contrast, there is a sudden unanticipated change in the magnitude of reinforcement that occurs between phases of an experiment, whereas within-trial contrast involves predictable events very early in each trial.

Anticipatory contrast is the reduction in consummatory behavior in anticipation of an improvement in reinforcement. This seems similar to within-trial contrast because these transitions are experienced many times and contrast depends on the animal's ability to predict forthcoming improvement in reinforcing conditions. However, anticipatory contrast occurs at a time in the trial prior to the experimental manipulation, whereas within-trial contrast occurs at a time in the trial following the experimental manipulation. Furthermore, as previously mentioned, the measure of anticipatory contrast involves differential rates of consumption of a reinforcer rather than preference (as is the case with within-trial contrast) or running speed (as is the case with incentive contrast). The Williams and McDevitt (2001) study is of interest in this regard because when they isolated the instrumental pecking-for-food response from the Pavlovian anticipatory response, results were obtained similar to those found with anticipatory consummatory contrast.

Differential or behavioral contrast also appears to be similar to within-trial contrast because the former involves the random alternation of two signaled outcomes. But with behavioral contrast, it is more difficult to specify the source of the contrast because anticipatory and consequent effects are often confounded although, as already noted, when those effects have been isolated it appears that the anticipatory effects are greater and longer lasting (Williams, 1981).

Within-trial contrast of the kind described here would appear to be most similar to local contrast effects found with behavioral contrast procedures. Local contrast occurs at the start of a trial with a stimulus associated with a richer schedule when that trial immediately follows a trial with a stimulus associated with a poorer schedule (or the reverse), and those transitions are at least somewhat predictable. However, local contrast effects typically are rather transient, especially when the schedule components are highly discriminable (Malone, 1976; see also Green & Rachlin, 1975), as the schedule components are in the present experiments. The within-trial contrast effect described here, by comparison, appears to develop quite slowly, appearing only after considerable training. Furthermore, the measure of local contrast is typically an increase in response rate whereas the measure of within-trial contrast involves a preference test, and these measures are not always correlated (Williams, 1992).

Justification of Effort

Social psychologists have studied human behavior in which there is a discrepancy between one's overt behavior and one's verbal expression of how one believes one should act. Researchers have been interested in how people modify their beliefs so as to resolve this discrepancy. In their accounts of these effects, social psychologists have often relied on complex social constructs involving such abstractions as cognitive dissonance (Festinger, 1957), self concept (Bem, 1967) and social norms (Tedeschi, Schlenker, & Bonoma, 1971), concepts that are difficult to define and are even more difficult to study experimentally. However, the contrast model depicted in Figure 4 may be able to provide a more parsimonious account of at least one of those phenomena, the justification of effort.

When it is difficult or embarrassing to obtain a reinforcer, social psychologists have proposed that the resulting cognitive dissonance imparts added value to the reinforcers in order to justify their ‘cost.’ For example, Aronson and Mills (1959) had participants read aloud different types of material as an initiation test prior to listening to a group discussion. Participants who read aloud embarrassing, sexually explicit material (severe test) judged the later group discussion as more interesting than those who read material that was not so sexually explicit (mild test). Aronson and Mills argued that to justify their behavior (reading embarrassing material aloud), participants experiencing the severe test had to increase the value of listening to the group discussion that followed.

A similar result was reported by Aronson and Carlsmith (1963) who showed that children who were threatened with severe punishment if they played with a forbidden toy (‘If you played with it I would be very angry’) valued the toy more than if they were threatened with mild punishment (‘If you played with it I would be annoyed’). Although none of the children played with the toy, it was argued that the greater threat of punishment had increased the value of the toy.

Interpretation of the results of these experiments is made difficult because of the complexity of the social context. For example, how might past experiences with initiations affect one's judgment of group value? High-initiation groups may objectively have more value (at least socially) than low-initiation groups. And in the case of punishment severity, playing with valuable objects in the past had probably been associated with greater threat of punishment, so the assumption that a more strongly forbidden toy is more valuable may be quite valid.

More important, the design of the justification-of-effort experiment actually is quite similar to the design of the within-trial contrast experiments we have described. What is manipulated in justification of effort is the aversiveness of the prior initiation event or the threat of punishment and the dependent measure is the effect that this manipulation has on the value of the reinforcer, the expected group discussion, or the possibility of playing with the toy that would presumably follow (Aronson & Carlsmith, 1963; Aronson & Mills, 1959). Similarly, in the within-trial contrast experiment, the relative aversiveness of the initial event is manipulated and the dependent measure is the relative value of the discriminative stimuli that follow.

Festinger himself believed that his theory also applied to the behavior of nonhuman animals (Lawrence & Festinger, 1962) and felt that animals experienced dissonance between expectations and behavior similar to that of humans. Alternatively, it may be that cognitive dissonance does not require a social cognitive account. Instead, it may be a form of contrast in which the value of a reinforcer depends on the relative improvement in conditions leading up to the reinforcer (as in the justification-of-effort design).

Contrast effects also may be involved in several counterintuitive social psychological phenomena, including other forms of cognitive dissonance (e.g., Festinger & Carlsmith, 1959), the supposedly paradoxical effects of extrinsic reinforcement on intrinsic motivation (Deci, 1975), and learned industriousness (Eisenberger, 1992). (For a more extensive examination of the potential application of contrast to various social-psychological phenomena, see Zentall, Clement, Friedrich, & DiGian, 2006.) At some point it would be valuable to examine the role of contrast in these phenomena to determine if simpler accounts would work as well as the complex social-psychological explanations that traditionally have been proposed.

Conclusions

In this article we present evidence for a form of contrast that appears to be somewhat different from other, more familiar forms of contrast. In within-trial contrast, reinforcers or the stimuli that signal them are preferred if they follow events that are relatively less preferred than other prior events. Those less preferred, prior events can be high effort (a greater number of responses), a longer delay to reinforcement, or the absence of food relative to low effort (fewer responses), a shorter delay to reinforcement, or food, respectively. This contrast also can occur when the greater number of responses or the absence of food is signaled but occurs on trials correlated with, but separate from, the stimuli used in the preference assessment. We have attempted to account for this form of contrast in terms of the relative change in value between the end of the event (more or less effort, longer or shorter delay, or the presence vs. the absence of food) and the reinforcer (or the signal for reinforcement).
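The relative-change account summarized above can be illustrated with a minimal sketch. The additive form (reinforcer value minus prior-event value), the function name, and every number below are assumptions chosen for illustration; none of them are taken from the experiments reported here.

```python
# Illustrative sketch only: the subtraction rule and all numbers are
# assumptions, not equations or data from the article.

def contrast_value(prior_event_value: float, reinforcer_value: float) -> float:
    """Relative value of a reinforcer as the change from the preceding event."""
    return reinforcer_value - prior_event_value

# Hypothetical hedonic values on an arbitrary scale (more negative = more aversive).
PRIOR_EVENTS = {
    "high effort (many pecks)": -2.0,
    "low effort (few pecks)": -0.5,
}
REINFORCER_VALUE = 1.0  # the same signaled reinforcer follows both prior events

relative_values = {
    name: contrast_value(value, REINFORCER_VALUE)
    for name, value in PRIOR_EVENTS.items()
}
preferred = max(relative_values, key=relative_values.get)

for name, value in relative_values.items():
    print(f"{name}: change in value = {value:+.1f}")
print("Predicted preference: stimulus that follows", preferred)
```

Under these assumed values the larger change in value follows the more aversive prior event, so the sketch predicts a preference for the stimulus that follows high effort, which is the direction of the within-trial contrast effect.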

The within-trial contrast effect appears to result from a mechanism different from those underlying other forms of contrast that have been studied. Although it resembles local contrast in that it occurs after the schedule manipulation, it differs from local contrast in the point during training at which it appears. Local contrast typically occurs early in training and then diminishes, whereas within-trial contrast appears to occur only after considerable training.

In many cases the contrast account makes the same predictions as the delay-reduction hypothesis, but it can also explain why the effect appears to depend on the signaling of the events or conditioned aversive stimuli that occur prior to the reinforcer. Without the addition of another mechanism, the delay-reduction hypothesis also has difficulty accounting for the effects obtained when the differential events do not actually occur on the same trials on which the value of the reinforcer is assessed. Furthermore, when trial duration is equated, preferences for prior events are inversely related to the preferences for the discriminative stimuli that follow.

Finally, the within-trial contrast effect described here bears a striking similarity to the justification-of-effort effect studied by social psychologists. Yet that effect typically has been attributed to cognitive dissonance. The similarity raises the possibility that both effects result from the same underlying process and neither requires an account based on cognitive dissonance. Instead, the justification-of-effort phenomenon may be another example of contrast. Certainly, contrast provides a more parsimonious account of those and other related effects.

Acknowledgments

The research reported in this article was supported by Grants MH59194 and MH63726 from the National Institute of Mental Health to the first author and Grant MH077450 from the National Institute of Mental Health to the second author. We thank Peter Urcuioli and three anonymous reviewers for their helpful suggestions and constructive criticisms of an earlier version of this article. We also thank Tricia Clement, Andrea Friedrich, and Kelly DiGian for their assistance with the research.

References

- Amsel A. The role of frustrative nonreward in noncontinuous reward situations. Psychological Bulletin. 1958;55:102–119. doi: 10.1037/h0043125.

- Armus H.L. Effects of response effort on secondary reward value. Psychological Reports. 1999;84:323–328.

- Armus H.L. Effect of response effort on the reward value of distinctively flavored food pellets. Psychological Reports. 2001;88:1031–1034. doi: 10.2466/pr0.2001.88.3c.1031.

- Aronson E, Carlsmith J.M. Effects of severity of threat in the devaluation of forbidden behavior. Journal of Abnormal and Social Psychology. 1963;66:584–588.

- Aronson E, Mills J. The effect of severity of initiation on liking for a group. Journal of Abnormal and Social Psychology. 1959;59:177–181. doi: 10.1037/h0042162.

- Bem D.J. Self-perception: An alternative interpretation of cognitive dissonance phenomena. Psychological Review. 1967;74:183–200. doi: 10.1037/h0024835.

- Bower G.H. A contrast effect in differential conditioning. Journal of Experimental Psychology. 1961;62:196–199.

- Capaldi E.J. Successive negative contrast effect: Intertrial interval, type of shift, and four sources of generalization decrement. Journal of Experimental Psychology. 1972;96:433–438.

- Capaldi E.D, Myers D.E. Taste preferences as a function of food deprivation during original taste exposure. Animal Learning & Behavior. 1982;10:211–219.

- Capaldi E.D, Myers D.E, Campbell D.E, Sheffer J.D. Conditioned flavor preferences based on hunger level during original flavor exposure. Animal Learning & Behavior. 1983;11:107–115.

- Chung S.H, Herrnstein R.J. Choice and delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1967;10:67–74. doi: 10.1901/jeab.1967.10-67.

- Cleary T.L. The relationship of local to overall behavioral contrast. Bulletin of the Psychonomic Society. 1992;30:58–60.

- Clement T.S, Feltus J, Kaiser D.H, Zentall T.R. “Work ethic” in pigeons: Reward value is directly related to the effort or time required to obtain the reward. Psychonomic Bulletin & Review. 2000;7:100–106. doi: 10.3758/bf03210727.

- Clement T.S, Zentall T.R. Second-order contrast based on the expectation of effort and reinforcement. Journal of Experimental Psychology: Animal Behavior Processes. 2002;28:64–74.

- Crespi L.P. Quantitative variation in incentive and performance in the white rat. American Journal of Psychology. 1942;40:467–517.

- Crespi L.P. Amount of reinforcement and level of performance. Psychological Review. 1944;51:341–357.

- Dember W.N, Fowler H. Spontaneous alternation behavior. Psychological Bulletin. 1958;55:412–428. doi: 10.1037/h0045446.

- Deci E. Intrinsic motivation. New York: Plenum; 1975.

- DiGian K.A, Friedrich A.M, Zentall T.R. Reinforcers that follow a delay have added value for pigeons. Psychonomic Bulletin & Review. 2004;11:889–895. doi: 10.3758/bf03196717.

- Eisenberger R. Learned industriousness. Psychological Review. 1992;99:248–267. doi: 10.1037/0033-295x.99.2.248.

- Fantino E, Abarca N. Choice, optimal foraging, and the delay-reduction hypothesis. Behavioral and Brain Sciences. 1985;8:315–330.

- Festinger L. A theory of cognitive dissonance. Evanston, IL: Row, Peterson; 1957.

- Festinger L, Carlsmith J.M. Cognitive consequences of forced compliance. Journal of Abnormal and Social Psychology. 1959;58:203–210. doi: 10.1037/h0041593.

- Flaherty C.F. Incentive contrast. A review of behavioral changes following shifts in reward. Animal Learning & Behavior. 1982;10:409–440.

- Flaherty C.F. Incentive relativity. New York: Cambridge University Press; 1996.

- Flaherty C.F, Checke S. Anticipation of incentive gain. Animal Learning & Behavior. 1982;10:177–182.

- Flaherty C.F, Coppotelli C, Grigson P.S, Mitchell C, Flaherty J.E. Investigation of the devaluation interpretation of anticipatory negative contrast. Journal of Experimental Psychology: Animal Behavior Processes. 1995;21:229–247. doi: 10.1037//0097-7403.21.3.229.

- Flaherty C.F, Grigson P.S, Coppotelli C, Mitchell C. Anticipatory contrast as a function of access time and spatial location of saccharin and sucrose solutions. Animal Learning & Behavior. 1996;24:68–81.

- Flaherty C.F, Rowan G.A. Effect of intersolution interval, chlordiazepoxide, and amphetamine on anticipatory contrast. Animal Learning & Behavior. 1988;16:47–52.

- Flaherty C.F, Turovsky J, Krauss K.L. Relative hedonic value modulates anticipatory contrast. Physiology & Behavior. 1994;55:1047–1054. doi: 10.1016/0031-9384(94)90386-7.

- Franchina J.J, Brown T.S. Reward magnitude shift effects in rats with hippocampal lesions. Journal of Comparative and Physiological Psychology. 1971;76:365–370. doi: 10.1037/h0031375.

- Friedrich A.M, Clement T.S, Zentall T.R. Reinforcers that follow the absence of reinforcement have added value for pigeons. Learning & Behavior. 2005;33:337–342. doi: 10.3758/bf03192862.

- Friedrich A.M, Zentall T.R. Pigeons shift their preference toward locations of food that take more effort to obtain. Behavioural Processes. 2004;67:405–415. doi: 10.1016/j.beproc.2004.07.001.

- Gray J.A. The psychology of fear and stress. Cambridge, MA: Cambridge University Press; 1987.

- Green L, Rachlin H. Economic and biological influences on a pigeon's keypeck. Journal of the Experimental Analysis of Behavior. 1975;23:55–62. doi: 10.1901/jeab.1975.23-55.

- Green L, Holt D.D. Economic and biological influences on key pecking and treadle pressing in pigeons. Journal of the Experimental Analysis of Behavior. 2003;80:43–58. doi: 10.1901/jeab.2003.80-43.

- Halliday M.S, Boakes R.A. Behavioural contrast and response-independent reinforcement. Journal of the Experimental Analysis of Behavior. 1971;16:429–434. doi: 10.1901/jeab.1971.16-429.

- Hearst E. Backward associations: Differential learning about stimuli that follow the presence versus the absence of food in pigeons. Animal Learning & Behavior. 1989;17:280–290.

- Jellison J.L. Justification of effort in rats: Effects of physical and discriminative effort on reward value. Psychological Reports. 2003;93:1095–1100. doi: 10.2466/pr0.2003.93.3f.1095.

- Kacelnik A, Marsh B. Cost can increase preference in starlings. Animal Behaviour. 2002;63:245–250.

- Keller K. The role of elicited responding in behavioral contrast. Journal of the Experimental Analysis of Behavior. 1974;21:249–257. doi: 10.1901/jeab.1974.21-249.

- Lawrence D.H, Festinger L. Deterrents and reinforcement: The psychology of insufficient reward. Stanford, CA: Stanford University Press; 1962.

- Malone J.C., Jr Local contrast and Pavlovian induction. Journal of the Experimental Analysis of Behavior. 1976;26:425–440. doi: 10.1901/jeab.1976.26-425.

- Marsh B, Schuck-Paim C, Kacelnik A. Energetic state during learning affects foraging choices in starlings. Behavioral Ecology. 2004;15:396–399.

- Maxwell F.R, Calef R.S, Murry D.W, Shepard J.C, Norville R.A. Positive and negative successive contrast effects following multiple shifts in reward magnitude under high drive and immediate reinforcement. Animal Learning & Behavior. 1976;4:480–484.

- Mellgren R.L. Positive and negative contrast effects using delayed reinforcement. Learning and Motivation. 1972;3:185–193.

- Pompilio L, Kacelnik A, Behmer S.T. State-dependent learned valuation drives choice in an invertebrate. Science. 2006 Mar 17;311:1613–1615. doi: 10.1126/science.1123924.

- Revusky S.H. Hunger level during food consumption: Effects on subsequent preference. Psychonomic Science. 1967;7:109–110.

- Reynolds G.S. Behavioral contrast. Journal of the Experimental Analysis of Behavior. 1961;4:57–71. doi: 10.1901/jeab.1961.4-57.

- Shanab M.E, Domino J, Ralph L. Effects of repeated shifts in magnitude of food reward upon the barpress rate in the rat. Bulletin of the Psychonomic Society. 1978;12:29–31.

- Singer R.A, Berry L.M, Zentall T.R. Preference for a stimulus that follows an aversive event: Contrast or delay reduction? Journal of the Experimental Analysis of Behavior. 2007;87:275–285. doi: 10.1901/jeab.2007.39-06.

- Spetch M.L, Wilkie D.M, Pinel J.P.J. Backward conditioning: A reevaluation of the empirical evidence. Psychological Bulletin. 1981;89:163–175.

- Tedeschi J.T, Schlenker B.R, Bonoma T.V. Cognitive dissonance: Private ratiocination or public spectacle? American Psychologist. 1971;26:685–695.

- Terrace H.S. Stimulus control. In: Honig W.K, editor. Operant behavior: Areas of research and application. New York: Appleton-Century-Crofts; 1966. pp. 271–344.

- Tinklepaugh O.L. An experimental study of representative factors in monkeys. Journal of Comparative Psychology. 1928;8:197–236.

- Vasconcelos M, Urcuioli P.J, Lionello-DeNolf K.M. Failure to replicate the “work ethic” effect in pigeons. Journal of the Experimental Analysis of Behavior. 2007;87:383–399. doi: 10.1901/jeab.2007.68-06.

- Williams B.A. The following schedule of reinforcement as a fundamental determinant of steady state contrast in multiple schedules. Journal of the Experimental Analysis of Behavior. 1981;35:293–310. doi: 10.1901/jeab.1981.35-293.

- Williams B.A. Another look at contrast in multiple schedules. Journal of the Experimental Analysis of Behavior. 1983;39:345–384. doi: 10.1901/jeab.1983.39-345.