Abstract

Objectives: The study sought to determine whether two major resources for primary care questions differ significantly in information content and whether the number of documents found differs by disease category, patient age, or patient gender.

Methods: Seven hundred fifty-two questions were randomly selected from the Clinical Questions Collection of the National Library of Medicine. UpToDate and the National Guidelines Clearinghouse (NGC) were searched using keywords from the questions. The number of documents retrieved for each question in the resources was recorded. Chi-squared analysis was used to compare differences in retrieval between the resources. Logistic regression was used to evaluate the effect of patient age, patient gender, and disease category on the ability to find content.

Results: UpToDate returned 1 or more documents for 580 questions, while NGC returned at least 1 document for 493 questions (77.1% versus 65.5% of questions sampled, P = 0.001). In combination, the 2 resources returned content for 91% of searches (n = 685). Among questions for which documents were found, NGC retrieved a mean of 16.3 documents per question versus 8.7 documents from UpToDate. Disease category was the only variable that significantly affected whether online resource content was found.

Conclusions: UpToDate had greater breadth of content than NGC, while neither resource provided complete coverage. Current practice guidelines, as reflected by those in the NGC, addressed at most two-thirds of the selected clinical questions.

Highlights

Neither clinical practice guidelines nor an extremely popular subscription resource, both often used for decision support in electronic medical records, addressed all randomly selected clinical questions.

Implications

Some overlapping content in library collections is likely necessary to achieve more comprehensive coverage of clinical questions.

Given the current state of knowledge resources, hospitals may need to maintain access to subscription-based resources to address clinical information needs.

Future research may include assessment of content quality and coverage for clinical questions from a spectrum of specialties and consideration of additional knowledge resources.

INTRODUCTION

It is now virtually impossible for clinicians, especially primary care practitioners, to know all of the information needed to adequately manage patients [1]. Although literature searches can improve the care of patients [2], time constraints and other factors result in many physicians not pursuing answers to their clinical questions [1, 3–8]. A number of clinically focused resources, such as the National Guidelines Clearinghouse (NGC) and UpToDate, have been developed to help clinicians access information quickly and address such information needs more easily.

However, in 2001, Alper and colleagues studied 2 experienced physician searchers and found that no single knowledge resource answered more than 70% of the clinicians' questions [9]. In that study, 4 databases were required to answer 95% of the sample questions [9]. Two more recent studies also found that evidence-based medicine (EBM) or more synthesized resources did not provide answers for one-quarter to one-third of the sampled clinical questions [10, 11].

Given the continuing focus on knowledge resource development in health care, the current study attempted to estimate how often nonexpert searchers are likely to find potentially relevant information in two knowledge resources, UpToDate and NGC. The current study also explored potential differences in the volume of evidence-based information found in the two online resources by patient gender, patient age, or disease category. Secondary aims included comparing differences in content coverage by patient-related variables, evaluating potential suitability of automated search strategies for identifying relevant data for clinical questions, and estimating the overall coverage of the two resources.

METHODS

Primary care practitioner questions

General practitioner questions were randomly selected from the Clinical Questions Collection from the Lister Hill National Center for Biomedical Communications [12], a part of the National Library of Medicine (NLM). The collection contained approximately 3,000 questions recorded by trained observers in primary care settings during the years 1996 to 2002. Each question record also included demographic information about the patient and doctor involved, including patient age, patient gender, questioner age, year the question was asked, and the reason for the question.

A random number generator was used to generate a non-repetitive list of numbers between 1 and 2,987 (corresponding to the record numbers in the Clinical Questions Collection). A search algorithm was then run to select the records matching the first 752 random numbers. This sample size was selected to detect an absolute difference of 10% in coverage between the 2 resources at a 5% level of significance (one-tailed test).
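The sampling step can be sketched in Python as follows. This is a minimal illustration, not the procedure the authors actually ran: the study does not name its random number generator, and the seed is an arbitrary assumption added only to make the draw reproducible. Shuffling all 2,987 record numbers and keeping the first 752 mirrors the "first 752 random numbers" wording and is equivalent to sampling without replacement.

```python
import random

N_RECORDS = 2987   # record numbers 1..2,987 in the Clinical Questions Collection
SAMPLE_SIZE = 752  # number of questions selected for the study


def draw_sample(seed=None):
    """Return SAMPLE_SIZE distinct record numbers chosen uniformly at random.

    A full non-repetitive permutation of 1..N_RECORDS is generated and the
    first SAMPLE_SIZE entries are kept, i.e., sampling without replacement.
    """
    rng = random.Random(seed)
    record_numbers = list(range(1, N_RECORDS + 1))
    rng.shuffle(record_numbers)
    return record_numbers[:SAMPLE_SIZE]


if __name__ == "__main__":
    selected = draw_sample(seed=2005)  # arbitrary illustrative seed
    print(len(selected), selected[:5])
```

Using a dedicated `random.Random` instance keeps the draw independent of any other use of the global generator.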

For each question, trained NLM personnel had assigned keywords that were almost always taken from the Medical Subject Headings (MeSH) database. This procedure is likely to provide an optimistic approximation of which search terms might be chosen by nonexpert searchers or automatically extracted from an electronic health record. The questions were received in extensible markup language (XML) format, parsed, and placed in an Access database.
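A minimal sketch of the parse-and-load step follows. The article does not describe the XML schema, so the element and attribute names (`question`, `id`, `keyword`, `patientAge`, `patientGender`) and the sample record are hypothetical, and Python's built-in SQLite stands in for the Access database the authors used.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Hypothetical record layout, for illustration only.
SAMPLE_XML = """
<questions>
  <question id="17">
    <text>What is the best treatment for septic shock?</text>
    <keyword>Shock, Septic</keyword>
    <keyword>Therapeutics</keyword>
    <patientAge>aged</patientAge>
    <patientGender>female</patientGender>
  </question>
</questions>
"""


def load_questions(xml_text, conn):
    """Parse question records and store them in two tables:
    one row per question and one row per assigned keyword."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS question "
        "(id INTEGER PRIMARY KEY, question_text TEXT, patient_age TEXT, patient_gender TEXT)"
    )
    conn.execute("CREATE TABLE IF NOT EXISTS keyword (question_id INTEGER, term TEXT)")
    root = ET.fromstring(xml_text.strip())
    for q in root.iter("question"):
        qid = int(q.get("id"))
        conn.execute(
            "INSERT INTO question VALUES (?, ?, ?, ?)",
            (qid, q.findtext("text"), q.findtext("patientAge"), q.findtext("patientGender")),
        )
        # A question may carry several keywords; keep each as its own row.
        conn.executemany(
            "INSERT INTO keyword VALUES (?, ?)",
            [(qid, kw.text) for kw in q.findall("keyword")],
        )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_questions(SAMPLE_XML, conn)
    print(conn.execute("SELECT * FROM question").fetchall())
```

Storing keywords in a separate table makes it straightforward to build either a multi-term query (NGC) or a single-term query (UpToDate) from the same records.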

Information resources

UpToDate is a subscription-based clinical information resource available via the Internet, CD-ROM, and the handheld Pocket PC [13]. Although it is a commercial product, UpToDate was chosen over other similar products because it does not have advertising and is endorsed by multiple medical societies [14]. Product documentation indicates that UpToDate is updated every four months through extensive literature surveillance [13, 15, 16].

An initiative of the Agency for Healthcare Research and Quality (AHRQ), NGC is a publicly available online resource of evidence-based clinical guidelines and related documents. It is updated weekly, and its content undergoes an annual verification process to ensure each guideline remains current [17]. NGC was chosen over other publicly available online resources because it is not sponsored by a business or other entity that might influence content. Searching NGC was limited to evidence-based guidelines (those assembled through a systematic approach rather than relying on consensus or expert opinion), operationally defined in this study as those with any value in the NGC “Methods Used to Analyze the Evidence” field other than “Not stated,” “Other,” or “Review.”
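The operational definition of an evidence-based guideline reduces to a simple filter. The sketch below assumes, hypothetically, that each guideline's “Methods Used to Analyze the Evidence” field is available as a list of strings; the method names used in the usage line are illustrative, not quoted from NGC.

```python
# Field values that, under the study's operational definition, do NOT
# qualify a guideline as evidence-based.
EXCLUDED_METHODS = {"Not stated", "Other", "Review"}


def is_evidence_based(methods_field):
    """Return True if any listed method falls outside the excluded set.

    `methods_field` is assumed to be a list of strings, since a guideline
    may list several analysis methods; an empty list does not qualify.
    """
    return any(value.strip() not in EXCLUDED_METHODS for value in methods_field)


if __name__ == "__main__":
    print(is_evidence_based(["Review", "Meta-Analysis"]))  # illustrative values
```

Under this reading of "any value ... other than," a guideline that lists “Review” alongside some other method still qualifies, because only one value needs to fall outside the excluded set.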

Search methods

The search methods approximated searching performed either by nonexpert humans or by automated queries. The keywords present in the Clinical Questions Collection records were used as search terms. Keywords were not changed other than reordering post-coordinated terms (e.g., “shock, septic” was changed to “septic shock”). NGC was searched with “and” inserted between terms. For UpToDate, which does not accept multiple search terms simultaneously, the single most specific keyword was used as the search term for each question (e.g., for a question about administering immunizations during a physical exam, “Immunization” was selected from the assigned MeSH terms as the most specific term in the query).

Searches were executed once. To approximate automated generation of queries, searches that retrieved no documents were not revised. In addition, soon after the study started, informal observations led to one revision in the protocol: when searching UpToDate, the keyword “neoplasm” was changed to “cancer.”
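The query-construction rules described above can be sketched as three small functions. The function names are hypothetical, only single-comma headings are reordered, and selecting the “most specific” keyword for UpToDate was a per-question judgment in the study, so the sketch takes that keyword as input rather than computing it. The substitution table reflects the single protocol revision; because the article refers to that keyword as both “neoplasm” and “neoplasms,” both spellings are mapped.

```python
def uninvert(term):
    """Reorder a post-coordinated heading, e.g., "shock, septic" -> "septic shock".

    Only headings with exactly one comma are reordered (a simplifying
    assumption); anything else is returned unchanged apart from trimming.
    """
    parts = [p.strip() for p in term.split(",")]
    if len(parts) == 2:
        return f"{parts[1]} {parts[0]}"
    return term.strip()


def ngc_query(keywords):
    """NGC: all assigned keywords, reordered, joined with "and"."""
    return " and ".join(uninvert(k) for k in keywords)


# The one protocol revision: this keyword is searched in UpToDate as "cancer".
UPTODATE_SUBSTITUTIONS = {"neoplasm": "cancer", "neoplasms": "cancer"}


def uptodate_query(most_specific_keyword):
    """UpToDate: a single keyword, chosen by the searcher, after reordering
    and the "neoplasm" -> "cancer" substitution."""
    term = uninvert(most_specific_keyword)
    return UPTODATE_SUBSTITUTIONS.get(term.lower(), term)


if __name__ == "__main__":
    print(ngc_query(["shock, septic", "therapeutics"]))
    print(uptodate_query("Neoplasms"))
```

Consistent with the protocol, nothing here retries a query that returns no documents; each search is issued once.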

Measurement and analytic strategies

The number of documents retrieved in both UpToDate and NGC was recorded for each randomly selected question. The number of documents retrieved for each online resource, the dependent variable for both hypotheses, was dichotomized into content found (at least one document) and no content found (no documents).
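Dichotomizing the dependent variable amounts to a threshold at one document. A minimal sketch, assuming a hypothetical mapping from question ID to retrieval count for a single resource:

```python
from collections import Counter


def content_found(doc_count):
    """Dichotomize a retrieval count: at least one document means content found."""
    return doc_count >= 1


def tally(counts):
    """Count questions with and without content for one resource.

    `counts` maps a question ID to the number of documents retrieved.
    """
    c = Counter(content_found(n) for n in counts.values())
    return {"found": c[True], "not_found": c[False]}


if __name__ == "__main__":
    print(tally({1: 0, 2: 3, 3: 305}))  # hypothetical question IDs and counts
```

Applied to each resource separately, these two tallies supply the rows of the 2×2 table used in the chi-square comparison.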

The independent variables examined as predictors of content coverage included 3 patient-specific characteristics from each question record in the Clinical Questions Collection: patient age, patient gender, and disease category. Patient age was categorized as infant (age 0–23 months), child (age 0–18 years), adolescent (age 13–18), adult (age 19–44), middle age (age 45–64), aged (age 65 or more), and unclassified. Patient gender was recorded as male, female, or unknown.

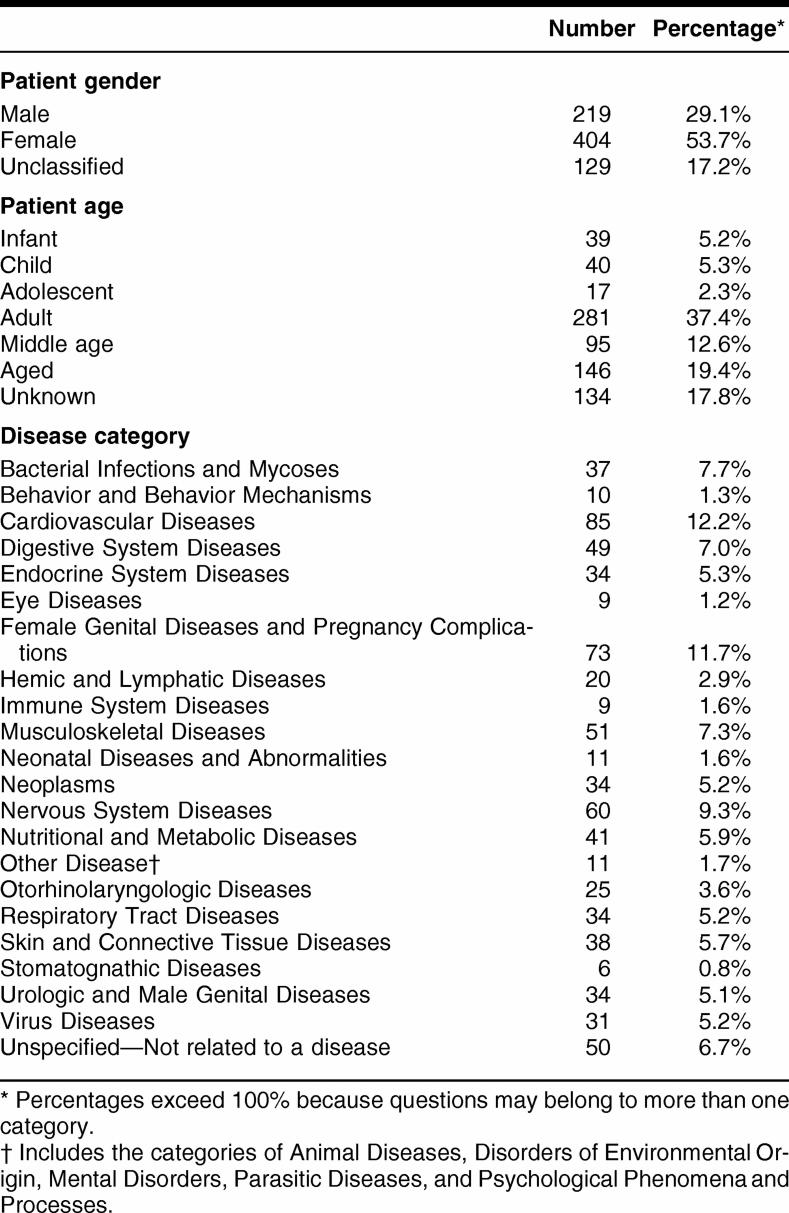

Disease categories were taken from the MeSH terms used by NLM, extracted from the Clinical Questions Collection record indexing. All of the categories under the MeSH term “Disease” were used, as were two categories under “Psychiatry and Psychology” (Table 1).

Table 1 Characteristics of patients prompting the sampled questions (n = 752)

The initial hypothesis, that the 2 resources differ in their ability to return content in an automated manner, was addressed by comparing the number of documents retrieved. The second hypothesis, exploring reasons for differences in content coverage, was addressed by comparing the proportion of questions for which at least 1 document was found in UpToDate with the same proportion in NGC. Both analyses used the chi-square test. Logistic regression [18] measured the influence of disease category, patient age, and patient gender on whether each resource returned at least one document. In all analyses, significance was assumed if P < 0.05.
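As a worked illustration of the chi-square comparison, the sketch below computes Pearson's statistic for the 2×2 table of content found versus not found, using the counts reported in the Results (UpToDate 580 of 752, NGC 493 of 752). It assumes no continuity correction and treats the two resources as independent samples; the article specifies neither point, and because the same 752 questions were searched in both resources, a paired test such as McNemar's would be an alternative. The output is therefore not guaranteed to reproduce the reported P value.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table
        [[a, b],
         [c, d]]
    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row = (a + b, c + d)
    col = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # For 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


if __name__ == "__main__":
    # Rows: UpToDate, NGC; columns: content found, no content found.
    print(chi_square_2x2(580, 752 - 580, 493, 752 - 493))
```

With these counts the statistic is about 24.6 on 1 degree of freedom.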

RESULTS

The patients who prompted the questions were typically female and at least 65 years of age (Table 1). More questions (12.2%) fell in the “Cardiovascular Diseases” category than in any other.

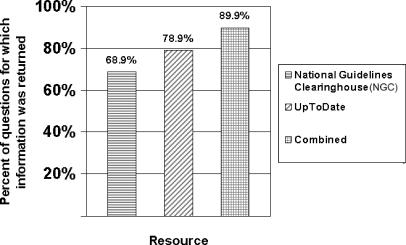

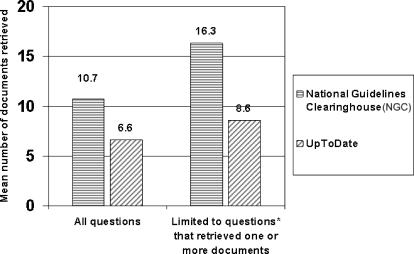

NGC returned content for 493 (65.5%) of the 752 searches (95% CI 62.0%–68.9%), while UpToDate returned content for 580 (77.1%) questions (95% CI 73.9%–80.1%; P = 0.001). When both resources were combined (Figure 1), they returned at least 1 document for 685 (91.1%) of searches (95% CI 87.5%–93.0%). When documents were found, UpToDate returned a median of 3 and a mean of 8.7 documents, while NGC returned a median of 5 and a mean of 16.3 documents (Figure 2). For individual questions, the number of documents found ranged from 0 to 110 in UpToDate and from 0 to 305 in NGC.
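The combined-coverage figure implies how much the two resources overlap, which follows from inclusion-exclusion on the reported counts. The sketch also computes a Wilson score interval for each proportion; the article does not state which interval method produced its 95% CIs, so Wilson is an assumption here and its bounds may differ from the reported ones.

```python
import math

N = 752
UPTODATE, NGC, EITHER = 580, 493, 685  # questions with >= 1 document (from the text)

# Inclusion-exclusion: |A and B| = |A| + |B| - |A or B|
both = UPTODATE + NGC - EITHER   # 388 questions covered by both resources
uptodate_only = UPTODATE - both  # 192
ngc_only = NGC - both            # 105
neither = N - EITHER             # 67 questions with no content in either


def wilson_ci(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (95% when z = 1.96)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


if __name__ == "__main__":
    for name, k in [("UpToDate", UPTODATE), ("NGC", NGC), ("Either", EITHER)]:
        lo, hi = wilson_ci(k, N)
        print(f"{name}: {k / N:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

The overlap counts make the complementarity concrete: each resource covers some questions the other misses.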

Figure 1.

Percent of questions yielding at least one document from each resource

Figure 2.

Mean number of documents found per question for each resource*

*493 questions yielded documents in NGC; 580 questions yielded documents in UpToDate.

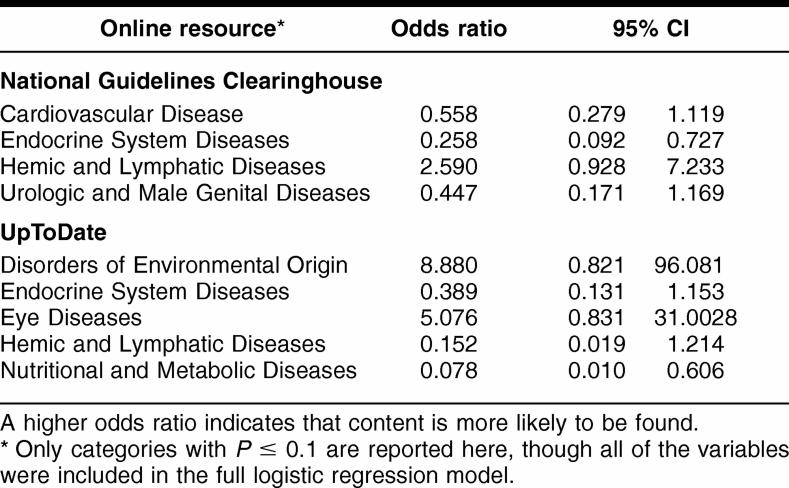

The only characteristic of the clinical question that predicted finding content was disease category (Table 2). NGC was less likely to have documents for questions from the “Urologic and Male Genital Diseases” category, while UpToDate was less likely to cover “Nutritional and Metabolic Diseases” and “Disorders of Environmental Origin.” The “Endocrine” and “Hemic and Lymphatic Diseases” categories were associated with finding content in both databases, although only at P < 0.10. Questions in the Hemic and Lymphatic Diseases category were more likely to retrieve content in NGC but less likely to retrieve content in UpToDate. Thus, while disease category was the only independent variable shown to have a significant effect on whether content was found, the disease categories that modulated results were not consistent across the 2 resources.

Table 2 Disease category as a predictor of documents retrieved

DISCUSSION

The results of this analysis showed a difference in easily accessed content between the subscription-based resource and the publicly available resource. UpToDate returned at least 1 document for 11.6 percentage points more of the sampled questions than NGC (77.1% versus 65.5%), whereas NGC retrieved more documents when content was found for a question. The results also demonstrated that the combination of the knowledge resources covered more questions than either resource separately, confirming what many librarians experience every day: more than one resource is often required to answer a given question (Figure 1).

Although NGC returned at least 1 document for a smaller share of questions, the mean number of documents NGC returned for questions with results was larger than that of UpToDate. For example, multiple documents were retrieved from NGC on the subject of heart failure, but only one was retrieved from UpToDate. This concentration of guidelines on selected topics may reflect the absence of a curriculum or editorial control at NGC, which focuses on providing content from whatever areas guideline producers have chosen to work in. Dictating which diseases need guidelines is not in NGC's purview; NGC simply brings submitted guidelines together in one place. Determining whether a guideline makes a unique contribution rests with its authors rather than with NGC. The potentially associated problem of redundant guidelines may be analogous to the recognized problem of redundant clinical trials: cumulative meta-analyses have shown that many clinical trials were unnecessary because they were conducted after prior trials had already settled the research question [19].

The current study observed peculiarities in the UpToDate search engine. In addition to its inability to accept more than one search term, initial informal observations led to a protocol revision substituting “cancer” for the keyword “neoplasms,” because the UpToDate engine failed to process MeSH terms that are not common text words in articles. Similarly, the influence of disease category might be due to variation among disease categories in how well the indexing terms of the two resources map to the MeSH terms used in the searching protocol. For example, the question “What is the name of that skin disease on the feet that produces moist skin and little holes?” with the very vague assigned keyword “skin disease” retrieved 0 UpToDate documents and 285 NGC documents. This problem, and the authors' inability to completely anticipate or identify all problematic terms, underscores the need for formal research on term mapping [20].

This analysis had several limitations. First, it was not meant to determine definitively whether the content could be found in the online resources, but rather whether it could be found in an automated or nonexpert fashion. Assessing less robust searching methods, such as those performed by nonexperts or by automated searches that might be incorporated into electronic medical records, would be valuable for future system development.

Second, no judgment regarding the quality of the content was attempted. Quality is a much more difficult question that concerns not only whether the required information was present (often what is required is debatable), but also whether it was in a form clinicians could use. The authors felt that the current results were an optimistic estimate, underscoring the need to improve existing resources. Third, this analysis was limited to only two of the many clinical knowledge resources currently available and to questions generated by primary care clinicians. It would be reasonable to presume that alternative knowledge resources or a pool of questions from other clinical specialties would have yielded different results.

CONCLUSIONS AND FUTURE WORK

This work has revealed that the two resources had a significant difference in the number of documents retrieved and that neither resource provides comprehensive coverage of the questions in the sample. Given the rising costs of subscriptions to knowledge resources [21], small health care organizations or those with tight budgets may be less likely to pay for a subscription to one or more knowledge resources. Thus, access to subscription resources may need to be subsidized for medically underserved or other disadvantaged areas to ensure more comprehensive coverage of primary care content.

Additional implications of this work include the need for improved standards of communication between databases (e.g., between UpToDate and MeSH). Standards are needed to ensure that keywords are correctly mapped. Another implication is that professional associations and other health care organizations promulgating guidelines should look for diseases and conditions not yet addressed and avoid developing guidelines that duplicate existing work. Lastly, because decision support in computerized provider order entry is often derived from practice guidelines, the coverage estimate for evidence-based guidelines may serve as an upper estimate of how often high-quality information can guide computerized order entry.

In the future, this kind of research should be continued, exploring the clinical relevance of the documents retrieved from each resource as well as expanding the number and scope of examined resources. Such evaluation data will aid medical librarians in understanding which resources are most important for their collections, given their coverage and the number of clinical specialties they support. Librarians can assist users in understanding which resources are most useful for which purpose and, perhaps most importantly, that each knowledge resource will have limitations. Clinicians make decisions every day that are important to the continued good health of patients, even when those decisions are not matters of life and death. They and their patients deserve high-quality, easily accessible resources.

Acknowledgments

The authors are grateful to John W. Ely, Department of Family Medicine, University of Iowa College of Medicine, and to the National Library of Medicine for their work creating the Clinical Questions Collection.

Footnotes

* This work was presented in part as a poster at the American Medical Informatics Association Annual Symposium, Washington, D.C., October 2005.

REFERENCES

1. Schwartz K, Northrup J, Israel N, Crowell K, Lauder N, and Neale AV. Use of on-line evidence-based resources at the point of care. Fam Med. 2003 Apr; 35(4):251–6.

2. Lucas BP, Evans AT, Reilly BM, Khodakov YV, Perumal K, Rohr LG, Akamah JA, Alausa TM, Smith CA, and Smith JP. The impact of evidence on physicians' inpatient treatment decisions. J Gen Intern Med. 2004 May; 19(5 pt 1):402–9.

3. Hersh WR, Hickam DH. How well do physicians use electronic information retrieval systems? A framework for investigation and systematic review. JAMA. 1998 Oct 21; 280(15):1347–52.

4. Cogdill KW, Friedman CP, Jenkins CG, Mays BE, and Sharp MC. Information needs and information seeking in community medical education. Acad Med. 2000 May; 75(5):484–6.

5. McGowan JJ, Richwine M. Electronic information access in support of clinical decision making: a comparative study of the impact on rural health care outcomes. Proc AMIA Symp. 2000:565–9.

6. Hunt DL, McKibbon KA. Locating and appraising systematic reviews. Ann Intern Med. 1997 Apr; 126(7):532–8.

7. Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, and Evans ER. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999 Aug 7; 319(7206):358–61.

8. Ely JW, Osheroff JA, Ebell MH, Chambliss ML, Vinson DC, Stevermer JJ, and Pifer EA. Obstacles to answering doctors' questions about patient care with evidence: qualitative study. BMJ. 2002 Mar 23; 324(7339):710.

9. Alper BS, Stevermer JJ, White DS, and Ewigman BG. Answering family physicians' clinical questions using electronic medical databases. J Fam Pract. 2001 Nov; 50(11):960–5.

10. Patel MR, Schardt CM, Sanders LL, and Keitz SA. Randomized trial for answers to clinical questions: evaluating a pre-appraised versus a MEDLINE search protocol. J Med Libr Assoc. 2006 Oct; 94(4):382–6.

11. Koonce TY, Giuse NB, and Todd P. Evidence-based databases versus primary medical literature: an in-house investigation on their optimal use. J Med Libr Assoc. 2004 Oct; 92(4):407–11.

12. National Library of Medicine. Clinical questions collection. [Web document]. Bethesda, MD: Cognitive Science Branch, Lister Hill National Center for Biomedical Communications, 2004. [rev. 16 Jan 2007; cited 16 Jan 2007]. <http://clinques.nlm.nih.gov/JitSearch.html>.

13. Fox GN, Moawad N. UpToDate: a comprehensive clinical database. J Fam Pract. 2003 Sep; 52(9):706–10.

14. What is UpToDate: service description. [Web document]. Waltham, MA: UpToDate, 2007. [2007; cited 16 Feb 2007]. <http://www.uptodate.com/service/>.

15. Campbell R, Ash J. An evaluation of five bedside information products using a user-centered, task-oriented approach. J Med Libr Assoc. 2006 Oct; 94(4):435–41, E206–7.

16. What is UpToDate: editorial policy. [Web document]. Waltham, MA: UpToDate, 2006. [2006; cited 3 Jan 2007]. <http://www.uptodate.com/service/editorial_policy.asp>.

17. Isham G. Prospects for radical improvement: the National Guidelines Clearinghouse project debuts on the Internet. Healthplan. 1999 Jan–Feb; 40(1):13–5.

18. SAS version 8. Cary, NC: SAS Institute, 2002.

19. Young C, Horton R. Putting clinical trials into context. Lancet. 2005 Jul 9–15; 366:107–8.

20. Elkin PL, Brown SH, Husser CS, Bauer BA, Wahner-Roedler D, Rosenbloom ST, and Speroff T. Evaluation of the content coverage of SNOMED CT: ability of SNOMED clinical terms to represent clinical problem lists. Mayo Clin Proc. 2006 Jun; 81(6):741–8.

21. Schlimgen JB, Kronenfeld MR. Update on inflation of journal prices: Brandon/Hill list journals and the scientific, technical, and medical publishing market. J Med Libr Assoc. 2004 Jul; 92(3):307–14.