Abstract

Objective: The study sought to determine which online journals primary care physicians and specialists not affiliated with an academic medical center access and how the accesses correlate with measures of journal quality and importance.

Methods: An observational study of full-text accesses made during an eighteen-month digital library trial was performed. Access counts were correlated with six methods composed of nine measures for assessing journal importance: ISI impact factors; number of high-quality articles identified during hand-searches of key clinical journals; production data for ACP Journal Club, InfoPOEMs, and Evidence-Based Medicine; and mean clinician-provided clinical relevance and newsworthiness scores for individual journal titles.

Results: Full-text journals were accessed 2,322 times by 87 of 105 physicians. Participants accessed 136 of 348 available journal titles. Physicians often selected journals with relatively higher numbers of articles abstracted in ACP Journal Club. Accesses also showed significant correlations with 6 other measures of quality. Specialists' access patterns correlated with 3 measures, with weaker correlations than for primary care physicians.

Conclusions: Primary care physicians, more so than specialists, chose full-text articles from clinical journals deemed important by several measures of value. Most journals accessed by both groups were of high quality as measured by this study's methods for assessing journal importance.

Highlights

Journals that nonacademic practicing primary care physicians accessed from a virtual library service were highly rated by multiple methods of evaluating importance.

Specialists accessed different journals than primary care physicians; their choices were also less correlated with methods of evaluating importance.

Implications

The journals that primary care physicians and specialists accessed most often were the high-circulation, broad-based health care journals.

Not all journals in digital library collections were accessed; less than 40% of the available journals in this study were used.

Specialists and primary care physicians appeared to have different use patterns based on their specialties and populations served.

BACKGROUND

A recent systematic review by the Australian National Institute for Clinical Studies reported that in 13 of 24 studies of the information-seeking habits and preferences of health professionals, clinicians indicated journals as the first or second preferred source of information for answering questions that arose in clinical care [1]. This was true almost 30 years ago, as shown by results of 1 of the first evaluations of physician information-seeking behaviors and resources [2], and continues to be true today [3]. A substantial body of literature reports physician journal reading for current awareness, including Tenopir and King [4], who note a shift away from personal subscriptions toward using library or online subscriptions because of increasing subscription costs and changing availability. Saint et al. reported just over 4 hours of reading time per week for US internists [5], while Dutch general practitioners indicated shorter reading times of 1.6 hours per week [6]. Other studies reported ranges of weekly journal reading time between these 2 estimates [1, 3].

Most of the data from studies of journal use for keeping current or answering clinical questions come from self-reports. Little information is available on actual use of specific journals by health care professionals in their daily practice or on the effect of digital libraries and open access on journal use [7]. The majority of data on electronic journal use come from academic medical centers. Rogers reported that surveys at Ohio State University showed that a cultural shift from print to electronic journals for academic researchers and practitioners occurred during 1998 to 2000 [8]. This shift came about because of the availability of personal computers, increased built-in links from bibliographic databases to full-text articles, more awareness of the ease of use of full text, and ready availability of a critical mass of important journals and their backfiles. A diffusion study by Chew et al. [9] confirmed the results from Rogers [8] and Casebeer et al. [7], showing that by 2003 a majority of US physicians reported access to the Internet in their offices and clinics and regular seeking of online information.

Librarians have always been interested in being able to predict use and plan journal collections; this work has expanded to assess electronic collections. For example, Wulff and Nixon reported that electronic access to journal articles in an academic health sciences library was associated with ISI impact factors (correlation coefficient [r] = 0.58, P < 0.01 for 94 Ovid medical and biomedical journals): the higher a journal's impact factor, the more the journal was used [10].

The current study looked at journals accessed by physicians in the control arm of a larger study (McMaster PLUS Trial). The physicians in that study were practicing clinicians, only some of whom had a loose affiliation with an academic medical center. The McMaster PLUS Trial studied how physicians in northern Ontario used information resources [11, 12]. It was a randomized trial of a new information service, offered to all practicing clinicians in the study area, that was designed to shift physician resource use to be more evidence based. All participants were given Internet access to a standard existing clinical digital library, the Northern Ontario Virtual Library (NOVL), which includes Ovid Technologies databases with all of the Evidence-Based Medicine Reviews (EBMR) databases, 348 full-text journals and books, Stat!Ref (a collection of clinical books), and various help tools. The journals were chosen based on librarian experience with this group of physicians, availability from vendors (e.g., Ovid), open access availability, and other standard selection tools. The intervention group had access to additional resources, including alerts regarding recently published studies and reviews and a special search interface for selected articles [12]. The use of full-text journal articles by physicians who had access to the basic collection and services in NOVL (the control group physicians) is reported here.

This paper addresses the following questions:

Which journals did physicians not associated with an academic medical center use when they received access to a basic collection of online resources and services?

How frequently were the journals accessed over an eighteen-month period?

Were the chosen titles clinically important health care journals as measured by external assessments of quality?

METHODS

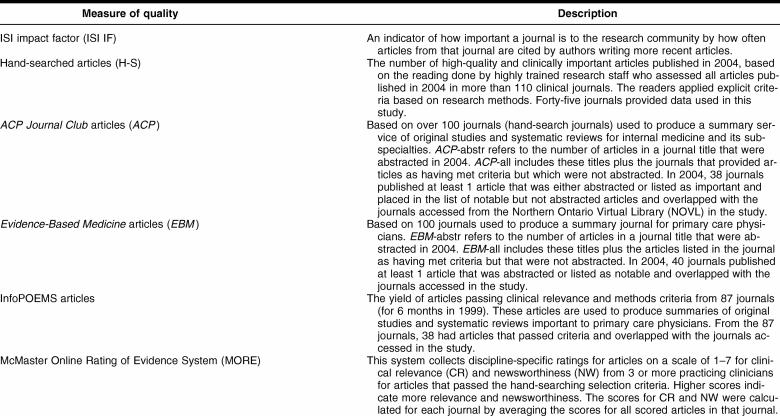

To ascertain which journals practicing physicians not closely affiliated with an academic medical center accessed, data were collected for 18 months (November 2003–April 2005). The clinicians had access to 348 full-text journals through NOVL. “Journal” was defined broadly to include Cochrane Database of Systematic Reviews, Database of Abstracts of Reviews of Effects (DARE), and ACP Journal Club summaries (Ovid EBMR database content). Journals accessed outside NOVL (e.g., open access journals) were not available for analysis. Each online access of a journal was captured and tabulated using the McMaster PLUS Trial data capture software. Correlation analyses were applied to the number of accesses to each journal title and 6 methods of assessing journal quality (corresponding to 9 different measures, Table 1).

Table 1 Descriptions of the external journal quality measures used in the study

Measures of journal quality

ISI impact factors

ISI impact factors [13] provide a measure of a journal's importance to the research community by summarizing how often articles from that journal are cited by authors writing more recent articles. The impact factor accounts for the number of articles each journal publishes per year. These values were obtained for the year 2004 from ISI Web of Knowledge and were cross-referenced with the full-text journals accessed by participants. ISI impact factors were not available for some titles (e.g., Cochrane Database of Systematic Reviews) and several nursing journals.
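As a reminder of how the measure normalizes for journal size, the standard two-year impact factor for 2004 can be written as follows. The notation is introduced here for illustration and does not appear in the original report: $C_{2004}^{(y)}(j)$ is the number of citations received in 2004 by items that journal $j$ published in year $y$, and $N_{y}(j)$ is the number of citable items that journal $j$ published in year $y$.

$$
\mathrm{IF}_{2004}(j) = \frac{C_{2004}^{(2002)}(j) + C_{2004}^{(2003)}(j)}{N_{2002}(j) + N_{2003}(j)}
$$

Because the denominator counts published items, a journal that publishes many articles does not receive a higher impact factor simply because of its volume.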

Hand-searched journals

The second method of assessing journal quality came from data collected by the staff members of the Health Information Research Unit at McMaster University during production of three evidence-based secondary journals (ACP Journal Club, Evidence-Based Medicine, and Evidence-Based Nursing). Experienced and highly trained research staff read more than 100 key clinical journals and identify high-quality and clinically important original studies and systematic review articles. The readers apply explicit criteria based on strong research methods for clinical topics (e.g., treatment, diagnosis, prognosis, etiology [14, 15]). For example, a treatment study is tagged only if it meets criteria that include randomization, a clinically important outcome, appropriate statistical analysis, and participant follow-up of more than 80%. The list of journals and the reading criteria are available online [16].

Of the 110 journals that were read, only 45 were on the list of 348 journals in the NOVL online collection. These titles are referred to as the hand-searched journals. For the study of journal accesses reported here, the data set consisted of the number of 2004 articles that met the quality criteria in each of the journals accessed by participants during the observation period. For example, the 5 journals with the highest number of articles meeting these inclusion criteria were Cochrane Database of Systematic Reviews (n = 444), Lancet (n = 134), Journal of Clinical Oncology (n = 100), BMJ (n = 93), and Circulation (n = 92).

Evidence-Based Medicine and ACP Journal Club

The third through sixth measures of the relative importance of the journals accessed in this study used the number of published articles that were included in Evidence-Based Medicine and ACP Journal Club in 2004 (Table 1). Content for each of these commercially available abstract journals is prepared at McMaster University. Each journal publishes summaries of important new clinical studies and systematic review articles that are identified in the hand-search of the important clinical journals described in the previous section. Articles chosen for the two journals come from the hand-searched reading, and therefore the data for these quality assessments overlap (i.e., they are not independent).

In the production of Evidence-Based Medicine and ACP Journal Club, only the most important articles in terms of clinical applicability and frequently encountered conditions are chosen for abstraction. Other important articles that cover conditions or diseases that are less common or articles with less potential clinical impact are classified as clinically notable and included in a separate list of citations, providing two levels of importance for this assessment.

For both ACP Journal Club and Evidence-Based Medicine, the total number of articles chosen for abstraction and commentary from each journal title was available for 2004, as was the number of articles in each journal title that met the selection criteria, whether they were abstracted or only listed as important or notable with no abstraction. In 2004, data for fifty-two journals read for ACP Journal Club and Evidence-Based Medicine overlapped with the journals available to the study physicians. The numbers of articles in each of these fifty-two journals included for each of the four measures related to ACP Journal Club and Evidence-Based Medicine were cross-referenced with the journal titles accessed by study participants. For example, BMJ contributed the most articles to ACP Journal Club (six articles abstracted and forty-six articles listed as important), while JAMA was the top contributor for Evidence-Based Medicine (eight articles abstracted and forty-seven articles listed as important).

InfoPOEMs

The seventh measure used the journals assessed by the editors and staff who produce InfoPOEMs, Patient-Oriented Evidence that Matters [15], a commercial alerting service that provides summaries of recent high-quality clinical studies and systematic reviews for primary care physicians, much like those produced for ACP Journal Club and Evidence-Based Medicine. The articles selected by InfoPOEMs staff from 87 journal titles were available for analysis from 6 months of 1997. This list was cross-referenced with the list of journals accessed by study participants for 38 journal titles present on both the InfoPOEMs and NOVL lists. The 6 journals that contributed the most POEMs (75% of all InfoPOEMs) were JAMA, New England Journal of Medicine, Archives of Internal Medicine, Annals of Internal Medicine, BMJ, and Obstetrics and Gynecology.

Clinical relevance and newsworthiness scores

The final two measures of journal quality were based on ratings of clinical relevance and newsworthiness scores obtained from the MORE system [12]. The MORE system was started in 2000 to rate the original studies and systematic reviews identified as passing the quality and methods criteria during the hand-searches of the clinical journals. This system collects discipline-specific ratings (scale 1–7) of articles' clinical relevance and newsworthiness from 3 or more practicing clinicians for each pertinent discipline. A score of 7 for relevance is “directly and highly relevant to my discipline,” while a score of 1 is “definitely not relevant; topic completely unrelated content area.” For newsworthiness, a score of 7 indicates “useful information, most practitioners in my discipline definitely don't know this (unless they have read this article),” and a score of 1 indicates “not of direct clinical interest.” Raters for a given discipline are selected by an automated process from a panel of over 2,000 practicing physicians [12]. The ratings are used in the production of Evidence-Based Medicine, ACP Journal Club, bmjupdates+, and other research projects and information products. The mean clinical relevance and newsworthiness scores for the articles in each of the hand-searched journals were linked with the journal access data from study participants. CMAJ: Canadian Medical Association Journal and New England Journal of Medicine had the highest mean clinical relevance scores (5.90 and 5.85 respectively) with corresponding mean newsworthiness scores of 4.33 and 4.96 across all articles.

Statistical analysis

For the correlation analyses, the authors used the 136 journal titles that the study physicians accessed from NOVL. Of these 136 titles:

105 journals had ISI impact factors

45 journals included high-quality clinical articles identified during hand-searching (H-S journals)

52 journals provided studies or reviews for ACP Journal Club

52 journals provided studies or reviews for Evidence-Based Medicine

35 titles provided studies or reviews for InfoPOEMs

45 titles had clinical relevance and newsworthiness scores

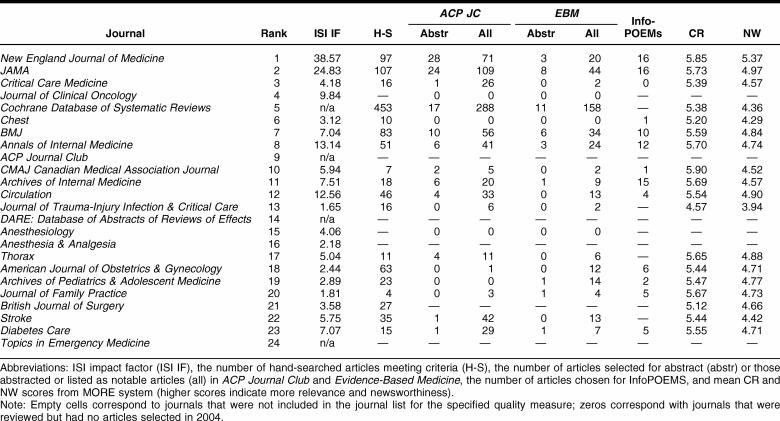

Examples of the data analyzed for each title are in Table 2. Correlations between the number of accesses of each journal title and each measure of quality were calculated as Pearson product moment correlations using STATA Intercooled 9.0, with 95% confidence intervals (CI) based on Fisher's transformation. Following the recommendation of Perneger [17], Bonferroni corrections were not applied; only the statistical comparisons are listed.
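The calculation described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the STATA procedure the authors ran: the function names `pearson_r` and `fisher_ci` and the example counts are hypothetical and were not taken from the study data.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def fisher_ci(r, n, z_crit=1.959964):
    """Approximate 95% CI for r using Fisher's z transformation.

    z = atanh(r) is treated as normal with standard error 1/sqrt(n - 3);
    the interval is built on the z scale and transformed back with tanh.
    """
    if n < 4 or not -1.0 < r < 1.0:
        raise ValueError("need n >= 4 and -1 < r < 1")
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


# Hypothetical example: accesses per journal title and the number of
# articles from the same titles abstracted by a summary journal.
accesses = [120, 95, 60, 40, 22, 15, 9, 4]
abstracted = [6, 5, 4, 2, 2, 1, 0, 0]

r = pearson_r(accesses, abstracted)
low, high = fisher_ci(r, len(accesses))
print(f"r = {r:.3f}, 95% CI {low:.3f} to {high:.3f}")
```

Because the interval is symmetric on the z scale and not on the r scale, the back-transformed limits are asymmetric around r, which is also visible in the intervals reported in the Results.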

Table 2 The top journals accessed by study physicians with corresponding measures of journal quality

RESULTS

The study included 105 physicians in northern Ontario (56 family physicians and 49 specialists). The mean time spent in clinical practice was 42.9 hours per week with a wide range of reported practice times (1 to 100 hours per week). Most of the physicians (n = 67) practiced in 2 small urban areas. The remaining 38 physicians provided service in remote communities or rural areas.

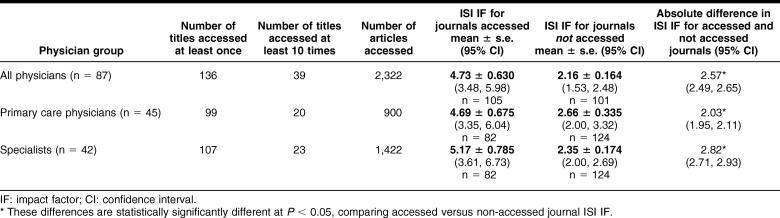

In 18 months, 87 of the study physicians (83% of available physicians) accessed journals 2,322 times through the digital library software, including 900 times by 45 primary care physicians and 1,422 times by 42 specialists (Table 3). Primary care physicians on average made 20 accesses, or slightly more than 1 access per physician per month. Specialists accessed full-text articles more often, with an average of 34 accesses in the study or an average of 1.9 accesses per physician per month. Full-text journal articles were accessed from 136 (38%) of 342 full-text journals in the NOVL Ovid collection of titles as well as another 5 non-Ovid titles (Lancet, Journal of Advanced Nursing, Journal of Clinical Nursing, Seminars in Reproductive Medicine, and Heart Disease). Of the 136 journals accessed, 70 titles (51%) were accessed by both groups, 29 titles only by the primary care physicians, and 47 titles only by specialists.
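The per-physician rates quoted above follow directly from the reported totals. The short Python sketch below reproduces the arithmetic; the function name `access_rates` and the dictionary layout are illustrative assumptions, while the counts are those given in the text.

```python
STUDY_MONTHS = 18  # observation period reported in the Methods

# Totals reported in the text for physicians who made at least one access.
groups = {
    "primary care physicians": {"accesses": 900, "physicians": 45},
    "specialists": {"accesses": 1422, "physicians": 42},
}


def access_rates(accesses, physicians, months=STUDY_MONTHS):
    """Return (accesses per physician, accesses per physician per month)."""
    per_physician = accesses / physicians
    return per_physician, per_physician / months


for name, counts in groups.items():
    total, monthly = access_rates(counts["accesses"], counts["physicians"])
    print(f"{name}: {total:.1f} accesses each, {monthly:.2f} per month")

# Share of the 105 enrolled physicians who accessed any journal.
share_active = 87 / 105
print(f"active physicians: {share_active:.0%}")
```

Note that these averages are computed over the physicians who accessed at least one journal (45 and 42), not over all 56 family physicians and 49 specialists enrolled.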

Table 3 The number of journal titles accessed by study physicians

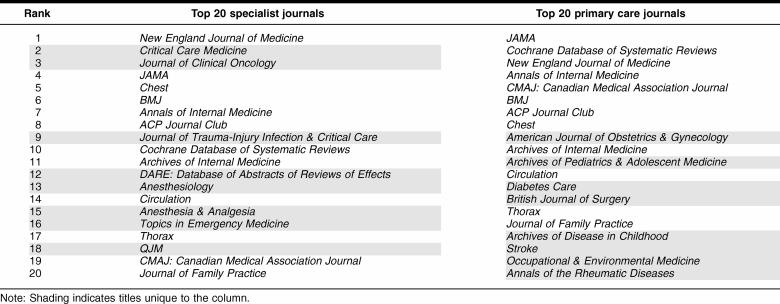

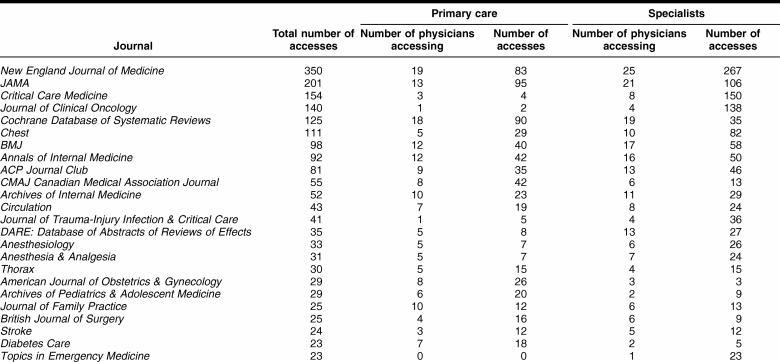

The distribution of the top twenty accessed journals differed between the two physician groups, with an overlap of twelve journals (Table 4). Some journals were accessed more by specialists, including New England Journal of Medicine, Critical Care Medicine, Journal of Clinical Oncology, Chest, Journal of Trauma, DARE, Anesthesiology, and Anesthesia and Analgesia. Primary care physicians accessed other journals more often, especially Cochrane Database of Systematic Reviews, CMAJ, American Journal of Obstetrics and Gynecology, Archives of Pediatrics and Adolescent Medicine, and Diabetes Care (Table 4). Three of the top general health care journals (New England Journal of Medicine, JAMA, and BMJ) were on both lists. Also of note is that both lists included eighteen traditional journals and two “summary” journals: ACP Journal Club and Cochrane Database of Systematic Reviews.

Table 4 Top twenty journals accessed by specialists and primary care physicians

Table 5 shows that, for both groups of physicians, some journals were accessed by many physicians and some by only a few. In addition, for some journals, especially those used by the specialists, only a few physicians accessed individual titles but they accessed them many times. For example, Journal of Clinical Oncology was accessed 138 times by 4 physicians, Critical Care Medicine was accessed 150 times by 8 specialists, and Topics in Emergency Medicine was accessed 23 times by 1 specialist.

Table 5 The top twenty journals accessed by study physicians

Primary care physicians and specialists accessed journals that had substantially higher ISI impact factors than the journals that were not accessed (Table 3). The difference in ISI impact factors was larger for specialists than for primary care physicians.

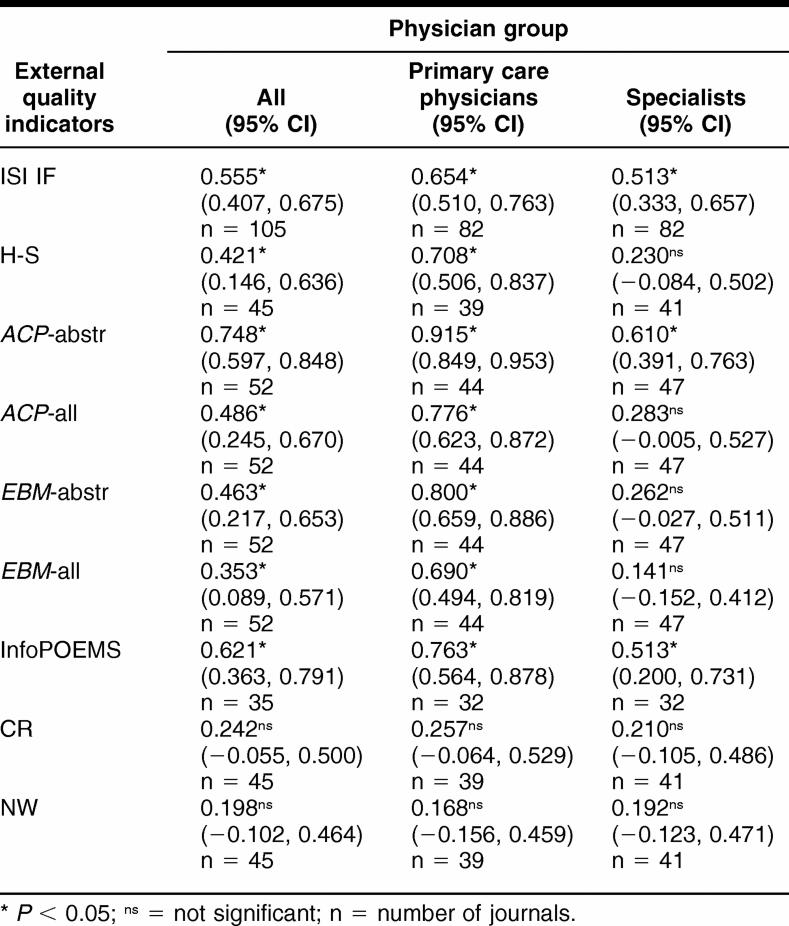

Table 2 illustrates the measures of journal quality for the top 20 accessed journals. These values were used to perform correlation analysis with the frequency that the journals were accessed by primary care physicians and specialists. Journal choice, as measured by the total number of full-text accesses per title, correlated with the external measures of quality for 7 of 9 measures for primary care physicians and 3 of 9 measures for specialists (Table 6). Participants' full-text accesses of journals that supplied ACP Journal Club with abstracts had the highest correlations for primary care physicians (r = 0.915, 95% CI 0.849 to 0.963) and for specialists (r = 0.610, 95% CI 0.391 to 0.763). The number of accesses to each journal was moderately related to ISI impact factors (Table 6) for primary care physician accesses (r = 0.654, 95% CI 0.510 to 0.763) and specialist accesses (r = 0.513, 95% CI 0.333 to 0.657).

Table 6 Correlation coefficients for nine external quality indicators

Correlations for each quality indicator were stronger for the primary care physicians than for the specialists (Table 6). Access by specialists correlated most strongly with ACP Journal Club abstracted journals (ACP-abstr, r = 0.610, 95% CI 0.391 to 0.763). A statistically significant difference between the primary care physicians and the specialists occurred only for the abstracted ACP Journal Club accesses (ACP-abstr, r = 0.915 vs. 0.610). Clinical relevance and newsworthiness scores for individual journals did not correlate with journal access for either group of physicians (Table 6).
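The report does not state which test was used to compare the two groups' correlations. One informal check that a reader can make from the published numbers alone is whether the two 95% confidence intervals overlap; the Python sketch below applies it to the two measures whose intervals are quoted in the text. The helper `intervals_overlap` and the dictionary are illustrative assumptions, and the check is only a heuristic: non-overlapping intervals point to a difference, but overlapping intervals do not rule one out.

```python
# 95% confidence intervals quoted in the Results (lower, upper).
reported_ci = {
    "ACP-abstr": {
        "primary care": (0.849, 0.963),
        "specialists": (0.391, 0.763),
    },
    "ISI impact factor": {
        "primary care": (0.510, 0.763),
        "specialists": (0.333, 0.657),
    },
}


def intervals_overlap(a, b):
    """True if closed intervals a=(lo, hi) and b=(lo, hi) share any point."""
    return a[0] <= b[1] and b[0] <= a[1]


for measure, ci in reported_ci.items():
    overlap = intervals_overlap(ci["primary care"], ci["specialists"])
    verdict = "overlap" if overlap else "do not overlap"
    print(f"{measure}: intervals {verdict}")
```

On this heuristic, only the ACP Journal Club abstracted measure separates the two groups among the intervals quoted, which agrees with the comparison reported above.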

DISCUSSION

The clinicians in the study accessed only 38% of the journals that were available. Some titles were accessed by many physicians but only a few times per physician, while other titles were accessed multiple times by the same physician. These findings of high use of a few titles and much lower use of the majority of the journals are consistent with studies of print and electronic journal use [18] and reflect the challenges that librarians have always had with providing a comprehensive and useful set of journals within budget and in times of ever-increasing subscription costs.

The ACP Journal Club, Evidence-Based Medicine, and InfoPOEMs journal lists are founded on evidence-based medicine principles and criteria, combined with centralized evaluation and selection by clinical editors. These selections are highly correlated with the independent choices of the primary care clinicians who participated in the study. The somewhat lower correlation with the hand-searched journal list, which is based on critical appraisal criteria alone, attests to the added value of having experienced clinical editors select content for clinical interest. The strong correlations with ACP Journal Club and Evidence-Based Medicine abstracted lists and the more moderate correlations of ISI impact factors and other journal lists for primary care physicians suggest that the physicians' choices could be guided by a preference for evidence-based clinical material rather than scientific interest as indicated by published citations. Also, ISI rankings are based on citations in studies published by researchers, while the reading lists for ACP Journal Club, Evidence-Based Medicine, and InfoPOEMs are based on clinician assessment of the value of the articles. This second method of article selection seems to more closely reflect the interests of the physicians in this study.

The moderate correlations between the number of accesses to each journal and ISI impact factors for primary care physician accesses (r = 0.654, 95% CI 0.510 to 0.763) and specialist accesses (r = 0.513, 95% CI 0.333 to 0.657) are similar to the correlation found by Saha et al. [19] between academic internists' quality assessments of general journals and ISI impact factors (n = 113, r = 0.62, P = 0.01).

The lower correlations for specialists suggest that their criteria for journal use are different from primary care physicians' criteria. Although they accessed many of the same journals as did the primary care physicians, the specialists accessed journals almost twice as often and accessed a greater number of more specialized journals, consistent with their clinical populations. It is interesting to note that almost all of the highest rated journals on any of the nine quality ranked lists are present and near the top in all of the lists (Tables 4 and 5). Beyond these high-ranked journals, little consistency exists with respect to the additional important journals clinicians use; almost 50% of the titles were unique to each group of physicians. This likely reflects the differences in information needs across specialties and populations served. It also shows how important and difficult it is to produce information resources such as ACP Journal Club, Evidence-Based Medicine, and InfoPOEMs and to build collections of journals for libraries.

This study of full-text accesses has limitations. First, the associations found between the number of accesses and various measures of quality were restricted by how the journals were chosen for the virtual library. The participating physicians had full-text access to only 348 of the more than 4,000 journals indexed by the US National Library of Medicine and included in MEDLINE. The librarians who made licensing decisions for the study physicians made their decisions independent of the research group. They considered content relevant to health practitioners, prices, availability through Ovid or open access, ISI impact factors, and evidence-based principles. Quality and influence were clearly not the only choice principles in the decision to provide access, as some less popular journals are bundled with more popular journals by aggregators such as Ovid. Access to some open access titles was also provided during the study period either through Ovid collections or direct access, yet very few accesses were made to these journals. This low number of accesses was probably due to the relatively easy access clinicians had to the Ovid journals compared with access in NOVL to the other online journals. Further, access to Lancet was artificially limited because of difficulties the physicians had in gaining easy and reliable access to it outside the Ovid interface using the digital library. These difficulties, rather than clinician choice, likely forced the exclusion of Lancet from the most accessed list in this study. This study also assumed that the number of articles chosen through the various quality processes reflects clinical importance.

Additionally, data for InfoPOEMs were older than data for the other measures, and therefore individual journal performance might have changed over time; more recent data were not available. Another important limitation to the study was that journal accesses reported in this paper did not include personal subscriptions to clinical journals, journals in other library or online collections to which the participants might have had access, or open access journals found through resources like PubMed, Google, or Google Scholar. As more open access journals become available and backfiles for existing titles expand, more clinicians will likely use these journals. Eysenbach reported that in general, open access journals had almost 50% more citations than non–open access journals 17 to 21 months after publication [20, 21]. This increased citation rate likely will affect the number of accesses of open access journals. The digital library service was the main route to the majority of the journals to which the clinicians had access, likely minimizing the potential effect of this limitation. In addition, many clinical decisions are made, and often appropriately, by using only the abstracts of studies and reviews available in MEDLINE, the Internet, or contact with peers [22]. Accesses of abstracts alone were not included in this report.

The nine measures used in this study were also not independent. ISI impact factors were one of the factors used by those who selected the journals available to the study participants as well as those who produced ACP Journal Club, Evidence-Based Medicine, and InfoPOEMs. The hand-searched journals provided input for production of ACP Journal Club, Evidence-Based Medicine, and the clinical relevance and newsworthiness scores. Therefore, these quality indicators were not statistically independent, and the correlations overlapped.

CONCLUSIONS

Independent choices of online journals by the primary care clinicians with few ties to an academic medical center are highly correlated with clinical journal subsets determined by several means. This is true to an important but lesser extent for specialists. The correlation between journal choice and quality measures of clinical importance is especially strong using data from ACP Journal Club, followed by Evidence-Based Medicine and InfoPOEMs, and the hand-searched journal lists for the primary care physicians. These findings support the selection processes used by these summary journals in that the physicians in this study read articles from titles used in the production of the summary journals. The scientific interest or value of the journal choices [23] made by the physicians studied is also evident in the moderate correlations of their choices with ISI impact factors. Many of the most important clinical journals are easily identified, appearing high in the rankings of most lists of quality journals. In addition to aiding in the selection of journals for consultation during clinical practice, importance rankings can also guide the choice of where to publish important new clinical knowledge. Collection building, especially when restricted by budget and subscription increases, can likely be guided in part by using lists of journals selected for various evidence-based journal services.

ACKNOWLEDGMENTS AND DISCLOSURE

In addition to the authors, the team implementing the digital library (McMaster PLUS Trial) includes Nancy Wilczynski, Chris Cotoi, Dawn Jedras, Jennifer Holland, and Leslie Walter. The views expressed in this paper are those of the authors and not necessarily those of the funding agencies. The authors are grateful for the collaboration of the Northern Ontario Virtual Library and the Northern Academic Health Sciences Network. The Ontario Ministry of Health and Long-Term Care, the Canadian Institutes of Health Research, and the US National Library of Medicine provided funding for the digital library project. The authors also acknowledge the in-kind support of the American College of Physicians and the BMJ Publishing Group (both supported the initial hand-search and critical appraisal process) and Ovid Technologies (which provided means for the investigators to create links from PLUS to Ovid's full-text journal articles and Evidence-Based Medicine Reviews). Funders and supporters had no role in the design, execution, analysis, or interpretation of the study or preparation of the manuscript. McKibbon and Haynes have received salaries and support and have been involved in the production of ACP Journal Club and Evidence-Based Medicine. Haynes is the principal investigator for the PLUS Trial from which the data were derived. McKinlay was employed as a data analyst for the PLUS Trial. No other competing interests are declared.

REFERENCES

1. National Institute of Clinical Studies. Information finding and assessment methods that different groups of clinicians find most useful. Melbourne, Australia: The Institute, 2003.

2. Strasser TC. The information needs of practicing physicians in northeastern New York State. Bull Med Libr Assoc. 1978 Apr; 66(2):200–9.

3. Coumou HCH, Meijman FJ. How do primary care physicians seek answers to clinical questions? a literature review. J Med Libr Assoc. 2006 Jan; 94(1):55–60.

4. Tenopir C, King DW. Towards electronic journals: realities for scientists, librarians, and publishers. [Web document]. Washington DC: Special Libraries Association, 2000. [cited 18 Nov 2006]. <http://psycprints.ecs.soton.ac.uk/archive/00000084/>.

5. Saint S, Christakis DA, Saha A, Elmore JG, Welsh DE, and Baker P. Journal reading habits of internists. J Gen Intern Med. 2000 Dec; 15(12):881–4.

6. Verhoeven AAH. Information seeking by general practitioners. [Web document]. Groningen, The Netherlands: Groningen University; 1999. [cited 5 Oct 2006]. <http://irs.ub.rug.nl/ppn/18670335X/>.

7. Casebeer L, Bennett N, Kristofco R, and Carillo Centor R. Physician Internet medical information seeking and on-line continuing education use patterns. J Contin Educ Health Prof. 2002 Winter; 22(1):33–42.

8. Rogers SA. Electronic journal usage at Ohio State University. Coll Res Libr. 2001 Jan; 62(1):25–34.

9. Chew F, Grant W, and Tote R. Doctors on-line: using diffusion of innovations theory to understand Internet use. Fam Med. 2004 Sep; 36(9):645–50.

10. Wulff JL, Nixon ND. Quality markers and use of electronic journals in an academic health sciences library. J Med Libr Assoc. 2004 Jul; 92(3):315–22.

11. Haynes RB, Holland J, McKinlay RJ, Wilczynski NL, Walters LA, Jedraszewski D, Parris R, McKibbon KA, Garg AX, and Walter SD. McMaster PLUS: a cluster randomized clinical trial of an intervention to accelerate use of evidence-based information from digital libraries in clinical practice. J Am Med Inform Assoc. 2006 Nov–Dec; 13(6):593–600.

12. Haynes RB, Cotoi C, Holland J, Walters L, Wilczynski N, Jedraszewski D, McKinlay J, Parrish R, and McKibbon KA. A second order peer review: a system to provide peer review of the medical literature for clinical practitioners. JAMA. 2006 Apr 19; 295(15):1801–8.

13. Science Citation Index. Journal citation reports. v. 2005. [Web document]. Thomson Scientific. [cited 6 Jan 2006]. <http://portal.isiknowledge.com>.

14. Wilczynski NL, McKibbon KA, Haynes RB. Enhancing retrieval of best evidence for healthcare from bibliographic databases: calibration of the hand search of the literature. MedInfo. 2001;10(pt 1):390–3.

15. Ebell MH, Barry HC, and Slawson DC. Finding INFOPOEMs in the medical literature. J Fam Pract. 1999 May; 48(5):350–5.

16. McKibbon KA, Wilczynski NL, and Haynes RB. What do evidence-based secondary journals tell us about the publication of clinically important articles in primary healthcare journals? BMC Medicine. 2004 Sep 6; 2:33.

17. Perneger TV. What's wrong with Bonferroni adjustments? BMJ. 1998 Apr 18; 316(7139):1236–7.

18. Morse DH, Clintworth WA. Comparing patterns of print and electronic journal use in an academic health sciences library. Issues Sci Techn Libr [serial online]. 2000 Fall;28. [cited 19 Nov 2006]. <http://webdoc.sub.gwdg.de/edoc/aw/ucsb/istl/00-fall/refereed.html>.

19. Saha S, Saint S, and Christakis DA. Impact factor: a valid measure of journal quality? Bull Med Libr Assoc. 2003 Jan; 91(1):42–6.

20. Eysenbach G. Citation advantage of open access articles. PLoS Biol [serial online]. 2006 May;4(5):e157. [cited 5 Nov 2006]. DOI: 10.1371/journal.pbio.0040157.

21. Eysenbach G. The open access advantage. J Med Internet Res [serial online]. 2006;8(2):e8. [cited 5 Nov 2006]. <http://www.jmir.org/2006/2/e8/>.

22. Haynes RB, Johnston ME, McKibbon KA, Walker CJ, and Willan AR. A program to enhance clinical use of MEDLINE. A randomized controlled trial. Online J Curr Clin Trials 1993; doc no 56.

23. Garfield E. The history and meaning of the journal impact factor. JAMA. 2006 Jan 4; 295(1):90–3.