Abstract

Previous studies have demonstrated that early deafness causes enhancements in peripheral visual attention. Here, we ask if this cross-modal plasticity of visual attention is accompanied by an increase in the number of objects that can be grasped at once. In a first experiment using an enumeration task, Deaf adult native signers and hearing non-signers performed comparably, suggesting that deafness does not enhance the number of objects one can attend to simultaneously. In a second experiment using the Multiple Object Tracking task, Deaf adult native signers and hearing non-signers also performed comparably when required to monitor several distinct moving targets among moving distractors. The results of these experiments suggest that deafness does not significantly alter the ability to allocate attention to several objects at once. Thus, early deafness does not enhance all facets of visual attention, but rather its effects are quite specific.

SECTION: Cognitive and Behavioral Neuroscience

Keywords: Subitizing, Enumeration, Multiple object tracking, Visual attention, Deafness, Plasticity

1. INTRODUCTION

The loss of a sensory system early in development causes profound neural reorganization, and in particular an enhancement of the remaining modalities, a phenomenon also termed cross-modal plasticity (Bavelier and Neville, 2002; Frost, et al., 2000; Ptito, et al., 2001; Ptito and Kupers, 2005; Rauschecker, 2004; Sur, et al., 1990; Theoret, et al., 2004). Support for cross-modal plasticity is often echoed in the proposal that blind individuals have more acute senses of audition and touch, and deaf individuals a more acute sense of vision. Although this view is generally valid, recent research reveals that cross-modal plasticity is rather specific, in that only some aspects of the remaining senses appear modified after early sensory deprivation. For example, in the case of deafness, the available literature indicates comparable visual psychophysical thresholds, be it for brightness discrimination (Bross, 1979), visual contrast sensitivity (Finney and Dobkins, 2001), temporal discrimination (Mills, 1985), temporal resolution (Bross and Sauerwein, 1980; Poizner and Tallal, 1987), and sensitivity to motion processing (Bosworth and Dobkins, 1999; Brozinsky and Bavelier, 2004). This lack of population differences across several different measures of visual skill indicates that changes in visual performance after early deafness are not widespread.

One aspect of vision that has been reliably documented to be enhanced following auditory deprivation is peripheral visual processing, in particular during attention-demanding tasks using moving stimuli. For example, deaf individuals exhibit a larger field of view than hearing controls when asked to detect the presence of moving light points at any location in the periphery (Stevens and Neville, 2006). Deaf individuals are faster and more accurate than hearing controls in detecting the direction of motion of a small square at an attended location while ignoring squares flashing at unattended locations (Neville and Lawson, 1987b). Electrophysiological recordings indicate an increased N1 component, associated with a modulation of visual attention, when deaf subjects performed this task. Similar increases in N1 amplitude have been noted when deaf individuals are presented with abrupt-onset squares flashed randomly at three possible locations (Neville, et al., 1983) or when monitoring drifting low-spatial-frequency gratings for a rare target (Armstrong, et al., 2002). In line with the proposal of enhanced peripheral visual attention, the N1 enhancement in deaf individuals is more pronounced for peripheral than central stimuli. Using fMRI, we and others have found greater recruitment of area MT/MST, specialized for motion processing, in deaf than in hearing participants when motion stimuli were monitored peripherally rather than centrally (Bavelier, et al., 2001; Fine, et al., 2005). These results highlight enhanced performance in deaf individuals in tasks using moving stimuli and manipulating the spatial distribution of attention. These studies mostly focused on Deaf native signers, allowing for the possibility that signing rather than deafness leads to enhanced peripheral vision. To disambiguate the role of signing from that of deafness, we and others have carried out similar studies on hearing native signers.
In all these studies (Bavelier, et al., 2001; Bosworth and Dobkins, 2002; Neville and Lawson, 1987c; Proksch and Bavelier, 2002), hearing native signers performed like hearing non-signers and unlike deaf signers. Thus, signing in itself does not induce the peripheral processing change observed in deaf signers.

It is worth noting, however, that not all tasks that rely on motion processing or require peripheral processing show enhancement in the deaf population. We and others have found that sensory thresholds for motion direction and velocity are not altered by early deafness, even when tested in the visual periphery (Bosworth and Dobkins, 1999; Brozinsky and Bavelier, 2004). Similarly, recruitment of MT/MST, a brain area highly specialized for visual motion processing, was found to be similar in deaf and hearing individuals upon passively viewing moving stimuli at various eccentricities (Bavelier, et al., 2001; Fine, et al., 2005). The visual skills on which deaf individuals perform differently from hearing individuals therefore appear relatively specific to conditions that engage spatial attention (Bavelier, et al., 2006).

This specificity is also illustrated by research on the effects of deafness on visual attention itself. A host of studies document enhanced peripheral visual attention after early deafness as discussed above. In one such study, deaf individuals displayed greater distractibility from peripheral distractors than hearing individuals, revealing greater attentional resources in the visual periphery (Dye, et al., In Press; Lavie, 2005; Proksch and Bavelier, 2002). In contrast, few population differences have been documented on standard attentional paradigms. Studies using the Posner cueing paradigm document no robust change in orienting, except in the presence of a competing central load (Bosworth and Dobkins, 2002; Dye, et al., In Press; Parasnis, 1992; Parasnis and Samar, 1985). Although an early study of visual search reported a tendency for more effective visual search in deaf than in hearing individuals (Stivalet, et al., 1998), recent reports have failed to replicate the effect (Bosworth and Dobkins, 2002; Rettenbach, et al., 1999). The only population effect observed was that deaf adults terminated target-absent trials faster than hearing adults; this result may reflect differences in decision criterion rather than attention between the two populations (Rettenbach, et al., 1999). Early deafness may therefore lead to changes in visual attention, but these appear quite specific to the spatial distribution of visual attention over the visual field.

The aim of the present paper is to document further which of the many aspects of attention may be modified after early auditory deprivation. Here we specifically investigate the effect of deafness on the ability to deploy visual attention to several different objects at once. One view is that compensatory plasticity allows deaf individuals to reach similar performance levels as hearing individuals on tasks which typically benefit from the integration of visual and auditory information. As a result, one may only expect those visual functions known to benefit from multisensory integration between vision and audition to change after early deafness. This view readily captures the findings reviewed above, that the most robust change in visual functions in deaf individuals is in the spatial re-distribution of visual attention over space. Indeed, cross-modal links between audition and vision have been repeatedly documented to control the deployment of spatial attention (Eimer, et al., 2002; McDonald, et al., 2003; Teder-Salejarvi, et al., 2005). According to this view, we expect little if any changes in the ability to deploy visual attention to several objects at once, as this skill appears similarly limited to about 4 items whether tested visually or auditorily (Cowan, et al., 2005). An alternative view holds that compensatory plasticity enhances many aspects of the remaining modalities, with deaf individuals possibly displaying enhancement on a wide range of visual skills. In the absence of audition, the remaining modalities, and in particular vision, are put under increasing demands, leading to the expression of use-dependent plasticity in visual functions. Under this view, an enhancement of the ability to monitor several objects may be expected as a way to enhance visual processing in deaf individuals. 
Although this latter proposal is at odds with the existing literature to date, the ability to maintain a high number of events in the focus of attention is certainly advantageous and, given that this skill can be modified by experience such as video game playing (Green and Bavelier, 2006), it remains possible that it could be changed in deaf individuals.

As in our past studies, the deaf individuals selected to participate in this study were born to deaf parents (genetic etiology) and raised in an environment that used a visual language at home (hereafter referred to as Deaf native signers) (Mitchell and Karchmer, 2002). This is important because deaf individuals from hearing families introduce possible confounds. First, they often experience a language delay (and associated delay in psycho-social development) because their hearing loss is usually not detected until around the age of 18 months and they are not exposed to a natural language that they can readily grasp until a later age (Mertens, et al., 2000; Samuel, 1996). Second, the etiology of their hearing loss could also have caused some associated neurological changes (Hauser, et al., 2006; King, et al., 2006). One way to investigate performance of deaf individuals with minimal contamination from these confounds is to use Deaf native signers because they typically do not have such secondary disabilities and they achieve their language development milestones at the same rate and time as hearing individuals (Caselli and Volterra, 1994; Newport and Meier, 1985; Petitto and Marentette, 1991).

We first compare the performance of Deaf native signers and hearing controls on an enumeration task. We then explore the possibility that the use of a numerosity judgment task and static stimuli may have masked possible population differences in this first experiment. Deaf native signers and hearing individuals were then compared in their ability to monitor multiple moving objects over a wide visual field using an adaptation of the Multiple Object Tracking task (MOT) (Pylyshyn, 1989).

2. RESULTS

2.1 ENUMERATION TASK

2.1.1 Enumeration Task: Description

The enumeration task has classically been used to study the number of items that can be readily attended. This task requires participants to report the number of briefly flashed items in a display as quickly and as accurately as possible. Participants’ performance on this task appears best captured by two distinct processes, easily seen when performance is plotted against the number of items presented. When viewing enumeration performance in this manner, a clear discontinuity, or elbow, is seen giving the appearance of a bilinear function. For low numbers of items, usually in the range of one to four items (Atkinson, et al., 1976; Oyama, et al., 1981; Trick and Pylyshyn, 1993; Trick and Pylyshyn, 1994), subject performance is extremely fast and accurate. The slopes are near zero over this range - also termed the subitizing range. As numerosity increases above this range, each additional item has a substantially greater cost in terms of reaction time and error rate. The cost to performance is evident in the steep slope observed beyond about four items. The discontinuity in the enumeration curve has been the subject of much debate and has been posited to have various explanations (Green and Bavelier, 2006; Trick and Pylyshyn, 1994). However, all parties agree that the subitizing range provides an estimate of the number of items that can be concurrently apprehended. We therefore decided to compare Deaf native signers and hearing non-signers on the enumeration task to test the hypothesis that deafness may enhance the subitizing range, or in other words the number of items that can be concurrently attended.

To test the effect of deafness on both the central and peripheral visual field, the two populations underwent two different enumeration experiments, one with a field of view restricted around fixation (5°×5°) and the other with a much wider field of view (20°×20°). If deafness disproportionally enhances peripheral vision, any advantage over hearing individuals should be magnified in the wide field of view condition. Additionally, subjects were asked to report their answer by typing it on the keyboard. Although this response mode prevents a fine-grained measurement of reaction time - as finding one key among the twelve possibilities on the keyboard is challenging - it ensured identical response modes between deaf and hearing individuals.

2.1.2 Enumeration Task: Results

Three measures of performance will be discussed - error rate, accuracy breakpoint, and reaction times. Greenhouse-Geisser corrections were used for all analyses. Enumeration studies typically rely on reaction time analyses. In our case, however, the use of a keyboard response, rather than a vocal/signed response, makes the interpretation of reaction time difficult. Although subjects were trained in advance in typing the key that corresponded to a given number, it is likely that this method of response nevertheless introduced additional variability in the measurement of reaction times that may not be consistent across the two groups. With these caveats in mind, the reaction time data will be presented last.

Error Rate

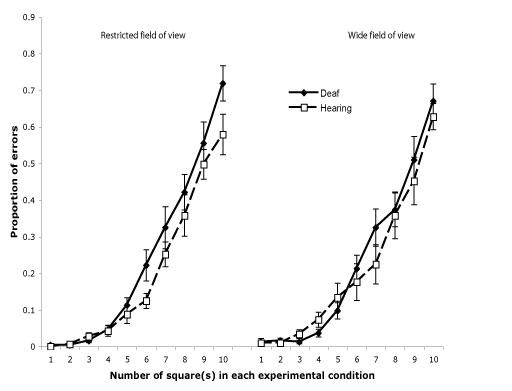

An omnibus ANOVA was performed on the proportion of errors with number of squares (1 to 10) and field-of-view (FOV; restricted vs. wide) as within-subject factors and group (deaf vs. hearing) as a between-subject factor. Consistent with previous studies, a main effect of the number of squares was observed (F (9, 180) = 146.29, p < .001, partial eta-squared = .880). The main effects of FOV and group failed to reach statistical significance (ps > .30; F (1, 20) = .05, partial eta-squared = .002, and F (1, 20) = .96, partial eta-squared = .046, respectively). None of the interactions reached statistical significance (ps > .10; FOV × Group, F (1, 20) = 2.70, partial eta-squared = .119; Squares × Group, F (9, 180) = 1.28, partial eta-squared = .060; FOV × Squares, F (9, 180) = 0.93, partial eta-squared = .044; and FOV × Squares × Group, F (9, 180) = 0.85, partial eta-squared = .041). As shown in Fig. 2, the error rate in enumeration increased with the number of squares at the same rate for both restricted and wide FOVs as well as across both deaf and hearing groups.
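The effect sizes reported here can be related to the F ratios through the standard identity partial eta-squared = (F × df_effect) / (F × df_effect + df_error). The following Python sketch applies that identity to three of the values given above. The function name `partial_eta_squared` is introduced here purely for illustration and is not part of the original analysis pipeline, which is not described in the text.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta-squared from an F ratio and its degrees of freedom.

    Uses the standard identity eta_p^2 = F * df1 / (F * df1 + df2).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Values taken from the error-rate ANOVA reported in the text.
reported = [
    ("Group main effect", 0.96, 1, 20),   # reported as .046
    ("FOV x Group", 2.70, 1, 20),         # reported as .119
    ("Squares x Group", 1.28, 9, 180),    # reported as .060
]

for label, f_value, df1, df2 in reported:
    print(f"{label}: {partial_eta_squared(f_value, df1, df2):.3f}")
```

Because the identity depends only on the ratio of the two degrees of freedom, it gives a quick consistency check between a reported F value and its effect size.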

Figure 2.

Proportion of errors for Deaf native signers and hearing non-signers in the enumeration task as a function of the number of squares flashed (1 to 10) for the restricted and wide field-of-view conditions. The error bars represent standard error of the mean.

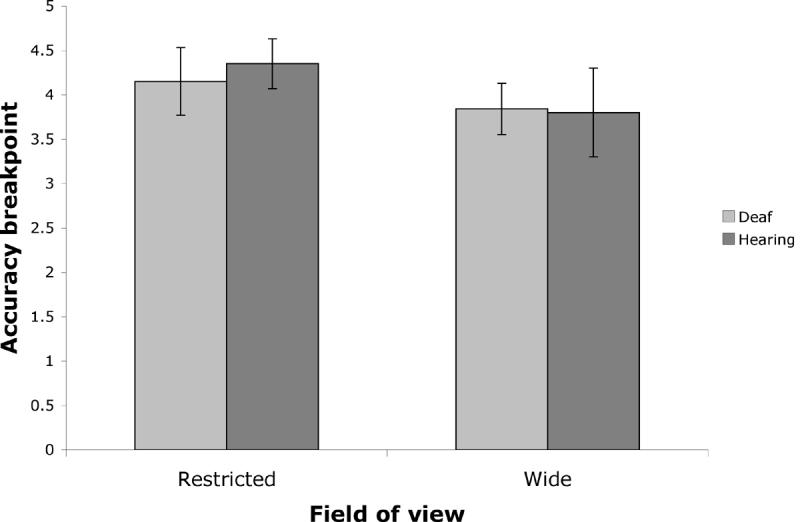

Accuracy Breakpoint

A central question for the present experiment was to investigate whether Deaf native signers might have a greater subitizing range (grasped more items at once) than hearing non-signers. The breakpoint, defined as the number of items where there is a switch from subitizing to counting response (considered as the capacity limit of visual attention), was computed for each participant for each condition with a bilinear model. To determine an individual subject’s breakpoint, their error proportion data was fitted to this bilinear model using the least squares method. Each subject’s curve was modeled as an intersection between two linear components; the first component was constrained to have a slope near zero (maximum slope of 3% per item) while the second component was modeled as linearly increasing (as the data is plotted in terms of error rate) with the slope allowed full room to vary in order to best fit the data. The output of the model was therefore the slope of the two lines as well as the breakpoint—the point at which the two lines intersected. This breakpoint was considered a quantification of the point at which subjects switched from the subitizing range to the counting range. Breakpoints for Deaf native signers and hearing non-signers in the restricted and wide FOV conditions are presented in Figure 3.
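The bilinear fit described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the routine actually used in the study: the text specifies only a least-squares fit with a first slope capped at 3% per item, so the grid search, the step sizes, the symmetric bound on the first slope (allowing slightly negative values), and the names `fit_bilinear`, `bp_step` and `slope_step` are all assumptions made here. The example data are synthetic.

```python
def fit_bilinear(x, y, max_flat_slope=0.03, bp_step=0.05, slope_step=0.005):
    """Least-squares fit of two line segments that meet at a breakpoint.

    Model: y = a + s1 * min(x, bp) + s2 * max(x - bp, 0)
    with |s1| <= max_flat_slope (assumed bound) and s2 unconstrained.
    The breakpoint and s1 are searched on a grid; a and s2 are solved
    in closed form for each grid point.
    """
    n = len(x)
    lo, hi = min(x), max(x)
    n_bp = int(round((hi - lo) / bp_step))
    n_s1 = int(round(2 * max_flat_slope / slope_step))
    best = None
    for i in range(1, n_bp):  # breakpoints strictly inside the tested range
        bp = lo + i * bp_step
        hinge = [max(xi - bp, 0.0) for xi in x]
        h_mean = sum(hinge) / n
        h_var = sum((h - h_mean) ** 2 for h in hinge)
        for j in range(n_s1 + 1):
            s1 = -max_flat_slope + j * slope_step
            # Subtract the constrained first segment, then regress the
            # remainder on the hinge term (simple linear regression).
            resid = [yi - s1 * min(xi, bp) for xi, yi in zip(x, y)]
            r_mean = sum(resid) / n
            s2 = sum((h - h_mean) * (r - r_mean)
                     for h, r in zip(hinge, resid)) / h_var
            a = r_mean - s2 * h_mean
            sse = sum((r - a - s2 * h) ** 2 for h, r in zip(hinge, resid))
            if best is None or sse < best["sse"]:
                best = {"breakpoint": bp, "intercept": a,
                        "flat_slope": s1, "steep_slope": s2, "sse": sse}
    return best


# Synthetic error proportions: flat up to 4 items, then +10% per item.
numerosity = list(range(1, 11))
errors = [0.01 if k <= 4 else 0.01 + 0.10 * (k - 4) for k in numerosity]

fit = fit_bilinear(numerosity, errors)
print(round(fit["breakpoint"], 2), round(fit["steep_slope"], 3))
```

A grid search is used here only because it makes the slope constraint trivial to enforce; a constrained optimizer would serve equally well.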

Figure 3.

Accuracy breakpoint as a function of group (Deaf native signers and hearing non-signers) and field of view condition (restricted and wide). The error bars represent standard error of the mean.

A 2-way mixed ANOVA was performed on the accuracy breakpoints with FOV (restricted vs. wide) as a within-subject factor and group (deaf vs. hearing) as a between-subject factor. No significant main effect was observed for either FOV or group (ps > .30; F (1, 20) = 1.12, partial eta-squared = .053, and F (1, 20) = 0.06, partial eta-squared = .003, respectively). There was no significant interaction between FOV and group (p > .75; F (1, 20) = 0.10, partial eta-squared = .005). Thus, comparable accuracy breakpoints were found across populations and fields of view.

Analyses of the slopes of the subitizing and counting components also confirmed the lack of group differences. The analysis of the subitizing slope revealed no significant main effect of FOV or group (ps > .09; F (1, 20) = 3.12, partial eta-squared = .135, and F (1, 20) = 1.22, partial eta-squared = .057, respectively), and no significant interaction between FOV and group (p > .90; F (1, 20) = 0.10, partial eta-squared = .001). A similar analysis of the counting slope also revealed no significant main effect of FOV or group (ps > .60; F (1, 20) = 0.04, partial eta-squared = .002, and F (1, 20) = 0.24, partial eta-squared = .012, respectively), as well as no significant interaction between FOV and group (p > .40; F (1, 20) = 0.68, partial eta-squared = .033; see Table 1 for slope means and standard deviations).

Table 1.

Means and Standard Deviations of the subitizing and counting slopes

| Slope (in degrees) | Deaf M | Deaf SD | Hearing M | Hearing SD |
|---|---|---|---|---|
| Wide field of view | | | | |
| Subitizing | -.002 | .016 | .002 | .010 |
| Counting | .097 | .043 | .103 | .106 |
| Restricted field of view | | | | |
| Subitizing | .004 | .014 | .009 | .012 |
| Counting | .118 | .059 | .091 | .051 |

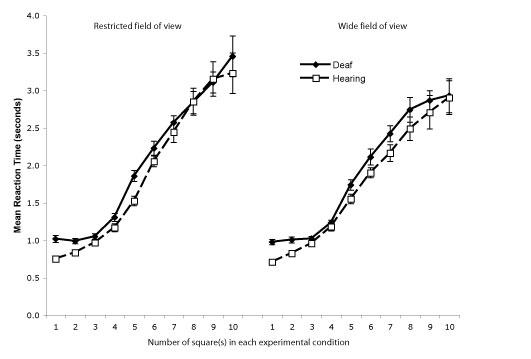

Reaction Times

An omnibus ANOVA was performed on the reaction times with number of squares (1 to 10) and FOV (restricted vs. wide) as within-subject factors and with group (deaf vs. hearing) as a between-subject factor. As with the results on error proportion, the effect of group was not significant (p > .15, F (1, 20) = 2.01, partial eta-squared = .091; Fig. 4). As expected, a significant main effect of the number of squares was observed (F (9, 180) = 205.93, p < .001, partial eta-squared = .91): the higher the number of squares, the longer it took subjects to answer.

Figure 4.

Reaction times (in seconds) for adult Deaf native signers and hearing non-signers in the enumeration task as a function of the number of squares (1 to 10) for the restricted and wide field of view conditions. The error bars represent standard error of the mean.

A significant effect of FOV, due to shorter reaction times in the wide FOV presentation than in the restricted FOV presentation, was also present (F (1, 20) = 11.63, p < .005, partial eta-squared = .37). FOV interacted with number of squares, as reaction times were relatively equivalent for low numbers of squares but shorter for larger numbers of squares in the wide field of view condition (F (9, 180) = 5.36, p < .01, partial eta-squared = .21). No other effects reached significance (ps > .25; FOV × Group, F (1, 20) = 0.06, partial eta-squared = .003; Squares × Group, F (9, 180) = 0.361, partial eta-squared = .018; and FOV × Squares × Group, F (9, 180) = 1.30, partial eta-squared = .061).

2.1.3 Enumeration Task: Summary

Consistent with previous studies in hearing non-signers (Jensen, et al., 1950; Trick and Pylyshyn, 1993; Trick and Pylyshyn, 1994; Tuholski, et al., 2001), the present results indicate that about 4 items can be successfully grasped at once. They also confirm that performance during enumeration paradigms is well approximated by a bilinear model with an initial nearly flat region for small numerosities and a steeper slope for numerosities of about 4 and above. The factors varied, field of view and deafness, had little impact on performance on this task. The only significant effect was that of field of view in the reaction time analyses, in which shorter reaction times were observed in the wide field of view condition at high numerosities. This result mirrors that reported by Green and Bavelier (2006), although its interpretation remains unclear. Because this effect was observed only in the RT analyses, it should not be interpreted further. Importantly, the results of this study demonstrate comparable performance in Deaf native signers and hearing non-signers. This lack of population difference was noted not only for displays restricted to the central 5 degrees but also when using wider, more peripheral displays. Thus, even in a condition that could have favored a population difference, deaf and hearing individuals did not differ. This lack of a difference between deaf and hearing individuals confirms and extends the recent study by Bull et al. (2006), which found no difference in performance between deaf non-native signers and hearing non-signers in enumeration accuracy for displays varying between 1 and 6 items.

Although these results are suggestive, several factors may have masked a possible difference between deaf and hearing subjects. First, subjects who have a good command of numerosity have an advantage in this task because it involves estimating numerosity. It is possible that differential access to arithmetic instruction and levels of achievement acted as an extraneous variable, making it difficult to observe actual enhancements in the deaf (Frost and Ahlberg, 1999; Nunes and Moreno, 2002; Traxler, 2000). Second, this experiment used static stimuli, which may also have reduced the chances of seeing a population difference; as we have reviewed above, deaf/hearing differences tend to be most marked when using moving stimuli. Our second experiment addresses these issues by comparing Deaf native signers and hearing non-signers on a task that requires tracking multiple moving stimuli - the Multiple Object Tracking (MOT) task.

2.2. MULTIPLE OBJECT TRACKING TASK

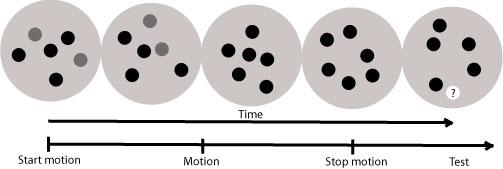

2.2.1 Multiple Object Tracking Task: Description

The MOT paradigm measures the ability of participants to track several distinct moving objects with their visual attention. Subjects view a number of randomly moving circles. At the beginning of the trial, some subset of the circles is cued. The cues then disappear and subjects are required to keep track of the circles that were cued (now visually indistinguishable from uncued circles) as they continue to move randomly about the screen. After several seconds of tracking, one of the circles is highlighted and the subject must make a yes (circle was cued) or a no (circle was not cued) decision. This method of response, rather than the more typical method of asking the subject to indicate each of the initially cued circles, was employed to minimize the impact of response interference, and in doing so gain a cleaner measure of the number of items that can be successfully kept in the focus of attention. Indeed, while previous theories have suggested a preattentive link between subitizing and MOT performance (Pylyshyn, 1989), it is generally accepted that there is a large dynamic attentional component to the MOT task as well (Scholl, et al., 2001). The task requires active allocation of visual attention in order to successfully track targets embedded in a field of competing, and visually identical, distracting elements. Several studies have demonstrated that attention is actually split between the items during tracking (Sears and Pylyshyn, 2000). Furthermore, neuroimaging has revealed activation in what are thought of as attentional areas - parietal and frontal regions - when subjects perform an MOT task (Culham, et al., 1998; Culham, et al., 2001).

In addition, to maximize our chances of observing a deaf/hearing difference on this task, if present, a large field of view was used (20 degrees). Although there is much variation in the range of visual angle for which peripheral enhancements of processing have been noted in deaf signers (from 2 deg to 35 deg) (Bavelier, et al., 2001; Dye, et al., In Press; Loke and Song, 1991; Proksch and Bavelier, 2002; Sladen, et al., 2005; Stevens and Neville, 2006), the explanation of this effect in terms of enhanced peripheral attention in the deaf suggests that population differences should be heightened at larger eccentricities. We therefore selected the largest field of view possible given the size of the computer screen used and the requirement to maintain a comfortable viewing distance.

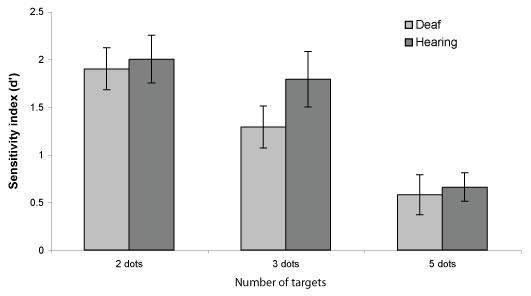

2.2.2. Multiple Object Tracking: Results

A measure of sensitivity, d’, was computed for each participant in each cued circle condition (2, 3, or 5 cued circles; d’ = Z[p(Hits)] - Z[p(False Alarms)]) (Macmillan and Creelman, 1991). ANOVAs were performed with group (deaf/hearing) as a between-subject factor and number of cued circles (2, 3, or 5) as a within-subject factor. Greenhouse-Geisser corrections were used for all analyses. As shown in Fig. 6, d’ decreased as the number of cued circles increased (F (2, 48) = 20.11, p < .001, partial eta-squared = .456). Importantly, d’ was comparable for both groups in all cued circle conditions, and neither a significant main effect of group nor a group × circles interaction was present (ps > .25; F (1, 24) = 1.18, partial eta-squared = .047, and F (2, 48) = 0.60, partial eta-squared = .024, respectively).
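The sensitivity formula given above, d’ = Z[p(Hits)] - Z[p(False Alarms)], can be illustrated with a short Python sketch based on the standard library's inverse normal CDF. The function name `d_prime` and the trial counts are hypothetical. The handling of hit or false alarm rates of exactly 0 or 1 (replacing them with 1/(2N) and 1 - 1/(2N)) is a common convention assumed here for illustration; the text does not state how such cases were treated.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index: Z[p(Hits)] - Z[p(False Alarms)]."""
    n_signal = hits + misses
    n_noise = false_alarms + correct_rejections
    hit_rate = hits / n_signal
    fa_rate = false_alarms / n_noise

    # Assumed convention: keep rates off 0 and 1 so the Z transform is finite.
    hit_rate = min(max(hit_rate, 1 / (2 * n_signal)), 1 - 1 / (2 * n_signal))
    fa_rate = min(max(fa_rate, 1 / (2 * n_noise)), 1 - 1 / (2 * n_noise))

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one participant in one cued-circle condition:
# 20 hits and 5 misses on cued probes, 5 false alarms and 20 correct
# rejections on uncued probes.
print(round(d_prime(20, 5, 5, 20), 2))
```

With a yes/no probe response, each trial contributes either to the hit rate (probe was cued) or to the false alarm rate (probe was not cued), so d’ separates tracking sensitivity from any bias toward answering yes.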

Figure 6.

Sensitivity (d’) as a function of the number of cued circles for Deaf native signers and hearing non-signers. The error bars represent standard error of the mean.

2.2.3. Multiple Object Tracking: Summary

The performance of the Deaf native signers and hearing non-signers in this experiment confirmed the results of previous studies on MOT that have demonstrated significant drops in response accuracy as the number of circles increases (Pylyshyn, 1989; Scholl, et al., 2001). Importantly, this study demonstrates that Deaf native signers and hearing non-signers have a comparable capacity to actively allocate visual attention to moving targets embedded in a field of competing, and visually identical, distracting elements. Unlike in the enumeration experiment, arithmetic skills and numerosity judgments were not involved in the present experiment. Yet, the results of this experiment confirm and extend the findings from the enumeration experiment. Early deafness does not enhance the ability to deploy visual attention to several different objects at once, to dynamically update information in memory as these objects move through space, and to ignore irrelevant distractors during such tracking.

3. DISCUSSION

The number of objects that can be concurrently apprehended was compared in Deaf native signers and hearing non-signers using two different paradigms, the enumeration and the multiple object tracking task (MOT). The first experiment compared the performance of Deaf native signers and hearing controls on an enumeration task. This task, classically used to study the number of items that can be readily attended, requires participants to report the number of briefly flashed items in a display as quickly and as accurately as possible. Our present implementation of this task also manipulated the visual extent of the stimuli to assess possible central/peripheral differences across populations. Our results indicate similar performance in deaf and hearing individuals, suggesting that this aspect of visual attention is not modified by deafness. The Multiple Object Tracking experiment explored the possibility that the use of a numerosity judgment task and static stimuli may have masked possible population differences in the enumeration task. Deaf and hearing individuals were compared in their ability to monitor multiple moving objects over a wide visual field using an adaptation of the Multiple Object Tracking task (MOT) (Pylyshyn, 1989). Again, no population differences were observed. Had deaf signers differed from hearing non-signers in these tasks, we would have then followed up with a study of hearing native signers to assess the impact of signing separately from that of deafness. However, the absence of population effects leads us to conclude that the ability to allocate attention to several objects at once is not significantly altered in Deaf native signers.

In each paradigm, the expected pattern of results was found, however. As previously described in the enumeration literature, all participants exhibited a roughly bilinear curve in performance, showing highly accurate performance for low numerosities up to about 4 items, and then a sharp cost in performance as more items were added. Similarly, performance on the MOT task was comparable to that reported in the literature with a decrease in accuracy as the number of circles to track increased. Importantly, the performance of Deaf native signers and hearing non-signers was always comparable indicating equal ability across groups when it comes to the numbers of objects that can be attended.

This lack of a population effect is not due to a lack of sensitivity of the tasks used. Relying on the same paradigms, Green and Bavelier (2006) have shown that performance in the enumeration and MOT tasks is enhanced in action video game players. In fact, this work indicates that only 10-30 hours of action video game playing is sufficient to increase the number of objects of attention. Similarly, Trick et al. (2005) have seen enhanced MOT performance in children who play both action video games and action sports. Thus, the lack of a population difference in the present study rather indicates that this aspect of attention shows little to no reorganization in the face of early deafness. Surprisingly, this was the case even when the stimulus presentation engaged a large field of vision (20°), which should have facilitated population differences. Indeed, the population of Deaf native signers studied here is identical to that studied in previous studies from our research group and others documenting enhanced peripheral attention (Bavelier, et al., 2000; Dye, et al., In Press; Loke and Song, 1991; Neville and Lawson, 1987b; Parasnis and Samar, 1985; Proksch and Bavelier, 2002; Stevens and Neville, 2006). Therefore, it appears that, although early deafness causes enhancements in peripheral visual attention, it does not lead to similar changes in the number of objects that can be attended.

The finding that immediate apprehension of numerosity is limited to about 4 items, along with the limited performance seen on the Multiple Object Tracking task, has led several authors to propose a resource-limited mechanism for individuating and maintaining object representations in the focus of attention (Cowan, 2001; see the object-file account of Kahneman, et al., 1992, and the FINST account of Pylyshyn, 1989). This form of storage, which typically encompasses about 4 items, has been proposed to provide a more reliable estimate of working memory resources than simple span tasks such as the digit span task (Cowan, 2001; Cowan, et al., 2005). Therefore, the present result suggests similar limits in working memory capacity in deaf signers and hearing speakers. Taken together with previous work documenting different storage capacity on simple verbal span tasks in deaf signers and hearing speakers (Bavelier, et al., 2006; Boutla, et al., 2004), this work reinforces the view that the lower span noted in signers in simple verbal span tasks does not reflect a smaller working memory capacity, but rather a specific advantage for the auditory modality in tasks that require serial order recall and rely heavily on verbal rehearsal. The present study therefore supports our earlier proposal of similar overall working memory capacities in Deaf native signers and hearing speakers (Boutla, et al., 2004, Exp. 3).

This work also highlights the specificity of plastic changes in vision following deafness. Whereas previous work documents changes in some aspects of attention following early deafness, visual attention is a multi-faceted process and one cannot assume that the lack of audition will enhance all facets of visual attention. As reviewed in the Introduction, there is good evidence that early deafness leads to changes in the spatial distribution of attention, in particular with an enhancement for peripheral locations and moving stimuli. However, few changes have been noted when it comes to visual search or orienting. Here we document another aspect of visual attention that stays unchanged following early deafness: the number of objects that can be apprehended, maintained in short-term storage and subsequently manipulated on-line. Which functions are or are not altered after early deafness may be understood in the larger context of the literature on multi-modal integration. It has been shown that performance on multi-modal tasks is enhanced compared to that on the unimodal version of the same tasks. Information across modalities appears to be integrated in an optimal way, whereby each modality contributes to behavior according to the information it contains (Battaglia, et al., 2003; Ernst and Banks, 2002; Lalanne and Lorenceau, 2004). According to this view, visual skills that typically benefit from cross-modal auditory information in hearing individuals, such as processing of peri-personal and peripheral space, may be more likely to reorganize after early deafness. This would enable deaf individuals to attain performance levels similar to those of hearing individuals while relying on only one modality (vision) rather than two (vision and audition). In contrast, the limited usefulness of audition when tracking objects within reach or performing nearby searches would explain the lack of population difference in this and previous work.
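The optimal-integration account cited in this paragraph can be made concrete with a short sketch. The code below is not part of the present study; it illustrates the standard reliability-weighted (maximum-likelihood) rule for combining two independent Gaussian cues, in which each modality is weighted by the inverse of its variance. The function name `integrate_cues` and all numerical values are hypothetical and chosen only for illustration.

```python
def integrate_cues(visual_estimate, visual_variance, auditory_estimate, auditory_variance):
    """Reliability-weighted combination of two independent Gaussian cues.

    Each cue is weighted by its reliability (1 / variance); the combined
    variance is never larger than that of the more reliable cue.
    """
    visual_reliability = 1.0 / visual_variance
    auditory_reliability = 1.0 / auditory_variance
    total_reliability = visual_reliability + auditory_reliability
    combined_estimate = (
        visual_reliability * visual_estimate
        + auditory_reliability * auditory_estimate
    ) / total_reliability
    combined_variance = 1.0 / total_reliability
    return combined_estimate, combined_variance


# Hypothetical values: vision is assumed precise near fixation (variance 1)
# and less precise in the periphery (variance 9); audition is assumed to
# have variance 16 in both cases.
central = integrate_cues(0.0, 1.0, 2.0, 16.0)
peripheral = integrate_cues(0.0, 9.0, 2.0, 16.0)
print("central:", central)
print("peripheral:", peripheral)
```

Under these assumed values the auditory cue pulls the combined estimate much further in the peripheral case than in the central one, which is one way to read the suggestion that visual functions normally supported by audition are the ones most likely to reorganize after early deafness.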

Finally, the lack of an effect of early deafness, combined with the existence of an effect of video gaming on enumeration and MOT, cautions against drawing absolute conclusions about which cortical functions are or are not plastic. The lack of plasticity in one function following a given pattern of experience does not necessarily imply that the function is not plastic; rather, it may indicate that the experience considered is not strong enough to induce plastic changes and modify functioning. Here, we conclude that auditory deprivation places higher demands on some, but not all, aspects of visual attention. Yet, the facets that are not affected by early deafness can still be affected by other visual experiences.

4. EXPERIMENTAL PROCEDURE

4.1. ENUMERATION TASK

4.1.1. Participants

Eleven Deaf native signers of American Sign Language (ASL) were included in the present experiment (mean age = 22.5, SD = 4.1; 6 female). All subjects were congenitally deaf and were exposed to ASL from birth through their deaf parents. Eleven hearing native English speakers were included as control subjects (mean age = 22.6, SD = 4.2; 7 female). There was no significant difference between the Deaf and hearing subjects’ ages (t(20) = .052, p > .05). None of the hearing control participants had been exposed to ASL. Individuals with a history of head injuries or of neurological, cognitive, or psychiatric difficulties/disorders were excluded from this study. All participants had normal or corrected-to-normal vision. Informed consent was received from all participants, who were paid for their participation.
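As a rough check on the age comparison, the t statistic can be approximated from the summary values reported above. The sketch below assumes a pooled-variance (Student's) independent-samples t-test, which the text does not state explicitly; the helper name `pooled_t` is hypothetical. Because the reported means and standard deviations are rounded, the result is not expected to reproduce the published value exactly.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t statistic (equal variances assumed) from summary statistics."""
    df = n1 + n2 - 2
    pooled_variance = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_variance * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Rounded group values as reported: hearing controls, then Deaf signers.
t_stat, df = pooled_t(22.6, 4.2, 11, 22.5, 4.1, 11)
print(f"t({df}) = {t_stat:.3f}")
```

With these rounded inputs the statistic comes out at roughly 0.06 on 20 degrees of freedom, of the same order as the reported value and far from any conventional significance threshold.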

4.1.2. Materials and procedure

The materials and procedure are similar to those of Experiment 1 in Green and Bavelier (2006). Each participant was tested individually in a dimly lit room. Stimuli were displayed on a Macintosh G3 laptop computer (screen size = 14 inches). The height of the computer screen was adjusted so that the center of the screen was level with each subject's eyes. Subjects viewed the display binocularly with their head positioned in a chin rest at a distance of 45 cm from the screen. A training condition was developed to familiarize the participants with the response keys. Labels for the numbers 1-12 were placed on the keyboard above the corresponding number keys (10 on the 0, 11 on the -, and 12 on the +). In this training task, each trial began with a fixation cross, presented at the center of the screen for 500 ms, followed by the display of an Arabic numeral between 1 and 12. The target number was presented in white on a black background and remained displayed until an answer was made. Participants were asked to use both hands, with the left hand near 1-5 and the right hand near 6-10. For 11 and 12, participants had to move their right hand. Each participant performed 72 trials (6 repetitions of each of the 12 possible numbers) in the response practice. Brief rests were allowed after each block of 24 trials.

After this response familiarization phase, participants were administered the enumeration task. The participants were instructed to: “Respond as quickly as possible, but only once you feel confident of your answer.” Even though reaction times were recorded, it must be noted that this procedure does not provide the most accurate measurements of reaction times because it was no doubt easier for all subjects to remember where the “1” key was located compared to the “7” key. This variability is partially controlled by the training procedure described above and the fact that both groups followed the same response procedures; however, the absolute reaction time values should still be considered with caution.

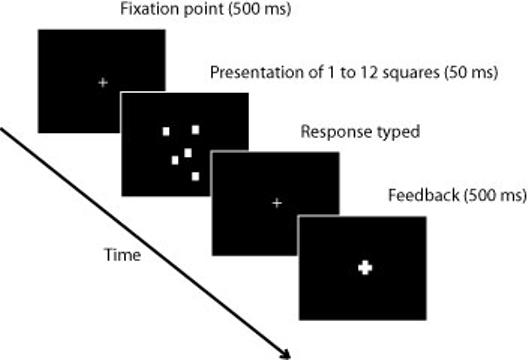

Two main conditions were compared in separate blocks: one with a field of view (FOV) restricted around fixation (5°×5°, henceforth referred to as the restricted FOV condition) and the other with a much wider FOV (20°×20°, the wide FOV condition). The order of the two conditions was counterbalanced across subjects. Each trial began with the presentation of a small white fixation cross in the center of a dark screen (see Fig. 1). After 500 ms, a stimulus consisting of a random number of white squares (between 1 and 12, each subtending 0.5° × 0.5°) was presented for 50 ms. The fixation cross then returned, and subjects responded by pressing the key corresponding to the number of squares they believed had been presented. Subjects were instructed to respond as quickly as possible while maintaining high accuracy. Feedback was provided by the fixation cross turning either green (correct response) or red (incorrect response) for 500 ms. Each subject completed two experimental blocks of 360 trials each (1-12 squares, 30 repetitions of each number of squares, pseudorandom order). Because enumerating 11 and 12 squares proved excessively difficult, the results from these trials were discarded and are not reported here.
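To give a sense of the physical scale of these displays, the visual angles above can be converted to on-screen extents at the 45 cm viewing distance. The sketch below uses the standard relation size = 2 · d · tan(θ/2) for a stimulus centered on the line of sight on a flat screen; the function name `visual_angle_to_cm` is hypothetical. Converting further to pixels would require the screen resolution, which is not reported here.

```python
import math


def visual_angle_to_cm(angle_deg, distance_cm):
    """Extent on a flat screen of a stimulus centered on the line of sight."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 45  # chin-rest distance reported for the enumeration task

for label, angle_deg in [
    ("restricted FOV (side)", 5),
    ("wide FOV (side)", 20),
    ("single square (side)", 0.5),
]:
    size_cm = visual_angle_to_cm(angle_deg, VIEWING_DISTANCE_CM)
    print(f"{label}: {angle_deg} deg -> {size_cm:.2f} cm")
```

At this distance the restricted field spans a little under 4 cm per side and the wide field a little under 16 cm, while each square is about 0.4 cm wide.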

Figure 1.

Enumeration task: The task began with the presentation of a white fixation cross in the center of the screen for 500 ms then a random number of white squares were presented for 50 ms. The fixation cross reappeared and subjects were to type the number of squares they saw. Feedback was provided by the fixation cross (bolded cross in figure) becoming either green (correct response) or red (incorrect response).

4.2. MULTIPLE OBJECT TRACKING TASK

4.2.1. Participants

Fourteen adult Deaf native ASL signers participated in this experiment (mean age = 23.4, SD = 3.9; 9 females, 5 males). All of the deaf participants were born profoundly deaf and were exposed to ASL from birth through their deaf parents. Twelve hearing non-signers were included as controls (mean age = 23.5, SD = 3.0; 6 females, 6 males). There was no significant difference between the Deaf and hearing subjects’ ages (t(24) = .053, p > .05). None of the hearing control participants had ever been exposed to ASL. One deaf subject had also participated in Experiment 1. Individuals with a history of head injuries or of neurological, cognitive, or psychiatric difficulties/disorders were excluded. All participants had normal or corrected-to-normal vision. Informed consent was received from all participants, who were paid for their participation.

4.2.2. Materials and procedure

Each participant was tested individually in a dimly lit room. The stimuli were presented on a Macintosh G4 laptop (screen size = 15 inches). The height of the screen was adjusted so that the center of the screen was level with the participant's eyes. A chin rest was used to maintain a comfortable viewing distance of 50 cm. The participant initiated each trial with a key press. Each trial started with the display of a grey aperture (diameter = 20 degrees of visual angle) on a black background. A fixation point was presented at the center of the aperture, and the participant was explicitly instructed to maintain fixation on it throughout the trial. In each trial, 12 moving circles (diameter = 1 degree of visual angle, motion velocity = 10 deg/sec) were displayed in the aperture. In each test trial, a subset of circles turned red for 2 seconds and then reverted to green, at which point participants continued tracking them. After a 5 second tracking interval, the circles stopped and a single circle turned white. The participant was asked to indicate whether this white circle was a tracked target or an untracked distractor. Task difficulty was manipulated by varying the number of tracked circles (2, 3, or 5). Each participant first completed 15 practice trials (5 repetitions of each possible number of cued circles), followed by 2 blocks of 45 test trials each (15 repetitions of each possible number of cued circles per block). Hence, each participant completed a total of 90 test trials.
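The trial structure just described can be sketched as follows. This is an illustration only, not the software used in the study: the text does not specify how trial order was randomized, so shuffling within each block is an assumption, and the names `SET_SIZES` and `make_block` are hypothetical.

```python
import random

SET_SIZES = (2, 3, 5)  # number of cued (tracked) circles on a trial


def make_block(reps_per_set_size, rng):
    """Return a shuffled list of set sizes, each repeated equally often."""
    trials = [size for size in SET_SIZES for _ in range(reps_per_set_size)]
    rng.shuffle(trials)  # assumed randomization; not specified in the text
    return trials


rng = random.Random(0)  # fixed seed only so that the sketch is reproducible
practice = make_block(5, rng)                          # 15 practice trials
test_blocks = [make_block(15, rng) for _ in range(2)]  # 2 blocks of 45 trials

total_test_trials = sum(len(block) for block in test_blocks)
print(len(practice), [len(block) for block in test_blocks], total_test_trials)
```

Running the sketch prints `15 [45, 45] 90`, matching the 15 practice trials and 90 test trials reported above.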

Figure 5.

Multiple object tracking: The task began with a subset of red (gray in figure) circles (2, 3, or 5) that moved randomly among moving green circles (black in figure). The red circles turned green after 2 seconds, at which point participants continued tracking them. After a 5 second tracking interval, the circles stopped, and a single circle turned white with a question mark. Subjects indicated with a YES/NO key press whether the white circle had previously been red.

ACKNOWLEDGMENTS

We wish to thank the students and staff of Gallaudet University, Washington DC, and of the National Technical Institute for the Deaf, Rochester NY, for making this research possible. We are also thankful to J. Cohen and M. Hall for data collection, and N. Fernandez for help with manuscript preparation. This research was supported by the National Institutes of Health (DC04418 to DB) and the James S. McDonnell Foundation.

Contributor Information

Peter C. Hauser, National Technical Institute for the Deaf, Rochester Institute of Technology.

Matthew W. G. Dye, University of Rochester.

Mrim Boutla, University of Rochester.

C. Shawn Green, University of Rochester.

Daphne Bavelier, University of Rochester.

REFERENCES

- Armstrong B, Hillyard SA, Neville HJ, Mitchell TV. Auditory deprivation affects processing of motion, but not color. Brain Res Cogn Brain Res. 2002;14(3):422–434. doi: 10.1016/s0926-6410(02)00211-2.

- Atkinson J, Campbell F, Francis M. The magic number 4 ± 0: A new look at visual numerosity judgments. Perception. 1976;5:327–334. doi: 10.1068/p050327.

- Battaglia P, Jacobs R, Aslin R. Bayesian integration of visual and auditory signals for spatial localization. J Opt Soc Am A Opt Image Sci Vis. 2003;20(7):1391–1397. doi: 10.1364/josaa.20.001391.

- Bavelier D, Brozinsky C, Tomann A, Mitchell T, Neville H, Liu G. Impact of early deafness and early exposure to sign language on the cerebral organization for motion processing. J Neurosci. 2001;21:8931–8942. doi: 10.1523/JNEUROSCI.21-22-08931.2001.

- Bavelier D, Dye M, Hauser P. Do deaf individuals see better? Trends in Cognitive Sciences. 2006;10(11):512–518. doi: 10.1016/j.tics.2006.09.006.

- Bavelier D, Neville HJ. Cross-modal plasticity: Where and how? Nat Rev Neurosci. 2002;3:443–452. doi: 10.1038/nrn848.

- Bavelier D, Newport EL, Hall ML, Supalla T, Boutla M. Persistent difference in short-term memory span between sign and speech: Implications for cross-linguistic comparisons. Psychological Science. 2006;17(12):1090–1092. doi: 10.1111/j.1467-9280.2006.01831.x.

- Bavelier D, Tomann A, Hutton C, Mitchell TV, Corina DP, Liu G, Neville HJ. Visual attention to the periphery is enhanced in congenitally deaf individuals. J Neurosci. 2000;20(RC93):1–6. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Bosworth RG, Dobkins KR. Left hemisphere dominance for motion processing in deaf signers. Psych. Sci. 1999;10(3):256–262.

- Bosworth RG, Dobkins KR. The effects of spatial attention on motion processing in deaf signers, hearing signers, and hearing nonsigners. Brain Cogn. 2002;49(1):152–169. doi: 10.1006/brcg.2001.1497.

- Boutla M, Supalla T, Newport EL, Bavelier D. Short-term memory span: Insights from sign language. Nature Neuroscience. 2004;7:997–1002. doi: 10.1038/nn1298.

- Bross M. Residual sensory capacities of the deaf: A signal detection analysis of a visual discrimination task. Percept Mot Skills. 1979;48:187–194. doi: 10.2466/pms.1979.48.1.187.

- Bross M, Sauerwein H. Signal detection analysis of visual flicker in deaf and hearing individuals. Percept Mot Skills. 1980;51:839–843. doi: 10.2466/pms.1980.51.3.839.

- Brozinsky CJ, Bavelier D. Motion velocity thresholds in deaf signers: Changes in lateralization but not in overall sensitivity. Brain Res Cogn Brain Res. 2004;21(1):1–10. doi: 10.1016/j.cogbrainres.2004.05.002.

- Bull R, Blatto-Vallee G, Fabich M. Subitizing, magnitude representation, and magnitude retrieval in deaf and hearing adults. Journal of Deaf Studies and Deaf Education. 2006;11(3):289–302. doi: 10.1093/deafed/enj038.

- Caselli MC, Volterra V. From communication to language in hearing and deaf children. In: Volterra V, Erting CJ, editors. From gesture to language in hearing and deaf children. Gallaudet University Press; Washington, DC: 1994. pp. 263–277.

- Cowan N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences. 2001;24:87–185. doi: 10.1017/s0140525x01003922.

- Cowan N, Elliott EM, Saults JS, Morey CC, Mattox S, Hismjatullina A, Conway ARA. On the capacity of attention: Its estimation and its role in working memory and cognitive aptitudes. Cognitive Psychology. 2005;51:42–100. doi: 10.1016/j.cogpsych.2004.12.001.

- Culham JC, Brandt S, Cavanagh P, Kanwisher NG, Dale AM, Tootell RB. Cortical fMRI activation produced by attentive tracking of moving targets. Journal of Neurophysiology. 1998;80:2657–2670. doi: 10.1152/jn.1998.80.5.2657.

- Culham JC, Cavanagh P, Kanwisher NG. Attention response functions: Characterizing brain areas using fMRI activation during parametric variations of attentional load. Neuron. 2001;32(4):737–745. doi: 10.1016/s0896-6273(01)00499-8.

- Dye MW, Baril D, Bavelier D. Which aspects of visual attention are changed by deafness? The case of the attentional network task. Neuropsychologia. In press.

- Eimer M, van Velzen J, Driver J. Cross-modal interactions between audition, touch, and vision in endogenous spatial attention: ERP evidence on preparatory states and sensory modulations. Journal of Cognitive Neuroscience. 2002;14(2):254–271. doi: 10.1162/089892902317236885.

- Ernst M, Banks M. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Fine I, Finney EM, Boynton GM, Dobkins KR. Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. J Cogn Neurosci. 2005;17(10):1621–1637. doi: 10.1162/089892905774597173.

- Finney EM, Dobkins KR. Visual contrast sensitivity in deaf versus hearing populations: Exploring the perceptual consequences of auditory deprivation and experience with a visual language. Brain Res Cogn Brain Res. 2001;11(1):171–183. doi: 10.1016/s0926-6410(00)00082-3.

- Frost D, Boire D, Gingras G, Ptito M. Surgically created neural pathways mediate visual pattern discrimination. Proceedings of the National Academy of Sciences. 2000;97(20):11068–11073. doi: 10.1073/pnas.190179997.

- Frostad P, Ahlberg A. Solving story-based arithmetic problems: Achievement of children with hearing-impairment and their interpreting of meaning. Journal of Deaf Studies and Deaf Education. 1999;4:283–293. doi: 10.1093/deafed/4.4.283.

- Green CS, Bavelier D. Enumeration versus multiple object tracking: The case of action video game players. Cognition. 2006;101(1):217–245. doi: 10.1016/j.cognition.2005.10.004.

- Hauser PC, Wills K, Isquith PK. Hard of hearing, deafness, and being deaf. In: Farmer JDJE, Warschausky S, editors. Neurodevelopmental disabilities: Clinical research and practice. Guilford Publications, Inc; New York: 2006. pp. 119–131.

- Jensen EM, Reese EP, Reese TW. The subitizing and counting of visually presented fields of dots. The Journal of Psychology. 1950;30:363–392.

- Kahneman D, Treisman AM, Gibbs BJ. The reviewing of object files: Object-specific integration of information. Cognitive Psychology. 1992;24:175–219. doi: 10.1016/0010-0285(92)90007-o.

- King BH, Hauser PC, Isquith PK. Psychiatric aspects of blindness and severe visual impairment, and deafness and severe hearing loss in children. In: Coffey CE, Brumback RA, editors. Textbook of pediatric neuropsychiatry. American Psychiatric Association; Washington, DC: 2006. pp. 397–423.

- Lalanne C, Lorenceau J. Crossmodal integration for perception and action. J Physiol. 2004;98:265–279. doi: 10.1016/j.jphysparis.2004.06.001.

- Lavie N. Distracted and confused? Selective attention under load. Trends Cogn. Sci. 2005;9(2):75–82. doi: 10.1016/j.tics.2004.12.004.

- Loke WH, Song S. Central and peripheral visual processing in hearing and nonhearing individuals. Bulletin of the Psychonomic Society. 1991;29(5):437–440.

- Macmillan N, Creelman C. Detection theory: A user's guide. Cambridge University Press; New York: 1991.

- McDonald J, Teder-Salejarvi W, Di Russo F, Hillyard S. Neural substrates of perceptual enhancement by cross-modal spatial attention. J Cogn. Neurosci. 2003;15(1):10–19. doi: 10.1162/089892903321107783.

- Mertens DM, Suss-Lehrer S, Scott-Olson K. Sensitivity in family-professional relationships: Potential experiences of families with young deaf and hard of hearing children. In: Spencer PT, Erting CJ, Marschark M, editors. The deaf child in the family at school. Lawrence Erlbaum Associates, Inc; Mahwah, NJ: 2000. pp. 133–150.

- Mills C. Perception of visual temporal patterns by deaf and hearing adults. Bulletin of the Psychonomic Society. 1985;23:483–486.

- Mitchell RE, Karchmer MA. Chasing the mythical ten percent: Parental hearing status of deaf and hard of hearing students in the United States. Sign Language Studies. 2002;4(2):128–163.

- Neville HJ, Lawson DS. Attention to central and peripheral visual space in a movement detection task: An event related potential and behavioral study. II. Congenitally deaf adults. Brain Res. 1987b;405:268–283. doi: 10.1016/0006-8993(87)90296-4.

- Neville HJ, Lawson DS. Attention to central and peripheral visual space in a movement decision task. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Res. 1987c;405:284–294. doi: 10.1016/0006-8993(87)90297-6.

- Neville HJ, Schmidt AL, Kutas M. Altered visual-evoked potentials in congenitally deaf adults. Brain Research. 1983;266:127–132. doi: 10.1016/0006-8993(83)91314-8.

- Newport E, Meier R. The acquisition of American Sign Language. In: Slobin D, editor. The crosslinguistic study of language acquisition. Vol. 1. Erlbaum; Hillsdale, NJ: 1985. pp. 881–938.

- Nunes T, Moreno C. An intervention program for mathematics. Journal of Deaf Studies and Deaf Education. 2002;7:120–133. doi: 10.1093/deafed/7.2.120.

- Oyama T, Kikuchi T, Ichihara S. Span of attention, backward masking, and reaction time. Perception and Psychophysics. 1981;29(2):106–112. doi: 10.3758/bf03207273.

- Parasnis I. Allocation of attention, reading skills, and deafness. Brain and Language. 1992;43:583–596. doi: 10.1016/0093-934x(92)90084-r.

- Parasnis I, Samar VJ. Parafoveal attention in congenitally deaf and hearing young adults. Brain Cogn. 1985;4:313–327. doi: 10.1016/0278-2626(85)90024-7.

- Petitto L, Marentette P. Babbling in the manual mode: Evidence for the ontogeny of language. Science. 1991;251:1493–1496. doi: 10.1126/science.2006424.

- Poizner H, Tallal P. Temporal processing in deaf signers. Brain and Language. 1987;30:52–62. doi: 10.1016/0093-934x(87)90027-7.

- Proksch J, Bavelier D. Changes in the spatial distribution of visual attention after early deafness. Journal of Cognitive Neuroscience. 2002;14:1–5. doi: 10.1162/08989290260138591.

- Ptito M, Giguere J, Boire D, Frost D, Casanova C. When the auditory cortex turns visual. Progress in Brain Research. 2001;134:447–458. doi: 10.1016/s0079-6123(01)34029-3.

- Ptito M, Kupers R. Cross-modal plasticity in early blindness. J Integr Neurosci. 2005;4(4):479–488. doi: 10.1142/s0219635205000951.

- Pylyshyn ZW. The role of location indexes in spatial perception: A sketch of the FINST spatial-index model. Cognition. 1989;32:65–97. doi: 10.1016/0010-0277(89)90014-0.

- Rauschecker J. Crossmodal consequences of visual deprivation. In: Calvert GA, Spence CJ, Stein B, editors. Handbook of multisensory processes. MIT Press; 2004.

- Rettenbach R, Diller G, Sireteanu R. Do deaf people see better? Texture segmentation and visual search compensate in adult but not in juvenile subjects. Journal of Cognitive Neuroscience. 1999;11(5):560–583. doi: 10.1162/089892999563616.

- Samuel KA. The relationship between attachment in deaf adolescents, parental sign communication and attitudes, and psychosocial adjustment. Dissertation Abstracts International. 1996;57(2182B)

- Scholl BJ, Pylyshyn ZW, Feldman J. What is a visual object? Evidence from target merging in multiple object tracking. Cognition. 2001;80:159–177. doi: 10.1016/s0010-0277(00)00157-8.

- Sears CR, Pylyshyn ZW. Multiple object tracking and attentional processing. Canadian Journal of Experimental Psychology. 2000;54:1–14. doi: 10.1037/h0087326.

- Sladen DP, Tharpe AM, Ashmead DH, Grantham DW, Chun MM. Visual attention in deaf and normal hearing adults: Effects of stimulus compatibility. Journal of Speech, Language, and Hearing Research. 2005;48:1–9. doi: 10.1044/1092-4388(2005/106).

- Stevens C, Neville H. Neuroplasticity as a double-edged sword: Deaf enhancements and dyslexic deficits in motion processing. Journal of Cognitive Neuroscience. 2006;18:701–714. doi: 10.1162/jocn.2006.18.5.701.

- Stivalet P, Moreno Y, Richard J, Barraud P-A, Raphel C. Differences in visual search tasks between congenitally deaf and normally hearing adults. Cognitive Brain Research. 1998;6(3):227–232. doi: 10.1016/s0926-6410(97)00026-8.

- Sur M, Pallas SL, Roe AW. Cross-modal plasticity in cortical development: Differentiation and specification of sensory neocortex. Trends in Neurosciences. 1990;13(6):227–233. doi: 10.1016/0166-2236(90)90165-7.

- Teder-Salejarvi W, Di Russo F, McDonald J, Hillyard S. Effects of spatial congruity on audio-visual multimodal integration. J Cogn. Neurosci. 2005;17(9):1396–1409. doi: 10.1162/0898929054985383.

- Theoret H, Merabet L, Pascual-Leone A. Behavioral and neuroplastic changes in the blind: Evidence for functionally relevant cross-modal interactions. J Physiol Paris. 2004;98(13):221–233. doi: 10.1016/j.jphysparis.2004.03.009.

- Traxler CB. The Stanford Achievement Test, 9th edition: National norming and performance standards for deaf and hard of hearing students. Journal of Deaf Studies and Deaf Education. 2000;5:337–348. doi: 10.1093/deafed/5.4.337.

- Trick LM, Jaspers-Fayer F, Sethi N. Multiple-object tracking in children: The “catch the spies” task. Cognitive Development. 2005;20(3):373–387.

- Trick LM, Pylyshyn ZW. What enumeration studies can show us about spatial attention: Evidence for limited capacity preattentive processing. Journal of Experimental Psychology: Human Perception and Performance. 1993;19(2):331–351. doi: 10.1037//0096-1523.19.2.331.

- Trick LM, Pylyshyn ZW. Why are small and large numbers enumerated differently? A limited-capacity preattentive stage in vision. Psychological Review. 1994;101(1):80–102. doi: 10.1037/0033-295x.101.1.80.

- Tuholski SW, Engle RW, Baylis GC. Individual differences in working memory capacity and enumeration. Memory and Cognition. 2001;29(3):484–492. doi: 10.3758/bf03196399.