Abstract

Neuroplastic changes in auditory cortex as a result of lifelong perceptual experience were investigated. Adults with early-onset deafness and long-term hearing aid experience were hypothesized to have undergone auditory cortex plasticity due to somatosensory stimulation. Vibrations were presented on the hand of deaf and normal-hearing participants during functional magnetic resonance imaging (fMRI). Vibration stimuli were derived from speech or were a fixed frequency. Higher, more widespread activity was observed within auditory cortical regions of the deaf participants for both stimulus types. Life-long somatosensory stimulation due to hearing aid use could explain the greater activity observed with deaf participants.

Keywords: Deafness, vibrotactile, somatosensory, auditory cortex, cross-sensory plasticity

Introduction

In our everyday experience, inputs from multiple sensory systems are typically integrated to form unified percepts of the world. Recently, regions within both human and macaque auditory cortex have been proposed as candidates for auditory-somatosensory integration [1-8]. Although it has been well established that perceptual experience can alter cortical organization [9,10], virtually nothing is known about the influence of experience on the organization of these auditory-somatosensory regions. In this study, vibrotactile activation of auditory cortical regions was compared between individuals with normal hearing and individuals with early-onset deafness.

In typically developing human perceivers, somatosensory stimulation leads to enhanced detection of simultaneously presented low-intensity auditory signals [11]. Auditory input is also known to alter the perception of somatosensory stimuli, as is demonstrated by the parchment-skin illusion, in which altering the frequency of a simultaneous auditory stimulus shifts the somatosensory sensation between rough and smooth [12]. Using non-invasive imaging techniques, somatosensory-responsive and multisensory audiotactile regions have been demonstrated within a region of human cortex immediately posterior to primary auditory cortex [1,3,4]. Using invasive imaging techniques, somatosensory input and multisensory audiotactile regions have been confirmed within homologous regions of non-human primates [6,8].

Determination of maturational and experiential factors in the functional cortical organization of sensory processing is a fundamental issue for cognitive neuroscience. Individuals with early-onset sensory losses provide an exceptional opportunity to study the role that environmental stimulation plays in functional cortical organization [9,10].

Studies of deaf and blind individuals have demonstrated altered organization within cortical regions associated with both the affected and non-affected sensory peripheral systems [9,13]. For example, visual stimulation produces differential patterns of activation within visual, multisensory, and auditory regions [9] in deaf versus hearing individuals. An increased reliance on the intact sensory systems in prelingually profoundly deaf individuals is hypothesized to be at least partially responsible for altered patterns of cortical responsiveness to input from the intact sensory systems [9,10], along with neuronal mechanisms that attempt to maintain activity under conditions of sensory deprivation [14].

The fact that profoundly deaf individuals rely on vision for the perception of language in a spoken and/or manual form is obvious even to the casual observer. Less obvious is the potential for reliance on vibrotactile perception, particularly at low frequencies, to perceive sound, including speech. Some deaf individuals perceive acoustic vibration stimuli, delivered through powerful hearing aids, via tactile skin receptors in their ears and/or via bone conduction [15,16], resulting in focused low-frequency somatosensory stimulation. The tactile stimulation is due to mechanical motion of the high-power hearing aid, which is situated in an earmold that seals the ear canal. This arrangement is needed to achieve sound pressure levels that can be detected by someone with profound hearing loss (greater than 90 dB HL).

Two magnetoencephalography studies obtained contradictory findings concerning auditory cortex activation by vibrotactile stimulation. On the basis of a single deaf individual, one study reported cortical reorganization, and the other, also with a single deaf participant, did not [17,18]. Reported behavioral effects, such as enhanced vibrotactile sensitivity in congenitally deaf individuals in comparison to normal-hearing (NH) individuals ([19], see also [20], cf. [21]), support the presence of cortical reorganization. We investigated whether absence or reduction of auditory stimulation, combined with increased lifelong focused vibrotactile stimulation due to hearing aid use, results in altered functional cortical organization in deaf individuals. We used fMRI to measure the auditory cortex response to vibrotactile stimulation in a group of deaf versus NH adult participants.

Materials and Methods

Participants

Six deaf (1 male; mean age = 23 years, range 19-26) and six normal-hearing (NH) (2 male; mean age = 24 years, range 19-31) right-handed participants were tested. One NH participant was dropped from the analyses because inaccurate and excessive detection of the target stimulus in the speech condition resulted in substantial motor responses and motion in that condition. The deaf participants’ hearing impairments were of unknown etiology, with a mean three-frequency (500 Hz, 1000 Hz, 2000 Hz) unaided, better-ear pure-tone average equal to 96 dB HL (range = 85 to 107 dB HL; s.d. = 8.6). All deaf participants reported previous extensive use of hearing aids, and all but one (onset at 2 years) had a congenital onset. All protocols were conducted in compliance with both St. Vincent Hospital’s (Los Angeles, CA) and the University of Southern California’s Institutional Review Boards. Participants were reimbursed for their travel and time.
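The three-frequency, better-ear pure-tone average reported above is a simple mean of thresholds. The following is a minimal sketch of that arithmetic, assuming "better ear" means the ear with the lower average; the function names and the audiogram values are hypothetical illustrations and are not data from this study.

```python
PTA_FREQUENCIES_HZ = (500, 1000, 2000)

def pure_tone_average(thresholds_db_hl):
    """Mean unaided threshold (dB HL) across 500, 1000, and 2000 Hz for one ear."""
    return sum(thresholds_db_hl[f] for f in PTA_FREQUENCIES_HZ) / len(PTA_FREQUENCIES_HZ)

def better_ear_pta(left, right):
    """Lower (better) of the two per-ear pure-tone averages."""
    return min(pure_tone_average(left), pure_tone_average(right))

# Hypothetical audiogram, for illustration only (not a study participant).
left_ear = {500: 90, 1000: 95, 2000: 100}
right_ear = {500: 100, 1000: 105, 2000: 110}
example_pta = better_ear_pta(left_ear, right_ear)
```

For this hypothetical listener the left ear is the better ear, so the reported value would be its average.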

Stimuli and Procedure

The vibrotactile stimuli contrasted signals that were derived from speech versus ones that were steady-state pure tones. The two stimulus types were perceptually distinct. The speech stimuli conveyed the voice fundamental frequency (F0SPEECH), which can be perceived vibrotactually [22]. The F0SPEECH signals were digitized from a previously recorded linear-phase electroglottograph signal, which represents the impedance across the glottis during the opening and closing that drives the voice fundamental frequency. Signals were derived from 15 sentences spoken by a male talker. The fixed-frequency (FF) stimuli (125 Hz sine waves) were 1 s in duration and separated by 500 ms of silence. During the fMRI scanning, the vibrotactile stimuli comprised 4 blocks of fixed-frequency (FF) sinusoidal bursts and 4 blocks of voice F0SPEECH signals presented in a boxcar design and alternated with 8 silent blocks without vibrotactile stimuli. The order of stimulus blocks was FF, silence, F0SPEECH, silence, repeating four times.
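The block order described above can be written out explicitly. This is a minimal sketch; the function name `block_sequence` is hypothetical, and block durations are deliberately omitted because the text does not state them.

```python
def block_sequence(n_cycles=4):
    """Ordered block labels: FF, silence, F0SPEECH, silence, repeated n_cycles times."""
    cycle = ["FF", "silence", "F0SPEECH", "silence"]
    return cycle * n_cycles

blocks = block_sequence()

# Tally how many blocks of each type the sequence contains.
block_counts = {}
for label in blocks:
    block_counts[label] = block_counts.get(label, 0) + 1
```

With four repetitions the sequence contains 16 blocks: 4 FF, 4 F0SPEECH, and 8 silent, matching the counts given in the text.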

Vibrotactile stimuli were presented through a custom-built pneumatically-driven stimulation system. The stimulator was held in the right hand at about waist level, with the thumb on a raised nylon button that was glued to the top of a vibrating nitrile membrane. To reduce the possibility of auditory stimulation by the vibratory stimuli, all participants wore insert earplugs. Additionally, NH participants wore circumaural hearing protection. During imaging, participants performed a vibrotactile target detection task. Within each block, participants monitored for a target of three 200-ms 250-Hz sinusoid bursts separated by 200 ms of silence. When the target was detected, they squeezed a response ball held in the left hand. Participants were familiarized with the stimuli prior to imaging.
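The timing of the detection target and of the fixed-frequency bursts can be illustrated by synthesizing the waveforms digitally. This is only a sketch of the stated durations and frequencies: the sample rate, unit amplitude, abrupt onsets, and all function names are assumptions, and the sketch says nothing about how the pneumatic stimulator was actually driven.

```python
import math

SAMPLE_RATE = 8000  # Hz; assumed for illustration, not taken from the study

def tone(freq_hz, dur_ms):
    """Unit-amplitude sine burst with no onset/offset ramp (an assumption)."""
    n = SAMPLE_RATE * dur_ms // 1000
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]

def silence(dur_ms):
    """Run of zero-valued samples lasting dur_ms."""
    return [0.0] * (SAMPLE_RATE * dur_ms // 1000)

def target():
    """Three 200-ms 250-Hz bursts separated by 200 ms of silence."""
    burst, gap = tone(250, 200), silence(200)
    return burst + gap + burst + gap + burst

def ff_cycle():
    """One 1-s 125-Hz burst followed by 500 ms of silence."""
    return tone(125, 1000) + silence(500)
```

Under these assumptions the target lasts 1 s in total (three bursts plus two gaps), and one FF burst with its following gap lasts 1.5 s.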

Imaging

Ten contiguous 8-mm thick oblique sections aligned approximately with the AC-PC line were imaged. A GE 1.5T Signa LX MRI system with a quadrature head-coil was used to acquire a time-series of images using a single-shot echo-planar imaging (EPI) sequence with the following parameters: TR (repetition time) = 5000 ms, effective TE (echo time) = 45 ms, 90° flip angle, 128 × 64 acquisition matrix, 40 × 20 cm² field-of-view, and NEX (no. of excitations) = 1. In addition to the EPI time-series acquisition, spin-echo anatomical images of the same ten sections were also acquired using TR = 400 ms and TE = 14 ms to obtain good gray/white-matter demarcation.
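The acquisition parameters above imply a nominal voxel size and slab coverage. The sketch below works through that arithmetic; it assumes that the 128-sample matrix dimension spans the 40-cm field-of-view dimension (as the ordering in the text suggests) and that the contiguous sections have no inter-slice gap. The function name is hypothetical.

```python
def voxel_size_mm(fov_cm, matrix):
    """Nominal in-plane voxel size: field-of-view (converted to mm) divided by matrix size."""
    return tuple(10.0 * fov / n for fov, n in zip(fov_cm, matrix))

in_plane_mm = voxel_size_mm((40, 20), (128, 64))  # (3.125, 3.125)

SLICE_THICKNESS_MM = 8
N_SLICES = 10
slab_coverage_mm = N_SLICES * SLICE_THICKNESS_MM  # 80 mm, assuming no gap
```

Under these assumptions the functional voxels are nominally 3.125 × 3.125 × 8 mm, and the ten sections cover an 80-mm slab.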

Analyses

A time-series of 100 images from each of the 10 sections was acquired. Images were realigned within each time-series to correct for motion artifacts [23], normalized to a standard stereotaxic space (Montreal Neurological Institute [MNI] template) using the standard SPM2 software (www.fil.ion.ucl.ac.uk/spm) with the 12-parameter affine/non-linear transformation, and submitted to General Linear Model analysis.

Fixed-effects analyses were conducted within SPM2 to determine cortical activation from somatosensory stimulation and to compare the participant groups. Fixed-effects analyses were chosen because the deaf participants were specifically selected on the basis of their previous perceptual experience. Within each participant group, separate contrasts were computed for F0SPEECH-Silence and FF-Silence. In addition, differences in cortical activity between the two participant groups were assessed with fixed-effects analyses within SPM2 for both tactile stimulation conditions. All contrasts were analyzed with the False Discovery Rate set to p = .001 and a minimum of 10 voxels per cluster.
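To make the False Discovery Rate criterion concrete, the sketch below implements the Benjamini-Hochberg step-up procedure, one common way of controlling FDR. Whether SPM2's thresholding matches this sketch in every detail is an assumption; the function names and the example p-values are hypothetical, and the 10-voxel cluster-extent criterion is not modeled.

```python
def bh_threshold(p_values, q):
    """Largest sorted p-value p_(k) satisfying p_(k) <= (k / m) * q, or None if none does."""
    m = len(p_values)
    threshold = None
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= k / m * q:
            threshold = p
    return threshold

def bh_reject(p_values, q):
    """Flag each test that survives the Benjamini-Hochberg threshold at level q."""
    t = bh_threshold(p_values, q)
    return [t is not None and p <= t for p in p_values]

# Hypothetical voxel-wise p-values, for illustration only.
example_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
example_flags = bh_reject(example_p, q=0.05)
```

In this toy example only the two smallest p-values survive at q = 0.05. The level used in the study (.001) is far stricter, and the toy level is chosen only so that some tests survive.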

Results

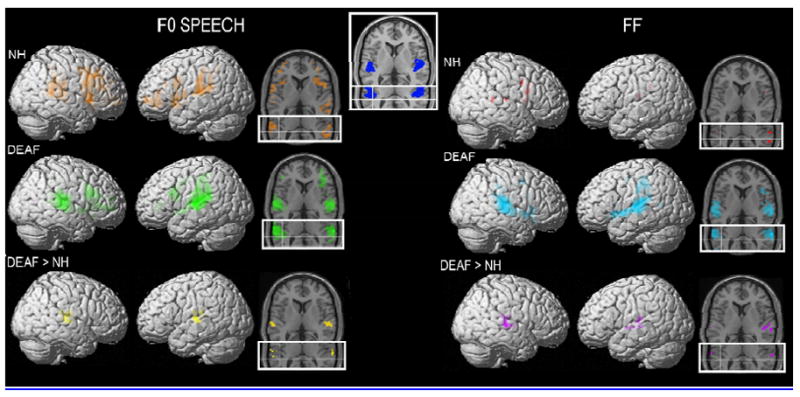

Within each participant group, widespread activation was evidenced by multiple significant clusters of activity for both the F0SPEECH-Silence and FF-Silence contrasts (Figure 1). F0SPEECH activation within the NH participant group included frontal inferior operculum, insula, frontal cortex, supplementary motor area, supramarginal gyrus, rolandic operculum, superior temporal gyrus, middle temporal gyrus, inferior parietal lobule, and cingulum. FF stimulation was associated with less widespread activation within the NH participant group. Significant clusters included frontal inferior operculum, superior temporal gyrus, supramarginal gyrus, pre-central gyrus, and post-central gyrus.

Figure 1.

Displays the significant activations from fixed-effects analysis in SPM2 with the False Discovery Rate set to p = .001 and a minimum of 10 voxels per cluster. The left column displays the results from the F0SPEECH condition and the right column displays the results from the FF condition. The top row of images displays the NH participants. On the left, SPMs for the t-contrasts F0SPEECH > REST (ORANGE, t threshold = 3.82) are displayed on both a rendered cortical volume and axial slices. The main axial slice is at Z = 6 in MNI space and is chosen for a good view through Heschl’s gyrus. The inset partial axial slice has crosshairs that intersect at X = -45, Y = -36, and Z = 12 in MNI space. These coordinates are at the peak voxel for the vibrotactile-auditory co-activation in Schurmann et al. [4]. On the right, SPMs for the t-contrasts FF > REST (RED, t threshold = 4.61) are displayed on both a rendered cortical volume and axial slices. The middle row of images displays the same contrasts for the deaf participants (F0SPEECH > REST, GREEN, t threshold = 3.79; FF > REST, CYAN, t threshold = 3.90). The last row displays the results of the contrast testing explicitly where Deaf > NH in each of the stimulus conditions (F0SPEECH, YELLOW, t threshold = 4.49; FF, PURPLE, t threshold = 4.51). The inset image at the top center of the figure displays the two axial slices with the primary auditory cortex (area TE 1.0 as defined by Morosan et al. [24]) shown in BLUE.

F0SPEECH activation within the deaf participant group included frontal inferior operculum, frontal cortex, supplementary motor area, supramarginal gyrus, superior temporal gyrus, pre-central gyrus, and post-central gyrus. In contrast to the NH participants, FF stimulation was also associated with widespread activation within the deaf participant group. Significant clusters included frontal inferior operculum, insula, frontal cortex, supplementary motor area, supramarginal gyrus, superior temporal gyrus, middle temporal gyrus, inferior parietal lobule, pre- and post-central gyri, calcarine sulcus and putamen.

Although visual inspection of the active-versus-rest contrasts presented above suggests several participant group differences, the direct contrast testing for regions in which deaf participants had greater activation than NH participants yielded focal differences that localized primarily to auditory cortical regions, including areas in and around Heschl’s gyrus. This was true for both F0SPEECH and FF vibrotactile stimuli (Figure 1).

Discussion

These results demonstrate differences between groups in terms of the extent, height, and location of cortical activity during vibrotactile stimulation. In the deaf participants, who had experienced auditory deprivation, evidence of expanded activation was observed in auditory cortex and adjacent to auditory cortex. Furthermore, this activity was obtained for both speech-patterned and fixed-frequency stimuli. This difference between the groups may be the result of altered connectivity involving chronic exposure to vibrotactile stimulation via high-powered hearing aids [14] and may reflect the cortical bases for previously observed enhancements in tactile perception by deaf participants [19,20]. Importantly, this does not appear to be simply stronger activation of cortical sites previously demonstrated to be responsive to auditory-tactile stimuli. Instead, the present results are consistent with an expanded region of tactile-responsive cortex in deaf participants.

In the current results on deaf participants, activation elicited by vibrotactile stimuli was rostral and lateral to that previously observed for auditory-tactile stimulation in NH participants [3,4] (see crosshairs in Figure 1). Evidence of activation was obtained (see axial slices in Figure 1) within primary auditory cortical regions in our deaf participants. The template displayed at the top of Figure 1 in blue was defined in the literature as a cortical region in post-mortem tissue with an expanded layer IV, which is consistent with it being a primary sensory region [24]. Thus, the current evidence is consistent with possible expansion of the region of auditory cortex normally responsive to tactile input [3,4] into regions previously thought to be uniquely responsive to auditory stimuli.

At least two possible neurophysiological explanations exist for the observed stronger responses elicited by tactile stimulation in the auditory cortex of deaf participants. First, as noted above, typically unisensory auditory cortical regions could have become consolidated within somatosensory circuits, i.e., direct somatosensory take-over of auditory regions. Second, the typically multisensory cortical regions adjacent to auditory cortex may have expanded their responsiveness to somatosensory stimulation in the absence of competing auditory input. Both of these explanations are consistent with the hypothesis that the responsiveness of regions initially innervated by axons from multiple sensory systems is determined by competition for synaptic connections during development [17]. Under conditions of minimal or no competition from an auditory sensory input beginning in infancy, and a concomitant elevated exposure to vibrotactile signals, as was the case for our deaf participants, typically occurring auditory-somatosensory regions [3] may have become a somatosensory area.

The present result is consistent with informal evidence on cochlear implants (CI, a device used to restore hearing to profoundly hearing-impaired individuals): some individuals with prelingual-onset hearing impairments who are implanted as adults initially report vibrotactile as opposed to auditory sensations from their cochlear implant [25,26]. These sensations are eventually replaced by auditory sensations, an instance of adult neural plasticity. Notably, this type of vibrotactile sensation appears not to occur in CI patients with post-lingual onset hearing impairments, supporting the view that early and/or long-term reliance on vibrotactile sensation is necessary for somato-auditory cross-sensory reorganization. However, additional study of congenitally deaf adults without any, or with minimal, hearing aid experience is needed to confirm that the same type of reorganization is not due simply to the absence of auditory stimulation.

The present results suggest that cortical reorganization in deaf perceivers is not limited to effects of visual input but also results from somatosensory stimulation. These results are consistent with previous MEG results in a single deaf adult [17]. Such functional reorganization in deaf participants could underlie their enhanced vibrotactile perception observed in our laboratory and others [19,20].

Acknowledgments

The authors thank John Jordan for the development of the custom MRI-compatible vibrotactile stimulator.

Footnotes

This work was supported by NIH NIDCD Grants R01DC04856 (Auer, PI) and R01DC008308 (Bernstein, PI), and NIH NIA Grant P50AG05142 (Singh).

References

- 1. Caetano G, Jousmaki V. Evidence of vibrotactile input to human auditory cortex. Neuroimage. 2006;29(1):15–28. doi: 10.1016/j.neuroimage.2005.07.023.

- 2. Foxe JJ, Schroeder CE. The case for feed forward multisensory convergence during early cortical processing. Neuroreport. 2005;16(5):419–423. doi: 10.1097/00001756-200504040-00001.

- 3. Foxe JJ, Wylie GR, Martinez A, et al. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88(1):540–543. doi: 10.1152/jn.2002.88.1.540.

- 4. Schurmann M, Caetano G, Hlushchuk Y, Jousmaki V, Hari R. Touch activates human auditory cortex. Neuroimage. 2006;30(4):1325–1331. doi: 10.1016/j.neuroimage.2005.11.020.

- 5. Foxe JJ, Morocz IA, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res Cogn Brain Res. 2000;10(1-2):77–83. doi: 10.1016/s0926-6410(00)00024-0.

- 6. Fu KM, Johnston TA, Shah AS, et al. Auditory cortical neurons respond to somatosensory stimulation. J Neurosci. 2003;23(20):7510–7515. doi: 10.1523/JNEUROSCI.23-20-07510.2003.

- 7. Murray MM, Molholm S, Michel CM, et al. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15(7):963–974. doi: 10.1093/cercor/bhh197.

- 8. Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J Neurophysiol. 2001;85(3):1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- 9. Bavelier D, Brozinsky C, Tomann A, Mitchell T, Neville H, Liu G. Impact of early deafness and early exposure to sign language on the cerebral organization for motion processing. J Neurosci. 2001;21(22):8931–8942. doi: 10.1523/JNEUROSCI.21-22-08931.2001.

- 10. Bavelier D, Neville HJ. Cross-modal plasticity: where and how? Nat Rev Neurosci. 2002;3(6):443–452. doi: 10.1038/nrn848.

- 11. Schurmann M, Caetano G, Jousmaki V, Hari R. Hands help hearing: facilitatory audiotactile interaction at low sound-intensity levels. J Acoust Soc Am. 2004;115(2):830–832. doi: 10.1121/1.1639909.

- 12. Jousmaki V, Hari R. Parchment-skin illusion: sound-biased touch. Curr Biol. 1998;8(6):R190. doi: 10.1016/s0960-9822(98)70120-4.

- 13. Finney EM, Clementz BA, Hickok G, Dobkins KR. Visual stimuli activate auditory cortex in deaf subjects: evidence from MEG. Neuroreport. 2003;14(11):1425–1427. doi: 10.1097/00001756-200308060-00004.

- 14. Knudsen EI. Sensitive periods in the development of the brain and behavior. J Cogn Neurosci. 2004;16(8):1412–1425. doi: 10.1162/0898929042304796.

- 15. Boothroyd A, Cawkwell S. Vibrotactile thresholds in pure tone audiometry. Acta Otolaryngol. 1970;69(6):381–387. doi: 10.3109/00016487009123382.

- 16. Erber NP. Speech perception by profoundly hearing-impaired children. J Speech Hear Disord. 1979;44(3):255–270. doi: 10.1044/jshd.4403.255.

- 17. Levanen S, Jousmaki V, Hari R. Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr Biol. 1998;8(15):869–872. doi: 10.1016/s0960-9822(07)00348-x.

- 18. Hickok G, Poeppel D, Clark K, Buxton RB, Rowley HA, Roberts TPL. Sensory mapping in a congenitally deaf subject: MEG and fMRI studies of cross-modal non-plasticity. Hum Brain Mapp. 1997;5(6):437–444. doi: 10.1002/(SICI)1097-0193(1997)5:6<437::AID-HBM4>3.0.CO;2-4.

- 19. Levanen S, Hamdorf D. Feeling vibrations: enhanced tactile sensitivity in congenitally deaf humans. Neurosci Lett. 2001;301(1):75–77. doi: 10.1016/s0304-3940(01)01597-x.

- 20. Bernstein LE, Tucker PE, Auer ET Jr. Potential perceptual bases for successful use of a vibrotactile speech perception aid. Scand J Psychol. 1998;39(3):181–186. doi: 10.1111/1467-9450.393076.

- 21. Bernstein LE, Schechter MB, Goldstein MH Jr. Child and adult vibrotactile thresholds for sinusoidal and pulsatile stimuli. J Acoust Soc Am. 1986;80(1):118–123. doi: 10.1121/1.394172.

- 22. Rothenberg M, Molitor RD. Encoding voice fundamental frequency into vibrotactile frequency. J Acoust Soc Am. 1979;66(4):1029–1038. doi: 10.1121/1.383322.

- 23. Singh M, Al-Dayeh L, Patel P, Kim T, Guclu C, Nalcioglu O. Correction for head movements in multi-slice EPI functional MRI. IEEE Trans Nucl Sci. 1998;45(4):2162–2167.

- 24. Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage. 2001;13(4):684–701. doi: 10.1006/nimg.2000.0715.

- 25. McFeely WJ Jr, Antonelli PJ, Rodriguez FJ, Holmes AE. Somatosensory phenomena after multichannel cochlear implantation in prelingually deaf adults. Am J Otol. 1998;19(4):467–471.

- 26. Eisenberg LS. Use of the cochlear implant by the prelingually deaf. Ann Otol Rhinol Laryngol Suppl. 1982;91(2 Pt 3):62–66.