Abstract

Markov chain Monte Carlo sampling methods often suffer from long correlation times. Consequently, these methods must be run for many steps to generate an independent sample. In this paper, a method is proposed to overcome this difficulty. The method utilizes information from rapidly equilibrating coarse Markov chains that sample marginal distributions of the full system. This is accomplished through exchanges between the full chain and the auxiliary coarse chains. Results of numerical tests on the bridge sampling and filtering/smoothing problems for a stochastic differential equation are presented.

Keywords: Markov chain Monte Carlo, renormalization, multi-grid, filtering, parameter estimation

To understand the behavior of a physical system, it is often necessary to generate samples from complicated high dimensional distributions. The usual tools for sampling from these distributions are Markov chain Monte Carlo (MCMC) methods by which one constructs a Markov chain whose trajectory averages converge to averages with respect to the distribution of interest. For some simple systems, it is possible to construct Markov chains with independent values at each step. In general, however, spatial correlations in the system of interest result in long correlation times in the Markov chain and hence slow convergence of the chain's trajectory averages. Here, a method is proposed to alleviate the difficulties caused by spatial correlations in high dimensional systems. The method, parallel marginalization, is tested on two stochastic differential equation conditional path sampling problems.

Parallel marginalization takes advantage of the shorter correlation lengths present in marginal distributions of the target density. Auxiliary Markov chains that sample approximate marginal distributions are evolved simultaneously with the Markov chain that samples the distribution of interest. By swapping their configurations, these auxiliary chains pass information between themselves and with the chain sampling the original distribution. As shown below, these swaps are made in a manner consistent with both the original distributions and the approximate marginal distributions. The numerical examples indicate that improvement in efficiency of parallel marginalization over standard MCMC techniques can be significant.

The design of efficient methods to approximate marginal distributions was addressed by Chorin (1) and Stinis (2). The use of Monte Carlo updates on coarse subsets of variables is not a new concept (see ref. 3 and the references therein). The method presented in ref. 3 does not use marginal distributions. However, attempts have been made previously to use marginal distributions to accelerate the convergence of MCMC (see refs. 4 and 5). In contrast to parallel marginalization, the methods proposed in refs. 4 and 5 do not preserve the distribution of the full system and therefore are not guaranteed to converge. The parallel construction used here is motivated by the parallel tempering method (see ref. 6), and allows efficient comparison of the auxiliary chains and the original chain. See refs. 6 and 7 for expositions of standard MCMC methods.

Parallel marginalization for problems in Euclidean state spaces is described in detail below. In the final sections, the conditional path sampling problem is described and numerical results are presented for the bridge sampling and smoothing/filtering problems.

Parallel Marginalization

For the purposes of the discussion in this section, we assume that appropriate approximate marginal distributions are available. As discussed in a later section, they may be provided by coarse models of the physical problem as in the examples below, or they may be calculated via the methods in refs. 1 and 2.

Assume that the d0 dimensional system of interest has a probability density, π0(x0), where x0 ∈ ℝd0. Suppose further that, by the Metropolis–Hastings or any other method (see ref. 6), we can construct a Markov chain, Y0n ∈ ℝd0, which has π0 as its stationary measure. That is, for two points x0, y0 ∈ ℝd0

$$\int T_0(x_0 \to y_0)\,\pi_0(x_0)\,dx_0 = \pi_0(y_0),$$

where T0(x0 → y0) is the probability density of a move to {Y0n+1 = y0} given that {Y0n = x0}. Here, n is the algorithmic step. Under appropriate conditions (see ref. 6), averages over a trajectory of {Y0n} will converge to averages over π0, i.e. for an objective function g(x0)

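$$\frac{1}{N}\sum_{n=0}^{N-1} g(Y_0^n) \to E_{\pi_0}[g] = \int g(x_0)\,\pi_0(x_0)\,dx_0 \quad \text{as } N \to \infty.$$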

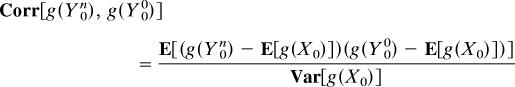

The size of the error in the above limit decreases as the rate of decay of the time autocorrelation

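$$\operatorname{corr}\left[g(Y_0^n),\, g(Y_0^0)\right] = \frac{E\left[\left(g(Y_0^n) - E_{\pi_0}[g]\right)\left(g(Y_0^0) - E_{\pi_0}[g]\right)\right]}{\operatorname{var}_{\pi_0}[g]}$$

increases. In this formula, $Y_0^0$ is assumed to be drawn from $\pi_0$.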
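
The link between autocorrelation decay and sampling efficiency can be checked numerically. The following is a minimal sketch, not taken from the paper: it estimates the empirical autocorrelation of a scalar chain and compares two toy AR(1) chains that share the same stationary law but mix at different rates. The function names and the AR(1) stand-in are assumptions introduced for illustration only.

```python
import numpy as np

def autocorrelation(samples, max_lag):
    """Empirical autocorrelation of a scalar chain g(Y^0), g(Y^1), ... at lags 0..max_lag."""
    x = np.asarray(samples, dtype=float)
    x = x - x.mean()
    n = len(x)
    var = np.dot(x, x) / n
    return np.array([np.dot(x[: n - k], x[k:]) / (n * var) for k in range(max_lag + 1)])

def ar1_chain(rho, n_steps, seed=0):
    """Toy stationary chain with N(0, 1) invariant law and autocorrelation rho**k.

    This is a hypothetical stand-in for an MCMC trajectory, not the paper's sampler.
    """
    rng = np.random.default_rng(seed)
    y = np.empty(n_steps)
    y[0] = rng.standard_normal()
    noise = rng.standard_normal(n_steps) * np.sqrt(1.0 - rho**2)
    for n in range(1, n_steps):
        y[n] = rho * y[n - 1] + noise[n]
    return y

# A slowly mixing chain keeps a large autocorrelation at lag 5; a fast one does not.
acf_slow = autocorrelation(ar1_chain(0.95, 20000), 20)
acf_fast = autocorrelation(ar1_chain(0.20, 20000), 20)
print(acf_slow[5], acf_fast[5])
```

Both chains target the same distribution, so any difference in the accuracy of their trajectory averages comes from the correlation between successive samples.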

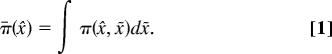

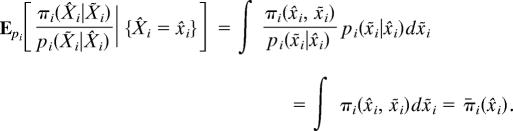

It is well known that judicious elimination of variables by renormalization can reduce long range spatial correlations (for example, see refs. 8 and 9). The variables are removed by averaging out their effects on the full distribution. If the original density is $\pi(\hat x, \tilde x)$ and we wish to remove the $\tilde x$ variables, the distribution of the remaining $\hat x$ variables is given by the marginal density (see refs. 1 and 6)

$$\bar\pi(\hat x) = \int \pi(\hat x, \tilde x)\,d\tilde x. \tag{1}$$

The full distribution can be factored as

$$\pi(\hat x, \tilde x) = \bar\pi(\hat x)\,\pi(\tilde x \mid \hat x),$$

where π(x̃|x̂) is the conditional density of x̃ given x̂. Because they exhibit shorter correlation lengths, the marginal distributions are useful in the acceleration of MCMC methods.

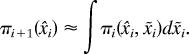

With this in mind, we consider a collection of lower dimensional Markov chains $Y_i^n \in \mathbb{R}^{d_i}$, which have stationary distributions $\pi_i(x_i)$, where $d_0 > \cdots > d_L$. For each $i \le L$, let $T_i$ be the transition probability density of $Y_i^n$, i.e., $T_i(x_i \to y_i)$ is the probability density of $\{Y_i^{n+1} = y_i\}$ given that $\{Y_i^n = x_i\}$. The $\{\pi_i\}$ are approximate marginal distributions. For example, divide the $x_i$ variables into two subsets, $\hat x_i \in \mathbb{R}^{d_{i+1}}$ and $\tilde x_i \in \mathbb{R}^{d_i - d_{i+1}}$, so that $x_i = (\hat x_i, \tilde x_i)$. The $\tilde x_i$ variables represent the variables of $x_i$ that are removed by marginalization, i.e.,

$$\pi_{i+1}(\hat x_i) \approx \bar\pi_i(\hat x_i) = \int \pi_i(\hat x_i, \tilde x_i)\,d\tilde x_i.$$

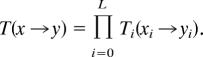

After arranging these chains in parallel, we have the larger process

$$Y^n = (Y_0^n, \ldots, Y_L^n) \in \mathbb{R}^{d_0} \times \cdots \times \mathbb{R}^{d_L}.$$

The probability density of a move to {Yn+1 = y} given that {Yn = x} for x, y ∈ ℝd0 × ⋯ × ℝdL is given by

$$T(x \to y) = \prod_{i=0}^{L} T_i(x_i \to y_i). \tag{2}$$

Because

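$$\int T(x \to y) \prod_{i=0}^{L} \pi_i(x_i)\,dx = \prod_{i=0}^{L} \pi_i(y_i),$$

the stationary distribution of $Y^n$ is

$$\Pi(x_0, \ldots, x_L) = \pi_0(x_0) \cdots \pi_L(x_L).$$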

The next step in the construction is to allow interactions between the chains {Yin} and to thereby pass information from the rapidly equilibrating chains on the lower dimensional spaces (large i) down to the chain on the original space (i = 0). This is accomplished by swap moves. In a swap move between levels i and i + 1, we take a di+1 dimensional subset, x̂i, of the xi variables and exchange them with the xi+1 variables. The remaining di − di+1 x̃i variables are resampled from the conditional distribution πi (x̃i|xi+1). For the full chain, this swap takes the form of a move from {Yn = x} to {Yn+1 = y} where

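$$x = (\ldots, \hat x_i, \tilde x_i, x_{i+1}, \ldots)$$

and

$$y = (\ldots, x_{i+1}, \tilde y_i, \hat x_i, \ldots).$$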

The ellipses represent components of Yn that remain unchanged in the transition and ỹi is drawn from πi (x̃i|xi+1).

If these swaps are undertaken unconditionally, the resulting chain will equilibrate rapidly, but will not, in general, preserve the product distribution Π. To remedy this, we introduce the swap acceptance probability

$$A_i = \min\left\{1,\ \frac{\bar\pi_i(x_{i+1})\,\pi_{i+1}(\hat x_i)}{\bar\pi_i(\hat x_i)\,\pi_{i+1}(x_{i+1})}\right\}. \tag{3}$$

In this formula, π̄i is the function on ℝdi+1 resulting from marginalization of πi as in Eq. 1. Given that {Yn = x}, the probability density of {Yn+1 = y}, after the proposal and either acceptance with probability Ai or rejection with probability 1 − Ai, of a swap move, is given by

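$$S_i(x \to y) = (1 - A_i)\,\delta(y - x) + A_i\,\pi_i(\tilde y_i \mid x_{i+1})\,\delta\big((\hat y_i, y_{i+1}) - (x_{i+1}, \hat x_i)\big) \prod_{j \notin \{i,\, i+1\}} \delta(y_j - x_j)$$

for $x, y \in \mathbb{R}^{d_0} \times \cdots \times \mathbb{R}^{d_L}$. δ is the Dirac delta function.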
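
As an illustration of the swap acceptance probability, the sketch below evaluates the ratio of marginals and next-level densities in log space for a scalar toy problem. It assumes the acceptance probability has the Metropolis–Hastings form min{1, π̄i(xi+1) πi+1(x̂i) / [π̄i(x̂i) πi+1(xi+1)]}, which is the ratio implied by proposing ỹi from πi(·|xi+1). The Gaussian densities and all function names are hypothetical choices made only for this example.

```python
import math

def log_normal_pdf(x, mean, var):
    """Log density of a one dimensional Gaussian."""
    return -0.5 * math.log(2.0 * math.pi * var) - 0.5 * (x - mean) ** 2 / var

def swap_acceptance(log_marginal_i, log_pi_next, x_hat_i, x_next):
    """Assumed form: min{1, marg_i(x_next) pi_next(x_hat_i) / (marg_i(x_hat_i) pi_next(x_next))}."""
    log_ratio = (log_marginal_i(x_next) + log_pi_next(x_hat_i)
                 - log_marginal_i(x_hat_i) - log_pi_next(x_next))
    return math.exp(min(0.0, log_ratio))

# Hypothetical exact marginal of level i: N(0, 1).
log_marginal = lambda x: log_normal_pdf(x, 0.0, 1.0)
# Level i + 1 density: either the exact marginal or a deliberately too-wide approximation.
log_exact_next = lambda x: log_normal_pdf(x, 0.0, 1.0)
log_wide_next = lambda x: log_normal_pdf(x, 0.0, 1.5)

a_exact = swap_acceptance(log_marginal, log_exact_next, 0.3, 2.0)   # exact marginal: always accept
a_forward = swap_acceptance(log_marginal, log_wide_next, 0.3, 2.0)  # approximate marginal
a_reverse = swap_acceptance(log_marginal, log_wide_next, 2.0, 0.3)  # the reverse swap
print(a_exact, a_forward, a_reverse)
```

When the level i + 1 density equals the exact marginal, the ratio is identically 1 and every swap is accepted. With an approximate marginal, one direction of any given swap is accepted with probability 1 and the other with probability less than 1, as in any Metropolis-type rule.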

We have the following lemma.

Lemma 1.

The transition probabilities $S_i$ satisfy the detailed balance condition for the measure Π, i.e.,

$$\Pi(x)\,S_i(x \to y) = \Pi(y)\,S_i(y \to x),$$

where x, y ∈ ℝd0 × ⋯ × ℝdL.

The detailed balance condition stipulates that the probability of observing a transition x → y is equal to that of observing a transition y → x and guarantees that the resulting Markov chain preserves the distribution ∏. Therefore, under general conditions, averages over a trajectory of {Yn} will converge to averages over ∏. Because

$$\int \Pi(x_0, x_1, \ldots, x_L)\,dx_1 \cdots dx_L = \pi_0(x_0),$$

we can calculate averages over π0 by taking averages over the trajectories of the first d0 components of Yn.

“Exact” Approximation of Acceptance Probability

Note that formula 3 for Ai requires the evaluation of π̄i at the points x̂i, xi+1 ∈ ℝdi+1. Although the approximation of π̄i by functions on ℝdi+1 is in general a very difficult problem, its evaluation at a single point is often not terribly demanding. In fact, in many cases, including the examples in this paper, the x̂i variables can be chosen so that the remaining x̃i variables are conditionally independent given x̂i.

Despite these mitigating factors, the requirement that we evaluate π̄i before we accept any swap is a little onerous. Fortunately, and somewhat surprisingly, this requirement is not necessary. In fact, standard strategies for approximating the point values of the marginals yield Markov chains that themselves preserve the target measure. Thus, even a poor estimate of the ratio appearing in Eq. 3 can give rise to a method that is exact in the sense that the resulting Markov chain will asymptotically sample the target measure.

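To illustrate this point, we consider the following example of a swap move. Assume that the current position of the chain is $\{Y^n = x\}$ where

$$x = (\ldots, \hat x_i, \tilde x_i, x_{i+1}, \ldots).$$

The following steps will result in either $\{Y^{n+1} = x\}$ or $\{Y^{n+1} = y\}$ where

$$y = (\ldots, x_{i+1}, \tilde y_i, \hat x_i, \ldots)$$

and $\tilde y_i \in \mathbb{R}^{d_i - d_{i+1}}$.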

Let v0 = x̃i and let vj ∈ ℝdi−di+1 for j = 1, …, M − 1 be independent samples from pi(·|x̂i), where pi(·|x̂i) is a reference density conditioned by x̂i. For example, pi(·|x̂i) could be a Gaussian approximation of πi(x̃i|x̂i). How pi is chosen depends on the problem at hand (see numerical examples below). In general, pi(·|x̂i) should be easily evaluated and independently sampled, and it should “cover” πi(·|x̂i) in the sense that areas of ℝdi where πi(·|x̂i) is not negligible should be contained in areas where pi(·|x̂i) is not negligible.

Let uj ∈ ℝdi−di+1 for j = 0, …, M − 1 be independent random variables sampled from pi(·|xi+1) (recall that we are considering a swap of x̂i and xi+1, which live in the same space). Note, that the {uj} variables depend on xi+1, whereas the {vj} variables depend on x̂i.

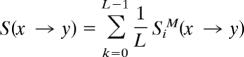

The transition probability density for the above swap move from x → y for x, y ∈ ℝd0 × ⋯ × ℝdL is given by

where

|

and δ is again the Dirac delta function. In other words, SiM dictates that the Markov chain accepts the swap with probability R and rejects it with probability 1 − R.
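
The swap move described above can be sketched in code. The following is an illustrative sketch under stated assumptions, not the author's implementation: it assumes importance weights of the form w = πi / pi for both the {vj} and {uj} samples, selection of ỹi among the {uj} with probability proportional to its weight, and acceptance with probability min{1, πi+1(x̂i) Σ wuj / [πi+1(xi+1) Σ wvj]}, in the spirit of the multiple-try Metropolis method. All function and argument names are hypothetical.

```python
import numpy as np

def log_sum_exp(a):
    """Numerically stable log of a sum of exponentials."""
    m = np.max(a)
    return m + np.log(np.sum(np.exp(a - m)))

def approximate_swap(x_hat, x_tilde, x_next, log_pi_i, log_pi_next,
                     sample_p, log_p, M, rng):
    """One multiple-try style swap between levels i and i + 1.

    Returns (new_x_hat, new_x_tilde, new_x_next, accepted).
    """
    # v_0 is the current x_tilde; v_1, ..., v_{M-1} are drawn from p_i(.|x_hat).
    v = [x_tilde] + [sample_p(x_hat, rng) for _ in range(M - 1)]
    # u_0, ..., u_{M-1} are drawn from p_i(.|x_next).
    u = [sample_p(x_next, rng) for _ in range(M)]
    # Assumed weights w = pi_i / p_i, kept in log form (constants in p_i cancel).
    log_wv = np.array([log_pi_i(x_hat, vj) - log_p(vj, x_hat) for vj in v])
    log_wu = np.array([log_pi_i(x_next, uj) - log_p(uj, x_next) for uj in u])
    # Assumed selection rule: choose y_tilde among the u_j proportionally to its weight.
    probs = np.exp(log_wu - log_sum_exp(log_wu))
    probs = probs / probs.sum()
    j_star = rng.choice(M, p=probs)
    # Assumed acceptance ratio built from the two weight sums.
    log_ratio = (log_pi_next(x_hat) + log_sum_exp(log_wu)
                 - log_pi_next(x_next) - log_sum_exp(log_wv))
    if np.log(rng.random()) < min(0.0, log_ratio):
        return x_next, u[j_star], x_hat, True
    return x_hat, x_tilde, x_next, False

# Hypothetical toy problem: the reference density equals the exact conditional and the
# level i + 1 density equals the exact marginal, so every swap should be accepted.
def log_pi_i(x_hat, x_tilde):
    return -0.5 * x_hat**2 - 0.5 * (x_tilde - 0.5 * x_hat) ** 2

def log_pi_next(x):
    return -0.5 * x**2

def sample_p(x_hat, rng):
    return 0.5 * x_hat + rng.standard_normal()

def log_p(x_tilde, x_hat):
    return -0.5 * (x_tilde - 0.5 * x_hat) ** 2

rng = np.random.default_rng(0)
new_hat, new_tilde, new_next, accepted = approximate_swap(
    0.3, -1.2, 2.0, log_pi_i, log_pi_next, sample_p, log_p, M=4, rng=rng)
print(new_hat, new_next, accepted)
```

Working with log weights avoids underflow when πi is a high dimensional path density. In this toy case all weights are equal, so the choice of M does not affect the acceptance probability; in general a larger M trades extra density evaluations for a better estimate of the ratio of marginals.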

Although the preceding swap move corresponds to a method for approximating the ratio

$$\frac{\bar\pi_i(x_{i+1})}{\bar\pi_i(\hat x_i)}$$

appearing in the formula for Ai above, it also has some similarities with the multiple-try Metropolis method presented in ref. 10, which uses multiple suggestion samples to improve acceptance rates of standard MCMC methods. The following lemma is suggested by results in ref. 10.

Lemma 2.

The transition probabilities SiM satisfy the detailed balance condition for the measure ∏.

As before, the detailed balance condition guarantees that averages over trajectories of the first d0 dimensions of Yn will converge to averages over π0.

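The $A_i^M$ contain an approximation to the ratio of marginals in 3,

$$\frac{\sum_{j=0}^{M-1} w_{u_j}}{\sum_{j=0}^{M-1} w_{v_j}} \to \frac{\bar\pi_i(x_{i+1})}{\bar\pi_i(\hat x_i)} \quad \text{as } M \to \infty,$$

where $E_{p_i}$ denotes expectation with respect to the density $p_i$. When $0 < E_{p_i}[w_{v_j} \mid \hat x_i] < \infty$, the convergence above follows from the strong law of large numbers and the fact that

$$E_{p_i}[w_{v_j} \mid \hat x_i] = \int \frac{\pi_i(\hat x_i, \tilde x_i)}{p_i(\tilde x_i \mid \hat x_i)}\,p_i(\tilde x_i \mid \hat x_i)\,d\tilde x_i = \bar\pi_i(\hat x_i).$$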

For small values of M in 4, calculation of the swap acceptance probabilities is very cheap. However, higher values of M may improve the acceptance rates. For example, if the {πi}i>0 are exact marginals of π0, then Ai ≡ 1, whereas AiM ≤ 1. Results similar to Lemma 2 hold when more general approximations replace the one given above; for example, when the {uj} and {vj} are generated by a Metropolis–Hastings rule. In practice, one has to balance the speed of evaluating AiM for small M with the possible higher acceptance rates for M large.

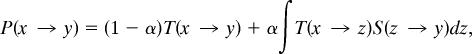

It is easy to see that a Markov chain that evolves only by swap moves cannot sample all configurations. These swap moves must therefore be used in conjunction with a transition rule that can reach any region of space, such as T from expression 2. More precisely, T should be Π-irreducible and aperiodic (see ref. 11). The transition rule for parallel marginalization is

|

where

|

and α ∈ [0, 1) is the probability that a swap move occurs. P dictates that, with probability α, the chain attempts a swap move between levels I and I + 1, where I is a random variable chosen uniformly from {0, …, L − 1}. Next, each level of the chain evolves independently according to the {Ti}. With probability 1 − α, the chain does not attempt a swap move, but does evolve each level. The next result follows trivially from Lemma 2 and guarantees the invariance of ∏ under evolution by P.

Theorem 1.

The transition probability P satisfies the detailed balance condition for the measure Π, i.e.,

$$\Pi(x)\,P(x \to y) = \Pi(y)\,P(y \to x),$$

where $x, y \in \mathbb{R}^{d_0} \times \cdots \times \mathbb{R}^{d_L}$.

Thus, by combining standard MCMC steps on each component governed by the transition probability T, with swap steps between the components governed by S, an MCMC method results that not only uses information from rapidly equilibrating lower dimensional chains, but is also convergent.
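
The combined update can be sketched as a short driver loop. The code below is a minimal sketch under assumptions, not the paper's implementation: with probability α it attempts a swap between a uniformly chosen pair of adjacent levels, and then it evolves every level with its own move. The two-level Gaussian toy problem, the random-walk Metropolis level moves, and all names are hypothetical. In the toy problem the level 1 density is the exact marginal of level 0, so the swap is always accepted.

```python
import numpy as np

def metropolis(log_density, step):
    """Return a random-walk Metropolis move for an array-valued state."""
    def move(x, rng):
        proposal = x + step * rng.standard_normal(x.shape)
        if np.log(rng.random()) < log_density(proposal) - log_density(x):
            return proposal
        return x
    return move

def pm_step(state, level_moves, swap_moves, alpha, rng):
    """One update: optional swap between levels I and I + 1, then a move on every level."""
    state = list(state)
    if rng.random() < alpha:
        i = rng.integers(len(state) - 1)   # I uniform on {0, ..., L - 1}
        state = swap_moves[i](state, rng)
    return [move(x, rng) for move, x in zip(level_moves, state)]

# Hypothetical toy problem: level 0 holds (x_hat, x_tilde), level 1 holds x_hat only.
def log_pi0(x):
    return -0.5 * x[0] ** 2 - 0.5 * (x[1] - 0.5 * x[0]) ** 2

def log_pi1(x):
    return -0.5 * x[0] ** 2

def exact_swap(state, rng):
    """Swap with acceptance probability 1 (exact marginal); x_tilde is resampled."""
    x0, x1 = state
    y_tilde = 0.5 * x1[0] + rng.standard_normal()
    return [np.array([x1[0], y_tilde]), np.array([x0[0]])]

rng = np.random.default_rng(1)
state = [np.zeros(2), np.zeros(1)]
moves = [metropolis(log_pi0, 0.5), metropolis(log_pi1, 1.0)]
trace = np.empty(20000)
for n in range(len(trace)):
    state = pm_step(state, moves, [exact_swap], alpha=0.5, rng=rng)
    trace[n] = state[0][0]   # first component of the level 0 chain
print(trace.mean(), trace.var())
```

Averages over the level 0 components of the trajectory estimate averages over π0; here the recorded component should have mean near 0 and variance near 1.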

Numerical Example 1: Bridge Path Sampling

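In the bridge path sampling problem, we wish to approximate conditional expectations of the form

$$E\left[g(Z_s) \mid Z_0 = z^-,\ Z_T = z^+\right],$$

where $s \in (0, T)$ and $\{Z_t\}$ is the real valued process given by the solution of the stochastic differential equation

$$dZ_t = f(Z_t)\,dt + \sigma(Z_t)\,dW_t. \tag{5}$$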

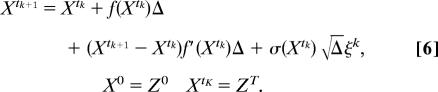

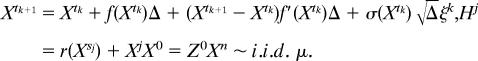

g, f, and σ are real valued functions of ℝ. Of course, we can also consider functions g of more than one time. This problem arises, for example, in financial volatility estimation. Because, in general, we cannot sample paths of 5, we must first approximate {Zt} by a discrete process for which the path density is readily available. Let t0 = 0, t1 = T/K, …, tK = T be a mesh on which we wish to calculate path averages. One such approximate process is given by the linearly implicit Euler scheme (a balanced implicit method, see ref. 12),

|

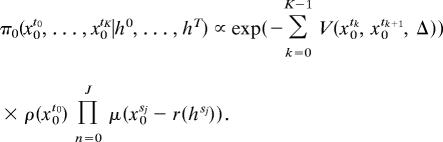

The $\{\xi_k\}$ are independent Gaussian random variables with mean 0 and variance 1, and $\Delta = T/K$. K is assumed to be a power of 2. The choice of this scheme over the Euler scheme (see ref. 13) is due to its favorable stability properties, as explained later. Without the condition $X_{t_K} = Z_T$ above, generating samples of $(X_0, \ldots, X_{t_K})$ is a relatively straightforward endeavor. One simply generates a sample of $Z_0$, then evolves the system with this initial condition. However, the presence of information about $\{Z_t\}_{t>0}$ complicates the task. In general, a sampling method that requires only knowledge of a function proportional to the conditional density of $(X_{t_1}, \ldots, X_{t_{K-1}})$ must be applied. The approximate path density associated with discretization 6 is

|

where

At this point, we wish to apply the parallel marginalization sampling procedure to the density π0. However, as indicated above, a prerequisite for the use of parallel marginalization is the ability to estimate marginal densities. In some important problems, homogeneities in the underlying system yield simplifications in the calculation of these densities by the methods in refs. 1 and 2. These calculations can be carried out before implementation of parallel marginalization, or they can be integrated into the sampling procedure.

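In some cases, the numerical estimation of the $\{\pi_i\}_{i>0}$ can be completely avoided. The examples presented here are two such cases. Let $S_i = \{0, 2^i, 2(2^i), 3(2^i), \ldots, K\}$. Decompose $S_i$ as $\hat S_i \sqcup \tilde S_i$, where

$$\hat S_i = \{0, 2(2^i), 4(2^i), \ldots, K\}$$

and

$$\tilde S_i = \{2^i, 3(2^i), 5(2^i), \ldots, K - 2^i\}.$$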

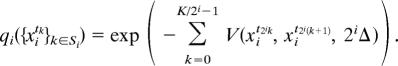

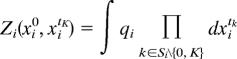

In the notation of the previous sections, xi = (x̂i, x̃i), where x̂i = {xitk}k∈Ŝi\{0,K} and x̃i = {xitk}k∈S̃i. In words, the hat and tilde variables represent alternating time slices of the path. For all i, fix xi0 = z− and xitK = z+. We choose the approximate marginal densities

where for each i, qi is defined by successive coarsenings of 6. That is,

|

Because $\pi_i$ will be sampled using a Metropolis–Hastings method with $x_0$ and $x_{t_K}$ fixed, knowledge of the normalization constants

|

is unnecessary.
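
The splitting of the level i time mesh into hat and tilde indices can be made concrete with a short sketch. It assumes that Si consists of the multiples of 2^i between 0 and K, that the hat indices are every second element of Si (so that they coincide with Si+1), and that the tilde indices are the remaining alternating time slices. The function name is hypothetical.

```python
def level_indices(i, K):
    """Return (S_i, S_hat_i, S_tilde_i) for a mesh of K intervals at level i."""
    if K % 2 ** (i + 1) != 0:
        raise ValueError("K must be a multiple of 2**(i + 1)")
    step = 2 ** i
    S = list(range(0, K + 1, step))   # all mesh indices kept at level i
    S_hat = S[0::2]                   # indices that survive at level i + 1
    S_tilde = S[1::2]                 # indices removed by marginalization
    return S, S_hat, S_tilde

S1, S1_hat, S1_tilde = level_indices(1, 16)
S2, _, _ = level_indices(2, 16)
print(S1_hat, S1_tilde)
```

With K = 16 and i = 1, the hat indices are 0, 4, 8, 12, 16, which are exactly the level 2 mesh indices, and the tilde indices are 2, 6, 10, 14.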

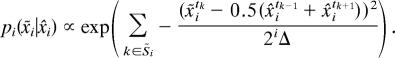

Note from 7 that, conditioned on the values of $x_{t_{k-1}}$ and $x_{t_{k+1}}$, the variance of $x_{t_k}$ is of order Δ. Thus, any perturbation of $x_{t_k}$ that leaves $x_{t_j}$ fixed for j ≠ k and is compatible with joint distribution 7 must be of the order $\sqrt{\Delta}$. This finding suggests that distributions defined by coarser discretizations of 7 will allow larger perturbations, and consequently will be easier to sample. However, it is important to choose a discretization that remains stable for large values of Δ. For example, although the linearly implicit Euler method performs well in the experiments below, similar tests using the Euler method were less successful due to limitations on the largest allowable values of Δ.
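
The stability requirement can be illustrated with a sketch of a linearly implicit Euler step. This sketch assumes the common linearized form X_{k+1} = X_k + f(X_k)Δ + f′(X_k)(X_{k+1} − X_k)Δ + σ√Δ ξ_k, solved for X_{k+1}, which may differ in detail from discretization 6. The double-well drift f(x) = x − x³ and the constant σ = 1 are hypothetical choices made for illustration.

```python
import numpy as np

def drift(x):
    """Hypothetical double-well drift f(x) = x - x^3."""
    return x - x**3

def drift_prime(x):
    """Derivative of the hypothetical drift."""
    return 1.0 - 3.0 * x**2

def linearly_implicit_euler_step(x, dt, xi, sigma=1.0):
    """Solve x_new = x + f(x) dt + f'(x) (x_new - x) dt + sigma sqrt(dt) xi for x_new."""
    increment = drift(x) * dt + sigma * np.sqrt(dt) * xi
    return x + increment / (1.0 - dt * drift_prime(x))

def simulate(step, x0, dt, n_steps, rng):
    """Generate an unconditioned path of n_steps increments starting from x0."""
    path = np.empty(n_steps + 1)
    path[0] = x0
    for k in range(n_steps):
        path[k + 1] = step(path[k], dt, rng.standard_normal())
    return path

# A coarse step of 0.5 is far larger than a fine-level step such as 2**-10.
rng = np.random.default_rng(0)
coarse_path = simulate(linearly_implicit_euler_step, 0.0, 0.5, 2000, rng)
print(np.abs(coarse_path).max())
```

For this drift the denominator 1 − Δf′(x) = 1 − Δ + 3Δx² stays positive whenever Δ < 1, and for large |x| the update contracts toward the origin, so the coarse path remains bounded even with a large step.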

In this numerical example, bridge paths are sampled between time 0 and time 10 for a diffusion in a double-well potential

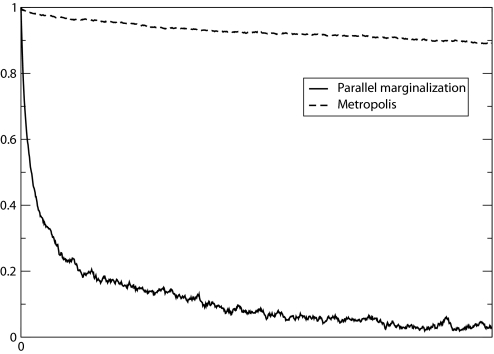

The left and right end points are chosen as $z^- = z^+ = 0$, and $\Delta = 2^{-10}$. $Y_i^n \in \mathbb{R}^{10/(2^i\Delta)+1}$ is the ith level of the parallel marginalization Markov chain at algorithmic time n. There are 10 chains (L = 9 in expression 2). The observed swap acceptance rates are reported in Table 1. Let $Y_{\mathrm{mid}}^n \in \mathbb{R}$ denote the midpoint of the path defined by $Y_0^n$ (i.e., an approximate sample of the path at time 5). In Fig. 1, the autocorrelation of $Y_{\mathrm{mid}}^n$

is compared to that of a standard Metropolis–Hastings rule. In Fig. 1, the time scale of the autocorrelation for the Metropolis–Hastings method has been scaled by a factor of 1/10 to more than account for the extra computational time required per iteration of parallel marginalization. The relaxation time of the parallel chain is clearly reduced.

In these numerical examples, the algorithm in the previous section is applied with a slight simplification. First generate M independent Gaussian random paths $\{\zeta_j(t_k)\}_{k \in \tilde S_i}$ with independent components $\zeta_j(t_k)$ of mean 0 and variance $2^{i-1}\Delta$. For each j and $k \in \tilde S_i$, let

If in step 4, ỹi = uj*, then in step 1 we set v0 = x̃i and for each k ∈ S̃i

All other steps remain the same. This change yields a slightly faster though less generally applicable swap step that also preserves the density ∏. Note that this modification implies that the reference density pi is given by

|

For this problem, the choice of M in 4, the number of samples of {uj} and {vj}, seems to have little effect on the swap acceptance rates. In the numerical experiment M = i + 1 for swaps between levels i and i + 1.

Table 1.

Swap acceptance rates for bridge sampling and filtering/smoothing problems

| Levels* | 0/1 | 1/2 | 2/3 | 3/4 | 4/5 | 5/6 | 6/7 | 7/8 | 8/9 |
|---|---|---|---|---|---|---|---|---|---|
| BS† | 0.86 | 0.83 | 0.75 | 0.69 | 0.54 | 0.45 | 0.30 | 0.22 | 0.26 |
| FS‡ | 0.86 | 0.83 | 0.74 | 0.65 | 0.46 | 0.23 | 0.04 | NA | NA |

NA, not applicable.

*Swaps between levels i and i + 1.

†Bridge sampling problem.

‡Filtering/smoothing problem.

Fig. 1.

Autocorrelation of parallel marginalization and standard Metropolis methods for bridge sampling problem.

Numerical Example 2: Nonlinear Smoothing/Filtering

In the nonlinear smoothing and filtering problem, we wish to approximate conditional expectations of the form

where s ∈ (0, T) and the real valued processes {Zt} and {Hj} are given by the system

|

g, f, σ, and r are real valued functions of ℝ. The {Xj} are real valued independent random variables drawn from the density μ and are independent of the Brownian motion {Wt}. {sj} ⊂ {tj}, and 0 = s0 < s1 < ⋯ < sJ = T. The process Zt is a hidden signal, and the {Hj} are noisy observations.

Again, the system must first be discretized. The linearly implicit Euler scheme gives

|

The {ξk} are independent Gaussian random variables with mean 0 and variance 1, and Δ = T/K. The {ξk} are independent of the {Xj}. K is again assumed to be a power of 2.

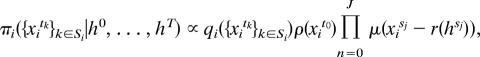

The approximate path measure for this problem is

|

The approximate marginals are chosen as

|

where V, qi, and Si are as defined in the previous section.

In this example, samples of the smoothed path are generated between time 0 and time 10 for the same diffusion in a double-well potential. The densities μ and ρ are chosen as

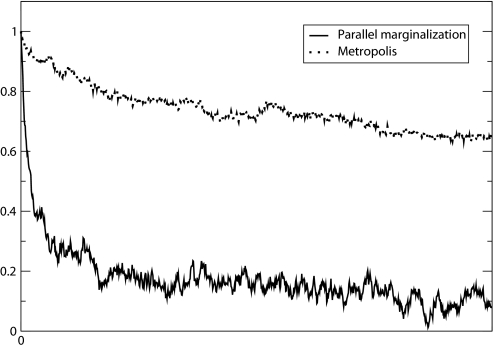

The observation times are s0 = 0, s1 = 1, …, s10 = 10 with Hj = −1 for j = 0, …, 5 and Hj = 1 for j = 6, …, 10. $\Delta = 2^{-10}$. There are eight chains (L = 7 in expression 2). The observed swap acceptance rates are reported in Table 1. Again, $Y_{\mathrm{mid}}^n \in \mathbb{R}$ denotes the midpoint of the path defined by $Y_0^n$ (i.e., an approximate sample of the path at time 5). In Fig. 2, the autocorrelation of $Y_{\mathrm{mid}}^n$ is compared to that of a standard Metropolis–Hastings rule. Fig. 2 has been adjusted as in the previous example. The relaxation time of the parallel chain is again clearly reduced. The algorithm is modified as in the previous example. For this problem, acceptable swap rates require a higher choice of M in 4 than needed in the bridge sampling problem. In this numerical experiment M = 2i for swaps between levels i and i + 1.

Fig. 2.

Autocorrelation of parallel marginalization and standard Metropolis methods for filtering and smoothing problem.

Conclusion

An MCMC method has been proposed and applied to two conditional path sampling problems for stochastic differential equations. Numerical results indicate that this method, parallel marginalization, can have a dramatically reduced equilibration time when compared to standard MCMC methods.

Note that parallel marginalization should not be viewed as a stand-alone method. Other acceleration techniques, such as hybrid Monte Carlo, can and should be implemented at each level within the parallel marginalization framework. As indicated by the smoothing problem, the acceptance probabilities at coarser levels can become small. The remedy for this is the development of more accurate approximate marginal distributions by, for example, the methods in refs. 1 and 2.

Acknowledgments

I thank Prof. A. Chorin for his guidance during this research, which was carried out during my Ph.D. studies at the University of California, Berkeley, and Dr. P. Okunev, Dr. P. Stinis, and the referees for their very helpful comments. This work was supported by the Director, Office of Science, Office of Advanced Scientific Computing Research, of the U.S. Department of Energy under Contract DE-AC03-76SF00098 and National Science Foundation Grant DMS0410110.

Abbreviation

- MCMC: Markov chain Monte Carlo.

Footnotes

The author declares no conflict of interest.

References

1. Chorin A. Multiscale Model Sim. 2003;1:105–118.
2. Stinis P. J Comput Phys. 2005;208:691–703.
3. Goodman J, Sokal A. Phys Rev D. 1989;40:2035–2071. doi: 10.1103/physrevd.40.2035.
4. Brandt A, Ron D. J Stat Phys. 2001;102:163–186.
5. Okunev P. Berkeley: Univ of California; 2005. Ph.D. thesis.
6. Liu J. Monte Carlo Strategies in Scientific Computing. Berlin: Springer; 2002.
7. Binder K, Heermann D. Monte Carlo Simulation in Statistical Physics. Berlin: Springer; 2002.
8. Binney J, Dowrick N, Fisher A, Newman M. The Theory of Critical Phenomena: An Introduction to the Renormalization Group. New York: Oxford Univ Press; 1992.
9. Kadanoff L. Physics. 1966;2:263.
10. Liu J, Liang F, Wong W. J Am Stat Assoc. 2000;95:121–134.
11. Tierney L. Ann Stat. 1994;22:1701–1728.
12. Milstein G, Platen E, Schurz H. SIAM J Numer Anal. 1998;35:1010–1019.
13. Kloeden P, Platen E. Numerical Solution of Stochastic Differential Equations. Berlin: Springer; 1992.