Abstract

Objective

To measure how a change in gatekeeping model affects utilization of specialty mental health services.

Data Sources/Study Setting

Secondary data from health insurance claims for services during 1996–1999. The setting is a managed care organization that changed the gatekeeping model in one of its divisions, from in-person evaluation to the use of a call center.

Study Design

We evaluate the impact of the change in gatekeeping model by comparing utilization during the 2 years before and 2 years after the change, both in the affected division and in another division where gatekeeping model did not change. The design is thus a controlled quasi-experimental one. Subjects were not randomized. Key dependent variables are whether each individual had any specialty mental health visits in a year; the number of visits; and the proportion of users exceeding eight visits in a year. Key explanatory variables include demographic variables and indicators for patient diagnoses and their intervention status (time-period, study group).

Data Collection/Extraction Methods

Claims data were aggregated to create analytic files with one record per member per year, with variables reporting demographic characteristics and mental health service use.

Principal Findings

After controlling for secular trends at the other division, the division that changed its gatekeeping model eventually experienced an increase of 0.5 percentage points in the proportion of enrollees receiving specialty mental health treatment. Similarly, there was an increase of about 0.6 annual visits per user, concentrated at the low end of the distribution. These changes occurred only in the second year after the gatekeeping changes.

Conclusions

The results of this study suggest that the gatekeeping changes did lead to increases in utilization of mental health care, as hypothesized. At the same time, the magnitude of the increase in access and mean number of visits that we found was relatively modest. This suggests that while the change from face-to-face specialty gatekeeping to call-center intake does increase utilization, it is unlikely to overwhelm a system with new demand or create huge cost increases.

Keywords: Mental health, gatekeeping, utilization management

Managed care organizations (MCOs) use a variety of strategies to influence the type, quantity, costs, and quality of health care that their enrollees use. Utilization management (UM) is one major approach that includes gatekeeping arrangements, preauthorization, and subsequent review of care. The application of UM to mental health care has proven controversial. Its potential benefits include improved appropriateness and cost-effectiveness of care; however, there are concerns about the basis on which decisions are made, the qualifications of reviewers, impact on confidentiality, and disruption of the provider–patient relationship (Tischler 1990; Zusman 1990; Miller 1996; Hennessy and Green-Hennessy 1997).

This article evaluates the impact of two different models of UM on the use of outpatient specialty mental health services within an MCO. In the first model, patients must be evaluated in person by a “mental health coordinator” before entering outpatient specialty treatment. In the second model, patients need only telephone a call center to receive routine preauthorization for the first eight outpatient visits to a specialty provider. We evaluate the impact of moving from the first to the second model, using data from a natural experiment that occurred in one division of Harvard Pilgrim Health Care (HPHC), a large mature MCO in New England. Our hypothesis is that the change in UM model improved direct access to specialty mental health, and should therefore have resulted in increased utilization of care. The hypothesis is tested by applying a quasi-experimental research design to administrative data from HPHC before and after the change, including comparison with another division that experienced no change in gatekeeping.

BACKGROUND

Much of the empirical research on the effects of UM of mental health care focuses on inpatient services. Most studies have found that some UM strategies are associated with lower costs and/or quantities of treatment (e.g., Gotowka and Smith 1991; Frank and Brookmeyer 1995; Wickizer and Lessler 1998; reviews by Hodgkin 1992 and Mechanic, Schlesinger, and McAlpine 1995; Wickizer, Lessler, and Travis 1996). A few studies have found only weak or no effects (e.g., Dickey and Azeni 1992; Eisen et al. 1995) or that effects are short-term (Frank and Brookmeyer 1995). Some findings have raised concerns about negative impacts on quality of care (e.g., increased rapid readmission rates reported by Wickizer and Lessler 1998).

Only a few published studies focus on the effects of UM for outpatient mental health care. One study of UM procedures for initial and continuing care in outpatient behavioral health services in an HMO randomly assigned patients to receive different allotments of initially approved visits and different procedures for extending care: the experimental groups received six, 10, or 19 visits with automatic approval for extension up to 19 visits, and a control group received six visits and case-by-case review of treatment extension requests (Howard 1998). All experimental groups had significantly greater treatment lengths than the control group, and the groups with six and 10 initial visits used significantly fewer total sessions than the 19-visit group. These results suggest that both actual restrictions and simply the expectation of closer monitoring (“sentinel effect”) affected outpatient utilization.

Liu, Sturm, and Cuffel (2000) report similar results from their study of preauthorization of outpatient mental health services in a specialty managed behavioral health care organization. Their study examined claims and authorization data for two groups of patients whose benefits—provided under employer carve-outs—were similar except in the number of mental health sessions initially authorized (either five or 10). Controlling for patient characteristics, patients in the five-visit group were about three times more likely to end treatment at the fifth visit than those in the 10-visit group. This was true despite the fact that approval of request for continued treatment was “almost guaranteed,” supporting the concept of sentinel effects of UM.

Mintz et al. (2004) examined the association between a wide range of UM techniques and psychiatrists' treatment plan modifications (changes in frequency or number of visits, or type of treatment). The data source was a national survey of psychiatrists, who completed patient logs. After adjusting for patient, setting, and psychiatrist characteristics, it was found that patients who were subject to any UM were more than twice as likely to have their treatment changed as patients who were not subject to UM. Specific techniques that were significantly associated with treatment change were limiting referrals to selected hospitals or providers, financial incentives to limit referrals, and formulary restrictions, but not utilization review. The study design did not allow determinations regarding quality of care, but the authors raise the possibility of negative impacts because of the reported downward pressure on visits and importance of medication choices.

Thus, while literature in this area is very sparse, the three previous studies suggest that UM approaches may have significant effects on outpatient mental health care. Given that managed care is now ubiquitous, it is important to understand more about how UM affects both initial and continuing access to care.

CONCEPTUAL FRAMEWORK

Traditional models of medical care have examined the role of physicians and patients in the decisions to initiate care and to continue in treatment, and typically assume that the patient has a greater role in the initial decision than in subsequent ones. The spread of UM requires adding a third party, and treatment can only occur if three parties give consent: the patient, the provider and the utilization manager. For specialty care, the role of the general physician's referral may also be important.

Landon, Wilson, and Cleary (1998) distinguish a variety of routes through which health plans seek to influence providers' behavior, including financial incentives, normative influences, effects on the structural characteristics of physician practices, and administrative strategies (of which they regard UM as predominant). Similarly, focusing on UM, one can distinguish various channels by which it influences providers' treatment decisions. One channel is the “network effect,” whereby providers comply because of fear of exclusion from the network if they deviate from plan guidelines or challenge reviewers' decisions (Ma and McGuire 1998). Schlesinger, Wynia, and Cummins (2000) found that psychiatrists feel significantly more at risk of exclusion from health plans than other specialists. A second channel is the “time-price,” which is the amount of time required to challenge reviewers' decisions. It seems probable that the higher the time-price (or “hassle factor”), the more likely providers are to comply with reviewers' initial decisions, even where they disagree. Grembowski et al. (1998) review a variety of network and plan characteristics that enhance the impact of UM by increasing the cost to the primary care physician of making referrals. It is reasonable to expect mental health providers to respond to changes in time-prices, in light of research showing that they respond to financial incentives such as the level of fees or the degree of risk (Harrow and Ellis 1992; Rosenthal 1999).

STUDY SETTING AND INTERVENTION

HPHC is a large, mature MCO based in New England, with approximately 900,000 members. At the time of our study, HPHC delivered mental health care “internally,” that is, through providers salaried or contracted by HPHC or its affiliated medical groups. HPHC was organized into different divisions corresponding to different HMO model types (e.g., staff, group) and geographical base. UM procedures differed across the various divisions.

Our focus is on two divisions that merged in January 1998 (the middle of our study period). Before 1998, the Medical Groups Division (MGD) included group-model and network-model delivery systems, with providers in group and smaller private practice settings. Specialty mental health care was delivered by contracted providers in private practice separate from the general medical practices. The Pilgrim Health Care Division contained providers who were formerly part of the Pilgrim independent practice association model HMO before it merged into HPHC in 1994. In Pilgrim as in MGD, specialty mental health care was delivered by contracted providers. As MGD and Pilgrim largely covered differing geographical areas, there was not much overlap between their networks of mental health practitioners. In neither of the divisions did providers limit their practice to HPHC patients.

In January 1998, the MGD and Pilgrim divisions were combined to form the Massachusetts Region. The merger brought important changes in UM practices for groups that had previously been part of the MGD, effective from January 1998 when they entered the new Massachusetts Region division. The changes had two main components.

First, the plan removed the requirement for assessment and preauthorization by a mental health coordinator located at the medical group. Before 1998, MGD enrollees had to be assessed in person by the mental health coordinator (a master's level clinician) before entering specialty treatment. This requirement was removed as part of the merger with the Pilgrim division. The new merged system required patients seeking specialty care to call a toll-free number, through which initial authorization was routinely provided without clinical assessment. This was implemented in January 1998 with the establishment of the call center. According to officials at HPHC, there was a transition period of approximately 6 months before it was operating smoothly.

Second, the plan eliminated UM of the first eight mental health visits. This change was made to achieve compliance with the 1996 Massachusetts mental health confidentiality law (chapter 8, Acts of 1996, Massachusetts). The law prohibited insurers from requiring detailed clinical information in order for patients to receive up to the state-mandated benefit of $500 per year (interpreted by HPHC as eight visits). The removal of UM for initial visits represented a substantial change at MGD, where mental health coordinators had been assessing and actively managing cases from the beginning of treatment. This implementation of the law at HPHC started in January 1998.

The two innovations responded to differing pressures, according to key informants. In the first case, the impetus was internal (harmonization of divisional practices after a merger), while in the second case it was external (compliance with new state legislation). Nonetheless, both innovations moved mental health management in the direction of expanding direct access to specialty care.

HYPOTHESES

In terms of the framework described earlier, the plan's abandonment of review for the first eight visits reduced the time–price to providers, potentially encouraging longer time in treatment (at least up to eight visits). Could the UM changes also have had a “network effect,” by reducing providers' fear of exclusion? In the present case it is less clear that the change in review procedures reduced fear of exclusion, as providers could still have reasonably feared exclusion from the network if they delivered episodes that were “too long.” So the most likely channel for an effect would be time–price reductions.

The abandonment of face-to-face evaluation seems unlikely to have had a direct effect on specialty providers' decision making. However, if patients found it burdensome to be evaluated by a clinician who would not end up treating them, then eliminating this practice would have increased the numbers willing to enter specialty treatment. Of course, it is possible that some patients may have found the evaluations helpful; one informant suggested they were intended to facilitate rather than impede entry into treatment.

Based on these points, we expected the following effects at MGD: an increase in the proportion of enrollees per year accessing specialty care, an increase in the average visits per user, and an increase in the proportion of patients receiving more than eight visits.

STUDY DESIGN

The change in access at MGD created a “natural experiment.” We can assess the impact of increasing direct access to specialty care by measuring how utilization changed for MGD enrollees. For Pilgrim division enrollees, on the other hand, the merger did not alter access. The Pilgrim enrollees could already obtain routine approval for initial treatment over a toll-free telephone line, and had not been subject to routine UM of initial visits before the $500 trigger. For them, the 1998 changes should only have affected visits after $500 had been incurred (around eight visits).

The Pilgrim enrollees, therefore, provide a nonexperimental comparison group that helps control for potentially confounding time trends. We compare the change in utilization at MGD (where access controls were loosened) to the change in utilization, if any, at Pilgrim (where access was already liberal and did not change).

As the comparison group (Pilgrim) was exposed to some of the same organizational and local influences as the experimental group (MGD), we reduce the risk of attributing general changes in utilization to the specific effect of the access expansion at MGD. There remains the possibility that the access expansion was confounded with other concurrent changes unique to either Pilgrim or MGD. One such change we identified was that enrollees at Pilgrim experienced a modest increase in copayments when the divisions merged, from $0 to $5 per visit. A similar increase was also experienced by some but not all MGD enrollees (some were already paying $5). We view a copay change this small as unlikely to have large utilization impacts for an employed population, and this was also the view of Pilgrim officials at the time.

A second potential confounder was the financial turbulence at Harvard Pilgrim late in 1999, which resulted in a period of receivership starting in January 2000 (after the end of our study period). Based on review of program data and conversations with health plan officials, there was no surge in disenrollment late in 1999, nor evidence of members disenrolling because of rumors of financial problems. Most importantly, disenrollment rates in 1999 did not differ substantially between individuals formerly in MGD and those formerly in Pilgrim.

There are inevitably limitations in a quasi-experimental design where subjects are not randomized to treatment. However, the use of 2 years of data before and 2 years after the change helps us to identify whether changes in 1998–1999 represent a true change or merely reflect a continuation of preexisting trends (Cook and Campbell 1979; Meyer 1995).

DATA

The population studied consists of commercially insured enrollees in the MGD and in the Pilgrim Health Care division during 1996 and 1997, and all commercially insured enrollees in the merged Massachusetts Region during 1998 and 1999. Enrollees residing outside Massachusetts were excluded. The chief data sources for this study are HPHC's insurance claims and eligibility files for the years 1996–1999. These data were supplemented with census data on the mean income and poverty rates in enrollees' zip codes of residence. All data were de-identified by HPHC staff before analysis.

We limit our study to individuals who were continuously enrolled for all 4 years (1996–1999). This restriction is imposed to avoid confounding effects of the intervention with changes in the mix of enrollees. It also allows us to observe 2 years pre- and 2 years postintervention for every subject, and to classify subjects according to their pre-1998 division (which would not be possible if, for example, we included individuals who joined in 1998 or later). In some settings, limitation to the continuously enrolled can introduce bias, for example if the intervention being studied actually causes disenrollment. In the present setting, we view such bias as unlikely for two reasons. First, disenrollment is unlikely to result from an access enhancement. Second, in private health plans a large proportion of disenrollments occur not because the individual is dissatisfied with her plan, but because she changes employers or because the employer changes its health plan offerings (many offer only one plan).
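The cohort restriction described above can be sketched as a simple filter. This is a minimal illustration, not the authors' actual processing: the record layout (a dictionary of member IDs mapping to year-by-year division labels), the function name, and the choice of 1997 as the year that defines the pre-1998 division are all assumptions made for the example.

```python
STUDY_YEARS = (1996, 1997, 1998, 1999)

def continuously_enrolled(enrollment):
    """Keep members observed in every study year.

    `enrollment` maps member_id -> {year: division}; this layout is hypothetical.
    Returns member_id -> pre-1998 division (taken from 1997, an assumption).
    """
    cohort = {}
    for member_id, by_year in enrollment.items():
        if all(year in by_year for year in STUDY_YEARS):
            cohort[member_id] = by_year[1997]
    return cohort

# Hypothetical records: only member "A" is enrolled in all 4 years.
enrollment = {
    "A": {1996: "MGD", 1997: "MGD", 1998: "MA Region", 1999: "MA Region"},
    "B": {1996: "Pilgrim", 1997: "Pilgrim", 1998: "MA Region"},  # left before 1999
    "C": {1998: "MA Region", 1999: "MA Region"},                 # joined in 1998
}
cohort = continuously_enrolled(enrollment)
```

Member "C" illustrates why later joiners cannot be classified: there is no pre-1998 division to assign them to.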

The resulting sample includes 122,751 individuals (48,232 at MGD and 74,519 at Pilgrim). This represents about 25 percent of the total number of people who were enrolled in each year with these divisions. Forty-three individuals were excluded from regressions because of missing data on zip code variables.

Measures

Services were flagged as being for specialty outpatient mental health care if they were delivered by specialty mental health providers (psychiatrists, psychologists, psychiatric nurses, social workers), and also had either a primary diagnosis indicating a mental health problem, or a procedure code for face-to-face mental health treatment (or both). Mental health diagnoses were those with ICD-9 codes 290, 293–302, 306–316 (substance abuse and mental retardation codes were excluded). Diagnoses were then grouped into categories using Clinical Classifications Software (AHRQ 2003).
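The flagging rule can be expressed compactly. The sketch below is illustrative only: the function names, the provider-type strings, and the assumption that diagnosis codes are stored in dotted form (for example "296.20") are not taken from the source, and the procedure-code check is reduced to a boolean supplied by the caller.

```python
# Three-digit ICD-9 categories named in the text: 290, 293-302, 306-316.
MH_ICD9_CATEGORIES = {290} | set(range(293, 303)) | set(range(306, 317))

# Provider types listed in the text; the exact strings are hypothetical.
SPECIALTY_PROVIDERS = {"psychiatrist", "psychologist", "psychiatric nurse", "social worker"}

def is_mh_diagnosis(icd9_code):
    """True if the code's three-digit category is in the included set.

    Assumes dotted codes such as "296.20"; V and E codes return False.
    """
    category = icd9_code.strip().split(".")[0]
    return category.isdigit() and int(category) in MH_ICD9_CATEGORIES

def is_specialty_mh_service(provider_type, primary_dx, has_mh_procedure):
    """Specialty provider AND (mental health primary diagnosis OR procedure)."""
    return provider_type in SPECIALTY_PROVIDERS and (
        is_mh_diagnosis(primary_dx) or has_mh_procedure
    )
```

Under this rule a claim from a psychologist with primary diagnosis 296.20 qualifies, while a claim with diagnosis 303.90 (a substance-related category outside the listed ranges) qualifies only if it carries a mental health procedure code.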

The key dependent variables are as follows. Initial use is measured by whether an enrollee used specialty outpatient mental health care in each year. Intensity is measured by the number of visits per year among users, and by the proportion of users who received more than four or more than eight visits in a year.

Independent variables include each enrollee's age, sex, relationship to subscriber (employee, spouse, or dependent), and the mean per capita income and poverty rate for the enrollee's five-digit zip code. We also include a set of dummies for the enrollee's three-digit zip code to capture unobserved differences across geographical areas that might affect utilization. Finally, in regressions to predict intensity of care among users we include dummies to indicate whether the enrollee had claims with a principal diagnosis in one of five broad diagnostic categories (schizophrenia and other psychoses; affective disorders; anxiety, somatoform or personality disorders; adjustment disorders; other mental disorder). An enrollee could have claims in more than one category. An additional dummy indicates whether there were claims with a secondary diagnosis of substance abuse.

STATISTICAL METHODS

Our basic design is a controlled pre–post quasi-experiment. We measure the pre–post change in various utilization, quality, and cost measures and see whether the change differed between the experimental group (MGD) and the comparison group (Pilgrim). These data are first reported descriptively. In addition, we use multivariate regression to adjust for differences between the two groups on sociodemographic or other variables. This results in estimating models of the following form:

$$Y = \beta_0 + \beta_1\,\mathrm{MGD} + \beta_2\,\mathrm{POST1} + \beta_3\,\mathrm{POST2} + \beta_4\,(\mathrm{MGD} \times \mathrm{POST1}) + \beta_5\,(\mathrm{MGD} \times \mathrm{POST2}) + \beta_6 X + u$$

where Y is the utilization variable of interest; POST1 and POST2 are indicators for the first and second years postintervention, respectively; MGD indicates whether the subject was enrolled in the group that experienced the intervention; X is a vector of covariates (e.g., age, sex) and u is a disturbance term. For linear models, the coefficient on MGD × POST1 (β4) could be interpreted as indicating the impact of the MGD reforms on the dependent variable in question, for the first postperiod (and β5 serves similarly for the second postperiod). However, for analyses of the probability of use the model is nonlinear, so we took two additional steps to identify the reforms' impact. First, we computed the interaction effect for each sample member and the mean value of that interaction effect, using the approach suggested by Norton, Wang, and Ai (2004), and computing the standard error using their delta method. Second, we used the regression coefficients to compute each sample member's predicted probability of use, after controlling for included covariates. Summing these predicted probabilities across members measures the magnitude of the intervention's impact on access.
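For two dummy variables in a logit model, the interaction effect described above reduces to a double difference in predicted probabilities. The sketch below illustrates that calculation for a single covariate profile; it is not the authors' SAS implementation. It plugs in the intercept and intervention coefficients reported in Table 2 and, as an assumption made only for this example, evaluates the linear index at the reference categories with the zip-code covariates set to zero, so the result need not match the sample-mean effect reported in the paper.

```python
import math

def logistic(z):
    """Inverse logit: maps a linear index to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def interaction_effect(xb, b_group, b_post, b_inter):
    """Double difference in predicted probabilities for two dummies.

    xb is the linear index with both dummies set to zero.
    """
    treated_change = logistic(xb + b_group + b_post + b_inter) - logistic(xb + b_group)
    comparison_change = logistic(xb + b_post) - logistic(xb)
    return treated_change - comparison_change

# Table 2 coefficients: intercept, MGD, POST2, MGD x POST2.
# Evaluating at xb = intercept is an illustrative assumption.
effect_post2 = interaction_effect(-2.6836, -0.0257, 0.0915, 0.0683)
```

For this profile the effect comes out a little above 0.004. Averaging the same quantity over every sample member's own linear index is what produces the mean interaction effect reported in the paper.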

In addition, for the analyses of probability of use there are four annual observations for each enrollee, so we estimate a generalized estimating equations (GEE) model with individual random effects to control for unobserved individual attributes which might otherwise bias results (Zeger, Liang, and Albert 1988). This was implemented using SAS software (Genmod procedure with a logit link).

For the visits regressions, the dependent variable is the natural log of annual visits. This transformation is undertaken in order to correct for skewness in the distribution of visits per user (Duan et al. 1983). Individual-level clustering is also present in the analyses of visits per user, as many enrollees use specialty care in more than 1 year. We therefore control for unobserved individual attributes in this case too, by using a GEE model with a normal link (Zeger, Liang, and Albert 1988).

RESULTS

Sample Characteristics

The two study cohorts are compared in Table 1. Given the large sample size, even quite small differences are statistically significant. A somewhat higher proportion of enrollees at MGD were dependents (children or adolescents), and a lower proportion were employees. MGD enrollees also lived in zip codes with a higher mean income and lower poverty rates. MGD enrollees were more likely to have had at least one diagnosis of schizophrenia in the baseline year, and less likely to have had an anxiety or personality disorder. (The same enrollee could have had more than one diagnosis.)

Table 1.

Characteristics of Study Sample at Baseline

| Characteristic | MGD (n = 48,232) Mean | MGD SD | PHC (n = 74,519) Mean | PHC SD | Sig. |
|---|---|---|---|---|---|
| Female | 51.60% | 0.4997 | 52.36% | 0.4994 | a |
| Age group | | | | | a* |
| Less than 18 | 29.87% | 0.4577 | 25.30% | 0.4347 | |
| 18–30 | 8.59% | 0.2802 | 9.69% | 0.2959 | |
| 31–45 | 30.36% | 0.4598 | 32.68% | 0.4690 | |
| 46–55 | 21.62% | 0.4117 | 22.61% | 0.4183 | |
| 56–64 | 9.56% | 0.2940 | 9.72% | 0.2962 | |
| Relationship | | | | | a* |
| Employee | 43.08% | 0.4952 | 48.71% | 0.4998 | |
| Spouse | 23.74% | 0.4255 | 23.11% | 0.4215 | |
| Dependent | 33.19% | 0.4709 | 28.18% | 0.4499 | |
| Mean income in zip code (000) | $31.52 | 10.051 | $26.58 | 8.0212 | a |
| % below poverty in zip code | 5.38% | 4.4240 | 6.49% | 5.2716 | a |
| Any claims in 1996 for | | | | | |
| Schizophrenia/related psychoses | 0.12% | 0.0344 | 0.08% | 0.0284 | b |
| Affective disorder | 2.82% | 0.1654 | 2.69% | 0.1619 | |
| Anxiety or personality disorder | 1.24% | 0.1105 | 1.43% | 0.1186 | a |
| Adjustment disorder | 3.53% | 0.1845 | 3.56% | 0.1852 | |
| Other mental disorder | 1.91% | 0.1370 | 1.10% | 0.1043 | a |
| Any substance abuse secondary | 0.54% | 0.0734 | 0.60% | 0.0773 | |

Notes:

“a” denotes significant difference in means at p<.01; “b” is p<.05; “c” is p<.10.

“*” denotes χ2 test; other differences were tested using t-tests.

MGD, Medical Groups Division; PHC, Pilgrim Health Care.

Descriptive Statistics

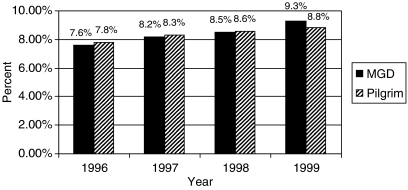

Both divisions experienced a gradual increase over time in the proportion of continuously enrolled members who accessed specialty mental health care (Figure 1). Through 1998, this proportion was consistently higher at Pilgrim. However, in 1999, the second year of the intervention, growth in the proportion using specialty mental health accelerated at MGD, which overtook Pilgrim. This pattern is consistent with the hypothesis that access improved differentially at MGD; it is investigated more fully below using multivariate approaches.

Figure 1.

Proportion of Enrollees with Any Specialty Mental Health Visits

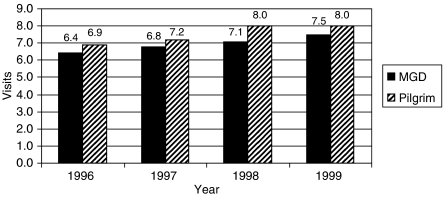

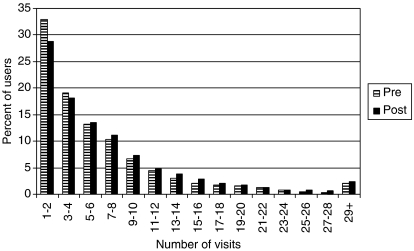

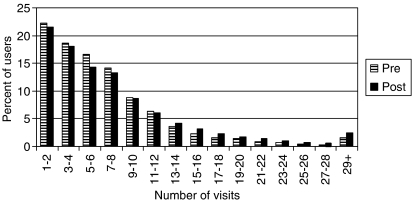

The annual number of visits per user also increased at both divisions over time, except at Pilgrim in 1999 (Figure 2). Users enrolled with MGD consistently received fewer annual visits than users at Pilgrim, throughout the study period. This pattern is less suggestive of any impact of the access changes at MGD. Finally, both divisions experienced some shift outward in the distribution of annual visits per user (Figures 3 and 4). At MGD, the proportion of users who received more than eight visits went from 24.4 percent in the pre-period to 28.4 percent in the postperiod. However, the proportion at Pilgrim changed from 28.1 to 32.7 percent, so this trend was not unique to MGD.

Figure 2.

Annual Specialty Mental Health Visits per User

Figure 3.

Annual Specialty Visits per User, Pre- versus Postintervention: Medical Groups Division

Figure 4.

Annual Specialty Visits per User, Pre- versus Postintervention: Pilgrim

Multivariate Analysis

The use of regression approaches suggests areas in which the intervention did and did not affect utilization.

Initial Access

Both divisions experienced a growth in probability of using specialty mental health care during the postperiod, as indicated by the positive coefficients on POST1 and POST2 (Table 2, first column). Growth did not differ significantly by division in the first year of the intervention. However, in the second year postintervention (1999), the increase was slightly larger at MGD. The mean of the interaction effect MGD × POST2 across sample members was positive and statistically significant (p<.01).

Table 2.

Determinants of the Probability of Using Any Specialty Mental Health Care

Estimation Method: Generalized Estimating Equations

Sample: All Person-Years

| Variable | β | SE | Sig. |
|---|---|---|---|
| Intercept | −2.6836 | 0.0589 | a |
| Intervention | | | |
| MGD | −0.0257 | 0.0211 | |
| POST1 | 0.0571 | 0.0123 | a |
| POST2 | 0.0915 | 0.0135 | a |
| MGD × POST1 | −0.0002 | 0.0200 | |
| MGD × POST2 | 0.0683 | 0.0215 | a |
| Demographics | | | |
| Female | 0.3233 | 0.0161 | a |
| Age group (ref=46–55) | | | |
| <18 | −0.2364 | 0.0598 | a |
| 18–30 | −0.1100 | 0.0363 | a |
| 31–45 | 0.1773 | 0.0212 | a |
| 56–64 | −0.4888 | 0.0361 | a |
| Relationship (ref=employee) | | | |
| Spouse | 0.0656 | 0.0197 | a |
| Dependent | 0.0447 | 0.0552 | |
| Income per capita (000, zip code mean) | 0.0010 | 0.0012 | |
| % below poverty (zip code mean) | −0.0041 | 0.0021 | c |
| N (person-years) | 490,832 | | |
| Log-likelihood | −139,985 | | |
| Marginal effect of interaction | Mean | SE | |
| MGD × POST1 | −0.0001 | 0.0009 | |
| MGD × POST2 | 0.0050 | 0.0017 | a |

Notes:

“a” denotes p<.01; “b” is p<.05; “c” is p<.10.

Model also includes 14 dummies for 3-digit zip codes (not shown).

MGD, Medical Groups Division; SE, standard error.

To facilitate interpretation of nonlinear results, Table 3 provides the predicted probabilities of using specialty mental health based on the estimated coefficients. The growth in utilization from the preperiod to postperiod 2 was 0.5 percentage points faster at MGD than at Pilgrim, providing our difference-in-difference estimate of the intervention's impact: it increased the proportion receiving care.

Table 3.

Predicted Probabilities of Using Specialty Mental Health Care Computed from GEE Estimation in Table 2

| Period | MGD (%) | Pilgrim (%) | Difference (%) |
|---|---|---|---|
| Preintervention (1996–1997) | 8.0 | 8.1 | −0.1 |
| Postperiod 1 (1998) | 8.4 | 8.5 | −0.1 |
| Postperiod 2 (1999) | 9.2 | 8.8 | 0.4 |
| Difference | | | |
| Post 1–pre | 0.4 | 0.4 | 0.0 |
| Post 2–pre | 1.2 | 0.7 | 0.5 a |

Note:

“a” denotes significance at p<.01.

MGD, Medical Groups Division; GEE, generalized estimating equations.
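The difference-in-difference figures in Table 3 follow directly from the four predicted probabilities for each comparison. As a small worked check (the function and variable names are illustrative, not from the source):

```python
def diff_in_diff(treated_pre, treated_post, comparison_pre, comparison_post):
    """Change in the treated group minus change in the comparison group."""
    return (treated_post - treated_pre) - (comparison_post - comparison_pre)

# Predicted probabilities (%) from Table 3: MGD is the treated group,
# Pilgrim the comparison group.
post1_effect = diff_in_diff(8.0, 8.4, 8.1, 8.5)
post2_effect = diff_in_diff(8.0, 9.2, 8.1, 8.8)
```

Up to floating-point rounding, `post1_effect` is 0.0 and `post2_effect` is 0.5 percentage points, matching the last two rows of the table.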

Intensity of Utilization

The change at MGD had a positive effect on annual visits per user, but again the effect appears only in the second year (Table 4). Correcting for the use of logs, the coefficient on MGD × POST2 indicates that an average user at MGD (one with seven visits) would have gained about 0.6 visits, beyond the general increase experienced at both divisions. Other coefficients indicate that women received more visits per year, and spouses received fewer than employees. People living in higher-income zip codes had more visits per year.
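The conversion from a log-scale coefficient to visits can be illustrated with a simple retransformation. This sketch assumes the plain proportional interpretation, in which a coefficient β on log visits scales visits by exp(β); any smearing adjustment the authors may have applied is not reproduced here, and the function name is hypothetical.

```python
import math

def visits_gain(coefficient, baseline_visits):
    """Additional visits implied by a coefficient on log(visits).

    Uses the proportional change exp(coefficient) - 1 applied to the baseline.
    """
    return baseline_visits * (math.exp(coefficient) - 1.0)

# Table 4 coefficient on MGD x POST2, applied to a user with seven visits.
gain = visits_gain(0.0880, 7)
```

The coefficient implies an increase of roughly 9 percent, which for a seven-visit user is about 0.64 visits, in line with the figure of about 0.6 quoted in the text.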

Table 4.

Determinants of Log Annual Visits per User

Estimation Method: Generalized Estimating Equations

Sample: Person-Years with Mental Health Use

| Variable | β | SE | Sig. |
|---|---|---|---|
| Intercept | 0.7713 | 0.0420 | a |
| Intervention | | | |
| MGD | −0.3375 | 0.0153 | a |
| PRE2 | −0.0117 | 0.0102 | |
| POST1 | 0.0446 | 0.0133 | a |
| POST2 | 0.0338 | 0.0138 | b |
| MGD × POST1 | 0.0134 | 0.0192 | |
| MGD × POST2 | 0.0880 | 0.0203 | a |
| Demographics | | | |
| Female | 0.0755 | 0.0108 | a |
| Age group (ref=46–55) | | | |
| <18 | 0.0741 | 0.0392 | c |
| 18–30 | −0.0400 | 0.0228 | c |
| 31–45 | 0.0069 | 0.0140 | |
| 56–64 | −0.0869 | 0.0248 | a |
| Relationship (ref=employee) | | | |
| Spouse | −0.0251 | 0.0128 | b |
| Dependent | −0.0378 | 0.0364 | |
| Income per capita (000, zipcode mean) | 3.9878 | 0.8768 | a |
| % below poverty (zipcode mean) | 0.0011 | 0.0015 | |
| Diagnosis | | | |
| Schizophrenia/related psychoses | 0.7139 | 0.0435 | a |
| Affective disorder | 0.7218 | 0.0113 | a |
| Anxiety or personality disorder | 0.6045 | 0.0129 | a |
| Adjustment disorder | 0.5053 | 0.0114 | a |
| Other mental disorder | 0.4725 | 0.0142 | a |
| Substance abuse secondary | 0.0905 | 0.0269 | a |
| N (Person-years) | 41,279 | | |
| R2 | 0.1282 | | |

Notes:

“a” denotes p<.01; “b” is p<.05; “c” is p<.10.

Model also includes 14 dummies for 3-digit zip codes (not shown).

MGD, Medical Groups Division; SE, standard error.

We next examined effects on the proportion of users receiving more than four and more than eight visits, using a GEE model with the full set of covariates. As in the earlier probability-of-use analysis, we computed the difference-in-difference impact using predicted probabilities constructed from the regression coefficients. The results (not shown) revealed that from the preperiod to the second postperiod, the proportion of MGD users who received more than four visits increased from 56.0 to 61.9 percent. Over the same period, this proportion increased by only 0.6 percentage points among Pilgrim users. This implies that the changes at MGD increased the proportion receiving more than four visits by 5.3 percentage points (5.9 − 0.6).

Redoing this analysis with a higher cutoff, we found that the proportion receiving more than eight visits increased similarly at both divisions (5.1 versus 4.6 percentage points), implying less impact of the MGD changes on this part of the distribution. These results suggest that the increase in mean visits at MGD resulted from adding a few visits for low-end users (as observed in Figure 3), rather than from an outward shift in the entire distribution of visits.
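The difference-in-difference arithmetic behind these two comparisons can be reproduced in a few lines. This is a minimal sketch using only the percentages reported in the text. The function name is hypothetical, and it assumes that in "5.1 versus 4.6" the first figure refers to MGD and the second to Pilgrim.

```python
def diff_in_diff(treated_change, comparison_change):
    """Change in the treated group net of the change in the comparison group."""
    return treated_change - comparison_change


# More than four visits: MGD rose from 56.0 to 61.9 percent,
# while Pilgrim rose by 0.6 percentage points.
mgd_change_gt4 = 61.9 - 56.0
did_gt4 = diff_in_diff(mgd_change_gt4, 0.6)

# More than eight visits: assumed order is MGD 5.1, Pilgrim 4.6.
did_gt8 = diff_in_diff(5.1, 4.6)

print(f"More than four visits:  {did_gt4:.1f} percentage points")
print(f"More than eight visits: {did_gt8:.1f} percentage points")
```

The much smaller net change at the higher cutoff is what points to the increase being concentrated among low-end users.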

Cost Impact

We used the regression results to project the cost of the additional utilization resulting from expanded access. As noted, simulation using the regression coefficients indicates that an additional 0.5 percentage points of enrollees used mental health care, and the average visits per user increased by 0.6 visits. Combining these changes implies a total of 4,675 additional visits (16 percent more). The mean cost per MGD visit in 1997 was $102.72, combining payments by insurer and patient. The total cost of the additional utilization was therefore $480,184 (4,675 × $102.72). This figure is incomplete, as it does not include the administrative costs of either the initial gatekeeping or the call center that replaced it. The administrative data needed to estimate those costs are not available.
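The cost projection is a single multiplication, which can be checked as follows. This sketch uses only the figures reported in the text, and the variable names are illustrative. Multiplying the rounded inputs gives a total about $32 above the reported one. A plausible explanation, though only an assumption here, is that the reported total was computed from unrounded inputs.

```python
# Figures as reported in the text; variable names are illustrative.
additional_visits = 4_675   # projected additional visits
cost_per_visit = 102.72     # mean cost per MGD visit in 1997, in dollars
reported_total = 480_184    # total cost reported in the text, in dollars

# Product of the rounded, published inputs.
projected_total = additional_visits * cost_per_visit
gap = projected_total - reported_total

print(f"Projected from rounded inputs: ${projected_total:,.0f}")
print(f"Difference from reported total: ${gap:,.0f}")
```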

DISCUSSION

The results of this study suggest that the gatekeeping changes at MGD did lead to increases in utilization of mental health care, as hypothesized. After controlling for secular trends at the Pilgrim division, MGD eventually experienced a 0.5-point increase in the proportion of enrollees receiving specialty mental health treatment. Similarly, there was an increase of about 0.6 annual visits per user, concentrated at the low end of the distribution. These changes occurred only in the second year after the gatekeeping changes, which corresponds to informants' reports that it took some months to stabilize the new system.

Our results support previous research indicating that UM approaches to mental health care, including outpatient services, can have a significant effect on utilization. At the same time, the magnitude of the increase in access and mean number of visits that we found was relatively modest. There are several possible explanations for this. First, 2 years may not be long enough to observe an effect, given that it takes users time to learn how to access a new system.

Second, MGD's requirement for specialty face-to-face evaluation may not have been as large a barrier as we hypothesized, for example, if it facilitated access for some patients. Also, its replacement was a different type of gatekeeping, not an unrestricted system. A change in gatekeeping model (from face-to-face evaluation to call-center intake) is not likely to have effects as large as eliminating gatekeeping altogether. In fact, even studies that examined the elimination of primary care gatekeeping, such as Ferris et al. (2001), did not find large effects on utilization of specialists (see Note 1).

One implication of this study is that replacing older forms of UM with a call-center model need not result in a large surge in utilization and costs, as some health plans may fear. This could be relevant to plans that still require patients seeking specialty care to obtain access from a primary care gatekeeper, although this would be best addressed by a study directly comparing those approaches.

Several qualifications should be noted in interpreting the results of this study. First, because enrollees were not randomized, the utilization changes we report could have resulted from confounding changes other than the change in gatekeeping. However, given our control for secular trends, results would be biased only if such events affected the two divisions differentially. The only such differential change we identified was a small increase in copayments unique to Pilgrim, on a scale unlikely to have major effects.

Second, our study was limited to continuously enrolled plan members. This increases the internal validity of the study by reducing the impact of compositional changes, but may result in somewhat less generalizability. Third, we did not examine the effectiveness or quality of care delivered, only the number of visits. The additional utilization generated by the model change could have helped address unmet need, or alternatively it could have represented less efficient use of the health care system. Finally, some enrollees may have obtained care outside the plan, in which case we would not observe their utilization data. This would only alter our findings if out-of-plan use was changing differentially between the two divisions over time.

Acknowledgments

The authors acknowledge programming assistance from Doris Millan and Galina Zolotusky, and helpful discussions with Janice Harrington, Grant Ritter, Taverly Sousa, Steve Stelovitch, Joanna Volpe-Vartanian, and participants in the Harvard Pilgrim RISP seminar. This study was supported by NIMH grants R01 MH62197-03 and MH-56217-05 (Mental Health Services Research Program in Managed Care).

Disclosures: None.

Disclaimer: The views expressed in this paper are solely those of the authors and do not necessarily represent the views of Harvard Pilgrim Health Care.

NOTES

1. Ferris et al. (2001) did not examine specialty mental health care as this was already exempt from gatekeeping at the organization they studied (a different division of Harvard Pilgrim).

REFERENCES

- Agency for Healthcare Research and Quality. Clinical Classifications Software (CCS) for ICD-9-CM Fact Sheet. 2003 [accessed February 12, 2004]. Available at http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccsfactsheet.jsp.

- Cook TD, Campbell DT. Quasi-Experimentation: Design and Analysis Issues for Field Settings. Boston: Houghton-Mifflin; 1979.

- Dickey B, Azeni H. Impact of Managed Care on Mental-Health Services. Health Affairs. 1992;11(3):197–204. doi: 10.1377/hlthaff.11.3.197.

- Duan N, Manning WG, Morris CN, Newhouse JP. A Comparison of Alternative Models of the Demand for Medical Care. Journal of Business and Economic Statistics. 1983;1:114–26.

- Eisen SV, Griffin M, Sederer LI, Dickey B, Mirin SM. The Impact of Preadmission Approval and Continued Stay Review on Hospital Stay and Outcome among Children and Adolescents. Journal of Mental Health Administration. 1995;22(3):270–7. doi: 10.1007/BF02521122.

- Ferris TG, Chang Y, Blumenthal D, Pearson SD. Leaving Gatekeeping Behind: Effects of Opening Access to Specialists for Adults in a Health Maintenance Organization. New England Journal of Medicine. 2001;345(18):1312–7. doi: 10.1056/NEJMsa010097.

- Frank RG, Brookmeyer R. Managed Mental Health Care and Patterns of Inpatient Utilization for Treatment of Affective Disorders. Social Psychiatry and Psychiatric Epidemiology. 1995;30:220–3. doi: 10.1007/BF00789057.

- Gotowka TD, Smith RB. Focused Psychiatric Review: Impacts on Expense and Utilization. Benefits Quarterly. 1991;7(4):73–81.

- Grembowski DE, Cook K, Patrick DL, Roussel AE. Managed Care and Physician Referral. Medical Care Research and Review. 1998;55(1):3–31. doi: 10.1177/107755879805500101.

- Harrow BS, Ellis RP. Mental Health Providers' Response to the Reimbursement System. In: Frank RG, Manning WG, editors. Economics and Mental Health. Baltimore: Johns Hopkins University Press; 1992. pp. 19–39. Chpt. 2.

- Hennessy KD, Green-Hennessy S. An Economic and Clinical Rationale for Changing Utilization Review Practices for Outpatient Psychotherapy. Journal of Mental Health Administration. 1997;24(3):340–9. doi: 10.1007/BF02832667.

- Hodgkin D. The Impact of Private Utilization Management and Psychiatric Care: A Review of the Literature. Journal of Mental Health Administration. 1992;19(2):143–57. doi: 10.1007/BF02521315.

- Howard RC. The Sentinel Effect in an Outpatient Managed Care Setting. Professional Psychology: Research and Practice. 1998;29(3):262–8.

- Landon BE, Wilson IB, Cleary PD. A Conceptual Model of the Effects of Health Care Organizations on the Quality of Care. Journal of the American Medical Association. 1998;279(17):1377–82. doi: 10.1001/jama.279.17.1377.

- Liu X, Sturm R, Cuffel BJ. The Impact of Prior Authorization on Outpatient Utilization in Managed Behavioral Health Plans. Medical Care Research and Review. 2000;57:182–95. doi: 10.1177/107755870005700203.

- Ma CA, McGuire TG. Network Incentives in Managed Health Care. Journal of Economics and Management Strategy. 1998;11(1):1–35.

- Mechanic D, Schlesinger M, McAlpine DD. Management of Mental Health and Substance Abuse Services: State-of-the-Art and Early Results. Milbank Quarterly. 1995;73(1):19–53.

- Meyer BD. Natural and Quasi-Natural Experiments in Economics. Journal of Business and Economic Statistics. 1995;13(2):151–62.

- Miller I. Managed Care Is Harmful to Outpatient Health Services: A Call for Accountability. Professional Psychology: Research and Practice. 1996;27:349–63.

- Mintz DC, Marcus SC, Druss BG, West JC, Brickman AL. Association of Utilization Management and Treatment Plan Modifications among Practicing US Psychiatrists. American Journal of Psychiatry. 2004;161(6):1103–9. doi: 10.1176/appi.ajp.161.6.1103.

- Norton EC, Wang H, Ai C. Computing Interaction Effects and Standard Errors in Logit and Probit Models. Stata Journal. 2004;4(2):103–16.

- Rosenthal M. Risk Sharing in Managed Behavioral Health Care. Health Affairs. 1999;18(5):204–13. doi: 10.1377/hlthaff.18.5.204.

- Schlesinger M, Wynia M, Cummins D. Some Distinctive Features of the Impact of Managed Care on Psychiatry. Harvard Review of Psychiatry. 2000;8(5):216–30.

- Tischler GL. Utilization Management of Mental Health Services by Private Third Parties. American Journal of Psychiatry. 1990;147(8):967–73. doi: 10.1176/ajp.147.8.967.

- Wickizer TM, Lessler D, Travis KM. Controlling Inpatient Psychiatric Utilization through Managed Care. American Journal of Psychiatry. 1996;153:339–45. doi: 10.1176/ajp.153.3.339.

- Wickizer TM, Lessler D. Do Treatment Restrictions Imposed by Utilization Management Increase the Likelihood of Readmission for Psychiatric Patients? Medical Care. 1998;36(6):844–50. doi: 10.1097/00005650-199806000-00008.

- Zeger SL, Liang KY, Albert PS. Models for Longitudinal Data: A Generalized Estimating Equation Approach. Biometrics. 1988;44(4):1049–60.

- Zusman J. Utilization Review: Theory, Practice, and Issues. Hospital and Community Psychiatry. 1990;41(5):531–6. doi: 10.1176/ps.41.5.531.