Abstract

Objective

To validate algorithms using administrative data that characterize ambulatory physician care for patients with a chronic disease.

Data Sources

Seven hundred and eighty-one people with diabetes were recruited, mostly from community pharmacies, to complete a written questionnaire about their physician utilization in 2002. These data were linked with administrative databases detailing health service utilization.

Study Design

An administrative data algorithm was defined that identified whether or not patients received specialist care, and it was tested for agreement with self-report. Other algorithms, which assigned each patient to a primary care and specialist physician, were tested for concordance with self-reported regular providers of care.

Principal Findings

The algorithm to identify whether participants received specialist care had 80.4 percent agreement with questionnaire responses (κ = 0.59). Compared with self-report, administrative data had a sensitivity of 68.9 percent and specificity 88.3 percent for identifying specialist care. The best administrative data algorithm to assign each participant's regular primary care and specialist providers was concordant with self-report in 82.6 and 78.2 percent of cases, respectively.

Conclusions

Administrative data algorithms can accurately match self-reported ambulatory physician utilization.

Keywords: Validation studies, specialist care, chronic disease care, administrative data, primary care, diabetes mellitus

The care of chronic diseases encompasses a growing part of the health care system. Because of increasing accountability requirements and fiscal restraints, it is becoming ever more important to establish the most effective and efficient ways of delivering chronic disease care. Studies using administrative data offer a unique ability to evaluate the quality of care delivered under different care models, because they provide information on large numbers of patients quickly and inexpensively, and because they measure actual care in real-world clinical situations, rather than the idealized world of clinical trials or even prospective observational studies. However, the assumption that these data sources can accurately identify and assign patients to various providers of care may not be correct.

The accuracy of databases documenting hospitalization episodes has been established in many jurisdictions, rendering these data the standard against which other data sources are often measured (Fowles et al. 1995). In contrast, the accuracy of ambulatory physician billing claims data is not well documented. These claims have been used in both the United States and Canada to identify cohorts of patients with various diseases (Studney and Hakstian 1981; Quam et al. 1993; Fowles et al. 1995; Fowles, Fowler, and Craft 1998; Hux et al. 2002), but their accuracy in measuring aspects of ambulatory physician utilization has not been as well examined. A recent study found 86.8 percent agreement between billing claims data and the office charts of academic family physicians for capturing ambulatory mental health service use (Steele et al. 2004). Validation studies using administrative data to measure ambulatory patient care for other chronic diseases are lacking.

In this study, we sought to validate administrative data algorithms related to ambulatory care for diabetes, an archetypal chronic disease that results in many complications and comorbidities (Harris et al. 1995; Boyle et al. 2001). We evaluated algorithms that (1) predict whether a patient used specialist care, and (2) assign physicians as a patient's regular providers. The algorithms were compared against patient self-report on a written questionnaire.

METHODS

Study Population

Study participants were recruited from across Ontario between August 2003 and December 2004 using a variety of strategies. Community- and hospital-based members of the Ontario Pharmacists' Association were approached to recruit for the study via an introductory e-mail and subsequent telephone calls from the study center. Staff in participating pharmacies identified people with diabetes based on prescriptions for glucose-lowering medications or capillary glucose test strips, or based on the computerized patient profile held in the pharmacy. Interested participants contacted the study center and were sent an information package, questionnaire, and consent form. Diabetes education centers (DECs) were also approached to give questionnaires to interested and eligible participants. Pharmacists and DECs were given a small honorarium for each participant recruited from their center; participants themselves were not remunerated. Other recruitment methods included advertising the study through brochures and posters placed in pharmacies, supermarkets, community centers, libraries, and DECs. Participants in the Toronto area were also recruited through community events and a newsletter from the local chapter of the Canadian Diabetes Association.

Participants were included if they lived in Ontario and had type 1 or type 2 diabetes of at least 2 years' duration. To restrict the sample to adults, people aged < 18 were excluded. Eligible participants completed a self-administered questionnaire containing questions on the utilization of specialty diabetes services, demographic information, and history of complications and comorbidities. Specifically, participants indicated whether they had a regular diabetes specialist (defined in the questionnaire as an internist or endocrinologist) in 2002, and if so, who it was. They also indicated whether they had a regular family doctor or general practitioner in 2002, and if so, who it was. The College of Physicians and Surgeons of Ontario registration number for each physician reported by participants was found through the College's website (http://www.cpso.on.ca), and this number was scrambled to an anonymous physician identifier for linkage to the administrative data.

Participants also provided their health card number, which was scrambled to anonymously link their questionnaire responses to the administrative data.

Administrative Data Algorithms

The main administrative data source was the physician service claims database, which lists all claims for remuneration submitted by fee-for-service physicians in Ontario. Each record lists an anonymous identifier for the physician submitting the claim. Approximately 10 percent of physicians are paid under alternative funding arrangements, so service claims from these physicians are not present in the database. Physician specialty was identified by linking the physician identifier in the claims database to the Ontario Physician Workforce Database, which catalogues all registered physicians in Ontario.

All physician service claims submitted for each eligible participant in 2002 were extracted. Claims for ambulatory visits were selected by removing claims for diagnostic and therapeutic tests and procedures, and claims generated during hospitalizations and emergency department visits.

The first algorithm tested whether or not participants had received specialist care. Claims from general internists and endocrinologists were identified, and the numbers of such claims per participant were summed. The algorithm identified participants as having received specialist care if they had had a threshold number of specialist visits during the year. The algorithm was tested with the threshold varying between one and 20.
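The thresholding rule described above can be sketched in a few lines of Python. This is a minimal illustration only: the record layout (`participant_id`, `specialty`) and the specialty labels are hypothetical and do not reflect the actual fields of the Ontario claims database.

```python
from collections import Counter

# Hypothetical specialty labels; the study counted claims from general
# internists and endocrinologists as diabetes specialist claims.
SPECIALIST_SPECIALTIES = {"internal medicine", "endocrinology"}


def specialist_visit_counts(claims):
    """Count ambulatory specialist claims per participant.

    `claims` is an iterable of (participant_id, specialty) pairs, assumed to
    be already restricted to ambulatory visits in the study year.
    """
    counts = Counter()
    for participant_id, specialty in claims:
        if specialty in SPECIALIST_SPECIALTIES:
            counts[participant_id] += 1
    return counts


def received_specialist_care(counts, participant_id, threshold=1):
    """Return True if the participant has at least `threshold` specialist claims."""
    return counts[participant_id] >= threshold


# Illustrative claims for three made-up participants.
claims = [
    ("A", "family medicine"),
    ("A", "endocrinology"),
    ("B", "family medicine"),
    ("C", "internal medicine"),
    ("C", "endocrinology"),
    ("C", "endocrinology"),
]
counts = specialist_visit_counts(claims)

# Sweep the threshold over the range tested in the study (one to 20 visits).
flags_by_threshold = {
    t: {p: received_specialist_care(counts, p, t) for p in ("A", "B", "C")}
    for t in range(1, 21)
}
```

Raising the threshold can only turn flags from true to false, which is why higher thresholds trade sensitivity for specificity.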

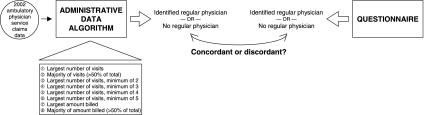

Several algorithms were then defined to identify the individual physicians who were each participant's regular primary care physician and diabetes specialist (Figure 1). These algorithms assigned, as the regular provider, the physician:

Figure 1.

To Test Whether Administrative Data Could Identify Participants' Regular Providers of Care, Eight Different Algorithms Were Applied to Each Participant's 2002 Ambulatory Physician Service Claims Data.

Each algorithm either identified a specific physician as the regular physician, or found that the participant did not have a regular physician. This result was compared with the participant's questionnaire responses, to determine concordance between the algorithm, and the questionnaire. Regular primary care and specialist providers were examined separately.

with whom a participant had the largest number of visits,

with whom a participant had the majority of his/her visits,

with whom a participant had the largest number of visits above a minimum threshold number,

who billed the largest amount for the participant, and

who billed the majority of the amount billed for the participant.

If an algorithm did not assign a physician (e.g., the algorithm assigning the physician with whom the participant had the majority of their visits, for a participant where no one physician had >50 percent of the visits), the participant was deemed to have no regular provider. The algorithms were applied using primary care claims data to define participants' regular primary care providers, and again using diabetes specialist claims data to define their regular specialists. In cases with ties, one physician was assigned at random.
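One way to express these assignment rules in code is sketched below. The function name, the rule labels, and the `(physician_id, amount_billed)` record layout are hypothetical choices made for illustration; the study does not describe its implementation.

```python
import random
from collections import defaultdict


def assign_regular_provider(claims, rule="largest_visits", min_visits=1, rng=None):
    """Assign a regular provider from one participant's claims.

    `claims` is a list of (physician_id, amount_billed) pairs, assumed to be
    restricted to one provider type (primary care or diabetes specialist).
    Returns a physician_id, or None if the rule assigns no regular provider.
    """
    rng = rng or random.Random()
    visits = defaultdict(int)
    billed = defaultdict(float)
    for physician_id, amount in claims:
        visits[physician_id] += 1
        billed[physician_id] += amount

    if not visits:
        return None

    # Rules based on amounts use billed totals; the others use visit counts.
    totals = billed if rule in ("largest_billed", "majority_billed") else visits
    top = max(totals.values())

    if rule in ("majority_visits", "majority_billed"):
        # A majority means strictly more than half of the participant's total.
        if top * 2 <= sum(totals.values()):
            return None
    elif rule == "largest_visits" and top < min_visits:
        # Minimum-threshold variant: too few visits means no regular provider.
        return None

    # Ties are broken at random, as described in the text.
    candidates = sorted(p for p, value in totals.items() if value == top)
    return rng.choice(candidates)


# Illustrative claims for one made-up participant.
example = [("dr1", 30.0), ("dr1", 30.0), ("dr2", 120.0), ("dr3", 30.0)]
by_visits = assign_regular_provider(example, "largest_visits")
by_majority = assign_regular_provider(example, "majority_visits")
by_billing = assign_regular_provider(example, "largest_billed")
```

In this example the visit-based and billing-based rules disagree (`dr1` has the most visits, `dr2` the largest billed amount), and the majority rule assigns no one because no physician accounts for more than half of the four visits.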

Because some physicians' billing records are incomplete in the service claims database, we examined another database as a method of identifying participants' regular physicians: the Ontario Drug Benefit program database, which details all prescriptions filled under the provincial formulary for all residents aged 65 or older. This database includes an anonymous identifier for the prescribing physician, regardless of their funding arrangement. For participants aged 65 or older during the study period, two additional algorithms to identify their regular physicians were tested using their prescription records from 2002, assigning the physician who had written the largest number of the participant's prescriptions, or the majority of the participant's prescriptions. As before, the algorithms were applied using prescriptions written by primary care physicians to identify participants' regular primary care provider, and again using prescriptions written by diabetes specialists to identify participants' regular specialists. The final algorithms combined claims and prescription data, to address situations where the regular provider was paid under alternative funding arrangements and so claims data were missing. The algorithms assigned the physician with whom the participant had the most visits, as above, but if the number of visits was at or below a threshold ranging from zero to two, the algorithm instead used the physician who had written the most prescriptions.
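The combined rule can be sketched as a simple fallback. The sketch below makes two assumptions that the text does not settle: the threshold is compared against the number of visits with the most-visited physician, and a participant with no prescriptions is left without a regular provider when the fallback is triggered. Function and parameter names are hypothetical.

```python
from collections import Counter


def most_frequent(physician_ids):
    """Return (physician_id, count) for the most frequent ID, or (None, 0).

    Simplification: ties go to the first physician encountered, whereas the
    study broke ties at random.
    """
    counts = Counter(physician_ids)
    if not counts:
        return None, 0
    return counts.most_common(1)[0]


def assign_with_prescription_fallback(visit_physicians, rx_physicians,
                                      fallback_at_or_below=0):
    """Prefer the most-visited physician; fall back to the top prescriber.

    `visit_physicians` and `rx_physicians` are lists of physician IDs, one
    entry per visit claim or per prescription, for a single participant.
    """
    visit_choice, n_visits = most_frequent(visit_physicians)
    if n_visits <= fallback_at_or_below:
        # Assumption: if there are no prescriptions either, return None.
        rx_choice, _ = most_frequent(rx_physicians)
        return rx_choice
    return visit_choice
```

For example, a participant with no visit claims but several prescriptions from one physician would be assigned that prescriber, which is the situation the combined algorithms were meant to address for physicians paid under alternative funding arrangements.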

Statistical Analysis

The demographic characteristics of the participants were compared with the general population of diabetic patients in Ontario, based on the Ontario Diabetes Database (Hux et al. 2002). The comparison general population was defined as all adults in Ontario diagnosed with diabetes before January 1, 2002 who were still alive on December 31, 2004.

The algorithm predicting whether or not participants had received specialist care was compared against their response to the question, “Did you have a regular diabetes specialist?” At each threshold level of visits from one to 20, we determined the percent agreement and kappa statistic of agreement (κ) with 95 percent confidence intervals (CIs). We also determined the sensitivity, specificity, and positive and negative predictive values with 95 percent CIs of the algorithm. When the algorithm found that a participant saw a specialist when the participant denied seeing one, it was considered a “false positive,” whereas when the algorithm found that a participant did not see a specialist and self-report indicated that he or she did, it was considered a “false negative.” A receiver-operating characteristic (ROC) curve was constructed using the sensitivities and specificities across threshold levels.
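The agreement measures described above all derive from a 2 × 2 table of algorithm result against self-report. The sketch below shows the standard formulas. The cell counts in the example are not reported in the paper; they are integers back-calculated for this illustration so that, with n = 781, they reproduce the percentages quoted in the abstract, and they should be treated as an assumption.

```python
def agreement_statistics(tp, fp, fn, tn):
    """Agreement measures for an algorithm compared with self-report.

    tp: algorithm and self-report both indicate specialist care
    fp: algorithm indicates specialist care, self-report does not
    fn: self-report indicates specialist care, algorithm does not
    tn: neither indicates specialist care
    """
    n = tp + fp + fn + tn
    observed = (tp + tn) / n
    # Agreement expected by chance, from the marginal totals (Cohen's kappa).
    expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n**2
    return {
        "agreement": observed,
        "kappa": (observed - expected) / (1 - expected),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Back-calculated (assumed) counts, not taken from the paper.
stats = agreement_statistics(tp=219, fp=54, fn=99, tn=409)
```

With these assumed counts, the results round to 80.4 percent agreement, κ = 0.59, 68.9 percent sensitivity, and 88.3 percent specificity, matching the values in the abstract.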

For each algorithm assigning participants to regular primary care and specialist providers, the concordance between the physician assigned by the algorithm and the physician named as the regular provider in the questionnaire was determined. The algorithm and self-report were considered concordant if they agreed on the same physician or if they agreed that the participant did not have a regular physician. They were discordant if the algorithm assigned a physician and the participant did not report one, if the algorithm did not assign a regular physician but the participant did identify one, or if the algorithm assigned a different physician than the one named by the participant.
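The concordance rules above amount to a five-way classification of each participant. A minimal sketch follows, in which `None` stands for "no regular physician"; the category labels are hypothetical wording for illustration.

```python
def classify_concordance(assigned, reported):
    """Classify one participant's algorithm result against self-report.

    Both arguments are anonymous physician identifiers, or None when no
    regular physician was assigned (algorithm) or named (questionnaire).
    """
    if assigned is None and reported is None:
        return "concordant: no regular physician"
    if assigned == reported:
        return "concordant: same physician"
    if reported is None:
        return "discordant: assigned but none reported"
    if assigned is None:
        return "discordant: reported but none assigned"
    return "discordant: different physician"


def concordance_rate(pairs):
    """Proportion of (assigned, reported) pairs that are concordant."""
    labels = [classify_concordance(a, r) for a, r in pairs]
    return sum(label.startswith("concordant") for label in labels) / len(labels)


# Five made-up participants, one in each category.
pairs = [("d1", "d1"), (None, None), ("d1", "d2"), (None, "d2"), ("d1", None)]
rate = concordance_rate(pairs)
```

Keeping the five categories separate, rather than collapsing them to concordant or discordant at the outset, makes it possible to report the breakdown of discordant cases as well as the overall rate.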

Sample Size Calculation

The sample size for the study was based on having a CI of a prespecified width around the estimate of sensitivity for the algorithm predicting specialist utilization. We selected a CI with a width of 10 percent (±5). Based on the assumptions that 20 percent of diabetes patients in Ontario see specialists (Jaakkimainen, Shah, and Kopp 2003) and that we would find false positive and false negative rates of 15 percent, a sample size of 1,285 participants was needed to have a CI with a width of 10 percent. However, an interim analysis of the data found that approximately 40 percent of the participants reported seeing a specialist, higher than expected. With this new assumption, only 591 participants were needed to achieve the desired CI width, so recruitment was terminated.

Ethics

The study received ethics approval from the Research Ethics Board of Sunnybrook & Women's College Health Sciences Center. All participants gave written informed consent to be included and for linkage of their questionnaire responses to administrative data via their health card number.

RESULTS

Recruitment

A total of 295 pharmacies agreed to take part in the study. From them, 1,483 people requested information about the survey, of whom 579 were eligible and agreed to participate in the study (39.0 percent). We asked 29 DECs to directly identify potential participants, from which 71 eligible people were recruited. The remaining patients were recruited through advertising posters in DECs and public places (n = 56), through community events (n = 51) and through a newsletter advertisement (n = 24). Hence, a total of 781 eligible people were recruited. All participants' health card numbers were successfully linked to their anonymous identification number for linkage with the administrative data.

The participants identified 634 primary care physicians and 135 specialists as regular providers of their care. One primary care physician did not practice in Ontario, and one specialist could not be identified, but the registration numbers of the remaining physicians were found and successfully converted to anonymous physician identification numbers to allow linkage with the administrative data. Most of the physicians identified by patients as regular primary care providers were, in fact, primary care physicians. However, 13 participants identified various specialists, including endocrinologists, internists, and obstetricians, even though these specialists do not provide primary care in Ontario. Fifty-five participants identified physicians with other specialties as their regular diabetes specialists, including 32 identifying cardiologists, nine identifying primary care physicians, and three identifying rheumatologists.

Demographic Characteristics of Participants

The demographic characteristics of the questionnaire participants and of the general diabetic population are shown in Table 1. People who participated in the study were younger and had longer diabetes duration than the general population. These differences in demographic characteristics should not affect the accuracy of administrative data algorithms to describe specialist care. Toronto residents were underrepresented in the sample of participants, while eastern Ontario residents were overrepresented, which in part reflects the geographic distribution of pharmacies that participated in the study.

Table 1.

Comparison between the Questionnaire Participants and the General Diabetic Population of Ontario (Percent or Mean±Standard Deviation)

| | Questionnaire Participants, n = 781 | General Diabetic Population, n = 567,289 | p |
|---|---|---|---|
| Age (years) | 61.0 ± 13.5 | 63.2 ± 14.8 | < 0.0001 |
| Sex | | | 1.0 |
| Female | 48.5% | 48.4% | |
| Male | 51.5% | 51.6% | |
| Place of residence | | | < 0.0001 |
| Eastern Ontario | 30.3% | 15.0% | |
| South-central Ontario | 28.9% | 32.1% | |
| Toronto | 15.1% | 25.3% | |
| Southwestern Ontario | 16.9% | 19.1% | |
| Northern Ontario | 8.7% | 8.4% | |
| Duration of diabetes | | | < 0.0001 |
| 2–5 years | 25.6% | 29.2% | |
| 6–10 years | 24.7% | 36.1% | |
| >10 years | 49.7% | 34.7% | |

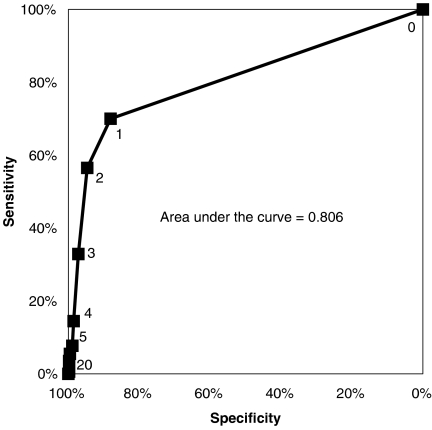

Algorithm to Identify Whether Participants Received Specialist Care

The ROC curve derived from the algorithm to identify specialist care is shown in Figure 2, and the area under the curve was 0.806. With a threshold of one specialist visit in the administrative data required to declare regular specialist care, the questionnaire and the algorithm agreed for 628 participants (80.4 percent), and κ was 0.59 (95 percent CI 0.53–0.64). Sensitivity relative to self-report was 68.9 percent (95 percent CI 63.8–74.0 percent), specificity was 88.3 percent (95 percent CI 85.4–91.2 percent), positive predictive value was 80.2 percent (95 percent CI 75.5–84.9 percent) and negative predictive value was 80.5 percent (95 percent CI 77.0–84.0 percent). Increasing the algorithm's threshold to two or more visits lowered κ, and marginally increased specificity with a substantial loss in sensitivity.

Figure 2.

Receiver-Operating-Characteristic Curve for the Algorithm to Predict Whether Patients Received Specialist Care, Compared with Self-Report.

Each point represents the sensitivity and specificity of the algorithm with the indicated number of visits required to assign specialist care.

Algorithms to Assign Participants to Regular Providers of Care

The concordance between administrative data algorithms and the self-reported regular providers of care is shown in Table 2. Many of the algorithms had quite good concordance. For example, the algorithm using the largest number of visits was concordant with self-report for the regular primary care physician in 82.6 percent of cases: in 82.2 percent where both agreed on the same regular primary care physician, and in 0.4 percent where they both agreed that the participant had no regular primary care physician. In 9.7 percent of cases, the algorithm assigned a different primary care physician than the one reported by the participant, in 5.4 percent the participant reported a physician but the algorithm did not assign one, and in 2.3 percent the algorithm assigned a regular primary care physician, but the participant reported not having one. For identification of diabetes specialists, the algorithm assigning the specialist who had the greatest number of visits (but only if there were at least two) had the best concordance. This algorithm assigned the same specialist as self-report in 20.2 percent of cases, and both agreed that the participant had no regular specialist for 58.3 percent of participants, for an overall 78.5 percent concordance rate. For 1.2 percent of participants, the algorithm assigned a different specialist than that reported by the participant, for 17.0 percent the participant reported a specialist but the algorithm did not assign one, and for 3.3 percent the algorithm assigned a regular specialist, but the participant reported not having one.

Table 2.

Concordance between Administrative Data Algorithms and Self-Report to Identify Regular Providers of Primary and Specialist Care among all Participants

| Algorithm | Primary Care Practitioners (%) | Specialists (%) |
|---|---|---|
| Largest number of visits | 82.6 | 78.2 |
| Majority of visits | 81.7 | 78.2 |
| Largest number of visits, minimum of two | 79.3 | 78.5 |
| Largest number of visits, minimum of three | 74.1 | 71.2 |
| Largest number of visits, minimum of four | 67.2 | 64.5 |
| Largest number of visits, minimum of five | 58.5 | 63.3 |
| Largest amount billed | 81.8 | 77.7 |
| Majority of amount billed | 79.4 | 77.6 |

Among participants aged 65 or older for whom prescription data were available, the algorithms using prescription data only had less concordance than the algorithms using physician claims data (Table 3). Combining the prescription and physician claims data improved concordance for identifying the regular primary care provider from 82.7 percent using visits data only to 84.9 percent using both data sources. However, the best concordance for identifying the regular specialist was achieved with visits data only.

Table 3.

Concordance between Administrative Data Algorithms and Self-Report to Identify Regular Providers of Primary and Specialist Care among Seniors

| Algorithm | Primary Care Practitioners (%) | Specialists (%) |
|---|---|---|
| Largest number of visits | 82.7 | 80.6 |
| Majority of visits | 80.9 | 80.6 |
| Largest number of visits, minimum of two | 78.8 | 82.0 |
| Largest number of visits, minimum of three | 76.3 | 77.7 |
| Largest number of visits, minimum of four | 72.3 | 73.0 |
| Largest number of visits, minimum of five | 64.4 | 71.9 |
| Largest amount billed | 81.3 | 80.2 |
| Majority of amount billed | 78.4 | 80.2 |
| Largest number of prescriptions | 76.6 | 77.3 |
| Majority of prescriptions | 76.6 | 77.3 |
| Visits, or prescriptions if visits = 0 | 84.9 | 78.8 |
| Visits, or prescriptions if visits ≤1 | 83.1 | 78.8 |
| Visits, or prescriptions if visits ≤2 | 82.7 | 78.8 |

Using 2003 physician claims data instead of 2002, we reevaluated those participants for whom the first algorithm, using the largest number of visits, assigned a different regular primary care physician (n = 76) and specialist (n = 17) than the one reported on the questionnaire. When doing so, 31 (41 percent) became concordant for the primary care physician and six (35 percent) for the specialist.

DISCUSSION

Compared with what patients themselves report, the administrative data algorithms described in this study accurately predict ambulatory physician utilization by people with diabetes. These algorithms could be used to describe the sources of care for diabetic patients, to examine temporal or geographic variations in physician utilization, or to measure continuity of care. They could also be used to study the influence of various care models, continuity of care, provider specialties, or other provider characteristics (such as age or sex) on quality of care and outcomes. To carry out such studies using administrative data, it is critical to know how to accurately assign people to different sources of care, so that the health care and policy decisions derived from the research are sound. This study is among the first to validate administrative data sources to measure ambulatory physician utilization, and these algorithms could serve as a model for studying other chronic diseases using administrative data.

The administrative data algorithm to predict the receipt of specialist care performed best with a threshold of one claim from a specialist, and had moderate agreement with self-report. Higher thresholds resulted in slight improvements in specificity at the expense of much worse sensitivity, suggesting that many participants had only one visit with a specialist. The algorithm may under-detect specialist care compared with self-report because visits with specialists who are not paid under fee-for-service models would not be captured. Alternatively, it may over-detect specialist care because any specialist visit would be counted, whether the patient was seeing the specialist for diabetes or some other medical problem.

To assign the regular providers of care, the algorithm using the largest number of visits with no threshold had very good concordance. Although other algorithms had slightly better concordance for assigning the specialist, or for assigning physicians among patients aged 65 or older, this algorithm was consistently good in all the scenarios. In general, the algorithms had better concordance with assigning primary care physicians than specialists. This may be because patients often have more visits with primary care providers than with specialists, so the concept of a “regular” provider is more appropriate in primary care. Algorithms using prescriptions data underperformed compared with those using physician visit data, perhaps because of misidentification of the prescribing physician by pharmacists.

Self-report is an imperfect gold standard for defining ambulatory care, so disagreement between data sources in our study may be due to mistakes made by participants on the questionnaire rather than inherent inaccuracies of the algorithms. Previous investigators have examined concordance between questionnaires and other sources of data (Harlow and Linet 1989). In general, agreement is good for major events like hospitalizations or surgery, but can be poor for diagnostic procedures, chronic disease diagnoses, or medications previously taken. Several other Canadian studies have examined concordance between self-reported health care utilization and administrative data sources. Raina et al. (2002) examined the agreement between discharge abstract and physician service claims databases and self-reported utilization in a survey of randomly selected seniors. Concordance between data sources was good for hospital stay in the previous 12 months, general practitioner utilization and specialist utilization, but κ (agreement beyond chance) was poor for the latter two measures. The agreement we found for diabetes specialist utilization was substantially higher, perhaps because patients with a chronic disease may be better able to recall visits to a physician from a specific specialty for that specific disease. However, another study compared self-reported mental health service use in a national population-based survey with physician service claims for people who reported depression, and found moderate to low agreement for both any utilization and volume of utilization (Rhodes and Fung 2004). Both of these studies examined utilization of health services in general; unlike our study, neither identified the specific physicians patients considered their regular providers.

There are several possible reasons why self-report may have been inaccurate in our questionnaire. The questionnaire asked about physician utilization in 2002, but was administered up until the end of 2004, so participants may have incorrectly recalled their physician use. Incorrect recall was shown to be a potential cause for discordance among those for whom the algorithm assigned a different physician than the one participants indicated as their regular provider: when we reapplied the algorithm using 2003 physician service claims for these patients, it became concordant in more than one-third of cases. Some participants may have incorrectly understood their physicians' specialties: for example, 55 participants (7 percent) identified a physician with another specialty as their regular diabetes specialist. For these participants, the algorithms could not be concordant. Finally, participants may have had a physician whom they considered their regular physician when asked, even if they did not actually visit that physician during 2002.

Ultimately, despite these limitations, self-reported ambulatory care utilization is still the best comparator for the algorithms used in this study. While a medical record audit could be an alternative source of information about ambulatory physician encounters, it would likely be inadequate, as no single chart would completely document all of a patient's physician utilization. Moreover, only patients themselves can report who they perceive to be their “regular” physicians.

These algorithms have only been validated using Ontario administrative data, but the results may be generalizable to administrative data in other jurisdictions. The proposed algorithms could also be modified to examine specialty care for other diseases, or to identify regular primary care providers for patients without any specific chronic disease. Furthermore, the algorithms could be modified to account for other differences in local practice patterns, such as by including internists among primary care providers in places where they provide this care. Although the algorithms' validity in these situations is not known, this study does show that simple administrative data algorithms can closely match what patients report about their outpatient physician care. The study offers a methodologic approach that could be used by other investigators wishing to study outpatient care for other diseases or in other settings.

Acknowledgments

This study was supported by grants from the Canadian Diabetes Association in honor of the late Dorothy Ernst, and the Banting and Best Diabetes Centre at the University of Toronto. We also gratefully acknowledge the assistance of Zana Mariano, Daniel Hackam, and the Ontario Pharmacists' Association in the completion of this study. The Canadian Institutes of Health Research supports Dr. Shah with a Clinician–Scientist Award and Dr. Laupacis as a Senior Scientist. Dr. Booth was supported by a St. Michael's Hospital/University of Toronto/GlaxoSmithKline Junior Faculty Scholarship in Endocrinology during this work and currently holds a scholarship in Innovative Health Systems Research through the Physicians' Services Incorporated Foundation of Ontario.

REFERENCES

- Boyle JP, Honeycutt AA, Narayan KM, Hoerger TJ, Geiss LS, Chen H, Thompson TJ. Projection of Diabetes Burden through 2050: Impact of Changing Demography and Disease Prevalence in the U.S. Diabetes Care. 2001;24(11):1936–40. doi: 10.2337/diacare.24.11.1936.

- Fowles JB, Fowler EJ, Craft C. Validation of Claims Diagnoses and Self-Reported Conditions Compared with Medical Records for Selected Chronic Diseases. Journal of Ambulatory Care Management. 1998;21(1):24–34. doi: 10.1097/00004479-199801000-00004.

- Fowles JB, Lawthers AG, Weiner JP, Garnick DW, Petrie DS, Palmer RH. Agreement between Physicians' Office Records and Medicare Part B Claims Data. Health Care Financing Review. 1995;16(4):189–99.

- Harlow SD, Linet MS. Agreement between Questionnaire Data and Medical Records: The Evidence for Accuracy of Recall. American Journal of Epidemiology. 1989;129(2):233–48. doi: 10.1093/oxfordjournals.aje.a115129.

- Harris MI, Cowie CC, Stern MP, Boyko EJ, Reiber GE, Bennett PH. Diabetes in America. Washington, DC: National Institutes of Health, National Institute of Diabetes and Digestive and Kidney Diseases; 1995.

- Hux JE, Ivis F, Flintoft V, Bica A. Diabetes in Ontario: Determination of Prevalence and Incidence Using a Validated Administrative Data Algorithm. Diabetes Care. 2002;25(3):512–6. doi: 10.2337/diacare.25.3.512.

- Jaakkimainen L, Shah BR, Kopp A. Sources of Physician Care for People with Diabetes. In: Hux JE, Booth GL, Slaughter PM, Laupacis A, editors. Diabetes in Ontario: An ICES Practice Atlas. Toronto: Institute for Clinical Evaluative Sciences; 2003. pp. 9.181–92.

- Quam L, Ellis LBM, Venus P, Clouse J, Taylor CG, Leatherman S. Using Claims Data for Epidemiologic Research: The Concordance of Claims-based Criteria with the Medical Record and Patient Survey for Identifying a Hypertensive Population. Medical Care. 1993;31(6):498–507.

- Raina P, Torrance-Rynard V, Wong M, Woodward C. Agreement between Self-Reported and Routinely Collected Health-Care Utilization Data among Seniors. Health Services Research. 2002;37(3):751–74. doi: 10.1111/1475-6773.00047.

- Rhodes AE, Fung K. Self-Reported Use of Mental Health Services versus Administrative Records: Care to Recall? International Journal of Methods in Psychiatric Research. 2004;13(3):165–75. doi: 10.1002/mpr.172.

- Steele LS, Glazier RH, Lin E, Evans M. Using Administrative Data to Measure Ambulatory Mental Health Service Provision in Primary Care. Medical Care. 2004;42(10):960–5. doi: 10.1097/00005650-200410000-00004.

- Studney DR, Hakstian AR. A Comparison of Medical Record with Billing Diagnostic Information Associated with Ambulatory Medical Care. American Journal of Public Health. 1981;71(2):145–9. doi: 10.2105/ajph.71.2.145.