Abstract

Objective

To evaluate the accuracy of household survey estimates of the size and composition of the nonelderly population covered by nongroup health insurance.

Data Sources/Study Setting

Health insurance enrollment statistics reported to New Jersey insurance regulators. Household data from the following sources: the 2002 Current Population Survey (CPS)-March Demographic Supplement, the 1997 and 1999 National Surveys of America's Families (NSAF), the 2001 New Jersey Family Health Survey (NJFHS), a 2002 survey of known nongroup health insurance enrollees, and a small 2004 survey testing alternative health insurance question wording.

Study Design

To assess the extent of bias in estimates of the size of the nongroup health insurance market in New Jersey, enrollment trends in official statistics reported by insurance carriers to state insurance regulators are compared with estimates from three general population household surveys. Next, to evaluate possible bias in the demographic and socioeconomic composition of the New Jersey nongroup market, distributions of characteristics of the enrolled population are contrasted among general household surveys and a survey of known nongroup subscribers. Finally, based on inferences drawn from these comparisons, alternative health insurance question wording was developed and fielded in a local survey to test the potential for misreporting enrollment in nongroup coverage in a low-income population.

Data Collection/Extraction Methods

Data for nonelderly New Jersey residents from the 2002 CPS (n = 5,028) and the 1997 and 1999 NSAF (n = 6,467 and 7,272, respectively) were obtained from public sources. The 2001 NJFHS (n = 5,580 nonelderly) was conducted for a sample drawn by random digit dialing and employed computer-assisted telephone interviews and trained, professional interviewers. Sampling weights are used to adjust for under-coverage of households without telephones and other factors. In addition, a modified version of the NJFHS was administered to a 2002 sample of known nongroup subscribers (n = 1,398) using the same field methods. Subscriber lists were provided by four of the five largest New Jersey nongroup insurance carriers, which represented 95 percent of all nongroup enrollees in the state. Finally, a modified version of the NJFHS questionnaire was fielded using similar methods as part of a local health survey in New Brunswick, New Jersey, in 2004 (n = 1,460 nonelderly).

Principal Findings

General household sample surveys, including the widely used CPS, yield substantially higher estimates of nongroup enrollment compared with administrative totals and yield estimates of the characteristics of the nongroup population that vary greatly from a survey of known nongroup subscribers. A small survey testing a question about source of payment for direct-purchased coverage suggests that many public coverage enrollees report nongroup coverage.

Conclusions

Nongroup health insurance has been subject to more than a decade of reform and is of continuing policy interest. Comparisons of unique data from a survey of known nongroup subscribers and administrative sources to household surveys strongly suggest that the latter overstate the number and misrepresent the composition of the nongroup population. Research on the nongroup market using available sources should be interpreted cautiously and survey methods should be reexamined.

Keywords: Health insurance, nongroup insurance, health care surveys

The individual or nongroup health insurance market has played a prominent role in recent policy discussions seeking ways to improve the efficiency and equity with which health insurance is obtained in the United States (Pauly and Percy 2000; McClellan and Baicker 2002; Pauly and Nichols 2002). In particular, the nongroup market has been viewed by some as an alternative to the existing system of employment-based health insurance, one that would provide increased health plan choice to enrollees, portability of coverage, and enhanced competition among health insurers. Additionally, the nongroup market has been the focus of proposals to expand health insurance coverage to the uninsured population through the use of tax credits as in the Fair Care for the Uninsured Act of 2003 and the Health Coverage Tax Credits Program of the Trade Act of 2002. Finally, beginning in the early 1990s, about half the states implemented nongroup insurance regulatory reforms intended to improve access to coverage in the individual health insurance market through a variety of enrollment and rate reform provisions.

At the same time, the nongroup health insurance market presents a number of inherent challenges (Pauly and Nichols 2002). Because the decision to enroll in nongroup coverage is made by individuals, this market tends to have high administrative costs and is vulnerable to adverse risk selection. Except where proscribed by regulation, insurer business practices in this market leave many who arguably need coverage the most, such as those with chronic conditions, with access only to coverage that is extremely costly, has high cost sharing and limited benefits, and excludes preexisting conditions, or with no coverage at all. Business cycles that favorably affect the premiums and enrollment in employer-sponsored coverage compound instability in the nongroup market as individuals face shifting opportunities to obtain generally more affordable group coverage (Chollet 2004; Monheit et al. 2004). In times of economic expansion, tight labor markets may lead more employers to offer employer-sponsored health insurance to comparatively young workers, drawing them out of the nongroup market and leaving that market with a comparatively higher-cost enrolled population. Finally, as a “bridge” or “residual” health insurance for those with interruptions in coverage from other sources and a long-term source of coverage for few, the nongroup market is small, enrolling less than 7 percent of the nonelderly population (Fronstin 2004).

Given this broad interest in nongroup insurance and its relatively small and unstable enrollment, there is a need for precise data on enrollment to evaluate the performance of this market and its response to various policy initiatives. To date, most studies of nongroup enrollment rely on household surveys, most commonly the federal government's Current Population Survey (CPS). However, recent analyses of the CPS disclose some unsettling findings. For instance, despite its substantial out-of-pocket cost, 27 percent of enrollees with individually purchased insurance reported incomes less than $20,000 in 2003 (Fronstin 2004). Moreover, work by LoSasso and Buchmueller (2004) evaluating the impact of the State Children's Health Insurance Program (SCHIP) suggested that parents of low-income children enrolled in SCHIP tend to misreport such coverage as private nongroup insurance. The authors conclude that such measurement error leads to an overstatement of SCHIP crowd-out of private insurance and an understatement of SCHIP enrollment. Consistent with this observation is the well-documented undercount of Medicaid enrollment in household surveys compared with administrative totals. While research to date has not pointed to misreporting of nongroup coverage by public program enrollees, a few studies have examined possible sources of the Medicaid undercount in depth (Call et al. 2002; SHADAC 2004). These factors raise the question of how well household respondents report nongroup coverage and point to the critical implication of such measurement error not only for the evaluation of individual market performance but also for studies of public coverage expansions.

In this paper, we address this measurement issue through the application of unique data on enrollees in nongroup coverage in the New Jersey Individual Health Coverage Program (IHCP) and compare our findings with enrollment estimates from several household surveys. One motivation for our study has been our observation of a wide disparity between estimates from household surveys and enrollment statistics compiled by state insurance regulators. The magnitude of this difference cannot be explained by previously documented methodological differences in the survey reference period between our data and that of the CPS. In general, we find that household survey estimates overstate nongroup health coverage enrollment 3.5 to fourfold compared with administrative statistics reported to state regulators, and we find that the characteristics of nongroup enrollees from household surveys differ significantly from a survey of a sample of enrollees obtained directly from nongroup carriers. The latter provides evidence consistent with the hypothesis that public coverage is frequently misreported as nongroup insurance. Our findings suggest that applying conventional household survey methods to analyses of the nongroup insurance market will lead to flawed conclusions about market performance, and that survey methods should be modified to assure unbiased estimates of nongroup coverage.

DATA AND METHODS

To examine how accurately household surveys measure the number and characteristics of persons with nongroup health insurance coverage in New Jersey, we contrast survey-based enrollment estimates to official administrative data. We also compare estimates of the demographic and socioeconomic composition of the New Jersey nongroup market from household surveys using general population samples with estimates from a survey of families of known nongroup coverage subscribers. Differences between survey estimates are compared using two-tailed t-tests.
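As a rough illustration of how such a comparison can be approximated from published figures alone, the sketch below recovers standard errors from 95 percent confidence intervals and forms a large-sample test statistic for the difference between two independent estimates. It uses a normal approximation and is not necessarily the exact procedure applied in this study; the function names are illustrative, and the example values are the shares aged 55–64 reported in Table 2.

```python
import math

def se_from_ci(lower, upper, z=1.96):
    """Recover a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)

def two_sided_test(est_a, se_a, est_b, se_b):
    """Test statistic and two-sided p-value for the difference between two
    independent estimates (normal approximation; an assumption of this sketch)."""
    z = (est_a - est_b) / math.sqrt(se_a ** 2 + se_b ** 2)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p

# Percent of nongroup enrollees aged 55-64, with 95% CIs (Table 2)
cps_est, cps_se = 20.2, se_from_ci(12.8, 27.6)
ihcp_est, ihcp_se = 39.8, se_from_ci(36.6, 43.0)

z, p = two_sided_test(cps_est, cps_se, ihcp_est, ihcp_se)
print(f"z = {z:.2f}, p = {p:.2g}")
```

With these inputs the difference is well beyond the conventional 0.05 threshold, which agrees with the significance marker shown for that row of Table 2.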

Table 1 describes the surveys used in these contrasts.1 Differences in the number and composition of nongroup enrollees can stem from many survey design features, and our analysis uses a variety of sources to narrow the possible explanations for differences in estimates. Each of the surveys draws on a bank of detailed coverage enrollment questions to determine each person's source of coverage, if any. The CPS asks about coverage held in the prior year, and the other surveys ask about coverage at a point in time. The median spell of nongroup coverage is less than a year (8 months in a recent national study by Ziller et al. 2004), thus annual enrollment estimates will be higher than point-in-time measures.

Table 1.

Survey Data Sources

| Data Source, Reference Year, and Sponsor | Sampling | Principal Mode* | Response Rate† (%) | Total NJ Nonelderly Sample | NJ Nongroup Sample | Coverage Time Frame |
|---|---|---|---|---|---|---|
| Current Population Survey (CPS), 2001‡; U.S. Census Bureau and Department of Labor | Area probability | In-person and telephone | 83.2 | 5,028 | 216 | Prior year |
| National Survey of America's Families (NSAF), 1997 and 1999; The Urban Institute | Random digit dial | Telephone | 54.2 (1997); 50.6 (1999) | 6,467 (1997); 7,272 (1999) | 318 (1997); 254 (1999) | Current |
| New Jersey Family Health Survey (NJFHS), 2001; Rutgers Center for State Health Policy | Random digit dial | Telephone | 59.3 | 5,580 | 211 | Current |
| Individual Health Coverage Program (IHCP) Supplement, 2002; Rutgers Center for State Health Policy | Carrier lists§ | Telephone | 52.0 | 1,398 | 882 | Current |

\* The NSAF and NJFHS employ strategies to minimize bias from the exclusion of households without telephones; the NSAF includes a small in-person component and the NJFHS employs a telephone history weight adjustment.

† Based on New Jersey sample only, except the CPS, which is a national response rate. The CPS response rate reflects cumulative nonresponse for the CPS and the Annual Demographic Supplement (personal communication, askcensus@custhelp.com, October 13, 2005).

‡ March 2002 Annual Demographic Supplement; data from the 1998 to 2001 CPS Annual Demographic Supplements are also used.

§ Based on enrollment lists provided by four of the five largest carriers in the New Jersey nongroup market, representing 95% of total market enrollment.

Health insurance questions in all the surveys that we examine are similar. Survey questions refer to insurance purchased directly from a carrier rather than to nongroup insurance per se. The CPS determines enrollment in this type of coverage by asking: “At any time during 2001, (were you/was anyone in this household) covered by a plan that [you/they] PURCHASED DIRECTLY, that is, not related to current or past employer?” (Bureau of Labor Statistics and Bureau of the Census 1996). The New Jersey Family Health Survey (NJFHS) and National Survey of America's Families (NSAF) use a similar formulation: “At this time, is anyone in your family covered by a health plan that is purchased directly from an insurance company or HMO, that is, not from a current or past job?”2

The first three surveys described in Table 1 use population-based sampling techniques. The sample for the fourth survey is drawn from a list of known subscribers in New Jersey's nongroup market, known as the IHCP. Four of the five carriers with the greatest enrollment in the IHCP supplied complete lists of nongroup subscribers as of 2002. Collectively, these carriers insured 95 percent of all IHCP-covered lives at the time of sampling. A stratified random sample of IHCP subscribers was drawn by carrier, with a modest over-sample of subscribers of the two smaller carriers.
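The list-based design described above can be sketched as a stratified draw with a higher sampling fraction in the smaller strata. The carrier names, list sizes, and sampling fractions below are hypothetical placeholders, not the values used in the IHCP Supplement; the point is only to show how over-sampling changes the design weight attached to each sampled subscriber.

```python
import random

def stratified_sample(frame, rates, seed=0):
    """Draw a simple random sample within each stratum.

    frame: dict mapping stratum name -> list of subscriber IDs
    rates: dict mapping stratum name -> sampling fraction in (0, 1]
    Returns a dict mapping stratum name -> (sampled IDs, design weight).
    """
    rng = random.Random(seed)
    out = {}
    for stratum, ids in frame.items():
        n = max(1, round(len(ids) * rates[stratum]))
        sample = rng.sample(ids, n)
        # Design weight is the inverse of the realized selection probability
        out[stratum] = (sample, len(ids) / n)
    return out

# Hypothetical carrier lists (IDs only) and sampling fractions
frame = {
    "carrier_A": list(range(0, 5000)),
    "carrier_B": list(range(5000, 8000)),
    "carrier_C": list(range(8000, 8600)),  # smaller carrier, over-sampled
    "carrier_D": list(range(8600, 9000)),  # smaller carrier, over-sampled
}
rates = {"carrier_A": 0.02, "carrier_B": 0.02, "carrier_C": 0.05, "carrier_D": 0.05}

samples = stratified_sample(frame, rates)
for name, (ids, weight) in samples.items():
    print(name, len(ids), round(weight, 1))
```

Because over-sampled strata carry smaller weights, weighted totals still reproduce the size of the full list even though the smaller carriers contribute a disproportionate share of interviews.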

The survey instrument for this sample of known IHCP subscribers was based on the main NJFHS questionnaire. The two surveys included identical coverage questions with the exception that IHCP sample respondents were asked first to confirm that they were covered by the carrier that supplied their name and were then asked whether any member of the household obtained coverage from other sources (including other coverage purchased directly from an insurance carrier), while the general NJFHS questionnaire simply asked whether respondents and other household members obtained coverage from each possible source of coverage (including directly from an insurance carrier). Persons in the IHCP sample are identified as having nongroup insurance using these questions and include family members reported as having nongroup coverage whether or not they appear on the carriers' subscriber lists. This method of classifying coverage status assures that dependents of subscribers are included in estimates of the nongroup population; names of dependents were not available on the subscriber lists. This method also permitted the measurement of family characteristics (e.g., household income) including family members not enrolled in nongroup coverage. In total, the IHCP Supplement included 1,398 nonelderly adult family members, of whom 882 were reported with nongroup coverage.

The CPS uses in-person interviewing and has a very high response rate. The NSAF, conducted by the Urban Institute, and the NJFHS and IHCP Supplement, conducted by the Rutgers Center for State Health Policy, relied on telephone interviewing and obtained response rates between 50 and 60 percent. The NSAF also employed a supplemental area probability sample to ensure inclusion of households without landline telephones. Variations in mode of administration, sample sizes, and response rates across the surveys could affect the precision and bias of estimates in uncertain ways. However, the standardization of survey questions between population sample surveys and the survey of known nongroup enrollees offers a strong basis for drawing inferences about the implications of these survey techniques.

In each of the surveys, more than one source of coverage could be identified for each person. Given its year-long time frame, it is likely that some individuals in the CPS did have both nongroup and public coverage during the year. Having both public and private coverage at a point in time, the reference period for the NSAF, NJFHS, and IHCP sample, is much less likely. In our analyses, we apply a coverage hierarchy to identify a single source of coverage for each individual. Individuals are coded as having employer-sponsored coverage if they report that source regardless of whether other sources are reported as well, whereas individuals reported with both direct-purchase and public coverage are coded as having public coverage. This hierarchy will minimize estimated overstatement of nongroup coverage among public enrollees. More generally, given the year-long time frame of the CPS, applying the hierarchy will also minimize any over-count in the CPS estimate of total nongroup insurance enrollment. Separately, we evaluate the extent of double reporting of nongroup and public coverage in the CPS, NJFHS, and IHCP Supplement to shed light on possible confusion that respondents may have about these sources of coverage.
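The coverage hierarchy can be expressed as a simple priority rule. The sketch below is illustrative only: the category labels are invented for the example, and the treatment of persons with no reported source as "uninsured" is an assumption, since the text specifies only the ordering among employer-sponsored, public, and direct-purchase coverage.

```python
# Priority order taken from the text: employer-sponsored coverage first,
# then public coverage, then direct-purchase (nongroup) coverage.
HIERARCHY = ("employer", "public", "direct_purchase")

def assign_coverage(reported_sources):
    """Collapse a person's reported coverage sources to a single category."""
    for category in HIERARCHY:
        if category in reported_sources:
            return category
    return "uninsured"  # assumption: no reported source means uninsured

print(assign_coverage({"direct_purchase", "public"}))    # public
print(assign_coverage({"employer", "direct_purchase"}))  # employer
print(assign_coverage({"direct_purchase"}))              # direct_purchase
```

Under this rule a person reported with both direct-purchase and public coverage never counts toward the nongroup total, which is why the hierarchy works against finding an overstatement of nongroup enrollment.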

Three other sources of information are used in this analysis. We obtained counts of total enrollment in the IHCP from regulatory reports maintained by the New Jersey Department of Banking and Insurance and counts of enrollees in NJ FamilyCare (New Jersey's SCHIP initiative) from the New Jersey Department of Human Services. IHCP enrollment counts are based on year-end enrollment statistics (i.e., counts of those enrolled in December) for 1997–2001 reported by carriers to state regulators and NJ FamilyCare enrollment statistics are similarly based on enrollment data for 1998–2001. Finally, we also draw on data from a 2004 survey of 595 families (including 1,460 nonelderly individuals) in New Brunswick, New Jersey.3 New Brunswick is a disproportionately low-income community with a large number of immigrants, affording a good opportunity to test a simple method for identifying cases in which public coverage may have been misreported as directly purchased private insurance. Specifically, following coverage questions identical to the NJFHS, the New Brunswick survey asked respondents who reported direct purchase coverage, “Is that coverage part of a program such as NJ FamilyCare or Medicaid?”

The sample for the New Brunswick survey was drawn by random-digit dialing with a small supplemental area-probability sample for households without landline phones in two Census tracts with the lowest landline penetration. Interviews were conducted by telephone (4 percent of interviews were completed by cell phone among respondents without landlines), and achieved a response rate of 52.3 percent.

Estimates from the surveys analyzed in this study use sampling weights to adjust for differential probabilities of selection resulting from the sample designs, to compensate for sample frame under-coverage (e.g., households without landline telephones), and to improve the overall representativeness of survey estimates. Weights compensate for design-based differences in the probability of selection, for example, when some groups are intentionally over-sampled. In addition, because sampling for the NSAF, NJFHS, and the New Brunswick surveys was based on telephone lines, additional weight adjustments are used. Specifically, weighting strategies adjust for differences in the probability of selection by sampling method (i.e., random digit dialing or area-probability sampling in the NSAF and New Brunswick survey) and the number of landlines used for voice communication in each sampled household.
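A minimal sketch of the telephone-line adjustment follows, assuming a simple random-digit-dial design in which a household's chance of selection is proportional to its number of voice landlines. The frame and sample sizes are hypothetical, and the actual weighting procedures of the NSAF, NJFHS, and New Brunswick surveys involve further steps not shown here.

```python
def base_weight(frame_size, sample_size, voice_lines=1):
    """Inverse-probability base weight for a household reached by RDD.

    A household with k voice landlines is k times as likely to be dialed,
    so its selection probability is multiplied by k and its weight shrinks.
    """
    if voice_lines < 1:
        raise ValueError("an RDD household needs at least one voice line")
    p_select = (sample_size / frame_size) * voice_lines
    return 1.0 / p_select

# Hypothetical frame of 3,000,000 telephone numbers and 3,000 sampled numbers
one_line = base_weight(3_000_000, 3_000, voice_lines=1)
two_lines = base_weight(3_000_000, 3_000, voice_lines=2)
print(one_line, two_lines)
```

Households with two voice lines receive half the weight of single-line households, offsetting their doubled chance of being dialed.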

In addition to adjusting for the probability of selection and sample frame under-coverage, weights are also used to reduce the impact of nonresponse bias in surveys with significant non-response. In this technique, weights are recalibrated to yield “known” population totals by specific demographic characteristics, a procedure sometimes known as “poststratification adjustment.” The NJFHS and NSAF use poststratification adjustments to calibrate to population estimates by age, sex, and race/ethnicity at the county or state level, respectively. The CPS and New Brunswick surveys poststratify to demographic distributions in the Decennial Census. While weight calibration is designed to reduce bias associated with known population characteristics, such methods may not adequately adjust for bias that stems from different propensities to respond to surveys among populations with different sources of coverage. While we have no a priori reason to suspect that response propensity varies by source of coverage, we are not aware of any evidence to shed light on this possible source of nonresponse bias.
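In its simplest form, poststratification scales the weights within each demographic cell so that the weighted count matches an external control total. The sketch below shows that one-step ratio adjustment; the cell labels, weights, and control totals are hypothetical, and production surveys often use iterative variants (for example, raking across several margins) that are not shown.

```python
from collections import defaultdict

def poststratify(records, control_totals):
    """Scale weights so each cell's weighted count equals its control total.

    records: list of dicts with keys 'cell' and 'weight'
    control_totals: dict mapping cell -> known population total
    Returns a new list of records with adjusted weights.
    """
    weighted = defaultdict(float)
    for r in records:
        weighted[r["cell"]] += r["weight"]

    adjusted = []
    for r in records:
        factor = control_totals[r["cell"]] / weighted[r["cell"]]
        adjusted.append({**r, "weight": r["weight"] * factor})
    return adjusted

# Hypothetical respondents and control totals
records = [
    {"id": 1, "cell": "female_19_34", "weight": 100.0},
    {"id": 2, "cell": "female_19_34", "weight": 150.0},
    {"id": 3, "cell": "male_19_34", "weight": 200.0},
]
control_totals = {"female_19_34": 300.0, "male_19_34": 180.0}

for r in poststratify(records, control_totals):
    print(r["id"], r["cell"], round(r["weight"], 1))
```

The adjustment guarantees agreement only on the characteristics used to define the cells, which is why it cannot by itself correct for response propensities that differ by source of coverage.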

While weight recalibration is designed to reduce nonresponse bias, Canty and Davison (1999) observed that such recalibration frequently relies on the assumption that the resulting weights are fixed by the sampling design when, in fact, such weights are random as they depend on the particular sample that has been drawn. As a result, such randomness or uncertainty should be accounted for in obtaining standard errors for weighted estimates of population totals and proportions. Otherwise, the estimated standard errors can be biased downward. Consequently, we derive an adjustment factor based on work by Canty and Davison (found in Table 2 of their paper) to inflate standard errors for our estimates from the NSAF and NJFHS. Specifically, we inflate standard errors by a factor of 1.148 for our estimates of population proportions and by 1.065 for estimates of population totals in these surveys.4 This procedure is not used for estimates from the IHCP sample because demographic weight recalibration was not used in that survey, and it was not applied to CPS or the New Brunswick survey because weights for those surveys were poststratified to the Decennial Census rather than sample-based demographic data.
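The effect of the inflation factors on a reported interval can be sketched as follows. The two factors are those stated in the text; the point estimate and unadjusted standard error are hypothetical, and the helper name is illustrative.

```python
PROPORTION_FACTOR = 1.148  # inflation factor for proportions (from the text)
TOTAL_FACTOR = 1.065       # inflation factor for totals (from the text)

def inflated_ci(estimate, se, factor, z=1.96):
    """95% confidence interval after inflating the standard error."""
    adjusted_se = se * factor
    return estimate - z * adjusted_se, estimate + z * adjusted_se

# Hypothetical proportion: 20.0 percent with an unadjusted SE of 2.0 points
naive = inflated_ci(20.0, 2.0, factor=1.0)
adjusted = inflated_ci(20.0, 2.0, factor=PROPORTION_FACTOR)
print("unadjusted:", tuple(round(x, 2) for x in naive))
print("adjusted:  ", tuple(round(x, 2) for x in adjusted))
```

The adjusted interval is wider by the same factor as the standard error, so comparisons involving the NSAF and NJFHS are somewhat more conservative than unadjusted intervals would imply.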

Table 2.

Comparison of Survey Estimates of Characteristics of Nonelderly New Jersey Nongroup Health Insurance Enrollees

| Characteristic | Current Population Survey, 2002 (95% CI) | New Jersey Family Health Survey, 2001 (95% CI) | IHCP Supplement, 2002 (95% CI)† |
|---|---|---|---|
| Total enrollment | 327,858* (259,574–396,142) | 293,111* (261,922–362,405) | 82,726 |
| Years of age (% distribution) | | | |
| Under 19 | 21.0 (15.8–26.2) | 25.4* (22.4–35.9) | 16.8 (14.3–19.2) |
| 19–34 | 17.4* (11.7–23.0) | 20.5* (17.2–29.8) | 9.6 (7.6–11.5) |
| 35–44 | 18.2 (12.8–23.6) | 16.9 (13.5–25.3) | 13.8 (11.5–16.0) |
| 45–54 | 23.3 (16.8–29.7) | 14.6 (11.3–22.3) | 20.1 (17.4–22.7) |
| 55–64 | 20.2* (12.8–27.6) | 22.5* (19.4–32.4) | 39.8 (36.6–43.0) |
| Percent female | 49.2* (43.3–55.2) | 55.7 (56.3–73.7) | 60.4 (57.2–63.7) |
| Race/ethnicity (% distribution) | | | |
| White, non-Hispanic | 75.9* (67.6–84.1) | 67.3 (69.9–84.6) | 86.5 (84.2–88.7) |
| Black, non-Hispanic | 6.8 (1.9–11.8) | 10.5* (7.2–16.8) | 3.0 (1.9–4.1) |
| Hispanic | 10.4 (4.9–15.9) | 11.8* (8.5–18.6) | 5.2 (3.7–6.6) |
| Other | 6.9 (1.5–12.3) | 10.5 (7.2–16.8) | 5.4 (3.9–6.9) |
| Education (% distribution)‡ | | | |
| Less than high school | 11.0* (5.4–16.7) | 7.0 (3.2–13.0) | 3.7 (2.4–5.2) |
| High school | 33.5 (24.9–42.0) | 35.3 (31.2–49.7) | 34.9 (31.3–38.3) |
| Some college | 20.9 (13.8–28.0) | 22.6 (17.9–34.1) | 16.8 (14.1–19.5) |
| College graduate | 34.6 (25.6–43.6) | 35.1 (31.1–49.5) | 43.2 (39.6–46.9) |
| Federal poverty level (% distribution) | | | |
| Under 200% | 19.4 (11.4–27.4) | 34.0* (31.6–46.4) | 14.7 (12.5–17.2) |
| 200–349% | 21.0 (12.8–29.2) | 33.2 (30.8–45.5) | 26.3 (23.3–29.1) |
| 350% and more | 59.6 (49.3–69.8) | 32.8* (30.3–45.0) | 58.9 (55.7–62.2) |

† Total enrollment is based on administrative reports (hence, a confidence interval is not calculated) and distributions of population characteristics are based on the IHCP sample survey (see text).

‡ Excludes individuals under 21 years old.

\* p < 0.05 compared with IHCP sample.

CI, confidence interval; IHCP, Individual Health Coverage Program.

Even with the application of poststratified weight recalibration, the possibility of nonresponse bias remains a concern in studies of this type. As noted, the NSAF, NJFHS, IHCP Supplement, and New Brunswick surveys have response rates between about 50 and 60 percent. To assess possible nonresponse bias in the 1997 NSAF, Groves and Wissoker (1999) used follow-up interviews with nonrespondents and comparisons with CPS demographic distributions and concluded that nonresponse bias is not a serious problem in that survey. They found slightly greater nonresponse among African Americans and that higher income households required more effort to obtain interviews. They further observed that weight adjustments for nonresponse corrected these discrepancies. Given that the household surveys used in this study use designs similar to the NSAF, the result of this detailed analysis by Groves and Wissoker is reassuring. Moreover, we do not expect differential nonresponse bias to affect our conclusions based on comparisons of the NJFHS and IHCP sample as these surveys used similar sampling and field methods and obtained similar levels of nonresponse.

FINDINGS

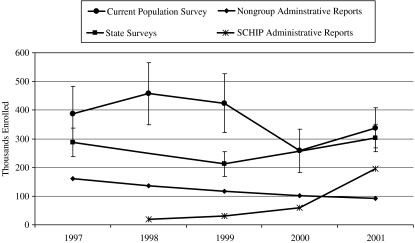

Figure 1 shows the 1997–2001 trend in New Jersey nongroup health insurance enrollment. Enrollment in SCHIP in New Jersey is also shown. According to administrative records, IHCP enrollment trended steadily downward during this period.5 New Jersey's SCHIP enrollment grew following its inception in 1998 as NJ KidCare, and accelerated considerably in 2000 when the program became NJ FamilyCare and began enrollment of adults.

Figure 1.

Trends in Survey Estimates of Nongroup Health Insurance Enrollment Compared with Administrative Reports of Nongroup and SCHIP Enrollment, New Jersey, 1997–2001.

Notes: The “State Surveys” are the National Survey of America's Families (1997 and 1999) and the New Jersey Family Health Survey (2001). Data from the Current Population Survey are shown for the reference year, see http://www.census.gov/hhes/www/hlthins/historicindex.html. Enrollees are under 65 years except for Administrative Reports, which include enrollees of any age.

There is wide variability in estimates of nongroup enrollment (enrollment estimates include subscribers and covered dependents) among the survey sources. CPS estimates of “direct purchase” or nongroup enrollment are above estimates from the NSAF and NJFHS for each year examined, although only significantly so for 1999, reflecting differences in the time frame of coverage questions. (Assuming an 8-month average duration of nongroup coverage discussed above, we expect that roughly two-thirds of those reporting this type of coverage in the CPS would have such coverage at any given point in time, making the CPS estimates fairly consistent with the point-in-time estimates from the NSAF and NJFHS.) Nongroup enrollment in the CPS rose in 1998 then declined by more than 100,000 through 2000–2001. Methodological changes in the CPS may explain some of this trend, but the forces underlying the trend remain unclear. For example, in 2000, a question confirming uninsured status was added for each person for whom no source of coverage was identified. This change led to a 3.8 percent increase in the population reported with nonemployer-sponsored private health insurance in 1999 (Nelson and Mills 2001). As well, the number of households sampled in the CPS increased in July 2001 by about 20 percent (Helwig, Ilg, and Mason undated), and CPS weights were recalibrated starting with data collected in 2000 to demographic totals from the 2000 Decennial Census, increasing population estimates from that survey (Bowler et al. undated). While these changes may have influenced estimates of the nongroup insured population over the study period, it is not possible to quantify their impact.
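The two-thirds figure in the parenthetical above is a back-of-envelope conversion from an annual to a point-in-time basis. The sketch below reproduces that arithmetic under the same simplifying assumption, namely that the share of annual reporters covered at any given moment equals average duration divided by 12 months. The function name is illustrative, and the CPS total used as an example is the 2002 figure from Table 2.

```python
def point_in_time_share(avg_duration_months, window_months=12):
    """Approximate share of annual reporters covered at a given point in time.

    Simplifying assumption of this sketch: coverage spells are spread evenly
    over the reference window, so the share is duration / window length.
    """
    return min(1.0, avg_duration_months / window_months)

share = point_in_time_share(8)
cps_annual = 327_858  # CPS total enrollment estimate from Table 2

print(f"share covered at a point in time: {share:.3f}")
print(f"implied point-in-time enrollment: {round(cps_annual * share):,}")
```

Even after this conversion, the implied point-in-time figure remains far above the administrative total of 82,726 reported in Table 2, so the reference period alone does not account for the gap.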

NSAF estimates of nongroup enrollment for 1997 and 1999 are about twice the level of IHCP administrative totals, but the trend in these estimates parallels the decline in enrollment reported by regulators. The 2001 NJFHS estimate shows a rise in nongroup enrollment of nearly one-third compared with the comparable 1999 NSAF estimate. This trend is at variance with the continued downward trend in IHCP administrative totals, but correlates with the sum of IHCP and NJ FamilyCare administrative reports.

Table 2 compares total enrollment estimates and characteristics of nongroup enrollees in the CPS and NJFHS with the number of IHCP enrollees reported by carriers to state regulators. Estimated total enrollment in the NJFHS and CPS is 3.5 and four times the number of enrollees reported in administrative data, respectively. Many differences between population characteristics reflected in the list-based IHCP sample and the general household surveys are of significant magnitude. The nongroup population in the IHCP list sample is significantly more likely to be near elderly and less likely to be young adult compared with both the CPS and NJFHS samples. The list sample is also disproportionately female, nonminority, and more highly educated compared with the general household surveys, although differences in sex and education do not reach a p < 0.05 level of significance in the comparison with the NJFHS sample. The IHCP sample also has a significantly higher income distribution compared with responses to identically worded income questions in the NJFHS sample. As a result, fewer low-income (under 200 percent of the federal poverty level) enrollees are present in the IHCP survey data. Income measures in the NJFHS and IHCP cannot be compared directly with the CPS estimates. The former use omnibus income questions which yield systematically lower distributions than the multiple-component income questions used in the CPS (Davern et al. 2005). Overall, compared with the characteristics of nongroup enrollees identified in a survey of known subscribers, those reporting nongroup coverage in general household surveys have characteristics more likely to be associated with public coverage (i.e., they are younger, poorer, and more likely to be minority).
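The overstatement ratios can be checked directly from the totals in Table 2. The short sketch below does only that arithmetic; the variable names are illustrative.

```python
admin_total = 82_726  # IHCP enrollment from administrative reports (Table 2)
survey_totals = {
    "CPS 2002": 327_858,
    "NJFHS 2001": 293_111,
}

for source, estimate in survey_totals.items():
    ratio = estimate / admin_total
    excess = estimate - admin_total
    print(f"{source}: {ratio:.2f} times the administrative total, "
          f"excess of {excess:,} persons")
```

The ratios come to roughly 4.0 for the CPS and 3.5 for the NJFHS, and the NJFHS excess is a little over 210,000 persons.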

It is likely that some individuals have both public coverage and private nongroup coverage over the course of a year, which would lead to accurate reports of both types of coverage in response to the CPS annual coverage questions. However, reporting both types of coverage should rarely occur in response to the point-in-time coverage questions of the NSAF and NJFHS. In the results reported above, we assigned only one source of coverage to each individual (individuals reported with any employer-sponsored coverage were assigned that type and persons reported with both direct purchase and public coverage were assigned public coverage). However, an assessment of reporting of coverage from multiple sources is informative. In the general NJFHS population, 13.5 percent of nonelderly individuals reported with direct purchase coverage were also reported to have Medicaid or SCHIP. In contrast, in the IHCP sample, only 0.3 percent of the nongroup population was also reported with state-sponsored public coverage. (Individuals reported with both direct purchase and Medicaid or SCHIP coverage are treated as public program enrollees in the analyses presented above). As it is illogical for individuals to hold both nongroup and Medicaid or SCHIP coverage simultaneously, the high rate of duplicate coverage in the general NJFHS is consistent with the notion that inaccurate responses were given to coverage questions. Double reporting of nongroup and public coverage amounts to only 21.0 percent of the total difference between the NJFHS and the known IHCP enrollment total, suggesting that assigning coverage hierarchically so that persons reporting both types of coverage are deemed to be covered by public insurance is an insufficient method to adjust for possible misreporting.

To narrow the range of possible explanations for the observed trends and differences in population characteristics, we added a follow-up question to the NSAF/NJFHS “direct purchase” question in a small 2004 survey fielded in New Brunswick, New Jersey, a small urban community with many low-income and immigrant residents. The results of this question wording experiment are shown in Table 3. A large proportion of respondents initially reporting direct-purchase coverage said that the coverage was in fact “part of a program such as NJ FamilyCare or Medicaid.” Based on this question, it appears that more than one in four adults and about 70 percent of children were initially incorrectly classified as nongroup enrollees.

Table 3.

Responses to Survey Questions Confirming Sources of “Direct Purchase” Health Insurance Coverage in a New Brunswick, New Jersey Sample, 2004

| | All Nonelderly | Nonelderly Adults | Children under 19 |
|---|---|---|---|
| Number (Unweighted) | 63 | 37 | 26 |
| Weighted Percent Distribution (95% CI) | | | |
| Family members initially reported with “Direct Purchase” but no Medicaid or SCHIP coverage | 100.0 | 100.0 | 100.0 |
| Confirmed “Direct Purchase” coverage | 62.2 (49.9–74.5) | 73.1 (58.1–88.1) | 30.1 (11.2–49.0) |
| Medicaid coverage | 28.5 (17.0–40.0) | 19.1 (5.0–32.5) | 56.0 (35.6–76.5) |
| SCHIP coverage | 9.3 (1.9–16.7) | 7.8 (0.0–16.8) | 13.9 (0.0–28.1) |

Source: 2004 Healthier New Brunswick Community Survey.

CI, confidence interval; SCHIP, State Children's Health Insurance Program.
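The shares of respondents initially misclassified as nongroup enrollees follow directly from the point estimates in Table 3. The short Python sketch below is illustrative only: it transcribes the weighted percentages from the table, adds the Medicaid and SCHIP shares for each group, and checks that each column sums to 100 percent. The names `TABLE3`, `reclassified_share`, and `distribution_total` are hypothetical helpers introduced for this example, and the confidence intervals are not used.

```python
# Weighted percent distributions transcribed from Table 3 (point estimates only).
TABLE3 = {
    "All nonelderly": {"confirmed": 62.2, "medicaid": 28.5, "schip": 9.3},
    "Nonelderly adults": {"confirmed": 73.1, "medicaid": 19.1, "schip": 7.8},
    "Children under 19": {"confirmed": 30.1, "medicaid": 56.0, "schip": 13.9},
}


def reclassified_share(row):
    """Percent first reported as direct purchase, then attributed to Medicaid or SCHIP."""
    return round(row["medicaid"] + row["schip"], 1)


def distribution_total(row):
    """Sum of the three response categories; should be 100.0 up to rounding."""
    return round(sum(row.values()), 1)


if __name__ == "__main__":
    for group, row in TABLE3.items():
        print(
            f"{group}: reclassified {reclassified_share(row)}%, "
            f"column total {distribution_total(row)}%"
        )
```

For adults the reclassified share is 26.9 percent (more than one in four), and for children it is 69.9 percent (about 70 percent), matching the figures cited in the text. Given the small unweighted counts and wide confidence intervals, these point estimates should be read as approximate.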

DISCUSSION

General population survey estimates of “direct purchase” health insurance coverage in New Jersey greatly overstate enrollment compared with statistics reported by insurance carriers to state regulators. Estimates from the CPS overstate this type of coverage the most and reflect a trend at variance with the steady enrollment decline reported by carriers to the state regulatory authority. The higher enrollment estimated from the CPS may be at least partly attributable to the annual time frame of its coverage items, which capture short-duration enrollees in greater numbers than the point-in-time questions on the NSAF and NJFHS. It is less clear what accounts for changes in CPS estimates over time.

Point-in-time enrollment estimates in the NSAF and NJFHS also overstate nongroup coverage, but by less than the CPS (this difference was statistically significant in only one of the three years examined). Notably, the trend in NSAF/NJFHS estimates, which use nearly identical survey questions, reflects a U-shaped curve that parallels the sum of IHCP and SCHIP enrollment in New Jersey. Enrollment in New Jersey's Medicaid program also grew slightly during this period. The 2001 NJFHS estimates of nongroup enrollment exceed administrative counts by approximately 210,000 persons, a number equivalent to about 29 percent of nonelderly individuals in the NJFHS reported with Medicaid or SCHIP coverage, which represents a large potential misreporting error.
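To give a sense of scale for the comparison above, the following back-of-envelope Python sketch works backward from the two rounded figures quoted in the text (an excess of approximately 210,000 persons, equal to about 29 percent of those reported with Medicaid or SCHIP). The implied size of the publicly covered group is not a figure reported in this article; it is only an approximation derived from rounded inputs, and the function name `implied_base` is a hypothetical helper.

```python
def implied_base(excess_count, share):
    """Return the population size for which `excess_count` equals `share` of the total."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return excess_count / share


# Both inputs are rounded values quoted in the text, so the result is approximate.
EXCESS_NONGROUP = 210_000  # NJFHS nongroup estimate minus administrative count
SHARE_OF_PUBLIC = 0.29     # excess as a share of reported Medicaid/SCHIP enrollees

implied_public = implied_base(EXCESS_NONGROUP, SHARE_OF_PUBLIC)

if __name__ == "__main__":
    print(f"Implied nonelderly Medicaid/SCHIP population: about {implied_public:,.0f}")
```

Under these assumptions, the implied number of nonelderly persons reported with Medicaid or SCHIP is roughly 720,000, so misreporting by a minority of public enrollees would be enough to account for the entire excess.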

Like public coverage initiatives of other states, New Jersey's programs rely on private HMOs to provide care for all SCHIP and most Medicaid enrollees. As well, New Jersey, like other states, has sought to reduce the stigma of public coverage for higher income expansion programs by making public coverage appear more like private insurance. These observations raise the possibility that SCHIP or Medicaid enrollees may report their coverage as “direct purchase” rather than public coverage, a hypothesis that is consistent with the conclusions by LoSasso and Buchmueller (2004) in their evaluation of the impact of SCHIP on coverage.

Differences in characteristics of respondents among the general population-based CPS and NJFHS and the list sample-based IHCP sample are also consistent with the idea that persons enrolled in public programs may be misclassified as having “directly purchased” insurance. The higher rate of reporting of Medicaid or SCHIP coverage along with directly purchased coverage in the NJFHS compared with the IHCP sample provides further evidence of respondent confusion. Very few, if any, IHCP enrollees would be covered simultaneously under state-sponsored programs, given the costliness of the former.

Asking direct-purchase respondents an experimental follow-up question about participation in NJ FamilyCare or Medicaid provides additional compelling evidence of misclassification of public program enrollees. While the sample size of the local survey to which this question was added is comparatively small, a very large proportion of individuals were apparently misclassified.

It is important to consider the policy and market context in New Jersey in interpreting these findings. New Jersey is one of a few states that have permitted enrollment of parents and other adults in its SCHIP program. This feature of New Jersey's SCHIP program may increase the likelihood that SCHIP will be reported as “direct purchase” coverage. However, misreporting could easily occur among Medicaid beneficiaries, which include parents and other adults in all states, and Medicaid represents the majority of state-program beneficiaries.

Limitations of the data sets used in this analysis should be taken into account in interpreting findings. First, while the response rate for the CPS is high, the other surveys employed have response rates between 50 and 60 percent, leaving the possibility of nonresponse bias. One comprehensive study that compared the CPS to the NSAF and conducted follow-up interviews with NSAF nonrespondents suggests, however, that nonresponse bias is unlikely to substantially affect our findings (Groves and Wissoker 1999). Comparisons between the NJFHS and IHCP sample, which used similar sampling and field methods and obtained similar levels of nonresponse, should be unaffected by any potential nonresponse bias. Nevertheless, we cannot definitively conclude that nonresponse bias does not contribute to a potential overstatement of nongroup coverage in household surveys. It is also impossible to rule out the influence on our findings of other differences in methods, including different field procedures, questionnaires, and weighting strategies. However, the strength of our conclusions is underscored by the consistency of our findings across diverse data sources.

In sum, our findings strongly suggest that survey methods measuring enrollment in public and nongroup health coverage are flawed. Seeking to reduce the stigma of enrolling in public programs, many states promote their SCHIP and even Medicaid programs as resembling private coverage, and the significant role of private plans in these programs makes the meaning of “direct purchase” vague.

Existing survey methods can lead to seriously biased evaluations of policy strategies directed at either public or private coverage. As noted by LoSasso and Buchmueller (2004), the sort of bias that we observed can lead to underestimates of enrollment in public programs and overstatements of private insurance crowd out. This bias may contribute to the well-documented underestimate of public program enrollment in household surveys (Call et al. 2002; SHADAC 2004). As well, studies of regulatory reforms intended to improve the accessibility and affordability of nongroup coverage that rely on currently available data are likely to be misleading.

The experimental language in our New Brunswick survey offers a promising strategy for reducing bias without altering other aspects of the coverage-question battery, minimizing unwanted question wording effects on trends in annual coverage estimates. Other strategies for reducing bias might be considered, such as examining enrollment cards during in-person interviews. The policy importance of nongroup coverage and its inherent volatility make it imperative to identify ways to improve the accuracy of estimates of enrollment in the nongroup health insurance market.

Acknowledgments

The financial support of the Commonwealth Fund and the Health Care Financing and Organization (HCFO) program of the Robert Wood Johnson Foundation is gratefully acknowledged. We also thank Johnson & Johnson for its support of the Healthier New Brunswick Community Survey that is included in the analysis reported here. We are indebted to Margaret Koller, Associate Director, Rutgers Center for State Health Policy, and Wardell Sanders, former Executive Director of the IHCP Board, for providing helpful comments on earlier versions of this manuscript.

Disclaimers: The analyses and interpretation in this article are the sole responsibility of the authors.

NOTES

Questionnaires and detailed methods information about the NSAF can be found at http://www.urban.org/center/anf/nsaf.cfm; CPS questionnaires and methods reports are available at http://www.bls.gov/cps; and NJFHS and IHCP sample questionnaires and methods reports are available from the authors.

The wording shown here is from the NJFHS. NSAF question wording varied slightly (e.g., NSAF uses “employer” whereas NJFHS uses “job”). The NSAF question wording was also changed slightly between the 1997 and 1999 versions of the survey (Urban Institute, undated).

The New Brunswick survey was conducted as part of a local health needs assessment. Two Census tracts in Somerset, New Jersey, neighboring New Brunswick, were also included in the survey, and respondents reporting that they lived in the survey catchment areas primarily to attend college or university were excluded from the sample. The New Brunswick survey questionnaire and methods reports are available from the authors.

We are grateful to an anonymous referee for suggesting this adjustment. We use results reported by Canty and Davison (1999, Tables 1 and 2) to inflate standard errors for our estimates. Details of this procedure are available from the authors upon request.

For a discussion of the causes of the decline in the IHCP see Monheit et al. (2004).

REFERENCES

- Bowler M, Ilg RE, Miller S, Robinson E, Polivka A. Revisions to the Current Population Survey Effective January 2003. Washington, DC: US Bureau of Labor Statistics; [cited 2006 May 22]. Available at http://www.bls.gov/cps/rvcps03.pdf. Undated.

- Bureau of Labor Statistics and Bureau of the Census. “CPS Annual Demographic Survey March Supplement: Questionnaire Items”. Available at http://www.bls.census.gov/cps/ads/1996/sqestair.htm.

- Call KT, Davidson G, Somers AS, Feldman R, Farseth P, Rockwood T. Uncovering the Missing Medicaid Cases and Assessing Their Bias for Estimates of the Uninsured. Inquiry. 2002;38(4):396–408. doi: 10.5034/inquiryjrnl_38.4.396.

- Canty A, Davison AC. Resampling-Based Variance Estimation for Labour Force Surveys. Statistician. 1999;48(3):379–91.

- Chollet D. Research on Individual Market Reform. In: Monheit AC, Cantor JC, editors. State Health Insurance Market Reform: Toward Inclusive and Sustainable Health Insurance Markets. London: Routledge; 2004. pp. 46–63.

- Davern M, Rodin H, Beebee TJ, Call KT. The Effect of Income Question Design in Health Surveys on Family Income, Poverty and Eligibility Estimates. Health Services Research. 2005;40(3):1534–52. doi: 10.1111/j.1475-6773.2005.00416.x.

- Fronstin P. “Sources of Health Insurance and Characteristics of the Uninsured: Analysis of the 2004 Current Population Survey.” EBRI Issue Brief No. 276. Washington, DC: Employee Benefit Research Institute.

- Groves R, Wissoker D. Methodology Report No. 7: Early Nonresponse Studies of the 1997 National Survey of America's Families. Washington, DC: Urban Institute; 1999.

- Helwig RT, Ilg RE, Mason SL. Expansion of the Current Population Survey Sample Effective July 2001. Washington, DC: US Bureau of Labor Statistics; Available at http://www.bls.gov/cps/cpsjul2001.pdf. Undated.

- LoSasso AT, Buchmueller TC. The Effect of the State Children's Health Insurance Program on Health Insurance Coverage. Journal of Health Economics. 2004;23(5):1059–82. doi: 10.1016/j.jhealeco.2004.03.006.

- McClellan M, Baicker K. Perspective: Reducing Uninsurance through the Nongroup Market: Health Insurance Credits and Purchasing Groups. Health Affairs Web Exclusive. 2002;W2:363–6. doi: 10.1377/hlthaff.w2.363.

- Monheit AC, Cantor JC, Koller M, Fox K. Community Rating and Sustainable Individual Health Insurance Markets: Trends in the New Jersey Individual Health Coverage Program. Health Affairs. 2004;23(4):167–75. doi: 10.1377/hlthaff.23.4.167.

- Nelson CT, Mills RJ. [cited 2005 October 7]. “The March CPS Health Insurance Verification Question and its Effect on Estimates of the Uninsured”. U.S. Bureau of the Census. Available at http://www.census.gov/hhes/www/hlthins/verif.html.

- Pauly MV, Nichols L. The Nongroup Insurance Market: Short on Facts, Long on Opinions and Policy Disputes. Health Affairs Web Exclusive. 2002;W2:323–44. doi: 10.1377/hlthaff.w2.325.

- Pauly MV, Percy AM. Cost and Performance: A Comparison of the Individual and Group Health Insurance Markets. Journal of Health Politics, Policy and Law. 2000;25(1):9–26. doi: 10.1215/03616878-25-1-9.

- State Health Access Data Assistance Center (SHADAC) 2004. “Do National Surveys Overestimate the Number of Uninsured? Findings from the Medicaid Undercount Experiment in Minnesota.” Issue Brief No. 9. Minneapolis, MN: University of Minnesota.

- Urban Institute. [cited 2005 October 9]. Undated. “National Survey of America's Families: Questionnaire”. Available at http://www.urban.org/content/Research/NewFederalism/NSAF/Questionnaire/Question.htm.

- Ziller EC, Coburn AF, McBride TD, Andrews C. Patterns of Individual Health Insurance Coverage, 1996–2000. Health Affairs. 2004;23(6):210–21. doi: 10.1377/hlthaff.23.6.210.