Abstract

In order for organizations to become learning organizations, they must make sense of their environment and learn from safety events. Sensemaking, as described by Weick (1995), literally means making sense of events. The ultimate goal of sensemaking is to build the understanding that can inform and direct actions to eliminate risk and hazards that are a threat to patient safety. True sensemaking in patient safety must use both retrospective and prospective approaches to learning. Sensemaking is an essential part of the design process leading to risk-informed design. Sensemaking serves as a conceptual framework to bring together well established approaches to assessment of risk and hazards: (1) at the single event level using root cause analysis (RCA), (2) at the process level using failure modes and effects analysis (FMEA), and (3) at the system level using probabilistic risk assessment (PRA). The results of these separate or combined approaches are most effective when end users in conversation-based meetings add their expertise and knowledge to the data produced by the RCA, FMEA, and/or PRA in order to make sense of the risks and hazards. Without ownership engendered by such conversations, the possibility of effective action to eliminate or minimize them is greatly reduced.

Keywords: Patient safety, medical error, risk assessment, hazard analysis, sensemaking

As noted in a 2000 patient safety report from the Department of Health in the United Kingdom, An Organization with a Memory: Report of an Expert Group on Learning from Adverse Events in the NHS (Department of Health, United Kingdom [DOH/UK] 2000), improving patient safety involves health care organizations moving along a continuum of organizational development to become learning organizations that use failure as input for learning. Organizations with higher reliability worry chronically that errors or potential failures are embedded in ongoing activities and that unexpected failure modes and limitations of foresight may amplify those errors (Weick 2002). In order for organizations to become learning organizations and to obtain and maintain high reliability status, they must continually make sense of their environment, learn from event reports, and identify risks and hazards embedded in both processes and systems at both microlevels and macrolevels.

Sensemaking is always based on some set of existing data. The most fundamental level of data about patient safety is in the lived experience of staff, as they struggle to function within an imperfect system. Even given the limitations of the human mind (e.g., the tendency to give ascendancy to the most recent events, selective memory, limits of working memory, etc.) human beings manage to make sense of their world and their actions within it. For example, when an event occurs each individual, through the mental work of sensemaking, constructs an explanation of what happened, the reasons it happened, and what they can or should do about what happened. Sensemaking serves to reduce the ambiguity caused by encountering the unexpected event or near miss to a level that allows the individual to continue to carry out his or her daily tasks within a highly complex system.

There are analytical tools that allow staff working in patient safety to overcome some of the limitations of the individual human mind so that sensemaking about events can take into account larger datasets (e.g., case-based reasoning), hold more elements in mind concurrently (causal trees that graphically display events and causes), or aggregate data from multiple sources (findings and recommendations based on a root cause analysis [RCA]). These tools aid those who are attempting to make sense of events by providing an organizing framework for supporting data. Such frameworks assist the sensemaker but in some ways also delimit the sense that can be made of the data because the choice of the structure itself prefigures what is included and excluded from the dataset as well as determining the comparisons and associations that are possible. Thus, the choice of analytical tool(s) is of considerable significance.

What these analytical tools cannot do is make sense of the data. Sensemaking, which is the active process of assigning meaning to ambiguous data, can only occur through human reflection. That reflection is most productive when those whose data are presented through the organized framework participate in it jointly. Joint reflection serves two ends: (1) it develops a more accurate picture of the data and of the system in which the data are embedded and (2) it allows those who can act on the meaning constructed to more fully comprehend the outcomes they intend to enact.

It is the combination of these two processes—(1) tools that enhance the human ability to organize patient safety data and (2) deliberate reflection—that makes it possible for organizations to use events as learning opportunities. Absent from the patient safety discussion has been how these methods can be used separately or in combination to make sense of these risks and hazards, crafting interventions to minimize or eliminate them. The authors have found over the past 10 years trying to deal with patient safety events and associated risks and hazards that the concept of sensemaking is an extremely powerful organizing framework in which to examine threats to patient safety and to design effective interventions.

SENSEMAKING AS A CONCEPTUAL FRAMEWORK

Sensemaking, as described by Weick (1995), literally means making sense of what is happening. As Dixon (2003) notes, it is the very human ability to retrospectively find patterns in the continual flow of events that individuals experience daily and hourly in order to give those events meaning. The patterns they construct are strongly influenced by their knowledge base and their past experience. People do not make sense of events only once, but rather engage in a continual revision of their understanding based on subsequent events (historical revision) and based on the interpretation of others (social influence). Thus, to “make sense” is not to find the “right” or “correct” answer, but to find a pattern, albeit temporary, that gives meaning to the individual or group doing the reflection and that makes what has occurred sensible (Dixon 2003). Taylor and Van Every (2000) point out that sensemaking is a way station on the road to a consensually constructed, coordinated system of action.

Sensemaking is a conversation among members of an organization about a particular issue or event.

The subject of a sensemaking conversation is something that has occurred within the organization that was unexpected, novel, or ambiguous.

The purpose of the sensemaking conversation is to reduce the ambiguity about the issue—literally to “make sense” out of it.

What makes it possible to create “sense” is that each person in the conversation brings their own unique knowledge about the issue drawn from their experience.

Conversation is the mechanism that combines that knowledge into new, more understandable form for the members.

Although any sense that is made can necessarily only reside in each individual's mind, through conversation, members are able to create a similar representation in their minds.

This shared representation allows for the development of potential action that can be implemented and understood by those who have participated in the conversation (Dixon 2003).

Many patient safety problem-solving meetings take as their goal the gathering of accurate information about the event or series of events from staff. In such meetings attendees are primarily framed as informants leaving it to QA or patient safety staff to then make sense of what they have learned from various sources. Sensemaking differs from such meetings in that staff are not only considered sources of information, but their capability of making sense of the data is equally sought and valued. Moreover, sensemaking is based on the principle that unless the staff involved in the event(s) are able to develop a similar representation, they will be unlikely to collaboratively work toward the identified solution (Dixon 2003).

When an unexpected event occurs, it must first be noticed, then those involved have to make sense of it, and finally they have to do something about it (Weick 2002). A health care organization engaged in sensemaking over time creates a culture of curiosity. In the course of their daily work, health care workers see incidents that they recognize as hazardous or as puzzling. But over time and through constant exposure, they quit noticing many of them—the hazard becomes “just the way we do things around here,” fading out of conscious awareness. The human mind has only a limited amount of attention capability. The tendency is to direct attention to things that individuals can impact and to lose from conscious awareness what cannot be affected.

Sensemaking conversations improve the ability to see more in two important ways. First, members who engage in a sensemaking conversation gain a sense of empowerment—the belief that they can impact the system (Dixon 2003). Weick (1993) notes that, “Small improvements in seeing can occur when individuals enlarge their personal repertoires of what they can do. But larger improvements in seeing should occur when people with more diverse skills, experience, and perspectives think together in a context of respectful interaction.”

An important first step in patient safety and for sensemaking is having a “just culture” (Marx 2001) that encourages the reporting of events and near misses. A second step is to use event reporting to make sense of events or near misses that have occurred. Encouraging event reporting within a just culture necessitates building a culture in which members are curious about risks and/or hazards (Dixon 2003). This curiosity extends not only to events or incidents that have occurred in the past (retrospective) but also to examining risk and hazards that have the potential for causing failures in the future (prospective). A third step is to turn the sense of what was made into an intervention—into action that eliminates or minimizes the impact of the risk and hazards within a particular process or practice or an entire system either at the microlevel or macrolevel.

Another way in which sensemaking improves the ability to see more is through the skillful questions of a facilitator, which help those in the sensemaking conversation to uncover hazards and confusions of which no one had been aware. Once raised to awareness by the facilitator's questions, it is possible to watch out for a hazard as well as to develop interventions to correct it (Dixon 2003).

Weick, in describing a high-performing crew on an aircraft carrier deck, says, “when flight deck crews interrelate their separate activities, they did so heedfully, taking special care to enact their actions as contributions to a system rather than as simply a task in their autonomous individual jobs. Their heedful interrelating was also reflected in the care they directed toward accurate representation of other players and their contributions. And heedful interrelating was evident in the care they directed toward subordinating their idiosyncratic intentions to the effective functioning of the system” (Weick 1993).

Health care systems engaged in sensemaking conversations make greater use of rich forms of communication. As Dixon (2003) notes, in the interest of efficiency there is an increasing tendency to use electronic forms of communication, e.g., e-mail, teleconferences, for much of the communication within health care. In many situations this time saver serves health care organizations well. However, situations for which a sensemaking conversation is requisite are by definition ambiguous, confusing, and subject to multiple interpretations. Olson and Olson have demonstrated that the greater the complexity of the subject, the richer the communication medium that needs to be employed. The richest form of communication is face-to-face conversation. It provides more clues in terms of tone of voice, facial expression, body language, etc., all of which assist the person speaking to make quick adjustments in their message in order to head off misunderstandings and disagreements. It also provides those listening a greater ability to immediately clarify or add perspective before the topic moves on. When, in the interest of efficiency, less rich forms of communication are used to communicate about ambiguous topics, the end result is countless hours of attempting to rectify misunderstood motives, misinformation, anger, and circumventions. For these reasons, sensemaking conversations need to be held face-to-face, as do invitations to the conversations, and communication about the results (Dixon 2003).

TOOLS FOR SENSEMAKING—RETROSPECTIVE

Sensemaking conversations are based on data that are produced through the tools for:

detection and information gathering of patient safety data to identify risks and hazards,

analyzing the data to gain understanding of the contributing factors associated with the risks and hazards,

modeling risk through proactive risk assessment.

Risks and hazards to patient safety are identified at the initial detection phase. Both risks and hazards reveal themselves nested within an event. In the case of patient safety, the negative consequences are health care-associated injuries/harm or the potential to cause such injuries/harm. Adverse/harm events are those where actual harm and/or injury has taken place, the harm representing some degree of severity from death to minor injury. There are, of course, events that involve human failure and an interaction with latent conditions where the outcome does not result in actual harm to the patient. These no harm events represent potential rather than actual harm with warning levels of potential severity as well. The near miss event, on the other hand, does not manifest itself in actual harm to a patient, because there was intervention and recovery. Again there is potential for harm with a similar level of potential severity (Battles and Shea 2001).

There are different types of surveillance and reporting systems that can and should be used in health care to detect and gather information about risk and hazards. They include spontaneous active event reporting systems, use of administrative data from discharge to determine indicators of harm or patient safety concerns, and triggers from medical record systems, both paper and electronic. Battles and Lilford (2003) point out that there is no single method that can be universally applied to identifying risk and hazards. Rather we must look to multiple approaches to the identification process. One should apply a principle from maritime navigation which states that you can never have a true sense of where you are without a three point fix of your position.

It is only when all of the different types of patient safety data are analyzed and sense is made of them that they can be used to make changes in the process and structure of care. Just collecting data for data's sake will only create larger and larger data graveyards with no practical use. The Institute of Medicine (IOM) report, Patient Safety: Achieving a New Standard of Care (Aspden et al. 2004) stresses the importance of adverse and near miss event analysis.

Root Cause Analysis (RCA) for Sensemaking

An important part of the analysis process has historically been for professionals to conduct an RCA of selected events to determine not only what happened but also why they happened. Extensively used in a variety of industries for decades, RCA provides a retrospective analysis of the factors that lay behind the consequent event. RCA cannot be used with archival records with any degree of accuracy (Battles and Lilford 2003). Some criticize the use of RCAs because they are uncontrolled case studies, and it is often impossible to show a statistical correlation between cause and outcome (Wald and Shojania 2001). However, the fact that an RCA is a case study is in fact its power. Nonetheless this criticism has led to shifting the focus of an RCA from the identification of causes of an event to the identification of contributing factors. These are both methods of conducting and displaying a causal analysis in a graphical display.

Visualizing for Sensemaking

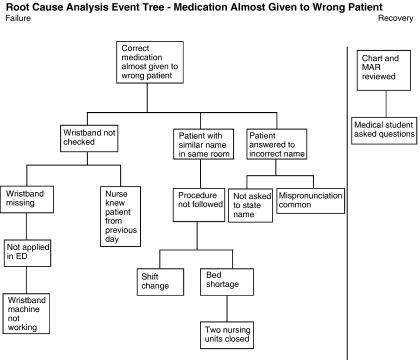

One of the most powerful forms of displaying a causal analysis is through the use of a causal or risk tree. The advantage of the causal tree approach is its ability to deal with multiple contributing factors rather than a single primary or root cause. Originally developed in the nuclear power industry, causal trees, which are also known as fault or risk trees, have now become a standard in safety science (Gano 1999). Causal trees, along with associated classification approaches, are gaining acceptance and use in health care (Battles et al. 1998; Kaplan et al. 1998; Aspden et al. 2004). These approaches have now become recommended standards for patient safety as put forward by Patient Safety: Achieving a New Standard of Care (Aspden et al. 2004). The crucial aspect of the fault tree is the ability to visualize and thus make sense of an event that has occurred or almost occurred. The tree becomes a common framework for conducting a sensemaking session or conversation about a single event. In a sensemaking conversation that is based on a tree, the tree, which is displayed in a large format, becomes the focus of the conversation, taking the emphasis off of individual error and placing it more broadly in the context of multiple factors. As the group that gathers to make sense grows its understanding and expands its knowledge of the multiple causes, its members physically alter the tree, adding to and correcting it. Figure 1 is an example of an RCA visualized with a tree. The top or consequent event appears at the top of the tree with the antecedent events appearing below.

Figure 1.

An example of a causal tree

One needs to know where in the structure and process of care the actual or potential risk and hazards are located. Complicating matters is the fact that patient care occurs at both a microlevel and a macrolevel. Thus, there are both clinical microsystems that have elements of structure, process, and behaviors and the larger macrosystem of care that links together individual clinical microsystems. These microsystems are of course contained within the larger macrosystems. The search for these embedded risks and hazards looks both within the clinical microsystem in question and within the related microsystems and macrosystems both upstream and downstream of it.

Data Mining

While the RCA is a tool that is applicable to single events, sensemaking can be carried out through a review of multiple events that have occurred in the past. Sensemaking allows the users of such event databases to discover trends, identify patterns of organizational behavior, and predict future failures and process or system vulnerabilities. Databases containing events collected from across many different organizations or organizational elements are most valuable for data mining. To achieve these objectives, the users of such databases should be able to point to a specific event or report and then query the system for other events that are similar to it. Tools are needed to facilitate answering the question: have we ever seen this or similar events in our organization, and if so how many? If not in our database, has anyone in a regional or national database seen similar events? The analytical engine needs to be a user defined system that can be applied in an event by event responsive and interactive manner. Standard database retrieval cannot offer the necessary measure of similarity; objects in a traditional database are accessed by exactly matching field values, which cannot meet the needs of potential users. While it is of some value to identify events that have identical descriptions, it is more probable that these events will only be similar, sharing some common features but differing in others. In addition, even features that are different in two events may share common characteristics. What users want to look for in the database are clusters of similar events, that is, reports that share some important common characteristics. Similarity requires both a syntactic and a semantic matching of the features describing an event (Tsatsoulis and Amthauer 2003).
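The contrast between exact-match retrieval and similarity-based retrieval can be illustrated with a minimal sketch. It is not the method of Tsatsoulis and Amthauer (2003); the `EventReport` structure, the `CONCEPTS` synonym map (a crude stand-in for semantic matching), the Jaccard overlap measure, and the 0.5 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventReport:
    report_id: str
    features: frozenset  # coded descriptors, e.g. {"wrong patient", "shift change"}


# Hypothetical synonym map standing in for semantic matching:
# different surface terms are normalised to one shared concept.
CONCEPTS = {
    "look-alike name": "patient misidentification",
    "wrong patient": "patient misidentification",
    "handoff": "shift change",
}


def normalise(features):
    """Map each feature to its shared concept, if one is defined."""
    return frozenset(CONCEPTS.get(f, f) for f in features)


def similarity(a, b):
    """Jaccard overlap of normalised feature sets, from 0.0 to 1.0."""
    fa, fb = normalise(a.features), normalise(b.features)
    union = fa | fb
    return len(fa & fb) / len(union) if union else 0.0


def similar_events(query, database, threshold=0.5):
    """Return (score, report) pairs at or above the threshold, best first."""
    scored = [
        (similarity(query, report), report)
        for report in database
        if report.report_id != query.report_id
    ]
    matches = [(score, report) for score, report in scored if score >= threshold]
    return sorted(matches, key=lambda pair: pair[0], reverse=True)
```

In this sketch, a report coded with "look-alike name" and "handoff" would be retrieved for a query coded with "wrong patient" and "shift change", even though no field values match exactly.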

Ideally, the ability to record critical data from the events and also to have the RCAs available can greatly facilitate sensemaking. The ability of a database to reconstruct an RCA on demand and to have these graphic displays available for comparison is an extremely powerful way of facilitating sensemaking. It is much easier to spot common patterns through graphic representation than from data displayed in tabular or even in graph form.

Both RCA and data mining are methods ripe for making sense out of single or multiple events that have occurred or have almost occurred in the case of near misses. By necessity they are retrospective in nature.

TOOLS FOR SENSEMAKING—PROSPECTIVE

Traditionally, quality and safety assessments have been used to identify safety and quality problems retrospectively (Battles and Lilford 2003). Solely relying on retrospective approaches to identifying risks and hazards to the delivery of health care, however, is like driving using only the rear view mirror. There is growing awareness that more proactive or prospective analysis methods that have been used for years in other high hazard industries such as nuclear power and aerospace should be a necessary addition for improving quality and safety in health care. The Joint Commission on Accreditation of Healthcare Organizations (JCAHO), which accredits U.S. hospitals and other health care organizations, now requires that accredited institutions incorporate the use of prospective risk analysis methods as a part of organizational patient safety plans and procedures (Joint Commission on Accreditation of Healthcare Organizations [JCAHO] 2003). The IOM in its recent report Patient Safety: Achieving a New Standard of Care recommends integrating retrospective techniques (e.g., incident analysis) with prospective ones (Aspden et al. 2004).

Failure Mode and Effect Analysis (FMEA)

FMEA is a systematic method for identifying failures and estimating their relative probabilities. FMEA emerged as a methodology for ensuring that potential failure modes are analyzed and their effects understood. FMEA is a process for identifying the failure effects associated with individual failures within a process. The design philosophy embodied in FMEA is that individual failures cannot be allowed to result in an adverse outcome. FMEA uses a table format to identify system components, to identify the ways that different elements in a system can fail, and to estimate how the failures might affect the process. Often a process map accompanies the tabular information to provide a graphical representation of how the failure points relate to the total process. Recent studies (Cohen, Senders, and Davis 1994; Feldman and Douglas 1997; DeRosier et al. 2002) have described the use of FMEA in health care applications. FMEAs provide approximate failure probabilities and their consequences, if only on a relative scale (for example, events are frequent but harm is very unlikely). Even though there are many types of FMEAs, the analyses tend to focus on hardware and software failures within a process. There is growing interest in examining human failure, particularly in health care. The strength of FMEA is that it provides insight into a single process or system component and its potential failure points.
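The tabular format can be sketched in a few lines of code. The following is a minimal illustration, not a prescribed FMEA procedure: the `FailureMode` fields, the 1 to 5 relative scales, every rating shown, and the ranking rule (the product of frequency and severity) are assumptions introduced here. Only the two wristband failure categories are taken from the case example later in the article.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    process_step: str
    failure_mode: str
    effect: str
    frequency: int  # relative scale, 1 (rare) to 5 (frequent); illustrative
    severity: int   # relative scale, 1 (negligible) to 5 (severe); illustrative

    @property
    def priority(self):
        # Assumed ranking rule: product of the two relative ratings.
        return self.frequency * self.severity


def rank(rows):
    """Order failure modes from highest to lowest priority score."""
    return sorted(rows, key=lambda row: row.priority, reverse=True)


# Hypothetical ratings; only the two failure categories come from the case.
table = [
    FailureMode("Wristbanding", "Incorrect name on wristband",
                "Wrong patient confirmed", frequency=1, severity=5),
    FailureMode("Wristbanding", "Missing wristband",
                "Identity cannot be checked", frequency=3, severity=4),
]

for row in rank(table):
    print(f"{row.priority:>2}  {row.failure_mode}: {row.effect}")
```

A ranking of this kind is only a starting point for the conversation; the ratings themselves are exactly what participants would be expected to question and revise.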

Sensemaking conversations, related to FMEAs, often revolve around a process-of-care-map displayed in a large format. The large format provides the opportunity for each person engaged in the conversation to be looking at the same display rather than each looking at a page in his or her own hand. A large and easily alterable (certainly not PowerPoint) display becomes the property of the group, losing its association with the individual or team who originated it. As participants in the conversation pick up the marker to add a box, change an arrow, or alter a number, the map changes to reflect their growing understanding of the risk and hazards—their ownership of the results also grows concomitantly.

Probabilistic Risk Assessment (PRA)

Another approach that builds upon both process mapping and FMEA is PRA (Spitzer, Schmocker, and Dang 2004). Designers of nuclear power plants, aircraft, and spacecraft have been using this technique for decades (Wreathall and Nemeth 2004). PRA is used systematically to identify and review all of the factors that can contribute to an event, including equipment failure, human erroneous actions, departments or units involved, and associated interactions. It is performed to understand the causes that contribute to a class of undesirable outcomes and to determine how to reduce or eliminate those causes or improve the barriers to them. PRA provides a basis for resource allocation decisions and evaluation of performance goals in terms of safety related criteria (Marx and Slonim 2003).

Some have described PRA as FMEA on steroids. PRA is relatively complex in the application of sophisticated mathematical models to calculate the contributions of risks and hazards to the overall risk of system failure. It seems best applied in larger systems failures or threat modes. The approach is frequently used in technology driven industries such as chemical manufacturing, offshore drilling and production facilities, and aviation. However, many applications of PRA have targeted mechanical systems rather than work processes that have a high degree of human interaction such as exists in health care. Sociotechnical PRA (ST-PRA) combines the best of rigorous and well-tested engineering methodologies with the science of human factors to provide a new methodology for modeling human systems (Battles and Kanki 2004).

“The experience base of physicians, nurses, and technicians are often the best source of data on the likelihood of certain types of risks and hazards—placing them in the role of informants. The resulting numbers and formulas can be overwhelming in their amount and complexity, which only strengthens the need for sensemaking among those who have provided the information. Holding a meeting in which the PRA report is presented by the statistician, but made sense of by the medical personnel is critical. Sensemaking implies not only that the medical personnel ask questions, but that they share with each other their interpretations and insights based on the numbers.”

While applications of PRA and prospective risk and hazard analysis are newly emerging in health care, AHRQ has been funding prospective risk assessment projects to help inform interventions for system improvement (Battles and Kanki 2004).

PROSPECTIVE AND RETROSPECTIVE INTERACTION THROUGH RISK MODELING

A number of high risk industries have begun to combine both retrospective and prospective forms of analysis through risk modeling. Nuclear power and NASA have been promoting the use of this combined approach. The prospective analysis using PRA is used to develop a system-level model of potential failures. This model then is compared with ongoing event reporting using reports of risk and hazards for actual harm events as well as near misses. The individual or clusters of events are then compared with the overall risk model developed prospectively. This combination of retrospective and prospective sensemaking allows individuals to be alert for potential failures and to reexamine assumptions that do not fit the risk model.

PRA and ST-PRA use the fundamental building block of the causal tree to establish the model of risks and hazards. The tree can become extremely large but provides a valuable graphical display of the relations of various risk points linking system processes to potential failure points. The embedded mathematical formulas calculate the relative risk at each point in the tree to determine the contribution of each point to overall system risk. This combination of visual representation and mathematical calculation brings to light system vulnerabilities, supporting sensemaking at the system level.
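The calculation embedded in such a tree can be sketched as follows. This is a simplified illustration under stated assumptions, not the ST-PRA method itself: it assumes that basic events are statistically independent, that the tree uses only AND and OR gates, and that "contribution" is measured as the drop in top-event probability when one basic event is eliminated. The `Node` class and both function names are hypothetical.

```python
from dataclasses import dataclass, field
from math import prod


@dataclass
class Node:
    name: str
    gate: str = "BASIC"        # "BASIC", "AND", or "OR"
    probability: float = 0.0   # used only for BASIC nodes
    children: list = field(default_factory=list)


def evaluate(node):
    """Probability of the node's event, assuming independent basic events."""
    if node.gate == "BASIC":
        return node.probability
    child_probs = [evaluate(child) for child in node.children]
    if node.gate == "AND":
        # All child events must occur.
        return prod(child_probs)
    if node.gate == "OR":
        # At least one child event occurs.
        return 1.0 - prod(1.0 - p for p in child_probs)
    raise ValueError(f"unknown gate: {node.gate}")


def contribution(top, basic):
    """Drop in top-event probability if one basic event were eliminated."""
    baseline = evaluate(top)
    saved, basic.probability = basic.probability, 0.0
    try:
        return baseline - evaluate(top)
    finally:
        basic.probability = saved  # restore the original estimate
```

Comparing the contribution of each basic event gives a group a concrete, if rough, ordering of vulnerabilities to discuss. The independence assumption rarely holds fully in clinical work, which is one more reason the numbers need to be interpreted in conversation.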

Given the extensive mathematical formulas and calculations involved in PRA, this methodology might seem less conducive to a sensemaking conversation. However, numbers and mathematical formulas never contain meaning in and of themselves; it is only through in-depth conversation about the numbers that meaning is created from the extensive trees. Because PRA represents the system level, it often requires not one but many conversations across the organization for sense to be made.

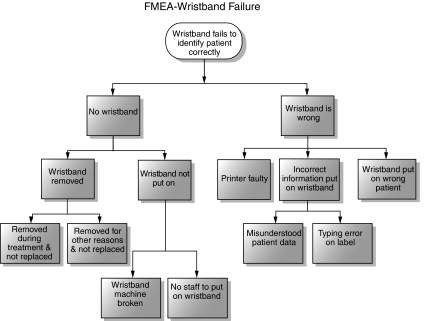

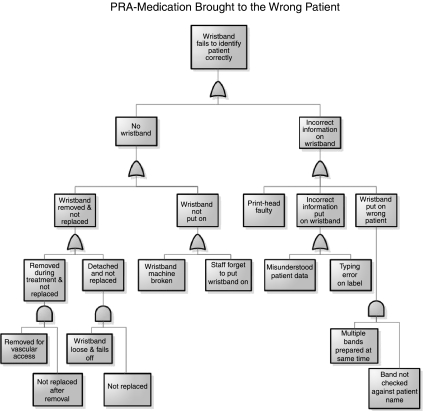

A CASE EXAMPLE

In order to help illustrate how the different sensemaking tools of RCA, FMEA, and PRA might be used in a real world setting, we have created a fictional case and event that is based on actual cases. Box 1 outlines a near miss event in which a patient almost received the wrong medication. Box 2 describes the RCA that was performed and Figure 1 illustrates the resulting causal tree from the RCA. Following the completion of the RCA, an FMEA was conducted as outlined in Box 3 and illustrated in Figure 2. Finally, a PRA was conducted using an outside consultant as described in Box 4 and illustrated in Figure 3.

A Near Miss Event–Wrong Medication Almost Administered

Sunday Morning Riverbend Memorial Hospital

John A. Smith presented in the ED at 4:00 a.m., and was admitted and brought to 5 North General Medicine Unit at 6:30 a.m. He is a 60-year-old man needing treatment for alcohol withdrawal. He was placed in the same room as William Smyth, a 59-year-old man being treated for septicemia. There is a hospital policy that prohibits patients with the same or similar last names from being placed in the same room, but there is currently a bed shortage. In addition, it was a Sunday, and he was brought to the fifth floor during the nursing shift change. The name similarity went unnoticed.

What happened?

Nurse X starts the shift by administering scheduled medications to patients on the unit. Mr. Smith is scheduled to receive a dose of IV haloperidol at 7:00 a.m. Nurse X retrieves the prefilled syringe from Mr. Smith's medication drawer, and enters the room. Nurse X approaches Mr. Smyth, whom she knew from caring for him the previous day. She says “Good morning, Mr. Smith!” and he responds cheerfully, being accustomed to people mispronouncing “Smyth.” A medical student happens to be in the room, reviewing charts and preparing for morning rounds. As Nurse X is about to administer the medication, the medical student speaks up and begins to ask questions. They both review Mr. Smyth's chart and MAR together, and notice what almost happened. The correct medication is given to the correct patient and no harm occurs. Nurse X decides to complete an event report.

An RCA of Wrong Medication Almost Administered

The event report is reviewed by both the unit manager and the patient safety officer, and they decide that the probability of this type of event recurring within the next year is remote, but that if it did recur, there would be a high probability of it causing severe harm to a patient. The patient safety officer decides that though no one was harmed, the potential for harm was high, and that a root cause analysis should be performed on this case. A sensemaking session is conducted with all parties on the unit and a root cause analysis is conducted. Once the consequent event and initial antecedents are recorded, a tree is developed by placing all antecedents in logical order underneath the appropriate antecedent. Each additional antecedent is placed just to the right of the antecedent preceding it. Figure 1 is the completed tree.

FMEA of Wristband Failure

Following completion of the RCA, the patient safety officer realizes that the analysis has uncovered barriers against adverse events that are not as effective as they had been assumed to be. For example, the use of wristbands to prevent drugs being given to the wrong patient is not perfect because circumstances can occur in which patients do not have wristbands. The patient safety officer decides that performing an FMEA on the wristbanding process would be appropriate. Before the first meeting of the FMEA team, the patient safety officer reviews the results of the RCA to identify how the wristband should have played a role in preventing the close call, and uses this to brief the team on why the issue is important to the hospital. The FMEA team agrees that wristband failures appear to fall into two general categories: missing wristband and incorrect name on wristband. For each of the two categories, each member of the team draws on their own experience and imagination to list the ways in which a wristband can be missing and the ways in which the wrong information can appear on the wristband. The resulting failure modes analysis is shown in Figure 2.

Figure 2.

An example of a failure modes effects analysis
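The simple relative ranking that an FMEA produces can be sketched as follows. This is an illustration under stated assumptions, not the team's actual worksheet: it uses the conventional risk priority number (severity × occurrence × detection), which the case description does not specify; every score is a placeholder; and the failure mode listed under "Incorrect name on wristband" is hypothetical. The three "Missing wristband" modes are taken from the case text and Table 1.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    category: str      # one of the two categories agreed by the FMEA team
    description: str
    severity: int      # 1 (negligible) to 10 (catastrophic); placeholder score
    occurrence: int    # 1 (remote) to 10 (frequent); placeholder score
    detection: int     # 1 (almost always caught) to 10 (rarely caught); placeholder

    @property
    def rpn(self):
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


modes = [
    FailureMode("Missing wristband", "Removed for treatment and not replaced", 8, 4, 5),
    FailureMode("Missing wristband", "Came loose and not replaced", 8, 3, 6),
    FailureMode("Missing wristband", "Never made (wristband machine broken)", 8, 2, 3),
    # Hypothetical example of the second category; not taken from the case.
    FailureMode("Incorrect name on wristband", "Wrong patient's label applied", 9, 2, 7),
]

# Highest risk priority number first.
ranked = sorted(modes, key=lambda m: m.rpn, reverse=True)
for m in ranked:
    print(f"{m.rpn:4d}  {m.category}: {m.description}")
```

The ranking is only as good as the scores behind it, which is why the scores would normally be agreed in conversation by the people who work with the process.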

Probabilistic Risk Assessment (PRA)—Medication Brought to Wrong Patient

The patient safety officer decides to conduct a more system-wide investigation of the overall failure using probabilistic risk assessment (PRA). In performing a PRA, the outcome frequencies are estimated from the probabilities of the “yes” and “no” branches of an event tree, together with the likelihood of the initiating event (“Medication brought to wrong patient”). These probabilities can sometimes be estimated directly from the experience of the people involved and data from records. In other cases, where data exist for contributing causes of a branch, a fault tree is created, which provides a way to estimate the probability of the top event. In this example, we will say that the probabilities of the second (“Nurse asks patient to say name?”) and third (“Medical records checked?”) branches can be estimated from observations in the unit plus discussions with the staff. However, the probability of the first branch must be estimated by creating a fault tree. Figure 3 is the fault tree for the first branch of the event tree, “Identity checked by wristband?”
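The arithmetic behind the event tree can be sketched in a few lines. This is a minimal illustration under stated assumptions: all numbers are placeholders rather than data from the case, the three checks are treated as independent, and a "yes" at any check is assumed to catch the error (in practice a check can be performed and still fail, as the Smith/Smyth greeting shows). The function name `event_tree` is hypothetical.

```python
# All numbers are placeholders for illustration, not data from the hospital.
initiating_per_year = 50.0   # times per year a medication is brought to the wrong patient

# Probability of the "yes" branch at each check, in event-tree order.
checks = [
    ("Identity checked by wristband?", 0.90),
    ("Nurse asks patient to say name?", 0.70),
    ("Medical records checked?", 0.50),
]


def event_tree(initiating_frequency, checks):
    """Walk the tree left to right. Simplifying assumption: a "yes" at any
    check catches the error, and a "no" passes it on to the next check."""
    outcomes = []
    reaching = initiating_frequency          # frequency arriving at this check
    for question, p_yes in checks:
        outcomes.append((f"Caught at: {question}", reaching * p_yes))
        reaching *= (1.0 - p_yes)            # follow the "no" branch
    outcomes.append(("Medication given to wrong patient", reaching))
    return outcomes


for label, freq in event_tree(initiating_per_year, checks):
    print(f"{freq:8.3f} per year  {label}")
```

The outcome frequencies always sum to the frequency of the initiating event, which is a useful check on any event tree built this way.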

As can be seen from the structure of the tree, it is similar to the FMEA for the wristband. However, in some areas, events have been further subdivided so that when the quantification steps are made, the relationship between different data can be defined. For example, the “wristband removed for treatment and not replaced” event has been expanded to identify the likelihood that the band is removed for vascular treatment (to get access for the lines), and separately the likelihood that the band is not replaced. This is because there should be data on how often patients have their wristbands removed for treatment (from experience) and how often the wristbands are not replaced in such situations. The same applies to the wristband coming loose and not being replaced, for example, where the likelihood of replacement is lower than when the band has been removed deliberately for treatment.
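The reason for subdividing events becomes clearer when the gates are quantified. The sketch below covers only the "wristband missing" portion of the fault tree described above; the probabilities are placeholders, the basic events are assumed to be independent, and the helper names `and_gate` and `or_gate` are hypothetical.

```python
from math import prod


def and_gate(*probabilities):
    """All inputs must occur; assumes the inputs are independent."""
    return prod(probabilities)


def or_gate(*probabilities):
    """At least one input occurs; assumes the inputs are independent."""
    return 1.0 - prod(1.0 - p for p in probabilities)


# Placeholder basic-event probabilities per patient stay (illustrative only).
p_removed_for_treatment = 0.10
p_not_replaced_after_removal = 0.20
p_comes_loose = 0.05
# Replacement is less likely after accidental loss than after deliberate
# removal, so the "not replaced" probability is set higher here.
p_not_replaced_after_loss = 0.40

p_missing_after_removal = and_gate(p_removed_for_treatment, p_not_replaced_after_removal)
p_missing_after_loss = and_gate(p_comes_loose, p_not_replaced_after_loss)
p_wristband_missing = or_gate(p_missing_after_removal, p_missing_after_loss)

print(f"P(wristband missing) = {p_wristband_missing:.4f}")
```

Splitting "removed and not replaced" into two basic events lets each be estimated from the kind of data that is likely to exist for it, and the gate then combines them.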

Following the completion of the PRA, a complete sensemaking briefing and discussion is conducted for the hospital leadership.

Figure 3.

An example of a probabilistic risk assessment fault tree

Table 1 lists the properties of each of the three sensemaking tools: the prospective analysis tools, FMEA and PRA, as well as the retrospective tool, RCA.

Table 1.

A Comparison of FMEA, PRA, and RCA

| Property | Failure Modes and Effects Analysis (FMEA) | Case | Probabilistic Risk Assessment (PRA) | Case | Root Cause Analysis (RCA) | Case |
|---|---|---|---|---|---|---|
| Structured approach | Considers a single failure and its consequences, one at a time, under normal operating conditions | Issue: Patient identification. Process: Patient wristbanding. Possible failures: No wristband OR incorrect wristband (each with multiple possible reasons). Consequence: Patient misidentified | Considers combinations of failures by modeling the system in fault trees in a range of conditions (context) | Patient receiving correct medication. Possible failures: Wristband not checked; nurse doesn't ask patient's name; medical record not checked. Consequences: Multiple | Identifies the immediate causes of an event and comprehensively explores how they, in turn, were caused. The context is fixed* | Issue: Patient almost receives a medication intended for another. Why? Actual failures: Misidentified. Why? No wristband. Why? WB not made. Why? WB machine broken. Why? And why? Actual failures: Patient with same name. Why? Bed shortage. Etc. And why? Actual failures: Patient not asked to give full name, but responds to question "Mr. ___?" Etc. |
| Proactive | What can go wrong and the consequences | | How things go wrong. How likely to occur. | | | |
| Reactive | | | | | What went wrong | |
| Quantitation of events | Simple relative ranking | | Explicitly models event probabilities and frequencies. Likelihood based on event data, interviews, audit, observation | | May be useful in informing the PRA but not typically strong quantitative data, except in aggregate | |

* Proactive if the analysis is of near-miss and no-harm events.

OWNING THE RISK

Patient safety sensemaking is a conceptual framework and process that allows both individuals working within the clinical process and organizational managers and leaders to come to grips with patient safety issues and concerns. The sensemaking conversations help the various interest groups take ownership of the risk and prioritize actions to eliminate the identified risks and hazards. Owning the risk can lead to what is becoming known in nuclear power as risk informed design. Once those involved have made sense of the risk revealed in an event through an RCA, in a process through an FMEA, or in a system through a PRA, they can make informed decisions about how to design the interventions that will have the greatest impact for improvement.

CONCLUSION

Patient safety sensemaking is a conceptual framework that allows everyone in the system to tie together separate processes to build greater understanding of the risks and hazards and, out of the conversations, to develop ownership that empowers them to design out system failures and design in quality improvement. The ultimate goal of sensemaking is to understand and to eliminate risks and hazards that are a threat to patient safety. True sensemaking must involve both retrospective and prospective approaches to learning as an essential part of the process leading to risk informed design. The results of these separate or combined approaches are most effective when end users, in conversation-based meetings, add their expertise and knowledge to the RCA, FMEA, or PRA data in order to make sense of the risks and hazards. Without a sense of ownership engendered by such conversations, the possibility of effective action to eliminate or minimize those risks and hazards is greatly reduced.

Acknowledgments

The opinions and assertions contained herein are the private views of the authors and are not to be construed as official or as reflecting the views of the Agency for Healthcare Research and Quality or the United States Department of Health and Human Services.

REFERENCES

- Aspden P, Corrigan JM, Wolcott J, Erickson SM, editors. Patient Safety: Achieving a New Standard for Care. Washington, DC: The National Academies Press; 2004.

- Battles JB, Kanki BG. The Use of Socio-Technical Probabilistic Risk Assessment at AHRQ and NASA. In: Spitzer C, Schmocker U, Dang VN, editors. Probabilistic Safety Assessment and Management 2004. Vol. 4. Berlin: Springer; 2004. pp. 2212–7.

- Battles JB, Kaplan HS, Van der Schaaf TW, Shea CE. The Attributes of Medical Event-Reporting Systems: Experience with a Prototype Medical Event-Reporting System for Transfusion Medicine. Archives of Pathology and Laboratory Medicine. 1998;122(3):231–8.

- Battles JB, Lilford RJ. Organizing Patient Safety Research to Identify Risks and Hazards. Quality and Safety in Health Care. 2003;12(Suppl II):ii2–7. doi: 10.1136/qhc.12.suppl_2.ii2.

- Battles JB, Shea CE. A System of Analyzing Medical Errors to Improve GME Curricula and Programs. Academic Medicine. 2001;76(2):125–33. doi: 10.1097/00001888-200102000-00008.

- Cohen MR, Senders J, Davis NM. Failure Modes and Effects Analysis: A Novel Approach to Avoiding Dangerous Medication Errors and Accidents. Hospital Pharmacy. 1994;29:319–24.

- Department of Health, United Kingdom (DOH/UK) An Organization with a Memory: A Report of an Expert Group on Learning from Adverse Events in the NHS. London: National Health Service; 2000.

- DeRosier J, Stalhandske E, Bagian JP, Nudell T. Using Health Care Failure Modes and Effects Analysis: The VA National Center for Patient Safety's Prospective Risk Analysis System. Joint Commission Journal on Quality Improvement. 2002;28:248–67. doi: 10.1016/s1070-3241(02)28025-6.

- Dixon NM. Med QIC Medicare Quality Improvement. Baltimore: Centers for Medicare and Medicaid Services; 2003. Sensemaking Guidelines: A Quality Improvement Tool. Available at http://www.medqic.org.

- Feldman SE, Douglas DW. Medical Accidents in Hospital Care: Applications of Failure Analysis to Hospital Quality Appraisal. Joint Commission Journal on Quality Improvement. 1997;23:567–80. doi: 10.1016/s1070-3241(16)30340-6.

- Gano DL. Apollo Root Cause Analysis: A New Way of Thinking. Houston, TX: Apollonian Publications; 1999.

- Joint Commission on Accreditation of Healthcare Organizations (JCAHO) Comprehensive Accreditation Manual for Hospitals. Chapter on Improving Organizational Performance. Standard P1.3.12 and the Intent for P13.1–The Organization Collects Data to Monitor Performance. Oakbrook Terrace, IL: JCAHO; 2003.

- Kaplan HS, Battles JB, Van der Schaaf TW, Shea CE, Mercer SQ. Identification and Classification of the Causes of Events in Transfusion Medicine. Transfusion. 1998;38(11–12):1071–81. doi: 10.1046/j.1537-2995.1998.38111299056319.x.

- Marx D. Patient Safety and the “Just Culture”: A Primer for Health Care Executives. New York: Columbia University; 2001.

- Marx DA, Slonim AD. Assessing Patient Safety Risk before the Injury Occurs: An Introduction to Sociotechnical Probabilistic Risk Modeling in Health Care. Quality and Safety in Health Care. 2003;12(Suppl II):ii33–37. doi: 10.1136/qhc.12.suppl_2.ii33.

- Olson GM, Olson JS. Ann Arbor, MI: University of Michigan; 2000. Distance Matters, REW Technical Report-0-04. Available at http://www.umich.edu/publications/tr_00_04.html.

- Spitzer C, Schmocker U, Dang VN, editors. Probabilistic Safety Assessment and Management 2004. Berlin: Springer; 2004.

- Taylor JR, Van Every EJ. The Emergent Organization: Communication as Its Site and Surface. Mahwah, NJ: Erlbaum; 2000.

- Tsatsoulis C, Amthauer HA. Finding Clusters of Similar Events within Clinical Incident Reports: A Novel Methodology Combining Case Based Reasoning and Information Retrieval. Quality & Safety in Health Care. 2003;12(Suppl II):ii24–32. doi: 10.1136/qhc.12.suppl_2.ii24.

- Wald H, Shojania KG. Root Cause Analysis. In: Shojania KG, Duncan BW, McDonald KM, Wachter RM, editors. Evidence Report/Technology Assessment Number 43: Making Healthcare Safer: A Critical Analysis of Patient Safety Practices. Rockville, MD: Agency for Healthcare Research and Quality; 2001.

- Weick KE, Roberts KH. Collective Mind in Organizations: Heedful Interrelating on Flight Decks. Administrative Science Quarterly. 1993;38(3):357–81.

- Weick KE. Sensemaking in Organizations. Thousand Oaks, CA: Sage Publications; 1995.

- Weick K. The Reduction of Medical Errors through Mindful Interdependence. In: Rosenthal MM, Sutcliffe KM, editors. Medical Error: What Do We Know? What Do We Do? San Francisco: Jossey-Bass; 2002.

- Wreathall J, Nemeth C. Assessing Risk: The Role of Probabilistic Risk Assessment (PRA) in Patient Safety Improvement. Quality and Safety in Health Care. 2004;13:206–12. doi: 10.1136/qshc.2003.006056.