Abstract

Objective

To develop and describe the use of a rubric for reinforcing critical literature evaluation skills and assessing journal article critiques presented by pharmacy students during journal club exercises.

Design

A rubric was developed, tested, and revised as needed to guide students in presenting a published study critique during the second through fourth years of a first-professional doctor of pharmacy degree curriculum and to help faculty members assess student performance and provide formative feedback. With each rubric iteration, ease of use and clarity for both evaluators and students were assessed, and modifications were made as indicated. Student feedback was obtained after using the rubric for journal article exercises, and interrater reliability of the rubric was determined.

Assessment

Student feedback regarding rubric use for preparing a clinical study critique was positive across years. Intraclass correlation coefficients were high for 11 of the 12 rubric sections, and the value for the remaining section (response to questions) was considered acceptable. The rubric went through 5 versions, with modifications based upon student feedback and faculty discussions.

Conclusion

A properly designed and tested rubric can be a useful tool for evaluating student performance during a journal article presentation; however, a rubric can take considerable time to develop. A rubric can also be a valuable student learning aid for applying literature evaluation concepts to the critique of a published study.

Keywords: journal club, rubric, literature evaluation, drug information, assessment, evidence-based medicine, advanced pharmacy practice experience

INTRODUCTION

There has been increased interest over the past decade in using evidence-based medicine (EBM) as a basis for clinical decision making. Introduced in 1992 by the McMaster University-based Evidence-Based Medicine Working Group, EBM has been defined as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients.”1 Current best evidence is disseminated via original contributions to the biomedical literature, which has expanded greatly over time. Medline, a biomedical database, indexes over 5000 biomedical journals and contains more than 15 million records.2 With this abundance of new medical information, keeping up with the literature and properly applying EBM techniques are difficult tasks. A journal club, in which a published study is reviewed and critiqued for a group, can help participants keep abreast of the literature. A properly designed journal club can also be a useful educational tool to teach and reinforce literature evaluation skills. Three common goals of journal clubs are to teach critical appraisal skills, to have an impact on clinical practice, and to keep up with the current literature.3,4 Journal clubs are a recognized part of many educational experiences for medical and pharmacy students in didactic and experiential settings, as well as for clinicians. Journal clubs have also been described as a means of teaching EBM and critical literature evaluation skills to various types of medical residents.

Cramer and Mahoney described the use of a journal club to reinforce and evaluate family medicine residents' understanding and use of EBM concepts.5 Pre- and posttests were used during each journal club to assess the residents' understanding of key EBM concepts related to the article discussed. Pretest scores improved over the year from 54.5% to 78.9% (p < 0.001) and posttest scores improved from 63.6% to 81.6% (p < 0.001), suggesting that the journal club helped residents learn to use EBM techniques. Linzer and colleagues compared a journal club to a control seminar series with regard to medical interns' reading habits, epidemiology and biostatistics knowledge, and ability to read and incorporate the medical literature into their practice of medicine.6 Forty-four interns were randomized to participate in the journal club or a seminar series. After a mean of 5 journal club sessions, 86% of the journal club group had improved their reading habits, compared to none in the seminar group. Knowledge scores increased more with the journal club, and there was a trend toward greater knowledge gains as the number of sessions attended increased. Eighty percent of the journal club participants reported improvement in their ability to incorporate the literature into medical practice, compared to 44% of the seminar group.

Journal clubs have also been used extensively to aid in the education and training of pharmacy students and residents. The journal club was a major component in 90% and 83% of drug information practice experiences offered by first-professional pharmacy degree programs and nontraditional PharmD degree programs, respectively.7

When a journal club presentation is used to promote learning, it is important that an appropriate method exists for assessing performance and providing the presenter with recommendations for improvement. Several articles have listed important questions and criteria to use when evaluating published clinical studies.8-11 However, using such questions or criteria in the form of a simple checklist (ie, indicating present or absent) does not provide judgments of the quality or depth of coverage of each item.12 A rubric is a scoring tool for performance assessments that lists the criteria for performance and describes the levels of performance for each criterion.12,13 Performance assessments are used when students are required to demonstrate application of knowledge, particularly for tasks that resemble “real-life” situations.14 This report describes the development and use of a rubric for performance assessments of “journal club” study critiques by students in the didactic curriculum and during an advanced pharmacy practice experience (APPE).

DESIGN

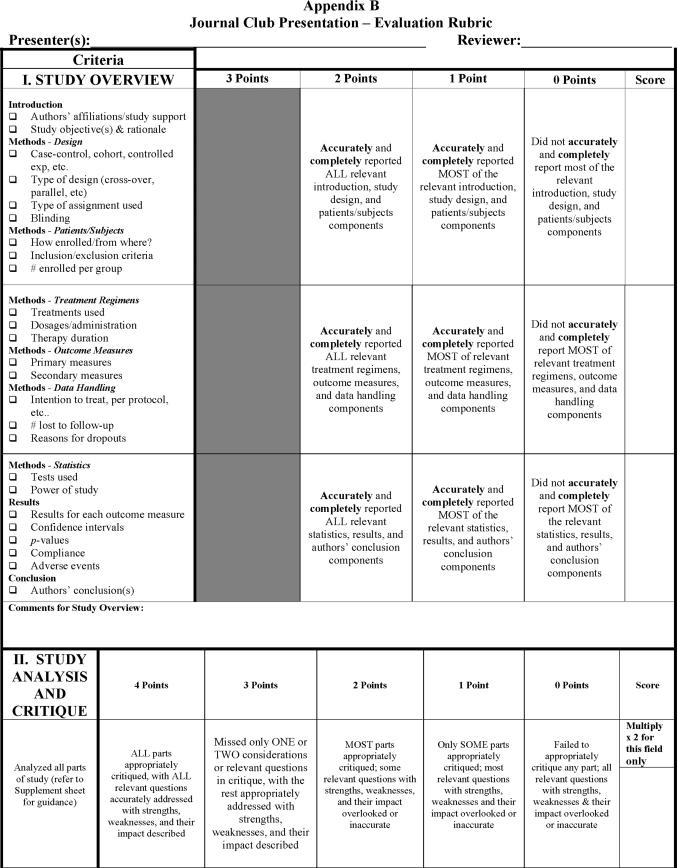

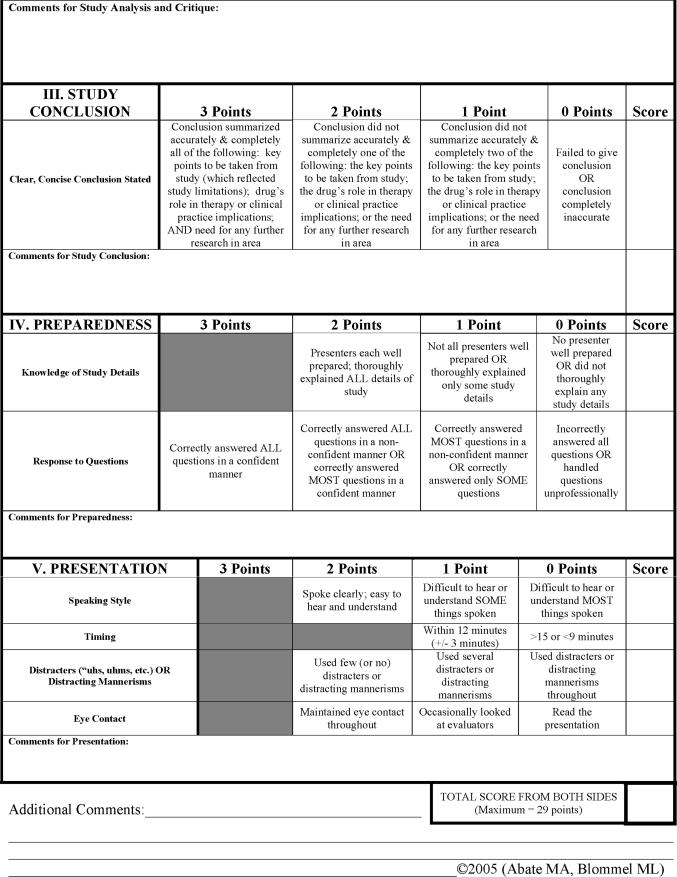

Two journal article presentations have been a required part of the elective drug information APPE at the West Virginia Center for Drug and Health Information for many years. For these presentations, students select a recent clinical study to evaluate and present their study overview and critique to the 2 primary drug information preceptors. Prior to rubric development, these presentations were evaluated using a brief checklist based upon the CONSORT criteria for reporting of randomized controlled trials.15 Work on a scoring rubric for the student presentations began in 2002. The first step in its development involved identifying the broad categories and specific criteria expected in the journal club presentation. The broad categories selected were those deemed important for a journal club presentation and included: “Content and Description,” “Study Analysis,” “Conclusion,” “Presentation Style,” and “Questions.” The criteria in “Content and Description” involved accurate and complete presentation of the study's objective(s), rationale, methods, results, and author(s)' conclusion. Other criteria within the rubric categories included important elements of statistical analyses, analysis of study strengths and weaknesses, the study drug's role in therapy, communication skills, and ability to handle questions appropriately and provide correct answers. The first version of the rubric was tested in 2003 during the drug information APPE, and several rubric deficiencies were identified. Some sections were difficult to interpret or complete consistently, other criteria did not follow a logical presentation sequence, and a few of the levels of performance were based on counts that were difficult to quantify during the presentation. For example, the criteria under “Content and Description” were too broad; a student could miss one aspect of a study's design, such as blinding, but correctly identify the rest, which made the performance difficult to score accurately with the rubric.

Version 2 of the rubric was reformatted to remedy these problems. The description and content categories were expanded to make it easier to identify the specific parts of the study that the students should describe, and the “Study Overview” category was divided into distinct parts that included introduction, study design, patients/subjects, treatment regimens, outcome measures, data handling method, dropouts per group, statistics, results, and conclusion. To facilitate ease of use by evaluators, a check box was placed next to each item within the individual parts. This format also allowed students to see in advance exactly which criteria they needed to include during their presentation, as well as any that were later missed. The use of a checklist also aided evaluators when determining the overall score assigned to the subsections within this category. “Study Analysis and Critique” directed students to refer to the “Study Overview” category as a guide to the parts of the study they should critically analyze. “Study Conclusion” divided the scoring criteria into an enumeration of key strengths, key limitations, and the conclusion of the group/individual student. “Preparedness” included criteria for knowledge of study details and handling of questions. The “Presentation” category included criteria for desired communication skills. This rubric version was tested in 8 journal club presentations during the drug information rotation, and on a larger scale in 2003 in the required medical literature evaluation course for second-professional year students. During the second-professional year journal club assignment, groups of 2 or 3 students were each given 1 published clinical study to evaluate, which they later presented to 2 evaluators: a faculty member plus either a fourth-professional year drug information rotation student or a pharmacy resident.
The faculty members evaluating students included the 2 rubric developers as well as 2 additional faculty evaluators. The evaluators first completed the rubric independently to assess student performance; evaluators then discussed their scores and jointly completed a rubric that was used for the grade. The rubric was given to the students in advance to serve as a guide when preparing their journal club presentation. In addition, to provide students with actual experience in using the rubric, 2 fourth-professional year drug information APPE students each presented a journal article critique to the second-professional year class. The fourth-professional year students first gave their presentations to the drug information preceptors as practice and to ensure that complete and accurate information would be relayed to the second-professional year class. The second-professional year students then used the rubric to evaluate the fourth-professional year students' presentations; the completed rubrics were shared with the fourth-professional year students as feedback.

Based on student and evaluator feedback at the end of the journal club assignment, additional revisions to the rubric were needed. Students stated they had difficulty determining the difference between the “Study Analysis and Critique” category and the key strengths and weaknesses parts of the rubric; they felt they were simply restating the same strengths and weaknesses. Students also felt there was insufficient time to discuss their article. The evaluators had difficulty arriving at a score for the “Study Analysis and Critique” category, and students often did not know the important aspects to focus on when critiquing a study. Revisions to the rubric included expanding the presentation time from a maximum of 12 to a maximum of 15 minutes, explaining that the strengths and weaknesses should relate to the areas listed under “Study Overview,” and stating that only the key limitations that impacted the study findings should be summarized as part of the conclusion.

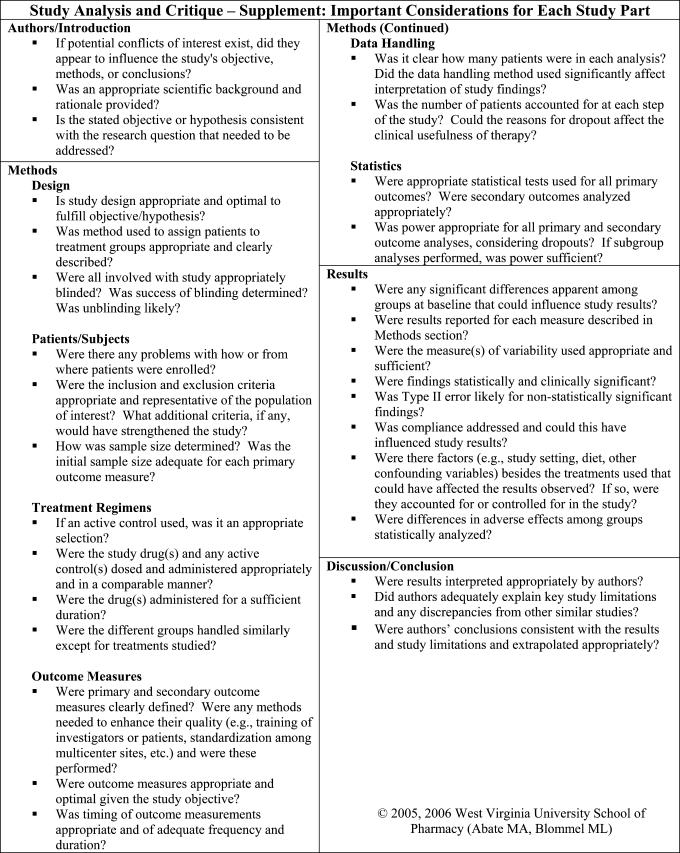

Version 3 of the rubric was tested during the 2004 journal club assignment for the second-professional year students. A brief survey was used to obtain student feedback about the rubric and the assignment as a tool for learning to apply literature evaluation skills. The rubric was revised once again based on the feedback plus evaluator observations. Throughout use of the first 3 versions of the rubric, the evaluators repeatedly noted that students skipped key areas of the analysis/critique section when presenting their journal articles. Thus, for version 4, the drug information faculty members developed a list of questions to help students identify the key considerations that should be included in their analysis (Appendix 1). To prepare this list, several sources were located that detailed questions or issues to take into account when evaluating a published study.8-11 Specific questions were also added based upon areas that were consistently overlooked or inappropriately discussed during the journal club presentations. Version 4 of the rubric was used by the 2 primary drug information preceptors to evaluate the fourth-professional year student journal club presentations during the drug information rotation. Following each fourth-professional year student's journal club presentation, each evaluator independently completed the rubric. The evaluators then met to briefly review their scores, discuss discrepancies, and modify their individual scores if desired. This step was important because one evaluator would occasionally miss a correct or incorrect statement made by a student and score the student inappropriately lower or higher for a particular section. Based upon further feedback from students and evaluators, final revisions were made to the rubric.
The final and current version (Appendix 2) was used for all subsequent fourth-professional year journal club presentations, for the second-professional year students' journal club assignments during 2005 and 2006, and for a new, similar journal club assignment added to the curriculum for third-professional year students in 2006. Feedback about the finalized rubric was obtained from the second- and third-professional year students.

To evaluate the rubric's reliability, 3 evaluators (the 2 primary drug information faculty preceptors and a drug information resident) used the final rubric to evaluate the journal club presentations of 9 consecutive fourth-professional year drug information APPE students. Intraclass correlation coefficients were calculated for each rubric section and the total score.
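The article does not state which form of the intraclass correlation coefficient was used. As a hedged illustration only, the sketch below computes one common form, the two-way random-effects, absolute-agreement, single-rater coefficient described by Shrout and Fleiss (ICC(2,1)), from a students-by-evaluators score matrix for one rubric section. The function name `icc_2_1` and the example scores are hypothetical and are not the study's data. Applied to the 6-target, 4-judge example published by Shrout and Fleiss, this formula gives approximately 0.29.

```python
from statistics import fmean


def icc_2_1(scores: list[list[float]]) -> float:
    """Two-way random-effects, absolute-agreement, single-rater ICC (ICC(2,1)).

    scores[i][j] is the score evaluator j gave to student i. This is an
    assumed ICC form for illustration; a complete matrix is required.
    """
    n = len(scores)       # number of students (rows)
    k = len(scores[0])    # number of evaluators (columns)
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with at least 2 students and 2 evaluators")

    grand = fmean(x for row in scores for x in row)
    row_means = [fmean(row) for row in scores]
    col_means = [fmean(row[j] for row in scores) for j in range(k)]

    # Sums of squares from a two-way ANOVA without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between students
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between evaluators
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_rows - ms_error) / denominator


if __name__ == "__main__":
    # Hypothetical 0-3 section scores: 9 students (rows) x 3 evaluators (columns).
    section_scores = [
        [3, 3, 3], [2, 2, 3], [3, 2, 3],
        [1, 1, 1], [2, 2, 2], [3, 3, 2],
        [0, 1, 0], [2, 3, 2], [1, 1, 2],
    ]
    print(f"ICC(2,1) = {icc_2_1(section_scores):.3f}")
```

Other ICC forms (for example, consistency rather than absolute agreement, or averaged-rater coefficients) use different mean-square combinations, so the form should be reported alongside the value.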

ASSESSMENT

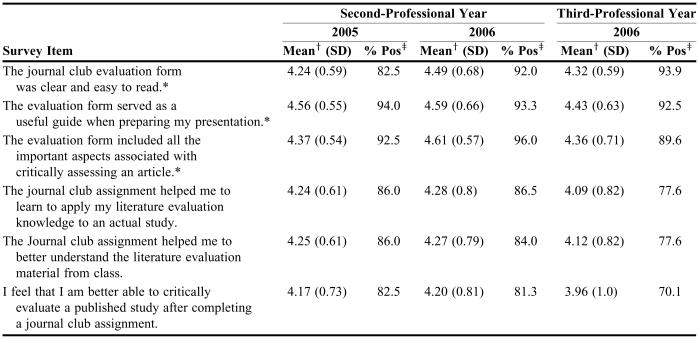

Five versions of the rubric were developed over a 3-year period. Most of the revisions involved formatting changes, clarifications in wording, and additions to the criteria. However, the change that appeared to have the greatest positive impact on the student presentations was the addition of the specific questions that should be considered during the study analysis and critique. Feedback from second- and third-professional year students on the final version of the rubric is shown in Table 1 and was very positive overall. Representative comments from the students included: “Very helpful for putting the class info to use,” “Great technique for putting all concepts together,” and “This assignment helped me to become more comfortable with understanding medical studies.” The suggestions for change primarily involved providing points for the assignment (it was graded pass/fail for the second-professional year students), better scheduling (the journal club assignment was due at the end of the semester when several other assignments or tests were scheduled), and providing more pre-journal club assistance and guidance to students. A small number of students indicated they still found it confusing to critique a study after the journal club assignment, which was expected since literature evaluation skills take considerable practice and experience to master.

Table 1.

Pharmacy Students' Feedback Concerning a Journal Club Assignment in Which the Rubric Was Used for Evaluation

*Items specific to rubric

†Based on a 5-point Likert scale ranging from 1 = strongly disagree to 5 = strongly agree

‡Positive response = agree or strongly agree

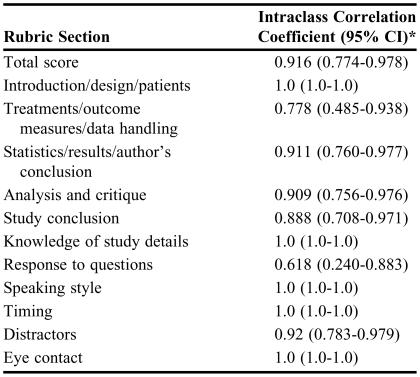

A survey of 7 recent fourth-professional year students who used the rubric to prepare for journal club presentations and who were also evaluated using the rubric found that all of the students agreed or strongly agreed with each item shown in Table 1. One representative comment was, “I was surprised at how articles appear to be good when I first read them but then after going through them again and using the form, I was able to find so many more limitations than I expected. I definitely feel that journal club has helped me to interpret studies better than I had been able to in the past.” Several fourth-professional year students took the rubric with them to use during other rotations that required a journal club presentation.

After establishing that the rubric was user-friendly for evaluators and that students could clearly follow and differentiate the various sections, the reliability of the rubric in each of the 12 rating areas was determined (Table 2). Intraclass correlation coefficients indicated a high level of agreement among evaluators for 11 of the 12 areas. A coefficient of 0.618 was found for the section involving the students' responses to questions. This value was still considered acceptable, given that the small scale used in the rubric (0-3 points) and the relatively small number of observations produced fairly low variability in ratings, which lowers the intraclass correlation coefficient. The intraclass correlation coefficients were calculated using the fourth-professional year students' journal club evaluations from the drug information rotation. Thus, by necessity, the evaluators consisted of the 2 primary faculty drug information preceptors and a drug information resident. These evaluators had previously used the rubric, and the 2 faculty evaluators had developed it. This familiarity with the sections of the rubric may have increased the level of agreement between evaluators.
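The point that low rating variability on a 0-3 scale can depress the intraclass correlation coefficient can be illustrated with a small hypothetical example. The sketch below again assumes the ICC(2,1) form (the article does not name the form used) and scores two made-up sets of 9 students by 3 evaluators that contain exactly the same single one-point disagreement. In the restricted-range set nearly every student receives a 3; in the other set, student scores are spread across the whole 0-3 scale. The data and names are illustrative assumptions, not the study's ratings.

```python
from statistics import fmean


def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single rater (assumed form)."""
    n, k = len(scores), len(scores[0])
    grand = fmean(x for row in scores for x in row)
    # Mean squares for students (rows) and evaluators (columns).
    ms_rows = k * sum((fmean(r) - grand) ** 2 for r in scores) / (n - 1)
    ms_cols = n * sum((fmean(r[j] for r in scores) - grand) ** 2 for j in range(k)) / (k - 1)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    # Residual mean square = what is left after removing student and evaluator effects.
    ms_error = (ss_total - ms_rows * (n - 1) - ms_cols * (k - 1)) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


# Hypothetical scores (not study data): 9 students x 3 evaluators on a 0-3 scale.
# Both sets share one disagreement: evaluator 3 gives student 1 a 2 instead of a 3.
RESTRICTED = [[3, 3, 2]] + [[3, 3, 3]] * 7 + [[2, 2, 2]]  # nearly everyone scores 3
SPREAD = [[3, 3, 2], [3, 3, 3], [2, 2, 2], [2, 2, 2], [1, 1, 1],
          [1, 1, 1], [0, 0, 0], [0, 0, 0], [2, 2, 2]]     # scores span 0-3

for label, data in (("restricted range", RESTRICTED), ("full 0-3 range", SPREAD)):
    print(f"{label}: ICC(2,1) = {icc_2_1(data):.3f}")
```

With these hypothetical scores, the restricted-range set yields an ICC of roughly 0.73 and the spread-out set roughly 0.97, even though evaluator disagreement is identical: when students differ little from one another, the same disagreement accounts for a larger share of the total variance.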

Table 2.

Rubric Intraclass Correlation Coefficients (N = 9)

*95% confidence interval

About 5 minutes is required for an individual evaluator to complete the rubric, with an additional 5 minutes needed for score comparison and discussion. In almost all cases, the reasons for any differences were easily identified through discussion and resulted from an evaluator simply missing or not correctly hearing what was said during the presentation. In general, evaluators found that the rubric was easy to use and allowed them to assess literature evaluation skills consistently without an extensive time commitment.

DISCUSSION

A rubric can be a useful tool for evaluating student performance in presenting and critiquing published clinical studies, as well as a valuable learning aid for students. However, developing a rubric that appropriately guides students in achieving the targeted performance, provides proper student feedback, and is user-friendly and reliable for evaluators requires a significant initial investment of time and effort. Multiple pilot tests of the rubric are generally required, with subsequent modifications needed to improve and refine the rubric's utility as an evaluation and learning tool. Once the rubric is developed, though, it can be used to quickly evaluate student performance in a more consistent manner.

As part of the development and use of a rubric, it is important that the rubric's criteria be thoroughly reviewed with students and that students be given the opportunity to observe examples of desired performance. Once a rubric is used to evaluate student performance, the completed rubric should be shared with students so they can identify areas of deficiency. This feedback helps students appropriately modify their performance.

The journal club evaluation rubric can be used when teaching literature evaluation skills throughout all levels of education and training. Students early in their education will probably need to rely heavily on the supplemental questions to help them identify key considerations when analyzing a study. However, as students gain practice and experience and their literature evaluation skills are reinforced in actual clinical situations, their need to consult the supplemental questions should diminish.

CONCLUSION

Despite the considerable time and effort invested, the evaluation rubric has proven to be a valuable and ultimately timesaving tool for evaluating student performance when presenting a published study review and critique. More importantly, the rubric has provided students with clear expectations and a guide for desired performance.

Appendix 1. Study Analysis and Critique – Supplement

Appendix 2. Final evaluation rubric for journal club presentations

REFERENCES

- 1. Kuhn JG, Wyer PC, Cordell WH, et al. A survey to determine the prevalence and characteristics of training in evidence based medicine in emergency medicine residency programs. J Emerg Med. 2005;28:353–9. doi: 10.1016/j.jemermed.2004.09.015.

- 2. NCBI PubMed [PubMed Overview]. Bethesda, MD: National Library of Medicine and National Institutes of Health; 2006. Available from: http://www.ncbi.nlm.nih.gov/entrez/query/static/overview.html Accessed November 30, 2006.

- 3. Dirschl DR, Tornetta PT, Bhandari M. Designing, conducting, and evaluating journal clubs in orthopaedic surgery. Clin Orthop Relat Res. 2003;413:146–57. doi: 10.1097/01.blo.0000081203.51121.25.

- 4. Heiligman RM. Resident evaluation of a family practice residency journal club. Fam Med. 1991;23:152–3.

- 5. Cramer JS, Mahoney MC. Introducing evidence based medicine to the journal club, using a structured pre and post test: a cohort study [e-publication]. BMC Med Educ. 2001;1:6. doi: 10.1186/1472-6920-1-6.

- 6. Linzer M, Brown JT, Frazier LM, et al. Impact of a medical journal club on house-staff reading habits, knowledge, and critical appraisal skills. JAMA. 1988;260:2537–41.

- 7. Cole SW, Berensen NM. Comparison of drug information practice curriculum components in US colleges of pharmacy. Am J Pharm Educ. 2005;69(2) Article 34.

- 8. Task force of Academic Medicine and the GEA-RIME Committee. Appendix 1: Checklist of review criteria. Acad Med. 2001;76:958–9.

- 9. Askew JP. Journal Club 101 for the new practitioner: evaluation of a clinical trial. Am J Health-Syst Pharm. 2004;61:1885–7. doi: 10.1093/ajhp/61.18.1885.

- 10. Krogh CL. A checklist system for critical review of medical literature. Med Educ. 1985;19:392–5. doi: 10.1111/j.1365-2923.1985.tb01343.x.

- 11. Coomarasamy A, Latthe P, Papaioannou S, et al. Critical appraisal in clinical practice: sometimes irrelevant, occasionally invalid. J R Soc Med. 2001;94:573–7. doi: 10.1177/014107680109401105.

- 12. Arter J, McTighe J. Scoring Rubrics in the Classroom. Thousand Oaks, Calif: Corwin Press, Inc; 2001. pp. 1–29.

- 13. Mertler CA. Designing scoring rubrics for your classroom. Pract Assess Research Eval. 2001;7(25). Available from: http://pareonline.net/getvn.asp?v=7&n=25.

- 14. Moskal BM. Recommendations for developing classroom performance assessments and scoring rubrics. Pract Assess Research Eval. 2003;8(14). Available from: http://pareonline.net/getvn.asp?v=8&n=14.

- 15. Altman DG, Schulz KF, Moher D, et al. The revised CONSORT statement for reporting randomized trials: explanation and elaboration. Ann Intern Med. 2001;134:663–94. doi: 10.7326/0003-4819-134-8-200104170-00012.