Abstract

An instance of a random constraint satisfaction problem defines a random subset 𝒮 (the set of solutions) of a large product space XN (the set of assignments). We consider two prototypical problem ensembles (random k-satisfiability and q-coloring of random regular graphs) and study the uniform measure with support on S. As the number of constraints per variable increases, this measure first decomposes into an exponential number of pure states (“clusters”) and subsequently condensates over the largest such states. Above the condensation point, the mass carried by the n largest states follows a Poisson-Dirichlet process. For typical large instances, the two transitions are sharp. We determine their precise location. Further, we provide a formal definition of each phase transition in terms of different notions of correlation between distinct variables in the problem. The degree of correlation naturally affects the performances of many search/sampling algorithms. Empirical evidence suggests that local Monte Carlo Markov chain strategies are effective up to the clustering phase transition and belief propagation up to the condensation point. Finally, refined message passing techniques (such as survey propagation) may also beat this threshold.

Keywords: message passing algorithms, phase transitions, random graphs

Constraint satisfaction problems (CSPs) arise in a large spectrum of scientific disciplines. An instance of a CSP is said to be satisfiable if there exists an assignment of N variables (x1, x2, …, xN)  x, xi ∈ 𝒳 (𝒳 being a finite alphabet), which satisfies all of the constraints within a given collection. The problem is in finding such an assignment or showing that the constraints are unsatisfiable. More precisely, one is given a set of functions ψa : 𝒳k → {0, 1}, with a ∈ {1, …, M}

x, xi ∈ 𝒳 (𝒳 being a finite alphabet), which satisfies all of the constraints within a given collection. The problem is in finding such an assignment or showing that the constraints are unsatisfiable. More precisely, one is given a set of functions ψa : 𝒳k → {0, 1}, with a ∈ {1, …, M}  [M] and of k-tuples of indices {ia(1), …, ia(k)} ⊆ [N], and has to establish whether there exists x ∈ 𝒳N such that ψa (xia(1), …, xia(k)) = 1 for all as. In this article we shall consider two well known families of CSPs [both known to be NP-complete (1)]:

[M] and of k-tuples of indices {ia(1), …, ia(k)} ⊆ [N], and has to establish whether there exists x ∈ 𝒳N such that ψa (xia(1), …, xia(k)) = 1 for all as. In this article we shall consider two well known families of CSPs [both known to be NP-complete (1)]:

(i) k-satisfiability (k-SAT) with k ≥ 3. In this case 𝒳 = {0, 1}. The constraints are defined by fixing a k-tuple [za(1), …, za(k)] for each a, and setting ψa(xia(1), …, xia(k)) = 0 if (xia(1), …, xia(k) = (za(1), …, za(k)) and = 1 otherwise.

(ii) q-coloring (q-COL) with q ≥ 3. Given a graph G with N vertices and M edges, one is asked to assign colors xi ∈ 𝒳

{1, …, q} to the vertices in such a way that no edge has the same color at both ends.

{1, …, q} to the vertices in such a way that no edge has the same color at both ends.

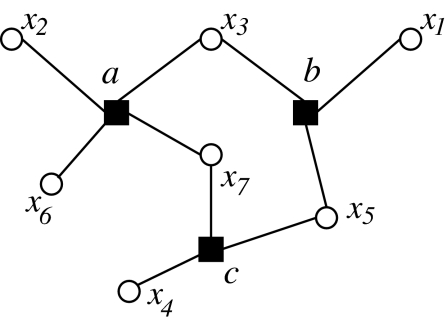

The optimization (maximize the number of satisfied constraints) and counting (count the number of satisfying assignments) versions of this problems are defined straightforwardly. It is also convenient to represent CSP instances as factor graphs (2), i.e., bipartite graphs with vertex sets [N], [M] including an edge between node i ∈ [N] and a ∈ [M] if, and only if, the ith variable is involved in the ath constraint (compare Fig. 1). This representation allows one to define naturally a distance d(i, j) between variable nodes.

Fig. 1.

The factor graph of a small CSP allows to define the distance d(i, j) between variables xi and xj (filled squares are constraints and empty circles variables). Here, for instance, d(6, 1) = 2 and d(3, 5) = 1.

Ensembles of random CSPs (rCSPs) were introduced (see e.g., ref. 3) with the hope of discovering generic mathematical phenomena that could be exploited in the design of efficient algorithms. Indeed several search heuristics, such as Walk-SAT (4) and “myopic” algorithms (5) have been successfully analyzed and optimized over rCSP ensembles. The most spectacular advance in this direction has probably been the introduction of a new and powerful message passing algorithm (survey propagation, SP) (6). The original justification for SP was based on the (nonrigorous) cavity method from spin glass theory. Subsequent work proved that standard message passing algorithms (such as belief propagation, BP) can indeed be useful for some CSPs (7–9). Nevertheless, the fundamental reason for the (empirical) superiority of SP in this context remains to be understood and is a major open problem in the field. Building on a refined picture of the solution set of rCSP, this article provides a possible (and testable) explanation. We consider two ensembles that have attracted the majority of work in the field: (i) random k-SAT: each k-SAT instance with N variables and M = Nα clauses is considered with the same probability; (ii) q-COL on random graphs: the graph G is uniformly random among the ones over N vertices, with uniform degree l (the number of constraints is therefore M = Nl/2).

Phase Transitions in rCSP

It is well known that rCSPs may undergo phase transitions as the number of constraints per variable α is varied.g The best known of such phase transitions is the SAT-UNSAT one: as α crosses a critical value αs(k) (that can, in principle, depend on N), the instances pass from being satisfiable to unsatisfiable with high probabilityh (10). For k-SAT, it is known that αs(2) = 1. A conjecture based on the cavity method was put forward in ref. 6 for all k ≥ 3 that implied in particular the values presented in Table 1 and αs(k) = 2k log 2 − (1 + log 2)/2 + O (2−k) for large k (11). Subsequently, it was proved that αs(k) = 2k log 2 − O(k), confirming this asymptotic behavior (12). An analogous conjecture for q-COL was proposed in ref. 13 yielding, for regular random graphs (14), the values reported in Table 1 and ls(q) = 2q log q − log q − 1 + o(1) for large q [according to our convention, random graphs are with high probability uncolorable if l ≥ ls(q)]. It was proved in refs. 12 and 15 that ls(q) = 2q log q − O(log q).

Table 1.

Critical connectivities for the dynamic, condensation, and satisfiability transitions in k-SAT and q-COL

Even more interesting and challenging are phase transitions in the structure of the set 𝒮 ⊆ 𝒳N of solutions of rCSP's (“structural” phase transitions). Assuming the existence of solutions, a convenient way of describing 𝒮 is to introduce the uniform measure over solutions μ(x):

|

where Z ≥ 1 is the number of solutions. Let us stress that, since 𝒮 depends on the rCSP instance, μ is itself random.

is itself random.

We shall now introduce a few possible “global” characterizations of the measure μ . Each one of these properties has its counterpart in the theory of Gibbs measures, and we shall partially adopt that terminology here (17).

. Each one of these properties has its counterpart in the theory of Gibbs measures, and we shall partially adopt that terminology here (17).

To define the first of such characterizations, we let i ∈ [N] be a uniformly random variable index, denote as xℓ the vector of variables whose distance from i is at least ℓ, and by μ(xi|xℓ) the marginal distribution of xi given xℓ. Then we say that the measure (Eq. 1) satisfies the uniqueness condition if for any given i ∈ [N],

as ℓ → ∞ (here and below the limit N → ∞ is understood to be taken before ℓ → ∞). This expresses a “worst case” correlation decay condition. Roughly speaking: the variable xi is (almost) independent of the far apart variables xℓ irrespective of the instance realization and the variables distribution outside the horizon of radius ℓ. The threshold for uniqueness (above which uniqueness ceases to hold) was estimated in ref. 9 for random k-SAT, yielding αu(k) = (2 log k)/k [1 + o(1)] (which is asymptotically close to the threshold for the pure literal heuristics) and in ref. 18 for coloring implying lu(q) = q for q large enough (a “numerical” proof of the same statement exists for small q). Below such thresholds BP can be proved to return good estimates of the local marginals of the distribution (Eq. 1).

Notice that the uniqueness threshold is far below the SAT-UNSAT threshold. Furthermore, several empirical studies (19, 20) pointed out that BP [as well as many other heuristics (4, 5)] is effective up to much larger values of the clause density. In a remarkable series of papers (6, 21), statistical physicists argued that a second structural phase transition is more relevant than the uniqueness one. Following this literature, we shall refer to this as the “dynamic phase transition” and denote the corresponding threshold as αd(k) [or ld(q)]. To precise this notion, we provide here two alternative formulations corresponding to two distinct intuitions. According to the first one, above αd(k) the variables (x1, …, xN) become globally correlated under μ . The criterion in 2 is replaced by one in which far apart variables xℓ are themselves sampled from μ (“extremality” condition):

. The criterion in 2 is replaced by one in which far apart variables xℓ are themselves sampled from μ (“extremality” condition):

as ℓ → ∞. The infimum value of α (respectively l) such that this condition is no longer fulfilled is the threshold αd(k) (ld(k)). Of course this criterion is weaker than the uniqueness one [hence αd(k) ≥ αu(k)].

According to the second intuition, above αd(k), the measure (Eq. 1) decomposes into a large number of disconnected “clusters.” This means that there exists a partition {An}n=1 … 𝒩 of 𝒳N (depending on the instance) such that: (i) one cannot find n such that μ(An) → 1; (ii) denoting by ∂ϵA the set of configurations x ∈ 𝒳N\A whose Hamming distance from A is at most Nϵ, we have μ(∂ϵAn)/μ(An)(1 − μ(An)) → 0 exponentially fast in N for all n and ϵ small enough. Notice that the measure μ can be decomposed as:

|

where wn  μ(An) and μn

μ(An) and μn

μ( · | An). We shall always refer to {An} as the “finer” partition with these properties.

μ( · | An). We shall always refer to {An} as the “finer” partition with these properties.

The above ideas are obviously related to the performance of algorithms. For instance, the correlation decay condition in Eq. 3 is likely to be sufficient for approximate correctness of BP on random formulae. Also, the existence of partitions as above implies exponential slowing down in a large class of Monte Carlo Markov chain sampling algorithms.i

Recently, some important rigorous results were obtained supporting this picture (22, 23). However, even at the heuristic level, several crucial questions remain open. The most important concern the distribution of the weights {wn}: are they tightly concentrated (on an appropriate scale) or not? A (somewhat surprisingly) related question is: can the absence of decorrelation above αd(k) be detected by probing a subset of variables bounded in N?

SP (6) can be thought as an inference algorithm for a modified graphical model that gives unit weight to each cluster (20, 24), thus tilting the original measure toward small clusters. The resulting performances will strongly depend on the distribution of the cluster sizes wn. Further, under the tilted measure, αd(k) is underestimated because small clusters have a larger impact. The correct value was never determined (but see ref. 16 for coloring). Mézard et al. (25) undertook the technically challenging task of determining the cluster size distribution without, however, clarifying several of its properties.

In this article we address these issues and unveil at least two unexpected phenomena. Our results are described in Results and Discussion and summarized in Fig. 2. Finally, we discuss the connection with the performances of SP. Some technical details of the calculation are collected in Cavity Formalism, Tree Reconstruction, and SP.

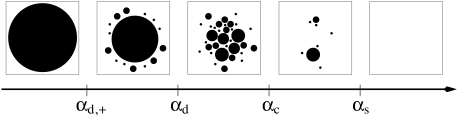

Fig. 2.

Pictorial representation of the different phase transitions in the set of solutions of a rCSP. At αd,+ some clusters appear, but for αd,+ < α < αd they comprise only an exponentially small fraction of solutions. For αd < α < αc the solutions are split among about eNΣ∗ clusters of size eNs∗. If αc < α < αs the set of solutions is dominated by a few large clusters (with strongly fluctuating weights), and above αs the problem does not admit solutions any more.

Results and Discussion

The formulation in terms of extremality condition (see Eq. 3) allows for an heuristic calculation of the dynamic threshold αd(k). Previous attempts were based instead on the cavity method, which is an heuristic implementation of the definition in terms of pure state decomposition (see Eq. 4). Generalizing the results of ref. 16, it is possible to show that the two calculations provide identical results. However, the first one is technically simpler and under much better control. As mentioned above we obtain, for all k ≥ 4 a value of αd(k) larger than the one quoted in refs. 6 and 11.

Further we determined the distribution of cluster sizes wn, thus unveiling a third “condensation” phase transition at αc(k) ≥ αd(k) (strict inequality holds for k ≥ 4 in SAT and q ≥ 4 in coloring, see below). For α < αc(k) the weights wn concentrate on a logarithmic scale [namely, −log wn is θ(N) with θ(N1/2) fluctuations]. Roughly speaking, the measure is evenly split among an exponential number of clusters.

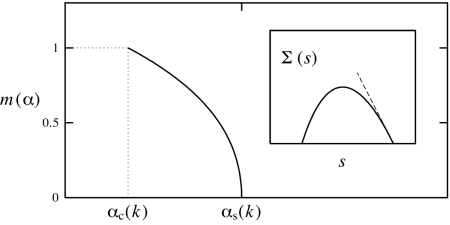

For α > αc(k) [and < αs(k)] the measure is carried by a subexponential number of clusters. More precisely, the ordered sequence {wn} converges to a well known Poisson-Dirichlet process {w∗n}, first recognized in the spin glass context by Ruelle (26). This is defined by w∗n = xn/Σxn, where xn > 0 are the points of a Poisson process with rate x−1−m(α) and m(α) ∈ (0, 1). This picture is known in spin glass theory as one-step replica symmetry breaking (1RSB) and has been proven in ref. 27 for some special models. The Parisi 1RSB parameter m(α) is monotonically decreasing from 1 to 0 when α increases from αc(k) to αs(k) (see Fig. 3).

Fig. 3.

The Parisi 1RSB parameter m(α) as a function of the constraint density α. In the Inset, the complexity Σ(s) as a function of the cluster entropy for α = αs(k) − 0.1 [the slope at Σ(s) = 0 is −m(α)]. Both curves have been computed from the large k expansion.

Remarkably, the condensation phase transition is also linked to an appropriate notion of correlation decay. If i(1), …, i(n) ∈ [N] are uniformly random variable indices, then, for α < αc(k) and any fixed n:

as N → ∞. Conversely, the quantity on the left side of Eq. 5 remains positive for α > αc(k). It is easy to understand that this condition is even weaker than the extremality one (compare Eq. 3) in that we probe correlations of finite subsets of the variables. In the next two sections we discuss the calculation of αd and αc.

Dynamic Phase Transition and Gibbs Measure Extremality.

A rigorous calculation of αd(k) along any of the two definitions provided above (compare Eqs. 3 and 4) remains an open problem. Each of the two approaches has, however, an heuristic implementation that we shall now describe. It can be proved that the two calculations yield equal results as further discussed in the last section.

The approach based on the extremality condition in Eq. 3 relies on an easy-to-state assumption and typically provides a more precise estimate. We begin by observing that, because of the Markov structure of μ , it is sufficient for Eq. 3 to hold that the same condition is verified by the correlation between xi and the set of variables at distance exactly ℓ from i, that we shall keep denoting as xℓ. The idea is then to consider a large yet finite neighborhood of i. Given ℓ̄ ≥ ℓ, the factor graph neighborhood of radius ℓ̄ around i converges in distribution to the radius-ℓ̄ neighborhood of the root in a well defined random tree factor graph T.

, it is sufficient for Eq. 3 to hold that the same condition is verified by the correlation between xi and the set of variables at distance exactly ℓ from i, that we shall keep denoting as xℓ. The idea is then to consider a large yet finite neighborhood of i. Given ℓ̄ ≥ ℓ, the factor graph neighborhood of radius ℓ̄ around i converges in distribution to the radius-ℓ̄ neighborhood of the root in a well defined random tree factor graph T.

For coloring of random regular graphs, the correct limiting tree model T is coloring on the infinite l-regular tree. For random k-SAT, T is defined by the following construction. Start from the root variable node and connect it to l new function nodes (clauses), l being a Poisson random variable of mean kα. Connect each of these function nodes with k − 1 new variables and repeat. The resulting tree is infinite with nonvanishing probability if α > 1/k(k− 1). Associate a formula to this graph in the usual way, with each variable occurrence being negated independently with probability 1/2.

The basic assumption within the first approach is that the extremality condition in Eq. 3 can be checked on the correlation between the root and generation-ℓ variables in the tree model. On the tree, μ is defined to be a translation invariant Gibbs measure (17) associated to the infinite factor graphj T (which provides a specification). The correlation between the root and generation-ℓ variables can be computed through a recursive procedure (defining a sequence of distributions P̄ℓ, see Eq. 15 below). The recursion can be efficiently implemented numerically yielding the values presented in Table 1 for k (resp. q) = 4, 5, 6. For large k (resp. q) one can formally expand the equations on Pℓ and obtain:

is defined to be a translation invariant Gibbs measure (17) associated to the infinite factor graphj T (which provides a specification). The correlation between the root and generation-ℓ variables can be computed through a recursive procedure (defining a sequence of distributions P̄ℓ, see Eq. 15 below). The recursion can be efficiently implemented numerically yielding the values presented in Table 1 for k (resp. q) = 4, 5, 6. For large k (resp. q) one can formally expand the equations on Pℓ and obtain:

with γd = 1 (under a technical assumption of the structure of Pℓ).

The second approach to the determination of αd(k) is based on the “cavity method” (6, 25). It begins by assuming a decomposition in pure states of the form 4 with two crucial properties: (i) if we denote by Wn the size of the nth cluster (and hence wn = Wn/Σ Wn), then the number of clusters of size Wn = eNs grows approximately as eNΣ(s); (ii) for each single-cluster measure μn , a correlation decay condition of the form 3 holds.

, a correlation decay condition of the form 3 holds.

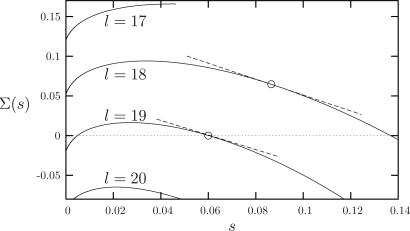

The approach aims at determining the rate function Σ(s), complexity: the result is expressed in terms of the solution of a distributional fixed point equation. For the sake of simplicity we describe here the simplest possible scenariok resulting from such a calculation (compare Fig. 4). For α < αd,−∞(k) the cavity fixed point equation does not admit any solution: no clusters are present. At αd,−∞(k) a solution appears, eventually yielding, for α > αd,+ a non-negative complexity Σ(s) for some values of s ∈ ℝ+. The maximum and minimum such values will be denoted by smax and smin. At a strictly larger value αd,0(k), Σ(s) develops a stationary point (local maximum). It turns out that αd,0(k) coincides with the threshold computed in refs. 6, 11, and 14. In particular, αd,0(4) ≈ 8.297, αd,0(5) ≈ 16.12, αd,0(6) ≈ 30.50 and ld,0(4) = 9, ld,0(5) = 13, ld,0(6) = 17. For large k (resp. q), αd,0(k) admits the same expansion as in Eqs. 6 and 7 with γd,0 = 1 − log 2. However, up to the larger value αd(k), the appearance of clusters is irrelevant from the point of view of μ . In fact, within the cavity method it can be shown that eN[s+Σ(s)] remains exponentially smaller than the total number of solutions Z: most of the solutions are in a single cluster. The value αd(k) is determined by the appearance of a point s∗ with Σ′(s∗) = −1 on the complexity curve. Correspondingly, one has Z ≈ eN[Σ(s∗)+s∗]: most of the solutions are comprised in clusters of size about eNs∗. The entropy per variable φ = limN→∞ N−1 log Z remains analytic at αd(k).

. In fact, within the cavity method it can be shown that eN[s+Σ(s)] remains exponentially smaller than the total number of solutions Z: most of the solutions are in a single cluster. The value αd(k) is determined by the appearance of a point s∗ with Σ′(s∗) = −1 on the complexity curve. Correspondingly, one has Z ≈ eN[Σ(s∗)+s∗]: most of the solutions are comprised in clusters of size about eNs∗. The entropy per variable φ = limN→∞ N−1 log Z remains analytic at αd(k).

Fig. 4.

The complexity function [the number of clusters with entropy density s is eNΣ(s)] for the six-colorings of l-regular graphs with l ∈ {17, 18, 19, 20}. Circles indicate the dominating states with entropy s∗; the dashed lines have slopes Σ′(s∗) = −1 for l = 18 and Σ′(s∗) = −0.92 for l = 19. The dynamic phase transition is ld(6) = 18, the condensation one ld(6) = 19, and the SAT-UNSAT one ls(6) = 20.

Condensation Phase Transition.

As α increases above αd, Σ(s∗) decreases: clusters of highly correlated solutions may no longer satisfy the newly added constraints. In Fig. 5Inset, we show the α dependency of Σ(s∗) for 4-SAT. In the large k limit, with α = ρ2k we get Σ(s∗) = log 2 − ρ − log 2 e−kρ + O(2−k), and s∗ = log 2e−kρ + O(2−k).

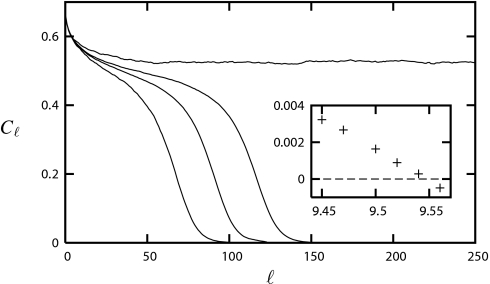

Fig. 5.

Correlation function (3) between the root and generation ℓ variables in a random k-SAT tree formula. Here k = 4 and (from bottom to top) α = 9.30, 9.33, 9.35, 9.40 [recall that αd(4) ≈ 9.38]. In the Inset, the complexity Σ(s∗) of dominant clusters as a function of α for 4-SAT.

The condensation point αc(k) is the value of α such that Σ(s∗) vanishes: above αc(k), most of the measure is contained in a subexponential number of large clustersl. Our estimates for αc(k) are presented in Table 1 [see also Fig. 4 for Σ(s) in the six-coloring] while in the large-k limit we obtain αc(k) = 2k log 2 − 3/2 log 2 + O(2−k) [recall that the SAT-UNSAT transition is at αs(k) = 2k log 2 − (1 + log 2)/2 + O(2−k)] and lc(q) = 2q log q − log q − 2 log 2 + o(1) [with the COL-UNCOL transition at ls(q) = 2q log q − log q − 1 + o(1)]. Technically, the size of dominating clusters is found by maximizing Σ(s) + s over the s interval on which Σ(s) ≥ 0. For α ∈ [αc(k), αs(k)], the maximum is reached at smax, with Σ(smax) = 0 yielding φ = smax. It turns out that the solutions are comprised within a finite number of clusters, with entropy eNsmax+Δ, where Δ = θ(1). The shifts Δ are asymptotically distributed according to a Poisson point process of rate e−m(α)Δ with m(α) = −Σ′(smax). This leads to the Poisson Dirichlet distribution of weights discussed above. Finally, the entropy per variable φ is nonanalytic at αc(k).

Let us conclude by stressing two points. First, we avoided the 3-SAT and three-coloring cases. These cases [as well as the three-coloring on Erdös-Rényi graphs (25)] are particular in that the dynamic transition point αd is determined by a local instability [a Kesten-Stigum (28, 29) condition, see also ref. 21], yielding αd(3) ≈ 3.86 and ld(3) = 6 (the case l = 5, q = 3 being marginal). Related to this is the fact that αc = αd: throughout the clustered phase, the measure is dominated by a few large clusters [technically, Σ(s∗) < 0 for all α > αd]. Second, we did not check the local stability of the 1RSB calculation. By analogy with ref. 30, we expect that an instability can modify the curve Σ(s) but not the values of αd and αc.

Algorithmic Implications.

Two message passing algorithms were studied extensively on random k-SAT: BP and SP (mixed strategies were also considered in refs. 19 and 20). A BP message νu→v(x) between nodes u and v on the factor graph is usually interpreted as the marginal distribution of xu (or xv) in a modified graphical model. An SP message is instead a distribution over such marginals Pu→v(ν). The empirical superiority of SP is usually attributed to the existence of clusters (6): the distribution Pu→v(ν) is a survey of the marginal distribution of xu over the clusters. As a consequence, according to the standard wisdom, SP should outperform BP for α > αd(k).

This picture, however, has several problems. Let us list two of them. First, it seems that essentially local algorithms (such as message passing ones) should be sensitive only to correlations among finite subsets of the variablesm, and these remain bounded up to the condensation transition. Recall in fact that the extremality condition in Eq. 3 involves a number of variables unbounded in N, while the weaker in Eq. 5 is satisfied up to αc(k).

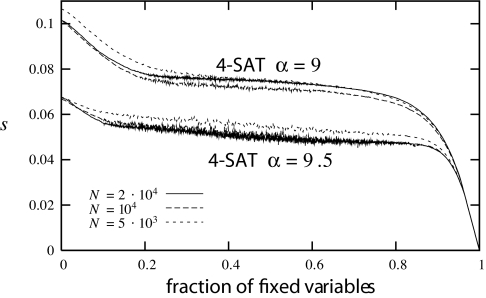

Second, it would be meaningful to weight uniformly the solutions when computing the surveys Pu→v(ν). In the cavity method jargon, this corresponds to using a 1RSB Parisi parameter r = 1 instead of r = 0 as is done in ref. 6. It is a simple algebraic fact of the cavity formalism that for r = 1 the means of the SP surveys satisfy the BP equations. Since the means are the most important statistics used by SP to find a solution, BP should perform roughly as SP. Both arguments suggest that BP should perform well up to the condensation point αc(k). We tested this conclusion on 4-SAT at α = 9.5 ∈ [αd(4), αc(4)], through the following numerical experiment (compare Fig. 6). (i) Run BP for tmax iterations. (ii) Compute the BP estimates νi(x) for the single-bit marginals and choose the one with largest bias. (iii) Fix xi = 0 or 1 with probabilities νi(0), νi(1). (iv) Reduce the formula accordingly (i.e., eliminate the constraints satisfied by the assignment of xi and reduce the ones violated). This cycle is repeated until a solution is found or a contradiction is encountered. If the marginals νi were correct, this procedure would provide a satisfying assignment sampled uniformly from μ

were correct, this procedure would provide a satisfying assignment sampled uniformly from μ . In fact, we found a solution with finite probability (≈0.4), despite the fact that α > αd(4). The experiment was repeated at α = 9 with a similar fraction of successes.

. In fact, we found a solution with finite probability (≈0.4), despite the fact that α > αd(4). The experiment was repeated at α = 9 with a similar fraction of successes.

Fig. 6.

Performance of BP heuristics on random 4-SAT formulae. The residual entropy per spin N−1 log Z (here we estimate it within Bethe approximation) as a function of the fraction of fixed variables. tmax = 20 in these experiments.

Above the condensation transition, correlations become too strong and the BP fixed point no longer describes the measure μ. Indeed the same algorithm proved unsuccessful at α = 9.7 ∈ [αc(4), αs(4)]. As mentioned above, SP can be regarded as an inference algorithm in a modified graphical model that weights preferentially small clusters. More precisely, it selects clusters of size eNs̄ with s̄ maximizing the complexity Σ(s). With respect to the new measure, the weak correlation condition in Eq. 5 still holds and allows one to perform inference by message passing.

Within the cavity formalism, the optimal choice would be to take r ≈ m(α) ∈ [0, 1). Any parameter corresponding to a non-negative complexity r ∈ [0, m(α)] should, however, give good results. SP corresponds to the choice r = 0 that has some definite computational advantages, since messages have a compact representation in this case (they are real numbers).

Cavity Formalism, Tree Reconstruction, and SP

This section provides some technical elements of our computation. The reader not familiar with this topic should consult refs. 6, 11, 25, and 32 for a more extensive introduction. The expert reader will find a new derivation and some hints of how we overcame technical difficulties.

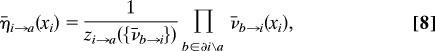

On a tree factor graph, the marginals of μ , Eq. 1 can be computed recursively. The edge of the factor graph from variable node i to constraint node a (respectively from a to i) carries “message” η̄i→a (ν̄a→i), a probability measure on 𝒳 defined as the marginal of xi in the modified graphical model obtained by deleting constraint node a (resp. all constraint nodes around i apart from a). The messages are determined by the equations:

, Eq. 1 can be computed recursively. The edge of the factor graph from variable node i to constraint node a (respectively from a to i) carries “message” η̄i→a (ν̄a→i), a probability measure on 𝒳 defined as the marginal of xi in the modified graphical model obtained by deleting constraint node a (resp. all constraint nodes around i apart from a). The messages are determined by the equations:

|

|

where ∂u is the set of nodes adjacent to u, \ denotes the set subtraction operation, and xA = {xj : j ∈ A}. These are just the BP equations for the model (1). The constants zi→a, ẑa→i are uniquely determined from the normalization conditions Σxi η̄i→a(xi) = Σxi v̄a→i(xi) = 1. In the following we refer to these equations by introducing functions fi→a , fa→i

, fa→i such that:

such that:

The marginals of μ are then computed from the solution of these equations. For instance μ(xi) is a function of the messages ν̄a→i from neighboring function nodes.

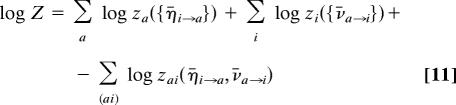

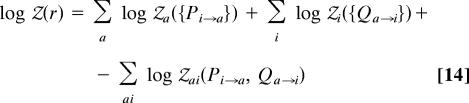

The log number of solutions, log Z, can be expressed as a sum of contributions that are local functions of the messages that solve Eqs. 8 and 9:

|

where the last sum is over undirected edges in the factor graph and

|

Each term z gives the change in the number of solutions when merging different subtrees (for instance, log zi is the change in entropy when the subtrees around i are glued together). This expression coincides with the Bethe free-energy (31) as expressed in terms of messages.

To move from trees to loopy graphs, we first consider an intermediate step in which the factor graph is still a tree but a subset of the variables, xB = {xj : j ∈ B} is fixed. We are therefore replacing the measure μ (compare Eq. 1), with the conditional one μ( · |xB). In physics terms, the variables in xB specify a boundary condition.

(compare Eq. 1), with the conditional one μ( · |xB). In physics terms, the variables in xB specify a boundary condition.

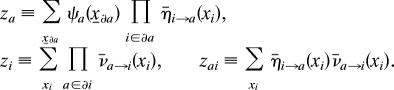

Notice that the measure μ( · |xB) still factorizes according to (a subgraph of) the original factor graph. As a consequence, the conditional marginals μ(xi|xB) can be computed along the same lines as above. The messages ηi→axB and νa→ixB obey Eq. 10, with an appropriate boundary condition for messages from B. Also, the number of solutions that take values xB on j ∈ B [call it Z(xB)] can be computed by using Eq. 11.

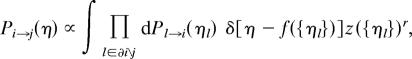

Next, we want to consider the boundary variables themselves as random variables. More precisely, given r ∈ ℝ, we let the boundary to be xB with probability

where 𝒵(r) enforces the normalization ΣxB μ̃(xB) = 1. Define Pi→a(η) as the probability density of ηi→axB when xB is drawn from μ̃, and similarly Qa→i(ν). One can show that Eq. 8 implies the following relation between messages distributions:

|

where fi→a is the function defined in Eq. 10, zi→a is determined by Eq. 8, and 𝒵i→a is a normalization. A similar equation holds for Qa→i(ν). These coincide with the 1RSB equations with Parisi parameter r. SP corresponds to a particular parameterization of Eq. 13 (and the analogous one expressing Qa→i in terms of the Ps) valid for r = 0.

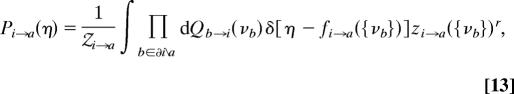

The log-partition function Φ(r) = log 𝒵(r) admits an expression that is analogous to Eq. 11,

|

where the shifts 𝒵(⋯) are defined through moments of order r of the zs, and sums run over vertices not in B. For instance 𝒵ai is the expectation of zai(η, ν)r when η, ν are independent random variables with distribution (respectively) Pi→a and Qa→i. The (Shannon) entropy of the distribution μ̃ is given by Σ(r) = Φ(r) − rΦ′(r).

As mentioned, the above derivation holds for tree factor graphs. Nevertheless, the local recursion equations 10 and 13 can be used as an heuristics on loopy factor graphs as well. Further, although we justified Eq. 13 through the introduction of a random boundary condition xB, we can take B = ∅ and still look for nondegenerate solutions of such equations.

Starting from an arbitrary initialization of the messages, the recursions are iterated until an approximate fixed point is reached. After convergence, the distributions Pi→a, Qa→i can be used to evaluate the potential Φ(r) (compare Eq. 14). From this we compute the complexity function Σ(r)  Φ(r) − rΦ′(r) that gives access to the decomposition of μ

Φ(r) − rΦ′(r) that gives access to the decomposition of μ in pure states. More precisely, Σ(r) is the exponential growth rate of the number of states with internal entropy s = Φ′(r). This is how curves such as in Fig. 4 are traced.

in pure states. More precisely, Σ(r) is the exponential growth rate of the number of states with internal entropy s = Φ′(r). This is how curves such as in Fig. 4 are traced.

In practice, it can be convenient to consider the distributions of messages Pi→a, Qa→i with respect to the graph realization. This approach is sometimes referred to as density evolution in coding theory. If one considers a uniformly random directed edge i → a (or a → i) in a rCSP instance, the corresponding message will be a random variable. After t parallel updates according to Eq. 13, the message distribution converges (in the N → ∞ limit) to a well defined law 𝒫t (for variable to constraint messages) or 𝒬t (for constraint to variable). As t → ∞, these converge to a fixed point 𝒫, 𝒬 that satisfies the distributional equivalent of Eq. 13.

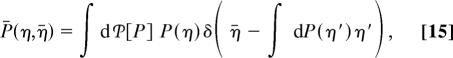

To be definite, let us consider the case of graph coloring. Since the compatibility functions are pairwise in this case (i.e., k = 2 in Eq. 1), the constraint-to-variable messages can be eliminated and Eq. 13 takes the form:

|

where f is defined by η(x) = z−1 Πl(1 − ηl(x)) and z by normalization. The distribution of Pi→j is then assumed to satisfy a distributional version of the last equation. In the special case of random regular graphs, a solution is obtained by assuming that Pi→j is indeed independent of the graph realization and i, j. One has therefore simply to set Pi→j = P in the above and solve it for P.

In general, finding messages distributions 𝒫, 𝒬 that satisfy the distributional version of Eq. 13 is an extremely challenging task, even numerically. We adopted the population dynamics method (32), which represents the distributions by samples (this is closely related to particle filters in statistics). For instance, one represents 𝒫 by a sample of Ps, each encoded as a list of ηs. Since computer memory drastically limits the samples size, and thus the precision of the results, we worked in two directions: (i) we analytically solved the distributional equations for large k (in the case of k-SAT) or q (q-Col); and (ii) we identified and exploited simplifications arising for special values of r.

Let us briefly discuss the second point. Simplifications emerge for r = 0 and r = 1. The first case corresponds to SP. Refs. 6 and 11 showed how to compute efficiently Σ(r = 0) through population dynamics. Building on this, we could show that the clusters internal entropy s(r = 0) can be computed at a small supplementary cost.

The value r = 1 corresponds instead to the tree reconstruction problem (33): In this case μ̃(xB) (compare Eq. 12), coincides with the marginal of μ. Averaging Eq. 13 (and the analogous one for Qa→i) one obtains the BP equations (8 and 9), e.g., ʃ dPi→a(η) η = η̄i→a. These remarks can be used to show that the constrained averages:

|

and Q̄(ν, ν̄) (defined analogously) satisfy closed equations that are much easier to solve numerically.

Acknowledgments

We thank J. Kurchan and M. Mézard for stimulating discussions. This work has been partially supported by the European Commission under Contracts EVERGROW and STIPCO.

Abbreviations

- CSP

constraint satisfaction problem

- rCSP

random CSP

- k-SAT

k-satisfiability

- q-COL

q-coloring

- SP

survey propagation

- BP

belief propagation

- 1RSB

one-step replica symmetry breaking.

Footnotes

The authors declare no conflict of interest.

For coloring l-regular graphs, we can use l = 2α as a parameter. When considering a phase transition defined through some property 𝒫 increasing in l, we adopt the convention of denoting its location through the smallest integer such that 𝒫 holds.

The term “with high probability” means with probability approaching one as N → ∞.

One possible approach to the definition of a Monte Carlo Markov chain algorithm is to relax the constraints by setting ψa(⋯) = ϵ instead of 0 whenever the ath constraint is violated. Glauber dynamics can then be used to sample from the relaxed measure μϵ .

.

More precisely μ is obtained as a limit of free boundary measures.

is obtained as a limit of free boundary measures.

The precise picture depends on the value of k (resp. q) and can be somewhat more complicated.

Notice that for q-COL, since l is an integer, the “condensated” regime [lc(q), ls (q)] may be empty. This is the case for q = 4. On the contrary, q = 5 is always condensated for ld < l < ls.

This paradox was noticed independently by Dimitris Achlioptas (personal communication).

References

- 1.Garey MR, Johnson DS. Computers and Intractability: A Guide to the Theory of NP-Completeness. New York: Freeman; 1979. [Google Scholar]

- 2.Kschischang FR, Frey BJ, Loeliger H-A. IEEE Trans Inform Theory. 2001;47:498–519. [Google Scholar]

- 3.Franco J, Paull M. Discrete Appl Math. 1983;5:77–87. [Google Scholar]

- 4.Selman B, Kautz HA, Cohen B. Proceedings of the Twelfth National Conference on Artificial Intelligence; Seattle WA: AAAI Press; 1994. pp. 337–343. [Google Scholar]

- 5.Achlioptas D, Sorkin GB. Proceedings of the 41st Annual Symposium on the Foundations of Computer Science; Los Alamitos, CA: IEEE Press; 2000. pp. 590–600. [Google Scholar]

- 6.Mézard M, Parisi G, Zecchina R. Science. 2002;297:812–815. doi: 10.1126/science.1073287. [DOI] [PubMed] [Google Scholar]

- 7.Bayati M, Shah D, Sharma M. Proceedings of the IEEE International Symposium on Information Theory; Los Alamitos, CA: IEEE Press; 2006. pp. 557–561. [Google Scholar]

- 8.Gamarnik D, Bandyopadhyay A. Proceedings of the 17th Annual ACM-SIAM Symposium on Discrete Algorithms; New York: ACM Press; 2006. pp. 890–899. [Google Scholar]

- 9.Montanari A, Shah D. Proceedings of the 18th Annual ACM-SIAM Symposium on Discrete Algorithms; New York: ACM Press; 2007. pp. 1255–1264. [Google Scholar]

- 10.Friedgut E. J Am Math Soc. 1999;12:1017–1054. [Google Scholar]

- 11.Mertens S, Mézard M, Zecchina R. Rand Struct Alg. 2006;28:340–373. [Google Scholar]

- 12.Achlioptas D, Naor A, Peres Y. Nature. 2005;435:759–764. doi: 10.1038/nature03602. [DOI] [PubMed] [Google Scholar]

- 13.Mulet R, Pagnani A, Weigt M, Zecchina R. Phys Rev Lett. 2002;89:268701. doi: 10.1103/PhysRevLett.89.268701. [DOI] [PubMed] [Google Scholar]

- 14.Krzakala F, Pagnani A, Weigt M. Phys Rev E. 2004;70:046705. doi: 10.1103/PhysRevE.70.046705. [DOI] [PubMed] [Google Scholar]

- 15.Luczak T. Combinatorica. 1991;11:45–54. [Google Scholar]

- 16.Mézard M, Montanari A. J Stat Phys. 2006;124:1317–1350. [Google Scholar]

- 17.Georgii HO. Gibbs Measures and Phase Transitions. Berlin: De Gruyter; 1988. [Google Scholar]

- 18.Jonasson J. Stat Prob Lett. 2002;57:243–248. [Google Scholar]

- 19.Aurell E, Gordon U, Kirkpatrick S. Advances in Neural Information Processing Systems. Cambridge, MA: MIT Press; 2004. pp. 49–56. [Google Scholar]

- 20.Maneva EN, Mossel E, Wainwright MJ. Proceedings of the 16th Annual ACM-SIAM Symposium on Discrete Algorithms; New York: ACM Press; 2005. pp. 1089–1098. [Google Scholar]

- 21.Biroli G, Monasson R, Weigt M. Eur Phys J B. 2000;14:551–568. [Google Scholar]

- 22.Mézard M, Mora T, Zecchina R. Phys Rev Lett. 2005;94:197205. doi: 10.1103/PhysRevLett.94.197205. [DOI] [PubMed] [Google Scholar]

- 23.Achlioptas D, Ricci-Tersenghi F. Proceedings of the 38th Annual ACM Symposium on Theory of Computing; New York: ACM Press; 2006. pp. 130–139. [Google Scholar]

- 24.Braunstein A, Zecchina R. J Stat Mech. 2004:P06007. [Google Scholar]

- 25.Mézard M, Palassini M, Rivoire O. Phys Rev Lett. 2005;95:200202. doi: 10.1103/PhysRevLett.95.200202. [DOI] [PubMed] [Google Scholar]

- 26.Ruelle D. Commun Math Phys. 1987;108:225–239. [Google Scholar]

- 27.Talagrand M. C R Acad Sci Paris Ser I. 2000;331:939–942. [Google Scholar]

- 28.Kesten H, Stigum BP. Ann Math Stat. 1966;37:1463–1481. [Google Scholar]

- 29.Kesten H, Stigum BP. J Math Anal Appl. 1966;17:309–338. [Google Scholar]

- 30.Montanari A, Ricci-Tersenghi F. Phys Rev B. 2004;70:134406. [Google Scholar]

- 31.Yedidia J, Freeman WT, Weiss Y. IEEE Trans Inform Theory. 2005;51:2282–2312. [Google Scholar]

- 32.Mézard M, Parisi G. Eur Phys J B. 2001;20:217–233. [Google Scholar]

- 33.Mossel E, Peres Y. Ann Appl Probab. 2003;13:817–844. [Google Scholar]