Abstract

Objective

In recent years, influenza surveillance data has expanded to include alternative sources such as emergency department data, absenteeism reports, pharmaceutical sales, website access and health advice calls. This study presents a review of alternative data sources for influenza surveillance, summarizes the time advantage or timeliness of each source relative to traditional reporting and discusses the strengths and weaknesses of competing approaches.

Methods

A literature search was conducted on Medline to identify relevant articles published after 1990. A total of 15 articles were obtained that reported the timeliness of an influenza surveillance system. Timeliness was described by peak comparison, aberration detection comparison and correlation.

Results

Overall, the data sources were highly correlated with traditional sources and had variable timeliness. Over-the-counter pharmaceutical sales, emergency visits, absenteeism and health calls appear to be more timely than physician diagnoses, sentinel influenza-like-illness surveillance and virological confirmation.

Conclusions

The methods used to describe timeliness vary greatly between studies and hence no strong conclusions regarding the most timely source/s of data can be reached. Future studies should apply the aberration detection method to determine data source timeliness in preference to the peak comparison method and correlation.

Introduction

Surveillance is the collection and analysis of data to facilitate the timely dissemination of results. 1 Traditionally, health departments monitor influenza via legally mandated reporting by physicians and laboratories. 2 Current research has focused on the potential of alternative data sources including emergency department data, absenteeism reports, pharmaceutical sales, health advice calls, triage calls and website access for the timely detection of influenza outbreaks. Despite this research, it is still not known which data source is the most timely for influenza surveillance.

Background

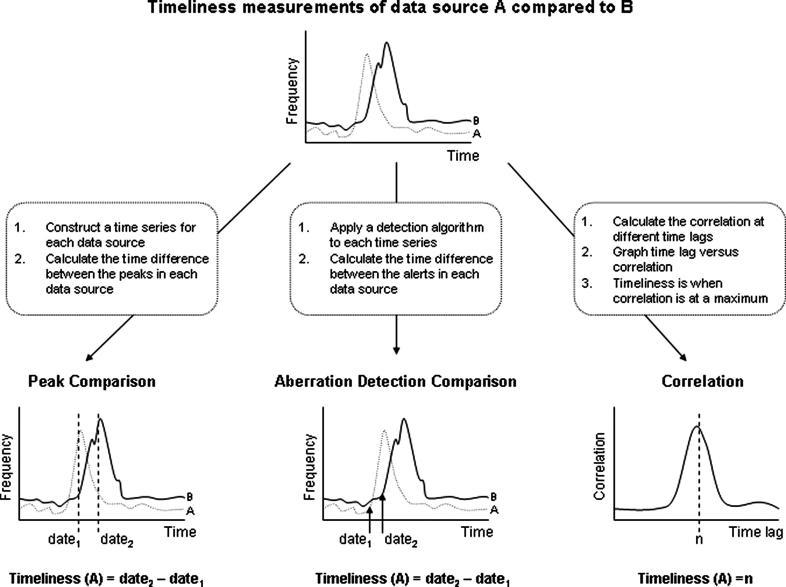

Timeliness for influenza and influenza-like-illness (ILI) surveillance has been described using various methods with different levels of complexity (Figure 1). Timeliness does not have a well-established definition represented by a mathematical equation. 3 However, researchers generally define timeliness as the difference between the time an event occurs and the time the reference standard for that event occurs. In the literature timeliness has been used interchangeably with earliness and time lead. 4,5

Figure 1.

Three methods for determining the timeliness of data source A compared to data source B.

Three methods have been used to compare the timeliness of different data sources. These are peak comparison, aberration detection comparison and correlation. Peak comparison involves calculating the time difference between the peak in one data source compared to another. This method has been used in several studies. 6–8
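
As a minimal illustration of peak comparison, the following Python sketch computes the number of days by which the peak in one series precedes the peak in another. The function names and the weekly counts are hypothetical and are not taken from any of the reviewed studies.

```python
from datetime import date, timedelta

def peak_date(series):
    """Return the date with the largest count (earliest date on ties)."""
    return min(series, key=lambda d: (-series[d], d))

def peak_timeliness(source_a, source_b):
    """Days by which the peak in A precedes the peak in B (positive = A earlier)."""
    return (peak_date(source_b) - peak_date(source_a)).days

# Illustrative weekly counts only; not data from any reviewed study.
start = date(2000, 1, 3)
weeks = [start + timedelta(weeks=i) for i in range(6)]
otc_sales = dict(zip(weeks, [10, 25, 60, 40, 20, 10]))
isolates = dict(zip(weeks, [1, 3, 9, 15, 8, 2]))

print(peak_timeliness(otc_sales, isolates))  # 7
```

With weekly data the result can only be a multiple of seven days, which is one reason peak comparison gives a coarse estimate of timeliness.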

Aberration detection involves comparing the date of alert generated by an algorithm based on one data source against the date of alert in another. Aberration detection can be based on a simple threshold where an alert is generated when the time series exceeds a specific value. 8–10 Thresholds have been based on two or three standard deviations above the mean 10 or arbitrary sales thresholds for over-the-counter pharmaceuticals. 8 Aberration detection based on a threshold is simple and able to rapidly detect shifts in excess of the specified threshold; 11 however, it does not account for temporal features in the data such as day-of-week effects and seasonality.
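
A threshold rule of this kind can be sketched in a few lines of Python. The sketch assumes the threshold is the baseline mean plus `k` sample standard deviations; the function name, the default `k = 2` and the counts are illustrative assumptions rather than the settings of any reviewed study.

```python
from statistics import mean, stdev

def threshold_alerts(baseline, observed, k=2.0):
    """Return the indices of observed values above mean + k * SD of the baseline."""
    limit = mean(baseline) + k * stdev(baseline)
    return [i for i, x in enumerate(observed) if x > limit]

# Illustrative counts only.
baseline = [10, 12, 11, 9, 13, 10, 12]   # mean 11, sample SD about 1.41
observed = [11, 12, 14, 19, 25]

print(threshold_alerts(baseline, observed))  # [2, 3, 4]
```

Because the limit is a single constant, the rule ignores day-of-week and seasonal structure, which is the limitation noted above.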

More complex algorithms used for the detection of influenza outbreaks include moving averages, 4,12 scan statistics and their variations 12,13 and the cumulative sum (CUSUM). 14,15 Moving averages and CUSUMs are based on quality control methods that set a control limit or threshold. 16 The moving average calculates the mean of the previous values and compares it to the current value. Data closer in time to the current day can be given greater weight in the calculation of the average than data further in the past, as is the case with an exponentially weighted moving average (EWMA). Moving average charts are more sensitive than thresholds at detecting small shifts in the process average. 17 However, the predictions adapt to increasing counts during the early stages of the outbreak, which can increase the time to alert. 16 This problem is the prime motivation for using the CUSUM approach.
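
The weighting idea behind the EWMA can be sketched as follows. This is one common control-chart formulation, assumed here for illustration: the smoothed statistic is compared with an upper limit of mean + `width` × SD × sqrt(λ / (2 − λ)). The smoothing weight `lam`, the `width` multiplier and the counts are hypothetical choices, not parameters reported by the reviewed studies.

```python
from math import sqrt
from statistics import mean, stdev

def ewma_first_alert(baseline, observed, lam=0.4, width=3.0):
    """Return the index of the first EWMA value above the upper control limit, or None."""
    mu, sigma = mean(baseline), stdev(baseline)
    # Asymptotic upper control limit of an EWMA chart (assumed formulation).
    limit = mu + width * sigma * sqrt(lam / (2 - lam))
    z = mu  # start the smoothed statistic at the in-control mean
    for i, x in enumerate(observed):
        z = lam * x + (1 - lam) * z  # recent values receive weight lam
        if z > limit:
            return i
    return None

# Illustrative counts only.
baseline = [10, 12, 11, 9, 13, 10, 12]
observed = [11, 12, 14, 19, 25]

print(ewma_first_alert(baseline, observed))  # 3
```

A larger `lam` makes the statistic track recent counts more closely, while a smaller `lam` smooths more heavily and reacts more slowly to an abrupt rise.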

The CUSUM method involves calculating the cumulative sum over time of the differences between observed counts and a reference value which represents the in-control mean. 18 As the algorithm is based on the accumulation of differences, the CUSUM detects an aberration very quickly and is able to detect small shifts from the mean. 16,18 However, the algorithm will signal a false alert if changes in the underlying process not associated with an outbreak occur, such as a steady rise in the mean. 19
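
A one-sided CUSUM can be sketched as below. The sketch assumes the widely used form in which an allowance is subtracted from each difference and the sum is reset at zero; the `allowance` and `decision_limit` parameters and the counts are illustrative assumptions and do not come from the reviewed studies.

```python
def cusum_first_alert(observed, reference, allowance, decision_limit):
    """Return the index at which the upper CUSUM first exceeds the decision limit, or None."""
    s = 0.0
    for i, x in enumerate(observed):
        # Accumulate excesses over the reference value; never drop below zero.
        s = max(0.0, s + (x - reference - allowance))
        if s > decision_limit:
            return i
    return None

# Illustrative counts: a small sustained rise of two above the reference value of 11.
observed = [11, 12, 13, 13, 13, 13, 13]

print(cusum_first_alert(observed, reference=11, allowance=1, decision_limit=3))  # 5
```

In this example no single count is large, yet the accumulated excess crosses the limit, which illustrates why the CUSUM is sensitive to small shifts and also why a slow drift in the mean unrelated to an outbreak can trigger a false alert.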

Spatial scan statistics are used to detect geographical disease clusters of high or low incidence and evaluate their statistical significance using likelihood-ratio tests. 20 Spatial scan statistics identify a significant excess of cases over a defined spatial region and can adjust for multiple hypothesis testing. 21 It differs from the other algorithms described in its ability to detect spatial as well as temporal clusters.

Timeliness has been assessed by correlation methods, including the cross correlation function (CCF) 4,5,15,22,23 and the Spearman rank correlation. 24 In terms of correlation, timeliness is defined as the time lag at which the correlation between two data sources is at maximum significance. 23 Specifically, the correlation between two time series is calculated, and then one of the series is moved in time relative to the other, representing different time lags. The correlation is calculated for each time lag, and the timeliness of a data source relative to another is determined by the correlation coefficients that are statistically significant.
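
The lag-shifting procedure described above can be sketched in Python. For simplicity this sketch selects the lag with the largest Pearson correlation rather than testing statistical significance; the function names and the two series are hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def best_lag(a, b, max_lag):
    """Return (lag, r) maximising the correlation of a[t] with b[t + lag].

    A positive lag means series a leads series b by that many time periods.
    """
    n = len(a)
    results = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[:n - lag], b[lag:]
        else:
            x, y = a[-lag:], b[:n + lag]
        results[lag] = pearson(x, y)
    lag = max(results, key=results.get)
    return lag, results[lag]

# Illustrative series: b repeats the shape of a two periods later.
a = [1, 2, 5, 9, 5, 2, 1, 1, 1, 1]
b = [1, 1, 1, 2, 5, 9, 5, 2, 1, 1]

lag, r = best_lag(a, b, max_lag=3)
print(lag)  # 2
```

Each shift shortens the overlapping portion of the two series, so correlations at large lags rest on fewer observations and should be interpreted with more caution.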

The cross-correlation function calculates a numerical value that describes the similarity of two curves over a defined period, with two identical curves having a CCF value of one. 25 To satisfy the assumption of normality, the data have to be normalized prior to computing the cross correlation function. A significant CCF at a specific time lag ‘x’ indicates that the peak in one data source occurs ‘x’ time periods before the peak in another data source. A significant CCF at a lag of zero indicates the peaks occur at the same time. 25 If the assumption of normality is violated, a method based on ranked data, the Spearman rank correlation can be used. When interpreting correlation values, low correlations may be statistically significant due to the randomness and variability in biosurveillance data sources.
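
When the normality assumption is in doubt, the rank-based alternative can be sketched as follows: each series is replaced by its ranks (tied values share the average rank) and the Pearson coefficient is computed on the ranks. The function names and example values are illustrative assumptions.

```python
from math import sqrt

def average_ranks(values):
    """Rank values from 1, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Illustrative values: a monotone but non-linear relationship.
calls = [1, 2, 3, 4, 5]
ili = [1, 4, 9, 16, 100]

print(spearman(calls, ili))  # 1.0
```

Because only the ordering of the values matters, a single extreme count has far less influence than it would on the Pearson coefficient.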

In this paper we review the timeliness of biosurveillance data sources for influenza and ILI surveillance in order to assess the methods for determining timeliness and the most timely source.

Methods

We conducted a Medline literature search of papers published after 1990 to identify studies on influenza surveillance. Key words included influenza, timeliness, syndromic, biosurveillance and surveillance. The reference lists of located publications were scanned for further relevant publications. Additional articles and reports were identified with an internet search engine using the same key words. Searches were limited to articles published in the English language. The search identified 73 articles related to influenza surveillance and 112 related to biosurveillance. From these, articles were included in the review if they described, tested or reviewed in detail a surveillance system for influenza or ILI and included timeliness in their evaluation criteria. Articles that did not include any information regarding timeliness, or the method for determining timeliness were excluded from this review. In total, 15 articles (8%) met this selection criterion.

We reviewed these articles and describe the reported detection method, number of outbreaks, gold standard and timeliness. Timeliness was described relative to the gold standard in days, and an average is given if there were multiple sites or years compared. Articles that used a correlation are described in terms of timeliness at a maximum or significant correlation.

Results

Of the 15 articles reviewed, two used the peak comparison method, seven used aberration detection comparison, three used correlations and three used a combination of aberration detection and correlation. A summary of the findings is presented in Table 1.

Table 1.

Reported Timeliness, Method and Comparison of Data Sources in the Literature

| Data Source | Timeliness method (algorithm) | Comparison | Mean Timeliness (range) (reference) |
|---|---|---|---|
| OTC sales | Peak comparison | Virological confirmation | 7 days (6) |
| OTC sales | Cross correlation | ICD-9 ∗ diagnosis | 2.8 days (2–7) (5) |
| OTC sales | Cross correlation | ICD-9 diagnosis | 1.7 weeks (95% CI 0.5–2.9) (4) |
| OTC sales | Aberration detection comparison (threshold) | ICD-10 diagnosis | 14 days (8) |
| Emergency visit | Aberration detection comparison (threshold) | Virological confirmation | 11.2 days (7–28) (9) |
| Emergency visit | Aberration detection comparison (threshold) | CDC influenza activity | 14 days (10) |
| Emergency visit | Aberration detection comparison (SMART, scan statistic) | Virological confirmation | 0 days (12) |
| Emergency visit | Aberration detection comparison (CUSUM) | Sentinel ILI | 7 days (13) |
| Emergency visit | Aberration detection comparison (CUSUM) | Virological confirmation | 1 day (26) |
| Emergency visit | Aberration detection comparison (scan statistic) | Virological confirmation | 14 days (14) |
| Emergency visit | Aberration detection comparison (scan statistic) | Sentinel ILI | 21 days (14) |
| Emergency visit | Cross correlation | ICD-9 diagnosis | 7.4 days (95% CI −8.34 to 43.3) (15) |
| Emergency visit | Aberration detection comparison (EWMA) | ICD-9 diagnosis | 10.3 days (95% CI −15.15 to 35.5) (15) |
| Emergency visit >65 yrs | Aberration detection comparison (CUSUM) | Virological confirmation | 24 days (26) |
| Absenteeism (school) | Peak comparison | Sentinel ILI | 2.8 days (0–7) (7) |
| Absenteeism (work) | Aberration detection comparison (threshold) | Virological confirmation | 8.4 days (7–21) (9) |
| Telephone triage (ED) | Cross correlation | CDC influenza activity | 17.5 days (7–28) (22) |
| Health advice calls | Spearman rank correlation | Sentinel ILI | 7–21 days (24) |

∗ International Classification of Diseases (ICD), 9th or 10th Revision.

Peak Comparison

Two studies used the peak comparison method to assess timeliness. Welliver and colleagues compared the weekly percentage change in non-prescription cold remedy sales in a Los Angeles supermarket chain to the proportion of positive influenza isolates from children presenting to a pediatric hospital. 6 In one season analysed, they found that sales of non-prescription cold remedies peaked seven days earlier than the peak in virus isolation. 6

The second study was a school-based influenza surveillance system that included 44 schools in a Colorado county. 7 This study compared five influenza seasons and found varied results. In two of the five years, absenteeism surveillance peaked seven days earlier than sentinel surveillance. However for the other three years, there was no time difference between peaks. 7 Hence, over the five year period, the peak in school-based absenteeism occurred, on average, 2.8 days earlier than the peak in ILI sentinel influenza surveillance.

Aberration Detection Comparison

Seven of the studies reviewed used aberration detection comparison. Three studies used a threshold to define alerts, two used the CUSUM and two used the scan statistic or a variation of this algorithm. An English study applied the peak comparison method and thresholds for comparing over-the-counter (OTC) sales of cough/cold remedies to emergency department admission data. 8 Analysis demonstrated that peak sales both preceded and lagged the peak in admissions in the years investigated. However, increases above a defined threshold of OTC sales occurred 14 days before the peak in emergency department admissions in all three years of the study. 8

Quenel and colleagues used thresholds to determine the date of alert for various health service based indicators from hospitals and absenteeism records. 9 The threshold above which an alert was declared was defined as the upper limit of the 95% confidence interval of the weekly average calculated from non-epidemic weeks. An epidemic week occurred when ≥1% of specimens were positive for influenza A. The health service based indicators increased before virological confirmation over the five year period. The emergency visit indicator was the earliest (average 11.2 days, range 7–28 days before virological confirmation) followed by sick-leave reports collected by general practitioners (average 8.4 days, range 7–21 days). 9
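
A threshold of this type can be sketched as follows. The sketch assumes a normal approximation, so that the upper 95% confidence limit of the weekly average is the mean plus 1.96 standard errors; that formula, the function names and the weekly counts are illustrative assumptions, as the exact calculation used by the authors is not described here.

```python
from math import sqrt
from statistics import mean, stdev

def upper_ci_threshold(non_epidemic_weeks, z=1.96):
    """Upper limit of the confidence interval of the mean weekly count."""
    n = len(non_epidemic_weeks)
    return mean(non_epidemic_weeks) + z * stdev(non_epidemic_weeks) / sqrt(n)

def first_alert_week(weekly_counts, threshold):
    """Return the index of the first week above the threshold, or None."""
    return next((i for i, x in enumerate(weekly_counts) if x > threshold), None)

# Illustrative weekly counts only.
non_epidemic = [10, 12, 11, 9, 13, 10, 12, 11, 11]
threshold = upper_ci_threshold(non_epidemic)   # about 11.8

print(first_alert_week([11, 11.5, 12, 14], threshold))  # 2
```

A confidence limit for the mean is much narrower than a band of two standard deviations around individual weekly values, so a threshold defined this way tends to alert earlier and more often.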

The timeliness of algorithmic alerts from an emergency department surveillance system based on chief complaint data was compared to the date of the influenza peak identified by the CDC. 10 An alert was defined as a value that exceeded two standard deviations greater than a historical constant mean on two of three consecutive days. For the influenza season investigated, emergency department chief complaint data alerted 14 days earlier than the peak in CDC influenza reports.
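
The alert rule described above (two of three consecutive days above the mean plus two standard deviations) can be sketched in Python. The function name and the daily counts are hypothetical, and the historical mean and standard deviation are simply estimated from a short illustrative series.

```python
from statistics import mean, stdev

def two_of_three_alert(history, observed, k=2.0):
    """Return the index of the first day on which at least two of the last
    three daily values exceed mean + k * SD of the history, or None."""
    limit = mean(history) + k * stdev(history)
    flags = [x > limit for x in observed]
    for i in range(2, len(flags)):
        if sum(flags[i - 2:i + 1]) >= 2:
            return i
    return None

# Illustrative daily counts only; the limit is about 13.8.
history = [10, 12, 11, 9, 13, 10, 12]
observed = [12, 15, 12, 11, 16, 13, 17]

print(two_of_three_alert(history, observed))  # 6
```

Requiring two exceedances within three days suppresses alerts from isolated high counts, at the cost of delaying the alert relative to a single-day rule.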

The use of emergency department medical records for the early detection of ILI was investigated for one influenza season in healthcare organizations in Minnesota. 26 Visual inspection of ILI counts and influenza and pneumonia deaths indicated that ILI counts rose several weeks before the peak in the number of deaths. A CUSUM detection algorithm signalled a confirmed influenza outbreak one day before the first virologically confirmed isolate. When this analysis was repeated with age stratified data (age >65 years) the algorithm signalled an alert 24 days earlier. 26

The CUSUM algorithm was also used to detect trends in fever and respiratory distress occurrences indicative of influenza at seven hospitals in Virginia. 13 In one of these hospitals, syndromic data revealed an increase in these two syndromes seven days earlier than an increase in sentinel influenza surveillance. 13

A syndromic surveillance system based in Colorado identified unusual clusters of ILI using three statistical models. These included the small area method (SMART; small area regression and testing), spatio-temporal method, and a purely temporal method (spatio-temporal scan statistic using 100% of the area). These algorithms were used to compare the timeliness of syndromic chief complaint data with laboratory-confirmed influenza cases. They found that despite both data sources showing substantial increases during the same calendar week, there was a greater absolute increase in syndromic surveillance episodes. 12

Heffernan and colleagues applied the temporal scan statistic to emergency department chief complaints. 14 The signal produced from respiratory and fever syndromes provided the earliest indication of community-wide influenza activity in New York City for the 2001–02 influenza season. The signal occurred 14 days before an increase in the number of positive influenza isolates and 21 days before an increase in the number of sentinel ILI reports. However, the size of these increases and method for determining the presence of an increase were not described.

Correlation

Three studies assessed timeliness using the cross-correlation function, two studies used both the cross correlation function and a moving average and one study used the Spearman rank correlation and a moving average.

Espino and colleagues 22 determined the CCF of regional and state influenza activity to emergency room telephone triage data based on ten hospitals in a major US city. They found that telephone triage was seven days (correlation 0.25) ahead of state influenza activity and 28 days (correlation 0.25) ahead of regional influenza activity. Hence, at this peak correlation, emergency room telephone triage was on average 17.5 days ahead of all influenza activity.

Magruder found that after controlling for day-of-week and holiday effects, the cross correlation function peaked between 0.86–0.93 for OTC sales and physician diagnoses. At this peak correlation, an increase in OTC sales occurred on average 2.8 days (range 2–7 days) before physician diagnoses based on two influenza seasons. 5

Johnson et al. investigated the correlation between influenza article access on the internet and CDC surveillance data using the CCF. 23 Although there was a moderately strong correlation between web access and influenza reports (range 0.71–0.80), the timeliness of this method was variable and hence the authors could not draw a strong conclusion. 23

One pediatric study used both the CCF and an exponentially weighted moving average (EWMA) to determine the timeliness of free-text chief complaints in the emergency department relative to respiratory discharge diagnoses. 15 The mean timeliness calculated across different values of the weighting parameter in the EWMA analysis varied from −11.7 to 32.7 days with an overall mean of 10.3 days (95% CI −15.2 to 35.5). Cross correlation analyses of the three outbreaks resulted in an average timeliness of 7.4 days (95% CI −8.3 to 43.3). 15

Hogan and colleagues also used the combined aberration detection-correlation method. 4 The CCF and an EWMA algorithm were used to determine the timeliness of sales of OTC electrolyte products for the detection of respiratory and diarrhoeal outbreaks. 4 Over the three year study period, the correlation of electrolyte sales to hospital diagnoses based on raw data was 0.9 (95% CI 0.87–0.93) and OTC sales preceded diagnoses by 1.7 weeks (95% CI 0.5–2.9) for respiratory and diarrhoeal outbreaks. Separate timeliness results were not reported for the respiratory outbreaks.

Doroshenko and colleagues applied an autoregressive moving average model and Spearman rank correlation to assess the timeliness of calls to a national telephone advice service and ILI sentinel reporting. They found statistically significant but weak correlations up to 21 days lag, suggesting that ILI calls occurred 7–21 days earlier than an increase in consultations recorded by a sentinel surveillance network. 24

Discussion

This study reviewed the reported timeliness of various data sources for influenza surveillance. These data sources included OTC sales, emergency department visits, absenteeism, telephone triage and calls to a health advice line. The advantage of determining the most timely data source/s for influenza surveillance lies in their potential use in early warning systems that could lead to the early implementation of control measures including antiviral therapy and vaccination campaigns.

Multiple studies have assessed the timeliness of emergency department data. Aberration detection comparison was the most frequently used method and algorithms were based on thresholds, scan statistics, CUSUM and moving averages. The reported timeliness of emergency department data varied between studies and ranged from 0–24 days. The maximum reported timeliness of emergency department data was 21 days before sentinel surveillance 14 and up to 24 days before virological confirmation when data were stratified by age. 26 One study used the cross correlation function which demonstrated that emergency department diagnoses were more timely than physician diagnoses at maximum correlation. 15

The potential timeliness of OTC sales as an early indicator for influenza or ILI ranged between 2.8–14 days. High correlations between OTC sales and physician diagnoses were found by two studies. 4,5 However, peak comparisons demonstrated that OTC sales preceded and lagged the gold standards used in the analyses. 8 Based on correlation, OTC sales were more timely than physician diagnoses. 4,5 This was also supported by an aberration detection comparison, however the algorithm was not applied to the gold standard and only an alert-peak comparison was reported. 8

Telephone triage was assessed in one study and although this source had a low correlation with CDC influenza activity, it was almost 21 days earlier at maximum correlation. 22 At significant correlations, health advice calls occurred up to three weeks earlier than sentinel ILI surveillance. Less promising results of up to 8.4 days were reported from school and work absenteeism. 9

Pre-diagnostic syndromic and biosurveillance data sources have several advantages over traditional surveillance. School-based absenteeism has the advantage of monitoring the sub-population of children that have been reported to be sentinels for ILI outbreaks. 7 Surveillance that employs telephone usage of advice, triage and emergency calls has the advantage of leveraging systems already in existence and usually recorded in an electronic format. In comparison to routinely obtaining viral cultures, monitoring OTC sales is simple and inexpensive. 6 A major limitation to monitoring ED data is that adults with mild respiratory symptoms usually do not seek medical care in emergency departments. 14

The methods for determining timeliness included peak comparison, aberration detection comparison and correlation. The peak comparison method is retrospective and can be used as a preliminary measure to determine the potential timeliness of one data source compared to another. However, comparing the peaks of two time series does not address the question of when an outbreak would be detected as the peak is not always the feature of interest. The peak comparison method does not account for the size or the width of the peaks in each time series. An earlier peak in one data source does not necessarily translate to a timelier source of data when a detection algorithm is applied in a prospective setting. For example, an algorithm may alert first in one data source yet have a later peak in comparison to another.

The aberration detection method is used to answer the fundamental question of when an outbreak would be detected. However, a weakness of this method to be considered when comparing results based on timeliness is the bias associated with algorithm selection. Numerous algorithms can be applied to surveillance data, and for each algorithm, parameter selection affects the time at which the algorithm will alert. Interpreting the timeliness of one data source in a particular study location can be affected by the appropriateness of the algorithm and the parameters used in the model. Hence, algorithm parameters should be set to detect a defined increase that will satisfy an algorithmic alert in the data and reflect the temporal features (day-of-week, seasonality) for a specific location. A sensitivity analysis should also be conducted to investigate algorithm parameters.
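
A simple sensitivity analysis of this kind can be sketched by repeating the detection with several parameter values and recording when the first alert occurs. The sketch uses a mean plus `k` standard deviations threshold as the algorithm; the values of `k` and the counts are illustrative assumptions.

```python
from statistics import mean, stdev

def first_alert(baseline, observed, k):
    """Return the index of the first value above mean + k * SD of the baseline, or None."""
    limit = mean(baseline) + k * stdev(baseline)
    return next((i for i, x in enumerate(observed) if x > limit), None)

# Illustrative counts only.
baseline = [10, 12, 11, 9, 13, 10, 12]
observed = [11, 12, 13, 14, 15, 16, 20]

# Record the first alert index for each candidate threshold multiplier.
sensitivity = {k: first_alert(baseline, observed, k) for k in (1, 2, 3)}
print(sensitivity)  # {1: 2, 2: 3, 3: 5}
```

In this example the apparent timeliness changes by three time periods depending only on the choice of `k`, which shows how parameter selection can dominate a comparison between data sources.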

As with peak comparison, correlations produce a preliminary measure of potential. Correlations are not influenced by algorithm selection bias and provide a measure of the relationship between two data sources. However, a limitation of the cross correlation method is that it is sensitive to large variations in the amplitude of the time series. 5 Suyama and colleagues noted that CCFs are useful in showing that one data source is more timely than another, however they cannot be used to define a change or level that might be indicative of a disease occurrence or outbreak as with aberration detection. 25

Several studies that used the aberration detection method compared the date of alarm in one data source with the date of the peak in the gold standard. This introduces bias as pre-diagnostic data sources would be expected to alarm earlier than the peak in the gold standard. This could be further biased by setting a low threshold value, thus resulting in an earlier date of detection relative to the peak and hence increasing the timeliness. In order to find a better estimate of timeliness between two data sources, the alerts generated by algorithms on both sources should be compared if a well-established indicator does not exist.
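
The difference between an alert-to-alert and an alert-to-peak comparison can be illustrated with a short Python sketch. The two series, the fixed alert limits and the function names are hypothetical and serve only to show how the alert-to-peak comparison inflates the apparent lead.

```python
def first_alert(series, limit):
    """Return the index of the first value above the limit, or None."""
    return next((i for i, x in enumerate(series) if x > limit), None)

def peak_index(series):
    """Return the index of the largest value (first occurrence on ties)."""
    return max(range(len(series)), key=series.__getitem__)

# Illustrative counts only: a pre-diagnostic source and a gold standard.
syndromic = [5, 6, 9, 14, 20, 26, 22, 15]
gold = [1, 1, 2, 4, 7, 11, 14, 9]

alert_a = first_alert(syndromic, limit=8)   # index 2
alert_b = first_alert(gold, limit=3)        # index 3
peak_b = peak_index(gold)                   # index 6

alert_to_alert = alert_b - alert_a
alert_to_peak = peak_b - alert_a
print(alert_to_alert, alert_to_peak)  # 1 4
```

Here the pre-diagnostic source alerts only one period before the gold standard alerts, yet it appears four periods earlier when measured against the peak of the gold standard.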

Biosurveillance methods provide an alternative surveillance mechanism for influenza, which is closely linked with the rise, peak and fall of culture positive cases. 7 Overall, the timeliness of the alternative data sources investigated in this review was variable, but these sources were generally more timely than the gold standards used for comparison. Alternative data sources show promise as early indicators for disease outbreaks, as they were highly correlated with traditional sources and more timely than physician diagnoses, sentinel ILI and virological confirmation. No strong conclusion regarding the most timely source of data could be reached because there is little standardisation between studies, and few have applied rigorous methods of assessment over long time periods. The simultaneous analysis of several data sources in a single location would improve the validity of comparisons. However, when assessing the suitability of a data source for early warning, timeliness is only one component to be considered. For future timeliness studies, correlation and peak comparisons can be used to give preliminary results regarding potential; however, aberration detection methods are required for evaluating outbreak detection.

Footnotes

This research was supported by an Australian Biosecurity CRC for Emerging Infectious Disease postgraduate scholarship.

References

- 1. Sosin D. Draft framework for evaluating syndromic surveillance systems. J Urban Health 2003;80(2 (suppl 1)):i8-i13.

- 2. Labus B. Practical applications of syndromic surveillance: 2003–2004 influenza season. 2004. Available at: http://www.naccho.org/topics/emergency/APC/E-Link/Archive_2004.cfm. Accessed on 10/11/2005.

- 3. Stoto M, Fricker R, Jain A, Davies-Cole JO, Glymph C, Kidane G, et al. Evaluating statistical methods for syndromic surveillance. In: Wilson AG, Wilson GD, Olwell DH, editors. Statistical Methods in Counterterrorism. New York, NY: Springer; 2006. pp. 141-172.

- 4. Hogan WR, Tsui FC, Ivanov O, Gesteland PH, Grannis S, Overhage JM, et al. Detection of pediatric respiratory and diarrheal outbreaks from sales of over-the-counter electrolyte products. J Am Med Inform Assoc 2003 Nov-Dec;10(6):555-562.

- 5. Magruder S. Evaluation of over-the-counter pharmaceutical sales as a possible early warning indicator of human disease. Johns Hopkins APL Tech Digest 2003;24(4):349-353.

- 6. Welliver RC, Cherry JD, Boyer KM, Deseda-Tous JE, Krause PJ, Dudley JP, et al. Sales of nonprescription cold remedies: a unique method of influenza surveillance. Pediatr Res 1979;13(9):1015-1017.

- 7. Lenaway DD, Ambler A. Evaluation of a school-based influenza surveillance system. Public Health Rep 1995 May-Jun;110(3):333-337.

- 8. Davies GR, Finch RG. Sales of over-the-counter remedies as an early warning system for winter bed crises. Clin Microbiol Infect 2003 Aug;9(8):858-863.

- 9. Quenel P, Dab W, Hannoun C, Cohen J. Sensitivity, specificity and predictive positive values of health service based indicators for the surveillance of influenza A epidemics. Int J Epidemiol 1994;23:849-855.

- 10. Irvin CB, Nouhan PP, Rice K. Syndromic analysis of computerized emergency department patients’ chief complaints: an opportunity for bioterrorism and influenza surveillance. Ann Emerg Med 2003 Apr;41(4):447-452.

- 11. Lawson A, Kleinman K, editors. Spatial and syndromic surveillance for public health. 1st ed. West Sussex, England: John Wiley & Sons, Ltd; 2005.

- 12. Ritzwoller D, Kleinman K, Palen T, Abrams A, Kaferly J, Yih W, et al. Comparison of syndromic surveillance and a sentinel provider system in detecting an influenza outbreak—Denver, Colorado, 2003. MMWR 2005;54(suppl):151-156.

- 13. Yuan CM, Love S, Wilson M. Syndromic surveillance at hospital emergency departments—southeastern Virginia. Morb Mortal Weekly Rep 2004;53(suppl):56-58.

- 14. Heffernan R, Mostashari F, Das D, Karpati A, Kulldorff M, Weiss D. Syndromic surveillance in public health practice, New York City. Emerg Infect Dis 2004;10(5):858-864.

- 15. Ivanov O, Gesteland PH, Hogan W, Mundorff MB, Wagner MM. Detection of pediatric respiratory and gastrointestinal outbreaks from free-text chief complaints. AMIA Annu Symp Proc 2003:318-322.

- 16. Wong WK, Moore AW. Classical time-series methods for biosurveillance. In: Wagner MM, Moore AW, Aryel R, editors. Handbook of biosurveillance. Burlington: Academic Press; 2006. pp. 605.

- 17. SAS. Statistical Process Control. Statistics and Operations Research 2005. Available at: http://support.sas.com/rnd/app/qc/qc/qcspc.html. Accessed on 06/12/2005.

- 18. O’Brien S, Christie P. Do CuSums have a role in routine communicable disease surveillance? Public Health 1997;111:255-258.

- 19. Moore A, Cooper G, Tsui F, Wagner M. Summary of Biosurveillance-relevant statistical and data mining technologies. 2002. Available at: http://www.autonlab.org/autonweb/showPaper.jsp?ID=moore-biosurv. Accessed on 07/15/04.

- 20. Kulldorff M. A spatial scan statistic. Comm Stat Theory Methods 1997;26:1481-1496.

- 21. Kulldorff M. Spatial scan statistics: models, calculations, and applications. Boston: Birkhauser; 1999.

- 22. Espino JU, Hogan WR, Wagner MM. Telephone triage: a timely data source for surveillance of influenza-like diseases. AMIA Annu Symp Proc 2003:215-219.

- 23. Johnson HA, Wagner MM, Hogan WR, Chapman W, Olszewski RT, Dowling J, et al. Analysis of web access logs for surveillance of influenza. Medinfo 2004;2004:1202-1206.

- 24. Doroshenko A, Cooper D, Smith GE, Gerard E, Chinemana F, Verlander NQ, et al. Evaluation of syndromic surveillance based on national health service direct derived data—England and Wales. MMWR 2005;54(suppl):117-122.

- 25. Suyama J, Sztajnkrycer M, Lindsell C, Otten EJ, Daniels JM, Kressel AB. Surveillance of infectious disease occurrences in the community: an analysis of symptom presentation in the emergency department. Acad Emerg Med 2003 Jul;10(7):753-763.

- 26. Miller B, Kassenborg H, Dunsmuir W, Griffith J, Hadidi M, Nordin JD, et al. Syndromic surveillance for influenzalike illness in ambulatory care network. Emerg Infect Dis 2004 Oct;10(10):1806-1811.