Abstract

Background

Electronic health records (EHRs) have great potential to improve safety, quality, and efficiency in medicine. However, adoption has been slow, and a key concern has been that clinicians will require more time to complete their work using EHRs. Most previous studies addressing this issue have been done in primary care.

Objective

To assess the impact of using an EHR on specialists’ time.

Design

Prospective, before-after trial of the impact of an EHR on attending physician time in four specialty clinics at an integrated delivery system: cardiology, dermatology, endocrine, and pain.

Measurements

We used a time-motion method to measure physician time spent in one of 85 designated activities.

Results

Attending physicians were monitored before and after the switch from paper records to a web-based ambulatory EHR. Across all specialties, 15 physicians were observed treating 157 patients while still using paper-based records, and 15 physicians were observed treating 146 patients after adoption. Following EHR implementation, the average adjusted total time spent per patient across all specialties increased slightly but not significantly (Δ = 0.94 min., p = 0.83) from 28.8 (SE = 3.6) to 29.8 (SE = 3.6) min.

Conclusion

These data suggest that implementation of an EHR had little effect on overall visit time in specialty clinics.

Introduction

The safety and quality of patient care represent central issues in medicine in the U.S., and both could be better, with an estimated 40,000 fatal events attributed to medical error each year. 1 Electronic health records (EHRs) represent one possible means of efficiently improving medical decision making, coordinating medical providers, and linking order entry through an organized and accessible collection of medical data. Clinical decision support systems can be embedded in EHRs, and subsequent improvements in preventive care have included increased rates of vaccinations and discharge with appropriate anti-coagulation medications, as well as increased physician adoption of practice guidelines in HIV patients. 2,3 Even though studies have reported greater physician satisfaction 4,5 and fewer medication errors with the use of EHRs and computerized physician order entry, 6 just 23.9% of US physicians reported using office-based EHRs in the 2005 National Ambulatory Medical Care Survey. 7

While issues around financial cost may represent the most important barrier to rapid adoption of health information technology, problems with physician acceptance are also pivotal. 8,9 In addition to the negative attitudes of some physicians toward information technology (IT), user difficulties with technology, and high start-up costs with slow and uncertain financial payoffs, a major issue for physicians is their unease with EHRs’ potential impact on workflow. 10,11 Some studies have described the process changes resulting from EHR use in clinics, and among doctors, the constant pressure to move more quickly is frequently cited as a major concern. 12,13 In response, recommendations for effective electronic clinical support include “time is everything,” “anticipate needs and deliver in real time,” “fit into the user’s workflow,” and “recognize that physicians will strongly resist stopping.” 10

Previous evaluations of workflow and clinical processes have included work sampling and self-reporting surveys; 14 however, time-motion, in which an observer follows a subject and continually records the nature and duration of every activity in a data collection tool, is the most precise standard. 15 Existing time-motion studies have examined the effect of EHRs within primary care; 16,17 however, limited data are available for another important set of clinics, specialty care. Unlike general internists, specialists assume a very focused approach to their clinic visits, perform targeted activities, and experience high clinic volumes. The broad category of specialty care also encompasses multiple fields, ranging from cardiology to dermatology. The combination of multiple variables in specialty care leads to considerable uncertainty and apprehension about the impact a switch from paper to computer might have on clinician workflow. We thus decided to conduct time-motion studies in four different specialty clinics to better understand any change in attending specialists’ time prior to and after EHR implementation.

Methods

Setting

We performed time-motion observations at five outpatient, urban specialty care clinics in the Partners HealthCare System, a large integrated delivery system with academic affiliations in the greater Boston area. All practices were hospital-based (Brigham and Women’s Hospital Cardiology, Dermatology, and Endocrine; Massachusetts General Hospital Endocrine and Pain). The number of physicians observed at the different sites ranged from 2 to 7. The study was approved by the Partners Human Research Committee.

The pre-EHR implementation study period was 5/8/2002 to 8/13/2003, and the post-implementation study period was 12/3/2002 to 5/12/2004. All post-implementation observations occurred a minimum of six months and a maximum of nine months after transition, to allow practice habits to stabilize.

EHR Implementation

Outpatient care at Partners was primarily paper-based prior to EHR implementation. Patient chart information was written either by hand or transcribed via dictation. Prescriptions were handwritten, although lab results could be viewed electronically.

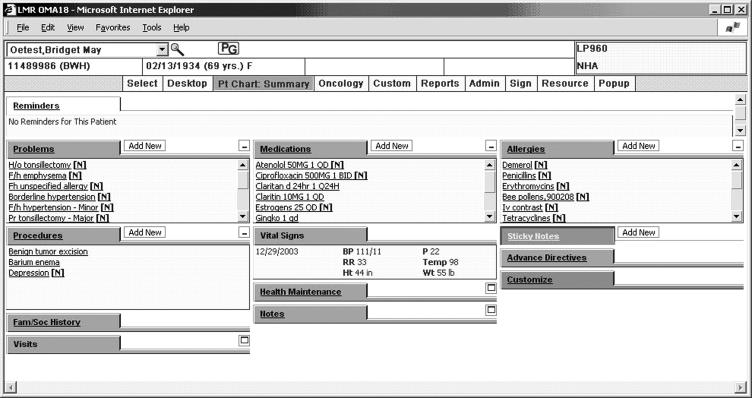

Post-implementation, physicians were given full use of a home-grown, web-based electronic health record application known as the Longitudinal Medical Record (LMR) (Figure 1). In the LMR, patient problem lists, medications, allergies, and health maintenance items were securely stored as structured clinical data, and test values and reports were directly populated by laboratories and procedure rooms. Physicians could also conduct medication order entry via the LMR and were assisted by clinical decision support, including individualized reminders for health care maintenance. Additional notification and communication tools, such as a referral manager and a results manager, were also available.

Figure 1.

Main patient chart summary in the Longitudinal Medical Record (LMR), our electronic health record.

At the time of the study, however, physicians were not prohibited from dictating notes or handwriting notes and prescriptions. Paper charts were still available for reference, and encounter forms and test order requisitions remained paper-based.

Data Collection and Study Design

Our data collection approach was adapted from a time-motion methodology developed at the Regenstrief Institute for Health Care. 16 We emailed invitations describing our study and possible observation dates to attending specialty physicians. Residents and fellows were excluded since they likely would not be available post-EHR implementation. Research assistants were trained to use the data collection tool and observed physicians during a clinic session. Each clinic session lasted approximately four hours.

Physicians and clinic staff informed patients of the study but emphasized that our only subjects were physicians and that medical care was completely independent of participation. Physicians were informed that we were performing time-motion studies to assess how the computer affected their workflow. We did not, however, describe to our subjects the specific hypotheses of the study. Data were collected only with consent from both patient and physician and completely through passive observation. Observers had no interactions with patients or physicians, did not obtain any patient information, and identified physicians only by number.

Observation Categories

Observers documented physician activities by selecting descriptors from a predefined list of 85 activities, adapted initially from Overhage at Regenstrief and modified by Pizziferri et al. at Partners. 16,17

A large number of activities were used in order to facilitate data collection. Observers could easily identify activities and not have to make on-the-spot judgments about whether those activities were, for example, administrative versus patient-oriented. However, our intention was not to analyze 85 endpoints. Doing so would clearly lead to problems of multiple testing and invalid statistical interpretations.

Instead, we condensed the 85 activities into the same six analysis categories that were reported in Overhage and Pizziferri et al. By taking advantage of this prior experience and utilizing a previously established instrument, we have gained statistical power and limited the issue of multiple testing.

Observers identified each physician activity first based on “Major Categories” involving certain media (e.g., Computer-Looking for, Procedures, Talking) followed by more specific “Minor Categories” describing the respective detailed action (e.g., forms, phlebotomy, patient).

Since the “Major Categories” with subdivided “Minor Categories” resulted in 85 unique activities, we established six categories for the purposes of analysis: Direct Patient Care, Indirect Patient Care:Write, Indirect Patient Care:Read, Indirect Patient Care:Other, Administrative, and Miscellaneous.

Direct Patient Care included activities such as educating the patient or examining the patient. Indirect Patient Care included writing, reading, and other, and involved actions such as writing e-mails, reading patient charts, or finding digitized radiographs. Administrative covered matters such as referencing drugs or reviewing schedules. Miscellaneous contained remaining tasks such as reading articles, using the restroom, and talking to others about non-patient matters. A detailed list of the categories can be found in Appendix 1 (available as an online data supplement at www.jamia.org).

Data Entry Tool

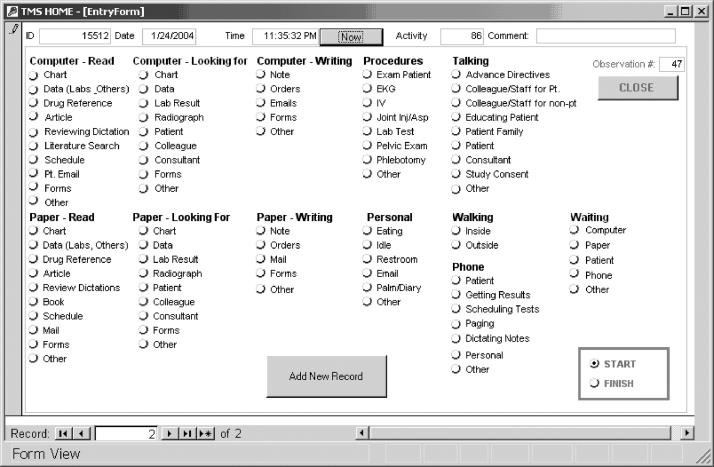

Observers logged data into a Microsoft Access form using Fujitsu Lifebook touch-screen computers (Figure 2). The observer first placed an initial time stamp using the “Now” button and then selected one of 85 possible activities. The “end time” was presumed to fall at the start of the next task. To ensure each choice was intended, no individually selected activity was logged into the system until the observer pressed the “Add Record” button. Each activity record contained a time stamp to the nearest second, the observation session identifier, the observer and physician ID, and the total number of patients seen during the observed clinic session. All data were compiled in a master Microsoft Access database.

Figure 2.

Data entry form for the time-motion study. Major Categories are listed in bold with Minor Categories designated underneath. Every activity record was preceded by a time stamp, created with the “Now” button. An appropriate activity was selected, and “Add New Record” committed the activity entry to an official data log.

Of note, only one activity could be registered at any given time. If a physician appeared to execute simultaneous activities, such as “writing notes” and “talking to patient,” the observer would judge “writing notes” as the physician’s primary activity. More frequently, physicians transitioned rapidly from one task to another, which was readily captured with the tool as a series of activities in sequence.

We did not record as a separate entry the “transitional time” between activities. Instead, we consistently incorporated the time it took, for example, a provider to physically hang up a phone and to begin typing a note into the computer as part of the latter activity’s expected “start-up time.” If, however, a long transition time was present, it would be scored as a separate activity classified as “other.”
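The timing rule described in this section, where each activity ends when the next one starts, can be illustrated with a minimal sketch. The record layout, the timestamp format, the activity labels, and the final `END` marker are all hypothetical; the study logged records in Microsoft Access and the text does not say how the last activity of a session was closed.

```python
from datetime import datetime

FMT = "%H:%M:%S"  # assumed format; the text only says stamps were to the nearest second


def activity_durations(records):
    """Return (activity, seconds) pairs from time-ordered (timestamp, activity) records.

    Each activity is assumed to end when the next record starts, so any
    transition time is absorbed into the activity that follows. The final
    record only closes the previous activity and gets no duration itself.
    """
    times = [datetime.strptime(ts, FMT) for ts, _ in records]
    out = []
    # zip stops at the shortest input, so the last record is used only as an end time
    for (_, activity), start, end in zip(records, times, times[1:]):
        out.append((activity, int((end - start).total_seconds())))
    return out


# Hypothetical session log
session = [
    ("09:00:00", "Talking: patient"),
    ("09:04:30", "Writing: notes"),
    ("09:06:00", "Talking: patient"),
    ("09:10:15", "END"),  # hypothetical session-end marker, not part of the study's tool
]
result = activity_durations(session)
```

Because only one activity can be open at a time, a rapid switch between tasks simply appears as a sequence of short records.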

Observer Training

Five non-clinician research assistants underwent training and multiple pilot tests to ensure proper recording and categorization of observations. Each was trained by an experienced observer to navigate the computer data entry tool and to correctly identify each activity category. Observers then underwent four- to eight-hour training sessions in which they observed non-study physicians. Results of training observations were reviewed to ensure proper data collection. The observed physician, experienced observers, and senior investigator were also available to answer questions if observers had difficulty categorizing tasks into one of the predefined categories. The observers for this study were concurrently involved in another project utilizing similar methods, so their experience is approximately twice the number of observations reported here.

Statistical Analysis

Our primary outcome measure was the minutes spent per patient in each analysis category. For each observation, the seconds spent in all activities, grouped under each of the six individual analysis categories, were averaged over the number of observed patients. For the final, broader analysis, we averaged the above times from all observations in each of the six analysis categories.

Of note, only the total time spent in one observation session on one activity, such as “dictating notes,” was taken into consideration. The clock was continuous and accurate to the nearest second, so the exact start and stop clock time of a task was recorded, regardless of the number of interruptions. The earlier time was subtracted from the later time to determine the number of seconds that elapsed “dictating notes” before one interruption. The same technique was applied to calculate the seconds used later to finish those same notes. We did not differentiate instances of the same activity. The final result was the sum of all time spent on that one activity, “dictating notes,” during the observation session.
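The aggregation described above can be sketched as follows: seconds are summed per analysis category across all instances of an activity in a session and then divided by the number of patients seen. This is a minimal illustration, not the study's analysis code. The activity labels and the `CATEGORY` mapping are hypothetical stand-ins for the 85-activity list in Appendix 1.

```python
from collections import defaultdict

# Hypothetical mapping from logged activities to analysis categories;
# the actual 85-activity mapping is given in the paper's Appendix 1.
CATEGORY = {
    "Talking: patient": "Direct Patient Care",
    "Dictating: notes": "Indirect Patient Care: Write",
    "Reading: chart": "Indirect Patient Care: Read",
}


def minutes_per_patient(durations, n_patients):
    """Sum seconds per analysis category, then convert to minutes per patient.

    Repeated instances of the same activity (for example, notes resumed
    after an interruption) are simply added together.
    """
    totals = defaultdict(int)
    for activity, seconds in durations:
        totals[CATEGORY[activity]] += seconds
    return {cat: secs / 60 / n_patients for cat, secs in totals.items()}


# Hypothetical (activity, seconds) pairs from one observation session
session = [
    ("Dictating: notes", 120),
    ("Talking: patient", 600),
    ("Dictating: notes", 60),  # same notes finished after an interruption
    ("Reading: chart", 90),
]
per_patient = minutes_per_patient(session, n_patients=3)
```

In this example the two “dictating notes” intervals total 180 seconds, which comes to 1.0 minute per patient in the Write category when three patients were seen.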

We used a repeated measures linear regression model to measure the impact on time spent per patient. 18 In order to account for observer variability, we used a mixed model in the analysis. The main predictor of interest was a binary indicator for the pre- vs. post-intervention time period. We also included indicator variables for the observers and the clinics, since these covariates may have confounded the effect estimate for intervention. We also adjusted for specialty, physician years in practice, and the random effects of observer variability. The results from the repeated measures models are presented as adjusted means along with standard errors and p-values. Two-sided p-values less than 0.05 were considered to be statistically significant. All analyses were conducted with SAS, version 9.1 (SAS Institute, Cary, North Carolina).
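The model described above can be written out roughly as follows. This is only a sketch under stated assumptions: the symbols, the single random intercept for observer, and the omission of any within-physician correlation structure are illustrative choices, since the text does not give the exact specification fitted in SAS.

```latex
y_{ij} = \beta_0 + \beta_1\,\mathrm{Post}_{ij}
       + \sum_{k} \gamma_k\,\mathrm{Specialty}_{ik}
       + \beta_2\,\mathrm{Years}_{i}
       + u_{o(i,j)} + \varepsilon_{ij},
\qquad u_{o} \sim N(0, \sigma_u^2), \quad \varepsilon_{ij} \sim N(0, \sigma^2)
```

Here $y_{ij}$ is the minutes per patient in a given analysis category for physician $i$ in session $j$, $\mathrm{Post}_{ij}$ equals 1 for post-implementation sessions and 0 otherwise, and $o(i,j)$ indexes the observer who recorded that session. Under this reading, $\beta_1$ corresponds to the adjusted estimate of change reported in the results.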

Results

Thirty observations of 17 individual attending physicians were captured in the Endocrine, Pain, Dermatology, and Cardiology clinics (Table 1). Our observers collectively logged 109 hours of data collection, with each session lasting, on average, 3.6 hours. In all, 270 patients were seen by observed physicians during the study, 136 pre- and 134 post-EHR implementation. On average, nine patients were seen by specialists during each clinic session both pre- and post-implementation.

Table 1. Physician Characteristics ∗, Pre- and Post-EHR Implementation

| | Pre-EHR | Post-EHR |
|---|---|---|
| Total Number of Physicians | 15 | 15 |
| Males | 12 (80%) | 12 (80%) |
| Number of Physicians per Specialty | | |
| Cardiology | 4 | 4 |
| Dermatology | 5 | 4 |
| Endocrine | 2 | 3 |
| Pain Clinic | 4 | 4 |
| Years in Practice | | |
| Number of Years Practiced: 10-19 | 6 | 7 |
| Number of Years Practiced: >19 | 9 | 8 |

∗ Similarity of characteristics reflects the availability of 13 physicians for data collection both pre- and post-EHR implementation.

Despite variable implementation dates for the EHR, there were 26 sessions with paired observations of 13 physicians using both paper-based records and the EHR. Two physicians (in Dermatology and Pain) with pre-implementation data eventually left the practice or were on leave and were not available for post-implementation collection. Two additional physicians in Endocrine and Pain joined the study later and provided only post-implementation data. Across all studied specialties pre- and post-EHR, 76% of our physicians were male (13/17) with an average of 22.4 years of practice.

Five observers conducted observations, three of whom performed evaluations both pre- and post-EHR implementation (Table 2). The minimum number of observations done by a single observer was four, while the maximum was 10. One observer performed solely pre-implementation observations, and one observer performed solely post-implementation observations.

Table 2. Number of Observations Performed by Each Observer, Pre- and Post-EHR Implementation

| Observer ID | Pre-EHR | Post-EHR |
|---|---|---|
| A | 2 | 2 |
| B | 4 | 2 |
| C | 4 | 0 |
| D | 5 | 5 |
| E | 0 | 6 |

Results by Overall Analysis Categories

Table 3 presents the average adjusted time (minutes per patient) spent by physicians in six analysis categories prior to and after the implementation of an EHR. The values were adjusted for the specialty, the number of years practiced by each physician, and the random effects of variability among our observers. Across all categories, there was a non-significant increase in overall time spent by physicians after using an EHR (+0.94 minutes per patient, p = 0.83). Doctors by far spent the most time in Direct Patient Care, 14.7 minutes per patient in the post-intervention period. Within specific categories, only one, Miscellaneous, showed a significant decrease in time spent between the pre- and post-EHR periods (−3.1 minutes per patient, p = 0.03). There was also a near-significant increase in time spent on Indirect Patient Care: Read (+1.8 minutes per patient, p = 0.07).

Table 3. Adjusted Time Spent in Analysis Categories, Pre- and Post-EHR Implementation

| Analysis Category | Pre-EHR: Average Adjusted Minutes per Patient (SE) | Post-EHR: Average Adjusted Minutes per Patient (SE) | Estimate of Change (Minutes per Patient) | p value |
|---|---|---|---|---|
| Direct Patient Care | 14.4 (1.1) | 14.7 (1.0) | 0.26 | 0.85 |
| Indirect Pt. Care: Write | 3.3 (1.3) | 5.3 (1.3) | 2.1 | 0.21 |
| Indirect Pt. Care: Read | 1.7 (0.76) | 3.5 (0.74) | 1.8 | 0.07 |
| Indirect Pt. Care: Other | 3.5 (1.0) | 3.0 (1.1) | −0.53 | 0.49 |
| Administration | 1.7 (0.48) | 1.3 (0.47) | −0.40 | 0.55 |
| Miscellaneous | 5.0 (1.2) | 1.9 (1.2) | −3.1 | 0.03 |

We also compared the time physicians spent using different communication media (computer, phone, and paper) across all Indirect Patient Care activities before and after EHR implementation. Pre-EHR, paper consumed the most time at 4.9 adjusted minutes per patient, compared with just 2 adjusted minutes per patient for phone and 0.58 adjusted minutes per patient for computer. With use of the EHR, time spent on the computer increased significantly to 5.4 adjusted minutes per patient (p = 0.04), while phone usage dropped by a non-significant 0.2 adjusted minutes (p = 0.54). Paper use also fell by a non-significant 0.6 adjusted minutes per patient (p = 0.49), leaving 4.3 adjusted minutes per patient still spent in paper-related indirect patient care.

Results by Activity

Overall, the amount of time spent in Direct Patient Care after EHR implementation increased slightly (14.4 vs. 14.7, adjusted Δ = 0.26 minutes per patient); however, this difference was not significant (p = 0.85). Within this category, the greatest increases in time related to talking to the patient and performing procedures; however, the adjusted increases in both activities were 0.3 minutes and were not statistically significant.

Indirect Patient Care was divided into three categories: write, read, and other. In two of the categories, write and read, a non-significant overall rise in time spent reflected greater use of the computer. Post-EHR implementation, typing information into the computer rose 2.9 adjusted minutes per patient, while reading information from the computer rose 1.6 adjusted minutes per patient. In the Other category, a non-significant overall decrease of 0.53 adjusted minutes per patient was mostly attributed to 0.2 fewer adjusted minutes per patient spent on the phone. The remaining four major category activities that fell under Indirect Patient Care: Other also exhibited a consistent trend toward time saved post-EHR implementation, ranging from 0.02 to 0.14 adjusted minutes per patient. The one exception, Computer-Looking For, again reflected increased computer usage, yet the increase of 0.14 adjusted minutes spent per patient failed to change the overall trend.

Discussion

We found that implementing an EHR did not significantly affect the time specialty attending physicians spent per patient. To date, specialists have been concerned that general EHRs, not designed for any particular specialty, would either be more cumbersome to use than traditional paper or disrupt the focused workflow of their clinic visits. 19

These data suggest that those concerns may be exaggerated. Our findings demonstrate that, given six to nine months to adapt and stabilize practice behavior, specialists, like primary care physicians, can enter basic clinic information into a computer and conduct a routine clinic visit in no more time than it takes to perform the same operations on paper. This implies that certain features of a clinic visit (gathering patient histories, documenting findings, referencing labs) are common enough that a general EHR can be used by either primary care physicians or specialists. A comparison of specialists from this study with our institution’s general internists from a previous time-motion study 17 reveals that differences in time spent were rarely more than one minute in any of the six analysis categories. The same outpatient study also corroborated our finding that physicians do not experience significant changes in overall time spent in clinic with implementation of EHRs. 11

We did find a 3.1 minute drop in the Miscellaneous tasks category, but examination revealed that most of the decrease was due to article reading and literature searches by one physician during a relatively quiet clinic day. When an additional analysis of just the 13 paired pre- and post-EHR implementation observations excluded this one physician, no significant change in time was observed in any of the analysis categories, including Miscellaneous, or the overall findings. There was also a modest but non-significant trend toward decreased time spent in administrative tasks both in this and the Pizziferri study. 11 Administrative tasks, such as scheduling, are overwhelmingly the leading source of physician dissatisfaction. 20 By reducing an undesired activity, EHRs may play an important role in improving physician satisfaction which, in turn, has been documented to increase patient satisfaction and may affect quality of care. 21-23

Our study has a number of limitations, and the results should be considered exploratory. Our sample size was small, so we could not make meaningful comparisons between the specialties, and we might also have missed a small but clinically important difference in visit time. In addition, due to the inclusion of transition times as part of each activity, the absolute time a physician commits to an activity may be shorter than the total values reported. Consequently, the difference between the absolute pre-EHR activity time and the absolute post-EHR activity time may be smaller than the difference that incorporates transition times. For the results reported in this paper, we consistently incorporated transition times in both the control and intervention groups as part of the study design. We also did not conduct interrater reliability estimates, although any bias of one observer towards a certain category should not have affected the outcome of the study at the level of time per patient or the six analysis categories. We also studied only four specialties, and these data may not be applicable to other fields. Specialists such as orthopedists may be able to quickly enter information for patients with similar conditions, whereas other specialties, such as ophthalmology, may have highly specialized needs requiring the creation and the filling in of additional data fields. 19,24 In our effort to provide the greatest generalizability to specialists, who are mostly attending physicians, we also excluded residents and fellows who might have different work patterns. Additionally, the EHR we studied may not be representative of other EHRs. One randomized controlled trial showed a temporary increase in time after implementation of an EHR but remarked that usability of an EHR is also strongly dependent on design and implementation. 16 Another limitation is that our sites were all urban, academic clinics in Massachusetts, where there is extensive commerce in technology, so that the results may not be generalizable to other settings. Finally, while we were able to capture the primary activity of a physician, multitasking is an essential element of clinical activity and we were unable to capture other simultaneous activities with our tool.

Future studies should examine the influences and nature of time reallocation following EHR implementation. As more information is entered into the data repository over the years, specialists may dedicate less time in the long term to entering and retrieving information for returning patients. Our study, however, captured mostly patients new to the EHR and, if anything, may have overestimated the time physicians need to spend per patient in clinic at steady state, especially if widespread interoperability between systems becomes the norm. Furthermore, newer generations of physicians for whom typing skills are nearly essential and who are accustomed to computers in daily life could find electronic health records more intuitive to navigate than paper charts. Some reports suggest that EHRs may cause physicians to spend more time after clinic completing documentation, yet no formal study has documented the extent and the reasons for this adjustment in activities. 17,24 Other topics worthy of future examination, but beyond the scope of our study, include analysis of the efficiency and quality of patient time spent by specialists with the addition of EHRs as well as whether the time spent transitioning between activities was different for paper versus the EHR.

Ultimately, the driving impetus behind adoption of electronic health records should be improving the safety and quality of health care. Specialists should be better able to retrieve organized lab results on demand and add to growing data in a format that will be easily accessible in the future to other providers. 25 That same repository of information can support measurement and improvement of health care quality, as well as pay-for-performance initiatives. 17,26 Decision support to prevent medication errors and recommend orders can further contribute to the accuracy of health care delivery. Institutions such as Kaiser Permanente and Group Health have adopted a similar model of using the same EHR in all ambulatory practices for at least the last two years, regardless of medical specialty. 27,28 The system’s ability to provide better, immediate patient information has operated so well that Kaiser has noticed an increase in issues resolved over the telephone associated with fewer outpatient visits. 27 From a large organization’s perspective, a universal electronic health record for primary care and all specialties would not only save money but likely simplify implementation and ongoing maintenance. Even as far back as 2001, the Institute of Medicine recommended that physicians adopt information technology to automate patient-specific clinical information and to ensure regular monitoring and support for individuals living with chronic diseases. 29

Despite the utility of EHRs, many barriers to implementation exist. A 2003 report by the Center for Information Technology Leadership reported that while physician practices often shoulder most of the cost for EHR implementation, they accrue just 11.6% of the benefits. 8 The Massachusetts e-Health Collaborative, supported by a $50 million grant from Blue Cross Blue Shield to fully implement electronic health records in three pilot communities, 30 and the Leapfrog Group, composed of multiple Fortune 500 companies with quality criteria for preferred health care providers, represent just two emerging initiatives to provide greater economic incentive for physicians to implement EHRs as a quality improvement and cost saving tool. 31 Addressing the financial concerns is necessary, but may not be sufficient. Funding and efforts directed at implementing electronic systems in doctors’ offices will be disregarded unless doctors perceive that their time and workflow will not be impeded by change. 9 National standards, with the government being the most capable and likely influence for executing such a plan, need to be established if any form of interoperability is to be achieved. 32,33 While the National Academy has suggested capabilities for a functional electronic health record 34 and attempts have been made to certify vendors, 35 no standard evaluation scheme exists to help physicians determine which electronic records are most appropriate for their practice. Furthermore, the commercial EHR industry is plagued with rapid turnover of vendors, creating uncertainty and hesitancy among would-be purchasing physicians. 19

Conclusion

In conclusion, we found that EHR use in these specialty clinics did not result in a significant difference in clinic visit time. This suggests that at least for many specialties, the EHR does not have to be specifically designed to accommodate specialists’ clinical needs, and that an application used in primary care may also be used in specialty clinics without substantial increases in physician time needed. EHRs have great potential to improve the quality and satisfaction in health care. Adoption, however, is contingent upon addressing barriers, particularly concerns over time and workflow.

Footnotes

The authors would like to acknowledge Pat Carchidi for her help coordinating the study. We also thank John Orav, PhD, for his suggestions and advice during data analyses. Finally, we thank the physicians, patients, and clinic staff who participated.

References

- 1.Institute of Medicine To Err Is Human: Building a Safer Health SystemWashington D.C: National Academies Press; 2000. [PubMed]

- 2.Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients N Engl J Med 2001;345(13):965-970. [DOI] [PubMed] [Google Scholar]

- 3.Safran C. msJAMAElectronic medical records: a decade of experience. JAMA 2001;285(13):1766. [PubMed] [Google Scholar]

- 4.Adams WG, Mann AM, Bauchner H. Use of an electronic medical record improves the quality of urban pediatric primary care Pediatrics 2003;111(3):626-632. [DOI] [PubMed] [Google Scholar]

- 5.Delpierre C, Cuzin L, Fillaux J, Alvarez M, Massip P, Lang T. A systematic review of computer-based patient record systems and quality of care: more randomized clinical trials or a broader approach? Int J Qual Health Care 2004;16(5):407-416. [DOI] [PubMed] [Google Scholar]

- 6.Mekhjian HS, Kumar RR, Kuehn L, Bentley TD, Teater P, Thomas A, et al. Immediate benefits realized following implementation of physician order entry at an academic medical center J Am Med Inform Assoc 2002;9(5):529-539. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Burt CW, Hing E, Woodwell D. Electronic Medical Record Use by Office-Based Physicians: United States, 2005. Available at: http://www.cdc.gov/nchs/products/pubs/pubd/hestats/electronic/electronic.htm. Accessed on 10/16/2006. [PubMed]

- 8.Center for Information Technology Leadership The Value of Computerized Provider Order Entry in Ambulatory SettingsBoston: Partners HealthCare; 2003.

- 9.Bates DW. Physicians and ambulatory electronic health records. U.S. Physicians are ready to make the transition to EHRs—which is clearly overdue, given the rest of the world’s experience. Health Aff (Millwood) 2005;24(5):1180-1189.

- 10.Bates DW, Kuperman GJ, Wang S, Gandhi T, Kittler A, Volk L, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003;10(6):523-530.

- 11.Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health Aff (Millwood) 2004;23(2):116-126.

- 12.Baron RJ, Fabens EL, Schiffman M, Wolf E. Electronic health records: just around the corner? Or over the cliff? Ann Intern Med 2005;143(3):222-226.

- 13.Miller RH, Sim I, Newman J. Electronic Medical Records: Lessons from Small Physician Practices. California Health Care Foundation; 2003. Available at: http://www.chcf.org/documents/ihealth/EMRLessonsSmallPhyscianPractices.pdf. Accessed on 01/25/2007.

- 14.Poissant L, Pereira J, Tamblyn R, Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J Am Med Inform Assoc 2005;12(5):505-516.

- 15.Finkler SA, Knickman JR, Hendrickson G, Lipkin Jr. M, Thompson WG. A comparison of work-sampling and time-and-motion techniques for studies in health services research. Health Serv Res 1993;28(5):577-597.

- 16.Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled trial of direct physician order entry: effects on physicians’ time utilization in ambulatory primary care internal medicine practices. J Am Med Inform Assoc 2001;8(4):361-371.

- 17.Pizziferri L, Kittler AF, Volk LA, Honour MM, Gupta S, Wang S, et al. Primary care physician time utilization before and after implementation of an electronic health record: a time-motion study. J Biomed Inform 2005;38(3):176-188.

- 18.Diggle PJ, Heagerty P, Liang KY, Zeger SL. Analysis of Longitudinal Data. New York: Oxford University Press; 2002.

- 19.DeBry PW. Considerations for choosing an electronic medical record for an ophthalmology practice. Arch Ophthalmol 2001;119(4):590-596.

- 20.The Kaiser Family Foundation. National Survey of Physicians, Part III: Doctors’ Opinions about their Profession. 2002. Available at: http://www.kff.org/kaiserpolls/upload/Highlights-and-Chart-Pack-2.pdf. Accessed on 10/16/2006.

- 21.Grembowski D, Paschane D, Diehr P, Katon W, Martin D, Patrick DL. Managed care, physician job satisfaction, and the quality of primary care. J Gen Intern Med 2005;20(3):271-277.

- 22.Grol R, Mokkink H, Smits A, van Eijk J, Beek M, Mesker P, et al. Work satisfaction of general practitioners and the quality of patient care. Fam Pract 1985;2(3):128-135.

- 23.Haas JS, Cook EF, Puopolo AL, Burstin HR, Cleary PD, Brennan TA. Is the professional satisfaction of general internists associated with patient satisfaction? J Gen Intern Med 2000;15(2):122-128.

- 24.Brewin B. Do EHRs save time? Online. 6/12/2006. FCW Media Group. 7/17/2006.

- 25.Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003;348(25):2526-2534.

- 26.Bates DW, Pappius E, Kuperman GJ, Sittig D, Burstin H, Fairchild D, et al. Using information systems to measure and improve quality. Int J Med Inform 1999;53(2-3):115-124.

- 27.Garrido T, Jamieson L, Zhou Y, Wiesenthal A, Liang L. Effect of electronic health records in ambulatory care: retrospective, serial, cross sectional study. BMJ 2005;330(7491):581.

- 28.Group Health. Patient Centered Informatics Design at Group Health, 2005. Available at: www.hca.wa.gov/hit/doc/GHC.ppt. Accessed on 10/16/2006.

- 29.Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, D.C.: National Academies Press; 2001.

- 30.Halamka J, Aranow M, Ascenzo C, Bates D, Debor G, Glaser J, et al. Health care IT collaboration in Massachusetts: the experience of creating regional connectivity. J Am Med Inform Assoc 2005;12(6):596-601.

- 31.The Leapfrog Group. The Leapfrog Group Fact Sheet. 2006. Available at: http://www.leapfroggroup.org/about_us/leapfrog-factsheet. Accessed on 07/11/2006.

- 32.Brailer DJ. Interoperability: the key to the future health care system. Health Aff (Millwood) 2005;Suppl Web Exclusives:W5-19 to W5-21.

- 33.Hersh WR. Medical informatics: improving health care through information. JAMA 2002;288(16):1955-1958.

- 34.Committee on Data Standards for Patient Safety. Key Capabilities of an Electronic Health Record System: Letter Report. National Academies Press; 2003. Available at: http://www.nap.edu/catalog/10781.html. Accessed on 07/11/2006.

- 35.Little DR, Zapp JA, Mullins HC, Zuckerman AE, Teasdale S, Johnson KB. Moving toward a United States strategic plan in primary care informatics: a White Paper of the Primary Care Informatics Working Group, American Medical Informatics Association. Inform Prim Care 2003;11(2):89-94.