Abstract

Factors that influence reinforcer choice have been examined in a number of applied studies (e.g., Neef, Mace, Shea, & Shade, 1992; Shore, Iwata, DeLeon, Kahng, & Smith, 1997; Tustin, 1994). However, no applied studies have evaluated the effects of postsession reinforcement on choice between concurrently available reinforcers, even though basic findings indicate that this is an important factor to consider (Hursh, 1978; Zeiler, 1999). In this bridge investigation, we evaluated the influence of postsession reinforcement on choice between two food items when task responding was reinforced on progressive-ratio schedules. Participants were 3 children who had been diagnosed with developmental disabilities. Results indicated that response allocation shifted from one food item to the other at thinner schedules of reinforcement when no postsession reinforcement was provided than when postsession reinforcement was provided. These findings suggest that the efficacy of instructional programs or treatments for problem behavior may be improved by restricting access to reinforcers outside treatment sessions.

Keywords: choice, behavioral economics, postsession reinforcement, substitutability

Variables that influence choice between concurrently available forms of reinforcement have been evaluated in an increasing number of applied studies (e.g., Neef, Mace, Shea, & Shade, 1992; Tustin, 1994). This area of research is important because multiple reinforcers often are available simultaneously in the natural environment. For example, children in a classroom may have a choice between completing academic tasks to gain access to adult attention and leaving their seats to obtain other items, such as toys or books. Allocation of responding among concurrently available forms of reinforcement has been evaluated under a number of different conceptual approaches.

The matching law, which states that the relative rate of responding on an alternative matches the relative rate of reinforcement obtained from that alternative, provides one approach for understanding choice between reinforcers (Herrnstein, 1961). When two reinforcers are concurrently available, the matching law predicts that participants will allocate proportionally more responding to the option that produces the higher rate of reinforcement. Neef et al. (1992) evaluated how reinforcer quality and rate influenced responding among concurrently available tasks for children with severe emotional disturbance or behavioral disorders and learning difficulties. The children chose between two stacks of math problems, each associated with a different reinforcer. Reinforcer quality was determined from each participant's rankings of 10 items. The matching law predicted the amount of time each child spent on math problems when the reinforcers for the two alternatives were equal in quality; however, it did not predict choice when the reinforcers were unequal in quality. Thus, the matching law may be limited when choice involves qualitatively different reinforcers, which is often the case in the natural environment.
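
For reference, the strict matching relation for two concurrently available alternatives can be written as below. The symbols are our own shorthand rather than notation from the studies cited: B_1 and B_2 denote responses allocated to each alternative (in a trial-based choice arrangement, the number of times each option is chosen), and R_1 and R_2 denote the reinforcers obtained from each.

```latex
\frac{B_1}{B_1 + B_2} = \frac{R_1}{R_1 + R_2}
```

Reinforcer quality does not appear anywhere in this expression, which is one way to see why strict matching has difficulty accounting for choice between qualitatively different reinforcers.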

Another conceptual approach to understanding choice between reinforcers involves substitutability theory, which extends the matching law by accounting for choices between qualitatively different reinforcers (Green & Freed, 1993). In substitutability theory, the sensitivity of choice is evaluated by altering the schedule of reinforcement for one reinforcer while measuring consumption of both reinforcers. Specifically, one reinforcer is said to be substitutable for another when an increase in the price of one reinforcer results in decreased consumption of that reinforcer and increased consumption of the other reinforcer (Kagel et al., 1975). Substitutability is a matter of degree rather than an all-or-none property: reinforcers fall along a continuum of interactions. Thus, the degree of substitutability between reinforcers is determined by evaluating the extent to which changes in the reinforcement schedule associated with one reinforcer influence choice. The substitutability of reinforcers has been examined in a number of basic studies with nonhumans (Kagel et al.; Lea & Roper, 1977). However, substitutability has rarely been directly investigated with clinical populations or problems (see Shore, Iwata, DeLeon, Kahng, & Smith, 1997, and Zhou, Goff, & Iwata, 2000, for notable exceptions).
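
One way the degree of substitutability is often quantified in behavioral economics is the cross-price elasticity of demand. The text above does not state a formula, so the expression below is offered as a conventional definition, not as the analysis used in the studies cited. Here Q_B is consumption of reinforcer B and P_A is the price (e.g., the schedule requirement) of reinforcer A; the notation is ours.

```latex
\eta_{BA} = \frac{\Delta Q_B / Q_B}{\Delta P_A / P_A}
\qquad
\begin{cases}
\eta_{BA} > 0 & \text{substitutes (raising } P_A \text{ increases } Q_B\text{)}\\
\eta_{BA} \approx 0 & \text{independent}\\
\eta_{BA} < 0 & \text{complements}
\end{cases}
```

Larger positive values indicate that B more readily substitutes for A, which corresponds to the continuum of interactions described above.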

Basic research also indicates that the availability of reinforcers outside experimental sessions (called an open economy) may influence responding for single and concurrently available reinforcers during sessions (Hursh, 1978; Zeiler, 1999). In an open economy, postsession feedings are provided to maintain subjects at a target body weight. In a closed economy, the reinforcer is available only contingent on responding during experimental sessions (Hursh, 1980). Zeiler compared rates of responding in a single-operant arrangement under increasing response requirements in open and closed economies. Responding was evaluated under increasing fixed-ratio (FR), fixed-interval (FI), and random-interval (RI) schedules. Under the closed economy, pigeons were not maintained below free-feeding body weights before sessions, and responding during the experimental conditions determined the total amount of food received each day. Under the open economy, pigeons were maintained at 80% of their free-feeding body weights by controlling the amount of food received during experimental sessions and by providing supplemental feedings outside experimental sessions. The type of economy interacted with schedule effects. For example, in the closed economy, pigeons continued to respond as the schedule of reinforcement was thinned to FR 10,000, whereas responding ceased when the schedule reached FR 300 or FR 400 in the open economy. Level of food deprivation per se could not account for these findings because pigeons' body weights under the closed economy remained close to their free-feeding body weights. These results suggest that, under certain conditions, organisms may not work as hard for a reinforcer that is also available outside experimental sessions.

Only one applied study has evaluated the influence of postsession reinforcement on responding during sessions. Roane, Call, and Falcomata (2005) compared levels of adaptive responding exhibited by 2 individuals under conditions that were designed to approximate open and closed economies. Participants were required to sort envelopes or complete math worksheets to gain access to video games or cartoons, respectively. Task requirements increased within session via progressive-ratio (PR) schedules. The specific reinforcers evaluated in the study were restricted outside experimental sessions (closed economy) or provided immediately or a few hours after the session (open economy). Higher levels of adaptive responding occurred under closed economic conditions even though deprivation for the reinforcer was held constant across conditions.

Research findings on reinforcer choice, substitutability theory, and postsession access to reinforcement have important implications for application. For example, it is sometimes impossible or impractical to withhold reinforcement for problem behavior. Consider a child who must emit appropriate behavior during instructional trials to gain access to praise, while a less preferred but substitutable form of reinforcement, such as physical attention, remains available for aggression. According to studies on the matching law and substitutability theory, this child may be less likely to engage in appropriate behavior and more likely to engage in aggression as the schedule requirement for appropriate behavior increases. Furthermore, changes in choice across increasing schedule requirements may be influenced by the availability of reinforcement following instructional sessions. If the child receives a similar form of attention (e.g., verbal interaction) both during and after instructional sessions, the reinforcer that maintains inappropriate behavior (e.g., physical attention) may more readily substitute for the reinforcement provided for appropriate behavior. However, further research is needed to determine the importance of controlling the type and amount of reinforcement that is available outside instructional sessions.

The purpose of the current study was to bridge basic and applied research on choice, substitutability, and postsession reinforcement by extending procedures drawn from the basic laboratory to children with developmental disabilities and clinically relevant responses. The methodology was designed as an analogue of applied situations involving choice between qualitatively different reinforcers (e.g., praise for appropriate behavior vs. physical attention for problem behavior) when reinforcers are available outside treatment sessions. Choice between specific food items was examined under rapidly increasing schedule requirements because food is easy to deliver during sessions and to control outside the sessions. Completion of previously mastered math problems (i.e., multiplication, addition) was selected as the target response because this academic task is similar to those children encounter in the classroom environment. Like other translational or bridge studies, the evaluation was not conducted in the context of treatment (see Lerman, 2003, for further discussion), so acquisition of math skills was not a primary goal of the study.

Method

Participants, Settings, and Materials

The 3 participants had been diagnosed with developmental disabilities and had been referred for the treatment of noncompliance or inappropriate behavior that interfered with daily activities. Only children who reportedly demonstrated an ability to discriminate between two items were eligible to participate. Randy was a 5-year-old child with autism. He communicated at a level similar to his peers and could follow complex instructions (e.g., “After you finish your work, you can play with the puzzle for 10 minutes and then eat your lunch.”). Kirk was a 5-year-old child with severe language impairment. He communicated via short sentences (e.g., “I want to play the game.”) and followed three-step instructions (e.g., “Sit down, pick up the pencil, and trace the letter.”). Johnny was an 11-year-old boy with pervasive developmental disorder (not otherwise specified). His communication skills were similar to those of his peers, and he followed complex instructions. He had been prescribed an antidepressant medication that he took each night. No medication changes occurred during the study. None of the participants had sensory or motor impairments. Sessions were conducted either in a therapy room at a university-based early intervention summer program (Randy and Kirk) or in a private room at the participant's home (Johnny). The rooms contained a desk, chairs, and relevant session materials.

Response Measurement and Interobserver Agreement

Data on choice of reinforcers were collected during all sessions. Choice was defined as pointing to one of two different-colored math problems following the therapist's instruction to “pick one.” Data on choice were expressed as a percentage of trials by dividing the number of times a particular task was chosen by the total number of choice opportunities and multiplying the result by 100%. These data were examined in each phase to determine the PR breaking point for each reinforcer. The PR breaking point was defined as the highest schedule value completed by the participant in each phase. All data were recorded on laptop computers using real-time recording.
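
The two measures defined above reduce to simple arithmetic. The following minimal sketch assumes each session's data are stored as a list of option labels, one per choice trial; the labels "PR" and "FR1" and the function names are hypothetical and not part of the original recording system.

```python
def choice_percentages(choices, options=("PR", "FR1")):
    """Percentage of choice trials allocated to each option in one session.

    choices: one label per choice trial, e.g. ["PR", "FR1", ...]
    options: every option that could be chosen, so unchosen options report 0.
    """
    total = len(choices)
    if total == 0:
        raise ValueError("a session must contain at least one choice trial")
    # (times chosen / choice opportunities) x 100
    return {opt: 100.0 * choices.count(opt) / total for opt in options}


def pr_breaking_point(completed_requirements):
    """Highest PR schedule value completed in a phase (0 if none was completed)."""
    return max(completed_requirements, default=0)


# Hypothetical eight-trial session and hypothetical completed PR values.
session = ["PR", "PR", "FR1", "PR", "FR1", "FR1", "FR1", "FR1"]
print(choice_percentages(session))          # {'PR': 37.5, 'FR1': 62.5}
print(pr_breaking_point([2, 4, 6, 8, 10]))  # 10
```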

Two independent observers collected data during 38% of Randy's sessions, 51% of Kirk's sessions, and 41% of Johnny's sessions. Sessions were divided into consecutive 10-s bins to calculate occurrence agreement for each session. Interobserver agreement was calculated by dividing the total number of agreements by the total number of agreements plus disagreements and multiplying by 100%. Mean agreement for choice was 98% (range, 75% to 100%) for Randy, 98% (range, 82% to 100%) for Kirk, and 97% (range, 50% to 100%) for Johnny.
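
The agreement calculation can be sketched as follows. We assume here (the text does not specify) that each observer's record is a list with one entry per 10-s bin, holding the option chosen in that bin or None when no choice was scored; following the usual meaning of occurrence agreement, only bins in which at least one observer scored a choice are counted. The function name is hypothetical.

```python
def occurrence_agreement(obs1, obs2):
    """Occurrence agreement (%) between two observers' per-bin records."""
    if len(obs1) != len(obs2):
        raise ValueError("both observers must score the same number of bins")
    # Keep only bins in which at least one observer scored an occurrence.
    scored = [(a, b) for a, b in zip(obs1, obs2) if a is not None or b is not None]
    if not scored:
        return None  # agreement is undefined when neither observer scored anything
    agreements = sum(1 for a, b in scored if a == b)
    disagreements = len(scored) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Hypothetical records for five 10-s bins.
primary = ["PR", None, "FR1", None, "FR1"]
secondary = ["PR", None, "FR1", "FR1", None]
print(occurrence_agreement(primary, secondary))  # 50.0
```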

Preference Assessment

Prior to the analysis, a paired-stimulus preference assessment was conducted based on procedures described by Fisher et al. (1992) to identify highly preferred food items. Relative preference for food items was calculated by dividing the number of times an item was chosen by the number of times the item was presented and multiplying by 100%. The two highest ranked items were evaluated in the subsequent choice analysis. The first- and second-ranked items, respectively, were Goldfish® and M&Ms® for Randy, Funyuns® and Cheetos® for Kirk, and Goldfish® and pretzels for Johnny. The top-ranked item for each participant was associated with the PR schedule during the choice analysis.
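
A sketch of the relative-preference calculation, using hypothetical items and choices rather than data from the study. Each trial is recorded as the two items presented plus the item chosen; the function name and data layout are our assumptions.

```python
def relative_preference(trials):
    """Percentage of presentations on which each item was chosen.

    trials: (item_a, item_b, chosen) tuples from a paired-stimulus assessment.
    """
    presented, chosen = {}, {}
    for a, b, pick in trials:
        for item in (a, b):
            presented[item] = presented.get(item, 0) + 1
        chosen[pick] = chosen.get(pick, 0) + 1
    return {item: 100.0 * chosen.get(item, 0) / n for item, n in presented.items()}


# Hypothetical assessment in which each pair of three items is presented once.
trials = [("A", "B", "A"), ("A", "C", "A"), ("B", "C", "C")]
prefs = relative_preference(trials)
top_two = sorted(prefs, key=prefs.get, reverse=True)[:2]
print(prefs)    # {'A': 100.0, 'B': 0.0, 'C': 50.0}
print(top_two)  # ['A', 'C']
```

The two highest-ranked items would then be carried into the choice analysis, with the first-ranked item assigned to the PR schedule.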

Procedure

Choice between two highly preferred food items was compared when postsession reinforcement was and was not provided. Different-colored math index cards were associated with the different food reinforcers, and different-colored poster boards were associated with each postsession procedure. Math problems were selected for each participant based on parent or teacher report of the types of problems the participant was currently working on and had mastered. On each trial, the therapist provided a choice between two identical sets of math cards (i.e., addition problems for Kirk and Randy and multiplication problems for Johnny) by placing two index cards (i.e., one of each color) an equal distance from the participant and instructing the participant to “pick one.” Each index card contained one math problem. One set of math problems was on yellow cards, and the other, identical set was on blue cards. Each food item was associated with completion of the math problems of one color.

Stimulus prompts in the form of dots that represented the numbers included in the math problem appeared on each card. For example, if the math problem required the participant to add the numerals three and two, three dots were placed next to the numeral three and two dots were placed next to the numeral two. For multiplication cards, groups of dots were placed next to each numeral. For example, if the card required the participant to multiply two and three, three groups of two dots were placed on the card. For each math problem, the therapist presented instructional trials using a graduated three-step prompting procedure (verbal, model, and physical prompts). If the model prompt was required, the therapist pointed to each dot with a finger and counted the dots. If physical guidance was required, the therapist physically guided the participant to touch each dot with his finger while the therapist counted the dots. No programmed consequences were provided for inappropriate behavior. The number of choices between reinforcers depended on the condition.

Throughout the analysis, parents were asked to restrict the child's access to the specific food items evaluated in the study. The experimenter verified that the food items were not available outside the experimental sessions by asking parents whether the items had been restricted. In addition, the specific food items evaluated with Randy and Johnny were items that parents reported were never provided in the home setting. The therapists of the university-based summer program also confirmed that Randy and Kirk did not have access to the food items evaluated in the study. Sessions were conducted two to five days per week, with two to seven sessions per day. Typically, four sessions were conducted per day; more sessions (i.e., five to seven) were occasionally conducted during baseline phases. The effects of reinforcement schedule and postsession reinforcement were evaluated in a reversal design.

Discrimination Training

Prior to the analysis, all participants were taught to discriminate between the conditions associated with the different-colored math index cards and poster boards. Discrimination training with the two sets of math cards was conducted first. On each discrimination training trial, the participant was physically guided to choose one of the two math problems and then had to complete the selected problem to receive the reinforcer associated with it. Instructions to complete the math problems were delivered using verbal, model, and physical prompts. A minimum of three trials was conducted for each math set. The therapist then asked the child to verbally report the food item associated with each of the two math sets. All participants did so accurately following the discrimination training trials.

The participant was then taught to discriminate between the colored poster boards. The participant was physically guided to sit at a table with either a red or a blue poster board. Following completion of two math index cards (one card of each color) and delivery of the corresponding food items, the participant was escorted to a separate table on which a poster board of the same color was placed. If the table contained the blue poster board, no food items were placed on it. If the table contained the red poster board, three pieces of food (the item associated with the PR schedule in the postsession reinforcement conditions) were placed on it for the participant to consume. The participant was exposed to the contingencies in place for each poster board a minimum of three times. Then, the participant was asked to verbally report which poster-board color was associated with the presence of food items on the second table and which with their absence. All participants did so accurately following discrimination training.

Baseline

The participant chose between two colored cards and received the food item associated with the chosen color after completing one problem correctly (FR 1) with no more than a verbal or model prompt. If physical guidance was required to complete the math card, the participant was required to complete another math problem from the same color deck until one problem was completed without physical guidance. No colored poster boards were placed on the table during this condition, and none of the food items used during the session were available outside the sessions. Each session consisted of eight trials (i.e., eight reinforcer deliveries).

No Postsession Reinforcement (With or Without Breaks)

Math problems associated with the participant's second-ranked food item remained on an FR 1 schedule, while problems associated with the top-ranked food item were placed on a nonresetting PR schedule, under which the response requirement increased arithmetically within and across sessions of the phase. The blue poster board was placed on the table. At the beginning of the first session, the participant was required to complete one math problem of either color to receive the associated food reinforcer. Each subsequent opportunity to earn reinforcement under the PR schedule resulted in an increased work requirement. At the beginning of each choice trial, one math card from each stack was presented, and the participant was verbally prompted to pick one. When the participant chose the math task associated with the PR schedule, the participant was required to complete the number of math problems specified by the schedule without physical guidance before receiving reinforcement. Once the reinforcer was earned, another choice trial was presented. The PR schedule increased by two responses each time the participant chose the math problem associated with the PR schedule until the schedule reached PR 14; thereafter, it increased by one response each time. The PR schedule did not reset at the beginning of each session. Thus, if the schedule reached PR 4 at the end of one session, PR 6 was in effect at the beginning of the next session. The PR schedule was reset following intervening baseline phases. Each session consisted of eight choice trials. A trial consisted of the participant choosing between the two tasks, completing the number of tasks dictated by the schedule requirement, and receiving the food item associated with the task that was chosen.

Immediately following the completion of each session, the participant was escorted to a table in a separate room (Randy) or a separate table in the same room (Kirk and Johnny). The table contained a blue poster board. The participant was instructed to sit in a chair at the table. No food items were placed on the table. The participant was required to sit at the table for 2 min so that the time spent at the table matched that in the postsession reinforcement phases.
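
The nonresetting PR progression can be sketched as a small stateful object that persists across sessions within a phase. The text states that the first requirement was one problem and that increments were two responses up to PR 14 and one response thereafter, but it does not spell out every value between those points. This sketch therefore treats the starting requirement as a parameter; the default of 2 is our assumption, chosen because it produces the even values below PR 14 reported in the Results (e.g., PR 4, 6, 10, 12). Class and method names are hypothetical.

```python
class NonresettingPR:
    """Progressive-ratio requirement that carries over from session to session."""

    def __init__(self, start=2, step_change_at=14):
        self.start = start  # assumed starting requirement (see lead-in)
        self.step_change_at = step_change_at
        self.requirement = start  # problems required on the next PR choice

    def complete(self):
        """Record completion of the current requirement and advance the schedule.

        Returns the value just completed. Steps are +2 below the change point
        and +1 from the change point onward.
        """
        done = self.requirement
        self.requirement += 2 if self.requirement < self.step_change_at else 1
        return done

    def reset(self):
        """Restart the progression, as after an intervening baseline phase."""
        self.requirement = self.start


pr = NonresettingPR()
# The same object is reused across sessions, so the requirement does not reset.
completed = [pr.complete() for _ in range(9)]
print(completed)       # [2, 4, 6, 8, 10, 12, 14, 15, 16]
print(pr.requirement)  # 17
```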

The condition was terminated when the task associated with the PR schedule was not chosen for at least two consecutive sessions, with the exception of Randy's first exposure to this condition. Randy was the first participant in the study, and it was unclear whether choice would shift completely from one food item to the other; thus, his first exposure to this condition was terminated following two consecutive sessions in which the food item associated with the PR schedule was chosen once per session. During some phases (no postsession reinforcement without breaks), less than 5 min elapsed between sessions conducted on the same day. In other phases (no postsession reinforcement with breaks), 15 min elapsed between sessions. During the 15-min break, the participant was provided with free time and access to toys. In the postsession reinforcement condition, these breaks were designed to limit the influence of postsession reinforcement on subsequent sessions conducted on the same day; for comparability, they were also implemented during the no postsession reinforcement condition.
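
The termination rule can be expressed as a check run after each session. In this sketch (function and parameter names are hypothetical), `pr_choices` holds the number of PR choices in each session of the phase so far; the default threshold of 0 encodes the general rule, and a threshold of 1 encodes the criterion used for Randy's first exposure.

```python
def phase_should_end(pr_choices, run_length=2, threshold=0):
    """True if each of the last `run_length` sessions had <= `threshold` PR choices."""
    recent = pr_choices[-run_length:]
    return len(recent) == run_length and all(n <= threshold for n in recent)


print(phase_should_end([4, 2, 0, 0]))            # True
print(phase_should_end([4, 0, 1, 0]))            # False
print(phase_should_end([3, 1, 1], threshold=1))  # True
```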

Postsession Reinforcement (With or Without Breaks)

Procedures were identical to those in the no postsession reinforcement condition, with several exceptions. Sessions consisted of five choice trials, and a red poster board was placed on the table. Following each session, the participant was instructed to sit at a separate table that contained a red poster board. Three pieces of the food item associated with the PR schedule were placed on the table for the participant to consume. The participant was required to sit in the chair for either 2 min or until the food items had been consumed. All participants consumed the food within the allotted time. Thus, the total amount of food received per session, including the postsession period (eight pieces), was held constant across the postsession and no postsession reinforcement conditions. All other procedures were identical to those described above.
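
The equating of total food across conditions is simple arithmetic, sketched below under the assumption (consistent with the baseline description of eight trials as eight reinforcer deliveries) that each reinforcer delivery is one piece of food. Constant and function names are hypothetical.

```python
TRIALS_NO_POSTSESSION = 8  # choice trials per session, one piece of food each
TRIALS_POSTSESSION = 5     # choice trials per session, one piece of food each
POSTSESSION_PIECES = 3     # pieces of the PR-associated food given after the session


def pieces_per_session(postsession):
    """Total pieces of food per session, postsession period included."""
    if postsession:
        return TRIALS_POSTSESSION + POSTSESSION_PIECES
    return TRIALS_NO_POSTSESSION


print(pieces_per_session(False), pieces_per_session(True))  # 8 8
```

What differs between conditions is therefore not the amount of food but whether three pieces of the PR-associated item are delivered independent of responding.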

Results

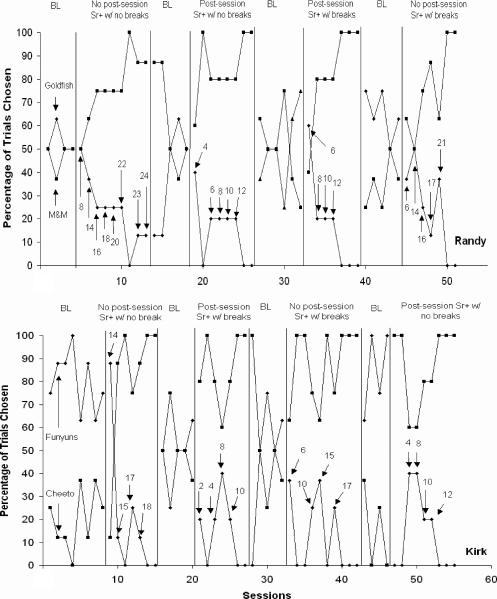

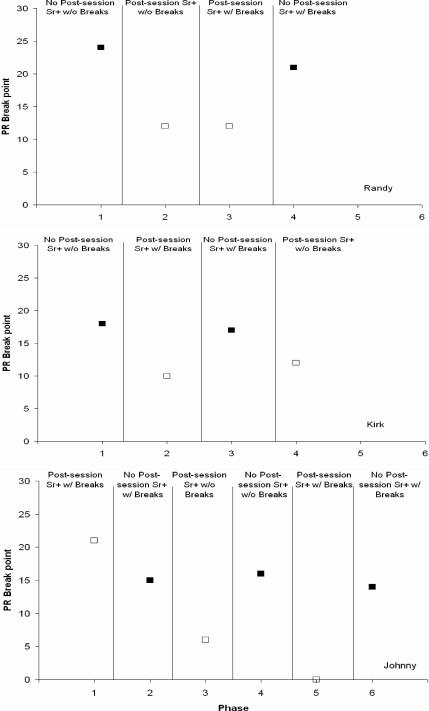

Participants' choices across conditions for each phase are shown in Figures 1 and 2. PR break points for each phase are shown in Figure 3. Randy's choices during the initial baseline indicated that the food items were equally preferred when both items were available on an FR 1 schedule (Figure 1). When no postsession reinforcement (without breaks) was introduced, he chose the food item associated with the PR schedule (Goldfish®) less often and began choosing the other food item (M&Ms®) as the schedule increased. He chose M&Ms® almost exclusively after the schedule reached PR 22. PR 24 was the highest schedule value completed by Randy before the phase was terminated. Thus, PR 24 was considered the breaking point for this phase (Figure 3). Similar results were obtained when the no postsession reinforcement condition was replicated with breaks in the last phase. Randy chose M&Ms® exclusively after the schedule reached PR 21 for the Goldfish®. The highest schedule that he completed for the Goldfish® was PR 21. In the postsession reinforcement conditions (with and without breaks), choice for the food item on the PR schedule decreased to zero more rapidly. Randy no longer chose the Goldfish® after the schedule reached PR 12. Thus, higher PR breaking points were reached when postsession reinforcement was unavailable.

Figure 1.

Percentage of choices under postsession and no postsession reinforcement conditions for Randy (top) and Kirk (bottom). The numbered arrows indicate the highest PR value completed in the session. The final PR schedule in each phase represents the PR breaking point for the phase.

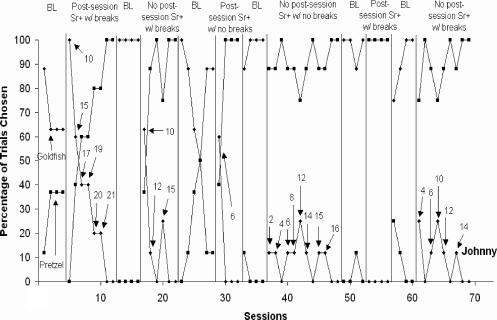

Figure 2.

Percentage of choices under postsession and no postsession reinforcement conditions for Johnny. The numbered arrows indicate the highest PR value completed in the session. The final PR schedule in each phase represents the PR breaking point for the phase.

Figure 3.

PR breaking points for each phase for Randy (top), Kirk (middle), and Johnny (bottom).

Kirk's initial baseline indicated a clear preference for Funyuns® (the food item that was placed on the PR schedule in the following phases) over Cheetos®. When no postsession reinforcement (without breaks) was introduced, Kirk no longer chose Funyuns® after completing the PR 18 schedule (Figure 1). Thus, the PR breaking point was PR 18 during this phase (Figure 3). Similar results were obtained under no postsession reinforcement (with breaks). Kirk chose Cheetos® exclusively after the schedule reached PR 17 for Funyuns®, which was considered his PR breaking point during this phase. Under the postsession reinforcement phases (with and without breaks), Kirk stopped responding for Funyuns® and exclusively chose Cheetos® after completing the PR 10 (with breaks) and PR 12 (without breaks) schedules. Thus, the PR breaking points reached in the postsession reinforcement phases (PR 10 and PR 12) were lower than those reached in the no postsession reinforcement phases (PR 18 and PR 17). That is, responding for Funyuns® persisted under thinner schedules of reinforcement when food was restricted outside the experimental sessions.

Johnny's initial baseline indicated that Goldfish® (the food item that was placed on the PR schedule in the following phases) were preferred over pretzels (Figure 2). When food was available following sessions (i.e., postsession reinforcement with and without breaks), the highest schedule value completed for Goldfish® was PR 21 (first exposure) and PR 6 (second exposure), which were considered his PR breaking points for the first two intervention phases (Figure 3). The Goldfish® were never selected in the third exposure to postsession reinforcement. Prior exposure to the conditions may have been responsible for the apparent increase in sensitivity to the increasing schedule requirement across the three postsession reinforcement phases. Johnny stopped choosing Goldfish® and chose pretzels almost exclusively under relatively thin schedules of reinforcement during the first, second, and third exposures to the no postsession reinforcement (with and without breaks) phases. The PR breaking points for the first, second, and third no postsession reinforcement phases were PR 16, PR 15, and PR 14, respectively. Thus, with the exception of the first postsession reinforcement phase (PR 21), Johnny stopped choosing the reinforcer associated with the PR schedule at lower schedule values when postsession reinforcement was provided, suggesting that postsession reinforcement increased sensitivity to the rapidly increasing schedule requirement.

Discussion

With one exception (Johnny's first exposure to postsession reinforcement), participants completed more math problems to gain access to the reinforcer associated with the PR schedule when the food item was not available following sessions. The breaking point (i.e., the highest schedule completed) for the food associated with the PR schedule was generally higher, and often nearly twice as high, in the absence of postsession reinforcement. These findings were replicated regardless of whether breaks were provided between sessions. To our knowledge, this investigation was the first to evaluate the influence of postsession reinforcement on choice in an applied context. Results extend previous findings (e.g., Neef et al., 1992) by evaluating an additional variable that may influence choice between reinforcers of unequal quality.

The findings also replicate and extend previous research on responding for reinforcers when items are available postsession. In both the present study and Roane et al. (2005), higher levels of responding for a reinforcer were observed when postsession access to the reinforcer was restricted. The current results extend the findings of Roane et al. by evaluating the influence of postsession reinforcement on choice between concurrently available reinforcers. These results may increase the generality of those obtained by Roane et al. because multiple substitutable reinforcers may be concurrently available during a session in the natural environment.

Future research on postsession reinforcement is warranted to evaluate whether reinforcers used as part of instructional programs (e.g., in the context of multiple acquisition programs or unmastered tasks) or treatment for problem behavior need to be restricted outside the context of intervention to obtain clinically important changes in behavior. For instance, additional research might evaluate whether a child who is being taught to mand for a highly preferred item will be less likely to acquire the mand if alternative, substitutable reinforcers are concurrently available during the teaching sessions or are delivered independent of mands outside the teaching sessions.

In the natural environment, it may be difficult to restrict access to certain forms of reinforcement (e.g., attention). Examining the influence of postsession reinforcement on responding for reinforcers during treatment may indicate the conditions required to maintain treatment efficacy when postsession reinforcement is available. For example, if attention is provided for appropriate behavior during treatment and is provided independent of behavior following treatment, the schedule of reinforcement for appropriate behavior may need to be relatively rich to maintain high levels of appropriate behavior when alternative forms of reinforcement are concurrently available for problem behavior. However, future research will be necessary to evaluate whether other forms of reinforcement (e.g., attention, toys) are as readily traded as the reinforcers used in the present investigation.

A number of concepts and theoretical models are relevant to the findings (e.g., matching law, substitutability, behavioral economics). For example, as predicted by the matching law, participants began to allocate more responding to the task that produced the higher rate of reinforcement as the schedule of reinforcement was thinned for the preferred food item. Nonetheless, responding was not completely consistent with the matching law because participants continued to allocate responding to the higher quality food item even when the schedule requirement was more than 10 times that of the alternative food item. These results replicate those of Neef et al. (1992) by showing that the matching law does not fully predict responding when qualitatively different reinforcers are concurrently available.

The findings are more consistent with substitutability theory, which accounts for choice between qualitatively different reinforcers. Results showed that as the price (i.e., schedule requirement) of one food item was increased, participants decreased consumption of that food item and increased consumption of an alternative food item. Thus, one food item was substitutable for another, more expensive, food item. More important, results suggested that the availability of postsession reinforcement (i.e., an open economy) increased the degree to which the alternative food item substituted for the item on the PR schedule. Participants completed fewer math problems to gain access to the food item that was also provided outside the experimental sessions.

In the basic literature, findings on postsession reinforcement often have been discussed within a behavioral economics framework (Hursh, 1978; Ladewig, Sørensen, Nielsen, & Matthews, 2002; Roane et al., 2005). In behavioral economics, changes in response allocation under varying reinforcement schedules are described in terms of demand elasticity for a reinforcer. Demand for a reinforcer is considered elastic if responding is fairly sensitive to changes in the price of the reinforcer. Results of this study and those conducted in the basic laboratory indicate that the availability of substitutable commodities outside the experimental sessions may influence demand elasticity (Hursh, 1984; Ladewig et al.). That is, when the food item associated with the PR schedule was also available outside the experimental sessions, demand for it was more elastic: participants were less likely to respond for this reinforcer as the schedule was thinned.
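
Demand elasticity can be illustrated with the midpoint (arc) formula from economics. The sketch below is a generic illustration, not an analysis the authors report: consumption might be the number of PR reinforcers earned and price the PR requirement, and the numbers in the example are hypothetical.

```python
def arc_elasticity(q1, q2, p1, p2):
    """Midpoint (arc) own-price elasticity of demand.

    q1, q2: consumption at the two prices (e.g., reinforcers earned)
    p1, p2: the two prices (e.g., PR response requirements)
    """
    pct_change_q = (q2 - q1) / ((q1 + q2) / 2)
    pct_change_p = (p2 - p1) / ((p1 + p2) / 2)
    return pct_change_q / pct_change_p


def is_elastic(elasticity):
    """Demand is conventionally called elastic when |elasticity| > 1."""
    return abs(elasticity) > 1


# Hypothetical: raising the requirement from 4 to 12 cuts earned reinforcers from 4 to 1.
e = arc_elasticity(q1=4, q2=1, p1=4, p2=12)
print(round(e, 2), is_elastic(e))  # -1.2 True
```

In these terms, the present finding is that demand for the PR-associated food appeared more elastic when that food was also available after sessions.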

The present investigation should be considered translational because the target responses and reinforcers were selected on the basis of convenience rather than clinical relevance. Thus, further research will be needed to delineate the effects of postsession reinforcement on treatment outcomes. However, clinical studies of this type should be preceded by additional translational research on factors that may alter the relation between choice and postsession access to reinforcers. For example, future studies should investigate choice between more disparate reinforcers (e.g., a break from work vs. attention). In the present investigation, shifts in responding may have occurred under relatively low schedule requirements because both items were foods and thus functionally similar (see DeLeon, Iwata, Goh, & Worsdell, 1997, for a related study). More research also is needed to evaluate the effects of the delay between the session and postsession reinforcement. Results of at least two studies, one basic and one applied, indicate that even delayed postsession reinforcement can influence responding (Ladewig et al., 2002; Roane et al., 2005). In Roane et al., for example, 1 participant was exposed to postsession reinforcers 4 hr after the experimental session, and that participant's levels of responding were nonetheless influenced by the availability of the delayed reinforcer.

Following further translational research, the effects of postsession reinforcement should be evaluated in the context of treatment. For example, in Lalli et al. (1999), a food reinforcer was provided contingent on compliance while problem behavior continued to produce the functional reinforcer (escape from demands). Participants allocated responding to compliance even when the schedule of food reinforcement was thinned. It is possible, however, that the food item provided for compliance was restricted outside the experimental sessions, which may have contributed to this pattern of responding. The impact of postsession reinforcement on these findings should be evaluated in future studies.

It should be noted that restricting participants' access to specific food items outside the experimental sessions only approximated a true closed economy, because access to other foods was not restricted. In addition, the provision of response-independent postsession reinforcement (i.e., between-session feedings) constituted just one aspect of an open economy. However, limiting total food consumption would have raised ethical issues and decreased the generality of the findings to clinical settings. Moreover, closer approximations of open and closed economies as arranged in basic research may not be relevant to applied settings.

Another potential limitation of the present investigation was the use of relatively brief (15-min) breaks between sessions that were conducted on the same day. These breaks were arranged to limit the influence of postsession access to reinforcers on upcoming sessions. Nonetheless, postsession reinforcement may have affected responding in the subsequent session. This problem could be minimized in future studies by using lengthier breaks or by conducting just one session per day. It is also possible that the difference in the number of reinforcer trials per session (five vs. eight trials per session in the postsession and no-postsession reinforcement conditions, respectively) influenced how rapidly response allocation shifted as the schedule requirements increased within and across sessions. However, it was important to equate total access to the food items across conditions. Thus, the postsession reinforcement condition included three fewer trials per session to allow three pieces of food to be provided following the session. Finally, the PR schedule used in the current investigation only approximated the schedule-thinning strategies typically used in clinical settings. The nonresetting PR schedule (i.e., one in which the requirement continued to increase across sessions rather than returning to its initial value at the start of each session) permitted a rapid evaluation of reinforcer substitutability and is more similar to applied approaches to schedule thinning than are traditional (resetting) PR schedules (e.g., Roane, Lerman, & Vorndran, 2001). However, future research is needed to determine whether similar results would be obtained when the reinforcement schedule is faded more gradually.
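The difference between resetting and nonresetting PR schedules can be sketched as follows. The starting requirement, the fixed arithmetic step, and the rule that the requirement increases after every reinforcer earned on the thinned alternative are all hypothetical assumptions made for illustration; the function name is likewise invented and does not reproduce the exact progression used in this study.

```python
from typing import List, Sequence


def pr_requirements(earned_per_session: Sequence[int], start: int = 1,
                    step: int = 1, resetting: bool = False) -> List[List[int]]:
    """Return the ratio requirement in effect for each reinforcer earned on the
    progressive-ratio alternative, grouped by session.

    Hypothetical assumptions: the requirement begins at `start` and grows by a
    fixed arithmetic `step` after every reinforcer earned on this alternative.
    """
    schedule: List[List[int]] = []
    requirement = start
    for earned in earned_per_session:
        if resetting:
            # Resetting PR: every session begins again at the initial requirement.
            requirement = start
        # Nonresetting PR: otherwise the requirement carries over unchanged
        # from wherever the previous session ended.
        session: List[int] = []
        for _ in range(earned):
            session.append(requirement)
            requirement += step
        schedule.append(session)
    return schedule


if __name__ == "__main__":
    earned = [3, 2]  # hypothetical reinforcers earned on the PR alternative
    print("nonresetting:", pr_requirements(earned))
    print("resetting:   ", pr_requirements(earned, resetting=True))
```

Under the nonresetting version the second session starts where the first ended, so requirements climb across sessions much as they would during clinical schedule thinning; under the resetting version each session repeats the same low requirements.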

Although results suggest that postsession access to reinforcers influences responding during experimental sessions, more translational and applied research will be necessary to fully evaluate this question. Future research may help identify the conditions under which children will choose a substitutable reinforcer over a concurrently available form of reinforcement, and how limiting substitutable forms of reinforcement outside the treatment sessions may enhance the efficacy of treatment.

Acknowledgments

We thank Laura Addison and Valerie Volkert for their help with data collection.

References

- DeLeon I.G, Iwata B.A, Goh H, Worsdell A.S. Emergence of reinforcer preference as a function of schedule requirements and stimulus similarity. Journal of Applied Behavior Analysis. 1997;30:439–449. doi: 10.1901/jaba.1997.30-439.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Green L, Freed D.E. The substitutability of reinforcers. Journal of the Experimental Analysis of Behavior. 1993;60:141–158. doi: 10.1901/jeab.1993.60-141.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Hursh S.R. The economics of daily consumption controlling food- and water-reinforced responding. Journal of the Experimental Analysis of Behavior. 1978;29:475–491. doi: 10.1901/jeab.1978.29-475.

- Hursh S.R. Economic concepts for the analysis of behavior. Journal of the Experimental Analysis of Behavior. 1980;34:219–238. doi: 10.1901/jeab.1980.34-219.

- Hursh S.R. Behavioral economics. Journal of the Experimental Analysis of Behavior. 1984;42:435–452. doi: 10.1901/jeab.1984.42-435.

- Kagel J.H, Battalio R.C, Rachlin H, Green L, Basmann R.L, Klemm W.R. Experimental studies on consumer demand behavior using laboratory animals. Economic Inquiry. 1975;13:22–38.

- Ladewig J, Sørensen D.B, Nielsen P.P, Matthews L.R. The quantitative measurement of motivation: Generation of demand functions under open versus closed economies. Applied Animal Behaviour Science. 2002;79:325–331.

- Lalli J.S, Vollmer T.R, Progar P.R, Wright C, Borrero J, Daniel D, et al. Competition between positive and negative reinforcement in the treatment of escape behavior. Journal of Applied Behavior Analysis. 1999;32:285–296. doi: 10.1901/jaba.1999.32-285.

- Lea S.E.G, Roper T.J. Demand for food on fixed-ratio schedules as a function of the quality of concurrently available reinforcement. Journal of the Experimental Analysis of Behavior. 1977;27:371–380. doi: 10.1901/jeab.1977.27-371.

- Lerman D.C. From the laboratory to community application: Translational research in behavior analysis. Journal of Applied Behavior Analysis. 2003;36:415–419. doi: 10.1901/jaba.2003.36-415.

- Neef N.A, Mace F.C, Shea M.C, Shade D. Effects of reinforcer rate and reinforcer quality on time allocation: Extensions of matching theory to educational settings. Journal of Applied Behavior Analysis. 1992;25:691–699. doi: 10.1901/jaba.1992.25-691.

- Roane H.S, Call N.A, Falcomata T.S. A preliminary analysis of adaptive responding under open and closed economies. Journal of Applied Behavior Analysis. 2005;38:335–348. doi: 10.1901/jaba.2005.85-04.

- Roane H.S, Lerman D.C, Vorndran C.M. Assessing reinforcers under progressive schedule requirements. Journal of Applied Behavior Analysis. 2001;34:145–167. doi: 10.1901/jaba.2001.34-145.

- Shore B.A, Iwata B.A, DeLeon I.G, Kahng S, Smith R.G. An analysis of reinforcer substitutability using object manipulation and self-injury as competing responses. Journal of Applied Behavior Analysis. 1997;30:21–41. doi: 10.1901/jaba.1997.30-21.

- Tustin R.D. Preference for reinforcers under varying schedule arrangements: A behavioral economic analysis. Journal of Applied Behavior Analysis. 1994;27:597–606. doi: 10.1901/jaba.1994.27-597.

- Zeiler M.D. Reversed schedule effects in closed and open economies. Journal of the Experimental Analysis of Behavior. 1999;71:171–186. doi: 10.1901/jeab.1999.71-171.

- Zhou L, Goff G.A, Iwata B.A. Effects of increased response effort on self-injury and object manipulation as competing responses. Journal of Applied Behavior Analysis. 2000;33:29–40. doi: 10.1901/jaba.2000.33-29.