Abstract

The process of innovation adoption was investigated using longitudinal records collected from a statewide network of almost 60 treatment programs over a 2-year period. Program-level measures of the innovation adoption process were defined by averaged counselor ratings of program training needs and readiness, organizational functioning, quality of a workshop training conference, and adoption indicators at follow-up. Findings showed that staff attitudes about training needs and past training experiences predicted their subsequent ratings of training quality and their progress in adopting innovations a year later. Organizational climate (clarity of mission, cohesion, openness to change) was also related to innovation adoption. In programs lacking an open atmosphere for new ideas, counselor trial use of innovations was likely to be attenuated. Most important was evidence that innovation adoption based on training for improving treatment engagement was significantly related to client self-reports of improved treatment participation and rapport recorded several months later.

Keywords: Innovation adoption, Organizational functioning, Training effectiveness, Client engagement, Longitudinal assessments

1. Introduction

“Substantial research effort has been devoted to understanding the real world context in which drug abuse treatment occurs, and a key area of research has been studies on how and why new, empirically derived treatments become adopted, organized, and managed. Studies suggest that transferring research to practice is associated with organizational factors such as leadership attitudes, staff turnover, organizational stress, regulatory and financial pressures, management style, and tolerance for change. These findings are leading to the development of an integrated framework of organizational change that can enhance the systematic study of research application” (Compton et al., 2005; p. 109).

Previous studies reported by Simpson (2002) and Lehman, Greener, and Simpson (2002) are examples of the research efforts referred to by Compton et al. (2005), as is the present series of studies reported in this journal volume. As clarified by Simpson and Flynn (this issue) and by a recent comprehensive review by Fixsen, Naoom, Blase, Friedman, and Wallace (2005), the adoption and implementation of an innovation is a process, not an isolated event. Furthermore, some of the stages that treatment programs move through to accomplish this process are comparable to those observed in clients as they change during treatment (see Simpson, 2001, 2004, 2006). Both involve an integrated process that relies on readiness to change, training in how to change, and engagement in implementation. Like the clients they serve, treatment programs must be ready and committed to change before engaging in staff training for innovations and successfully implementing them. Linking together the components of this change process over time is a major challenge in conducting translational research in health services delivery systems.

Based on their review of over 700 articles on implementation research, drawn through a broad, cross-disciplinary selection process, Fixsen et al. (2005) offer the following conclusions. First, they observe that implementation evidence points mainly to what does NOT work, emphasizing the failure of simple forms of dissemination and didactic training. Second, they suggest that future progress will require a long-term “multilevel” approach that takes into account intervention components, counselor skills, training processes, and policies. Third, they point out that evidence is lacking on how organizational factors and systems influence the implementation process. Fourth, they note a large gap in the research literature concerning interaction effects involving implementation stages and components, effectiveness, and sustainability.

To address some of these issues, the present study builds on a sequential, stage-based conceptualization of the innovation adoption and implementation process. As presented in more detail elsewhere (Simpson, 2002; Simpson & Flynn, this issue), the TCU Program Change Model postulates four key stages: training, adoption, implementation, and practice. Factors that influence each stage are separated into those related to the innovation per se and those related to the general organizational context into which the innovation is being incorporated. With respect to the innovation, evidence suggests that training must be relevant to needs as perceived by staff and must be delivered competently. Adoption represents a decision by program leadership (preferably with staff participation) to try the innovation out, based on expectations about its potential use and benefits. Implementation is the broader “field test” of its effectiveness, feasibility, and sustainability. If the innovation passes this test, it is likely to become incorporated into regular practice. However, organizational functioning (measured as collective staff perceptions) also influences the individual stages of this process. It encompasses program motivation and readiness for change, resource allocations, staff attributes, and organizational climate.

The series of studies reported in this journal volume relies on a variety of data sets from multiple treatment agencies to examine sequential elements in the process of innovation adoption and implementation. Collectively, these studies show that a survey of Program Training Needs (PTN; Rowan-Szal, Greener, Joe, & Simpson, this issue) provides reliable insights into staff perceptions about how their program is operating and what might be improved. The PTN therefore can serve as a strategic planning tool and is related to core elements of a more comprehensive appraisal of organizational functioning that can be obtained using the Organizational Readiness for Change (ORC; Lehman et al., 2002) assessment. The ORC is related to client performance in treatment (Broome, Flynn, Knight, & Simpson, this issue; Greener, Joe, Simpson, Rowan-Szal, & Lehman, this issue) and has utility for predicting program readiness to adopt innovations (Fuller et al., this issue; Joe, Broome, Simpson, & Rowan-Szal, this issue; Saldana, Chapman, Henggeler, & Rowland, this issue). Not surprisingly, more favorable counselor ratings of workshop training predict subsequent implementation of the training materials (Bartholomew, Joe, Rowan-Szal, & Simpson, this issue). Implementation of innovations by individual counselors is also associated with staff attributes measured by the ORC (reflecting professional confidence and influence), whereas program-wide implementation of innovations depends on a better organizational climate, more resources, and more professional growth opportunities for staff (Joe, Simpson, & Broome, 2006).

1.1. Study aims

The studies mentioned above focus on specific segments of the innovation adoption process and are limited to addressing questions about data collected from one or two adjacent time periods. Most of the studies, however, are part of longitudinal projects that will add more waves of data collection over time as they mature. Only one of the data sets they used currently contains linked, longitudinal records from programs collected over a 2-year interval. Although the number of programs it includes is too small for applying some multivariate analytic techniques to examine program-level differences, the data were adequate for exploring several straightforward aspects of the innovation adoption process.

Building on the proximal relationships between adjacent stages of the adoption and implementation process reported in this volume, the present study broadened its focus to examine interrelationships across a longer timeline and more distal responses to training. Four general questions were asked. First, are staff perceptions about training needs and program readiness related to subsequent staff evaluations of training and to adoption of innovations? Second, is the level of organizational functioning in programs related to staff responses to subsequent training? Third, are staff ratings of workshop training quality and adoption related to subsequent client ratings of treatment engagement? And fourth, are there long-term (e.g., 2-year) changes in staff ratings of program training needs and readiness that are related to intervening training experiences?

2. Methods

2.1. Procedures for data collection

Data for this study were collected between January 2002 and February 2004. The subject pool consisted of clinical supervisors, counseling staff, and clients from a statewide sample of publicly funded substance abuse treatment programs. The programs were affiliated with a state office of drug and alcohol services that sought assistance from the Institute of Behavioral Research at Texas Christian University (TCU) and the regional Gulf Coast Addiction Technology Transfer Center (GCATTC) to assess and respond to the training needs of its treatment workforce. Each participating treatment unit was asked to administer a package of forms to be completed by the clinical supervisors and staff of that program. Methods and procedures for collecting these forms followed protocols approved by the Institutional Review Board at Texas Christian University. Participation was voluntary, and a passive consent procedure was used: completing an assessment and returning it to TCU in the attached self-addressed, stamped envelope implied consent to participate.

In January 2002, 330 Program Training Needs (PTN) assessment forms were sent to 59 substance abuse treatment units, and 253 forms were returned (77%). Two years later, in February 2004, a follow-up PTN survey was administered; 330 PTN forms were mailed and 192 were returned (58%). The TCU Organizational Readiness for Change (ORC) assessment was first administered in October 2002 using data collection procedures similar to those for the PTN, yielding 191 completed staff surveys (an overall return rate of about 58%), or an average of approximately three ORCs per treatment unit. These return rates are comparable to, or higher than, the 56% to 64% rates generally reported in the organizational literature for employees surveyed by mail (Schneider, Parkington, & Buxton, 1980; Schneider, White, & Paul, 1998).

A package of Client Evaluation of Self and Treatment (CEST) assessment forms was also sent to each treatment unit (see Joe, Broome, Rowan-Szal, & Simpson, 2002). Typically, treatment units received up to 100 CESTs to be administered to a sequential sample of clients as they presented for treatment services. The CESTs were administered 4 months before the training conference and were designed to be completed within a few weeks, during the same period in which staff completed the ORC assessments; of 1,900 CEST forms mailed, 1,147 were returned (60%). The ORC (174 forms) and CEST (1,015 forms) were repeated at each program about 9 months after the workshop training conference described below.
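The return rates and the per-unit ORC average reported above follow from simple ratios of the reported counts. The minimal Python sketch below reproduces them; the ORC return rate is omitted because the number of ORC forms distributed is not reported, and dividing the 191 ORCs by all 59 units is an assumption about the denominator.

```python
# Reported counts from the text: (forms returned, forms distributed).
REPORTED_COUNTS = {
    "PTN, January 2002": (253, 330),
    "PTN, February 2004": (192, 330),
    "CEST, Time 1": (1147, 1900),
}

def return_rate(returned: int, distributed: int) -> float:
    """Return rate as a percentage of forms distributed."""
    return 100.0 * returned / distributed

for survey, (returned, sent) in REPORTED_COUNTS.items():
    print(f"{survey}: {return_rate(returned, sent):.0f}%")

# Average ORCs per unit, assuming all 59 treatment units as the denominator.
orc_per_unit = 191 / 59
print(f"ORCs per treatment unit: {orc_per_unit:.1f}")
```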

2.1.2. Workshop training conference

A state-sponsored training conference was held at the end of January 2003, planned in part on the basis of the PTN survey results from the previous year. The 3-day conference was attended by 253 counselors from publicly funded agencies in the state. It focused on key issues identified by program directors and staff in the training needs assessment, with training sessions on improving counseling skills (therapeutic alliance and client engagement), working with dually diagnosed clients, and working with adolescents and their families (Rowan-Szal, Joe, Greener, & Simpson, 2005). As described in more detail by Rowan-Szal et al. (2005), one of the primary workshop sessions addressed improving therapeutic alliance and treatment engagement (Scott Miller, Ph.D., “Heart and Soul of Change: What Works in Therapy”).

For this workshop on Improving Therapeutic Alliance (TA), participants received a full day of training. In the months following the conference, a “booster” session was also offered regionally to review the workshop material and the clinical strategies it recommended. Voluntary workshop-specific evaluation surveys of this training were collected from participants at two time points. Workshop Evaluation (WEVAL) surveys were collected at the close of training from 293 participants who attended the workshop. Six months later, Workshop Assessment Follow-up (WAFU) forms were mailed to each person who had attended the workshop. Both the WEVAL and the WAFU questionnaires included a 4-character anonymous linking code (first letter of the mother's first name, first letter of the father's first name, first digit of the Social Security number, and last digit of the Social Security number) that allowed these forms to be cross-linked. Workshop-specific evaluations of training and adoption actions at follow-up (about 6 months later) are described in more detail below and are a key focus of this study.
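The cross-linking of WEVAL and WAFU forms can be sketched as follows. This is a hypothetical illustration rather than the authors' procedure: the function names, the uppercasing of initials, the stripping of dashes from the SS number, and the rule of leaving codes that occur more than once unlinked are all assumptions.

```python
from collections import Counter

def linking_code(mother_first_name: str, father_first_name: str, ssn: str) -> str:
    """Build the 4-character code: mother's initial, father's initial,
    first and last digits of the SS number (uppercasing is an assumption)."""
    digits = [ch for ch in ssn if ch.isdigit()]
    mother, father = mother_first_name.strip(), father_first_name.strip()
    if not digits or not mother or not father:
        raise ValueError("names and SS number must be non-empty")
    return (mother[0] + father[0]).upper() + digits[0] + digits[-1]

def link_forms(weval, wafu):
    """Match (code, record) pairs from WEVAL and WAFU on the linking code.

    Hypothetical rule: a code appearing more than once in either set is
    treated as ambiguous and left unlinked.
    """
    weval_counts = Counter(code for code, _ in weval)
    wafu_counts = Counter(code for code, _ in wafu)
    weval_unique = {code: rec for code, rec in weval if weval_counts[code] == 1}
    wafu_unique = {code: rec for code, rec in wafu if wafu_counts[code] == 1}
    shared = weval_unique.keys() & wafu_unique.keys()
    return {code: (weval_unique[code], wafu_unique[code]) for code in shared}

print(linking_code("Mary", "John", "123-45-6789"))  # MJ19
```

Because the code has only four characters, collisions between different trainees are possible, which is why the sketch refuses to link duplicated codes instead of guessing.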

2.1.3. Data timelines and linkages across instruments

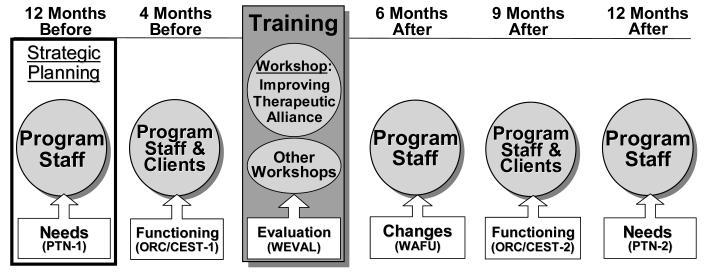

Using the workshop training as the central reference point, Figure 1 shows that data collection began 12 months earlier, using a survey of Program Training Needs (PTN) to gauge staff perceptions of training needs and readiness. Staff-level and client-level assessments (the Organizational Readiness for Change [ORC] and the Client Evaluation of Self and Treatment [CEST], respectively) were completed 4 months before workshop training (Time 1). The Workshop Evaluation (WEVAL) was completed by participating staff at the end of each workshop training session; about 6 months later, they were contacted again and asked to complete a Workshop Assessment Follow-up (WAFU) for the training sessions they attended. To reexamine staff and client functioning 9 months after training, the ORC and CEST were repeated at each program (Time 2). Finally, the PTN was repeated as a staff survey 12 months after workshop training (i.e., about 2 years after its initial administration).
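The schedule can be encoded as month offsets from the workshop, which makes the lags between linked measures explicit (for example, 24 months between the two PTN administrations and 3 months between the WAFU and the Time 2 CEST). The dictionary below is an illustrative sketch; the names are hypothetical and the offsets are the approximate values given in the text.

```python
# Measurement occasions in months relative to the TA workshop (month 0),
# following the Figure 1 description. Offsets are approximate.
SCHEDULE = {
    "PTN (Time 1)": -12,
    "ORC and CEST (Time 1)": -4,
    "WEVAL": 0,
    "WAFU": 6,
    "ORC and CEST (Time 2)": 9,
    "PTN (Time 2)": 12,
}

def lag_months(earlier: str, later: str) -> int:
    """Months elapsed between two measurement occasions."""
    return SCHEDULE[later] - SCHEDULE[earlier]

for occasion, month in sorted(SCHEDULE.items(), key=lambda item: item[1]):
    print(f"{month:+4d}  {occasion}")

print(lag_months("PTN (Time 1)", "PTN (Time 2)"))   # 24
print(lag_months("WAFU", "ORC and CEST (Time 2)"))  # 3
```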

Figure 1.

Data collection schedule for study of questions related to training needs, responses and impact.

2.1.4. Participants

Substance abuse treatment counselors were the primary respondents for most of the assessments. Based on demographic information from the initial statewide sample of 253 counselors at 59 programs that attended the workshop training, staff were 65% Caucasian, 30% African American, and 5% other; 66% were female, and their average age was 45 years. Among the 1,147 clients from these programs who provided self-report data, 64% were Caucasian and 30% were African American; their average age was 36 years, and 67% were male.

2.2. Instruments

2.2.1. Program Training Needs (PTN) assessment

The TCU Program Training Needs (PTN) assessment includes 54 items organized into seven content domains: Program Facilities and Climate, Program Computer Resources, Staff Training Needs, Preferences for Training Content, Preferences for Training Strategy, Training Barriers, and Satisfaction with Training (Rowan-Szal et al., this issue). Collectively, this information is intended to help guide overall training efforts and to predict the types of innovations that participating programs are most likely to seek out and adopt. Four scales were selected for this study: Program Facilities and Climate (7 items, alpha = .79), Program Computer Resources (5 items, scored as a summative index ranging from 0 to 5 rather than as a composite scale), Training Barriers (10 items, alpha = .83), and previous Satisfaction with Training (4 items, alpha = .75). Items use a 5-point Likert agree-disagree response format; responses are averaged within scales and then multiplied by 10. Scale scores therefore range from 10 to 50, with scores above 30 indicating overall agreement (higher scores indicate stronger agreement, and lower scores indicate stronger disagreement).
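The scoring rule for the Likert scales can be written as a short function. This sketch assumes items are coded 1 (strongest disagreement) to 5 (strongest agreement), with no reverse-keyed items and no missing responses; the text does not say how either case is handled. The same averaging-times-10 rule is described for the ORC, CEST, and WEVAL scales.

```python
from statistics import mean

def scale_score(responses):
    """Average 5-point Likert responses (1-5) for one scale and multiply by 10.

    The result ranges from 10 (all 1s) to 50 (all 5s); 30 is the midpoint.
    """
    if not responses:
        raise ValueError("at least one item response is required")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be on the 1-5 Likert scale")
    return float(mean(responses)) * 10

def indicates_agreement(score: float) -> bool:
    """Scores above 30 indicate overall agreement with the scale's items."""
    return score > 30

# Hypothetical responses to a 7-item scale such as Facilities and Climate.
score = scale_score([4, 4, 3, 5, 4, 3, 4])
print(round(score, 1), indicates_agreement(score))  # 38.6 True
```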

2.2.2. Organizational Readiness for Change (ORC) assessment

The TCU Organizational Readiness for Change (ORC) assessment of organizational needs and functioning focuses on organizational traits related to program change (Lehman et al., 2002). It includes 18 scales from four major domains: needs and pressures, resources, staff attributes, and climate. Needs and pressures (motivation for treatment) factors include program needs, training needs, and pressures for change, while program resources are evaluated with respect to office facilities, staffing, training, equipment, and Internet access. Organizational dynamics include scales on staff attributes (growth, efficacy, influence, adaptability, and clinical orientation) and program climate (mission, cohesion, autonomy, communication, stress, and flexibility for change). The ORC scales are useful indicators of global strengths and weaknesses in the measured constructs and help identify potential areas for programs to address. Only the organizational climate scales were examined in the present study: clarity of program mission (5 items, alpha = .74), staff cohesiveness (6 items, alpha = .88), staff autonomy (5 items, alpha = .56), communication (5 items, alpha = .83), stress (4 items, alpha = .82), and openness to change (5 items, alpha = .73). To compute scale scores, counselor ratings on 5-point Likert-type items (agree-disagree) are averaged within each scale and then multiplied by 10. Scale scores range from 10 to 50, with scores above 30 indicating overall agreement (and higher scores indicating stronger agreement).
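The alpha coefficients reported for each scale are internal-consistency estimates. The text does not show how they were computed; the sketch below applies the standard Cronbach's alpha formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), to a respondents-by-items matrix of hypothetical data.

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondent rows, each holding k item scores.

    Population variances are used throughout; because the same divisor appears
    in the numerator and denominator, sample variances give the same result.
    """
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("need at least two items and equal-length rows")
    item_variances = [pvariance(column) for column in zip(*rows)]
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

# Perfectly consistent items give alpha = 1; uncorrelated items give alpha = 0.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))          # 1.0
print(cronbach_alpha([[1, 2], [2, 1], [1, 1], [2, 2]]))  # 0.0
```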

2.2.3. Client Evaluation of Self and Treatment (CEST) assessment

The Client Evaluation of Self and Treatment (CEST) includes 14 scales representing client motivation and readiness for treatment, psychological and social functioning, and treatment engagement (Joe et al., 2002). The present study included only the three treatment engagement scales. Counseling rapport (13 items, alpha = .92) reflects client perceptions of core areas of therapeutic relationship with treatment counselors such as mutual goals, trust, and respect. Treatment participation (12 items, alpha = .86) summarizes client perceptions of their own involvement and active engagement in treatment sessions and services. Treatment satisfaction (7 items, alpha = .81) indicates how well clients feel the treatment program is meeting their needs. Scale scores are calculated based on client self-administered ratings using 5-point Likert-type items (agree-disagree). Responses on items for each scale are averaged and then multiplied by 10. Mean scores for scales range from 10 to 50, with scores above 30 indicating overall agreement (and higher scores indicating stronger agreement).

2.2.4. Workshop evaluation (WEVAL) form

The Workshop Evaluation (WEVAL) form has two parts (Bartholomew et al., this issue). The first part (22 items) focuses on staff responses to training in general immediately after its completion, collecting information on (1) satisfaction with training, (2) resources available at programs, (3) desire for more training, and (4) perceived organizational support for using the training materials. The second part is a 12-item trailer with questions specific to each workshop attended (e.g., effectiveness of the topics covered and intentions to use specific strategies presented).

The present study focused only on the evaluations of the workshop for “improving therapeutic alliance.” General evaluation items represent three factors: relevance, engagement, and support. Relevance (4 items, alpha = .82) indicates the extent to which trainees judged the innovation to fit with, and be useful in addressing, client needs. Engagement (3 items, alpha = .88) is based on trainee interest in further training and in inviting colleagues to become involved. Support (3 items, alpha = .80) refers to attitudes about the adequacy of program resources and staff to actually use the innovation. The 12 items rating the specific workshop are highly intercorrelated, yielding a single composite scale for workshop quality (alpha = .95). These items address perceived effectiveness, usefulness, expectation of use, and endorsement of specific ideas about the innovation as presented by the workshop trainer (for the present study, the innovation for improving therapeutic alliance). Ratings were completed at the end of workshop training using 5-point Likert scales (agree-disagree) for each item; they are averaged within each scale and multiplied by 10, yielding scale scores ranging from 10 to 50.

2.2.5. Workshop assessment follow-up (WAFU) form

The 14-item Workshop Assessment Follow-Up (WAFU) was completed by workshop trainees several months later to address questions about innovation adoption (Bartholomew et al., this issue). It contained a 6-item section on post-training satisfaction with, and adoption of, workshop materials and an 8-item inventory of barriers to use, plus a 12-item addendum on the booster session. The satisfaction items were (1) How satisfied were you with the training provided? (2) Have you used any of the ideas or materials from the workshop? (3) If so, how useful were they? (4) Have you recommended or discussed them with others? (5) Do you expect to use these materials in the future? and (6) Are you interested in further, more specialized training?

Training reactions at follow-up are represented by scales for post-training satisfaction (6 items, alpha = .89) and experiences in trial use of workshop materials (4 items, alpha = .90). In addition, trainees who participated in booster training during the 2 to 3 months following the workshop were asked to complete a 12-item addendum to the WAFU addressing its usefulness and perceived effects on clinical practice. The two addendum scales used in the present study reflect attitudes about developing better skills as a counselor (5 items, alpha = .86) and better rapport with clients (3 items, alpha = .83) as a result of the training. As with the other forms, trainee ratings use 5-point Likert scales (agree-disagree) for each item.

2.3. Analyses

This study uses portions of the same data files and measures reported by Rowan-Szal et al., Bartholomew et al., and Joe, Broome, Simpson, and Rowan-Szal in this journal volume. Because data collection extended over a 2-year period, sample sizes fluctuated and respondents were not the same over time. Respondents were therefore matched to their respective treatment program units, and data were analyzed at the treatment unit level. The number of treatment units included varied across analyses because some programs were missing data on some variables. The research questions addressed in this study are based on the data sources summarized in Figure 1.
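The unit-of-analysis step can be sketched as follows: average each respondent's scale score within program, then correlate program-level means only for programs with data on both measures (pairwise deletion), which is why n changes from one analysis to the next. This is an illustrative sketch with made-up program IDs and scores; the authors' software and exact missing-data handling are not described.

```python
import math
from collections import defaultdict
from statistics import mean

def program_means(records):
    """Average individual respondents' scale scores within each treatment program.

    records: iterable of (program_id, score); None marks a missing score.
    """
    by_program = defaultdict(list)
    for program_id, score in records:
        if score is not None:
            by_program[program_id].append(score)
    return {pid: mean(scores) for pid, scores in by_program.items()}

def paired_programs(a, b):
    """Keep programs present in both program-level dicts (pairwise deletion)."""
    shared = sorted(a.keys() & b.keys())
    return [a[p] for p in shared], [b[p] for p in shared]

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical counselor-level data; respondents differ across occasions.
ptn = program_means([("A", 35.0), ("A", 39.0), ("B", 30.0), ("C", 28.0), ("C", None)])
wafu = program_means([("A", 42.0), ("B", 36.0), ("C", 33.0), ("D", 40.0)])
x, y = paired_programs(ptn, wafu)  # program D has no PTN data and is dropped
print(len(x), round(pearson_r(x, y), 3))
```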

Each of the four questions is addressed primarily with a set of straightforward cross-sectional analyses, including bivariate comparisons based on Pearson correlation coefficients. One way to judge the strength of a correlation is to express it as an “effect size” (Cohen, 1988). Using Rosenthal's (1991) transformation between the correlation coefficient and Cohen's effect size measure (d), a small effect size (d = .20) corresponds to a correlation coefficient of .10, a medium effect size (d = .50) to approximately .24, and a large effect size (d = .80) to approximately .37. Given the sample sizes available in this study, a correlation generally needed to reflect a medium to large effect size to reach statistical significance.
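Rosenthal's conversion between d and r is r = d / sqrt(d² + 4), with inverse d = 2r / sqrt(1 − r²). The short Python sketch below reproduces the small, medium, and large benchmark values quoted above.

```python
import math

def d_to_r(d: float) -> float:
    """Convert Cohen's d to a correlation: r = d / sqrt(d**2 + 4)."""
    return d / math.sqrt(d * d + 4.0)

def r_to_d(r: float) -> float:
    """Inverse conversion: d = 2r / sqrt(1 - r**2)."""
    return 2.0 * r / math.sqrt(1.0 - r * r)

for label, d in [("small", 0.20), ("medium", 0.50), ("large", 0.80)]:
    print(f"{label}: d = {d:.2f} -> r = {d_to_r(d):.2f}")
```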

3. Results

3.1. Are program needs and readiness for training related to responses to training?

Because the initial staff survey of program needs and training readiness (PTN-Time 1) was used in part to help plan the statewide training conference being studied here, it was expected that these ratings of program needs would be related to staff reactions to the training. The first set of bivariate analyses for this study therefore focused on the relationships of PTN measures of previous satisfaction with training, program facilities and climate, program computer resources, and training barriers with staff evaluations of training (based on WEVAL measures for training relevance, engagement, agency support, and workshop quality) and indicators for adoption of materials after training (based on WAFU staff ratings of post-training satisfaction, trial use, better counseling skills, and better client rapport).

Program-level scores (i.e., average ratings from counselors at each treatment program) on the PTN scales were significantly correlated, as expected, with workshop training and adoption indicators. More favorable staff ratings of satisfaction with previous training experiences were associated with higher levels (based on WEVAL scales) of perceived agency support (r = .36, p < .01, n = 51), engagement in the training (r = .31, p < .05, n = 51), and overall ratings of workshop quality (r = .29, p < .05, n = 51). Program training barriers were also significantly related to indicators of innovation adoption (taken from the WAFU ratings): counselors at programs where training barriers were regarded as lower gave more favorable ratings of post-training satisfaction (r = −.33, p < .05, n = 46) and trial use of the innovations (r = −.33, p < .05, n = 46). Although not statistically significant, staff ratings on the other adoption indicators were likewise all positively related to past training experiences and program support. These results reveal a consistent pattern of relationships between staff ratings on the PTN and their reactions to training several months later.
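Whether a program-level correlation reaches significance depends heavily on the number of programs. As a rough check, the reported coefficients can be tested with Fisher's z approximation, z = atanh(r) × sqrt(n − 3). This is an approximation to the exact t-test on n − 2 degrees of freedom conventionally used for Pearson's r, and the text does not state which test the authors used.

```python
import math
from statistics import NormalDist

def approx_p_for_r(r: float, n: int) -> float:
    """Approximate two-tailed p-value for H0: rho = 0 using Fisher's z.

    z = atanh(r) * sqrt(n - 3) is referred to a standard normal distribution.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))

# Coefficients and program counts reported in the text.
reported = [
    ("agency support", 0.36, 51),
    ("engagement in training", 0.31, 51),
    ("workshop quality", 0.29, 51),
    ("training barriers vs. post-training satisfaction", -0.33, 46),
]
for label, r, n in reported:
    print(f"{label}: r = {r:+.2f}, n = {n}, approx. p = {approx_p_for_r(r, n):.3f}")
```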

3.2. Is organizational functioning related to program adoption of training?

Organizational climate measures for programs (ORC-Time 1) were expected to be related to staff progress in subsequently adopting innovations (WAFU scales). Indeed, as shown in Table 1, higher ORC scores for clarity of program mission and openness to change were related to more favorable staff ratings of post-training satisfaction and trial use of the innovation. ORC climate scales (including clarity of mission, staff cohesion, communication, and openness to change) were also predictive of staff perceptions that the training had led to better counseling skills and better rapport with clients.

Table 1.

Correlations of Organizational Functioning (ORC) Measures at Time 1 with Innovation Adoption at Follow-up (by Programs Participating in the Therapeutic Alliance Workshop)

| ORC Scales (Time 1) | N | Post-training Satisfaction | Trial Use | Better Counselor Skills | Better Therapeutic Alliance and Rapport |
|---|---|---|---|---|---|
| Mission | 42 | .40** | .39* | .38* | .34* |
| Cohesiveness | 42 | .07 | .08 | .29† | .34* |
| Autonomy | 42 | .28† | .28† | .22 | .21 |
| Communication | 42 | .25 | .27† | .29† | .31* |
| Stress | 42 | .21 | .19 | .12 | −.01 |
| Openness to Change | 42 | .32* | .33* | .33* | .40* |

Note. Column measures are from the TA Workshop Assessment Follow-up (WAFU). † p < .10; * p < .05; ** p < .01.

3.3. Is program adoption of training materials related to client engagement?

If program staff make progress in adopting an innovation and consider that it has improved the quality of their counseling (WAFU scales), this progress might also be expected to be associated with indicators of client functioning. This expectation was particularly reasonable in this study because the training innovation was designed for “improving therapeutic alliance and treatment engagement.” Self-ratings from samples of clients were collected from participating programs about 9 months after workshop training (CEST-Time 2), which was 3 months after counselors rated their progress in adopting workshop materials. Results presented in Table 2 show that clients treated at programs with higher counselor ratings of post-training satisfaction and trial use gave higher ratings of rapport with their counselors and reported greater participation in treatment; at programs with higher counselor ratings of developing better skills, clients also reported greater treatment participation. These client ratings were not returned to or seen by the clients' individual counselors, and efforts were made to emphasize the confidentiality of the client assessments.

Table 2.

Correlations of Program-Level Innovation Adoption Measures with Client Engagement (CEST) Measures at Time 2

| Therapeutic Alliance Workshop (WAFU) Measures | N | Treatment Satisfaction | Counselor Rapport | Treatment Participation |
|---|---|---|---|---|
| Post-training satisfaction | 32 | .27 | .37* | .51** |
| Trial use | 32 | .27 | .35* | .50** |
| Better counselor from improved skills | 32 | .10 | .26 | .36* |
| Better therapeutic alliance and rapport | 32 | −.01 | .08 | .21 |

Note. Column measures are client (CEST) ratings at Time 2. * p < .05; ** p < .01.

3.4. Do program needs change over time and are they related to innovation adoption?

If the statewide training conference was adequately planned and conducted to address the training needs of programs, changes might be expected over time in the way program staff view these needs. The PTN was re-administered to program staff after 2 years, with the training conference occurring at the midpoint of this interval. Comparisons showed significant improvements from Time 1 to Time 2 in staff ratings of program computer resources (Time 1 mean = 27.3, Time 2 mean = 32.8; F(1, 53) = 36.10, p < .0001) and, importantly, in satisfaction with previous training experiences (Time 1 mean = 33.2, Time 2 mean = 36.1; F(1, 53) = 11.81, p < .002). The latter finding is pertinent because it indicates a significant increase in satisfaction with training experiences from before to after the 2003 statewide training conference.
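The text reports F(1, 53) for the Time 1 versus Time 2 comparisons but does not name the model. One standard possibility, assumed here and not confirmed by the source, is a repeated-measures comparison over programs with PTN data at both occasions. With two occasions, the repeated-measures F(1, n − 1) equals the square of the paired t statistic, so df = (1, 53) would correspond to 54 paired programs. The sketch below uses hypothetical program means.

```python
import math
from statistics import mean, stdev

def paired_t(time1, time2):
    """Paired t statistic for per-program scores at two occasions; returns (t, df)."""
    if len(time1) != len(time2) or len(time1) < 2:
        raise ValueError("need two equal-length lists with at least two programs")
    diffs = [b - a for a, b in zip(time1, time2)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical program-level means for one PTN scale at Time 1 and Time 2.
time1 = [27.0, 30.0, 25.0, 28.0]
time2 = [33.0, 34.0, 30.0, 31.0]
t, df = paired_t(time1, time2)
f_stat = t ** 2  # two-occasion repeated-measures F with df = (1, df)
print(f"t({df}) = {t:.2f}, F(1, {df}) = {f_stat:.2f}")
```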

Second, staff perceptions of program training needs at follow-up (PTN-Time 2) should be influenced by training experiences during the previous year. As predicted, and as presented in Table 3, program training barriers (in PTN-2) were regarded as less severe in programs where staff felt the training materials were more relevant, program support was stronger, and counselor engagement in training was higher. The set of indicators on subsequent adoption of training was an even stronger predictor of staff ratings of program needs at Time 2. That is, higher counselor ratings of post-training satisfaction, trial use, and development of better counseling skills and rapport with clients (taken from the WAFU) were followed by higher scores on program facilities and climate, higher ratings of program computer resources, and lower levels of training barriers.

Table 3.

Correlations of Quality of Training Measures and Innovation Adoption Measures with Program Training Needs (PTN) Composites at Time 2

| Therapeutic Alliance (TA) Workshop Measures | N | Program Facilities and Climate | Program Computer Resources | Training Barriers |
|---|---|---|---|---|
| Quality of Training: TA workshop | | | | |
| Relevance | 54 | .23† | .14 | −.32* |
| Support | 54 | .33* | .18 | −.37** |
| Engagement | 54 | .14 | .11 | −.27* |
| Adoption: TA workshop | | | | |
| Post-training Satisfaction | 46 | .52*** | .38** | −.37** |
| Trial Use | 46 | .52*** | .39** | −.39** |
| Better counselor from improved skills | 46 | .50*** | .53*** | −.37* |
| Better therapeutic alliance and rapport | 46 | .51*** | .48*** | −.35* |

Note. † p < .10; * p < .05; ** p < .01.
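The significance markers in Table 3 depend on both r and N. The sketch below reproduces them approximately. `r_to_t` gives the exact t statistic (df = N - 2) for testing a zero population correlation. The p value is approximated with Fisher's z transformation so that only the Python standard library is needed; an exact p would use the t distribution. The p < .001 cutoff for the three-asterisk marker is an assumption, because the table notes define only †, * and **. Near a cutoff, the approximation and the rounding of r can yield a different marker from the one printed. All names here are hypothetical.

```python
import math

def r_to_t(r, n):
    """Exact t statistic (df = n - 2) for H0: rho = 0, given a sample r."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)

def fisher_p(r, n):
    """Approximate two-tailed p for H0: rho = 0 via Fisher's z transform."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

# (threshold, marker) pairs from the Table 3 notes. The p < .001 row is an
# ASSUMED conventional cutoff; the notes do not define that marker.
MARKERS = [(0.001, "***"), (0.01, "**"), (0.05, "*"), (0.10, "\u2020")]

def marker(r, n):
    """Return the significance marker for r at sample size n, or ""."""
    p = fisher_p(r, n)
    for threshold, symbol in MARKERS:
        if p < threshold:
            return symbol
    return ""

for r, n in [(0.23, 54), (0.14, 54), (-0.32, 54), (0.52, 46)]:
    print(f"r = {r:+.2f}, N = {n}: t({n - 2}) = {r_to_t(r, n):.2f} {marker(r, n)}")
```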

3.5. Are responses to innovation training affected by “moderator variables”?

It is often important to consider the potential effects of “moderator variables” in observational studies of change in natural settings (James & Brett, 1984; Tucker & Roth, 2006). It was reported above, for instance, that long-range changes occurred in staff ratings of program needs (i.e., based on comparisons of PTN-1 and PTN-2 data collected over a 2-year period). Some of the changes over time were found to be “moderated” by experiences related to staff training. Namely, programs in which staff became more engaged in training and reported greater benefits from adopting the innovation were later rated by staff as having fewer barriers and more positive climate and available resources.

Moderator effects generally indicate there are interactions between factors that influence the change process. In the present study, it seemed useful to examine program-level adoption actions (measured by the WAFU scales) in relation to organizational climate (ORC-1 scales) and staff perceptions about the quality of training (WEVAL scales). In the program change process as described by Simpson and Flynn (this issue), it is postulated that the stages of training, adoption decision, and adoption actions are subject to influences from staff and organizational attributes.
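Moderation can be written as a worked contrast for a 2 × 2 design in which both predictors are dichotomized into high and low, as described in the next paragraph. The symbols are introduced here for illustration only. Let R be training relevance, O be openness to change, and Ȳ be a cell's mean adoption score. The hypothesis is then that the simple effect of R differs across levels of O:

$$
\begin{aligned}
\Delta_{O=\text{high}} &= \bar{Y}_{R=\text{high},\,O=\text{high}} - \bar{Y}_{R=\text{low},\,O=\text{high}} \\
\Delta_{O=\text{low}} &= \bar{Y}_{R=\text{high},\,O=\text{low}} - \bar{Y}_{R=\text{low},\,O=\text{low}} \\
\text{Interaction} &= \Delta_{O=\text{high}} - \Delta_{O=\text{low}}
\end{aligned}
$$

In a 2 × 2 design, the R × O term of a 2-way ANOVA tests whether this interaction contrast differs from zero. The moderator pattern hypothesized here would show a sizable Δ among high-openness programs and a Δ near zero among low-openness programs.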

To examine these potential effects in the present study, the organizational climate scale for “openness to change” (from ORC-1) and the training score indicating “relevance” of the innovation (from WEVAL) were selected as independent variables because both predict adoption actions at follow-up. These predictor scores were converted into dichotomous measures that defined high versus low programs on each measure, and a 2-way analysis of variance was then conducted. It tested specifically whether program environment (openness to change) moderates the relationship of training opinions (relevance of the innovation) to adoption actions at follow-up (WAFU). Using a reduced sample of 43 programs for which these data were available, results supported the moderator hypothesis. That is, a priori t-tests for high versus low relevance within levels of openness to change showed that among programs higher on openness to change, the subset with higher staff ratings on training relevance reported significantly higher post-training satisfaction [mean1 = 3.85, mean2 = 4.36, (t = 2.16, p < .04)], trial use [mean1 = 3.75, mean2 = 4.30, (t = 2.10, p < .05)], and development of better skills [mean1 = 3.24, mean2 = 3.95, (t = 2.48, p < .02)] at follow-up. Within the group of programs reporting low openness to change, however, staff ratings for relevance of the training were unrelated to progress in adopting the innovation. These findings suggest that unless a program has an open atmosphere that accommodates new ideas for treatment, counselor interest and enthusiasm for making innovative changes are unlikely to be realized.
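The within-level comparisons described above can be sketched as follows. Each predictor is dichotomized, and high- and low-relevance programs are then compared separately within each level of openness to change. The section does not say how the cut points were chosen, so the median split is an assumption. The pooled-variance Student t also stands in for whatever error term the authors' a priori tests used. Function names and data are hypothetical.

```python
import math
from statistics import mean, median, variance

def median_split(values):
    """Label each score "high" (above the sample median) or "low" (otherwise).

    ASSUMPTION: the text does not state how predictors were dichotomized;
    a median split is used here only for illustration.
    """
    cut = median(values)
    return ["high" if v > cut else "low" for v in values]

def pooled_t(low_group, high_group):
    """Student's two-sample t with pooled variance; returns (t, df).

    A positive t means the high group has the larger mean.
    """
    n1, n2 = len(low_group), len(high_group)
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * variance(low_group) + (n2 - 1) * variance(high_group)) / df
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (mean(high_group) - mean(low_group)) / se, df

def simple_effects(openness, relevance, outcome):
    """High- vs low-relevance t test within each level of openness to change."""
    o_level = median_split(openness)
    r_level = median_split(relevance)
    results = {}
    for level in ("high", "low"):
        cell = [(r, y) for o, r, y in zip(o_level, r_level, outcome) if o == level]
        low_r = [y for r, y in cell if r == "low"]
        high_r = [y for r, y in cell if r == "high"]
        results[level] = pooled_t(low_r, high_r)
    return results

# Hypothetical program-level scores for 8 programs (not study data).
openness = [1, 2, 3, 4, 5, 6, 7, 8]
relevance = [1, 5, 2, 6, 3, 7, 4, 8]
adoption = [3.6, 3.6, 3.8, 3.8, 3.8, 4.3, 3.9, 4.4]

for level, (t, df) in simple_effects(openness, relevance, adoption).items():
    print(f"{level} openness: t({df}) = {t:.2f}")
# high openness: t(2) = 7.07
# low openness: t(2) = 0.00
```

This sketch pools variance only within the two cells being compared. Follow-up contrasts in a full 2-way ANOVA usually borrow the error term pooled across all four cells, which gives more degrees of freedom.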

4. Discussion

This study examined longitudinal interrelationships among program-level measures of needs and functioning, workshop training, innovation adoption, client functioning, and follow-up changes in training needs, collected at six separate time points during a 2-year period. The results augment findings from other studies in this special volume by taking a “wide-angle” view of how changes fit together over time, as portrayed in a stage-based program change model (Simpson, 2002; Simpson & Flynn, this issue). Because the interval between training and the follow-up assessment of its utilization was comparatively brief, the primary focus of the study is adoption decisions and actions, not the longer-term implementation stage of the change process (Klein & Knight, 2005).

In general, program needs and training readiness predicted staff responsiveness to workshop training and trial use of innovation materials at follow-up. Better workshop training experiences of counselors also predicted a greater likelihood of adoption and resulting improvements in counseling skills. Organizational factors, however, also play a role in this process. Well-functioning programs – defined by higher scores on clarity of mission, staff cohesion and communication, and openness to change – have a greater likelihood of adopting new ideas for treatment. In the present study, these ideas came from a workshop and follow-up training for improving therapeutic alliance with clients. It was not entirely surprising to find that even when counselors perceived the training as more relevant to their needs and of higher quality, their progress in adopting the materials over the next few months was “moderated” by the organizational atmosphere of the treatment program. Thus, if their program was considered to have low tolerance for change, innovation adoption was unlikely to occur regardless of how well counselors in the program liked the training.

These findings partly address the four conclusions listed earlier, taken from Fixsen et al. (2005), regarding improvements needed in implementation research. Namely, the focus in this study was on a training protocol that seems to have worked. It also addressed multilevel components of the process by examining program needs, organizational functioning, staff attributes, training practices, and barriers to practice. Interaction effects were also examined, showing how organizational climate can moderate staff responses to innovation training. Most importantly, the findings suggest that innovation adoption makes a difference in clinical care. The analyses addressing adoption and program-level client engagement supported the overall process model for change. Factors that influenced adoption of the therapeutic alliance workshop ideas helped counselors see themselves as functioning better in their jobs and raised client self-ratings of treatment participation and rapport with their counselors.

A limiting factor in the analyses involving client-level (CEST) data, however, was the reduced sample of programs available for addressing these relationships. As noted by Greener and associates (this issue) in a related study using client records, this was because the CEST was not successfully administered in all programs participating in the training. Although this reduced the number of programs available for analysis, no systematic biases were apparent that would confound these results.

The reassessment of staff attitudes about program training needs, conducted 2 years later, provided another view of the effects of offering workshop training on topics that counselors consider important. For instance, there were large effect sizes (correlations of .50 and greater; Rosenthal, 1991) for relationships between program climate indicators at follow-up and counselor ratings of progress in innovation adoption obtained more than 6 months earlier. These measures included post-training satisfaction with new counseling ideas, trial usage of those ideas in the months following training, enhancement of counseling skills, and perceived improvements in rapport with clients.
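The “.50 and greater” threshold matches the conventional benchmark for a large correlation. Cohen (1988), also in the reference list, uses r = .10, .30 and .50 for small, medium and large effects. Squaring r gives the proportion of shared variance, so r = .50 corresponds to 25%. The helper below is a hypothetical illustration applied to the climate correlations reported for the four adoption measures in Table 3.

```python
def effect_size_label(r):
    """Label a correlation using Cohen's (1988) conventional benchmarks
    for r: .10 small, .30 medium, .50 large (applied to |r|)."""
    size = abs(r)
    if size >= 0.50:
        return "large"
    if size >= 0.30:
        return "medium"
    if size >= 0.10:
        return "small"
    return "below small"

# Program Facilities and Climate correlations for the adoption rows of Table 3.
for r in (0.52, 0.52, 0.50, 0.51):
    print(f"r = {r:.2f}: {effect_size_label(r)}, shared variance = {r * r:.0%}")
```

These benchmarks are rough conventions, not fixed rules. Cohen presented them as defaults for use when no field-specific standards exist.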

The consistency of these linkages across stages of the overall adoption process is encouraging. As noted by Tucker and Roth (2006), using methodologies that connect measures of this sequential change process with the factors that influence it offers the best chance to advance scientific knowledge about effectively transferring research to practice in natural settings. This study is a good example of the feasibility and promise of such research. But admittedly, it is only a start. It is limited by the need for more systematic information about counselor backgrounds and skill sets, a larger and broader representation of treatment programs, more comprehensive and theoretically focused assessments of innovation training and adoption activities, and better representation of client measures on treatment engagement and outcomes. It is noteworthy in this regard, however, that parallel analyses were conducted in relation to a companion workshop on “dealing with dual diagnosis” at the same statewide training conference, although it was not feasible to report them formally in this article. Training content and protocol were different (e.g., the companion workshop included no follow-up booster sessions), yet the basic findings reflecting the importance of organizational climate and quality of training for adoption were similar. Because the sample of counselors represented in this analysis was somewhat different, this replication of key findings adds confidence to these study results.

The conceptual model used to integrate this research on how programs adopt and implement innovations (Simpson, 2002; Simpson & Flynn, this issue) is being refined and expanded by these results. Its heuristic value lies in helping treatment systems understand the complicated process by which innovations become adopted and implemented, and the factors that influence it. Applying this information to formulate customized plans for improving treatment will hopefully follow. For example, evidence suggests that programs should “plan and prepare” before beginning an innovation training and implementation initiative. If staff assessments reveal barriers or reservations, or if organizational functioning has deficiencies, then the program should first consider addressing its own infrastructure problems before introducing innovation initiatives (e.g., Simpson & Dansereau, in press).

If “systems are go” for training readiness, on the other hand, the focus shifts to innovation training and implementation. Assessments for monitoring distinct stages of this process, using procedures like the ones illustrated in this study, can help track and evaluate progress. Identifying potential training and early adoption problems as they emerge makes it more likely that efficient solutions will be found and applied. In effect, this means refocusing typical expectations from merely “attending or completing” a training session (sometimes related to certification or continuing education requirements) to actively using what was learned. The difficulty of accomplishing this should not be underestimated.

Acknowledgements

The authors thank Mr. Michael Duffy, Director of the Louisiana Office for Addictive Disorders, and his staff for their leadership in planning the statewide training conference in early 2003 and for their collaborative assistance in completing the series of data collection activities needed for this study. Dr. Richard Spence (Director of the Gulf Coast Addiction Technology Training Center) and his associates at the GCATTC provided a crucial partnership in carrying out this long-range evaluation project. Especially important was their assistance in managing portions of the data collection and coordination with the network of treatment programs in Louisiana for sustaining agency participation. We would also like to thank the individual programs (staff and clients) in Louisiana who participated in the assessments and training.

This work was funded by the National Institute on Drug Abuse (Grant R37 DA13093). The interpretations and conclusions, however, do not necessarily represent the position of NIDA or the Department of Health and Human Services. More information (including intervention manuals and data collection instruments that can be downloaded without charge) is available on the Internet at www.ibr.tcu.edu, and electronic mail can be sent to ibr@tcu.edu.


References

- Bartholomew NG, Joe GW, Rowan-Szal GA, Simpson DD. Counselor assessments of training and adoption barriers. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2007.01.005. this issue.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.030. this issue.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Erlbaum; Hillsdale, NJ: 1988.

- Compton WM, Stein JB, Robertson EB, Pintello D, Pringle B, Volkow ND. Charting a course for health services research at the National Institute on Drug Abuse. Journal of Substance Abuse Treatment. 2005;29(3):167–172. doi: 10.1016/j.jsat.2005.05.008.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. University of South Florida; Tampa: 2005. (Louis de la Parte Florida Mental Health Institute Publication #231).

- Fuller BE, Rieckmann T, McCarty D, Nunes EV, Miller M, Arfken C, Edmundson E. Organizational readiness for change and opinions toward treatment innovations. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.026. this issue.

- Greener JM, Joe GW, Simpson DD, Rowan-Szal GA, Lehman WEK. Influence of organizational functioning on client engagement in treatment. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.025. this issue.

- James LR, Brett JM. Mediators, moderators, and tests for mediation. Journal of Applied Psychology. 1984;69:307–321.

- Joe GW, Broome KM, Rowan-Szal GA, Simpson DD. Measuring patient attributes and engagement in treatment. Journal of Substance Abuse Treatment. 2002;22(4):183–196. doi: 10.1016/s0740-5472(02)00232-5.

- Joe GW, Broome KM, Simpson DD, Rowan-Szal GA. Counselor perceptions of organizational factors and innovations training experiences. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.027. this issue.

- Joe GW, Simpson DD, Broome KM. Organizational functioning and staff attributes related to adoption of innovations. 2006 Manuscript submitted for publication.

- Klein KJ, Knight AP. Innovation implementation: Overcoming the challenge. Current Directions in Psychological Science. 2005;14(5):243–246.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22(4):197–209. doi: 10.1016/s0740-5472(02)00233-7.

- Rosenthal R. Meta-analytic procedures for social research. Sage; Newbury Park, CA: 1991.

- Rowan-Szal GA, Greener JM, Joe GW, Simpson DD. Assessing program needs and planning change. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.028. this issue.

- Rowan-Szal GA, Joe GW, Greener JM, Simpson DD. Assessment of the TCU Program Training Needs (PTN) Survey. Poster presentation at the annual Addiction Health Services Research Conference; Santa Monica, CA. Oct, 2005.

- Saldana L, Chapman JE, Henggeler SW, Rowland MD. Assessing organizational readiness for change in adolescent treatment programs. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.029. this issue.

- Schneider B, Parkington JJ, Buxton VM. Employee and customer perceptions of service in banks. Administrative Science Quarterly. 1980;25:252–267.

- Schneider B, White SS, Paul MC. Linking service climate and customer perceptions of service quality: Test of a causal model. Journal of Applied Psychology. 1998;83(2):150–163. doi: 10.1037/0021-9010.83.2.150.

- Simpson DD. Modeling treatment process and outcomes. Addiction. 2001;96(2):207–211. doi: 10.1046/j.1360-0443.2001.9622073.x.

- Simpson DD. A conceptual framework for transferring research to practice. Journal of Substance Abuse Treatment. 2002;22(4):171–182. doi: 10.1016/s0740-5472(02)00231-3.

- Simpson DD. A conceptual framework for drug treatment process and outcomes. Journal of Substance Abuse Treatment. 2004;27(2):99–121. doi: 10.1016/j.jsat.2004.06.001.

- Simpson DD. A plan for planning treatment. Counselor: A Magazine for Addiction Professionals. 2006 August;7(4):20–28.

- Simpson DD, Dansereau DF. Assessing organizational functioning as a step toward innovation. NIDA Science & Practice Perspectives. doi: 10.1151/spp073220. in press.

- Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. Journal of Substance Abuse Treatment. doi: 10.1016/j.jsat.2006.12.023. this issue.

- Tucker JA, Roth DA. Extending the evidence hierarchy to enhance evidence-based practice for substance use disorders. Addiction. 2006;101:918–932. doi: 10.1111/j.1360-0443.2006.01396.x.