Abstract

Bilateral amygdala lesions impair the ability to identify certain emotions, especially fear, from facial expressions. Neuroimaging studies have likewise demonstrated differential amygdala activation as a function of the emotional expression of faces, again especially for fear, even under conditions of subliminal presentation. Yet the amygdala's role in processing emotion from other classes of stimuli remains poorly understood. On the basis of its known connectivity as well as prior studies in humans and animals, we hypothesised that the amygdala would also be important for the recognition of fear from body expressions. To test this hypothesis, we assessed a patient (S.M.) with complete bilateral amygdala lesions who is known to be severely impaired at recognising fear from faces. S.M. completed a battery of tasks involving forced-choice labelling and rating of the emotions in two sets of dynamic body movement stimuli, as well as in a set of static body postures. Unexpectedly, S.M.'s performance was completely normal. We replicated the finding in a second rare subject with bilateral lesions entirely confined to the amygdala. Compared with healthy comparison subjects, neither of the amygdala lesion subjects was impaired in identifying fear from any of these displays. Thus, whatever the role of the amygdala in processing whole-body fear cues, it is apparently not necessary for the normal recognition of fear from either static or dynamic body expressions.

Keywords: Biological motion, Body gestures, Emotion recognition, Point-light

1. Introduction

Bilateral amygdala lesions impair the ability to recognise fear, and to a more variable extent anger and other negatively valenced emotions, from static facial expressions (e.g., Adolphs, Tranel, Damasio, & Damasio, 1994; Adolphs, Tranel, Damasio, & Damasio, 1995; Adolphs et al., 1999; Calder et al., 1996). The amygdala is also activated by fearful facial expressions in neuroimaging studies, although this finding is more variable and appears to be less specific to fear (e.g., Breiter et al., 1996; Morris et al., 1996; Whalen et al., 2001; Winston, O'Doherty, & Dolan, 2003). This activation does not require conscious perception of the emotion (Morris, Öhman, & Dolan, 1999) or even of the face (Jiang & He, 2006; Morris, de Gelder, Weiskrantz, & Dolan, 2001; Williams, Morris, McGlone, Abbott, & Mattingley, 2004). Although other visual stimuli have been less investigated, greater amygdala activation has also been recorded for fearful versus neutral whole-body postures and movements (Atkinson, Heining, & Phillips, submitted; de Gelder, Snyder, Greve, Gerard, & Hadjikhani, 2004; Hadjikhani & de Gelder, 2003). Yet, as with facial expressions, fear is not the only emotion expressed by body movements that activates the amygdala, and fearful body movements do not always activate it (Grèzes, Pichon, & de Gelder, 2007; Peelen, Atkinson, Andersson, & Vuilleumier, in press). Considered together, these findings suggest that the amygdala automatically and rapidly processes emotional information from a broad class of visual stimuli, notably fear from facial expressions but likely also other social visual stimuli, such as body postures and movements.

However, lesion studies are required to demonstrate that the amygdala is necessary for recognising fear from body postures and movements. To date only one study has examined the effect of amygdala damage on emotion recognition from body expressions in the absence of facial expressions. Sprengelmeyer et al. (1999) reported impaired identification of fear from static body postures, faces and voices in a male patient with a bilateral amygdala lesion, relative to the performance of similarly aged neurologically healthy control subjects (a group of 10 in the case of the body posture task). The posture recognition impairment was specific to fear, moderately large (2/10 correct compared with a control group mean of 6.5/10; z-score: −2.37), and not easily attributable to more general perceptual deficits. However, this subject's lesion was not restricted to the amygdala, notably including some damage to the left thalamus; nor did it encompass the entire extent of both amygdalae, the damage to the left amygdala being incomplete and smaller than that to the right. Thus, the impaired recognition of fear from body postures in this subject may not have been entirely a consequence of amygdala damage. Furthermore, Sprengelmeyer et al.'s (1999) study did not examine emotion recognition from moving bodily expressions. The purpose of the present study was to examine more thoroughly whether the amygdala is critical for recognising emotions from a large set of whole-body emotional stimuli, including both moving and static expressions. We tested a subject, S.M., whose brain damage encompasses all of the amygdala bilaterally, and who has been extensively documented to be severely impaired at recognising fear from faces (e.g., Adolphs et al., 1994, 1995, 1999). To replicate the findings and confirm their specificity to the amygdala, we also tested a second subject, A.P., who has bilateral damage encompassing most of the amygdala and confined completely to the amygdala.

2. Methods

2.1. Participants

We tested two women, S.M. and A.P., with extremely rare bilateral amygdala lesions resulting from Urbach-Wiethe disease. Background information, neuroanatomical data, and face and facial emotion recognition abilities for both subjects have been described previously (Adolphs et al., 1994, 1995, 1999; Buchanan, Tranel, & Adolphs, in press) and are summarised in Table 1. Whereas S.M. has complete bilateral amygdala damage, as well as minor damage to anterior entorhinal cortex, A.P. has bilateral damage encompassing approximately 70% of the amygdala, which does not extend beyond the amygdala at all. S.M. was 39 years old when she undertook the first set of tasks (reported as Tasks 3 and 4 in Sections 2.3.3 and 2.3.4) and 40 when she carried out the second set (reported as Tasks 1 and 2 in Sections 2.3.1 and 2.3.2). A.P. was 21 years old at the time of testing.

Table 1.

Background neuropsychological performance and demographics of the two subjects with bilateral amygdala lesions

| | S.M. | A.P. |
|---|---|---|
| Age | 39/40 | 21 |
| Education | 12 | 16 |
| VIQ | 86 | 92 |
| PIQ | 95 | 106 |
| Full-scale IQ | 88 | 98 |
| Benton face-matching test | 90th percentile | 85th percentile |
| Judgement of line orientation | 22nd percentile | >74th percentile |
| Hooper Visual Organization Test | 25.5/30 | 24/30 |
| Complex Figure Test (copying) | 32/36 | 36/36 |

Age: age in years at time of testing (S.M. was 39 when she completed Tasks 3 and 4, and 40 when she completed Tasks 1 and 2). Education: number of years of formal education. VIQ, PIQ and full-scale IQ: verbal, performance and full-scale IQ from the Wechsler Adult Intelligence Scale (Revised for S.M., III for A.P.; age-corrected scaled scores). Benton face-matching test, judgement of line orientation, Hooper Visual Organization Test and Complex Figure Test: all from Benton, Sivan, Hamsher, Varney, and Spreen (1994).

We compared the performance of S.M. and A.P. with that of two groups of neurologically and psychiatrically healthy women. One normal comparison group (NC1) comprised 11 women, with a mean age of 33.9 years (range 27–43 years, S.D. = 6.6) and a mean of 13.5 years of formal education (range 12–15 years, S.D. = 0.82). The second normal comparison group (NC2) comprised 12 women, with a mean age of 46.7 years (range 31–57 years, S.D. = 7.8) and a mean of 15.1 years of formal education (range 12–18 years, S.D. = 2.1).

2.2. Normal reference groups and scoring of the emotion-labelling tasks

Since the perception of the body expression stimuli may well differ from what the actors intended, we did not use the intended emotions as the “correct” answer for our emotion-labelling tasks. Instead, we used the answers given by two large reference groups of neurologically normal subjects, distinct from our healthy comparison groups, both to assign stimuli to specific emotion categories and to assign emotion recognition scores to the amygdala lesion subjects and each member of the normal comparison (NC1 and NC2) groups. We assessed emotion recognition performance using both percentage correct and partial-credit scores, as detailed below.

One of the independent normal reference groups, used for Tasks 1 and 2, comprised 77 people (52 females, 25 males), with a mean age of 26.3 years (range 16–64, S.D. = 9.8) and a mean of 15.4 years in formal education (S.D. = 3.2). The other independent normal reference group, used for Task 3, comprised 109 people (73 females, 36 males), with a mean age of 25.6 years (range 19–69 years, S.D. = 11.6) and all with a minimum of 12 years of formal education.

Assignment of stimuli to emotion categories was determined by the modal response of the normal reference groups (see Adolphs & Tranel, 2003; Heberlein, Adolphs, Tranel, & Damasio, 2004): a stimulus was considered a "happy" posture, for example, if a majority of the reference group called it happy. To test for possible effects of gender and age on the emotion recognition performance of the normal reference groups, we first assigned each reference group subject percentage correct recognition scores relative to the modal responses of the rest of their reference group; that is, a response was considered correct if it accorded with the majority response of the other members of the relevant reference group, and incorrect otherwise. For Task 1, there were no effects of gender for any emotion. Age was also uncorrelated with recognition performance for any emotion, except for a marginally significant correlation of age with surprise recognition in males (Spearman's rho = 0.442, p < 0.05, two-tailed, uncorrected for multiple emotion comparisons). For Task 2, the age of subjects was not correlated with their recognition performance for any of the emotions, and there was no effect of gender. For Task 3, the age of female subjects was not significantly correlated with their emotion-labelling accuracy, while the age of male subjects was significantly correlated only with their accuracy at labelling happy expressions in patch-light displays (Spearman's rho = −0.486, p < 0.005, two-tailed but uncorrected for multiple emotions). There was no effect of gender on this task.
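The leave-one-out scoring of reference group subjects can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function names and toy data are hypothetical, a "clear" modal response is taken to mean a label chosen by more than 50% of raters (the criterion stated in Section 2.3.1), and we assume that stimuli lacking such a majority among the remaining raters are simply skipped, which the text does not specify.

```python
from collections import Counter
from typing import List, Optional, Sequence


def clear_modal_label(labels: Sequence[str]) -> Optional[str]:
    """Return the label chosen by more than half of the raters, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None


def leave_one_out_accuracy(responses: List[List[str]], subject: int) -> float:
    """Percentage of stimuli on which one reference subject agrees with the
    clear modal label given by the *other* reference subjects.

    responses[s][i] is reference subject s's label for stimulus i.
    Assumption: stimuli with no clear majority among the others are skipped.
    """
    others = [row for s, row in enumerate(responses) if s != subject]
    hits = 0
    scored = 0
    for i, own_label in enumerate(responses[subject]):
        mode = clear_modal_label([row[i] for row in others])
        if mode is None:
            continue
        scored += 1
        if own_label == mode:
            hits += 1
    return 100.0 * hits / scored if scored else float("nan")


# Hypothetical toy data: 4 reference subjects x 3 stimuli
reference = [
    ["happy", "afraid", "sad"],
    ["happy", "afraid", "sad"],
    ["happy", "surprised", "sad"],
    ["angry", "afraid", "happy"],
]
print([round(leave_one_out_accuracy(reference, s), 1) for s in range(4)])
# prints [100.0, 100.0, 66.7, 33.3]
```

The strict "more than half" test means a two-way tie yields no modal label, which mirrors the paper's exclusion of stimuli without clear modal responses.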

The amygdala lesion and normal comparison subjects were assigned percentage correct recognition scores for each emotion in each task, relative to the modal responses of the relevant reference group. As a further means of quantifying each subject's emotion recognition ability relative to the independent normal reference groups, the amygdala lesion and normal comparison subjects were also assigned partial credit scores, calculated in relation to the relative frequencies of the responses given by the relevant normal reference group (Heberlein et al., 2004). Thus, if 100% of normal reference subjects called a stimulus "happy", for example, a brain-damaged or normal comparison subject would get a score of 1.0 for choosing the label "happy" and 0.0 for all other choices. On the other hand, if 50% of normal reference subjects called a stimulus "surprise", 40% called it "afraid" and 10% called it "sad", a subject would receive a score for that stimulus of 1.0 for choosing the label "surprise", 0.8 for "afraid" and 0.2 for "sad". In this way, correctness was made a parametric function solely of the distribution of responses that the normal reference subjects gave: high scores correspond to relatively better performance, low scores to relatively worse performance. We first examined performance on emotion recognition in general by calculating overall partial credit scores averaged across all stimuli presented in a given task. We then examined performance on the recognition of individual emotions by calculating partial credit scores for each emotion category from the subset of stimuli in that task with clear modal responses.
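The partial credit rule can be expressed compactly: a label's score is its frequency in the reference group divided by the frequency of the modal label. This normalisation is inferred from the worked example above (50/40/10% giving 1.0/0.8/0.2) rather than taken from a published formula, and the function names below are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def partial_credit_key(reference_labels: Sequence[str]) -> Dict[str, float]:
    """Score each label by its reference-group frequency relative to the
    most frequent (modal) label, so the modal label scores 1.0."""
    counts = Counter(reference_labels)
    top = max(counts.values())
    return {label: n / top for label, n in counts.items()}


def partial_credit(response: str, reference_labels: Sequence[str]) -> float:
    """Labels never chosen by the reference group score 0.0."""
    return partial_credit_key(reference_labels).get(response, 0.0)


def mean_partial_credit(responses: Sequence[str],
                        reference: Sequence[Sequence[str]]) -> float:
    """Average partial credit over stimuli; reference[i] holds the
    reference group's labels for stimulus i."""
    scores = [partial_credit(r, ref) for r, ref in zip(responses, reference)]
    return sum(scores) / len(scores)


# The worked example from the text: 50% surprise, 40% afraid, 10% sad
ref = ["surprise"] * 5 + ["afraid"] * 4 + ["sad"]
for label in ("surprise", "afraid", "sad", "happy"):
    print(label, partial_credit(label, ref))
# surprise 1.0, afraid 0.8, sad 0.2, happy 0.0
```

Because the overall score averages over every stimulus in a task, it can include stimuli without a clear modal response, whereas the per-emotion scores described above would pass only the clear-modal subset to `mean_partial_credit`.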

2.3. Stimuli and tasks

In order to examine the effects of bilateral amygdala damage on the ability to judge emotions from both motion and form cues in whole-body gestures, we used four sets of stimuli, comprising portrayals of basic emotions in: (1) full-light static displays (newly created), (2) point-light dynamic displays (similar to those used by Heberlein et al., 2004), and sets of (3) patch-light and (4) corresponding full-light dynamic displays (from Atkinson, Dittrich, Gemmell, & Young, 2004, and Atkinson, Tunstall, & Dittrich, 2007), as detailed below. In the point-light and patch-light displays the static form information is minimal or absent but motion and form-from-motion information is preserved (Johansson, 1973). The full-light dynamic displays contain both motion and full form information. Due to practical and time constraints, one of the neurologically normal comparison groups (NC1) completed the tasks involving the static posture and point-light body movement stimuli, whereas the comparison data for the tasks involving the patch-light displays and identical movements in the full-light displays were acquired from a different normal comparison group (NC2). All participants gave informed consent to participate in these studies, which were approved by either the Institutional Review Board of the University of Iowa, the Cambridge Local Research Ethics Committee, the Institutional Review Board of Harvard University or the Durham University Ethics Advisory Committee, in accordance with the Declaration of Helsinki.

2.3.1. Task 1: Forced-choice emotion-labelling of static body postures

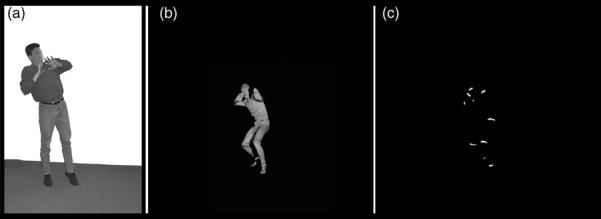

Emotion recognition from static body postures was investigated using a six-alternative forced-choice emotion-labelling task with a set of photographic images of static body postures. Four professional actors, two males and two females, were photographed in front of a white wall, standing on a grey floor, in neutral poses and in postures portraying five basic emotions (anger, fear, happiness, sadness and surprise). Faces were Gaussian blurred so that expressions were not identifiable (see Fig. 1); we preferred this method of eliminating face information to completely erasing or otherwise obscuring the face, since those methods generated stimuli that looked more jarring. Eighty-two stimuli were piloted with 15 adult subjects, from which we chose the 63 stimuli whose emotion was labelled most reliably.

Fig. 1.

Illustrative examples of stimuli used in (a) Task 1 (a fearful static posture) and (b) and (c) Tasks 3 and 4 (still frames extracted from full-light and patch-light movie clips that showed a fearful body movement).

For the present study, the participants were asked to match each of the 63 stimuli to a single word from a list of six words (angry, afraid, happy, sad, surprised or neutral) in terms of how they thought the person depicted in each picture might feel. The stimuli were presented in a different random order for each subject. There was no time limit and response times were not recorded. For scoring purposes, 57 of these 63 stimuli had clear modal responses, that is, were assigned a single label by more than 50% of the independent normal reference group. The set of 57 stimuli with clear modal responses comprised 13 fearful, 13 angry, 12 sad, 10 neutral, 5 happy and 4 surprised postures.

2.3.2. Task 2: Forced-choice emotion-labelling of point-light walkers

Emotion recognition from whole-body displays of biological motion was investigated using a five-alternative forced-choice emotion-labelling task with a set of short digital movie clips of point-light walkers. The stimuli were similar to those described in Heberlein et al. (2004), and included six clips from that earlier study. These stimuli were created by filming six professional or student actors, three males and three females, who walked across the frame of a movie camera from left to right, with locomotory patterns intended to convey specific emotions. Small lights were attached to their wrists, ankles, knees, elbows, outer hip, waist, outer shoulder and head; they were filmed in the dark so that only the moving lights were visible. A set of 40 of these stimuli was presented to the normal reference group (described above), who were asked to choose the best word from a list of five words – angry, afraid, happy, sad or neutral – to describe how they thought the person depicted in each clip might feel. The stimuli were presented individually in a different random order for each subject. For scoring purposes, 39 of the 40 stimuli received clear modal responses from the normal reference group: 10 for anger, 9 for fear, 8 for sadness, 7 for happiness and 5 for neutral.

2.3.3. Task 3: Forced-choice emotion-labelling of patch-light and full-light body gestures

The stimuli in this task were grey-scale digital movie clips of people expressing emotions with whole-body movements, presented in patch-light (PL) and full-light (FL) displays, as developed by Atkinson et al. (2004). In the PL displays, all that is visible against the black background are 13 thin strips of white tape attached to the actor (one wrapped around each ankle, knee, elbow and hand, one on each hip and shoulder, and one on the forehead), whereas in the FL displays the whole body and head of the actor are visible but not his or her face. Student amateur actors gave short, individual portrayals of anger, disgust, fear, happiness and sadness with whole-body movements and gestures. They were free to interpret and express these emotions as they saw fit, with only minimal guidance as to the sorts of situations in which people might experience those emotions. This resulted in a varied set of mostly conventional and sometimes symbolic (Buck, 1984) movements. A subset of these original stimuli was selected for use in the present study, with the play length of each clip adjusted to 3 s. The selection process, described in Atkinson et al. (2007), was blind to the particular movements made by the actors, and an informal inspection of the selected set revealed a range of movements representative of the larger set, as described in Atkinson et al. (2004). Furthermore, the stimulus sets for each emotion consisted of identical movement sequences across the two lighting conditions (FL and PL). Consequently, differences in performance between these two stimulus conditions cannot be attributed to differences in movement sequences rather than to differences in the amount of static form information in each stimulus type. Examples of these stimuli can be viewed online at http://www.dur.ac.uk/a.p.atkinson/.

The subjects viewed all 50 PL gestures sequentially in a single block, followed by the 50 corresponding FL gestures. The stimuli were presented in a different random order for each block and for each participant. The task was to classify each stimulus in a five-alternative forced-choice emotion-labelling task (angry, disgusted, fearful, happy, sad). The amygdala lesion and NC2 subjects were required to respond verbally, whereas the normal reference group provided manual keyboard responses.

S.M.'s performance on this task was compared with the performance of the NC2 group in terms of percentage correct recognition scores and partial credit scores, relative to the independent normal reference group, as described above. Ninety-six of the 100 stimuli received clear modal responses from the normal reference group. For the FL displays, 10 were categorised as angry, 9 disgusted, 10 fearful, 10 happy and 10 sad; for the PL displays, 10 were categorised as angry, 7 disgusted, 9 fearful, 10 happy and 11 sad. Only one of these 96 stimuli was frequently labelled with an emotion (sadness) other than that intended by the actor (disgust).

2.3.4. Task 4: Emotion-rating task with patch-light and full-light body gestures

The stimuli for this task comprised half of the stimuli used for the forced-choice emotion-labelling task described in Section 2.3.3, such that there were five versions of each of five emotions in each of the two lighting conditions, with the PL and FL conditions again containing identical movement sequences. The participants first viewed the 25 patch-light gestures, presented sequentially and in a random order, and were asked to rate, on a 0–5 scale, how much anger was in each display. (The instructions indicated that 0 = not at all angry and 5 = extremely angry.) They provided verbal responses, which were entered into the computer by the experimenter. The participants then saw the same 25 stimuli again, in a different random order (different random orders were also used for each participant), and were asked to rate how much fear was in each display, using a similar 0–5 scale. This procedure was repeated for subsequent ratings of disgust, happiness and sadness, and the whole procedure was then repeated for the full-light versions of these gestures, such that at the end of the task the participants had rated the 25 patch-light followed by the 25 matching full-light gestures on each of anger, fear, disgust, happiness and sadness (all participants rated the emotions in that same order). Such an emotion-rating task has been used extensively with static facial expressions (e.g., Adolphs et al., 1994, 1995, 1999).
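The nested presentation order described above (display type, then emotion scale in a fixed order, then a freshly shuffled stimulus order within each block) can be sketched as below. The stimulus identifiers, the seed-per-participant convention and the function name are illustrative assumptions; the experiment software actually used is not described.

```python
import random
from typing import List, Tuple

# Fixed orders taken from the text; everything else is illustrative.
DISPLAYS = ("PL", "FL")  # all patch-light blocks come before full-light
SCALES = ("anger", "fear", "disgust", "happiness", "sadness")


def rating_schedule(stimulus_ids: List[str], seed: int) -> List[Tuple[str, str, str]]:
    """Return (display, scale, stimulus) trials: one block per display x scale,
    each block a fresh random permutation of the same stimulus set."""
    rng = random.Random(seed)  # hypothetical: one seed per participant
    trials: List[Tuple[str, str, str]] = []
    for display in DISPLAYS:
        for scale in SCALES:
            order = list(stimulus_ids)
            rng.shuffle(order)
            trials.extend((display, scale, stim) for stim in order)
    return trials


# Hypothetical IDs: 5 versions of each of the 5 portrayed emotions
emotions = ("angry", "disgusted", "fearful", "happy", "sad")
stimuli = [f"{e}_{k}" for e in emotions for k in range(1, 6)]
schedule = rating_schedule(stimuli, seed=1)
print(len(schedule), schedule[0][:2], schedule[-1][:2])
# prints 250 ('PL', 'anger') ('FL', 'sadness')
```

Because the PL and FL clips contain identical movement sequences, the same identifiers are reused for both display types here.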

In addition to presenting raw emotion intensity ratings, we calculated Pearson correlation scores as follows (see also Adolphs et al., 1994, 1995, 1999). The rating profile given to each body stimulus by S.M. was correlated with the mean rating profile given to that stimulus by the NC2 group. Thus, correlations near 1 indicate that S.M. rated the stimulus normally; correlations near 0 (or negative) indicate that she rated the stimulus very abnormally. This procedure essentially prevents floor and ceiling effects, and controls for idiosyncratic global response biases. To calculate averages for correlations over several body stimuli (e.g., the average correlation for all five happy bodies), S.M.'s correlation for each individual stimulus was Fisher z-transformed, the transformed correlations were averaged over all five stimuli that expressed a given emotion, and the average was then inverse transformed to obtain the mean correlation for that emotion.
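A minimal sketch of the profile-correlation measure and the Fisher z averaging, assuming each profile is the five ratings (anger, fear, disgust, happiness, sadness) given to one stimulus. Two details are our own assumptions rather than the authors': correlations are clipped just inside ±1 before the Fisher transform (which is undefined at exactly ±1), and a flat profile with zero variance yields NaN. The ratings in the usage lines are hypothetical.

```python
import math
from statistics import mean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length rating profiles."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # assumption: a flat profile has no defined r
    return sxy / math.sqrt(sxx * syy)


def mean_correlation(rs: Sequence[float], clip: float = 0.999) -> float:
    """Average correlations via Fisher's z: atanh, mean, then tanh back.
    Clipping to +/-clip (our assumption) keeps atanh finite at r = +/-1."""
    if any(math.isnan(r) for r in rs):
        return float("nan")  # propagate undefined correlations explicitly
    zs = [math.atanh(max(-clip, min(clip, r))) for r in rs]
    return math.tanh(mean(zs))


# Hypothetical ratings of one stimulus on the five scales
patient = [0, 1, 0, 4, 1]
norm = [0.3, 0.8, 0.4, 4.2, 0.6]  # hypothetical comparison-group means
r = pearson_r(patient, norm)
per_stimulus = [r, 0.95, 0.88, 0.97, 0.91]  # hypothetical, five stimuli
print(round(r, 3), round(mean_correlation(per_stimulus), 3))
```

Averaging in z space rather than averaging raw r values avoids the compression of correlations near 1, which is why the Fisher-averaged mean of, say, 0.9 and 0.1 exceeds their arithmetic mean of 0.5.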

2.3.5. Control tasks

Subject S.M. and the NC2 group judged the speed and size of the same PL and FL body movements they had seen in the emotion-rating task. They were first presented with the 25 PL displays followed by the 25 FL displays (the stimuli in each condition were presented in a random order) and were asked to rate the speed of the body movements in each stimulus on a scale of 1 (extremely slow movements) to 6 (extremely fast movements). They then saw the same stimuli again (PL then FL, in different random orders) and were asked to rate the size of the body movements on a scale of 1 (extremely small movements) to 6 (extremely large movements).

S.M. and the NC2 group also completed a forced-choice action judgement task, in which they were presented with stimuli displaying individual male or female actors portraying simple body movements and actions. The stimulus set comprised 32 PL and 32 FL displays of emotionally neutral body actions (4 portrayals × 8 actions in each lighting condition), as described in Atkinson et al. (2007). In the present control task, for each stimulus the participants were asked to choose from the following list the one label that best described the depicted movement: bending, hopping, jumping-jacks (or in the UK, star-jumps), walking, digging, kicking, knocking and pushing. The PL displays were presented first, followed by the FL displays (with a different random order for each condition and each participant).

2.3.6. Testing environments

S.M. completed Task 1 in a quiet room with an experimenter present, Task 2 via the Internet, viewing the stimuli on a computer in her home, and Tasks 3 and 4 and the control tasks in a laboratory with an experimenter. A.P. completed the web-based versions of Tasks 1 and 2 at her home. The NC1 subjects participated in Tasks 1 and 2 via the Internet, viewing the stimuli on computers in their homes or institutions. The NC2 subjects were tested individually either in a laboratory or in their homes, with an experimenter present. For the web-based versions of the tasks, all stimuli were preloaded on the host computer before the participant could begin each task, to ensure constant play of the movie clips at the standard 25 frames per second irrespective of the speed of the Internet connection.

3. Results

3.1. Task 1: Forced-choice emotion-labelling of static body postures

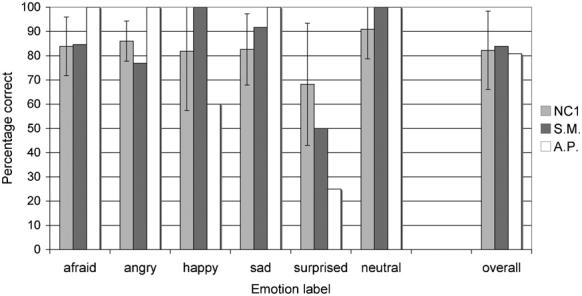

The forced-choice emotion-labelling accuracy data for the static body postures obtained from S.M. and A.P., along with the data from the normal comparison group, are shown in Fig. 2. The partial credit scores are shown in Table 2. (Partial credit scores closer to 1 indicate greater correspondence with the pattern of responses of the normal reference group.) Both S.M.'s and A.P.'s labelling of emotions expressed by static body postures was normal, except for a mild impairment for A.P. in labelling surprised expressions (accuracy z-score relative to the NC1 group = −1.71). For surprise, however, there were only four stimuli with this modal response label, and A.P. called all three that she labelled incorrectly afraid. S.M. labelled one surprised posture incorrectly, also calling it afraid. Confusions between surprise and fear were common in the NC1 group as well, likely because these emotions are semantically similar and have similar postures. Indeed, when A.P.'s performance on this posture task is assessed in terms of partial credit scores (Table 2), the apparent difference from the NC1 group for surprised expressions essentially disappears (z-score = −1.17). Notably, her classification of fearful postures was always well within the normal range (indeed, she achieved 100% correct performance in two of her three testing sessions). S.M. showed similarly consistent performance on this task when tested again via the Internet more than a year after the original testing session, including normal classification of fearful postures.

Fig. 2.

Mean percentage correct classification of emotions in the static whole-body postures (Task 1) for patients S.M. and A.P. and the normal comparison (NC1) group. Data are for the 57 stimuli with clear modal responses from the normal reference group (a further 6 stimuli did not have clear modal responses). Error bars indicate ± 1 standard deviation.

Table 2.

Mean partial credit scores (and S.D.s) for the bilateral amygdala lesion subjects and the matched normal comparison groups (NC1 and NC2), relative to the larger normal reference groups, for the forced-choice emotion-labelling of each stimulus type in Tasks 1–3

| Judgement category | Static postures (Task 1) | | | Point-light walkers (Task 2) | | | Full-light dynamic gestures (Task 3) | | Patch-light dynamic gestures (Task 3) | |
|---|---|---|---|---|---|---|---|---|---|---|
| | S.M. | A.P. | NC1 | S.M. | A.P. | NC1 | S.M. | NC2 | S.M. | NC2 |
| Afraid | 0.9 | 1.0 | 0.88 (0.09) | 0.81 | 1.0 | 0.83 (0.15) | 1.0 | 0.96 (0.09) | 0.9 | 0.87 (0.14) |
| Angry | 0.88 | 1.0 | 0.91 (0.06) | 1.0 | 0.91 | 0.94 (0.07) | 0.43ᵃ | 0.96 (0.05) | 0.55ᵃ | 0.94 (0.09) |
| Happy | 1.0 | 0.63 | 0.84 (0.23) | 1.0 | 1.0 | 0.95 (0.05) | 0.84 | 0.9 (0.14) | 0.86 | 0.83 (0.12) |
| Sad | 0.97 | 1.0 | 0.86 (0.13) | 0.91 | 1.0 | 0.87 (0.09) | 0.91 | 0.87 (0.11) | 0.83 | 0.83 (0.12) |
| Surprised | 0.72 | 0.64 | 0.82 (0.16) | - | - | - | - | - | - | - |
| Disgusted | - | - | - | - | - | - | 1.0 | 0.87 (0.15) | 1.0 | 0.91 (0.1) |
| Neutral | 1.0 | 1.0 | 0.95 (0.09) | 0.72 | 0.79 | 0.79 (0.16) | - | - | - | - |
| Overallᵇ | 0.92 | 0.9 | 0.88 (0.05) | 0.9 | 0.93 | 0.88 (0.05) | 0.84 | 0.91 (0.04) | 0.82ᵃ | 0.89 (0.03) |

A dash (-) in a cell indicates that the corresponding judgement category was not a response option in that task.

ᵃ z-score more than 2 S.D.s below the NC mean partial credit score.

ᵇ The overall average partial credit scores provide a measure of overall emotion recognition performance calculated across all stimuli presented in a task, including those stimuli that did not receive clear modal responses from the normal reference groups. The partial credit scores for each emotion category were calculated across only those stimuli that received clear modal responses. See text (Section 2.2) for further details of how the partial credit scores were calculated.
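The z-scores reported in this section, and the flag in Table 2, compare a single patient's score with a comparison group: z = (patient score − group mean) / group S.D. The sketch below is illustrative only: the function names are hypothetical, and because the text does not say whether a sample (n − 1) or population S.D. was used, the raw-score helper's use of the sample S.D. is an assumption. Fed the rounded Table 2 values for A.P.'s surprise score (0.64 vs. 0.82, S.D. 0.16), it gives about −1.1, a little less extreme than the −1.17 reported in Section 3.1, presumably because the tabled values are rounded.

```python
from statistics import mean, stdev
from typing import Sequence


def z_from_summary(score: float, nc_mean: float, nc_sd: float) -> float:
    """z = (patient score - comparison mean) / comparison S.D."""
    return (score - nc_mean) / nc_sd


def z_from_group(score: float, nc_scores: Sequence[float]) -> float:
    """Same, from raw comparison-group scores (sample S.D., n - 1: an assumption)."""
    return z_from_summary(score, mean(nc_scores), stdev(nc_scores))


def below_cutoff(z: float, cutoff: float = -2.0) -> bool:
    """Flag scores more than 2 S.D.s below the comparison mean (cf. Table 2)."""
    return z < cutoff


# Rounded Table 2 values: A.P., surprised postures (Task 1)
z_ap = z_from_summary(0.64, 0.82, 0.16)
# Rounded Table 2 values: S.M., angry full-light gestures (Task 3)
z_sm = z_from_summary(0.43, 0.96, 0.05)
print(round(z_ap, 2), below_cutoff(z_ap), round(z_sm, 1), below_cutoff(z_sm))
```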

3.2. Task 2: Forced-choice emotion-labelling of point-light walkers

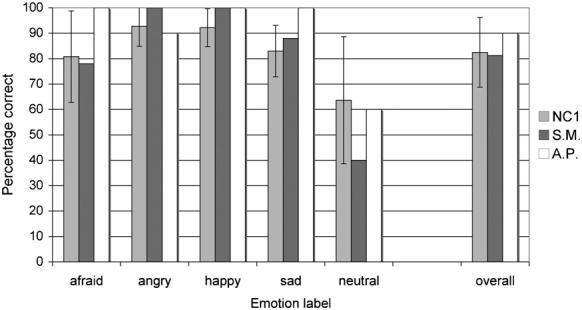

The forced-choice emotion-labelling accuracy data for the point-light walkers obtained from S.M. and A.P., along with the data from the normal comparison group, are shown in Fig. 3. Partial credit scores are shown in Table 2. Both S.M.'s and A.P.'s labelling of emotions expressed by these point-light walkers was entirely normal.

Fig. 3.

Mean percentage correct classification of emotions in the point-light walker stimuli (Task 2) for patients S.M. and A.P. and the normal comparison (NC1) group. Data are for the 39 stimuli with clear modal responses from the normal reference group (an additional stimulus did not have a clear modal response). Error bars indicate ± 1 standard deviation.

3.3. Task 3: Forced-choice emotion-labelling of patch-light and full-light body gestures

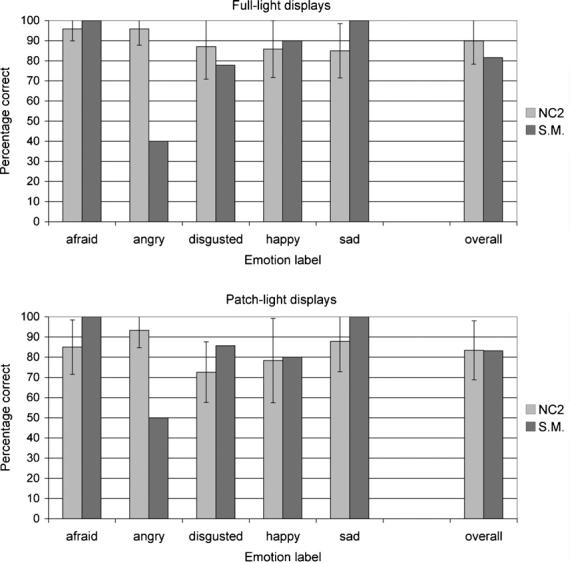

As shown in Fig. 4, S.M. labelled the PL and FL body movements normally, except for expressions of anger in both conditions, for which she performed significantly below the level of the NC2 group (z-scores: PL = −4.4, FL = −10.8). This difference was also evident in the partial credit scores (Table 2), indicating that S.M. labelled angry body movements differently from the NC2 group. Examination of S.M.'s responses revealed that she frequently misclassified angry expressions as disgusted in both the PL (4 out of 10 gestures; z-score = 4.5) and FL (6 out of 10 gestures; z-score = 15) displays. A similar pattern was recorded in a subsequent testing session with S.M. on the following day, when she again labelled the PL and FL body movements normally, except for angry gestures (z-scores: PL = −4.4, FL = −8.9). This time, however, although she again labelled more FL angry expressions as disgusted than did the normal comparison group (4 out of 10 gestures; z-score = 9.9), this was not the case for the PL displays of anger: she labelled only one of these as disgusted (z-score = 0.7), but two as happy and two as sad (z-scores = 6.6 in each case).

Fig. 4.

Mean percentage correct classification of emotions in the full-light (top) and patch-light (bottom) displays of moving whole-body gestures for S.M. and the normal comparison (NC2) group. Data are for the 49 full-light movies and 47 patch-light movies with clear modal responses from the normal reference group (one FL and three PL stimuli did not have clear modal responses). Error bars indicate ± 1 standard deviation.

The partial credit scores for this task (Table 2) reveal a marginal overall emotion identification impairment for S.M. compared to the NC2 group with the PL displays (z-score: −2.004). Yet she did not show an overall emotion identification impairment with identical movements in the FL displays, or with any of the specific emotion categories in either the PL or FL displays.

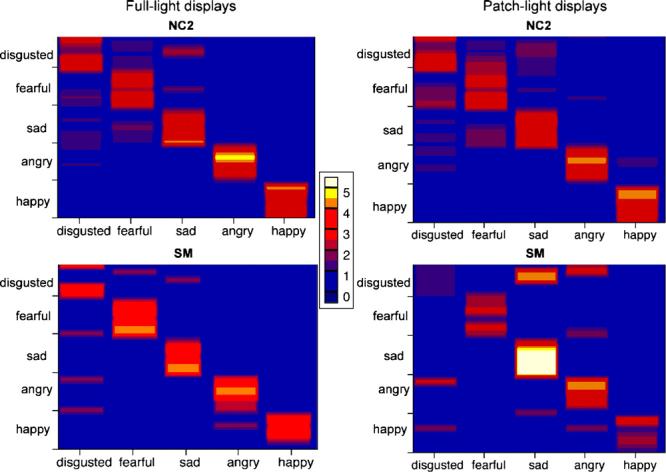

3.4. Task 4: Emotion-rating task with patch-light and full-light body gestures

The raw emotion intensity ratings for S.M. and the normal comparison (NC2) group are shown in Fig. 5. As this figure indicates, S.M.'s emotion ratings of both the FL and the PL body expressions showed patterns similar to those of the normal comparison group. This was confirmed by the z-scores for S.M.'s mean emotion intensity ratings, all of which were within two standard deviations of the NC2 mean ratings. Notable but relatively minor departures from the comparison group's performance were evident in S.M.'s ratings of some of the PL displays, namely lower disgust ratings for expressions of disgust (z-score = −1.5) and lower happiness ratings for expressions of happiness (z-score = −1.3). S.M. also gave higher sadness ratings than did the comparison group for PL expressions of sadness (she gave the maximum rating of 5 to four of these five expressions), although the z-score for this rating was only 0.8. She likewise gave higher sadness ratings to three of the PL disgust displays, although when averaged across all five PL displays of disgust her mean sadness rating did not differ from that of the NC2 group.

Fig. 5.

Plots of the raw emotion intensity ratings of the full-light (left) and patch-light (right) body gesture stimuli for S.M. (bottom) and the normal comparison group (NC2; top). The emotional stimuli (25 dynamic body gestures in each display type; five for each of the basic emotions indicated) are ordered on the y-axis according to their perceived similarity by the normal comparison group (stimuli perceived to be similar are adjacent). The five emotion labels on which subjects rated the body gestures are displayed on the x-axis. Colour encodes the mean rating given to each body stimulus, as indicated in the scale. Thus, a purple or red line indicates a lower mean rating than an orange or yellow line for a given body gesture, and a thin yellow or white line for a given emotion category indicates that few stimuli of that emotion received a high rating, whereas a thick yellow or white line indicates that many or all stimuli within that emotion category received high ratings.

Given that S.M. frequently labelled angry body movements as disgusted in the forced-choice task (Task 3), it is noteworthy that she tended not to rate angry body expressions as highly disgusted. She gave 6 of the 10 intended angry expressions a rating of 0 on the disgust scale (“not at all disgusted”); the other four received disgust ratings of 1, 2 (two stimuli) or 3 on the six-point (0–5) scale, all of which were lower than her anger intensity ratings for the same stimuli.

As detailed in Section 2.3.4, we calculated correlation scores for S.M.'s emotion intensity ratings relative to the mean ratings of the NC2 group, to obtain a single measure of performance that avoided floor and ceiling effects. This correlation measure was calculated from the rating profile seen across the horizontal band corresponding to each emotion category in Fig. 5. The correlation scores are given in Table 3, which shows that S.M.'s emotion-rating profiles correlated well with those of the normal comparison group for all emotions in both the FL and PL displays (range 0.61–0.97). Nonetheless, S.M.'s correlation scores were lower for the PL than for the FL displays, by between 0.15 and 0.20, for all emotions except sadness, for which the two scores were nearly identical (0.91 vs. 0.92).
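The correlation measure can be sketched in Python as follows. This is an illustration under stated assumptions, not the procedure of Section 2.3.4: it assumes that, for each stimulus, the subject's ratings on the five emotion scales are correlated with the NC2 group's mean ratings on the same scales using Pearson's r, and that these coefficients are then averaged over the stimuli of one emotion category (as the "mean correlation scores" of Table 3 suggest). The function names and example ratings are hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two rating profiles of equal length."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("profiles must have equal length of at least 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        # A flat profile (the same rating on every scale) has no defined r.
        raise ValueError("correlation is undefined for a constant profile")
    return sxy / sqrt(sxx * syy)


def mean_profile_correlation(
    patient: Sequence[Sequence[float]],
    control_means: Sequence[Sequence[float]],
) -> float:
    """Average the per-stimulus r over the stimuli of one emotion category.

    patient[i] and control_means[i] hold stimulus i's ratings on the same
    emotion scales, in the same order (assumed: anger, disgust, fear,
    happiness, sadness).
    """
    if len(patient) != len(control_means) or not patient:
        raise ValueError("need matching, non-empty lists of stimuli")
    rs = [pearson_r(p, c) for p, c in zip(patient, control_means)]
    return sum(rs) / len(rs)


# Hypothetical ratings (0-5) for two 'anger' stimuli; not data from this study.
patient = [[5, 1, 0, 0, 1], [4, 2, 1, 0, 0]]
control_means = [[4.2, 1.5, 0.8, 0.1, 0.6], [3.9, 1.8, 1.0, 0.2, 0.4]]
print(round(mean_profile_correlation(patient, control_means), 2))
```

Because Pearson's r ignores differences in overall level and spread between two profiles, a subject who gives generally lower ratings than the controls can still obtain a high score if the shape of her profile matches theirs; a profile with identical ratings on every scale, however, leaves r undefined and needs separate handling.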

Table 3.

Mean correlation scores for S.M.'s emotion intensity ratings relative to the mean emotion intensity ratings of the normal comparison group (NC2), as a function of lighting condition and emotion category

| Emotion | Full-light | Patch-light |
|---|---|---|
| Anger | 0.89 | 0.69 |
| Disgust | 0.80 | 0.61 |
| Fear | 0.91 | 0.74 |
| Happiness | 0.97 | 0.82 |
| Sadness | 0.92 | 0.91 |

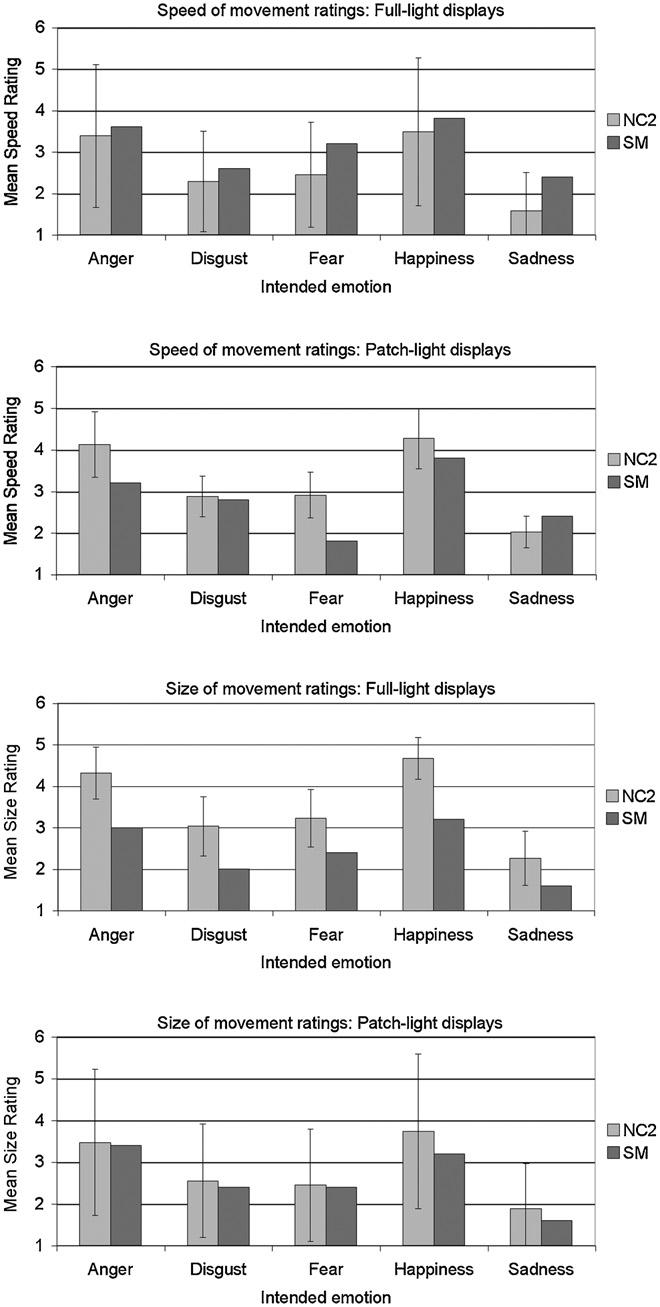

3.5. Control tasks

S.M.'s movement ratings tended to be lower than those of the comparison group (NC2; Fig. 6), but she was ‘impaired’ only on size ratings for FL anger (z = −2.11) and FL happiness (z = −2.92), and on speed ratings for PL fear (z = −2.02). S.M. performed identically to the NC2 group on the action-naming task, correctly classifying all the FL and PL displays of whole-body actions.

Fig. 6.

Mean speed of movement ratings (top two panels) and size of movement ratings (bottom two panels) for the full-light and patch-light displays of moving whole-body gestures for S.M. and the normal comparison (NC2) group. Both scales ranged from 1 (extremely slow/small movements) to 6 (extremely fast/large movements). Error bars indicate ± 1 standard deviation.

4. Discussion

We found that neither of two very rare subjects with bilateral amygdala lesions was impaired, relative to healthy comparison subjects, at recognising fear from body movements or static body postures. One of these individuals (S.M.) has complete bilateral amygdala damage as well as minor damage to anterior entorhinal cortex, whereas the other (A.P.) has bilateral damage that encompasses approximately 70% of the amygdala and does not extend beyond it. S.M., who is known to be severely impaired at recognising fear from faces, performed normally across four different tasks involving two sets of dynamic body movement stimuli and a set of static body postures. A.P., who was tested with a subset of these tasks, also performed normally. S.M. appeared to be impaired at identifying angry body movements, but only because she frequently labelled them as disgusted when ‘disgust’ was a response option, which likely reflects the conceptual overlap between these two emotions (see below). S.M. also showed a borderline impairment in her overall emotion recognition score (the partial-credit score) for patch-light body movements, but not for identical movements in full-light displays, nor for any specific emotion in either display type.

These results contrast with those of Sprengelmeyer et al. (1999), who reported impaired identification of fear from static body postures in a patient with bilateral amygdala damage (recognition accuracy was within or above normal levels for happy, surprised, sad, disgusted and angry postures). However, that patient's lesion was not entirely restricted to the amygdalae (in contrast to A.P.'s) and did not encompass the entire extent of both amygdalae (in contrast to S.M.'s). Our study thus provides a stronger test of whether the amygdala is necessary for the normal recognition of fear from static body postures, and extends that test to moving as well as static bodily expressions of emotion.

Adolphs and Tranel (2003) found that S.M. and other subjects with bilateral amygdala damage were impaired at recognising anger from static images of social scenes containing facial and body posture cues, mistaking anger for a variety of other emotions. Yet when the faces in these images were obscured, these subjects judged anger normally. In the present study, the actors' faces were not visible, and yet S.M. was apparently impaired at labelling angry body movements. Tellingly, however, she was impaired on anger only in the task in which ‘disgust’ was a response option (Task 3), where she frequently judged angry body movements as disgusted. She did not label disgusted expressions as angry, nor did she tend to rate angry expressions as more disgusted than the controls did in the emotion-rating task (Task 4). Before Tasks 3 and 4, all subjects were given short definitions of each emotion label and written examples of situations in which one might experience each emotion. The definition of disgust that we provided corresponded to what Rozin and colleagues (Rozin, Haidt, & McCauley, 2000; Rozin, Lowery, & Ebert, 1994) term “core” disgust, which is related to a distaste-based food-rejection system responsive to stimuli such as offensive smells and bad tastes, and which extends to stimuli that remind humans of their animal origins, such as faeces, poor hygiene and death. All our disgusted body stimuli were clearly intended as expressions of core disgust (e.g., miming blowing away a bad smell, or retching). Other theoretical as well as lay conceptions of disgust overlap with those of anger (e.g., Rozin, Lowery, Imada, & Haidt, 1999; Russell & Fehr, 1994; Shaver, Schwartz, Kirson, & O'Connor, 1987; Storm & Storm, 1987). For example, Rozin et al. (1994, 2000) distinguish core disgust from interpersonal disgust, related to contact with undesirable persons, and from moral disgust, related to violations of moral “purity”.
These latter conceptions of disgust are close in definition to contempt, disdain or scorn, that is, to a feeling of dislike toward somebody or something regarded as inferior or undeserving of respect, and are thus closer in definition to anger (Rozin, 1996). Moreover, at least in the USA, the word ‘disgust’ has a common everyday usage that combines the meanings of disgust and anger (Nabi, 2002). Confusions between disgusted and angry facial expressions are not uncommon (e.g., Ekman & Friesen, 1976; McKelvie, 1995; Palermo & Coltheart, 2004), and are more frequent in children (e.g., Russell & Bullock, 1985) and in younger than in older adults (Suzuki, Hoshino, Shigemasu, & Kawamura, 2007). Part of the reason for this confusion is likely that facial expressions of anger and disgust share certain “facial action units” or muscle contractions (Ekman & Friesen, 1976; Young, Perrett, Calder, Sprengelmeyer, & Ekman, 2002). Yet conceptual overlap between disgust and anger also likely contributes to confusions in the classification of facial expressions of those emotions (e.g., Rozin et al., 1999; Russell & Bullock, 1985, 1986; Widen & Russell, 2003). By contrast, bodily expressions of core disgust and anger, including those used in the present study, share few postural and movement characteristics (Atkinson et al., 2004; Wallbott, 1998). We therefore speculate that, rather than having a perceptual deficit selective for angry body expressions, or confusing disgusted and angry expressions because of their perceptual similarity, S.M. tended to apply distinct but overlapping conceptions of disgust to the disgusted and angry gestures: core disgust and a sense of disgust closer in meaning to anger, respectively.

On the basis of prior functional imaging evidence and what is known about the amygdala's cortical connectivity, we had hypothesised that the amygdala would be important for the recognition of fear from body expressions, as it is for the recognition of fear from facial expressions. The amygdala has been shown to respond more strongly to fearful than to neutral whole-body postures (de Gelder et al., 2004; Hadjikhani & de Gelder, 2003), and to some of the fearful body postures and movements used in the present study (Atkinson et al., submitted). Nonetheless, as with facial expressions, fearful body movements do not necessarily activate the amygdala (Grézes et al., 2007), and the amygdala can respond to bodily expressed emotions other than fear (Peelen et al., in press). Studies in monkeys and other non-human animals demonstrate that the amygdala has strong reciprocal connections with various cortical regions, including visual processing regions in temporal cortex (Amaral, Price, Pitkanen, & Carmichael, 1992; Price, 2003). In humans, such connections may underlie modulatory influences of the amygdala on visual cortex that serve to prioritise visual processing of emotionally salient stimuli (Vuilleumier, 2005; Vuilleumier, Richardson, Armony, Driver, & Dolan, 2004), including bodies as well as faces. Indeed, a recent study by Peelen et al. (in press) showed that amygdala responses to emotional versus neutral whole-body movements correlated positively with the activation by the same stimuli of body-selective areas in temporal cortex (i.e., the extrastriate body area, or EBA, and the fusiform body area, or FBA). Furthermore, the activity of these body-selective regions, but not of a face-selective region (the fusiform face area, or FFA), was modulated by the emotional content of the body stimuli, suggesting that emotional cues from body movements produce topographically selective influences on category-specific populations of neurons in visual cortex.

In the light of these functional imaging studies, our results indicate that, while the amygdala may sometimes be involved in processing whole-body fear cues, it is not necessary for the normal recognition of fear from either static or dynamic body expressions. What, then, might explain why amygdala damage spares the ability to recognise fear and other basic emotions from whole-body gestures, especially given that such damage typically impairs the identification of fear from faces?

One possibility is that bilateral amygdala damage impairs the recognition of emotions from faces more than from other stimuli. Adolphs and Tranel (2003), for example, demonstrated that bilateral (but not unilateral) amygdala damage reduced the ability to recognise emotions from static images of complex social scenes when subjects could use information from facial expressions, but not the ability to recognise negative emotions when the faces were obscured, such that participants had to rely on other cues, including body posture, hand gestures and interpersonal stances. More recently, Adolphs et al. (2005) showed that S.M.'s impaired perception of fear in faces stems from a lack of spontaneous fixations on the eyes of viewed faces and a consequent failure to use information from the eye region when judging emotions, a region that is especially diagnostic for the discrimination of fearful expressions (Smith, Cottrell, Gosselin, & Schyns, 2005). The results of the present study demonstrate that the amygdala is not necessary for emotion recognition across all modes of expression. Nevertheless, the amygdala's role in social perception, including the recognition of emotions, is not restricted to the eyes or even to faces. For instance, S.M.'s descriptions of the Heider and Simmel (1944) stimulus, which depicts simple geometric shapes moving on a plain background, are abnormal. Healthy and brain-damaged control subjects attribute social and emotional states to these shapes solely on the basis of their movements. S.M., however, failed to describe these movements spontaneously in social terms, an impairment that was not the result of a global inability to describe social stimuli or of a bias in language use (Heberlein & Adolphs, 2004).
Taken together with our finding of a borderline impairment in S.M.'s overall emotion recognition score with patch-light body movements, these results prompt further, more detailed investigation of motion processing following amygdala damage, especially in the context of social perception.

Another possible explanation for the spared ability to recognise fear and other basic emotions from whole-body gestures following bilateral amygdala lesions is that emotion recognition relies on processes of emotional contagion or simulation, and that the engagement of these processes by visually presented bodies depends less on the amygdala than does their engagement by static faces. The emotional contagion proposal (e.g., Hatfield, Cacioppo, & Rapson, 1994; Wild, Erb, & Bartels, 2001) is that viewing another's emotional expression triggers that emotion in oneself, either directly or via unintentional mimicry of that expression, which then allows one to attribute that emotion to the other person. Alternatively, viewing another's emotional expression might involve simulating the viewed emotional state by generating a somatosensory image of the associated body state (Adolphs, 2002), or simulating the motor programmes for producing the viewed expression (Carr, Iacoboni, Dubeau, Mazziotta, & Lenzi, 2003; Gallese, Keysers, & Rizzolatti, 2004; Leslie, Johnson-Frey, & Grafton, 2004) (for discussions of these proposals, see Atkinson, 2006). Right somatosensory cortices have a critical role in the recognition of emotions expressed in the face (Adolphs, Damasio, Tranel, Cooper, & Damasio, 2000; Pourtois et al., 2004), body (Heberlein et al., 2004) and voice (Adolphs, Damasio, & Tranel, 2002; van Rijn et al., 2005), and thus may be central to processes of emotional contagion, simulation, or both. Whether the amygdala is also critically involved in such processes is less clear, although it is known to have a role in linking the perception of stimuli to somatic responses or representations thereof (see, e.g., Adolphs, 2002), and to have direct connections to insular cortex, amongst other cortical regions (e.g., Amaral et al., 1992).
Nevertheless, it is still an open question whether the amygdala is more critical to the engagement of contagion or simulation processes by emotional bodies than by emotional faces.

A final possible explanation of our findings is that S.M. relied on general processes of visual inference and on knowledge of how people hold and move their bodies when emotional (for example, that people stamp their feet and shake their fists in anger, cower in fear, and move slowly and bow their heads when sad), and that these do not depend on an intact amygdala. Such knowledge of emotion-related body postures and movements is likely to be dominated by symbolic or emblematic cues. Perhaps more so than facial expressions, some bodily gestures have come to serve as widely used and recognised symbols of emotional states; for instance, raised clenched fists can signal anger or joy, and a bowed head with the face buried in one's hands, or the miming of sniffing and wiping away tears, can signal sadness. Buck (1984, 1991) distinguished symbolic emotional communication, which is propositional, intentional and referential, from spontaneous emotional communication, which is non-propositional, involuntary and expressive. These categories likely mark opposite ends of a continuum along which bodily signals of emotion vary in their symbolic or emblematic nature. Our body posture and movement stimuli exemplify this variation, both within and between stimulus sets. The Heberlein et al. (2004) point-light body movements are in general less symbolic than the Atkinson et al. (2004) body movements: in the former, the actors all walked, ran or danced across the screen in a way intended to convey individual basic emotional states, whereas in the latter the actors generally faced the camera and were given much more freedom in how they moved and portrayed emotions with their bodies. Interestingly, S.M. was not impaired with either stimulus set. Thus, if S.M. was relying in our tasks on general processes of visual inference and knowledge of how people hold and move their bodies when emotional, she was unlikely to have been relying solely on the symbolic nature of the postures and movements.

In conclusion, at least as assessed by our battery of tasks, the amygdala is not a critical structure for the conscious recognition of fear or other basic emotions from static or moving whole-body expressions, even if it may sometimes be involved in processing whole-body fear cues. Further research is required to assess whether these findings extend to the more automatic and rapid processing of emotional signals from the body.

Acknowledgments

A.P.A.'s contribution to this research was supported by a Short-term Fellowship from the Human Frontier Science Program (ST00302/2002-C), held at the Department of Neurology, University of Iowa Hospitals and Clinics, Iowa City, USA, and by a grant as part of the McDonnell Project in Philosophy and the Neurosciences. A.S.H. was supported by a training grant from the National Institutes of Health (T32-NS07413), held at the Children's Hospital of Philadelphia and the Center for Cognitive Neuroscience, University of Pennsylvania. R.A.'s contribution was supported by grants from the National Institute of Mental Health and the National Institute of Neurological Disorders and Stroke. We are grateful to all the participants for their time and effort, to Dan Tranel for discussion and for facilitating access to brain-damaged subjects, to Elaine Behan for creating the Internet versions of the tasks and to Winand Dittrich for first suggesting the construction of the stimuli used in Tasks 3 and 4 and for his advice on their development.

Footnotes

Publisher's Disclaimer: This article was published in an Elsevier journal. The attached copy is furnished to the author for non-commercial research and education use, including for instruction at the author's institution, sharing with colleagues and providing to institution administration.

References

- Adolphs R. Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–62. doi: 10.1177/1534582302001001003.

- Adolphs R, Damasio H, Tranel D. Neural systems for the recognition of emotional prosody: A 3-D lesion study. Emotion. 2002;2:23–51. doi: 10.1037/1528-3542.2.1.23.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. Journal of Neuroscience. 2000;20:2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Adolphs R, Tranel D. Amygdala damage impairs emotion recognition from scenes only when they contain facial expressions. Neuropsychologia. 2003;41:1281–1289. doi: 10.1016/s0028-3932(03)00064-2.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Damasio H, Damasio AR. Fear and the human amygdala. Journal of Neuroscience. 1995;15:5879–5891. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- Adolphs R, Tranel D, Hamann S, Young AW, Calder AJ, Phelps EA, et al. Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia. 1999;37:1111–1117. doi: 10.1016/s0028-3932(99)00039-1.

- Amaral DG, Price JL, Pitkanen A, Carmichael ST. Anatomical organization of the primate amygdaloid complex. In: Aggleton JP, editor. The amygdala: Neurobiological aspects of emotion, memory, and mental dysfunction. Wiley-Liss; New York: 1992. pp. 1–66.

- Atkinson AP. Face processing and empathy. In: Farrow TFD, Woodruff PWR, editors. Empathy in mental illness and health. Cambridge University Press; Cambridge: 2006. pp. 360–385.

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33:717–746. doi: 10.1068/p5096.

- Atkinson AP, Heining M, Phillips ML. Modulation of functionally distinct neural systems by the form and motion of fearful bodies. submitted.

- Atkinson AP, Tunstall ML, Dittrich WH. Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition. 2007;104:59–72. doi: 10.1016/j.cognition.2006.05.005.

- Benton AL, Sivan AB, Hamsher KS, Varney NS, Spreen O. Contributions to neuropsychological assessment: A clinical manual. Oxford University Press; New York: 1994.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Buchanan TW, Tranel D, Adolphs R. The human amygdala in social function. In: Whalen PW, Phelps L, editors. The human amygdala. Oxford University Press; New York: in press.

- Buck R. The communication of emotion. Guilford Press; New York: 1984.

- Buck R. Motivation, emotion, and cognition: A developmental-interactionalist view. In: Strongman KT, editor. International review of emotion. John Wiley; New York: 1991. pp. 101–142.

- Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, Etcoff NL. Facial emotion recognition after bilateral amygdala damage: Differentially severe impairment of fear. Cognitive Neuropsychology. 1996;13:699–745.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences. 2003;100:5497–5502. doi: 10.1073/pnas.0935845100.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: A mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences. 2004;101:16701–16706. doi: 10.1073/pnas.0407042101.

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto, CA: 1976.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends in Cognitive Sciences. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Grézes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. NeuroImage. 2007;35:959–967. doi: 10.1016/j.neuroimage.2006.11.030.

- Hadjikhani N, de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology. 2003;13:2201–2205. doi: 10.1016/j.cub.2003.11.049.

- Hatfield E, Cacioppo JT, Rapson RL. Emotional contagion. Cambridge University Press; New York: 1994.

- Heberlein AS, Adolphs R. Impaired spontaneous anthropomorphizing despite intact perception and social knowledge. Proceedings of the National Academy of Sciences. 2004;101:7487–7491. doi: 10.1073/pnas.0308220101.

- Heberlein AS, Adolphs R, Tranel D, Damasio H. Cortical regions for judgments of emotions and personality traits from point-light walkers. Journal of Cognitive Neuroscience. 2004;16:1143–1158. doi: 10.1162/0898929041920423.

- Heider F, Simmel M. An experimental study of apparent behavior. American Journal of Psychology. 1944;57:243–259.

- Jiang Y, He S. Cortical responses to invisible faces: Dissociating subsystems for facial-information processing. Current Biology. 2006;16:2023–2029. doi: 10.1016/j.cub.2006.08.084.

- Johansson G. Visual perception of biological motion and a model for its analysis. Perception and Psychophysics. 1973;14:201–211.

- Leslie KR, Johnson-Frey SH, Grafton ST. Functional imaging of face and hand imitation: Towards a motor theory of empathy. NeuroImage. 2004;21:601–607. doi: 10.1016/j.neuroimage.2003.09.038.

- McKelvie SJ. Emotional expression in upside-down faces: Evidence for configurational and componential processing. British Journal of Social Psychology. 1995;34:325–334. doi: 10.1111/j.2044-8309.1995.tb01067.x.

- Morris JS, de Gelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124:1241–1252. doi: 10.1093/brain/124.6.1241.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, et al. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–815. doi: 10.1038/383812a0.

- Morris JS, Ohman A, Dolan RJ. A subcortical pathway to the right amygdala mediating “unseen” fear. Proceedings of the National Academy of Sciences USA. 1999;96:1680–1685. doi: 10.1073/pnas.96.4.1680.

- Nabi RL. The theoretical versus the lay meaning of disgust: Implications for emotion research. Cognition & Emotion. 2002;16:695–703.

- Palermo R, Coltheart M. Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behavior Research Methods Instruments & Computers. 2004;36:634–638. doi: 10.3758/bf03206544.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience. doi: 10.1093/scan/nsm023. in press.

- Pourtois G, Sander D, Andres M, Grandjean D, Reveret L, Olivier E, et al. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. European Journal of Neuroscience. 2004;20:3507–3515. doi: 10.1111/j.1460-9568.2004.03794.x.

- Price JL. Comparative aspects of amygdala connectivity. Annals of the New York Academy of Sciences. 2003;985:50–58. doi: 10.1111/j.1749-6632.2003.tb07070.x.

- Rozin P. Towards a psychology of food and eating: From motivation to module to marker, morality, meaning, and metaphor. Current Directions in Psychological Science. 1996;5:18–24.

- Rozin P, Lowery L, Ebert R. Varieties of disgust faces and the structure of disgust. Journal of Personality and Social Psychology. 1994;66:870–881. doi: 10.1037//0022-3514.66.5.870.

- Rozin P, Lowery L, Imada S, Haidt J. The CAD triad hypothesis: A mapping between three moral emotions (contempt, anger, disgust) and three moral codes (community, autonomy, divinity). Journal of Personality and Social Psychology. 1999;76:574–586. doi: 10.1037//0022-3514.76.4.574.

- Rozin P, Haidt J, McCauley CR. Disgust. In: Lewis M, Haviland J, editors. Handbook of emotions. 2nd ed. Vol. 1. Guilford Press; New York: 2000. pp. 637–653.

- Russell JA, Bullock M. Multidimensional-scaling of emotional facial expressions: Similarity from preschoolers to adults. Journal of Personality and Social Psychology. 1985;48:1290–1298.

- Russell JA, Bullock M. Fuzzy concepts and the perception of emotion in facial expressions. Social Cognition. 1986;4:309–341.

- Russell JA, Fehr B. Fuzzy concepts in a fuzzy hierarchy: Varieties of anger. Journal of Personality and Social Psychology. 1994;67:186–205. doi: 10.1037//0022-3514.67.2.186.

- Shaver P, Schwartz J, Kirson D, O'Connor C. Emotion knowledge: Further exploration of a prototype approach. Journal of Personality and Social Psychology. 1987;52:1061–1086. doi: 10.1037//0022-3514.52.6.1061.

- Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychological Science. 2005;16:184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Sprengelmeyer R, Young AW, Schroeder U, Grossenbacher PG, Federlein J, Buttner T, et al. Knowing no fear. Proceedings of the Royal Society of London, Series B: Biological Sciences. 1999;266:2451–2456. doi: 10.1098/rspb.1999.0945.

- Storm C, Storm T. A taxonomic study of the vocabulary of emotions. Journal of Personality and Social Psychology. 1987;53:805–816.

- Suzuki A, Hoshino T, Shigemasu K, Kawamura M. Decline or improvement? Age-related differences in facial expression recognition. Biological Psychology. 2007;74:75–84. doi: 10.1016/j.biopsycho.2006.07.003.

- van Rijn S, Aleman A, van Diessen E, Berckmoes C, Vingerhoets G, Kahn RS. What is said or how it is said makes a difference: Role of the right fronto-parietal operculum in emotional prosody as revealed by repetitive TMS. European Journal of Neuroscience. 2005;21:3195–3200. doi: 10.1111/j.1460-9568.2005.04130.x.

- Vuilleumier P. How brains beware: Neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Wallbott HG. Bodily expression of emotion. European Journal of Social Psychology. 1998;28:879–896.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion. 2001;1:70–83. doi: 10.1037/1528-3542.1.1.70.

- Widen SC, Russell JA. A closer look at preschoolers' freely produced labels for facial expressions. Developmental Psychology. 2003;39:114–128. doi: 10.1037//0012-1649.39.1.114.

- Wild B, Erb M, Bartels M. Are emotions contagious? Evoked emotions while viewing emotionally expressive faces: Quality, quantity, time course and gender differences. Psychiatry Research. 2001;102:109–124. doi: 10.1016/s0165-1781(01)00225-6.

- Williams MA, Morris AP, McGlone F, Abbott DF, Mattingley JB. Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. Journal of Neuroscience. 2004;24:2898–2904. doi: 10.1523/JNEUROSCI.4977-03.2004.

- Winston JS, O'Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Young AW, Perrett DI, Calder AJ, Sprengelmeyer R, Ekman P. Facial expressions of emotion: Stimuli and tests (FEEST). Thames Valley Test Company; Bury St. Edmunds: 2002.