Abstract

We describe the development, current features, and some directions for future development of the Amber package of computer programs. This package evolved from a program that was constructed in the late 1970s to do Assisted Model Building with Energy Refinement, and now contains a group of programs embodying a number of powerful tools of modern computational chemistry, focused on molecular dynamics and free energy calculations of proteins, nucleic acids, and carbohydrates.

Keywords: Amber, biomolecular simulation programs

Introduction

Molecular dynamics simulations of proteins, which began about 25 years ago, are by now widely used as tools to investigate structure and dynamics under a variety of conditions; these range from studies of ligand binding and enzyme reaction mechanisms to problems of denaturation and protein refolding to analysis of experimental data and refinement of structures. “Amber” is the collective name for a suite of programs that allows users to carry out and analyze molecular dynamics simulations, particularly for proteins, nucleic acids and carbohydrates. None of the individual programs carries this name, but the various parts work reasonably well together, providing a powerful framework for many common calculations.1 The term amber sometimes also refers to the empirical force fields that are implemented here. It should be recognized, however, that the code and force fields are separate; several other computer packages have implemented the amber force fields, and other force fields can be used within the Amber programs.

A history of Amber’s early development was presented about 10 years ago;2 here we give an overview of more recent efforts. Our goal is to provide scientific background for the simulation techniques that are implemented in the Amber programs and to illustrate how certain common tasks are carried out. We cannot be exhaustive here (the Users’ Manual is 310 pages long!), but we do try to give a sense of the trade-offs that are inevitably involved in maintaining a large set of programs as simulation protocols, force fields, and computer power rapidly evolve. There are certain tasks that Amber tries to support well, and these are our focus here. We hope that this account will help potential users decide whether Amber might suit their needs, and help current users understand why things are implemented the way they are.

It should be clear that this is not a primer on biomolecular simulations, for there are many excellent books that cover this subject at various levels of detail.3-7 More information about Amber itself, including tutorials and a Users’ Manual, is available at http://amber.scripps.edu.

Overview of Amber Simulations

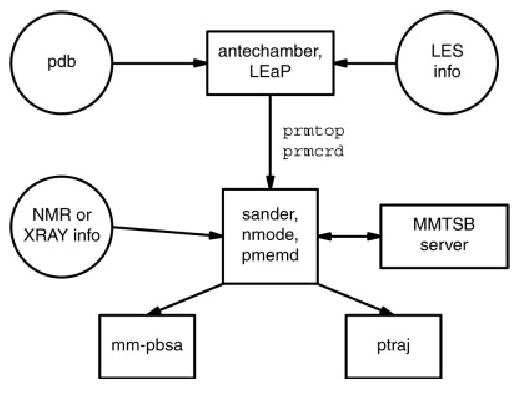

Amber is not a single program, but rather a collection of codes that are designed to work together. The principal flow of information is shown in Figure 1. There are three main steps, shown top to bottom in the figure: system preparation, simulation, and trajectory analysis. Encoding these operations in separate programs has some important advantages. First, it allows individual pieces to be upgraded or replaced with minimal impact on other parts of the program suite; this has happened several times in Amber’s history. Second, it allows different programs to be written with different coding practices: LEaP is written in C using X-window libraries, ptraj and antechamber are text-based C codes, mm-pbsa is implemented in Perl, and the main simulation programs are coded in Fortran 90. Third, this separation often eases porting to new computing platforms: only the principal simulation codes (sander and pmemd) need to be coded for parallel operation or need to know about optimized (perhaps vendor-supplied) libraries. Typically, the preparation and analysis programs are run on local machines on a user’s desktop, whereas time-consuming simulation tasks are sent to a batch system on a remote machine; having stable and well-defined file formats for these interfaces facilitates this mode of operation. Finally, the code separation shown in Figure 1 facilitates interaction with programs written by others. For example, the NAMD simulation program8 can take advantage of Amber tools and force fields by virtue of knowing how to interpret the information in Amber’s prmtop files; similarly, the VMD graphics tools9 can read our trajectory format to prepare animations. The toolkit from the Multiscale Modeling Tools for Structural Biology (MMTSB) project10 can be used to control many aspects of Amber simulations (see Fig. 1). Other users have written tools to improve upon LEaP’s preparation interface, sometimes using a portion of the LEaP code and sometimes bypassing it entirely.

Figure 1.

Information flow in the Amber program suite.

Of course, there are also disadvantages in the code fragmentation implied by Figure 1. There is generally no consistent user interface for the various components, which makes the suite more difficult to learn. Furthermore, there is no (easy) way for code in one section to modify the results of another section. For example, atom types or charges (which are established in the preparation phase) cannot be modified as the simulation proceeds; similarly, the simulation cannot decide which trajectory data to archive based on the sort of analysis to be done, because it has no information about that analysis. Despite these limitations, breaking the code into distinct pieces has generally served the user and developer communities well.

Preparation Programs

The main preparation programs are antechamber (which assembles force fields for residues or organic molecules that are not part of the standard libraries) and LEaP (which constructs biopolymers from the component residues, solvates the system, and prepares lists of force field terms and their associated parameters). The result of this preparation phase is contained in two text files: a coordinate (prmcrd) file that contains just the Cartesian coordinates of all atoms in the system, and a parameter-topology (prmtop) file that contains all other information needed to compute energies and forces; this includes atom names and masses, force field parameters, lists of bonds, angles, and dihedrals, and additional bookkeeping information. Since version 7, the prmtop file has been written in an extensible format that allows new features or user-supplied information to be included if required.
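Because the prmtop file is plain text organized into %FLAG sections, each followed by a Fortran-style %FORMAT line, other programs can read it without using Amber's own code. The sketch below is a minimal, hypothetical reader (parse_prmtop_sections is not part of Amber) that assumes this %FLAG/%FORMAT layout and returns raw field strings, leaving type conversion and the meaning of each section to the caller. The sample text is a hand-made fragment, not a complete prmtop.

```python
import re

def parse_prmtop_sections(text):
    """Split %FLAG/%FORMAT-style prmtop text into {FLAG: [field strings]} (hypothetical helper)."""
    sections = {}
    flag = None
    width = None
    for line in text.splitlines():
        if line.startswith(("%VERSION", "%COMMENT")):
            continue  # metadata lines carry no data fields
        if line.startswith("%FLAG"):
            flag = line.split()[1]
            sections[flag] = []
            width = None
            continue
        if line.startswith("%FORMAT"):
            # e.g. %FORMAT(20a4) -> width 4; %FORMAT(5E16.8) -> width 16
            match = re.search(r"\((\d+)([aAiIeEfF])(\d+)", line)
            width = int(match.group(3))
            continue
        if flag is None or width is None:
            continue
        # Data lines are fixed-width, so slice them instead of splitting on whitespace.
        for start in range(0, len(line), width):
            field = line[start:start + width].strip()
            if field:
                sections[flag].append(field)
    return sections

sample = """%VERSION  VERSION_STAMP = V0001.000
%FLAG TITLE
%FORMAT(20a4)
ALA
%FLAG ATOM_NAME
%FORMAT(20a4)
N   H1  CA
%FLAG MASS
%FORMAT(5E16.8)
  1.40100000E+01  1.00800000E+00
"""
print(parse_prmtop_sections(sample))
```

Slicing by the declared width matters for character fields such as atom names, which are packed four characters at a time and are not guaranteed to be separated by spaces.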

LEaP incorporates a fairly primitive X-window graphics interface that allows for visual checking of results and for some interactive structure manipulation. Most users, however, find other programs better suited to inspection of PDB files and construction of initial coordinates, and primarily use LEaP in a text mode (tLEaP) to assemble the system from a “clean” PDB-format file that contains acceptable starting coordinates. The nucleic acid builder (NAB) program integrates well with Amber to construct initial models for nucleic acids,11 and the Amber/GLYCAM configurator tool (http://glycam.ccrc.uga.edu/Amber) serves a similar purpose for carbohydrates. Tools for manipulating protein structures (e.g., for constructing homology models) are widespread, and the resulting PDB-format files can generally be processed by LEaP with little or no modification.

Simulation Programs

The main molecular dynamics program is called sander; as with “Amber,” the original acronym is no longer very descriptive. This code is written in Fortran 90, and uses the Fortran namelist syntax to read user-defined parameters as label-value pairs. As one might imagine, there are many possible options, and about 150 possible input variables. Of these, only 32 (identified in boldface in the Users’ Manual) generally need to be changed for most simulations. As much as possible, the default options have been chosen to give good quality simulations.
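Because sander reads its options as a Fortran namelist, an input file is essentially a group of label = value pairs between a group header (such as &cntrl) and a closing slash. The sketch below generates such a block from a Python dictionary. The helper format_namelist is hypothetical; imin, nstlim, dt, ntc, ntf, cut, and temp0 are sander input variables, but the values shown are placeholders, not recommendations, so consult the Users' Manual for their meanings and defaults.

```python
def format_namelist(group, params):
    """Render a Fortran namelist group (&group ... /) from a dict (hypothetical helper)."""
    def fmt(value):
        if isinstance(value, bool):           # check bool before int: bool is an int subclass
            return ".true." if value else ".false."
        if isinstance(value, str):
            return "'" + value + "'"
        return repr(value)                    # ints and floats in Python literal form
    lines = [" &" + group]
    for name, value in params.items():
        lines.append("  " + name + " = " + fmt(value) + ",")
    lines.append(" /")
    return "\n".join(lines)

# Placeholder values only; they illustrate the syntax, not a validated protocol.
md_input = format_namelist("cntrl", {
    "imin": 0, "nstlim": 5000, "dt": 0.002,
    "ntc": 2, "ntf": 2, "cut": 8.0, "temp0": 300.0,
})
print(md_input)
```

Generating inputs programmatically like this is one way to keep the handful of commonly changed variables explicit while leaving the rest at their defaults.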

Sander is a parallel program, using the MPI programming interface to communicate among processors. It uses a replicated data structure, in which each processor “owns” certain atoms, but where all processors know the coordinates of all atoms. At each step, processors compute a portion of the potential energy and corresponding gradients. A binary tree global communication then sums the force vector, so that each processor gets the full force vector components for its “owned” atoms. The processors then perform a molecular dynamics update step for the “owned” atoms, and use a second binary tree to communicate the updated positions to all processors, in preparation for the next molecular dynamics step. Details of this procedure have been given elsewhere.2,12
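The replicated-data cycle just described (partial forces on every processor, a tree-structured sum, local updates of owned atoms, then redistribution of all coordinates) can be mimicked serially in a few lines. This is a toy sketch, not sander's MPI code: "processors" are simply list entries, the pairwise tree reduction stands in for MPI communication, and the velocity-then-position update is an illustrative assumption rather than Amber's integrator.

```python
def tree_allreduce(partial_forces):
    """Sum per-rank force lists pairwise in a binary tree, then give every rank the total."""
    level = [list(f) for f in partial_forces]
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            if i + 1 < len(level):
                next_level.append([a + b for a, b in zip(level[i], level[i + 1])])
            else:
                next_level.append(level[i])  # odd rank out is carried to the next round
        level = next_level
    return [list(level[0]) for _ in partial_forces]

def replicated_data_step(positions, velocities, partial_forces, owners, dt, mass=1.0):
    """Toy 1D step: reduce forces, let each rank update only its owned atoms."""
    forces = tree_allreduce(partial_forces)
    new_pos, new_vel = list(positions), list(velocities)
    for rank, owned in enumerate(owners):
        for atom in owned:
            new_vel[atom] = velocities[atom] + dt * forces[rank][atom] / mass
            new_pos[atom] = positions[atom] + dt * new_vel[atom]
    # In the real scheme a second tree broadcast would now give every rank all of new_pos.
    return new_pos, new_vel

pos, vel = replicated_data_step([0.0, 0.0], [0.0, 0.0],
                                [[1.0, 0.0], [0.0, 2.0]], [[0], [1]], dt=0.5)
print(pos, vel)
```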

Because all processors know the positions of all atoms, this model provides a convenient programming environment, in which the division of force-field tasks among the processors can be made in a variety of ways. The main problem is that the communication required at each step stays roughly constant, rather than decreasing, as the number of processors grows, which inhibits parallel scaling. In practice, this communication overhead means that typical explicit solvent molecular dynamics simulations do not scale well beyond about eight processors on a typical cluster with gigabit ethernet, or beyond 16–32 processors on machines with more efficient (and expensive) interconnection hardware. Implicit solvent simulations, which have many fewer forces and coordinates to communicate, scale significantly better. For these relatively small numbers of processors, load imbalance and serial portions of the code are not limiting factors, although more work would be needed for larger processor counts.
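A crude cost model makes the scaling argument concrete: if the force computation W divides evenly among P processors but the per-step communication cost C does not shrink, the speedup W/(W/P + C) can never exceed W/C. The compute and communication times below are hypothetical and are not Amber benchmark numbers.

```python
def replicated_data_speedup(nprocs, compute_time, comm_time):
    """Speedup when compute divides across ranks but per-step communication does not shrink."""
    if nprocs == 1:
        return 1.0  # a serial run needs no communication
    return compute_time / (compute_time / nprocs + comm_time)

# Hypothetical per-step costs: 1.0 time unit of compute, 0.08 of communication.
for p in (1, 2, 4, 8, 16, 32):
    s = replicated_data_speedup(p, 1.0, 0.08)
    print(f"{p:3d} procs: speedup {s:5.2f}, efficiency {s / p:5.2f}")
```

With these assumed costs, going from 8 to 32 processors less than doubles the speedup, which mirrors the qualitative behavior described above.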

To improve performance, Bob Duke has prepared an extensively revised version of sander, called pmemd, which communicates to each processor only the coordinate information necessary for computing the pieces of the potential energy assigned to it. Many other optimizations were also made to improve single-processor performance. This code does not support all of the options found in sander, but has a significant performance advantage for the most commonly used simulation options. Some pmemd performance numbers may be found at http://amber.scripps.edu/amber8.bench2.html. A similar communications strategy is being added to development versions of sander by Mike Crowley.
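The idea of sending each processor only the coordinates it needs can be illustrated by listing the non-owned atoms that lie within the nonbonded cutoff of a processor's own atoms. This is a generic, hypothetical sketch (non-periodic, brute-force distance checks), not pmemd's actual decomposition algorithm.

```python
import math

def atoms_needed(positions, owned, cutoff):
    """Indices of non-owned atoms within `cutoff` of any owned atom (non-periodic, brute force)."""
    owned_set = set(owned)
    needed = set()
    for j, pj in enumerate(positions):
        if j in owned_set:
            continue
        if any(math.dist(positions[i], pj) <= cutoff for i in owned):
            needed.add(j)
    return sorted(needed)

positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
print(atoms_needed(positions, owned=[0], cutoff=8.0))
```

Here the rank owning atom 0 needs two neighbors' coordinates rather than all three other atoms; in large solvated systems the savings relative to a fully replicated scheme grow with system size.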

The normal mode analysis code, nmode, is by now quite old, but is still useful for certain types of analysis. It is limited to nonperiodic simulations and, as a practical matter, to systems with fewer than 3000 atoms. Its primary use now is to compute estimates of thermodynamic quantities (in particular, vibrational entropies) for configurations extracted from molecular dynamics simulations, as in the mm-pbsa scheme discussed below. Its original purpose, to compute vibrational properties of small molecules used in force-field parameterization, is still relevant as well. The code supports the Amber polarizable potentials, but not (yet) the generalized Born model; second derivatives of GB energies are available in the NAB program (see http://www.scripps.edu/case/nab.html), which has a GB parameterization identical to that of Amber.
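For thermodynamic analysis, vibrational entropy is commonly obtained from harmonic frequencies with the quantum harmonic-oscillator expression S = R Σ [x/(e^x − 1) − ln(1 − e^(−x))], where x = hcν̃/(kT) and ν̃ is a wavenumber. The sketch below applies that textbook formula to a list of wavenumbers; it is not nmode's code, and the frequencies used are placeholders.

```python
import math

R_KCAL = 8.314462618 / 4184.0   # gas constant in kcal/(mol K)
C2_CM_K = 1.438776877           # second radiation constant hc/k in cm K

def vibrational_entropy(wavenumbers_cm, temperature=298.15):
    """Harmonic-oscillator vibrational entropy (kcal/mol/K) from frequencies in cm^-1."""
    s = 0.0
    for nu in wavenumbers_cm:
        if nu <= 0.0:
            continue  # skip zero (translation/rotation) and imaginary modes
        x = C2_CM_K * nu / temperature
        # x/(e^x - 1) - ln(1 - e^-x), written with expm1 for accuracy at small x
        s += x / math.expm1(x) - math.log(-math.expm1(-x))
    return R_KCAL * s

# Placeholder frequencies; low-frequency modes dominate the entropy.
print(vibrational_entropy([50.0, 500.0, 1600.0]))
```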

Analysis Programs

The task of analyzing MD trajectories faces two main obstacles. First, trajectory files may become very large, and the total time course may need to be assembled from pieces that were computed in different runs of the simulation program. Second, the types of analyses that can be carried out are quite varied, and change with time, as simulation science progresses and as new ideas are developed. The ptraj analysis program (see http://www.chpc.utah.edu/~cheatham/software.html) was designed with these obstacles in mind, although it provides only a partial resolution to them. It can process both Amber and CHARMM trajectories, parsing their respective prmtop or psf files to obtain atom and residue names and connectivity, and can assemble trajectories from partial ones, often stripping out parts (such as solvent) that might not be needed for a particular analysis. After this, a variety of common analysis tasks may be carried out; some of these are illustrated below. The command syntax is designed to allow users to add new tasks, but this requires knowledge of C programming and is not as straightforward as it might be. It does, however, provide a powerful framework for adding new commands, and the ptraj repertoire continues to grow; recent additions include a variety of clustering algorithms and variants of principal component analysis.
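The stripping and concatenation steps can be pictured with plain lists: frames from several partial runs are joined in order, and atoms belonging to solvent residues are dropped before analysis. The sketch assumes frames are lists of (x, y, z) tuples and that water residues are named WAT; both functions are hypothetical and do not reproduce ptraj's command syntax. A no-fit RMSD is included as an example of a per-frame analysis.

```python
import math

def strip_and_join(pieces, residue_names, strip=("WAT",)):
    """Concatenate trajectory pieces (lists of frames), dropping atoms whose residue is in `strip`."""
    keep = [i for i, res in enumerate(residue_names) if res not in strip]
    joined = []
    for piece in pieces:
        for frame in piece:
            joined.append([frame[i] for i in keep])
    return joined

def rmsd_no_fit(a, b):
    """Root-mean-square deviation between two frames without superposition."""
    return math.sqrt(sum(math.dist(p, q) ** 2 for p, q in zip(a, b)) / len(a))

residues = ["ALA", "ALA", "WAT", "WAT"]
f0 = [(0, 0, 0), (1, 0, 0), (5, 5, 5), (6, 6, 6)]
f1 = [(0, 0, 1), (1, 0, 1), (5, 5, 6), (6, 6, 7)]
traj = strip_and_join([[f0], [f1]], residues)   # two "runs" of one frame each
print(traj, rmsd_no_fit(traj[0], traj[1]))
```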

Our eventual goal is that ptraj will support analyses based on energies as well as structures, but this is not the case in the current codes. Rather, a variety of programs are used to estimate energies and entropies from the snapshots contained within trajectory files. The calculations are organized and spawned by a Perl script, mm-pbsa, which also collects statistics and formats the output in tabular form. As its name suggests, the analysis is primarily based on continuum solvation models.13-15
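In the simplest (single-trajectory) form of this kind of post-processing, each snapshot contributes G_complex − G_receptor − G_ligand, and the binding estimate is the average over snapshots. The sketch below computes that average and a standard error. It assumes the per-snapshot free energies have already been computed (for example, by continuum solvation calculations) and that snapshots are uncorrelated, which real trajectories often violate; the function is hypothetical and not part of mm-pbsa.

```python
import statistics

def binding_estimate(g_complex, g_receptor, g_ligand):
    """Mean and standard error of G_complex - G_receptor - G_ligand over snapshots."""
    deltas = [c - r - l for c, r, l in zip(g_complex, g_receptor, g_ligand)]
    mean = statistics.fmean(deltas)
    # Standard error assumes independent snapshots; correlated data would need block averaging.
    sem = statistics.stdev(deltas) / len(deltas) ** 0.5 if len(deltas) > 1 else float("nan")
    return mean, sem

# Hypothetical per-snapshot values in kcal/mol.
print(binding_estimate([-100.0, -102.0], [-60.0, -61.0], [-30.0, -30.0]))
```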

Overall Strengths and Weaknesses

An overall view of our perception of Amber’s strengths and weaknesses is given in Table 1. In brief, the suite is good at carrying out and analyzing “standard” MD simulations for proteins, nucleic acids, and carbohydrates, using either explicit solvent with periodic boundary conditions or an implicit solvent model. Our aim is to make it easy for users to carry out good-quality simulations, and to keep the codes up to date as standards and expectations evolve. However, some desirable options are missing, and changing the way in which the calculations are performed can require the user to understand and modify a core code that has varying standards of readability. As we prepare new versions of the code, our goal is to ameliorate some of the weaknesses, and to continue to incorporate new simulation techniques, especially those that accelerate convergence of conformational sampling, or which allow us to use force fields that have a greater underlying physical realism.

Table 1.

Strong and Weak Points of the Amber Biomolecular Simulation Programs.

| Strengths | Weaknesses |
|---|---|
| Amber implements efficient simulations with periodic boundary conditions, using the PME method for electrostatic interactions and a continuum model for long-range van der Waals interactions. | One cannot do good simulations of just part of a system, such as the active site of an enzyme: stochastic boundary conditions for the water-continuum interface are missing, as are efficient means for handling long-range electrostatics and a reaction field. |
| Non-periodic simulations are supported, using a generalized Born or numerical Poisson-Boltzmann implicit solvent model. | The component programs lack a consistent user interface; there is only limited scripting capability to support types of calculations not anticipated by the authors. |
| Explicit support is provided for carbohydrate simulations, as well as for proteins, nucleic acids and small organic molecules. | There is limited support for force fields other than those developed by Amber contributors. |
| Free-energy calculations use thermodynamic integration or umbrella sampling techniques, and are not limited to pairwise decomposable potentials. | Missing features include: “dual topology” free energy calculations, reaction-path analysis, Monte Carlo sampling, torsion angle dynamics, and interactive steered molecular dynamics. |
| Convergence acceleration can use locally-enhanced sampling or replica exchange techniques. | QM/MM simulations are limited to semiempirical Hamiltonians, and cannot currently be combined with the PME or generalized Born solvation options. |
| There is extensive support for trajectory analysis and energetic post-processing. | The codes were written by many authors over many years, and much of it is difficult to understand or modify. |
| Restraints can be very flexible, and can be based on many types of NMR data. | Efficient parallel scaling beyond about a dozen processors may require access to special hardware or the adoption of an implicit solvent model. |
| There is a large and active user community, plus tutorials and a User’s Manual to guide new users. The source code is portable and is available for inspection and modification. | Users are required to compile the programs themselves, and it can be tedious to assemble the needed compilers and libraries. |

No program can hope to do all tasks well, and subjective choices usually need to be made about which capabilities are most needed, and which ones can be supported by the developers. These choices may be expected to change with time, as computers become more powerful, and as algorithms evolve and experience is gained about which sorts of calculations provide the greatest amount of physical realism. Over the years, Amber has discarded a number of features that were once viewed as important parts of its feature set. Some comments about what we no longer support (or support well) may help users better know what to expect from Amber. Some of the features that are now missing are these:

“Vacuum” simulations: Amber is strongly aimed at simulations of biomolecules in water. Although it is still possible to use the codes in situations where there is no solvent environment, we no longer implement this capability in a manner that is as efficient or robust as in earlier versions of the code. The generalized Born (or numerical Poisson–Boltzmann) continuum solvent models allow for more realistic (albeit more expensive) simulations for cases where an explicit treatment of solvation is not appropriate. This lack of full support for solvent-free simulations can be a genuine limitation for cases where coarse-grained, statistical, or other “effective” potentials are appropriate, and future versions of Amber may reintroduce some of this older functionality.

Simulations with nonbonded cutoffs: simulations that ignore long-range nonbonded interactions (particularly for electrostatics) often exhibit biased behavior and artifacts that are difficult to predict or correct for. For many types of simulations, it is feasible to include all long-range electrostatics by means of a particle-mesh Ewald (PME) scheme (discussed below), with a simulation cost that is comparable to (or even less than) that of schemes that include cutoffs. Given this, Amber neither needs nor implements cutoff-based switching or smoothing functions. In the generalized Born model, electrostatic effects are much less long ranged (especially when a nonzero concentration of added salt is modeled), and Amber can use cutoffs for this situation, although recommended cutoffs are still fairly large (ca. 15 Å).
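The reason an Ewald-type method avoids cutoff artifacts can be seen from the splitting of the Coulomb kernel, 1/r = erfc(βr)/r + erf(βr)/r. The first term decays quickly and is summed directly inside a cutoff; the second is smooth and is handled in reciprocal space (on a mesh, in PME). The sketch below chooses β so that erfc(β r_c) equals a tolerance and compares the screened and bare kernels at the cutoff; the 8 Å cutoff and 1e-5 tolerance are assumptions for illustration.

```python
import math

def ewald_beta(cutoff, tol=1e-5):
    """Find beta with erfc(beta * cutoff) == tol by bisection (erfc decreases monotonically)."""
    lo, hi = 0.0, 10.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if math.erfc(mid * cutoff) > tol:
            lo = mid   # kernel still too large at the cutoff: increase beta
        else:
            hi = mid
    return 0.5 * (lo + hi)

cutoff = 8.0                                   # Angstrom; illustrative value
beta = ewald_beta(cutoff)
bare = 1.0 / cutoff                            # plain Coulomb kernel at the cutoff
screened = math.erfc(beta * cutoff) / cutoff   # direct-space Ewald kernel at the cutoff
print(beta, bare, screened)
```

Truncating the screened term at the cutoff discards only a tolerance-sized piece, while the long-range remainder is still included exactly by the reciprocal-space sum.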

Free energy simulations for pairwise-decomposable potentials: classical molecular mechanics potentials allow the energy to be written as sums of terms that primarily depend upon two atoms at a time. Free energy calculations that involve changes to only a small part of the system (such as a single amino acid side chain) can exploit this feature by calculating only the small number of terms that change when accumulating free energy differences. This efficiency comes at the expense of some complex bookkeeping, and in any event breaks down when the energy is not written in this pairwise form; the latter, however, is the case for GB or PB theories, for polarizable potentials, and for the “reciprocal space” portion of PME simulations. Because these more modern techniques are the ones we recommend, we have dropped development of the gibbs module that implemented free energy calculations for pairwise potentials. With perhaps less justification, we have also dropped support for free energy perturbation (FEP) calculations, in favor of thermodynamic integration (TI) techniques that are (in most cases) more robust and efficient, and are certainly easier to program and debug.
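Thermodynamic integration estimates ΔG as the integral over λ of the ensemble average ⟨∂U/∂λ⟩, where each average comes from a simulation at fixed λ. Because only ∂U/∂λ is needed, nothing requires the energy to be pairwise decomposable. The sketch below integrates window averages with the trapezoid rule; the quadrature choice and the window values are assumptions, and other schemes (such as Gaussian quadrature) may be preferred in practice.

```python
def ti_free_energy(lambdas, mean_dudl):
    """Trapezoidal estimate of dG = integral over lambda of <dU/dlambda> from window averages."""
    pairs = sorted(zip(lambdas, mean_dudl))   # order windows by lambda
    dg = 0.0
    for (l0, d0), (l1, d1) in zip(pairs, pairs[1:]):
        dg += 0.5 * (d0 + d1) * (l1 - l0)
    return dg

# Hypothetical window averages (kcal/mol) at lambda = 0, 0.5, 1.
print(ti_free_energy([0.0, 0.5, 1.0], [1.0, 2.0, 3.0]))
```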

Force Fields for Biomolecular Simulations

It has long been recognized that the accuracy of the force field model is fundamental to successful application of computational methods. The Amber-related force fields are among the most widely used for biomolecular simulation; the original 1984 article16 is currently the 10th most-cited in the history of the Journal of the American Chemical Society, and the 1995 revision17 has been that journal’s most-cited article published in the last decade. But this widespread use also means that some significant deficiencies are known, especially in terms of the relative stabilities of α-helical vs. extended conformations of peptides.18-20 There are good recent reviews of the Amber force fields for proteins21 and for nucleic acids.22 These discuss some of the trade-offs that were made in constructing the force fields, and provide comparisons to other potentials in common use. For this reason, we will not summarize this information here. Rather, we will focus on two more recent aspects of the Amber force fields: applications to carbohydrates, and the facilities for defining a model in which part of the system is treated as a quantum subsystem, embedded in a (generally) larger environment described by molecular mechanics force fields.

Amber also supports a more generic force field for organic molecules, called GAFF (the general Amber force field).23 The antechamber program takes a three-dimensional structure as input, and automatically assigns charges, atom types, and force field parameters. Given the wide diversity of functionality in organic molecules, it is not surprising that the resulting descriptions are sometimes not optimal, and “hand-built” force fields are often required for detailed studies. Nevertheless, the tables that drive the antechamber program continue to be improved as we gain experience with their weak points. The primary application so far of the GAFF potentials has been to the analysis of complexes of small molecules with proteins or nucleic acids, especially for the automated analysis of diverse libraries of such ligands. The QM/MM facility described below can also be used to describe fairly arbitrary organic molecules in the presence of a receptor described in terms of molecular mechanics potentials.

It is worth noting that the next generation of force fields for proteins and nucleic acids is likely to be significantly more complex than the ones we use today. These coming implementations will certainly have some representation of electronic polarizability,24-26 and are also likely to include fixed atomic multipoles or off-center charges to provide more realistic descriptions of the electron density. There may also be more complex descriptions of internal distortions (especially for the peptide group27) and terms beyond Lennard–Jones 6–12 interactions to represent exchange–repulsion and dispersion. A key goal for future development of Amber is to support efficient and parallel simulations that track these new developments.

Applications to Carbohydrates

In eukaryotes, the majority of proteins are glycosylated, that is, they have carbohydrates covalently linked to their surfaces, either through the amido nitrogen atom of asparagine side chains (known as N-linked glycosylation) or through the hydroxyl oxygen atoms of serine or threonine side chains (O-linked). These modifications serve a multitude of roles, spanning the purely structural, such as protecting the protein from proteolytic degradation, to the functional, such as enabling cell adhesion through carbohydrate-protein binding. Computational methods can assist in the interpretation of otherwise insufficient experimental data, and can provide models for the structure of oligosaccharides and insight into the mechanisms of carbohydrate recognition.

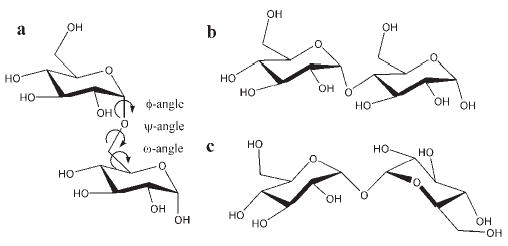

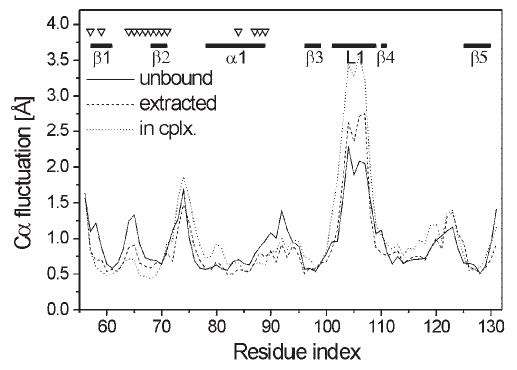

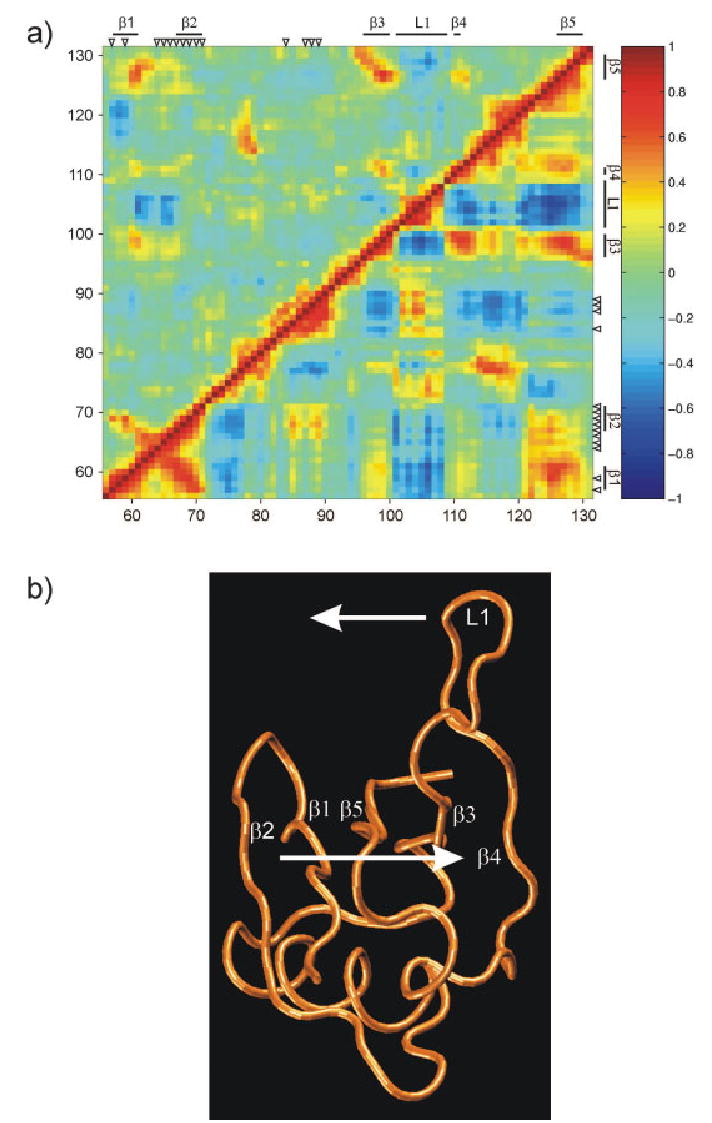

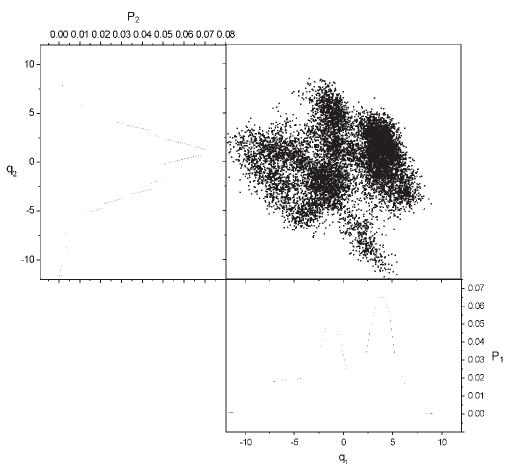

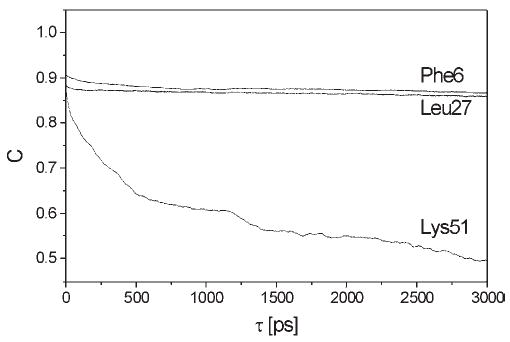

Oligosaccharides frequently populate multiple conformational families arising from rotation about the glycosidic linkages (see Fig. 2). As a result, they do not generally exhibit well-defined tertiary structures. A simulation time scale that may be adequate for establishing the performance of a force field for folded proteins cannot be expected to be appropriate for oligosaccharides, whose conformational lifetimes are on the order of 5–10 ns. In this regard, oligo- and polysaccharides behave more like peptides, and force field validation must be based on properties computed from structural ensembles.

Figure 2.

Illustration of 3 of the 10 possible disaccharides generated from linking two α-D-glucopyranosyl residues (α-D-glcp): (a) α-D-glcp-(1→6)-α-D-glcp (iso-maltose), (b) α-D-glcp-(1→4)-α-D-glcp (maltose), and (c) α-D-glcp-(1→1)-α-D-glcp (α,α-trehalose). Conformation-determining glycosidic torsion angles are indicated in (a).

The GLYCAM force field introduced to Amber all of the features that were necessary for carbohydrate conformational simulations, with the key focal points being treatment of glycosidic torsion angles and nonbonded interactions.28 Many valence terms, with the exception of those directly associated with the anomeric carbon atom [notably R(C1–O5), R(C1–O1) and θ(O5–C1–O1)], were taken from the parm94 parameter set, as were all van der Waals terms. The force constants for the newly introduced valence terms were derived by fitting to quantum data at the HF/6-31G* level.

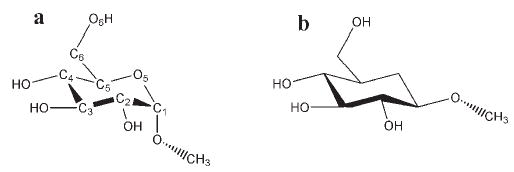

To address the electrostatic properties unique to each monosaccharide, as fully as possible in a nonpolarizable framework, all releases of GLYCAM have employed residue-specific partial atomic charges. In versions of GLYCAM up to and including GLYCAM04, these charges were computed by fitting to the quantum mechanical electrostatic potential computed at the HF/6-31G* level for the methyl glycoside of each residue in each anomeric configuration. For example, the partial charges on the atoms in the glucopyranose methyl α-D-glcp are distinct from those on the atoms in methyl β-D-glcp (see Fig. 3).

Figure 3.

Numbering and anomeric configuration in (a) methyl α- and (b) methyl β-D-glucopyranoside. In GLYCAM, anomeric carbon atom C1 is atom type AC in (a) and atom type EC in (b); in GLYCAM04 both are atom type CG.

Similarly, the structures and partial charges in glucopyranose are distinct from those in mannopyranose (manp), galactopyranose (galp), and so on. Because rotations of the hydroxyl groups can influence the electrostatic properties, beginning in 2000 (ref. 29) the partial charges were no longer computed from the neutron diffraction structures of the methyl glucosides, but from an ensemble of 100 configurations extracted from solvated MD simulations of the glycosides. The charges were computed at the same quantum level as before, consistent with the philosophy of AMBER, but were based on the HF/6-31G* optimized geometries of the ensemble of conformations. It should be noted that during the geometry optimizations the torsion angles of the exocyclic groups were restrained in their solvation-preferred orientations. Although the charges were derived at the HF/6-31G* level, the fitting was performed with a larger RESP restraint weight (0.01) than that employed in fitting the charges for the amino acids in AMBER (0.001). Simulations of the crystal lattices of monosaccharides led to the inescapable conclusion that the HF/6-31G* ESP charges were too polar and required the larger damping afforded by the higher restraint weight.30 Although this damping affects the strengths of direct hydrogen bonds, it has a negligible effect on molecular dipole moments and the ensuing long-range electrostatics.
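The effect of the RESP restraint weight can be seen in a one-charge toy problem. RESP adds a hyperbolic penalty a·Σ(√(q² + b²) − b), with b = 0.1, to the electrostatic-potential misfit and fits all charges together under total-charge constraints. In this sketch the misfit for a single charge is replaced by a hypothetical quadratic k(q − q_ESP)², so the numbers are illustrative only; the point is that a tenfold larger weight a pulls the fitted charge further toward zero.

```python
import math

def restrained_charge(q_esp, k, a, b=0.1):
    """Minimize k*(q - q_esp)**2 + a*(sqrt(q**2 + b**2) - b) for one charge (toy RESP-style fit)."""
    sign = 1.0 if q_esp >= 0 else -1.0
    target = abs(q_esp)
    lo, hi = 0.0, target   # the restraint pulls toward zero, so the minimum lies in [0, target]
    for _ in range(200):
        q = 0.5 * (lo + hi)
        # Derivative of the (convex) objective; bisection finds where it changes sign.
        grad = 2.0 * k * (q - target) + a * q / math.sqrt(q * q + b * b)
        if grad < 0.0:
            lo = q
        else:
            hi = q
    return sign * 0.5 * (lo + hi)

# Hypothetical unrestrained charge and misfit curvature.
q_weak = restrained_charge(-0.7, k=0.05, a=0.001)
q_strong = restrained_charge(-0.7, k=0.05, a=0.01)
print(q_weak, q_strong)
```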

As in the case of peptides, the interresidue rotational properties (the positions of minima, their relative energies, and interconversion barriers) have a direct influence on the 3D structure and dynamics of an oligosaccharide. In the majority of carbohydrates these rotational properties are associated with only three atomic linkages, emanating from the anomeric carbon atom (Fig. 2).

The first of these is known as the φ-angle, and has been the subject of extensive theoretical and experimental examination because of its unique rotameric properties. In pyranosides, the φ-angle refers to the orientation of the exocyclic O5–C1–O1–Cx sequence. The hyperconjugation associated with the O–C–O sequence imparts a preference for this linkage to adopt gauche orientations, rather than populating all three potential rotamers. This preference is known as the exo-anomeric effect.31 The population of the gauche rotamers differs in α- and β-pyranosides because of further steric effects introduced by the pyran ring system. For this reason, in GLYCAM the rotational properties of the φ-angle were incorporated with a separate torsion term for α- and β-pyranosides. Each term was individually derived by fitting to the rotational energy curves for 2-methoxytetrahydropyran (2-axial serving as a model for α-pyranosides and 2-equatorial for β-pyranosides). All torsion terms associated with the φ-angle, other than those for the O5–C1–O1–Cx sequence, were set to zero. This approach necessitated the introduction of two new atom types for the anomeric carbon in pyranosides, one for use when the linkage was present in an equatorial configuration (atom type EC, generally corresponding to β-pyranosides) and one for axial (AC, generally corresponding to α-).
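Glycosidic torsions such as φ (O5–C1–O1–Cx) are ordinary dihedral angles computed from four atomic positions. A minimal sketch using the standard atan2 formulation follows; it uses the IUPAC sign convention (positive for clockwise rotation of the far bond when viewed along the central bond from its first atom), and the function name is hypothetical.

```python
import math

def dihedral(p0, p1, p2, p3):
    """Signed torsion angle in degrees for atoms p0-p1-p2-p3, e.g. O5-C1-O1-Cx for phi."""
    def sub(a, b):
        return [a[i] - b[i] for i in range(3)]
    def dot(a, b):
        return sum(a[i] * b[i] for i in range(3))
    def cross(a, b):
        return [a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0]]
    b0, b1, b2 = sub(p1, p0), sub(p2, p1), sub(p3, p2)
    # atan2(|b1| b0.(b1 x b2), (b0 x b1).(b1 x b2)) gives the angle with the correct sign.
    y = math.sqrt(dot(b1, b1)) * dot(b0, cross(b1, b2))
    x = dot(cross(b0, b1), cross(b1, b2))
    return math.degrees(math.atan2(y, x))

print(dihedral((1, 0, 0), (0, 0, 0), (0, 0, 1), (0, 1, 1)))
```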

The second important torsion angle, the ψ-angle, is associated with the C1–O1–Cx–Hx sequence and does not exhibit any profound stereoelectronic properties that are unique to carbohydrates. As such, it was treated with the parameters applicable to ether linkages of this type.

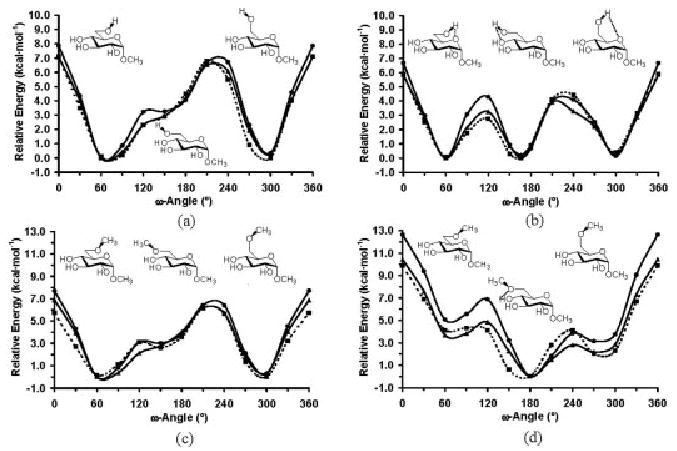

The most problematic carbohydrate-specific torsion term for hexopyranoses is that associated with the exocyclic rotation of the hydroxymethyl group. This O6–C6–C5–O5 linkage is known as the ω-angle, and plays a crucial role in defining the 3D structure of any oligosaccharide containing a 1→6 linkage. The tendency for the O–C–C–O sequence to preferentially adopt a gauche orientation has been associated with the more general gauche effect.32 In the gas phase it arises primarily from hydrogen bonding between the vicinal hydroxyl groups, although a small contribution from hyperconjugation is further stabilizing. In the condensed phase, its origin is more complex.33 In solution, in pyranosides in which both C6 and O4 are equatorial (most notably gluco- and mannopyranose), the O6–C6–C5–O5 torsion angle adopts almost exclusively one or the other of the two gauche orientations, known as gauche–gauche (gg) and gauche–trans (gt); these labels refer to the orientations of the O6–C6–C5–O5 and O6–C6–C5–C4 angles, respectively. Remarkably, when O4 is axial (as in galactopyranose) all three rotamers are populated, with a significant amount of the trans–gauche (tg) rotamer being observed.34 To address these issues, much computational effort has been focused on the O–C–C–O linkage.35-37 In gas-phase quantum calculations, the rotational energy curves agree even qualitatively with the experimental data only when intramolecular hydrogen bonding is disallowed (see Fig. 4).37 In a combined quantum and simulation study it was concluded that the gauche effect in carbohydrates arose solely from the attenuation of internal hydrogen bonding by interactions with explicit solvent.37
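Rotamer populations of the hydroxymethyl group can be tallied directly from the two torsions that define the labels, O6–C6–C5–O5 and O6–C6–C5–C4: each is classified as g (gauche) or t (trans), and the pair of letters gives gg, gt, or tg. The sketch assumes a ±120° boundary between gauche and trans and takes pairs of angles already measured from a trajectory; it is an illustration, not a published analysis protocol.

```python
from collections import Counter

def g_or_t(angle_deg):
    """Label a torsion 'g' (gauche) or 't' (trans), assuming a 120-degree boundary."""
    a = abs((angle_deg + 180.0) % 360.0 - 180.0)   # wrap to [0, 180]
    return "t" if a >= 120.0 else "g"

def rotamer(omega_o5, omega_c4):
    """First letter from O6-C6-C5-O5, second from O6-C6-C5-C4."""
    return g_or_t(omega_o5) + g_or_t(omega_c4)

def populations(frames):
    """Fraction of frames in each rotamer; frames are (O6-C6-C5-O5, O6-C6-C5-C4) pairs."""
    counts = Counter(rotamer(a, b) for a, b in frames)
    return {label: counts.get(label, 0) / len(frames) for label in ("gg", "gt", "tg")}

# Hypothetical angle pairs (degrees) from four snapshots.
print(populations([(-60.0, 60.0), (60.0, 180.0), (60.0, 180.0), (180.0, -60.0)]))
```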

Figure 4.

HF/6-31G(d), B3-LYP/6-31++G(2d,2p), and GLYCAM ω-angle rotational curves for methyl α-D-glucopyranoside, without (a) and with (b) internal hydrogen bonding; and methyl 6-O-methyl-α-D-glucopyranoside, without (c) and with (d) internal hydrogen bonding.

To parameterize this property into the GLYCAM force field, it was necessary to ensure that the quantum mechanical rotational properties of the O–C–C–O linkage, in both the presence and absence of internal hydrogen bonding, could be reproduced. This presented a more serious challenge than might first be expected, largely because of the difficulty in balancing the strengths of the internal nonbonded interactions among O6, O5, and O4. In pyranosides, the O6···O4 interaction is of the 1–5 type, while O6···O5 is of the 1–4 type. Yet, geometrically, the relevant interatomic distances are similar in each case, both in the presence and absence of internal hydrogen bonding. Thus, the AMBER practice of damping the magnitude of 1–4 nonbonded interactions relative to all others by a scale factor (between 0.5 and 0.83) introduced an artificial imbalance between the strengths of the O6···O4 and O6···O5 interactions in carbohydrates. Only when the GLYCAM parameters were derived in the absence of 1–4 scaling could quantitative agreement with the experimental solution rotamer populations for the ω-angle be achieved.37
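The imbalance caused by 1–4 scaling can be shown with two electrostatic contacts that differ only in topology. In the sketch below, O6···O5 (1–4) and O6···O4 (1–5) are given the same distance and, for clarity, the same hypothetical charges. Scaling the 1–4 term by 1/1.2 (the conventional AMBER electrostatic factor, the 0.83 end of the range quoted above) makes it about 17% weaker than the otherwise identical 1–5 contact. The van der Waals 1–4 factor is not modeled.

```python
COULOMB = 332.0522  # approximate conversion to kcal/mol for charges in e and distances in Angstrom

def coulomb(qi, qj, r, scale=1.0):
    """Point-charge electrostatic energy (kcal/mol), optionally scaled."""
    return scale * COULOMB * qi * qj / r

q_o6 = q_o5 = q_o4 = -0.5   # hypothetical, equal charges to isolate the scaling effect
r = 2.9                     # Angstrom; hypothetical, same distance for both contacts

e_15 = coulomb(q_o6, q_o4, r)               # O6...O4, 1-5 pair: unscaled
e_14 = coulomb(q_o6, q_o5, r, 1.0 / 1.2)    # O6...O5, 1-4 pair: scaled
print(e_15, e_14, e_14 / e_15)
```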

Although adequate for a great variety of carbohydrate systems, the use in GLYCAM of separate anomeric-carbon atom types for α- and β-glycosides meant that it was not possible to simulate processes, such as ring flipping, in which the substituents at the anomeric center change configuration. This is irrelevant to most pyranosides, which populate only one chair form in solution, but some (like the monosaccharide idopyranose, present in heparin) exist as an equilibrium between the ⁴C₁ and ¹C₄ chair forms. Further, many carbohydrate-processing enzymes are believed to distort the ⁴C₁ chair so as to lower the activation energy. To study these systems, it was necessary to derive a parameter set that employed the same atom type for the anomeric carbon in all pyranosides; this was the motivation behind the development of GLYCAM04. Because the separate anomeric carbon atom types had originally been introduced to facilitate parameterization of the rotational φ-angle energy curves, these parameters were again the focus of development. With a single atom type for C1, the quantum rotational curves could no longer be reproduced adequately with only one torsion term for the O5–C1–O1–Cx linkage; instead, torsion terms had to be introduced for each of the relevant linkages associated with the φ-angle, namely O5–C1–O1–Cx, C2–C1–O1–Cx, and H1–C1–O1–Cx. Unambiguous partitioning of the rotational energies into contributions from each of these sources required a detailed study of the underlying sequences in model structures. This undertaking ultimately led to a completely new set of valence and torsion terms, each fit to quantum data, now largely at the B3LYP/cc-pVTZ level. Partial atomic charges remain as computed earlier.
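With one C1 atom type, the φ rotational profile emerges as a sum of the three torsions that share the C1–O1 bond. The sketch below uses the Amber Fourier torsion form, (Vₙ/2)[1 + cos(nθ − γ)], and illustrates only that bookkeeping. All parameter values are hypothetical (they are not GLYCAM04 values), and the ±120° offsets assume ideal tetrahedral geometry; their signs would depend on the anomeric configuration.

```python
import math


def torsion_energy(angle_deg, terms):
    """Amber-style Fourier torsion: sum of (V_n/2) * [1 + cos(n*angle - gamma)]."""
    a = math.radians(angle_deg)
    return sum(0.5 * vn * (1.0 + math.cos(n * a - math.radians(gamma))) for vn, n, gamma in terms)


# Hypothetical (V_n in kcal/mol, periodicity n, phase gamma in degrees); NOT GLYCAM04 values.
TERMS = {
    "O5-C1-O1-Cx": [(1.0, 1, 0.0), (0.5, 2, 0.0), (0.3, 3, 0.0)],
    "C2-C1-O1-Cx": [(0.4, 3, 0.0)],
    "H1-C1-O1-Cx": [(0.3, 3, 0.0)],
}
# Assumed ideal-tetrahedral offsets of each torsion relative to phi (O5-C1-O1-Cx).
OFFSETS = {"O5-C1-O1-Cx": 0.0, "C2-C1-O1-Cx": 120.0, "H1-C1-O1-Cx": -120.0}


def phi_profile(phi_deg):
    """Total torsional energy along the phi rotation: a sum over all three linkages."""
    return sum(torsion_energy(phi_deg + OFFSETS[name], terms) for name, terms in TERMS.items())
```

Fitting such a sum to quantum rotational curves requires deciding how much of the profile each linkage carries, which is the partitioning problem described above.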
In the course of developing GLYCAM04, it was decided to remove all default torsion parameters and introduce quantum-derived torsion terms for all linkages found in carbohydrates. This paradigm shift has many advantages; most notably, it yields a transferable and generalizable force field that allows functional groups and chemical modifications to be introduced into a carbohydrate without developing a large number of new parameters. However, for a given linkage, the sum of the general torsion terms employed in GLYCAM04 may not be as precise as the explicit fitting employed in GLYCAM. Nevertheless, the accuracy of the GLYCAM04 rotational curves can be rationally tuned, and the parameters extended in ways that were all but impossible in GLYCAM. Whereas the GLYCAM parameters augmented the PARM94 AMBER parameters, the GLYCAM04 parameters are self-contained. To maintain orthogonality with the AMBER protein parameter sets, atom type CT was renamed CG in GLYCAM04. In principle, the GLYCAM04 parameters could be extended to generate a completely generalizable force field for proteins.

Currently under development, in both the GLYCAM and GLYCAM04 formats, are TIP5P-compatible parameters that include TIP5P-like lone pairs on the oxygen atoms, and polarizability parameters that employ quantum-derived atomic polarizabilities. An interactive tool (http://glycam.ccrc.uga.edu) has been introduced that greatly facilitates preparing the files needed to run AMBER MD simulations of proteins, glycoproteins, and oligosaccharides. Further integration of this facility into the Amber codes is planned, as is continued testing and improvement of the GLYCAM force fields.

The QM/MM Approach

Prior to AMBER 8, the QM/MM module was called Roar. This coupled an earlier version of the Amber energy minimization/MD module with Mopac 6.0. As part of our modernization efforts, we wanted to merge more functionality into the sander program, and we recognized that semiempirical technology had advanced well beyond what was available in Mopac 6.0, particularly in the area of linear-scaling approaches. Because the theory and application of the QM/MM approach38,39 have been extensively reviewed,40–42 we give only a brief description of our approach.

The combination of quantum mechanics and molecular mechanics is a natural approach for the study of enzyme reactions and protein–ligand interactions. The active site or binding site is treated with ab initio, density functional, or semiempirical methods, whereas the rest of the system is described by a molecular mechanics force field. In the current version of sander, one can use the MNDO, AM1, or PM3 semiempirical Hamiltonian for the quantum mechanical region. Interactions between the QM and MM regions include electrostatics (based on partial charges in the MM part) and Lennard–Jones terms, designed to mimic the exchange-repulsion terms that keep QM and MM atoms from overlapping.

Standard semiempirical molecular orbital methods are widely used in QM/MM applications because they provide fast and reliable QM calculations of energies and molecular properties. However, these methods are still hampered by the need for repeated global matrix diagonalizations in the SCF procedure, so their computational expense scales as O(N³), where N is the number of basis functions. If the QM part is extended to several hundred atoms, it becomes increasingly difficult to apply the standard semiempirical MO method in QM/MM calculations.

This expense can be greatly reduced with a linear-scaling approach43,44 based on the density matrix divide-and-conquer (D&C) method.45 In this approach, global Fock matrix diagonalization and cubic scaling are avoided by decomposing a large-scale electronic structure calculation into a series of relatively inexpensive calculations involving a set of small, overlapping subregions of a system. Each subsystem consists of a core surrounded by inner and outer buffer regions, and an accurate global description of the system is obtained by combining information from all subsystem density matrices. Once the system is large enough to pass the so-called crossover point, the D&C calculation becomes faster than the standard MO calculation. The accuracy of the D&C approximation can be controlled by the two buffer sizes. In our experience, inner and outer buffer layers of 4.0 ± 0.5 Å and 2.0 ± 0.5 Å, respectively, appear to be a good compromise between speed and accuracy.46
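The core idea of D&C can be shown on a toy problem. This sketch is not the Amber semiempirical implementation: a hypothetical orthogonal tight-binding Hamiltonian for a dimerized chain (no overlap matrix, no SCF) stands in for the Fock matrix, and each subsystem has a single buffer rather than separate inner and outer layers. Subsystems are diagonalized locally, their density matrices are merged with partition weights of 1 (core–core), 1/2 (core–buffer), and 0 (buffer–buffer), and a common Fermi level is found by bisection so that the total electron count is correct. All function names are illustrative.

```python
import numpy as np


def dimerized_chain(n, t_strong=1.0, t_weak=0.2):
    """Hypothetical toy Hamiltonian: chain with alternating strong/weak hopping."""
    h = np.zeros((n, n))
    for i in range(n - 1):
        t = t_strong if i % 2 == 0 else t_weak
        h[i, i + 1] = h[i + 1, i] = -t
    return h


def fermi(e, mu, kt):
    # 1/(1 + exp((e - mu)/kt)), written with tanh to avoid overflow
    return 0.5 * (1.0 - np.tanh((e - mu) / (2.0 * kt)))


def local_density(e, c, mu, kt):
    """Closed-shell density matrix 2 * sum_k f_k c_k c_k^T."""
    return 2.0 * (c * fermi(e, mu, kt)) @ c.T


def dc_density(h, core, buf, n_elec, kt=0.05):
    """Divide-and-conquer density matrix with a common Fermi level mu."""
    n = h.shape[0]
    subsystems = []
    for start in range(0, n, core):
        idx = np.arange(max(0, start - buf), min(n, start + core + buf))
        in_core = ((idx >= start) & (idx < start + core)).astype(float)
        # partition weights: 1 core-core, 1/2 core-buffer, 0 buffer-buffer
        weights = 0.5 * (in_core[:, None] + in_core[None, :])
        e, c = np.linalg.eigh(h[np.ix_(idx, idx)])  # small local diagonalization
        subsystems.append((idx, weights, e, c))

    def assemble(mu):
        p = np.zeros_like(h)
        for idx, weights, e, c in subsystems:
            p[np.ix_(idx, idx)] += weights * local_density(e, c, mu, kt)
        return p

    lo, hi = -10.0, 10.0
    for _ in range(100):  # bisection on the electron count
        mu = 0.5 * (lo + hi)
        if np.trace(assemble(mu)) < n_elec:
            lo = mu
        else:
            hi = mu
    return assemble(mu), mu
```

Enlarging `buf` drives the D&C density matrix toward the exact one, mirroring the role of the buffer sizes in controlling accuracy.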

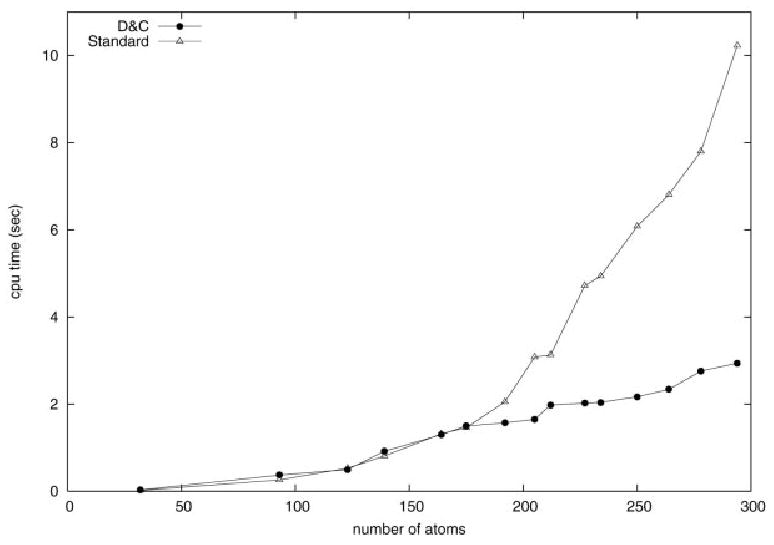

Figure 5 shows SCF cycle timings for standard and D&C calculations for different QM region sizes studied in the bovine α-chymotrypsin system complexed with 4-fluorobenzylamine. The crossover point occurs at about 170 QM atoms. After this point, the D&C calculation is significantly faster than the standard calculation.

Figure 5.

Timings for semiempirical calculations of chymotrypsin, as a function of the size of the QM region.

In some cases, partitioning the whole system into QM and MM parts involves cutting covalent bonds, which raises the question of how best to model the interface between the classical and quantum subsystems. Although many approaches have been proposed,47,48 the link atom method is still the most commonly used because of its simplicity: hydrogen atoms are generally added to the QM part to cap the covalent bonds cut by the QM/MM interface. Although the link atom approach is subject to the criticism that it partitions the system in an unphysical manner, results obtained with carefully chosen link atoms are not very different from those of other approaches.49 Moreover, because the linear-scaling D&C QM treatment is available, we can readily make the QM part so large that the link atom effect is negligible for the region of interest.
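A common way to place a capping hydrogen is along the cut QM–MM bond at a fixed bond length from the QM atom. The helper below is a hypothetical sketch of that geometry only. The 1.09 Å default is an assumed C–H distance, not necessarily Amber's setting, and the sketch does not address how MM charges near the boundary are handled.

```python
import numpy as np


def place_link_atom(r_qm, r_mm, d_link=1.09):
    """Hypothetical helper: capping H on the QM->MM bond, d_link (Angstrom) from the QM atom."""
    r_qm = np.asarray(r_qm, float)
    r_mm = np.asarray(r_mm, float)
    bond = r_mm - r_qm
    return r_qm + d_link * bond / np.linalg.norm(bond)
```

For a C–C bond of 1.53 Å cut between a QM carbon at the origin and an MM carbon on the x axis, the link hydrogen lands at (1.09, 0, 0).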

Amber versions under development will allow QM regions to be present when the PME or generalized Born options are chosen, and will be significantly faster, at least for small quantum regions. For protein–ligand complexes, it is natural to treat the ligand and associated residues in the binding site as the QM part, and the rest as the MM part,50 and this facility will be further integrated into the codes.

Treating Solvent Effects

Explicit Solvent Models

Amber provides support for the TIP3P,51 TIP4P and TIP4P-Ew,52-54 TIP5P,55 SPC/E,56 and POL357 models for water, as well as solvent models for methanol, chloroform, N-methylacetamide, and urea/water mixtures. General triclinic unit cells can be used, although some of the analysis and visualization tools are limited to the most common (rectangular and truncated octahedron) shapes; there is no support for symmetry elements (such as screw axes) that involve rotations. By default, electrostatic interactions are handled by a particle-mesh Ewald (PME) procedure, and long-range Lennard–Jones attractions are treated by a continuum model. This gives densities and cohesive energies of simple liquids that are in excellent agreement with more elaborate methods,54 at a computational cost that is often less than that of cutoff based simulations.
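The continuum treatment of long-range Lennard–Jones attraction can be illustrated with the standard homogeneous-fluid tail correction, which assumes a uniform density (g(r) = 1) beyond the cutoff rc and an attractive term −C₆/r⁶. Integrating gives E_tail = −(2π / (3V rc³)) Σᵢⱼ Nᵢ Nⱼ C₆,ᵢⱼ. We assume this is representative of the continuum model referred to above. The function name is hypothetical, and the r⁻¹² repulsion, whose tail is negligible, is ignored.

```python
import numpy as np


def dispersion_tail(counts, c6, volume, r_cut):
    """Homogeneous-fluid tail correction (kcal/mol) for -C6/r^6 beyond r_cut.

    counts: number of atoms of each LJ type; c6: matrix of C6_ij (kcal*A^6/mol);
    volume in A^3, r_cut in A. Assumes g(r) = 1 for r > r_cut.
    """
    n = np.asarray(counts, float)
    c6 = np.atleast_2d(np.asarray(c6, float))
    return -2.0 * np.pi * (n @ c6 @ n) / (3.0 * volume * r_cut**3)
```

For 1000 TIP3P waters at 0.0334 molecules/Å³ (O–O only, C₆ = 4εσ⁶ ≈ 595 kcal·Å⁶/mol from ε = 0.1521 kcal/mol and σ = 3.1506 Å), an 8-Å cutoff gives roughly −0.08 kcal/mol per molecule. The correction falls off as rc⁻³, which is why it is cheap but not negligible at typical cutoffs.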

The PME is, as the name suggests, a modified form of Ewald summation that is inspired by and closely related to the original Hockney–Eastwood PPPM method.58 Ewald summation is a method to efficiently calculate the infinite range Coulomb interaction under periodic boundary conditions (PBC), and PME is a modification to accelerate the Ewald reciprocal sum to near linear scaling, using the three dimensional fast Fourier transform (3DFFT).

Because the Coulomb interaction has infinite range, under PBC particle i within the unit cell interacts electrostatically with all other particles j within the cell, as well as with all the periodic images of j. It also interacts with all of its own periodic images. The electrostatic energy of the unit cell, and related quantities such as forces on individual particles, are found by summing the resulting infinite series. This series converges (slowly) to a finite limit only if the unit cell is electrically neutral, and furthermore, the limit is found to depend on the order of summation (conditional rather than absolute convergence). Ewald59 applied a Jacobi theta transform to convert this slowly, conditionally convergent series to a pair of rapidly, absolutely convergent series, called the Ewald direct and reciprocal sums. The conditional convergence of the original series is expressed in a third term60 as a quadratic function of the dipole moment of the unit cell, whose form depends on the order of summation. It is standard to assume that the whole assembly of unit cells is immersed in an external dielectric. Most commonly this dielectric is assumed to be fully conducting (“tin-foil” boundary conditions), in which case the third term vanishes and the order of summation becomes irrelevant. A more elementary derivation of the Ewald sum, using compensating Gaussian charge densities together with Poisson’s equation under PBC in place of the Jacobi theta transform, can be found in the appendix to Kittel.61

The Ewald direct sum resembles the standard Coulomb interaction, but with the term qiqj/rij, representing the Coulomb energy of interaction between particles i and j, replaced by qiqj erfc(βrij)/rij, where β is the so-called Ewald convergence parameter. This erfc term converges rapidly to zero as a function of the interparticle distance rij, allowing the use of a finite cutoff. In the sander and pmemd programs the Ewald direct sum is calculated together with the van der Waals interactions. The default direct sum cutoff is 8 Å, independent of system size. Accordingly, β is chosen to be ≈0.35 Å⁻¹, leading to a relative RMS force error due to truncation below 5 × 10⁻⁴.
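The link between cutoff and β can be made concrete. Amber's `&ewald` namelist has a direct-sum tolerance, `dsum_tol` (default 10⁻⁵). As a sketch, we assume that β is fixed by requiring erfc(β rc)/rc = dsum_tol and solve this by bisection. The function name `ewald_beta` is our own.

```python
import math


def ewald_beta(r_cut, dsum_tol=1e-5):
    """Find beta (1/A) with erfc(beta*r_cut)/r_cut = dsum_tol, by bisection.

    erfc decreases monotonically, so a too-large value means beta is too small.
    """
    lo, hi = 0.0, 10.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if math.erfc(mid * r_cut) / r_cut > dsum_tol:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

With rc = 8 Å this gives β ≈ 0.349 Å⁻¹, consistent with the ≈0.35 Å⁻¹ quoted above. Lengthening the cutoff lets β shrink, which shifts work from the reciprocal sum to the direct sum.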

The Ewald reciprocal sum is the sum, over all reciprocal lattice vectors m (m ≠ 0), of a Gaussian-like weight factor exp(−π²m²/β²)/(2πVm²), where V is the unit cell volume, multiplied by |S(m)|², where the so-called structure factor S(m) is given by the sum of qj exp(2πim · rj) over all particles j in the unit cell (rj is the Cartesian coordinate vector of particle j). A cutoff can also be applied to the reciprocal sum. With the above choice of β, the number of reciprocal vectors needed for the relative RMS force error due to truncation to fall below 5 × 10⁻⁴ is typically several times the number of particles in the unit cell. Because the computational cost of calculating S(m) is of order N for each such vector m, the cost of the reciprocal sum is thus of order N². Unfortunately, this cost becomes prohibitive for systems containing tens of thousands of particles, as is typical today.
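As a check on how the direct, reciprocal, and self terms fit together, here is a minimal sketch (not Amber code) of plain Ewald summation for point charges in a cubic cell, in Gaussian units with tin-foil boundary conditions. The self term, −(β/√π) Σ qᵢ², removes each charge's interaction with its own screening Gaussian. The function name and the image and k-vector limits are illustrative choices. The test uses the rock-salt lattice, whose Madelung constant (≈1.7476) is a standard reference value, and checks that the total does not depend on β.

```python
import itertools
import math

import numpy as np


def ewald_energy(pos, q, box, beta, n_images=1, kmax=8):
    """Ewald energy of point charges in a cubic cell (Gaussian units, tin-foil)."""
    pos = np.asarray(pos, float)
    q = np.asarray(q, float)
    volume = box**3
    # Direct sum: erfc-screened Coulomb over nearby periodic images.
    e_dir = 0.0
    for shift in itertools.product(range(-n_images, n_images + 1), repeat=3):
        n = box * np.array(shift, float)
        for i in range(len(q)):
            for j in range(len(q)):
                if i == j and not any(shift):
                    continue  # no self-interaction in the home cell
                r = np.linalg.norm(pos[j] - pos[i] + n)
                e_dir += 0.5 * q[i] * q[j] * math.erfc(beta * r) / r
    # Reciprocal sum over m = k/box, k a nonzero integer triple.
    e_rec = 0.0
    for k in itertools.product(range(-kmax, kmax + 1), repeat=3):
        if not any(k):
            continue
        m = np.array(k, float) / box
        m2 = m @ m
        s = np.sum(q * np.exp(2j * np.pi * (pos @ m)))  # structure factor S(m)
        e_rec += math.exp(-math.pi**2 * m2 / beta**2) / m2 * abs(s) ** 2
    e_rec /= 2.0 * math.pi * volume
    # Self term: remove each charge's interaction with its own Gaussian.
    e_self = -beta / math.sqrt(math.pi) * np.sum(q**2)
    return e_dir + e_rec + e_self
```

The reciprocal loop visits every k-vector and sums over every charge, which is exactly the O(N²) cost that PME removes.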

What the PME algorithm does is to accurately approximate the structure factors S(m) using the 3DFFT. The essential idea is to first note that exp(2πim · rj) can be factored into three one-dimensional trigonometric terms (even in triclinic unit cells). One can then simply apply table lookup to these terms, approximating the trigonometric functions (evaluated at the crystallographic fractional coordinates of particle j) in terms of their values at nearby grid points. By this means the structure factors are approximated as sums over regular grid points, that is, as a discrete Fourier transform that can be rapidly calculated using the 3DFFT, delivering all the needed structure factors at O(N log N) computational cost.
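The factorization works in any cell because m · r reduces to a sum over the three fractional coordinates. If the rows of the box matrix are the cell vectors aα, the reciprocal vectors are the rows of (box⁻¹)ᵀ, so that m = Σ kα a*α and m · r = Σ kα sα. The short check below (function names are illustrative) compares the direct and factored structure factors for a random triclinic cell.

```python
import numpy as np


def fractional(pos, box):
    """Rows of box are a1, a2, a3; returns fractional coordinates s with r = s @ box."""
    return np.asarray(pos, float) @ np.linalg.inv(box)


def structure_factor_direct(q, pos, box, k):
    recip = np.linalg.inv(box).T  # rows are reciprocal vectors, a_i . a*_j = delta_ij
    m = np.asarray(k, float) @ recip
    return np.sum(q * np.exp(2j * np.pi * (np.asarray(pos, float) @ m)))


def structure_factor_factored(q, pos, box, k):
    s = fractional(pos, box)
    # exp(2*pi*i m.r) = prod over alpha of exp(2*pi*i k_alpha s_alpha)
    factors = np.exp(2j * np.pi * s * np.asarray(k, float))  # shape (n_atoms, 3)
    return np.sum(q * np.prod(factors, axis=1))
```

Each one-dimensional factor depends on a single fractional coordinate, which is what allows PME to replace it by interpolation from a regular grid.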

The original version62 of the PME utilized Lagrange interpolation to do the table lookup of trigonometric functions. The Ewald reciprocal sum forces were approximated separately from the energy. The smooth PME (SPME)63 replaced Lagrange polynomials with cardinal B-splines, which are better behaved at higher order and can be differentiated via recursion, allowing forces to be derived analytically. Thus, in SPME, the forces are obtained from the gradient of the energy, unlike in the original PME. It is also possible to use B-splines to separately approximate the forces, and used in this mode, PME becomes essentially identical to PPPM as implemented by Pollock and Glosli64 (originally we used B-spline interpolation, but least-squares B-spline approximation, advocated by Pollock and Glosli, yields superior accuracy, so we adopted it beginning with version 6 of Amber65). Separate force approximation has the advantage that the resulting forces satisfy Newton’s third law, thus conserving momentum. The accuracy is also better than that of SPME. However, the violation of momentum conservation in SPME is of the order of the force error, which so far appears to be adequate for typical biomolecular simulations (there may yet be situations in which more precise momentum conservation is important). Furthermore, SPME requires half as many 3DFFTs, and thus, when used with the standard defaults in sander or pmemd, it is more efficient than the method of separate force approximation. Finally, the SPME approach of differentiating the approximate reciprocal sum electrostatic potential using the properties of B-splines is critical for efficient approximation of Ewald sums involving atomic dipoles and multipoles (see below). For these reasons we have pursued the SPME approach in recent sander versions and in pmemd.
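The B-spline properties that SPME relies on are easy to demonstrate. This sketch uses the standard recursion M₂(u) = 1 − |u − 1| on [0, 2], Mₙ(u) = [u Mₙ₋₁(u) + (n − u) Mₙ₋₁(u − 1)]/(n − 1), and the derivative rule dMₙ/du = Mₙ₋₁(u) − Mₙ₋₁(u − 1). The helper `spread_weights` returns the n grid points and weights Mₙ(u − k) for one particle at scaled fractional coordinate u. Wrapping the indices modulo the grid size is left to the caller, and the names are illustrative.

```python
import math


def bspline(n, u):
    """Cardinal B-spline M_n(u) of order n (support 0 < u < n), by recursion."""
    if n == 2:
        return max(0.0, 1.0 - abs(u - 1.0))
    return (u * bspline(n - 1, u) + (n - u) * bspline(n - 1, u - 1.0)) / (n - 1)


def bspline_derivative(n, u):
    """Analytic derivative via dM_n/du = M_{n-1}(u) - M_{n-1}(u - 1)."""
    return bspline(n - 1, u) - bspline(n - 1, u - 1.0)


def spread_weights(u, n):
    """Grid indices k and weights M_n(u - k) for a particle at scaled coordinate u.

    The caller should wrap the indices modulo the grid size K.
    """
    k0 = math.floor(u)
    ks = list(range(k0 - n + 1, k0 + 1))
    return ks, [bspline(n, u - k) for k in ks]
```

The weights sum to one (partition of unity), so charge is conserved on the grid, and the analytic derivative is what lets SPME obtain forces from the gradient of the energy.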

Over the last 25–30 years objections have sometimes been raised to the use of Ewald summation in liquid state simulations. In the early 1980s, it was demonstrated that simulations of liquids such as water, using Ewald summation, led to calculated properties in quantitative agreement with those of similar simulations using reaction field boundary conditions.60 This alleviated many of the early concerns. More recently, Hummer et al.66 demonstrated that ionic charging free energies could be calculated accurately using Ewald summation, and that the finite size effects, due to limited unit cells, could be accounted for. The picture emerged that simulations of biomolecules in water, using Ewald summation in sufficiently large unit cells, accurately represented the idealized state of the isolated biomolecule in solution. Poisson–Boltzmann calculations under PBC vs. nonperiodic boundary conditions have been used67,68 to estimate the artifacts in conformational free energy due to finite unit cell size (that is, artificial preference for certain conformations due to the imposition of strict PBC within a limited unit cell). From these it is clear that small but nonnegligible finite size artifacts exist, which would be particularly important in protein folding studies and systems containing a low dielectric region (such as lipid bilayers). The cure within Ewald summation (or any modified Ewald method such as PME) is to enlarge the unit cell, which, of course, incurs more computational cost. A number of alternative treatments of long-range electrostatics have been proposed. These typically involve a modified form of cutoff of standard Coulombic interactions, possibly including an approximate treatment of the field due to charges outside the cutoff, as in reaction-field-type methods. None of these alternative approaches has been demonstrated to be reliably superior to Ewald summation. 
Because Ewald summation in a large unit cell is known to be correct, superiority would mean that the alternative method gave the same results as Ewald summation in a large unit cell, using, however, a smaller unit cell. Notably, these alternative treatments all lead to spherically isotropic interaction potentials [An exception, for slab boundary conditions, is the recent method of Wu and Brooks (X.W. Wu, personal communication)]. This spherical isotropy can be expected to be problematic in membrane simulations and other cases involving nonisotropic dielectric media.69,70

Force fields designed for macromolecules have until recently modeled the electrostatic interaction between molecules using fixed atomic point charges. Many consider this approximation to be the principal limitation of current macromolecular force fields. Recent AMBER force fields have begun to advance beyond this electrostatic model by introducing off-center charges and inducible atomic dipoles71,72 to account for the nonisotropy of the electron density near atoms and for the inductive response of that density to the local electric field, which changes dynamically with the configuration of the system.

The introduction of atomic dipoles necessitates a generalization of the standard Ewald sum, and hence, of the PME algorithm. The generalization of Ewald summation was provided by Smith,73 who gave explicit formulas for Ewald summation of atomic multipoles up to quadrupole level. Smith’s methodology was utilized by Toukmaji et al.74 for performing the Ewald sum involving fixed atomic charges and inducible dipoles, and is implemented in this form in sander. More recently, it was used by Ren and Ponder75 for the AMOEBA force field that includes fixed atomic multipoles up to quadrupole level as well as inducible atomic dipoles using a Thole damping model.76 We have now implemented Ewald summation as well as SPME for atomic Cartesian multipoles up to hexadecapole level,77 using the McMurchie–Davidson recursion78 for the Ewald direct sum in place of Smith’s formalism.

Generalizing the SPME to the case of Cartesian multipoles is straightforward. One notes that the Ewald reciprocal sum structure factors now involve a sum of products of Cartesian multipole moments of particle j multiplied by the appropriate derivative, with respect to rj, of the function exp(2πim · rj). Again, these moments and derivatives can all be reexpressed in terms of the crystallographic fractional coordinates of particle j. The function exp(2πim · rj) as well as its successive derivatives with respect to fractional coordinates are approximated in terms of B-splines and their successive derivatives. In practice these derivatives, multiplied by the corresponding transformed moments, are summed onto the PME grid. This grid is then passed to the 3DFFT, modified in reciprocal space, and passed to the inverse 3DFFT precisely as in the standard charge-based SPME algorithm. The reciprocal electrostatic potential is then obtained at an atom i precisely as in the charges-only case, and derivatives of this with respect to fractional coordinates of i are multiplied against transformed Cartesian atomic multipole moments of i for all atoms i to get the energy and forces. Due to the above-mentioned factorizability of the B-spline approximation, the SPME for atomic multipoles is very efficient. Indeed, for the same grid density and spline order, calculating reciprocal sum interactions up to hexadecapole–hexadecapole order is only twice as expensive as calculating charge–charge interactions (note, however, that the hexadecapole–hexadecapole case generally requires a greater grid density and higher spline order than the charge–charge case). The SPME for atomic multipoles up to quadrupole is currently being implemented into sander and pmemd along with the AMOEBA force field of Ren and Ponder.75,79

Calculating the inductive response of the electron density, modeled by a set of inducible atomic dipoles, usually requires solving a set of linear equations relating the electric field to the induced dipoles. In the sander code we have implemented several variants of an iterative scheme for solving these, as well as a Car–Parrinello-like extended Lagrangian scheme, in which the induced dipoles are given a fictitious mass and evolved dynamically along with the atoms. In practice, this latter method is quite efficient, although it requires a 1-fs time step and gentle temperature control for stability. We have found that the AMBER ff02 force field, with atomic point charges and induced dipoles, leads to stable trajectories using the extended Lagrangian scheme, with some improvement over previous force fields, at least for DNA simulations.80 However, stability problems can occur with force fields involving extra points in addition to inducible dipoles, due to occasional close approaches of the unshielded extra-point charges.
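The linear equations in question are μᵢ = αᵢ(Eᵢ⁰ + Σⱼ Tᵢⱼ μⱼ), where Eᵢ⁰ is the field from permanent charges and Tᵢⱼ = (3r̂r̂ᵀ − I)/r³ is the dipole field tensor. The sketch below solves them in two ways: by simple fixed-point iteration (one variant of an iterative scheme, not necessarily sander's) and by a dense linear solve. It assumes undamped interactions (no Thole damping), no periodic boundaries, and Gaussian-style units (α in Å³, fields in e/Å², dipoles in e·Å). All function names are illustrative, and the extended Lagrangian alternative is not shown.

```python
import numpy as np


def dipole_tensor(r_vec):
    """Undamped dipole field tensor T = (3 r^ r^T - I) / r^3."""
    r = np.linalg.norm(r_vec)
    rhat = r_vec / r
    return (3.0 * np.outer(rhat, rhat) - np.eye(3)) / r**3


def build_t(pos):
    n = len(pos)
    t = np.zeros((n, n, 3, 3))
    for i in range(n):
        for j in range(n):
            if i != j:
                t[i, j] = dipole_tensor(pos[i] - pos[j])
    return t


def induced_dipoles_iterative(pos, alpha, e_perm, tol=1e-10, max_iter=500):
    """Fixed-point iteration mu <- alpha * (E_perm + T mu) until converged."""
    t = build_t(np.asarray(pos, float))
    alpha = np.asarray(alpha, float)[:, None]
    e_perm = np.asarray(e_perm, float)
    mu = alpha * e_perm
    for _ in range(max_iter):
        mu_new = alpha * (e_perm + np.einsum("ijab,jb->ia", t, mu))
        if np.max(np.abs(mu_new - mu)) < tol:
            return mu_new
        mu = mu_new
    raise RuntimeError("induced dipoles did not converge")


def induced_dipoles_direct(pos, alpha, e_perm):
    """Same equations as one dense solve: (alpha^-1 - T) mu = E_perm."""
    t = build_t(np.asarray(pos, float))
    n = len(alpha)
    a = -t.transpose(0, 2, 1, 3).reshape(3 * n, 3 * n)
    a += np.diag(np.repeat(1.0 / np.asarray(alpha, float), 3))
    return np.linalg.solve(a, np.asarray(e_perm, float).reshape(-1)).reshape(n, 3)
```

The dense solve scales as O(N³) and is only a reference. Iterative schemes need just matrix-vector products, which is why they, or the extended Lagrangian approach, are used in practice.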

Implicit Solvent Models

An accurate description of the aqueous environment is essential for realistic biomolecular simulations, but may become very expensive computationally. For example, an adequate representation of the solvation of a medium-size protein typically requires thousands of discrete water molecules to be placed around it. An alternative replaces the discrete water molecules by “virtual water”—an infinite continuum medium with some of the dielectric and “hydrophobic” properties of water.

These continuum implicit solvent models have several advantages over the explicit water representation, especially in molecular dynamics simulations:

Implicit solvent models are often less expensive, and generally scale better on parallel machines.

There is no need for the lengthy equilibration of water that is typically necessary in explicit water simulations; implicit solvent models correspond to instantaneous solvent dielectric response.

Continuum simulations generally give improved sampling, due to the absence of viscosity associated with the explicit water environment; hence, the macromolecule can more quickly explore the available conformational space.

There are no artifacts of periodic boundary conditions; the continuum model corresponds to solvation in an infinite volume of solvent.

New (and simpler) ways to estimate free energies become feasible; because solvent degrees of freedom are taken into account implicitly, estimating free energies of solvated structures is much more straightforward than with explicit water models.13,14

Implicit models provide a higher degree of algorithm flexibility. For instance, a Monte Carlo move involving a solvent exposed side chain would require nontrivial rearrangement of the nearby water molecules if they were treated explicitly. With an implicit solvent model this complication does not arise.

Most ideas about “landscape characterization” (that is, analysis of local energy minima and the pathways between them) make good physical sense only with an implicit solvent model. Trying to find a minimum energy structure of a system that includes a large number of explicit solvent molecules is both difficult and generally pointless: the enormous number of potential minima that differ only in the arrangement of water molecules might easily overwhelm an attempt to understand the important nature of the protein solute.

Of course, all of these attractive features of the implicit solvent methodology come at a price of making a number of approximations whose effects are often hard, if not impossible, to estimate. Some familiar descriptors of molecular interaction, such as solute–solvent hydrogen bonds, are no longer explicitly present in the model; instead, they come in implicitly, in the mean-field way via a linear dielectric response, and contribute to the overall solvation energy. However, despite the fact that the methodology represents an approximation at a fundamental level, it has in many cases been successful in calculating various macromolecular properties.81–83

In many molecular modeling applications, and especially in molecular dynamics (MD), the key quantity that needs to be computed is the total energy of the molecule in the presence of solvent. This energy is a function of the molecular configuration; its gradients with respect to atomic positions determine the forces on the atoms. The total energy of a solvated molecule can be written as Etot = Evac + ΔGsolv, where Evac represents the molecule’s energy in vacuum (gas phase), and ΔGsolv is the free energy of transferring the molecule from vacuum into solvent, that is, the solvation free energy. To estimate the total solvation free energy of a molecule, one typically assumes that it can be decomposed into electrostatic and nonelectrostatic parts:

$$\Delta G_{\mathrm{solv}} = \Delta G_{\mathrm{el}} + \Delta G_{\mathrm{nonel}} \tag{1}$$

where ΔGnonel is the free energy of solvating a molecule from which all charges have been removed (i.e., the partial charges of every atom are set to zero), and ΔGel is the free energy of first removing all charges in vacuum and then adding them back in the presence of a continuum solvent environment. This decomposition, which is yet another approximation, is the basis of the widely used PB/SA scheme.14 Generally speaking, ΔGnonel comes from the combined effect of two types of interaction: the favorable van der Waals attraction between the solute and solvent molecules, and the unfavorable cost of disturbing the structure of the solvent (water) around the solute. Within PB/SA, ΔGnonel is taken to be proportional to the total solvent-accessible surface area (SA) of the molecule, with a proportionality constant derived from experimental solvation energies of small nonpolar molecules.84,85 More complex approaches have been proposed,86,87 but Amber currently follows this simple way of computing ΔGnonel, and uses the fast LCPO algorithm to compute an analytical approximation to the solvent-accessible surface area of the molecule.88 This part is relatively straightforward and is not the bottleneck of a typical MD simulation. The most time-consuming part is the computation of the electrostatic contribution to the total solvation free energy, mainly because the forces involved are long ranged, and because screening by the solvent is a complex phenomenon.
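The surface-area term can be sketched with a numerical solvent-accessible surface area. This is not LCPO: we substitute the simpler Shrake–Rupley point-sampling method, in which each atom's probe-expanded sphere is covered with points and the exposed fraction is counted. The 1.4-Å probe radius, the surface tension γ = 0.005 kcal/(mol·Å²) (which we believe matches Amber's `surften` default, but treat as an assumption), and the function names are illustrative.

```python
import numpy as np


def sphere_points(n):
    """Approximately uniform points on the unit sphere (golden-spiral construction)."""
    i = np.arange(n) + 0.5
    z = 1.0 - 2.0 * i / n
    r = np.sqrt(1.0 - z * z)
    phi = np.pi * (1.0 + 5.0**0.5) * i
    return np.column_stack((r * np.cos(phi), r * np.sin(phi), z))


def sasa(coords, radii, probe=1.4, n_points=2000):
    """Shrake-Rupley solvent-accessible surface area in A^2."""
    coords = np.asarray(coords, float)
    ext = np.asarray(radii, float) + probe  # probe-expanded radii
    unit = sphere_points(n_points)
    total = 0.0
    for i in range(len(coords)):
        pts = coords[i] + ext[i] * unit
        mask = np.arange(len(coords)) != i
        d = np.linalg.norm(pts[:, None, :] - coords[mask][None, :, :], axis=2)
        buried = (d < ext[mask][None, :]).any(axis=1)  # point inside a neighbor's sphere
        total += 4.0 * np.pi * ext[i] ** 2 * (1.0 - buried.mean())
    return total


def nonpolar_energy(area, gamma=0.005):
    """Delta G_nonel = gamma * SA, gamma in kcal/(mol*A^2) (assumed value)."""
    return gamma * area
```

Point sampling is easy to verify but slow and not smoothly differentiable. An analytical approximation such as LCPO is preferable when forces are needed in MD.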

Within the framework of the continuum model, a numerically exact way to compute the electrostatic potential φ(r) produced by a molecular charge distribution ρm(r) is based on the Poisson–Boltzmann (PB) approach, in which the following equation (or its equivalent) must be solved; for simplicity we give its linearized form:

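$$\nabla\cdot\left[\varepsilon(\mathbf{r})\,\nabla\phi(\mathbf{r})\right] = -4\pi\rho_m(\mathbf{r}) + \kappa^2\varepsilon(\mathbf{r})\,\phi(\mathbf{r}) \tag{2}$$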

Here, ε(r) represents the position-dependent dielectric constant, which equals that of bulk water far away from the molecule and is expected to decrease fairly rapidly across the solute/solvent boundary. The electrostatic screening effects of (monovalent) salt enter via the second term on the right-hand side of eq. (2), where the Debye–Hückel screening parameter κ ≈ 0.1 Å⁻¹ under physiological conditions. Once the potential φ(r) is computed, the electrostatic part of the solvation free energy is

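$$\Delta G_{\mathrm{el}} = \frac{1}{2}\sum_i q_i\left[\phi(\mathbf{r}_i) - \phi(\mathbf{r}_i)\big|_{\mathrm{vac}}\right] \tag{3}$$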

where qi are the partial atomic charges at positions ri that make up the molecular charge density ρm(r) = ∑i qi δ(r − ri), and φ(ri)|vac is the electrostatic potential computed for the same charge distribution in the absence of the dielectric boundary, for example, in vacuum. Full accounts of this theory are available elsewhere.85,89
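To connect the quoted κ ≈ 0.1 Å⁻¹ to a salt concentration, the Debye–Hückel screening parameter for a 1:1 salt of molar ionic strength I is κ² = 2NA e² I / (ε₀ εr kB T), with I converted to mol/m³. The sketch below uses CODATA SI constants. The function name, εr = 78.5, and T = 298.15 K are illustrative choices.

```python
import math

N_A = 6.02214076e23         # Avogadro constant, 1/mol
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m
K_B = 1.380649e-23          # Boltzmann constant, J/K


def debye_kappa(ionic_strength_molar, eps_r=78.5, temperature=298.15):
    """Debye-Hueckel screening parameter kappa in 1/Angstrom for a 1:1 salt."""
    ionic = ionic_strength_molar * 1000.0  # mol/L -> mol/m^3
    kappa2 = 2.0 * N_A * E_CHARGE**2 * ionic / (EPS0 * eps_r * K_B * temperature)  # 1/m^2
    return math.sqrt(kappa2) * 1e-10  # 1/m -> 1/Angstrom
```

At 150 mM this gives κ ≈ 0.13 Å⁻¹, a Debye length of about 8 Å, consistent with the value quoted above. κ grows as the square root of the ionic strength.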

Numerical Approaches to the Poisson–Boltzmann Equation

The computational expense and difficulty of applying a Poisson–Boltzmann (PB) model to biomolecules have sparked much interest in developing faster and better numerical methods. Many different numerical methods have been proposed. The finite-difference method (FDPB) is one of the most popular and mature methods for biomolecular applications.90,91 Recently, its numerical efficiency has been dramatically enhanced, especially for dynamics simulations of biomolecules: the overhead of the FDPB numerical solver has been reduced from more than that of explicit water simulations to roughly that of vacuum simulations. The Amber package implements this improved FDPB method.92

A typical FDPB method involves the following steps: mapping atomic charges to the finite difference grid points; assigning nonperiodic boundary conditions (electrostatic potentials on the boundary surfaces of the finite difference grid); and applying a molecular dielectric model to define the boundary between high-dielectric (water) and low-dielectric (molecular interior) regions, sometimes with the help of a dielectric smoothing technique to alleviate the problem in discretization of the dielectric boundary.93 These steps allow the partial differential equation to be converted into a sparse linear system Ax = b, with the electrostatic potential on grid points x as unknowns, the charge distribution on the grid points as the source b, and the dielectric constant on the grid edges and salt-related terms wrapped into the coefficient matrix A, which is a seven-banded symmetric matrix in the usual formulation. The linear system obtained is then solved with an iterative procedure.
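These steps can be sketched in a compact, matrix-free form. Multiplying the discretized eq. (2) at node i by −h gives (1/h) Σnb εedge(φi − φnb) + h κ² εi φi = 4π qi/h², a symmetric positive-definite seven-point system. Known boundary values are moved to the right-hand side. The sketch makes several assumptions: Gaussian units (φ = q/(εr) in a uniform medium), a uniform grid of spacing h, a harmonic-mean dielectric on grid edges in place of Amber's dielectric model and smoothing, Dirichlet values supplied by the caller, and plain unpreconditioned conjugate gradients rather than the preconditioned solver Amber uses. The function names are hypothetical.

```python
import numpy as np

INNER = (slice(1, -1),) * 3  # interior nodes of a grid with a one-node boundary frame


def fd_operator(u, eps, kappa2, h):
    """Seven-point operator (1/h)*sum eps_edge (u_i - u_nb) + h*kappa2*eps_i*u_i on interior nodes."""
    c = u[INNER]
    e_c = eps[INNER]
    out = h * kappa2 * e_c * c
    for axis in range(3):
        for shift in (1, -1):
            u_nb = np.roll(u, shift, axis)[INNER]
            e_nb = np.roll(eps, shift, axis)[INNER]
            e_edge = 2.0 * e_c * e_nb / (e_c + e_nb)  # harmonic mean on the grid edge
            out += e_edge * (c - u_nb) / h
    return out


def solve_fdpb(q_grid, eps, kappa2, h, phi_bc, tol=1e-8, max_iter=5000):
    """Conjugate-gradient solve for interior potentials.

    q_grid: charges (e) on interior nodes, shape (n, n, n).
    eps: dielectric constant on the full (n+2)^3 grid.
    phi_bc: full-grid array with Dirichlet values on the frame, zeros inside.
    """
    def apply_a(x):
        u = np.zeros_like(eps)
        u[INNER] = x
        return fd_operator(u, eps, kappa2, h)

    b = 4.0 * np.pi * q_grid / h**2 - fd_operator(phi_bc, eps, kappa2, h)
    x = np.zeros_like(q_grid, dtype=float)
    r = b - apply_a(x)
    p = r.copy()
    rs = np.vdot(r, r)
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        ap = apply_a(p)
        step = rs / np.vdot(p, ap)
        x += step * p
        r -= step * ap
        rs_new = np.vdot(r, r)
        if np.sqrt(rs_new) < tol * b_norm:
            break
        p = r + (rs_new / rs) * p
        rs = rs_new
    phi = phi_bc.copy()
    phi[INNER] = x
    return phi
```

For a unit charge in a uniform medium with Coulomb boundary values, the grid solution a few spacings from the charge is close to q/r. The remaining deviation is the usual finite-difference error near a point charge.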

In the Amber implementation, a standard trilinear mapping of charges is used. The boundary potentials may use a sum of contributions from individual atom charges,94 from individual residue dipolar charges,95 from molecular dipolar charges,94 or from individual grid charges. Here a grid charge is the net charge at a grid point after the trilinear charge mapping. A previous study shows that the last option (using grid charges) gives the best balance of accuracy and efficiency for electrostatic force calculations when electrostatic focusing is used. The dielectric model requires a definition of molecular volume; the choices offered in the Amber implementation are van der Waals volume and solvent excluded (molecular) volume. (Note that the van der Waals option is based on an improved definition as discussed in detail elsewhere.95 Briefly, the radii of buried atoms have been increased by solvent probe before they are used for van der Waals volume calculation.) The linear system solver adopted in Amber is the Eisenstat’s implementation of preconditioned conjugate gradient algorithm. Care has been taken to utilize electrostatic update in potential calculations for applications to molecular dynamics and minimization. Once the linear system is solved, the solution can be used to compute the electrostatic energies and forces.95
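Trilinear mapping distributes each charge over the eight corners of its enclosing grid cell with weights equal to products of one-dimensional linear-interpolation factors. The minimal sketch below (function name and arguments are illustrative, and there is no bounds checking, so charges must lie inside the grid) shows that this mapping conserves both the total charge and its first moment, so the grid charge distribution has the same dipole as the atomic charges.

```python
import numpy as np


def trilinear_spread(pos, q, h, shape, origin=(0.0, 0.0, 0.0)):
    """Spread point charges onto the 8 surrounding grid nodes with trilinear weights."""
    grid = np.zeros(shape)
    origin = np.asarray(origin, float)
    for r, qi in zip(np.asarray(pos, float), q):
        g = (r - origin) / h             # position in grid units
        i0 = np.floor(g).astype(int)     # lower corner of the enclosing cell
        f = g - i0                       # fractional offset inside the cell
        for corner in np.ndindex(2, 2, 2):
            w = np.prod([f[a] if corner[a] else 1.0 - f[a] for a in range(3)])
            grid[tuple(i0 + np.array(corner))] += qi * w
    return grid
```

Grid charges produced this way are also what the grid-charge boundary option sums over.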

The Amber PB implementation offers two methods to compute electrostatic energy from a FDPB calculation. In the first method, total electrostatic energy is computed as 1/2 ∑iqiφi, where qi is the charge of atom i and φi is the potential at atom i as interpolated from the finite difference grid. This energy includes not only the reaction field energy, but also the finite-difference self energy and the finite-difference Coulombic energy. To compute a reaction field energy in the first method, two runs are usually required: one with solvent region dielectric constant set as its desired value, and one with solvent region dielectric constant set to that in the solute region. The second run is used to compute the finite-difference self-energy and the finite-difference Coulombic energy so that the difference between the total electrostatic energies of the two runs yields the reaction field energy. However, if there is no need to compute reaction field energy directly, for example, as in a molecular dynamics simulation, the finite-difference total electrostatic energy can be corrected to obtain the analytical total electrostatic energy.95 In doing so, the overhead in second FDPB run can be avoided. This approach is the same as that implemented in UHBD. In the second method, FDPB is only used to calculate reaction field energy, which can be expressed as half of the Coulombic energy between solute charges and induced surface charges on the solute/solvent boundary. The induced surface charges can be computed using Gauss’s law with the knowledge of FDPB electrostatic potential. This method is the same as that implemented in Delphi.

The Generalized Born Model

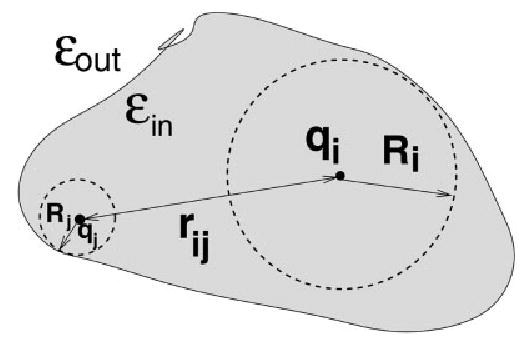

An alternative approach gives a reasonable, computationally efficient estimate of the electrostatic contribution to the solvation free energy for use in molecular dynamics simulations. The analytic generalized Born (GB) method is an approximate way to calculate ΔGel. The methodology has become popular, especially in MD applications, because of its relative simplicity and computational efficiency compared to the more standard numerical solution of the Poisson–Boltzmann equation.82,96 Within the GB models currently available in Amber, each atom in a molecule is represented as a sphere of radius ρi with a charge qi at its center; the interior of the atom is assumed to be filled uniformly with material of dielectric constant 1. The molecule is surrounded by a solvent of high dielectric constant εw (78.5 for water at 300 K). The GB model approximates ΔGel by an analytical formula,82,97

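$$\Delta G_{el} \approx -\frac{1}{2}\sum_{ij}\frac{q_i q_j}{f^{GB}(r_{ij}, R_i, R_j)}\left(1 - \frac{\exp[-\kappa f^{GB}]}{\varepsilon_w}\right) \qquad (4)$$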

where rij is the distance between atoms i and j, Ri is the so-called effective Born radius of atom i, and fGB is a certain smooth function of its arguments (Fig. 6).

Figure 6.

The effective Born radius of an atom reflects the degree of its burial inside the low dielectric region defined by the solvent boundary.

A common choice97 of fGB is

$$f^{GB} = \left[r_{ij}^2 + R_i R_j \exp\left(-\frac{r_{ij}^2}{4 R_i R_j}\right)\right]^{1/2} \qquad (5)$$

although other expressions have been tried.98,99 The effective Born radius of an atom reflects the degree of its burial inside the molecule: for an isolated ion, Ri is equal to its VDW radius ρi, and eq. (4) takes on a particularly simple form:

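$$\Delta G_{el} = -\frac{1}{2}\left(1 - \frac{1}{\varepsilon_w}\right)\frac{q^2}{\rho_i} \approx -164\,\frac{q^2}{\rho_i}\ \text{kcal/mol} \quad (q \text{ in } e,\ \rho_i \text{ in Å}) \qquad (6)$$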

where we assumed εw = 78.5 and κ = 0 (pure water). The reader will recognize in eq. (6) Born's famous expression for the solvation energy of a single ion. For simple monovalent ions, substituting q = 1 and ρ ~ 1.5 Å yields ΔGel ~ −100 kcal/mol, in reasonable agreement with experiment. This type of calculation was probably the first success of implicit solvent models based on continuum electrostatics. The function fGB in eq. (5) is designed to interpolate, in a clever manner, between the limit rij → 0, when the atomic spheres merge into one and eq. (6) holds, and the opposite extreme rij → ∞, when the ions can be treated as point charges obeying Coulomb's law. In fact,100 without the exponential term in eq. (5), eq. (4) corresponds to the exact (in the limit εw → ∞) solution of the Poisson equation for an arbitrary charge distribution inside a sphere. However, for realistic molecules, the exponential factor in eq. (5) appears to be necessary. This is probably because, compared to a sphere, the electric field lines between a pair of distant charges in a real molecule pass more through the high dielectric region, effectively reducing the pairwise interaction. The traditional form of fGB in eq. (5) takes this into account, at least to some extent, by allowing a steeper decay of the interaction with charge–charge distance. For deeply buried atoms, the effective radii are large, Ri ≫ ρi, and for such atoms one can use a rough estimate Ri ~ L, where L is the distance from the atom to the molecular surface. Closer to the surface, the effective radii become smaller, and for a completely solvent-exposed side chain one can expect Ri to approach ρi. Note that the effective radii depend on the molecule's conformation and must be recomputed every time the conformation changes.
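Equations (4) and (5) translate directly into a double loop. This is an illustrative O(N²) Python sketch, not Amber's implementation: it assumes the effective Born radii Ri are already known, takes κ in Å⁻¹, and uses an approximate Coulomb constant of 332.06 kcal·Å/(mol·e²) so that energies come out in kcal/mol. The i = j terms of the double sum reproduce the Born self-energy of eq. (6).

```python
import math

COULOMB = 332.06  # kcal*Å/(mol*e^2), approximate; converts e^2/Å to kcal/mol

def f_gb(r, r_i, r_j):
    """Eq. (5): equals sqrt(R_i R_j) at r = 0 (R_i when i == j) and approaches r as r -> infinity."""
    return math.sqrt(r * r + r_i * r_j * math.exp(-r * r / (4.0 * r_i * r_j)))

def gb_energy(charges, positions, born_radii, eps_w=78.5, kappa=0.0):
    """Eq. (4): GB estimate of Delta G_el in kcal/mol.

    The double sum runs over all i, j, including i == j (the Born self terms).
    kappa is the Debye-Hückel screening parameter in 1/Å (0 for pure water).
    """
    total = 0.0
    n = len(charges)
    for i in range(n):
        for j in range(n):
            r = math.dist(positions[i], positions[j])
            f = f_gb(r, born_radii[i], born_radii[j])
            total += charges[i] * charges[j] / f * (1.0 - math.exp(-kappa * f) / eps_w)
    return -0.5 * COULOMB * total
```

For a single monovalent ion with R = 1.5 Å, `gb_energy([1.0], [(0.0, 0.0, 0.0)], [1.5])` gives about −109 kcal/mol, the Born estimate discussed above. For two ions far apart, the cross term approaches −qiqj(1 − 1/εw)/rij, the difference between the Coulomb interaction in solvent and in vacuum.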

The efficiency of computing the effective radii is therefore a critical issue, and various approximations are normally made to obtain an efficient estimate of ΔGel. In particular, the so-called Coulomb field approximation is often used, which approximates the electric displacement around an atom by the Coulomb field of its own charge, D(r) ≈ qi r/|r|³, with r measured from the center of atom i. Within this assumption, the following expression for Ri can be derived:82,101

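$$R_i^{-1} = \rho_i^{-1} - I, \qquad I = \frac{1}{4\pi}\int_{\text{solute},\; r > \rho_i} \frac{d^3r}{r^4} \qquad (7)$$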

where the integral is over the solute volume, excluding a sphere of radius ρi around atom i. For a realistic molecule, the solute boundary (molecular surface) is anything but trivial, so further approximations are often made to obtain a closed-form analytical expression. The Amber programs have adopted the pairwise de-screening approach of Hawkins, Cramer, and Truhlar,102,103 which leads to a GB model termed GBHCT. The electrostatic screening effects of (monovalent) salt are incorporated104 into eq. (4) via the Debye–Hückel screening parameter κ. Note that within the implicit solvent framework, one does not use explicit counterions unless these are known to remain “fixed” in specific positions around the solute. To set up an MD simulation based on an implicit solvation model, one requires a set of atomic radii {ρi}, an extra set of input parameters compared to the explicit solvent case. Over the years, a number of slightly different radii sets have been proposed, each optimal for some class of problems, for example, computing solvation free energies of molecules. A good set is expected to perform reasonably well across different types of problems, that is, to be transferable. One example is the Bondi radii set, originally proposed in the context of geometrical analysis of macromolecular structures but later found to be useful in continuum electrostatics models as well.105
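The pairwise de-screening idea can be illustrated by evaluating the integral I of eq. (7) exactly for a single neighboring sphere and then summing over neighbors, ignoring overlaps between the neighboring spheres; that neglect of overlaps is the essence of the GBHCT approximation. The sketch is illustrative, not Amber's code: the descreening radii passed in (in practice, scaled atomic radii) are hypothetical inputs, and the radius offset used in practice is omitted.

```python
import math

def pair_descreening(rho_i, r, s_j):
    """Exact value of (1/4π) ∫ x^-4 d^3x over a sphere of radius s_j whose centre is a
    distance r > 0 from atom i, excluding the sphere of radius rho_i around atom i."""
    upper = r + s_j
    if rho_i >= upper:
        return 0.0  # sphere j lies entirely inside the excluded sphere
    lower = max(rho_i, abs(r - s_j))
    # Shells with lower < x < upper are only partially inside sphere j
    value = 0.5 * (1.0 / lower - 1.0 / upper
                   + 0.25 * r * (1.0 / upper**2 - 1.0 / lower**2)
                   + 0.5 / r * math.log(lower / upper)
                   + 0.25 * s_j**2 / r * (1.0 / lower**2 - 1.0 / upper**2))
    if rho_i < s_j - r:
        # Atom i lies inside sphere j: shells rho_i < x < s_j - r are entirely inside
        value += 1.0 / rho_i - 1.0 / lower
    return value

def hct_born_radius(i, positions, radii, descreen_radii):
    """GBHCT-style effective radius, R_i^-1 = rho_i^-1 - I_i (eq. (7)), with I_i
    approximated as a sum of independent pairwise sphere contributions."""
    integral = sum(pair_descreening(radii[i], math.dist(positions[i], positions[j]),
                                    descreen_radii[j])
                   for j in range(len(positions)) if j != i)
    # If integral >= 1/rho_i this expression breaks down; a real code must guard against it.
    return 1.0 / (1.0 / radii[i] - integral)
```

For a distant neighbor the pair term tends to the volume of sphere j times 1/(4πr⁴), and an isolated atom keeps Ri = ρi, as expected from eq. (7). Because neighboring spheres are treated independently, the gaps between them count as solvent, which is the origin of the underestimated radii for buried atoms discussed below.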

Because the GB model shares the same underlying physical approximation, continuum electrostatics, with the Poisson–Boltzmann approach, it is natural to use the PB model as a reference when optimizing GB performance. In particular, it has been shown that very good agreement between the GB and PB models can be achieved if the effective Born radii match those computed exactly using the PB approach.99 Therefore, by improving the way the effective radii are computed within the analytic generalized Born model, one can improve the accuracy of the GB model. However, agreement with PB calculations is not the only criterion of optimal GB performance; other tests include comparisons to explicit solvent simulation results and to experiment. Also, to ensure computational efficiency in molecular dynamics simulations, the analytical expressions used to compute the effective Born radii, which enter eq. (4), must be simple enough, and the resulting electrostatic energy component ΔGel must be “well-behaved,” so as not to cause instabilities in the numerical integration of Newton's equations of motion. The versions of the GB model currently available in Amber reflect various stages of the evolution of this approach; an analysis of these and other popular GB models has recently appeared.106 Historically, the first GB model to appear in Amber was GBHCT. It was later found that this model worked well on small molecules, but not so well on macromolecules, relative to the PB treatment. Note that in GBHCT, the integral used to estimate the effective radii is performed over the VDW spheres of the solute atoms, which implies a definition of the solute volume in terms of a set of spheres, rather than the more complex but realistic molecular surface commonly used in PB calculations.
For macromolecules, this approach tends to underestimate the effective radii of buried atoms,101 arguably because the standard integration procedure treats the small vacuum-filled crevices between the VDW spheres of protein atoms as if they were filled with water, even for structures with a large interior.99 This error is expected to be greatest for deeply buried atoms characterized by large effective radii, while for surface atoms it is largely canceled by the opposing error arising from the Coulomb field approximation, which tends82,107,108 to overestimate Ri.

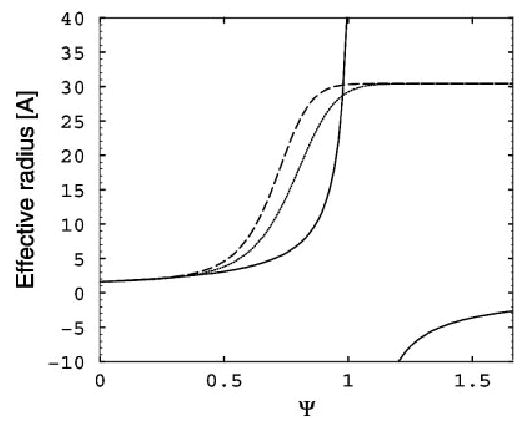

The deficiency of the GBHCT model described above can, to some extent, be corrected by rescaling the effective radii by an amount that depends on the degree of the atom's burial, as quantified by the value I of the integral in eq. (7). This integral is large for deeply buried atoms and small for exposed ones. Consequently, one seeks a well-behaved rescaling function that leaves Ri close to its GBHCT value when I is small and increases it when I becomes large. One would also want a “smooth” upper bound on Ri vs. I to ensure numerical stability (see eq. (8) and Fig. 7). Although there is certainly more than one way to satisfy these constraints, the following simple, infinitely differentiable rescaling function was chosen to replace the original GBHCT expression for the effective radii:

Figure 7.

Graphical representation of different expressions used to compute the effective Born radius from the scaled volume Ψ. The broken lines correspond to eq. (8) of the GBOBC model, with parameters corresponding to igb = 2 (dashed) and igb = 5 (dotted). The GBHCT model (corresponding to igb = 1 in Amber) is shown as a solid line. All curves are computed for ρi = 1.7 Å.

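$$R_i^{-1} = \tilde{\rho}_i^{-1} - \rho_i^{-1}\tanh\left(\alpha\Psi - \beta\Psi^2 + \gamma\Psi^3\right) \qquad (8)$$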

where Ψ = Iρ̃i is the scaled volume, ρ̃i = ρi − 0.09 Å is the offset atomic radius, and α, β, and γ are adjustable dimensionless parameters that were optimized using the guidelines mentioned earlier (primarily agreement with PB).

Currently, Amber supports two GB models (termed GBOBC) based on eq. (8). They differ in the values of {α, β, γ} and are invoked by setting igb to 2 or 5. The details of the optimization procedure, and the performance of the GBOBC model relative to the PB treatment and in MD simulations of proteins, are described in the original article.109
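Equation (8) and the GBHCT expression can be compared directly as functions of the scaled volume Ψ, as in Fig. 7. In this sketch the offset radius is ρ̃i = ρi − 0.09 Å and Ψ = Iρ̃i; the offset and the (α, β, γ) values for igb = 2 and igb = 5 are the values commonly quoted for Amber, so treat them as assumptions to check against the Amber manual for the version in use. The sketch also assumes GBHCT uses the same offset radius, in which case it reads Ri⁻¹ = ρ̃i⁻¹ − I = (1 − Ψ)/ρ̃i and diverges at Ψ = 1. The function names are hypothetical.

```python
import math

OFFSET = 0.09  # Å; assumed radius offset, rho_t = rho - OFFSET
# Assumed (alpha, beta, gamma) for the two GBOBC variants, keyed by igb
OBC_PARAMS = {2: (0.8, 0.0, 2.909125), 5: (1.0, 0.8, 4.85)}

def obc_radius(psi, rho, igb=5):
    """Eq. (8): R^-1 = rho_t^-1 - rho^-1 * tanh(alpha*psi - beta*psi^2 + gamma*psi^3)."""
    alpha, beta, gamma = OBC_PARAMS[igb]
    rho_t = rho - OFFSET
    inv_r = 1.0 / rho_t - math.tanh(alpha * psi - beta * psi**2 + gamma * psi**3) / rho
    return 1.0 / inv_r

def hct_radius(psi, rho):
    """GBHCT in the same variables: R^-1 = (1 - psi) / rho_t; diverges at psi = 1."""
    rho_t = rho - OFFSET
    return rho_t / (1.0 - psi)

if __name__ == "__main__":
    rho = 1.7  # Å, as in Fig. 7
    for psi in (0.0, 0.25, 0.5, 0.75, 0.9):
        print(f"psi={psi:4.2f}  HCT={hct_radius(psi, rho):6.2f}  "
              f"OBC(igb=2)={obc_radius(psi, rho, 2):6.2f}  "
              f"OBC(igb=5)={obc_radius(psi, rho, 5):6.2f}")
```

Because tanh saturates at 1, the rescaled radius in this sketch can never exceed ρiρ̃i/0.09 Å (about 30 Å for ρi = 1.7 Å), which provides the smooth upper bound mentioned above, while for small Ψ both expressions start from Ri = ρ̃i.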

Enhancing Conformational Sampling

As described above, the use of continuum solvents can improve sampling due to reduced solvent viscosity. This section describes several other methods implemented in Amber to improve conformational sampling. The reader is also encouraged to read articles in a recent special journal issue dedicated to conformational sampling techniques.110

Locally Enhanced Sampling

Locally enhanced sampling (LES)111 is a mean-field approach that has proven useful in improving sampling through a reduction in internal barrier heights. In brief, the LES method provides the opportunity to focus computational resources on the portion of the system of interest by replacing it with multiple copies. The copies do not directly interact, and the system responds to the average force felt through interaction with all of the copies. The mean-field effect obtained from this averaging provides a smoothing effect of the energy landscape,112 improving sampling efficiency through reduction of barrier heights. In this manner, one also obtains multiple trajectories for the region of interest without repeating the entire simulation.

In Amber, the addles module is used to prepare a LES simulation (see Fig. 1). Addles reads files generated by Leap and outputs the corresponding files with the LES copies defined by the user in the input. In addition to replicating atoms, the force field parameters are modified: the LES energy function is constructed so that the energy of a LES system in which all copies occupy identical positions equals the energy of the original system with the same coordinates. This maintains the proper balance of interactions between LES and non-LES atoms, and also maintains an exact correspondence between the global minima of the LES and non-LES systems.112 This makes LES particularly useful for global optimization problems such as structure refinement. For example, LES simulations applied to the optimization of protein loop conformations are nearly an order of magnitude more efficient than standard MD simulations.113
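The LES energy construction described above can be checked on a toy system. The sketch is a hypothetical illustration, not the addles algorithm itself: it uses a single LES region, a made-up Lennard-Jones pair potential in reduced units, and the usual single-region scaling in which every term involving a copy (its internal energy and its interaction with the non-LES atoms) is multiplied by 1/N, while copies never interact with one another. Multiple LES regions, which need additional cross-scaling, are not handled.

```python
from itertools import combinations

def pair_energy(a, b):
    """Toy Lennard-Jones pair energy (epsilon = sigma = 1, reduced units); hypothetical."""
    r2 = sum((x - y) ** 2 for x, y in zip(a, b))
    inv6 = 1.0 / r2 ** 3
    return 4.0 * (inv6 * inv6 - inv6)

def total_energy(coords):
    """Energy of an ordinary (non-LES) system: sum over all distinct atom pairs."""
    return sum(pair_energy(coords[i], coords[j])
               for i, j in combinations(range(len(coords)), 2))

def les_energy(env, copies):
    """Energy of a system with one LES region replicated into N copies.

    env    -- coordinates of the non-LES atoms
    copies -- N lists, each holding the coordinates of one copy of the LES region
    Copies do not interact with each other; each copy's internal energy and its
    interaction with the non-LES atoms are scaled by 1/N (mean-field averaging).
    """
    n = len(copies)
    energy = total_energy(env)  # non-LES/non-LES terms are unchanged
    for copy in copies:
        internal = total_energy(copy)
        cross = sum(pair_energy(a, b) for a in copy for b in env)
        energy += (internal + cross) / n
    return energy
```

With all N copies at the same coordinates, `les_energy` reproduces `total_energy` of the original system, which is the correspondence the text relies on; with the copies apart, the non-LES atoms feel the average of the copies' interactions, which is the source of the mean-field smoothing.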

LES can be employed with several solvent models. Explicit solvent models with PME are supported, and this approach has been used to successfully refine structures of proteins and nucleic acids.114,115 One potential drawback to the use of LES with explicit solvent is that the solvent molecules are typically not placed in the set of atoms that are replicated with LES. Thus, the solvent will interact with all of the copies, and moving the copies apart can require the creation of a larger solvent cavity. This results in a free energy penalty analogous to the hydrophobic effect, and tends to reduce the independence of the copies through an indirect coupling. In addition, the solvent molecules surrounding the group of copies may not be able to simultaneously provide ideal solvation for each of the copies.

Because it is desirable to maximize the independence of the replicas during the simulation, to increase both the amount of phase space that is sampled and the magnitude of the mean-field smoothing effect, Amber also supports the use of the GB solvent model in LES simulations. This GB + LES approach has been described in detail.116 Each LES copy is individually solvated, avoiding the caging effect described above and providing more realistic solvation of the LES atoms. We have observed that GB + LES permits observation of independent transition pathways in a single simulation.

A drawback to the GB + LES approach (in addition to the approximations inherent to the GB models) is that the calculation of effective Born radii becomes more complex, requiring multiple effective radii for each of the non-LES atoms. Increased computational overhead is associated with the use of these extra radii, but this can be significantly reduced through the use of a radii difference threshold that allows atoms to be assigned a single radius if the set of radii differ by less than the threshold. The details of this approximation have been described,116 and a value of 0.01 Å is recommended.
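The radii-difference threshold can be sketched as a simple per-atom check. This is a hypothetical illustration of the idea only: the function name is made up, and which single radius is kept when the copies agree (here, their mean) is an assumption rather than a documented Amber detail.

```python
def merge_born_radii(per_copy_radii, threshold=0.01):
    """Collapse the effective Born radii of one non-LES atom (one value per LES copy,
    in Å) to a single radius if they all lie within `threshold` of each other."""
    spread = max(per_copy_radii) - min(per_copy_radii)
    if spread < threshold:
        # Assumption: represent the nearly identical radii by their mean
        return [sum(per_copy_radii) / len(per_copy_radii)]
    return list(per_copy_radii)
```

With the recommended 0.01 Å threshold, a non-LES atom far from the LES region, whose effective radius barely depends on where the copies are, would typically carry one radius instead of N, which is where the savings come from.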