Abstract

Objectives. We explored the effect of disseminating evidence-based guidelines that promote physical activity on organizational practices in US health departments.

Methods. We implemented a quasi-experimental design to examine the effects of disseminating recommended guidelines to promote physical activity (The Guide to Community Preventive Services) in 8 study states; the remaining states and the Virgin Islands served as the comparison group. Guidelines were disseminated through workshops, ongoing technical assistance, and distribution of an instructional CD-ROM. The main evaluation tool was a pre- and postdissemination survey administered to state and local health department staffs (baseline n=154; follow-up n=124).

Results. After guidelines were disseminated through workshops, knowledge of and skill in 11 intervention-related characteristics increased from pre- to postworkshop assessment. From baseline to follow-up, awareness-related characteristics tended to increase more among local respondents than among state participants. Intervention adoption and implementation showed a pattern of increase among state practitioners, but findings were mixed among local respondents.

Conclusions. Our exploratory study identifies several dissemination approaches that practitioners should consider as they seek to promote physical activity in the populations they serve.

Lack of physical activity is closely linked with the incidence of several chronic diseases and a lower quality of life.1,2 There is now an array of physical activity interventions that have been proven to be effective across a variety of populations and geographic settings. For example, the Task Force on Community Preventive Services has produced a set of evidence-based guidelines for promoting physical activity titled The Guide to Community Preventive Services: What Works to Promote Health? (hereafter Community Guide).3,4

In the Community Guide, intervention strategies that show evidence of increased physical activity in targeted populations are grouped into 3 categories: (1) informational approaches to change the knowledge and attitudes regarding the benefits of and opportunities for physical activity within a community among populations that state and local public health workers serve; (2) behavioral and social approaches to teach the targeted populations the behavioral management skills necessary for successful adoption and maintenance of behavior change and for creating social environments that facilitate and enhance behavioral change; and (3) environmental and policy approaches to change the structure of physical and organizational environments to provide safe, attractive, and convenient places for physical activity. Across these 3 categories, 8 specific intervention strategies were found to have sufficient or strong evidence of effectiveness.3,4

Effective intervention strategies, such as those in the Community Guide, can be implemented in community settings through the efforts of numerous agencies, organizations, and individuals. State and local health departments are key to promoting physical activity interventions because of their ability to assess public health problems, develop appropriate programs and policies, and ensure that those programs and policies are effectively delivered and implemented.5,6 However, data are lacking on effective methods for disseminating recommended physical activity interventions in community settings through public health agencies.

Even the most innovative scientific discoveries (e.g., a new and effective intervention strategy in the Community Guide) do not become a standard of professional practice unless targeted and sustained efforts are used to enhance their dissemination.7–11 Three reviews show the limited extent to which effective interventions were disseminated and institutionalized. In a content analysis of 1210 articles from 12 prominent public health journals, Oldenburg et al.12 classified 89% of published studies as basic research and development. They classified another 5% as innovation development studies, less than 1% as diffusion studies (close to our use of the term dissemination), and 5% as institutionalization studies. Similarly, Sallis et al.13 conducted a content analysis of 4 journals and found that 2% to 20% of articles fell in a category defined as “translate research to practice.” A recent systematic review of 31 dissemination studies in cancer control found no strong evidence to recommend any 1 dissemination strategy as effective for promoting the uptake of interventions.14 A variety of organizational factors are likely to influence readiness to change and dissemination (e.g., resources, organizational capacity, time frame).15

We sought to better understand the dissemination of information regarding physical activity guidelines across the United States in state and local health departments. We focused particularly on the evidence-based guidelines in the Community Guide. We describe the extent of awareness and adoption of evidence-based physical activity guidelines in state and local public health departments and examine the effectiveness of active dissemination efforts among state and local public health practitioners.

METHODS

Design and Sample

We implemented a quasi-experimental design to examine the effect of disseminating evidence-based intervention strategies on the promotion of physical activity in state and local health departments. The 8 study states were selected to reflect geographic dispersion and capacity to implement physical activity interventions. Capacity (i.e., existing resources, current interventions, policy environment) was estimated on the basis of our 2003 baseline survey.16 The target audience for dissemination efforts was public health practitioners (i.e., people who direct and implement population-based intervention programs in state, city, or county health departments, and their affiliated partners). The remaining states and the Virgin Islands served as the comparison group.

Dissemination Activities

We used 3 interrelated dissemination approaches: workshops, ongoing technical assistance, and the distribution of an instructional CD-ROM. To inform the dissemination approaches, formative research was conducted using 11 key informant interviews in February 2003. Each interview took approximately 30 minutes and was designed to gather information from opinion leaders within state health departments who were knowledgeable about applying data and scientific findings to physical activity programs and policies. Questions assessed sources of credible information, influences on decision-making, and familiarity with and uses of evidence-based intervention approaches such as those in the Community Guide. All interviews were taped, transcribed, and summarized.

Workshops to promote evidence-based intervention strategies.

Eight workshops for state and local health departments and their partners were convened between August 2003 and March 2004. There were 200 attendees and an average of 25 attendees per workshop. Participants represented a range of professions including program managers (45%), health educators (23%), epidemiologists (2%), and department heads (2%).

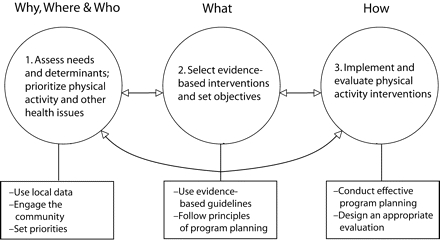

The workshops had 6 objectives, which were organized around the framework shown in Figure 1: to help participants understand (1) the burden of physical inactivity, (2) state and local patterns of physical activity, (3) basic concepts of evidence-based decisionmaking in public health settings, (4) selected tools to enhance evidence-based practice, (5) the Community Guide physical activity interventions, and (6) how the Community Guide can be used to implement and evaluate physical activity programs and policies. In addition to hearing formal presentations, attendees participated in case study applications of interventions, which incorporated their experience and “real world” examples along with intervention strategies from the Community Guide.

FIGURE 1—

Framework for a systematic approach to promoting effective physical activity programs and policies.

Ongoing technical assistance.

After the workshops, we offered ongoing technical assistance to the state and local health departments for which workshops had been conducted. Workshop attendees and others in the 8 study states could select from a list of possible technical assistance topics, including the following:

Assistance with strategic planning that incorporated evidence-based decision making regarding physical activity

Assistance with grant writing related to evidence-based approaches for promoting physical activity

Tuition waivers for health department staff members to attend our course, Evidence-Based Public Health17

Phone and e-mail consultation about effective intervention planning

Guidance on how a state health department might best work with city and county health departments to disseminate Community Guide recommendations

After the workshops, additional technical assistance was provided to 6 of the 8 study states.

Instructional CD-ROM development and distribution.

In addition to conducting in-person workshops and providing ongoing technical assistance, we created a CD-ROM that provided background on evidence-based approaches and skills to enhance adoption of the Community Guide. The CD-ROM was a mini-version of the workshop and was designed to provide additional information to state and local practitioners who were unable to attend the workshop. In addition to the project team, prominent public health leaders were featured on the CD-ROM, including David Satcher, Steven Blair, and William Dietz. Because key informant interviews indicated that “tools” would be helpful, the CD-ROM also featured a resources section to help viewers put the Community Guide’s physical activity recommendations into practice. This section included the Centers for Disease Control and Prevention’s evaluation handbook, slide sets, grant-related resources, selected government reports, and selected Web sites. Distribution of the CD-ROM began in October 2004 and reached 228 individuals in the 8 study states. Recipients included workshop participants and other individuals who could help promote physical activity in those states.

Evaluation and Data Collection

According to diffusion theory, the dissemination of programs and policies usually occurs in stages.18,19 We therefore adopted a 3-stage framework for our evaluation. The awareness stage comprised the actions we took to make target audiences aware of effective interventions across sites and settings.20,21 In the adoption and implementation stage, we examined the workshop participants’ “decision to make full use of an innovation as the best course of action available,”18 which determined whether an evidence-based intervention was carried out. Finally, in the maintenance stage, we measured the extent to which adequate resources and policy support existed to sustain interventions.

Evaluation Tool

The principal evaluation tool we used was a pre- and postdissemination survey administered to state and local health departments.

On the basis of our input and previous literature,18,19,22–24 we developed a 46-item questionnaire. First, 5 staff members who worked in health communication research at Saint Louis University (St. Louis, Mo) pre-tested the questionnaire for length, clarity, and organization. By using established methods of cognitive response testing,25–27 we obtained feedback and revised the instrument. After revision, the questionnaire underwent a second round of testing with mid- to senior-level employees of a large county health department. Within a 7- to 10-day period, 15 people completed the survey twice to examine test–retest properties. On the basis of these results, questions with concordance (r values) less than 0.60 were either discarded or revised. The final questionnaire included 25 questions (some with multiple parts) that covered 4 major areas: (1) awareness and use of the Community Guide, (2) physical activity programs and priorities, (3) funding and the policy environment, and (4) biographical information about the respondent (the questionnaire is available at http://prc.slu.edu/articles.htm).
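The test–retest screen described above can be illustrated with a short sketch. It assumes that each item was scored numerically at both administrations and that “concordance” was measured as a Pearson correlation between the two sets of answers; the item names and responses are hypothetical, and categorical items might instead call for an agreement statistic such as kappa.

```python
from math import sqrt

RETEST_THRESHOLD = 0.60  # items below this r were discarded or revised


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of item scores."""
    n = len(first)
    if n != len(second) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation undefined when responses do not vary")
    return cov / sqrt(var_a * var_b)


def flag_unstable_items(test, retest, threshold=RETEST_THRESHOLD):
    """Return {item: r} for items whose test-retest r falls below threshold."""
    flagged = {}
    for item, scores in test.items():
        r = pearson_r(scores, retest[item])
        if r < threshold:
            flagged[item] = round(r, 3)
    return flagged


# Hypothetical answers from 5 people who completed the survey twice
test = {"q1_priority": [1, 2, 3, 4, 5], "q2_support": [2, 4, 1, 5, 3]}
retest = {"q1_priority": [1, 2, 3, 5, 5], "q2_support": [5, 1, 4, 2, 3]}
print(flag_unstable_items(test, retest))  # → {'q2_support': -0.8}
```

Here q1_priority is stable (r of about 0.97) and would be kept, whereas q2_support would be a candidate for revision or removal.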

At the state level, the respondent for the survey was the physical activity program contact person in the health department of each state, Guam, and the Virgin Islands. These individuals were identified by using the Centers for Disease Control and Prevention’s state-based Physical Activity Program Directory28 and through leadership information listed within the directory of the National Association of Chronic Disease Directors.29 The role of the physical activity program contact person from each state is to lead and facilitate activities for promoting physical activity, serve as a clearinghouse for information, and develop new initiatives. The role is a set of responsibilities rather than a specific job title.

At the local level, the National Association of County and City Health Officials provided a list of 510 local health departments in the United States serving jurisdictions with populations of 100 000 or more. The baseline survey was conducted by e-mail and fax from March through June 2003, and the follow-up survey from April through July 2005. Response rates at both time points were higher among state (baseline = 94%; follow-up = 98%) than among local respondents (baseline = 73%; follow-up = 73%).

We also evaluated participant learning at each of the 8 workshops by using an evaluation tool that was similar to the pre- and post-dissemination survey described earlier. This instrument was modeled after earlier workshops conducted by members of the project team on evidence-based public health and tobacco control.17,30 The measures assessed attitudes regarding, as well as knowledge and uses of, evidence-based approaches.

Statistical Analysis

We conducted descriptive analyses to summarize demographic variables for state and local respondents separately. We assessed pre- to postworkshop changes in knowledge, attitudes, skills, and rates of use of evidence-based approaches by organization type (local health department, state health department, other). We evaluated change in knowledge over time for each workshop learning objective in each of these groups using a paired-sample t test. We used analysis of variance to compare the degree of change across the organization types.
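A minimal sketch of the two workshop-level statistics named above follows: a paired-sample t statistic for pre- to postworkshop change within one group, and a one-way analysis of variance F statistic comparing change scores across organization types. It uses only the Python standard library and hypothetical 5-point Likert ratings, and it computes test statistics and degrees of freedom only; P values would come from the t and F distributions in a statistics package, which are not reimplemented here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired-sample t statistic and df for the within-person change post - pre."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need matched pre/post scores for at least 2 people")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def one_way_anova_f(groups):
    """One-way ANOVA F statistic and (df_between, df_within) for change scores."""
    values = [x for g in groups for x in g]
    k, n = len(groups), len(values)
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k)), (k - 1, n - k)


# Hypothetical ratings for one learning objective, before and after a workshop
pre = [2, 3, 3, 2, 4]
post = [3, 4, 4, 4, 4]
print(paired_t(pre, post))  # t of about 3.16 (sqrt(10)) with 4 df

# Hypothetical change scores for state, local, and other participants
print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [4, 5, 6]]))  # F of about 7.0, (2, 6) df
```

Working from per-person change scores in both functions mirrors the design described above, in which each participant serves as his or her own control before groups are compared.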

We used exact logistic regressions to assess the effect of the intervention on change between baseline and follow-up in awareness, adoption and implementation of the intervention, and maintenance of physical activity programs. Awareness was assessed by determining whether administrators and managers were aware of the Community Guide and 6 specific modalities of that awareness (Table 1). Adoption and implementation was assessed by the occurrence of 7 evidence-based programs and whether existing programs were modified or new programs were developed. Maintenance was assessed using 4 variables that detailed staffing, executive and legislative support, and budgetary constraints. Each of these 20 variables was entered independently into a regression equation in which the time-2 value was related to intervention status with control for the time-1 value.
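Exact logistic regression is normally run in statistical software. To illustrate what an exact P value for intervention status means, the sketch below handles only the simplest case, assumed here: a binary follow-up (time-2) outcome, a binary intervention indicator, and a binary baseline (time-1) value. Conditioning on the sufficient statistics for the intercept and the baseline term reduces the exact test of the intervention coefficient to a sum of independent hypergeometric counts, one per baseline stratum. The function names and records are hypothetical. This is not the authors’ code, and it omits the coefficient estimates and confidence intervals that exact logistic software also reports.

```python
from collections import defaultdict
from math import comb


def hypergeom_pmf(k, total, n_intervention, n_success):
    """P(k of n_success positive outcomes fall in intervention units) under H0."""
    return (comb(n_intervention, k) * comb(total - n_intervention, n_success - k)
            / comb(total, n_success))


def exact_intervention_p(records):
    """Exact conditional two-sided P value for the intervention term.

    records: iterable of (intervention, baseline, followup), each coded 0/1.
    Model assumed: logit P(followup = 1) = a + b*intervention + c*baseline.
    """
    # Per baseline stratum: [units, intervention units, successes, intervention successes]
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for x, z, y in records:
        s = strata[z]
        s[0] += 1
        s[1] += x
        s[2] += y
        s[3] += x * y
    # Null distribution of T (total intervention successes), built by convolution
    dist = {0: 1.0}
    t_obs = 0
    for total, n_int, n_succ, k_obs in strata.values():
        t_obs += k_obs
        k_min = max(0, n_succ - (total - n_int))
        k_max = min(n_int, n_succ)
        new_dist = defaultdict(float)
        for t, p in dist.items():
            for k in range(k_min, k_max + 1):
                new_dist[t + k] += p * hypergeom_pmf(k, total, n_int, n_succ)
        dist = new_dist
    p_obs = dist[t_obs]
    # Two-sided: sum probabilities of all T values no more likely than the observed one
    p_value = sum(p for p in dist.values() if p <= p_obs * (1 + 1e-9))
    return t_obs, min(p_value, 1.0)


# With a single baseline stratum this reduces to Fisher's exact test (P = 2/70)
fisher_like = [(1, 0, 1)] * 4 + [(0, 0, 0)] * 4
print(exact_intervention_p(fisher_like))
```

Because the test conditions on the observed margins, small samples such as the 8 intervention states often yield coarse null distributions, which is one reason exact P values can be large even when net changes look substantial.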

TABLE 1—

Change from Baseline to Follow-Up in the State and Local Health Department Survey of Physical Activity Programs, United States, 2003–2005

| Characteristic | State Baseline Prevalence, % | State Net Change,ᵃ % | State P | Local Baseline Prevalence, % | Local Net Change,ᵃ % | Local P |
|---|---|---|---|---|---|---|
| Awareness | | | | | | |
| Administrators and managers at health dept. are aware of the Community Guideᵇ | 80.5 | 28.0 | .635 | 10.5 | 6.4 | .636 |
| Community Guide awareness | | | | | | |
| Heard of recommendations in the Community Guide | 89.8 | 0.0 | … | 29.5 | 28.6 | .040 |
| Read or seen materials of the Community Guide | 85.7 | 0.0 | … | 21.0 | 31.6 | .260 |
| Visited Community Guide Web site | 68.8 | −15.4 | .576 | 18.1 | 11.5 | .808 |
| Printed materials from Web site | 47.9 | −23.7 | .272 | 8.6 | −2.5 | .639 |
| Attended training to learn about the Community Guide | 20.8 | 39.5 | .553 | 1.0 | 23.7 | .025 |
| Attended professional meeting during which the Community Guide was discussed | 51.1 | 16.5 | > .999 | 7.6 | 13.6 | .314 |
| Adoption and implementation | | | | | | |
| Physical activity interventions underway | | | | | | |
| Communitywide campaigns | 71.4 | 15.1 | > .999 | 44.8 | −10.7 | .646 |
| Stair-use campaigns | 40.8 | 6.1 | > .999 | 22.9 | −12.6 | .607 |
| School-based physical education programs | 73.5 | 7.8 | .867 | 34.3 | −7.8 | .378 |
| Social-support interventions | 71.4 | 28.7 | .112 | 55.2 | −9.9 | .276 |
| Individually adapted health behavior change | 61.2 | 15.6 | > .999 | 42.9 | 20.0 | .001 |
| Enhanced access and outreach programs | 85.7 | 29.6 | .884 | 67.6 | 23.2 | .270 |
| Urban planning and policy approaches | 73.5 | 34.6 | .132 | 39.0 | 17.8 | .575 |
| Changes occurred on the basis of the Community Guide | | | | | | |
| Existing programs were changed | 20.4 | −0.5 | > .999 | 0.0 | 12.5 | .348 |
| New programs were developed or implemented | 34.7 | 9.2 | > .999 | 3.8 | 21.7 | .374 |
| Maintenance | | | | | | |
| My agency’s staff is adequate for developing and implementing physical activity interventions | 14.6 | −0.3 | > .999 | 14.9 | −14.4 | .305 |
| The governor is supportive of physical activity interventions | 35.4 | 19.6 | .822 | 44.6 | −23.2 | .074 |
| Most state legislators are supportive of physical activity interventions | 21.3 | 34.9 | .198 | 15.8 | −37.9 | .022 |
| Budget constraints have disproportionately affected programs and staff to promote physical activity | 63.6 | −39.9 | .109 | 83.8 | −19.5 | .627 |

ᵃNet percentage change was calculated using the formula (%intervention-post − %intervention-pre) − (%comparison-post − %comparison-pre).

ᵇThe full title is The Guide to Community Preventive Services: What Works to Promote Health?

We also created 2 summary dependent variables. The first was an intervention assessment (adoption) variable in which program activity was summed over 7 evidence-based programs and policies.3,4 These represented the 8 interventions recommended in the Community Guide; 2 were collapsed into a single category because of their similarity (i.e., street-scale and community-scale changes in the urban planning and policy category were combined). The second summary dependent variable was a level-of-awareness variable in which 7 awareness-related factors were summed. The net difference in each of these 2 measures was then calculated to create variables that assessed the pre- to postdissemination change in the number of interventions and in awareness, accounting for baseline differences between intervention and comparison sites, using the formula:

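$$\text{Net difference} = \left(X_{\text{intervention, post}} - X_{\text{intervention, pre}}\right) - \left(X_{\text{comparison, post}} - X_{\text{comparison, pre}}\right) \qquad (1)$$

where X denotes the summary measure (number of interventions or level of awareness).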
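Footnote a of Table 1 defines net change as a difference in differences between intervention and comparison sites. The sketch below shows that arithmetic for a single characteristic and for a summed score. It is a minimal illustration, assuming 0/1 indicators per respondent; the field names for the 7 intervention categories and all respondent data are hypothetical.

```python
from statistics import mean

# Hypothetical field names for the 7 intervention categories in Table 1
INTERVENTIONS = [
    "communitywide_campaigns", "stair_use_campaigns", "school_pe",
    "social_support", "individually_adapted", "access_outreach",
    "urban_planning_policy",
]


def percent_yes(indicators):
    """Percentage of respondents coded 1 (yes) on a 0/1 indicator."""
    return 100.0 * sum(indicators) / len(indicators)


def net_change(int_pre, int_post, comp_pre, comp_post):
    """(intervention post - pre) - (comparison post - pre)."""
    return (int_post - int_pre) - (comp_post - comp_pre)


def summary_score(respondent, items=INTERVENTIONS):
    """Number of listed interventions a respondent reports as underway."""
    return sum(respondent.get(item, 0) for item in items)


# One characteristic, hypothetical respondents coded 0/1
state_net = net_change(
    int_pre=percent_yes([1, 0, 0, 0, 1]),    # 40%
    int_post=percent_yes([1, 1, 0, 1, 1]),   # 80%
    comp_pre=percent_yes([1, 0, 1, 0]),      # 50%
    comp_post=percent_yes([1, 1, 1, 0]),     # 75%
)
print(state_net)  # 15.0

# Summed score for one respondent, then net change in group mean scores
print(summary_score({"school_pe": 1, "social_support": 1, "urban_planning_policy": 1}))  # 3
print(net_change(mean([2, 3]), mean([4, 5]), mean([3, 3]), mean([3, 4])))  # 1.5
```

Subtracting the comparison group’s change removes secular trends common to all sites, which is how the formula accounts for events outside the study during the follow-up period, at least to the extent that those events affected both groups equally.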

We used least squares linear regression to examine the association of these variables with independent measures of intervention status, activity level, relative priority of physical activity to other health issues, administrative support, authority, timing, funding of programs, and executive and legislative support for physical activity interventions.
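A minimal sketch of the least squares step follows, assuming each respondent contributes one row with a change score as the outcome and numeric predictors (here, hypothetically, intervention status and a second covariate). It solves the normal equations (X'X)b = X'y by Gaussian elimination in plain Python; the function name and data are illustrative only, and a statistics package would normally be used because it also reports standard errors and P values, which this sketch omits.

```python
def ols(rows, outcomes):
    """Least squares coefficients [intercept, b1, ..., bp] via the normal equations."""
    X = [[1.0] + [float(v) for v in r] for r in rows]  # prepend intercept column
    p = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(x[i] * x[j] for x in X) for j in range(p)]
         + [sum(x[i] * y for x, y in zip(X, outcomes))] for i in range(p)]
    # Forward elimination with partial pivoting
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear; X'X is singular")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for c in range(col, p + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution
    coef = [0.0] * p
    for i in reversed(range(p)):
        s = A[i][p] - sum(A[i][j] * coef[j] for j in range(i + 1, p))
        coef[i] = s / A[i][i]
    return coef


# Hypothetical rows: (intervention status, second covariate); outcome = change score
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 3)]
change = [1, 3, -2, 0, -4]  # constructed so that change = 1 + 2*x1 - 3*x2 exactly
print(ols(rows, change))  # approximately [1.0, 2.0, -3.0]
```

Entering all predictors in one model, as here, estimates the association of each variable with change while holding the others constant.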

RESULTS

Change scores from before to after the dissemination workshops were compared by place of employment (state health department, local health department, other; Table 2). From pre- to postworkshop assessment, knowledge and favorable attitudes increased for each of the 11 characteristics. Change scores were larger for local than for state health department participants in every category except understanding methods used to estimate the cost of an intervention. The largest increase occurred in awareness of the Community Guide.

TABLE 2—

Pre- and Postassessment Changes in Knowledge or Skills From Workshops on Evidence-Based Decisionmaking, by Type of Agency: United States, 2003–2004

| Knowledge or Skill | State Health Department (n = 58), Mean Change | P | Local Health Department (n = 55), Mean Change | P | Other Settingsᵃ (n = 80), Mean Change | P | Difference Between Groups, P |
|---|---|---|---|---|---|---|---|
| How to decide if an intervention is scientifically effective | 0.638 | < .001 | 1.061 | < .001 | 0.809 | < .001 | .043 |
| How to decide if an intervention provides good economic value | 0.660 | < .001 | 0.735 | < .001 | 0.717 | < .001 | .915 |
| How to interpret summary data from economic evaluations | 0.478 | < .001 | 0.714 | < .001 | 0.761 | < .001 | .254 |
| How to assess advantages and challenges to using evidence-based interventions | 0.696 | < .001 | 1.208 | < .001 | 0.711 | < .001 | .013 |
| How to assess advantages and challenges to using economic evaluation data | 0.698 | < .001 | 1.143 | < .001 | 0.622 | < .001 | .008 |
| How to understand methods used to estimate the cost of an intervention | 0.543 | < .001 | 0.531 | < .001 | 0.422 | .003 | .789 |
| How to understand methods used to compare the costs and health outcomes of an intervention | 0.630 | < .001 | 0.755 | < .001 | 0.644 | < .001 | .818 |
| Where to find evidence-based physical activity interventions | 0.870 | < .001 | 1.500 | < .001 | 1.364 | < .001 | .015 |
| Awareness of the Guide to Community Preventive Services: What Works to Promote Health? | 1.087 | < .001 | 1.8337 | < .001 | 1.977 | < .001 | .002 |
| Awareness of Physical Activity and Health: A Report of the Surgeon General | 0.533 | .003 | 0.653 | < .001 | 0.696 | < .001 | .767 |
| Awareness of the Guide to Clinical Preventive Services | 0.844 | < .001 | 1.367 | < .001 | 1.227 | < .001 | .097 |

Note. Value is the change in the mean value from pre- to postassessment, on the basis of a 5-point Likert scale.

ᵃOther settings include health coalitions, voluntary health agencies, schools, and private businesses.

There were 154 respondents to the baseline health department survey (state n = 49; local n = 105; Table 3). Follow-up rates differed by group (state = 98%; local = 71%). Most state respondents were program managers or administrators, whereas local respondents included more division, bureau, or agency heads and “other” positions (e.g., program planner, nutritionist). State respondents were more likely than were local respondents to have a shorter tenure in their agency, to have a master’s or doctorate degree, and to personally meet physical activity recommendations.

TABLE 3—

Characteristics of Participants in the State and Local Health Department Survey of Physical Activity Programs: United States, 2003–2005

| Characteristic | State Baseline, % | State Follow-Up, % | Local Baseline, % | Local Follow-Up, % |
|---|---|---|---|---|
| Total sample, no. | 49 | 48 | 105 | 74 |
| Job title | | | | |
| Program manager or administrator | 60.4 | 60.4 | 34.3 | 40.5 |
| Health educator | 14.6 | 22.9 | 9.5 | 18.9 |
| Program planner | 8.3 | 6.3 | 1.0 | 4.1 |
| Division or bureau head | 6.3 | 2.1 | 33.4 | 21.6 |
| Other | 10.4 | 8.3 | 21.9 | 14.9 |
| Years working for health department | | | | |
| < 2 | 16.3 | 19.1 | 13.3 | 6.8 |
| 2–4 | 30.6 | 29.8 | 18.1 | 18.9 |
| 5–9 | 14.3 | 17.0 | 20.0 | 27.0 |
| ≥ 10 | 38.8 | 34.0 | 48.6 | 47.3 |
| Highest degree held | | | | |
| BS or BA | 33.3 | 21.3 | 27.9 | 34.2 |
| MS | 12.5 | 31.9 | 13.5 | 19.2 |
| MPH or MSPH | 18.8 | 21.3 | 12.5 | 15.1 |
| Other master’s degree | 27.1 | 21.3 | 23.1 | 19.2 |
| PhD | 6.3 | 2.1 | 2.9 | 2.7 |
| Other | 2.1 | 2.1 | 20.2 | 9.6 |
| Meets physical activity recommendationᵃ | | | | |
| Yes | 71.4 | 77.1 | 47.6 | 52.7 |
| No | 28.6 | 22.9 | 52.4 | 47.3 |

ᵃObtaining moderate physical activity 5 or more times per week, 30 min or more per day.

Longer-term change among state and local practitioners was assessed from the pre- to postdissemination surveys (Table 1). Baseline awareness rates were higher among state than among local respondents. Baseline adoption and implementation rates were closer, yet percentages were consistently higher among state respondents. Awareness-related characteristics showed no consistent pattern among state participants but tended to increase among local respondents, with statistically significant net increases for 2 local variables (heard of recommendations in the Community Guide and attended training).

The 7 physical activity interventions listed within the adoption and implementation category were those recommended as effective in the Community Guide. Adoption and implementation showed a clear pattern of increase among state practitioners with mixed findings for local respondents. The maintenance variables assessed resources, financial constraints, and political climate. Among state respondents, some maintenance variables increased and some decreased. For local participants, all maintenance variables decreased.

We also examined overall predictors of change in awareness and adoption from baseline to follow-up, using pooled state and local data (not shown). For the summary awareness score, the largest effect was shown for the independent variable of whether the respondent met physical activity recommendations (B = 1.71; P = .08). When predicting whether interventions were adopted from baseline to follow-up, the largest effect was observed for whether the respondent had authority to implement interventions (B = 0.62; P = .08).

DISCUSSION

Although there is growing evidence of the effect of clinical guidelines such as those sponsored by the US Preventive Services Task Force,31–33 compelling evidence for the adoption of evidence-based guidelines in community settings is lacking. Cross-sectional research from Canada suggests that organizational decisionmakers have a positive view of the usefulness of systematic reviews and that these guidelines have had a positive effect on public health practice.34

There are a few examples of successful dissemination and institutionalization of a particular physical activity program. For example, the US Child and Adolescent Trial for Cardiovascular Health is a comprehensive, school-based physical activity and diet change intervention that has now been disseminated and sustained over time.35 By contrast, the goal of our study was to promote and sustain a wide range of evidence-based intervention strategies.

Our study was among the first to apply an active dissemination approach to numerous evidence-based intervention strategies and to measure changes in awareness and adoption. Although we consider our study exploratory, several themes emerged that deserve consideration. We found that baseline awareness of the Community Guide varied greatly between state and local respondents. Our analysis of longer-term change in awareness and uses of evidence-based approaches among public health practitioners showed positive net increases in awareness among local health departments and in adoption and implementation among state health agencies. However, these changes were often not statistically significant, partly because of the limited number of intervention states. As illustrated in Table 3, our sample of practitioners shows greater heterogeneity in job types among local than among state health respondents. This suggests that dissemination planning may need to be tailored differently to these audiences, because one’s professional role and past experiences are likely to influence the way in which evidence and work-related training are assimilated. Just as behavioral interventions are often modified according to the stage of readiness,36,37 dissemination approaches should carefully consider stages as they are developed and implemented.

We found that respondents from state health departments were much more likely to meet the physical activity recommendation than were those from local health departments (71% compared with 48% at baseline). The activity patterns of the people in charge of programs may influence success in implementation (“practice what we preach”). A recent study from Kansas showed that program delivery agents who were physically active were more likely to implement physical activity programs at the local level.38 Staff members who take a personal interest in physical activity may enhance uptake of effective programs and policies. Giving physical activity program coordinators adequate authority to shape priorities might also affect dissemination rates.

In cross-sectional analyses of state health practitioners from 2003,16 most respondents (90%) were aware of evidence-based guidelines to promote physical activity. However, less than half the respondents (41%) had the authority to implement evidence-based programs and policies. A minority of respondents reported receiving support from their state governor (35%) or from most of their state legislators (21%). Several key factors were correlated with the presence of evidence-based interventions, including (1) the presence of state funding for physical activity, (2) the respondent’s participation in moderate physical activity, (3) the presence of adequate staffing, and (4) the presence of a supportive state legislature. These baseline characteristics were derived from cross-sectional analyses. Factors that influence dissemination and change over time may be different. A limitation of our longitudinal analyses was the relatively small sample size. Other limitations included the lack of comprehensive process evaluation data, difficulty determining attribution, and difficulty assessing the effects of other events in the 2-year period from baseline to follow-up. These events external to our study may have included educational campaigns or budget reductions, which could in part explain why some variables (e.g., maintenance measures among local respondents) showed a net decrease during the study period.

From our results and the existing dissemination literature,8,10,39–45 several important topics emerged:

The need for more innovative, active approaches. Many of the dissemination approaches within the US federal health system are passive11 and largely ineffective,8,14,46,47 which suggests the need for more active approaches. It is unclear whether our study approach was active enough to sustain positive changes.

The need for better adaptation to the audience. As the differences between state and local respondents show, dissemination approaches need to be informed by audience analysis and adapted on the basis of the information gathered. For example, our data showed lower baseline rates of awareness and adoption of evidence-based guidelines among local practitioners. Yet the local audience seemed receptive to active dissemination, judging by the large knowledge change scores from our workshops. State and local dissemination approaches should therefore be targeted appropriately, taking into account the relatively low capacity of many local health agencies to control chronic diseases.48 Our study focused mainly on state and local public health workers; it is also important to understand effective dissemination approaches among many other partners (e.g., urban planners, community advocates).

The need for better measures. To determine the success of dissemination approaches, we need reliable and valid indicators of organizational and policy change (in addition to traditional, individual-level end points such as physical activity rates).49 Furthermore, basic economic measures (costs of development, delivery, and training) should be reported to help determine the economic efficiency of an intervention and ultimately enhance the uptake of those programs in various settings.

Greater understanding of mediators and moderators. Little is known about the mediators (intermediate factors that lie in the causal pathway) and moderators (factors that alter the causal effect of an independent variable) of dissemination.49–52 If these can be better characterized, public health researchers will likely identify the pathways that are most promising for interventions.

There is a substantial body of interventions that effectively promote physical activity.3,4 However, researchers and practitioners often lack the knowledge and resources to successfully implement the programs and interventions that have proven effective. If decades of intervention research are to yield their intended public health effect, we must better understand which factors support or inhibit the uptake of effective programs and policies. Our findings suggest several dissemination approaches that practitioners should consider as they seek to promote physical activity in the populations they serve.

Acknowledgments

This study was supported by the Centers for Disease Control and Prevention, Prevention Research Centers Program (grant U48/CCU710806; Special Interest Project 10–02).

The authors are grateful for advice and assistance with this study from Doug Corbett, Jackie Epping, Jonathan Fielding, Jack Hataway, Susan Lukwago, Brad Myers, Lloyd Novick, Darcy Scharff, Anne Seeley, and Craig Thomas.

Human Participant Protection

This study was approved by the Saint Louis University Institutional Review Board.

Peer Reviewed

Contributors

R. C. Brownson originated and led the study and wrote the draft article. P. Ballew coordinated all aspects of the study, collected data, and conducted workshops. K. L. Brown assisted with data collection and analysis. M. B. Elliott assisted with the study and conducted the analysis. D. Haire-Joshu, G. W. Heath, and M. W. Kreuter provided scientific input in all phases of the study, conducted workshops, and provided technical assistance.

References

1. Physical Activity and Health. A Report of the Surgeon General. Atlanta, Ga: US Department of Health and Human Services, Centers for Disease Control and Prevention; 1996.

2. Zaza S, Briss PA, Harris KW, eds. The Guide to Community Preventive Services: What Works to Promote Health? New York, NY: Oxford University Press; 2005.

3. Heath GW, Brownson RC, Kruger J, et al. The effectiveness of urban design and land use and transport policies and practices to increase physical activity: a systematic review. J Phys Act Health. 2006;3(suppl 1):S55–S76.

4. Kahn EB, Ramsey LT, Brownson RC, et al. The effectiveness of interventions to increase physical activity. A systematic review. Am J Prev Med. 2002;22:73–107.

5. Institute of Medicine. The Future of the Public’s Health in the 21st Century. Washington, DC: National Academies Press; 2003.

6. Institute of Medicine. The Future of Public Health. Washington, DC: National Academy Press; 1988.

7. Community Intervention Trial for Smoking Cessation (COMMIT): I. cohort results from a four-year community intervention. Am J Public Health. 1995;85:183–192.

8. Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. The Cochrane Effective Practice and Organization of Care Review Group. BMJ. 1998;317:465–468.

9. Briss PA, Brownson RC, Fielding JE, Zaza S. Developing and using the Guide to Community Preventive Services: lessons learned about evidence-based public health. Annu Rev Public Health. 2004;25:281–302.

10. Brownson RC, Kreuter MW, Arrington BA, True WR. Translating scientific discoveries into public health action: how can schools of public health move us forward? Public Health Rep. 2006;121:97–103.

11. Kerner J, Rimer B, Emmons K. Introduction to the special section on dissemination: dissemination research and research dissemination: how can we close the gap? Health Psychol. 2005;24:443–446.

12. Oldenburg BF, Sallis JF, French ML, Owen N. Health promotion research and the diffusion and institutionalization of interventions. Health Educ Res. 1999;14:121–130.

13. Sallis JF, Owen N, Fotheringham MJ. Behavioral epidemiology: a systematic framework to classify phases of research on health promotion and disease prevention. Ann Behav Med. 2000;22:294–298.

14. Ellis P, Robinson P, Ciliska D, et al. A systematic review of studies evaluating diffusion and dissemination of selected cancer control interventions. Health Psychol. 2005;24:488–500.

15. McLean S, Feather J, Butler-Jones D. Building Health Promotion Capacity: Action for Learning, Learning from Action. Vancouver: University of British Columbia Press; 2005.

16. Brownson RC, Ballew P, Dieffenderfer B, et al. Evidence-based interventions to promote physical activity. What contributes to dissemination by state health departments. Am J Prev Med. 2007;33(suppl 1):S66–S78.

17. Brownson RC, Baker EA, Leet TL, Gillespie KN. Evidence-Based Public Health. New York, NY: Oxford University Press; 2003.

18. Rogers EM. Diffusion of Innovations. 5th ed. New York, NY: Free Press; 2003.

19. Steckler A, Goodman RM, McLeroy KR, Davis S, Koch G. Measuring the diffusion of innovative health promotion programs. Am J Health Promot. 1992;6:214–224.

20. Kar SB. Implications of diffusion research for planned change. Int J Health Educ. 1976;17:192–220.

21. McCormick LK, Steckler AB, McLeroy KR. Diffusion of innovations in schools: a study of adoption and implementation of school-based tobacco prevention curricula. Am J Health Promot. 1995;9:210–219.

22. Brink SG, Basen-Engquist KM, O’Hara-Tompkins NM, Parcel GS, Gottlieb NH, Lovato CY. Diffusion of an effective tobacco prevention program. Part I: Evaluation of the dissemination phase. Health Educ Res. 1995;10:283–295.

23. Parcel GS, O’Hara-Tompkins NM, Harrist RB, et al. Diffusion of an effective tobacco prevention program. Part II: Evaluation of the adoption phase. Health Educ Res. 1995;10:297–307.

24. Riley BL. Dissemination of heart health promotion in the Ontario Public Health System: 1989–1999. Health Educ Res. 2003;18:15–31.

25. Forsyth BH, Lessler JT. Cognitive laboratory methods: a taxonomy. In: Biemer PP, Groves RM, Lyberg LE, Mathiowetz NA, Sudman S, eds. Measurement Errors in Surveys. New York, NY: Wiley-Interscience; 1991:395–418.

26. Jobe JB, Mingay DJ. Cognitive research improves questionnaires. Am J Public Health. 1989;79:1053–1055.

27. Jobe JB, Mingay DJ. Cognitive laboratory approach to designing questionnaires for surveys of the elderly. Public Health Rep. 1990;105:518–524.

28. Centers for Disease Control and Prevention. State-based Physical Activity Program Directory. Available at: http://apps.nccd.cdc.gov/DNPAProg. Accessed August 1, 2006.

29. National Association of Chronic Disease Directors. Promoting Health. Preventing Disease. Available at: http://www.chronicdisease.org. Accessed August 1, 2006.

30. O’Neall MA, Brownson RC. Teaching evidence-based public health to public health practitioners. Ann Epidemiol. 2005;15:540–544.

31. Harris RP, Helfand M, Woolf SH, et al. Current methods of the US Preventive Services Task Force. A review of the process. Am J Prev Med. 2001;20:21–35.

32. Lawrence RS. Diffusion of the US Preventive Services Task Force recommendations into practice. J Gen Intern Med. 1990;5(suppl 5):S99–S103.

33. Woolf SH, DiGuiseppi CG, Atkins D, Kamerow DB. Developing evidence-based clinical practice guidelines: lessons learned by the US Preventive Services Task Force. Annu Rev Public Health. 1996;17:511–538.

34. Dobbins M, Thomas H, O’Brien MA, Duggan M. Use of systematic reviews in the development of new provincial public health policies in Ontario. Int J Technol Assess Health Care. 2004;20:399–404.

35. Hoelscher DM, Feldman HA, Johnson CC, et al. School-based health education programs can be maintained over time: results from the CATCH Institutionalization study. Prev Med. 2004;38:594–606.

36. Prochaska JO, DiClemente CC. Stages and processes of self-change of smoking: toward an integrative model of change. J Consult Clin Psychol. 1983;51:390–395.

37. Prochaska JO, Velicer WF. The Transtheoretical Model of health behavior change. Am J Health Promot. 1997;12:38–48.

38. Estabrooks P, Bradshaw M, Fox E, Berg J, Dzewaltowski DA. The relationships between delivery agents’ physical activity level and the likelihood of implementing a physical activity program. Am J Health Promot. 2004;18:350–353.

39. Glasgow RE, Klesges LM, Dzewaltowski DA, Bull SS, Estabrooks P. The future of health behavior change research: what is needed to improve translation of research into health promotion practice? Ann Behav Med. 2004;27:3–12.

40. Glasgow RE, Marcus AC, Bull SS, Wilson KM. Disseminating effective cancer screening interventions. Cancer. 2004;101:1239–1250.

41. Granados A, Jonsson E, Banta HD, et al. EUR-ASSESS Project subgroup report on dissemination and impact. Int J Technol Assess Health Care. 1997;13:220–286.

42. Johnson JL, Green LW, Frankish CJ, MacLean DR, Stachenko S. A dissemination research agenda to strengthen health promotion and disease prevention. Can J Public Health. 1996;87(suppl 2):S5–S10.

43. Kerner JF, Guirguis-Blake J, Hennessy KD, et al. Translating research into improved outcomes in comprehensive cancer control. Cancer Causes Control. 2005;16(suppl 1):S27–S40.

44. Redman S. Towards a research strategy to support public health programs for behaviour change. Aust N Z J Public Health. 1996;20:352–358.

45. Brownson RC, Haire-Joshu D, Luke DA. Shaping the context of health: a review of environmental and policy approaches in the prevention of chronic diseases. Annu Rev Public Health. 2006;27:341–370.

46. Patterson RE, Kristal AR, Biener L, et al. Durability and diffusion of the nutrition intervention in the Working Well Trial. Prev Med. 1998;27:668–673.

47. Sorensen G, Thompson B, Basen-Engquist K, et al. Durability, dissemination, and institutionalization of worksite tobacco control programs: results from the Working Well Trial. Int J Behav Med. 1998;5:335–351.

48. Frieden TR. Asleep at the switch: local public health and chronic disease. Am J Public Health. 2004;94:2059–2061.

49. Rabin BA, Brownson RC, Kerner JF, Glasgow RE. Methodological challenges in disseminating evidence-based interventions to promote physical activity. Am J Prev Med. 2006;31(suppl 4):S24–S34.

50. Bauman AE, Sallis JF, Dzewaltowski DA, Owen N. Toward a better understanding of the influences on physical activity: the role of determinants, correlates, causal variables, mediators, moderators, and confounders. Am J Prev Med. 2002;23(suppl 2):5–14.

51. Glasgow RE, Klesges LM, Dzewaltowski DA, Estabrooks PA, Vogt TM. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res. 2006;21:688–694.

52. Kraemer HC, Stice E, Kazdin A, Offord D, Kupfer D. How do risk factors work together? Mediators, moderators, and independent, overlapping, and proxy risk factors. Am J Psychiatry. 2001;158:848–856.