Abstract

Objective To verify or refute the value of hospital episode statistics (HES) in determining 30 day mortality after open congenital cardiac surgery in infants nationally in comparison with central cardiac audit database (CCAD) information.

Design External review of paediatric cardiac surgical outcomes in England (HES) and all UK units (CCAD), as derived from each database.

Setting Congenital heart surgery centres in the United Kingdom.

Data sources HES for congenital heart surgery and corresponding information from CCAD for the period 1 April 2000 to 31 March 2002. HES was restricted to the 11 English centres; CCAD covered all 13 UK centres.

Main outcome measure Mortality within 30 days of open heart surgery in infants aged under 12 months.

Results In a direct comparison for the years when data from the 11 English centres were available from both databases, HES omitted between 5% and 38% of infants operated on in each centre. A median 40% (range 0-73%) shortfall occurred in identification of deaths by HES. As a result, mean 30 day mortality was underestimated at 4% by HES as compared with 8% for CCAD. In CCAD, between 1% and 23% of outcomes were missing in nine of 11 English centres used in the comparison (predominantly those for overseas patients). Accordingly, CCAD mortality could also be underestimated. Oxford provided the most complete dataset to HES, including all deaths recorded by CCAD. From three years of CCAD, Oxford's infant mortality from open cardiac surgery (10%) was not statistically different from the mean for all 13 UK centres (8%), in marked contrast to the conclusions drawn from HES for two of those years.

Conclusions Hospital episode statistics are unsatisfactory for the assessment of activity and outcomes in congenital heart surgery. The central cardiac audit database is more accurate and complete, but further work is needed to achieve fully comprehensive risk stratified mortality data. Given unresolved limitations in data quality, commercial organisations should reconsider placing centre specific or surgeon specific mortality data in the public domain.

Introduction

The inquiry into congenital heart surgery deaths in Bristol was widely publicised, became a political issue, and has had a profound effect on surgical practice in the United Kingdom.1 Irrespective of the intense controversy generated by public reporting of mortality statistics in the American healthcare system, the Department of Health has insisted on a similar policy for cardiac surgical outcomes in the UK.2 3 The Freedom of Information Act allows external bodies to access hospital statistics irrespective of whether they are complete, accurate, and substantiated. In these circumstances, any reporting agency has a responsibility to present factual information. Public reporting is particularly sensitive in the realm of paediatric mortality.

The Bristol inquiry used hospital episode statistics (HES) to compare outcomes with those of other congenital cardiac surgical units in the UK.1 Spiegelhalter and colleagues subsequently debated the validity of this approach.4 In 2004 the BMJ published a paper from the “Dr Foster” Unit at Imperial College, London, which used HES to describe mortality in congenital heart surgery.5 The authors applied detailed statistical analysis to non-risk assessed HES data submitted by hospital clerical staff in most but not all UK congenital cardiac surgical centres. The clinical teams did not verify the data. The paper suggested that one unit, Oxford, had significantly higher mortality than the national average for open (with cardiopulmonary bypass) operations in infants and drew damaging conclusions. The information was widely published in the lay press.

The Oxford unit questioned the results before publication, and the central cardiac audit database (CCAD) did so afterwards. A paper based on the CCAD had found no detectable difference in 30 day or one year survival between any of the 13 UK tertiary centres for congenital heart disease for the first year the database operated nationally.6 In contrast, the Dr Foster paper suggested that Oxford had outlying mortality for open procedures between 1991 and 2002 in infants less than 1 year, with a probability of this happening by chance alone of less than 0.0002.5 A multidisciplinary team therefore carried out an investigation to establish the difference between the mortality reported in the BMJ paper and carefully verified death rates for Oxford and other centres. We now report the findings of this investigation.

Methods

Dr Foster epidemiologists obtained HES data by using the Office of Population Censuses and Surveys classification of operations and procedures, fourth revision (OPCS4) codes for open cardiac operations in children from the 11 centres in England between April 1991 and March 2002. HES data are not available for Northern Ireland and Scotland. The Dr Foster group used a list of OPCS4 procedure codes, which were classified as either open or closed cardiac operations on the basis of information from the United Bristol Healthcare NHS Trust. HES does not have a flag to determine whether or not a procedure is done on cardiopulmonary bypass. For several complex paediatric cardiac surgical procedures, no OPCS4 codes were available, and no hierarchical system existed for cases in which more than one operation was done. HES recorded only deaths that occurred at the hospital where the operation took place and during the same admission as the surgery. Procedures that were difficult to define by OPCS4 codes were excluded from the analysis. Aylin and colleagues used HES to compare mortality within 30 days of surgery for each of the 11 centres in England with the average mortality of all centres combined. Their report then focused on mortality in hospital within 30 days of surgery in infants less than 12 months of age who had heart operations under cardiopulmonary bypass.5
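HES counted a death only if it occurred at the operating hospital during the same admission as the surgery. The minimal sketch below illustrates that restriction and why a death after transfer or discharge drops out of the count. It assumes a hypothetical, simplified record: the class `Episode`, its fields, and the function `hes_counts_death` are illustrative names, not the real HES record layout or any Dr Foster code.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Episode:
    """Hypothetical, simplified surgical episode (not the real HES record layout)."""
    operation_date: date
    operating_hospital: str
    admission_id: str
    death_date: Optional[date] = None         # None if no death is known
    death_hospital: Optional[str] = None      # None if death occurred outside hospital
    death_admission_id: Optional[str] = None  # admission during which death occurred


def hes_counts_death(ep: Episode, window_days: int = 30) -> bool:
    """Apply an HES-style restriction: a death counts only if it falls within
    the window AND occurs in the operating hospital during the same admission."""
    if ep.death_date is None:
        return False
    days = (ep.death_date - ep.operation_date).days
    return (
        0 <= days <= window_days
        and ep.death_hospital == ep.operating_hospital
        and ep.death_admission_id == ep.admission_id
    )


# Death on day 10 in the operating hospital, same admission: counted.
in_hospital = Episode(date(2001, 3, 1), "X", "adm1", date(2001, 3, 11), "X", "adm1")
# Death on day 20 after transfer back to a referring hospital: not counted.
after_transfer = Episode(date(2001, 3, 1), "X", "adm2", date(2001, 3, 21), "Y", "adm3")

print(hes_counts_death(in_hospital))     # True
print(hes_counts_death(after_transfer))  # False
```

Both infants in this example died within 30 days of surgery, yet only one would appear in a count built on this rule.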

Thames Valley Strategic Health Authority instigated the investigation reported here, in conjunction with the Oxford Radcliffe Hospitals Trust at the request of the Department of Health.7 The aim was to compare mortality as reported by the administrative HES database and the clinically based CCAD for infants aged under 12 months who had cardiac operations with cardiopulmonary bypass in the UK irrespective of the site of death. The CCAD began collecting data from all centres only in 2000. Consequently, both datasets provided comprehensive statistics between 1 April 2000 and 31 March 2002, and this was the period used for comparison of data completeness and accuracy. HES and the CCAD provided primary data from their datasets, which enabled a direct comparison of the number of deaths in each hospital with the total number of cases. We reanalysed HES data provided by Aylin and colleagues for the specific two year period.

The minimum dataset used by the CCAD includes date of death and is linked with the Office for National Statistics by National Health Service number, to track mortality irrespective of place of death. In the CCAD, most cases with unknown outcomes were patients recorded as non-UK residents. These patients (averaging 8%, but zero for Oxford and one other centre) were predominantly coded as private patients and were lost to follow-up after leaving the UK. To compare the performance of centres over a longer period, we also examined CCAD data for 2000-3, the most up to date validated information available at the time of the investigation (CCAD tracks outcomes at 12 months as well as 30 days after surgery and then validates them).
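By contrast with the HES rule, the CCAD approach of linking records to national death registrations by NHS number yields three possible outcomes: a death within 30 days (wherever it occurred), survival to 30 days, or a missing outcome when the patient cannot be traced. The sketch below illustrates this under stated assumptions: the function `ccad_outcome_30d`, the `traceable` flag, and the plain dictionary standing in for the death register are hypothetical, not the actual CCAD or Office for National Statistics linkage procedure.

```python
from datetime import date
from typing import Dict, Optional


def ccad_outcome_30d(
    nhs_number: Optional[str],
    operation_date: date,
    death_register: Dict[str, date],
    traceable: bool = True,
    window_days: int = 30,
) -> Optional[bool]:
    """Return True if a registered death falls within the window, False if the
    patient is traced and no such death is found, or None if the outcome is missing."""
    # No NHS number, or lost to follow-up (e.g. returned abroad): outcome unknown.
    if nhs_number is None or not traceable:
        return None
    death = death_register.get(nhs_number)
    if death is None:
        return False
    # Place of death is irrelevant here; only the interval since surgery matters.
    return 0 <= (death - operation_date).days <= window_days


register = {"NHS-0001": date(2001, 3, 21)}  # made-up registration
print(ccad_outcome_30d("NHS-0001", date(2001, 3, 1), register))  # True
print(ccad_outcome_30d("NHS-0002", date(2001, 3, 1), register))  # False
print(ccad_outcome_30d(None, date(2001, 3, 1), register))        # None
```

Keeping `None` distinct from `False` mirrors the practice, described in the next paragraph, of recording untraceable outcomes as missing rather than as survivors.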

The clinicians from all 13 UK centres continuously collected detailed information for the CCAD dataset on a prospective basis, and dedicated cardiac data managers from the hospital database at each centre submitted the data. The data are validated by annual multidisciplinary team visits to the clinical departments to confirm the accuracy of the information. This was a period when CCAD submissions had reached a high level of compliance and accuracy. Overall data provision for the CCAD dataset against benchmarked procedures was 96.8% at this time; completeness for individual fields ranged from 75% to 100%. When CCAD outcome data could not be recorded or verified (for instance when overseas patients had returned home and could not be traced) the data point was recorded as missing. We used outcomes for infants aged under 12 months who had surgery with cardiopulmonary bypass at the 11 centres in England to provide direct comparison with the HES data supplied by Dr Foster for the study period.

The findings presented are a simple comparison between HES and CCAD statistics for the number of patients operated on and mortality within 30 days. We also provide the CCAD recorded mortality statistics for all the centres between 1 April 2000 and 31 March 2003 compared with the national average as an update on the report of Gibbs and colleagues.6

Results

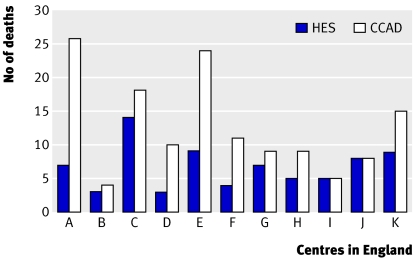

In the study period 2000-2, CCAD data included between five and 147 more operations for each centre than the HES data (median 23). Compared with CCAD data, HES omitted between 5% and 38% (median 15%) of infants operated on in each centre (table). The system used for reporting of postoperative deaths by HES resulted in a median shortfall of 40% (range 0-73%). In centre A, with the largest number of operations, 38% of all patients were missed by HES and only 27% of the total deaths were recorded. HES failed to track between 44% and 70% of deaths in four other centres (fig 1). As a result, HES underestimated the mean 30 day hospital mortality at 4% compared with the CCAD derived figure of 8%.

Comparison of completeness of hospital episode statistics (HES) and central cardiac audit database (CCAD) data for open cardiac operations in infants in contributing congenital cardiac centres during study period 1 April 2000 to 31 March 2002

| Centre | Statistic | HES data | CCAD data | Missing CCAD outcomes (%) | Shortfall in operations recorded by HES relative to CCAD (%) | Shortfall in deaths recorded by HES relative to CCAD (%) |
|---|---|---|---|---|---|---|
| A | Operations* | 242 | 389 | | 38 | |
| | Deaths† | 7 | 26 | 4 | | 73 |
| B | Operations* | 121 | 138 | | 12 | |
| | Deaths† | 3 | 4 | 12 | | 25 |
| C | Operations* | 303 | 355 | | 15 | |
| | Deaths† | 14 | 18 | 23 | | 22 |
| D | Operations* | 167 | 225 | | 26 | |
| | Deaths† | 3 | 10 | 19 | | 70 |
| E | Operations* | 177 | 234 | | 24 | |
| | Deaths† | 9 | 24 | 12 | | 63 |
| F | Operations* | 133 | 156 | | 15 | |
| | Deaths† | 4 | 11 | 0 | | 64 |
| G | Operations* | 87 | 94 | | 7 | |
| | Deaths† | 7 | 9 | 1 | | 22 |
| H | Operations* | 220 | 239 | | 8 | |
| | Deaths† | 5 | 9 | 2 | | 44 |
| I | Operations* | 87 | 92 | | 5 | |
| | Deaths† | 5 | 5 | 8 | | 0 |
| J (Oxford) | Operations* | 70 | 78 | | 10 | |
| | Deaths† | 8 | 8 | 0 | | 0 |
| K | Operations* | 138 | 182 | | 24 | |
| | Deaths† | 9 | 15 | 8 | | 40 |
| All‡ | Operations* | 1745 | 2182 | | 17 | |
| | Deaths† | 74 | 139 | 8 | | 38 |

*Number of recorded open cardiac operations in infants aged <12 months.

†Number of deaths within 30 days of operation reported in cohort.

‡All centres in England.
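The derived columns of the table can be recomputed from its counts, as in the sketch below. It transcribes the table and recalculates each shortfall as (CCAD − HES)/CCAD. On this arithmetic, the percentages in the "All" row (17, 38, and 8) appear to be unweighted means of the 11 centre values rather than pooled totals; pooling the operation counts (1745 v 2182) gives a 20% shortfall, the figure quoted in the Discussion. The helper names are illustrative only.

```python
from statistics import mean, median

# Transcribed from the table: (HES ops, CCAD ops, HES deaths, CCAD deaths, missing CCAD outcomes %)
TABLE = {
    "A": (242, 389, 7, 26, 4),
    "B": (121, 138, 3, 4, 12),
    "C": (303, 355, 14, 18, 23),
    "D": (167, 225, 3, 10, 19),
    "E": (177, 234, 9, 24, 12),
    "F": (133, 156, 4, 11, 0),
    "G": (87, 94, 7, 9, 1),
    "H": (220, 239, 5, 9, 2),
    "I": (87, 92, 5, 5, 8),
    "J": (70, 78, 8, 8, 0),
    "K": (138, 182, 9, 15, 8),
}


def shortfall_pct(hes: int, ccad: int) -> float:
    """Percentage of items recorded by CCAD that are absent from HES."""
    return 100 * (ccad - hes) / ccad


def round_half_up(x: float) -> int:
    """Round a non-negative value to the nearest integer, halves upwards
    (the built-in round() would send 62.5 to 62)."""
    return int(x + 0.5)


op_short = {c: shortfall_pct(r[0], r[1]) for c, r in TABLE.items()}
death_short = {c: shortfall_pct(r[2], r[3]) for c, r in TABLE.items()}
missing = [r[4] for r in TABLE.values()]

# Pooled shortfall in operations across all 11 centres.
pooled_op = shortfall_pct(sum(r[0] for r in TABLE.values()),
                          sum(r[1] for r in TABLE.values()))

print({c: round_half_up(v) for c, v in op_short.items()})
print({c: round_half_up(v) for c, v in death_short.items()})
print("medians:", round_half_up(median(list(op_short.values()))),
      round_half_up(median(list(death_short.values()))))          # medians: 15 40
print("unweighted means:", round_half_up(mean(list(op_short.values()))),
      round_half_up(mean(list(death_short.values()))),
      round_half_up(mean(missing)))                                # unweighted means: 17 38 8
print("pooled operations shortfall:", round_half_up(pooled_op))    # pooled operations shortfall: 20
```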

Fig 1 Comparison of mortality recorded by hospital episode statistics (HES) and central cardiac audit database (CCAD) for open heart surgery in infants for the 11 centres in England, between 1 April 2000 and 31 March 2002. Centre J=Oxford

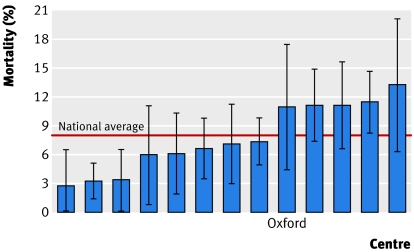

In CCAD, between 1% and 23% of outcomes were missing in nine of the 11 English centres, so 30 day mortality in these centres could be higher than recorded. Oxford had the fewest open cardiac operations and provided the most complete statistics under direct comparison. According to the CCAD outcome information, Oxford reported all deaths and achieved 98% and 100% completeness of all data points in the two years of the study period. Its 10% mortality for open heart surgery in infants in 2000-2 was not statistically different from the mean for all centres (8%) (fig 1). Because HES missed deaths in the other centres, it produced an artefactually low national mortality, against which Oxford appeared to be an outlier. Figure 2 shows the CCAD recorded 30 day mortality compared with the national average mortality of 8% for all 13 UK centres for the three years between 1 April 2000 and 31 March 2003. These figures refine information available from the report of the first year of CCAD results.6
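The effect of an artefactually low benchmark can be illustrated with a one-sided exact binomial test of Oxford's 2000-2 deaths against each national mean. This is a deliberately simplified sketch: it treats the national mean as fixed, ignores the fact that Oxford contributes to that mean, and makes no adjustment for case mix. It is neither the method of Aylin and colleagues nor the analysis reported here.

```python
from math import comb


def binom_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), by summing the exact probabilities."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Oxford, 1 April 2000 to 31 March 2002 (figures from the table and text).
p_vs_hes = binom_upper_tail(8, 70, 0.04)   # HES: 8 deaths in 70, national mean 4%
p_vs_ccad = binom_upper_tail(8, 78, 0.08)  # CCAD: 8 deaths in 78, national mean 8%

print(f"P(>=8 deaths | HES benchmark)  = {p_vs_hes:.3f}")
print(f"P(>=8 deaths | CCAD benchmark) = {p_vs_ccad:.3f}")
```

Against the 4% HES benchmark the tail probability falls below 0.05, whereas against the 8% CCAD benchmark it does not. The same eight deaths therefore look like an outlier or an ordinary result depending only on which national figure is used.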

Fig 2 Thirty day mortality (with 95% confidence intervals) for infants aged under 12 months who had surgery with cardiopulmonary bypass in all 13 UK centres between 1 April 2000 and 31 March 2003

Discussion

Principal findings

Hospital episode statistics (HES) did not provide reliable patient numbers or 30 day mortality data. On average, HES recorded 20% (5-38%) fewer cases than the central cardiac audit database (CCAD) and captured only between 27% and 78% of 30 day deaths in nine of the 11 centres in England. HES did not record operations on non-UK residents and detected only deaths that occurred in the hospital where the operation took place and during the same admission as the surgery. No validated data exist with which to compare HES before 2000, but we have no reason to suppose that it was more reliable before that time.

Accordingly, the non-verified HES information was an unsuitable platform on which to base sophisticated statistical analysis. The Dr Foster paper did not present an accurate account of cardiac surgical activity or mortality in infants and consequently placed spurious and harmful conclusions in the public arena.

Strengths and weaknesses of the study

This study provides a comprehensive appraisal of congenital cardiac surgical activity in the UK between 1 April 2000 and 31 March 2002 and highlights the substantial discrepancy between HES and CCAD. Aylin and colleagues stated that Oxford had excessive mortality on the basis of data collected between 1999 and 2000 and adjusted for procedure. Given that CCAD validated data were available only after 2000, we can refute that claim only from that time onwards. Equally, we did not adjust our data comparison for procedure. So far, none of the studies has attempted to stratify children by risk according to functional status or comorbidity.

The Thames Valley Strategic Health Authority review highlighted previous CCAD reviews of independently validated data, which show that all UK units produce similarly acceptable results.7 CCAD data also showed that Oxford had the lowest mortality for non-cardiopulmonary bypass operations in infants aged under 12 months and was in the middle of the spectrum for all cardiac operations in this age group.

Although it provides the gold standard for collection of cardiac data in the UK, CCAD was imperfect in that some non-UK residents were lost to follow-up. This occurred particularly in high volume centres with overseas links. Some deaths within 30 days of operation could have been missed if the patients died abroad. CCAD now makes increasingly strenuous efforts to verify data at each congenital heart centre. Multidisciplinary CCAD team visits to all 13 UK centres guarantee a thorough approach for this system. Both cardiology and surgical teams are aware of the importance of accurate data submission. Providing an accurate description of a complex cardiac operation on an unusual heart defect can sometimes be very difficult, given the restrictions in database entry. Clinicians must enter the information of best fit. In contrast, clerical staff involved in HES submission have less specialised knowledge, and less motivation, to provide comprehensive and accurate data.

Strengths and weaknesses in relation to other studies

Referencing their own publications, Aylin and colleagues suggested that HES data were of “significant quality to be used for analysis.”4 5 They have also stated that “patients where the outcome was unknown made little difference to the overall mortality.” Information from our study indicates that these statements are wrong. Other authors have clearly shown the weaknesses and dangers of administrative databases when presenting cardiac mortality data in the public arena.8 9 In a recent paper, Shahian and colleagues compared clinical and administrative data sources for report cards on coronary artery bypass graft surgery in hospitals in Massachusetts.10 They found a 27.4% disparity in the volume of isolated coronary artery bypass graft surgery, a significant difference in observed in-hospital mortality, and an inappropriate classification of a centre as an outlier on the basis of inaccuracies in administrative data. Various statistical methods produced different risk adjusted mortalities. The administrative dataset was more prone to errors in coding of procedures and incorrect case numbers, non-standardised mortality end points, misalignment of data sources with intended use, absence of critical clinical variables, failure to differentiate comorbidities from complications, and inability to safely define outliers. In an editorial discussion of Shahian's paper, Ryan concluded that the public reporting of mortality statistics must be based on data of the highest quality derived from prospectively gathered, validated, and audited clinical sources and not from unverified administrative datasets.10 11

Meaning of the study

Publication of inaccurate statistics, particularly regarding paediatric deaths, detracts from rather than enhances public confidence. Data management requires resources, but most of the units were not funded to collect and validate data effectively. If mortality statistics are to be released, their quality must be beyond reproach. Precise database definitions, uniform training of data managers, and periodic external audit are essential. Adequate ascertainment of relevant deaths and complete recording of patient episodes are also needed.

The media are eager to publish league tables of performance. Dr Foster has pioneered this approach by providing newspapers with information on heart disease and other topical aspects of health care in return for a fee. Government agencies and the media increasingly tend to use administrative data for hospital profiling because they are inexpensive and available in a short time frame. Marshall and Spiegelhalter have questioned the reporting of performance indicators to provide explicit ranking of institutions.12 Their key messages were that league tables are unreliable statistical summaries of performance and that institutions with smaller numbers of cases may be unjustifiably penalised or credited in comparison exercises. Any performance indicator should be reported with its associated statistical sampling variability.
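One way to report a centre's mortality with its sampling variability is a confidence interval, as in fig 2. The method used for fig 2 is not stated, so the sketch below assumes the Wilson score interval as one common choice. It applies that interval to the 2000-2 CCAD counts for Oxford (8/78) and centre A (26/389). The function name `wilson_ci` is illustrative.

```python
from math import sqrt
from typing import Tuple


def wilson_ci(deaths: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% for z = 1.96)."""
    p_hat = deaths / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# CCAD figures for 2000-2 from the table: a small and a large centre.
for name, deaths, n in [("J (Oxford)", 8, 78), ("A", 26, 389)]:
    lo, hi = wilson_ci(deaths, n)
    print(f"{name}: {deaths / n:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

Both intervals contain the 8% national average. The interval for the smaller centre is roughly two to three times wider, which is why ranking centres by point estimates alone can penalise or credit low volume units unjustifiably.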

Uncertainty about the public reporting of unit specific mortality statistics

We believe the UK to be the first country to follow some American states by placing non-risk stratified statistics for cardiac surgical mortality in the public arena.13 This was a Department of Health initiative in response to the Bristol inquiry, which also used HES.4 Similar problems have now been experienced on both sides of the Atlantic. The public reporting of mortality statistics in isolation cannot increase the safety of cardiac surgery but may reduce mortality if the system discards high risk patients. We do not believe that surgeons wish to take this route, but many will follow their self preservation instinct.14 In the United States, the tertiary referral centres with the greatest expertise often have the highest mortality because they treat the sickest patients.10 11 13 Many other countries collect surgical outcome data to monitor and improve their hospitals' performance but will not divulge this information to the media. Given the problems with data quality, the imprecision of risk stratification models, and the confrontational agenda in the media, we question the value of placing mortality statistics in the public domain.

What is already known on this topic

Hospital episode statistics have been used to compare activity rates and mortality between centres, but their reliability has been questioned

What this study adds

The central cardiac audit database is more accurate and complete than hospital episode statistics, but individual centres need further investment to improve completeness of data

The value of placing unit or surgeon specific mortality statistics in the public domain is in doubt, given the poor quality of data, imprecision of risk stratification, and confrontational media agenda

We appreciate the role of the Thames Valley Strategic Health Authority, Oxford Radcliffe NHS Trust, and Roger Boyle in the work to resolve this problem. We thank P Aylin and officers of the congenital cardiac audit database for providing their data for the review.

Contributors: SW and RP operated on the patients and were involved in collecting and validating the data and writing the manuscript. CG was one of the paediatric cardiac anaesthetists and was involved in validating data. NA, NM, SA, and NW collected and validated data and contributed to the manuscript. OO participated in the investigation to compare HES and CCAD data. NA is the guarantor.

Funding: None.

Competing interests: None declared.

Ethical approval: Not needed.

Provenance and peer review: Non-commissioned; externally peer reviewed.

References

1. Bristol Royal Infirmary Inquiry. Learning from Bristol: the report of the public inquiry into children's heart surgery at the Bristol Royal Infirmary 1984-1995. Norwich: Stationery Office, 2001. (CM 5207.) Available at www.bristol-inquiry.org.uk/

2. Schneider EC, Epstein AM. Influence of cardiac surgery performance reports on referral practices and access to care: a survey of cardiovascular specialists. N Engl J Med 1996;335:251-6.

3. Westaby S. League tables, risk assessment and an opportunity to improve standards. Br J Cardiol (Acute Interv Cardiol) 2002;9:5-10.

4. Spiegelhalter DJ, Evans S, Aylin P, Murray G. Overview of statistical evidence presented to the Bristol Royal Infirmary inquiry concerning the nature and outcomes of paediatric cardiac surgical services at Bristol relative to other specialist centres from 1984 to 1995. September 2000. www.bristol-inquiry.org.uk/Documents/statistical%20overview%20report.pdf (accessed 15 Sep 2004).

5. Aylin P, Bottle A, Jarman B, Elliott P. Paediatric cardiac surgical mortality in England after Bristol: descriptive analysis of hospital episode statistics 1991-2002. BMJ 2004;329:825-9.

6. Gibbs J, Monro J, Cunningham D, Rickards A. Survival after surgery or therapeutic catheterisation for congenital heart disease in children in the United Kingdom: analysis of the central cardiac audit database for 2001. BMJ 2004;328:611-20.

7. Thames Valley Strategic Health Authority. Report of Paediatric Cardiac Surgery Steering Group, appendix 8. TVSHA, 2005.

8. Shahian DM, Torchiana DF, Shemin RJ, Rawn JD, Normand SL. Massachusetts cardiac surgery report card: implications of statistical methodology. Ann Thorac Surg 2005;80:2106-13.

9. Mack MJ, Herbert M, Prince S, Dewey TM, Magee MJ, Edgerton JR. Does reporting of coronary artery bypass grafting from administrative databases accurately reflect actual clinical outcomes? J Thorac Cardiovasc Surg 2005;129:1309-17.

10. Shahian DM, Silverstein T, Lovett AF, Wolf RE, Normand SL. Comparison of clinical and administrative data sources for hospital coronary artery bypass surgery report cards. Circulation 2007;115:1518-27.

11. Ryan TJ. To understand cardiac surgical report cards look both ways. Circulation 2007;115:1508-10.

12. Marshall EC, Spiegelhalter DJ. Reliability of league tables of in vitro fertilisation clinics: retrospective analysis of live birth rates. BMJ 1998;316:1701-5.

13. Green J, Wintfeld N. Report cards on cardiac surgeons: assessing New York State's approach. N Engl J Med 1995;332:1229-32.

14. Burack JH, Impellizzeri P, Homel P, Cunningham JN. Public reporting of surgical mortality: a survey of New York State cardiothoracic surgeons. Ann Thorac Surg 1999;68:1195-202.