Abstract

Objective

The aim was to determine whether children with Specific Language Impairment (SLI) differed from children with typical language development (TLD) in their allocation of attention to speech sounds.

Methods

Event-related potentials were recorded to nontarget speech sounds in two tasks (passively watching a video, and attending to target tones among the speech sounds) in two experiments, one using 50-ms vowels and the other using 250-ms vowels. The difference in the ERPs across tasks was examined in the latency range of the early negative difference wave (Nd) found in adults. Analyses using selected superior and inferior sites were compared with analyses using global field power (GFP), a measure of electric field strength computed across all sites. The topography of the ERP at the maximum GFP was also examined.

Results

A negative difference, comparable to the adult Nd, was observed in the attend compared to the passive task for both types of analysis, suggesting allocation of attentional resources to processing the speech stimuli in the attend task. Children with TLD also showed greater negativity than those with SLI in the passive task for the long vowels, suggesting that they allocated more attentional resources to processing the speech in this task than the SLI group did. This effect was significant only in the GFP analysis, in which it appeared as smaller GFP for the TLD than for the SLI group. For the long vowels, the SLI group also showed a significantly later latency than the TLD group in reaching the maximum GFP. In addition, a significantly greater proportion of children with SLI than of children with typical language showed left-greater-than-right fronto-central amplitude at the latency of each child's maximum GFP peak.

Conclusions

Children generally showed greater attention to speech sounds when attention was directed to the auditory modality than when it was directed to the visual modality. However, children with TLD, unlike those with SLI, also appear to devote some attentional resources to speech even in a task in which they are instructed to attend to visual information and ignore the speech.

Significance

These findings suggest that children with SLI have limited attentional resources, that they are poorer at dividing attention, or that they are less automatic in allocating resources to speech compared to children with typically developing language skills.

Keywords: specific language impairment, auditory evoked potentials, speech perception, attention, neurophysiology

Introduction

Good language skills are essential for success in many endeavors of our modern society. Unfortunately, between five and ten percent of children show impaired language development, despite apparent typical development of non-verbal cognitive abilities (Bishop, 1997). This disorder has been termed specific language impairment (SLI). A great deal is now known concerning the language profile of children with SLI. They exhibit deficits in phonological and grammatical processing (Leonard, 1998; Rice & Wexler, 1999), and, in particular, show atypical identification of native-language speech categories (e.g., /ba/ versus /da/; Sussman, 1993; Shafer, Morr, Datta, Kurtzberg & Schwartz, 2005; Datta, Shafer, Morr & Schwartz, 2006). Despite extensive descriptions of deficits associated with SLI, the causes of this disorder continue to be debated.

Clues to the nature of SLI come from research showing that these children often have processing deficits, such as poor auditory processing, slower information processing and poor phonological working memory (e.g., Tallal, Miller & Fitch, 1993; Rosen, 2003; Ellis Weismer et al., 2000). These findings have led researchers to propose these various factors as the underlying causes of SLI. Although exactly how each of these deficits interferes with language learning is not yet clear, they point to the possibility that some more general deficit (e.g., attention, control of attention, automatization of processing) may underlie these seemingly disparate findings.

For these reasons, it is useful to turn to models of typical language development to examine how, during development, different types of deficits could interfere with language learning, and, in particular, with learning of phonological categories. Peter Jusczyk's (1997) model of the development of speech perception and word learning suggests that the infant/toddler learns to attend automatically to the relevant information in the speech signal, which will then allow for efficient and rapid identification of native-language speech categories, and thus, better word learning and speech segmentation. This notion that speech perception becomes highly automated has been used to explain why non-native listeners and second language learners exhibit poorer discrimination and categorization of speech sounds that are not phonemically contrasted in the first language (L1), particularly when processing demands are high (see Strange & Shafer, in press). Results from electrophysiological studies support the suggestion that L1 speech perception is highly automated by showing that a preattentive component indexing discrimination, the Mismatch Negativity (MMN), is larger to native than non-native language phonemic contrasts (e.g., Näätänen et al., 1997; Winkler, Kujala, Tiitinen, Sivonen, Alku, et al., 1999). This claim that infants learn to automatically attend to relevant features in the speech signal suggests the hypothesis that a deficit in learning to attend automatically to speech could contribute to SLI. This deficit has two possible sources: 1) the child may not have sufficient attentional resources for speech, which then interferes with learning the relevant acoustic cues for identifying speech sound categories; or 2) the child does not learn to focus automatically on relevant speech cues, and thus, speech processing requires more resources and is more effortful.

Neurophysiological measures provide evidence consistent with the hypothesis that children with SLI are less automatic in discriminating speech. Specifically, many children with SLI show smaller or absent MMN to speech sound changes (Uwer, Albrecht, & von Suchodoletz, 2002; Shafer, et al., 2005). MMN is believed to index an automatic trace-comparison process, and can be elicited even when attention is directed to some other stimulus or task. If the deficit were entirely one of limited automaticity of processing, then one might expect that focusing attention on the auditory modality would result in improved discrimination, as indexed by increased MMN amplitude. This hypothesis was examined by Shafer, et al. (2005), but children with SLI showed no increase in MMN amplitude with attention.

In contrast, Shafer and colleagues did observe a difference in the amplitude of the ERP in the range of the P1 (or P100) obligatory component between children with SLI and those with typical language development (TLD) that could be attributed to differences in attention to speech. Specifically, attention to the auditory modality (identifying target tones) compared to the visual modality (watching a video) led to a negative shift in the ERP from 100 to 190 ms to the frequent vowel at fronto-central sites. Children with TLD also showed significantly greater negativity of the ERP from 130 to 160 ms than those with SLI in the video-watching task, in which they were supposed to ignore the speech stimuli. The negative shift may be the processing negativity (PN) or negative difference wave (Nd) observed in the adult ERP when attention is focused on the stimulus of interest rather than away from it (e.g., Hansen & Hillyard, 1980; Näätänen, 1990). The early portion of the Nd, which has a similar topography to N1, may reflect enhancement of the N1 component, although the later phase appears to be an independent endogenous component (Woldorff & Hillyard, 1991). The greater negative shift to the vowels in the visual task for the children with TLD may indicate that they were better at allocating some attentional resources to the speech even when attention was directed to the video. Another way to characterize this is that for children with TLD, speech processing is highly automated even in the presence of a competing task.

This negative attentional shift was seen in the latency range of a prominent positive component because the N1 was not apparent in these data. A superior site negativity at the adult N1 latency is less likely to be observed in children to auditory stimuli presented with interstimulus intervals (ISIs) of less than 1000 ms (e.g., Gilley, Sharma, Dorman & Martin, 2005). Coch and colleagues suggested that there is some controversy regarding the identity of N1 (or, more specifically, N1b) in children, and that in children N1b might be the later negativity peaking around 200 ms (Coch, Skendzel & Neville, 2005). However, the recent paper by Gilley and colleagues (2005), along with some earlier papers (e.g., Ceponiene et al., 1998), strongly suggests that N1b is the earlier peak and is highly refractory at short ISIs.

In the absence of N1b at faster rates of stimulus presentation, a prominent P1 is found at superior sites peaking around 100 ms, which we call the P100 (e.g., Ceponiene et al., 1998; Coch et al., 2005; Gilley, et al., 2005; Shafer, et al., 2000; Shafer, et al., 2005). The data in studies focusing on the interaction of peak amplitude and ISI suggest that the rising slope of this peak is comparable to the P1 and the falling slope to the P2 component found in adults (see Gilley et al., 2005; Ceponiene et al., 1998) and that at slower rates (and longer ISIs) the N1b component bifurcates the P100. The P1 component appears to have a source in Layer IV of the superior temporal plane of secondary auditory cortex, whereas N1b has contributions from Layers II and III in primary and secondary cortex (e.g., Tonnquist-Uhlen, Ponton, Eggermont, Kwong & Don, 2003). Tonnquist-Uhlen and colleagues have suggested that the predominance of the P1 component and absence of N1b in early development may be related to earlier maturation of Layer IV than Layers II and III (Tonnquist-Uhlen, et al., 2003).

These studies indicate that the N1b is immature and highly attenuated at short ISIs, but not that it is absent in children. Thus, if the Nd is an enhancement of N1b, we should expect to see it as an increase in negativity of the ERP in the latency range of the N1b even if the N1b itself is masked by the P100 component.

To date, no neurophysiological studies other than Shafer, et al. (2005) have contrasted processing of auditory stimuli in children with SLI under two different attentional conditions. However, results from several studies are consistent with the suggestion that attention to speech and/or auditory information may differ between SLI and TLD groups. Specifically, several studies have found later latency of N1 in at least a subset of language-impaired (LI) children (Jirsa & Clontz, 1990; Neville, et al., 1993; Tonnquist-Uhlen, Borg, Persson & Spens, 1996), although this was not seen in other studies (e.g., Lincoln, et al., 1995; Marler, Champlin & Gillam, 2002). These differences in N1 latency could be due to reduced N1 negativity leading to the appearance of later N1 peak latency.

Irrespective of the exact explanation for the negative shift across task in Shafer and colleagues (2005), this result suggests that attention is an issue and should be explored further.

The purpose of this paper is to examine whether differences in speech processing between children with TLD and SLI can be attributed to differences in attention to speech. To do this, we examined whether children with SLI and TLD showed differences in the ERP under two task conditions, passive video-watching and attend-tones. Specifically, we expected evidence of attention to the speech to be seen as a negative shift (Nd) in the ERP at superior electrode sites in the latency range of N1 (e.g., Woldorff & Hillyard, 1991). Poorer attention to speech in the SLI group would be seen as a smaller Nd. A second goal was to determine whether a simple but comprehensive measure of amplitude that uses information from all electrode sites, called Global Field Power (GFP), yields different results than the more standard analysis of a selected subset of electrode sites. We calculated the GFP using the data set from Shafer et al. (2005) and a second data set from different groups of TLD and SLI children who heard longer versions of the vowel stimuli (Datta, et al., 2006) in a design identical to that of Shafer, et al. (2005). The GFP provides an unbiased global measure of brain activity by calculating the variance across sites (e.g., Lehmann & Skrandies, 1980; Murray, Molholm, Michel, Heslenfeld, Ritter, Javitt, Schroeder & Foxe, 2005). In particular, GFP provides an omnibus measure of the timing of maximum brain activation (presumably cortical) because it is not biased by the choice of electrode and reference. The analyses using GFP were compared to those using central sites and mastoids.
We also identified the sites of greatest positivity and negativity at the GFP peak in the 50-150 ms time range to allow us to examine topographical differences across groups, since several studies have also shown abnormal topography of ERP components between 50 and 400 ms in children with LI (e.g., Tonnquist-Uhlen, et al., 1996; Mason & Mellor, 1984; Shafer, Schwartz, Morr, Kessler & Kurtzberg, 2000).
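The reason GFP is not biased by the choice of reference can be illustrated with a minimal Python sketch (NumPy assumed). The function names and the random data are hypothetical placeholders, not the analysis software used in this study. GFP is computed here as the population standard deviation across electrodes at each time point; re-referencing subtracts the same signal from every channel, so the spread across channels, and hence GFP, is unchanged.

```python
import numpy as np

def global_field_power(data):
    """GFP at each time point: population SD of the voltages across electrodes.
    data has shape (n_electrodes, n_samples)."""
    return data.std(axis=0)

def rereference(data, ref_signal):
    """Re-reference by subtracting one common signal from every electrode."""
    return data - ref_signal[np.newaxis, :]

# Hypothetical data: 31 electrodes x 100 samples of random "voltages".
rng = np.random.default_rng(0)
eeg_nose_ref = rng.normal(size=(31, 100))

gfp_original = global_field_power(eeg_nose_ref)
# Average reference: subtract the across-electrode mean at each sample.
gfp_avg_ref = global_field_power(rereference(eeg_nose_ref, eeg_nose_ref.mean(axis=0)))
# Single-channel reference: channel index 10 is an arbitrary stand-in.
gfp_chan_ref = global_field_power(rereference(eeg_nose_ref, eeg_nose_ref[10]))

print(np.allclose(gfp_original, gfp_avg_ref), np.allclose(gfp_original, gfp_chan_ref))  # True True
```

The same invariance does not hold for the amplitude at any single site, which is why analyses of selected electrodes depend on the reference (here, the nose) whereas GFP does not.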

Methods

Subjects

Nine children with SLI (M = 9;6 years, range 8;6-10;11) and eleven children with TLD (M = 9;9 years, range 8;1-10;11) participated in the long-vowel experiment (see Datta, et al., 2006). One additional child with SLI was excluded from the analysis for not completing the electrophysiological testing. Eight children with SLI and eleven with TLD (matched for IQ) participated in the short-vowel experiment (see Shafer, et al., 2005). To match groups on nonverbal performance, eight additional children who were tested were excluded from the final sample of Shafer et al. (2005) because they had scores greater than 115 or less than 82 on the Test of Nonverbal Intelligence (TONI). One child with language impairment was excluded because she also had a fluctuating sensorineural hearing loss. These nine children are excluded from most analyses in the current paper but are shown in one figure (Figure 4) to illustrate the performance across a group with a larger range of nonverbal performance scores. Because nonverbal intelligence is not always tested in studies of typical children, this larger group is likely to be comparable in intelligence to the children studied in those papers (e.g., Coch et al., 2005). Children with SLI were referred by certified Speech Language Pathologists (SLP). All children received either the Clinical Evaluation of Language Fundamentals 3 (CELF3) or the Test of Language Development (TOLD) to test their expressive and receptive language skills. The TONI was administered to evaluate their nonverbal abilities. Table 1 displays means and SDs for the TONI and language tests. Note that the majority of children with SLI performed particularly poorly on expressive language skills (< 90), whereas all children with TLD had scores greater than 92.
The three SLI children who had relatively high expressive scores (ranging from 90 to 96) were confirmed as SLI by receptive scores less than 90 (two cases in the short-vowel experiment) and by SLP diagnosis based on non-standardized language measures (one case in the long-vowel experiment). All SLI children had deficits in morphology and syntax production. SLI children scored significantly lower on the language tests than the control group (long-vowel study: t(1,18) = 2.294, p = 0.02; short-vowel study: t(1,17) = 4.0, p = 0.0001) but did not differ on the TONI (long-vowel study: t(1,18) = 1.275, p = 0.1097; short-vowel study: t(1,17) = 1.2, p = 0.2).

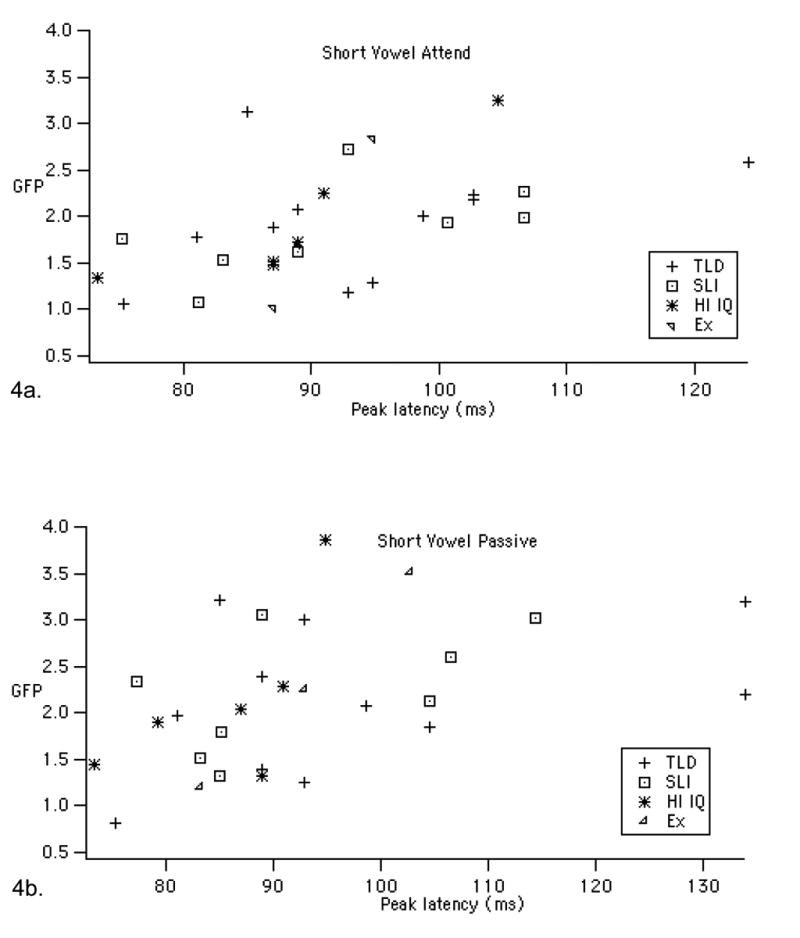

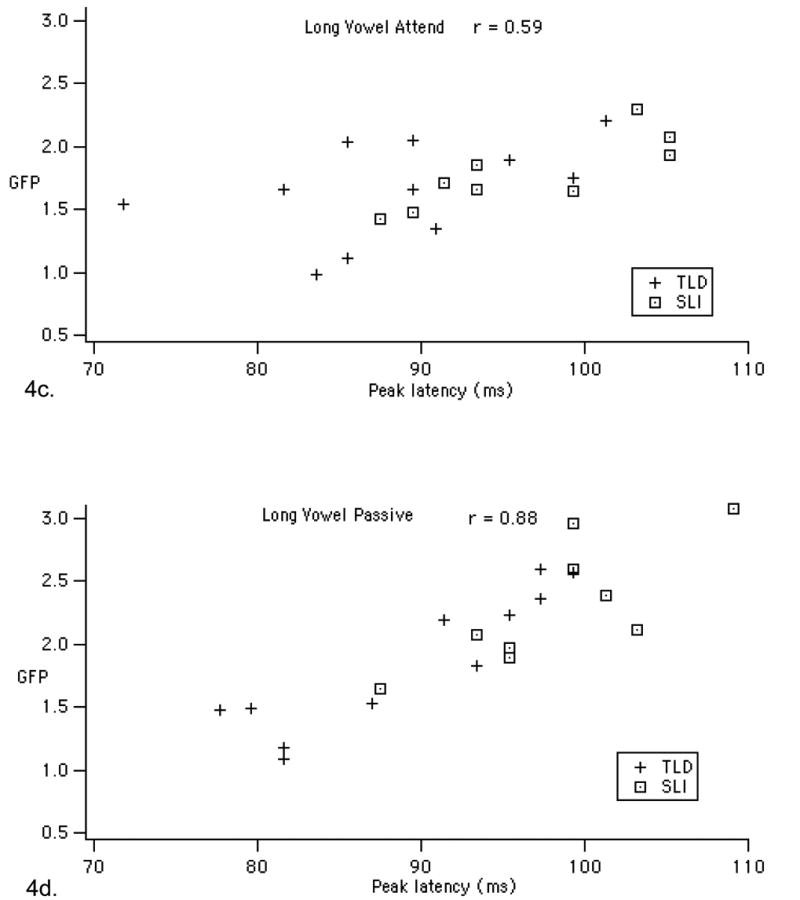

Figure 4.

Scatterplots showing the relationship between GFP peak latency and amplitude for the short and long vowels in the two tasks. The short-vowel graphs include the six children excluded for high TONI scores (HI IQ) and three children excluded for low TONI and SNHL (Ex) to demonstrate that the correlations remain with this larger sample. Note that the statistics in the results section for the short vowels only include the 11 TLD and 8 SLI children reported in Shafer et al. (2005).

Table 1.

Group Means (SD) on TONI and language tests (Expressive and Receptive Standardized Mean = 100).

| Group | N | Age (years;months) | TONI | Receptive | Expressive |
|---|---|---|---|---|---|
| Short Vowel SLI | 8 | 9;3 (1;4) | 95 (9) | 89 (11) | 83 (21) |
| Short Vowel TLD | 11 | 8;11 (0;8) | 100 (9) | 107 (9) | 108 (12) |
| Long Vowel SLI | 9 | 9;6 (0;11) | 92 (9) | 92 (19) | 80 (14) |
| Long Vowel TLD | 11 | 9;9 (0;11) | 100 (16) | 108 (12) | 105 (10) |

Stimuli

A nine-step continuum of 250-ms phonetically similar vowels was created from resynthesized natural tokens of a female speaker using the Analysis by Synthesis Lab, version 3.2. Fundamental frequency (F0) and F3 and F4 frequencies were identical for all stimuli at 190 Hz, 2174 Hz and 3175 Hz, respectively. For the first experiment, 50-ms versions were cut from the center of these vowels and edited to a 5-ms rise and fall time. The second experiment used the longer, 250-ms versions of these vowels. The children in these experiments were presented with two tokens from the continuum in the ERP paradigm. One was perceived by adults as the vowel /I/ in “bid” with F1 of 500 Hz and F2 of 2160 Hz, and the second was heard as /ε/ in “bet” with F1 of 650 Hz and F2 of 1980 Hz. The stimuli were presented binaurally at 86.5 dB SPL through insert earphones.

Procedures and Recording

The studies shared identical designs, with the only difference being the stimulus duration. The two stimuli were presented in an oddball paradigm with /I/ occurring on 21% of trials and /ε/ on 79% of trials at a rate of 1 per 600 ms. Children received a total of 2004 stimuli (1583 standards) in each of two conditions: one in which they ignored the stimuli and attended to a video with the sound muted (Passive), followed by a second in which they attended to target tones inserted randomly among the stimuli (Attend; 13 tone targets per 500 vowel stimuli). Children pressed a response button to the target tones and received an M&M candy for each correctly identified tone.
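The trial counts implied by these proportions can be checked with simple arithmetic. The Python sketch below is illustrative only: it assumes that the 2004-stimulus total counts vowels alone and that the inserted tones take no extra time, neither of which the text specifies, so the session duration it computes is an estimate rather than a reported value.

```python
# Hypothetical check of the oddball-design arithmetic for one condition.
# Assumption: the 2004 stimuli are vowels only; tone targets (Attend only)
# are counted separately and are ignored when estimating duration.
TOTAL_VOWELS = 2004
P_STANDARD = 0.79           # /ε/ standards; /I/ deviants make up the rest
SOA_S = 0.600               # one stimulus every 600 ms
TONES_PER_500_VOWELS = 13   # Attend condition

n_standards = round(TOTAL_VOWELS * P_STANDARD)
n_deviants = TOTAL_VOWELS - n_standards
n_tones = round(TOTAL_VOWELS * TONES_PER_500_VOWELS / 500)
minutes = TOTAL_VOWELS * SOA_S / 60   # estimated vowel-presentation time

print(n_standards, n_deviants, n_tones, round(minutes, 1))  # 1583 421 52 20.0
```

The 1583 standards reproduce the figure given in the text; the deviant and tone counts and the roughly 20-minute duration are derived under the stated assumptions.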

Participants also performed three behavioral discrimination tasks and one identification task following the ERP tasks. In one of the discrimination tasks (D1), participants were asked to press a button to identify the different stimulus in the oddball paradigm used for the ERP study, but with stimuli delivered at a slower rate (1 per 2 s) and a total of 30 deviant trials. The second discrimination task (D3) used the same design but included 4 different exemplars from the continuum (two from the /I/ category and two from the /ε/ category). This D3 task showed the same pattern of results as D1 and will not be reported here. The third discrimination task (D2) presented pairs of stimuli (the same two stimuli used in the electrophysiology) with an ISI of 550 ms for the short-vowel study and 350 ms for the long-vowel study; participants pressed one button if the pair was the same and a second button if it was different. In the identification task, which always occurred last, participants were presented with four stimuli (two from the /I/ category and two from the /ε/ category), each 15 times, and asked to categorize them by pressing one button if they heard the /I/ in “bit” and a second button if they heard the /ε/ in “bet”. Stimuli were presented at a rate of 1 per 2 seconds. A practice session was given before each task.

The electroencephalogram (EEG) was sampled at 512 Hz, with a bandwidth of 0.05-100 Hz, from 31 scalp sites referenced to the nose. An electrode placed 1 cm below the left eye and referenced to FP1 was used to monitor eye movement. The epochs of the EEG time-locked to the onset of the stimuli were averaged and digitally filtered (low-pass 30 Hz). Epochs with artifact greater than +/−100 μV were not included in the averaged response. After artifact rejection, the mean number of epochs in an ERP average for the two studies, the two conditions and the two groups ranged from 815 to 1027. Only three cases had fewer than 700 (but more than 545) trials in an ERP average, each for only one task condition. A pre-stimulus interval consisting of 50 ms of EEG activity was used to baseline correct the averaged evoked potential data.
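A minimal NumPy sketch of the averaging steps described above (rejecting epochs that exceed ±100 μV, averaging the remainder, then baseline-correcting the average over the 50-ms pre-stimulus interval) is shown below. The function name and the array layout (epochs × channels × samples, in μV, starting 50 ms before stimulus onset) are assumptions for illustration. The 30-Hz low-pass filter is omitted, and applying the rejection criterion to the raw epoch may differ from the original pipeline.

```python
import numpy as np

FS = 512            # Hz, sampling rate stated in the text
PRESTIM_S = 0.050   # 50-ms pre-stimulus baseline
REJECT_UV = 100.0   # reject epochs with any sample beyond +/-100 µV

def average_epochs(epochs, fs=FS, prestim_s=PRESTIM_S, reject_uv=REJECT_UV):
    """Hypothetical helper. epochs: (n_epochs, n_channels, n_samples) in µV.
    Returns (baseline-corrected average, number of epochs kept)."""
    keep = np.abs(epochs).max(axis=(1, 2)) <= reject_uv   # artifact rejection
    kept = epochs[keep]
    if kept.shape[0] == 0:
        raise ValueError("all epochs rejected")
    avg = kept.mean(axis=0)                                # (channels, samples)
    n_base = int(round(prestim_s * fs))                    # 26 samples at 512 Hz
    baseline = avg[:, :n_base].mean(axis=1, keepdims=True)
    return avg - baseline, int(keep.sum())

# Usage with synthetic data: 5 epochs x 2 channels x 100 samples,
# one of which contains a 150-µV artifact and is therefore rejected.
epochs = np.full((5, 2, 100), 5.0)
epochs[0, 1, 50] = 150.0
erp, n_kept = average_epochs(epochs)
print(n_kept, np.allclose(erp, 0.0))  # 4 True
```

Baseline correction is applied to the average rather than to each epoch, following the order given in the text; because both operations are linear, the result is the same either way for the epochs that are kept.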

Analyses

In the previous paper using the short vowels, both TLD and SLI groups showed greater negativity from 100-190 ms to the standard stimulus in the Attend compared to the Passive task, and a difference between TLD and SLI groups from 130 to 160 ms at CZ and C3 (Shafer, et al., 2005). This general time range is the focus of the analyses for this report. GFP was derived for each participant as the standard deviation of the voltages across the 31 scalp sites at each time point, that is, the root-mean-square deviation of each site from the across-site average (Lehmann & Skrandies, 1980). Figure 1 illustrates how greater GFP reflects greater variance in amplitude across sites by comparing GFP to a butterfly plot of the 31 sites used to derive it. The mean GFP was calculated between 50 and 176 ms, divided into three 42-ms intervals (50-92, 92-134 and 134-176 ms), for each participant and task. The 176-ms boundary falls at the approximate minimum of GFP between the first and second peaks, and the 50-ms boundary was selected at a GFP magnitude equivalent to that at 176 ms. Mean amplitudes for these three time intervals were also computed for C3, CZ, C4, LM and RM. The latency of the maximum GFP between 75 and 115 ms was also identified for each participant and task. The GFP values and amplitude measures from the five selected sites were compared between groups and tasks using mixed ANOVAs. One child with TLD in the long-vowel study and two with TLD in the short-vowel study were excluded from the peak latency analysis because their GFP peak latency fell more than 2 SD from the mean.
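The GFP measures described above can be sketched in Python with NumPy, assuming one participant's averaged ERP is stored as a channels × samples array with a matching vector of sample times in ms. GFP is the population standard deviation across the K electrodes at each sample, GFP(t) = sqrt((1/K) Σ_i (u_i(t) − ū(t))²). The function names, the half-open window boundaries and the synthetic test signal are illustrative choices, not the authors' code.

```python
import numpy as np

def gfp(erp):
    """GFP per sample: population SD of the voltages across electrodes.
    erp has shape (n_channels, n_samples)."""
    return erp.std(axis=0)

def gfp_measures(erp, times_ms,
                 windows=((50, 92), (92, 134), (134, 176)),
                 peak_range=(75, 115)):
    """Mean GFP in each window and latency (ms) of the GFP maximum in peak_range.
    Windows are half-open [start, end) so adjacent windows do not share samples."""
    g = gfp(erp)
    means = [float(g[(times_ms >= a) & (times_ms < b)].mean()) for a, b in windows]
    in_range = np.flatnonzero((times_ms >= peak_range[0]) & (times_ms <= peak_range[1]))
    peak_idx = in_range[np.argmax(g[in_range])]
    return means, float(times_ms[peak_idx])

# Synthetic example: 31 sites, 512-Hz sampling, epoch starting 50 ms before onset.
fs = 512
times_ms = -50 + np.arange(256) * 1000 / fs
topography = np.linspace(-1.0, 1.0, 31)                      # hypothetical spatial pattern
waveform = np.exp(-((times_ms - 95) ** 2) / (2 * 20 ** 2))   # component peaking near 95 ms
erp = np.outer(topography, waveform)
window_means, peak_latency = gfp_measures(erp, times_ms)
print([round(m, 3) for m in window_means], round(peak_latency, 1))
```

At 512 Hz the latency estimate is quantized to steps of about 1.95 ms, which bounds how finely peak latencies such as those in Table 2 can be resolved.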

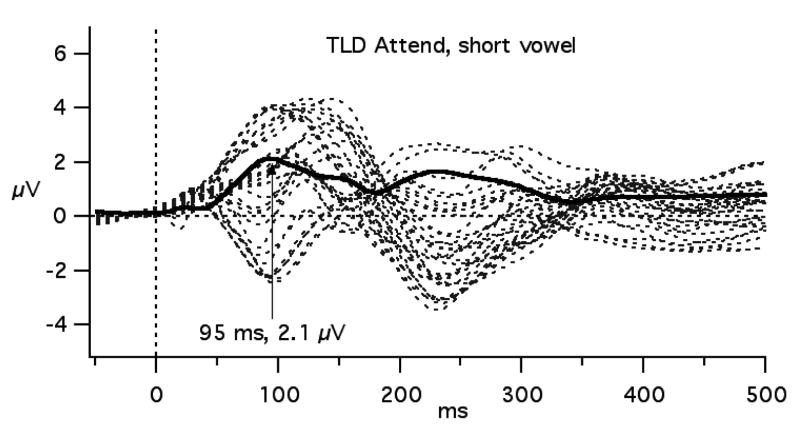

Figure 1.

The relationship between GFP (thick, solid line) and the ERP waveforms (dotted lines) from 31 scalp sites for the TLD and SLI groups in the short vowel experiment.

The site of maximum positivity and negativity at each child's GFP peak was identified from butterfly plots of all sites (Lehmann & Skrandies, 1980). The Fisher Exact test (an exact alternative to the chi-square test that is suited to small samples) was used to determine whether the proportion of left versus right site maxima differed between the SLI and TLD groups in the two tasks (Siegel, Castellan & Castellan, 1990). When two sites showed nearly identical amplitude for a child (less than 0.2 μV difference), we assigned a value of 0.5 to each of the two tied sites in calculating these proportions.
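The tie rule for site maxima can be expressed as a short Python sketch. Everything in it is hypothetical: the site-naming rule (L-prefixed and odd-numbered sites on the left, R-prefixed and even-numbered sites on the right, Z sites on the midline) follows the usual 10-20 convention, and the sketch treats any two top-ranked sites within 0.2 μV as tied, whereas the text describes ties between a left and a right site.

```python
from collections import Counter

TIE_UV = 0.2  # sites within 0.2 µV of each other are treated as tied

def max_site_credit(amplitudes, polarity=1, tie_uv=TIE_UV):
    """Credit the site of maximum positivity (polarity=1) or negativity (polarity=-1).
    If the runner-up is within tie_uv of the maximum, each site receives 0.5."""
    ranked = sorted(amplitudes, key=lambda s: polarity * amplitudes[s], reverse=True)
    best, second = ranked[0], ranked[1]
    if abs(amplitudes[best] - amplitudes[second]) < tie_uv:
        return {best: 0.5, second: 0.5}
    return {best: 1.0}

def hemisphere(site):
    """Hypothetical 10-20 rule: L*/odd digit = left, R*/even digit = right, else midline."""
    if site.startswith("L"):
        return "left"
    if site.startswith("R"):
        return "right"
    digits = "".join(c for c in site if c.isdigit())
    if not digits:
        return "midline"
    return "left" if int(digits) % 2 else "right"

def tally(children, polarity=1):
    """Sum hemisphere credits across children (each child: dict site -> µV)."""
    totals = Counter()
    for amps in children:
        for site, credit in max_site_credit(amps, polarity).items():
            totals[hemisphere(site)] += credit
    return dict(totals)

# Hypothetical amplitudes (µV) at two children's GFP peaks.
children = [
    {"C3": 4.1, "CZ": 3.0, "C4": 4.0},   # C3 and C4 within 0.2 µV: 0.5 each
    {"C3": 2.0, "CZ": 2.5, "C4": 3.4},   # clear right maximum
]
print(tally(children))  # {'left': 0.5, 'right': 1.5}
```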

The Fisher Exact test was used to determine whether a greater proportion of children in the SLI compared to the TLD group fell below the median for the discrimination tasks or performed more poorly (between 33% and 72%) on identifying the stimuli. Reaction time data are not reported here and can be found in Shafer et al. (2005) and Datta et al. (2006).
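For reference, a two-sided Fisher exact test on a 2 × 2 table can be computed directly from the hypergeometric distribution. The pure-Python sketch below is a generic implementation, not the software used in this study; it requires integer cell counts, and the example table is hypothetical.

```python
from math import comb

def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact test for the table [[a, b], [c, d]] of integer counts.
    Sums the hypergeometric probabilities of all tables with the same margins
    that are no more probable than the observed table."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2
    denom = comb(n, col1)

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= p_obs * (1 + 1e-7))

# Hypothetical table: rows = groups, columns = above / below the median.
print(round(fisher_exact_2x2(3, 1, 1, 3), 4))  # 0.4857
```

Because all margins are fixed, the test is exact even for the small group sizes used here (8 to 11 children per group), where a chi-square approximation would be unreliable.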

Results

No significant differences between the two groups were found for the discrimination tasks for either the short or the long vowels (Fisher Exact, p > 0.320). The children generally performed well on these tasks (Short Vowel D1: greater than 82% correct for 6/8 SLI and 9/11 TLD; D2: greater than 90% correct for 6/8 SLI and 8/11 TLD; Long Vowel D1: greater than 90% correct for 8/9 SLI and 11/11 TLD; D2: greater than 90% correct for 8/9 SLI and 10/11 TLD). In contrast, the SLI group performed significantly more poorly than the TLD group on the identification tasks for both short and long vowels (Fisher Exact, p < 0.040; Short Vowels: greater than 87% correct for 2/8 SLI and 9/11 TLD, between 73% and 87% correct for 1/8 SLI and 2/11 TLD, and less than 73% correct for 5/8 SLI; Long Vowels: greater than 93% correct for 1/9 SLI and 11/11 TLD, between 73% and 93% correct for 4/9 SLI, and less than 73% correct for 4/9 SLI). Further details of the behavioral results are reported in Shafer, et al. (2005) and Datta, et al. (2006).

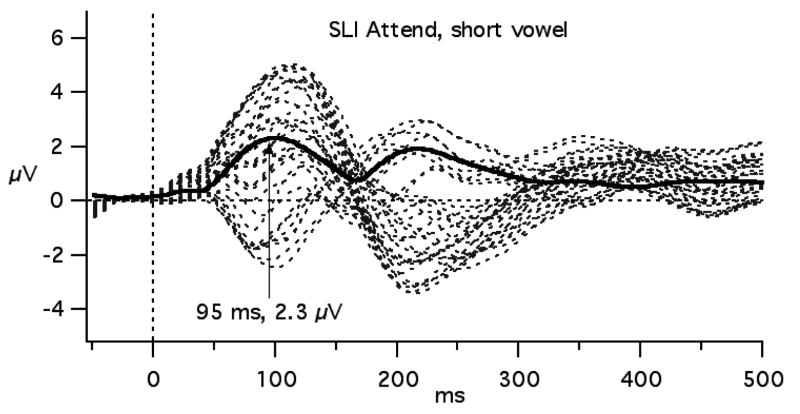

Figure 2 shows that from approximately 75 to 150 ms, the ERPs to the vowels in the Passive Task show greater GFP than those to the vowels in the Attend Task. Furthermore, in the Passive Task, the SLI group appears to have greater GFP than the TLD group in this time interval. Figure 2 also illustrates the relationship between GFP and the amplitude of P100 as measured at electrodes CZ and RM. In particular, the CZ-RM difference at the GFP peak to the long vowels is considerably greater for the SLI group in the Passive Task than for any other group or condition. These observations are supported by the statistical analyses.

Figure 2.

GFP for the two groups and two conditions in the short (graphs a and b) and long vowel (graphs c and d) experiments. Graphs b and d show how the greater GFP for the SLI children in the Passive task is manifested at CZ and RM for short vowels (left) and long vowels (right).

In the short-vowel experiment, ANOVAs including Group, Task, Site and Time, examining amplitude at the central (C3, CZ, C4) and mastoid (LM, RM) sites separately, showed main effects of Task (central: F(1,17) = 16.479, p = 0.001; mastoid: F(1,17) = 29.24, p < 0.001) and an interaction of Task by Time at the central sites (F(2,35) = 18.950, p < 0.001). Post hoc analyses showed that the Attend and Passive Tasks differed from each other in all three time intervals (p < 0.05). This pattern can be seen in the graphs of CZ and RM in Figure 2b. The Group by Site by Time interaction did not reach significance for either the central or the mastoid sites (central: F(2,34) = 1.828, p = 0.18; mastoid: F(1,17) = 1.475, p = 0.240).

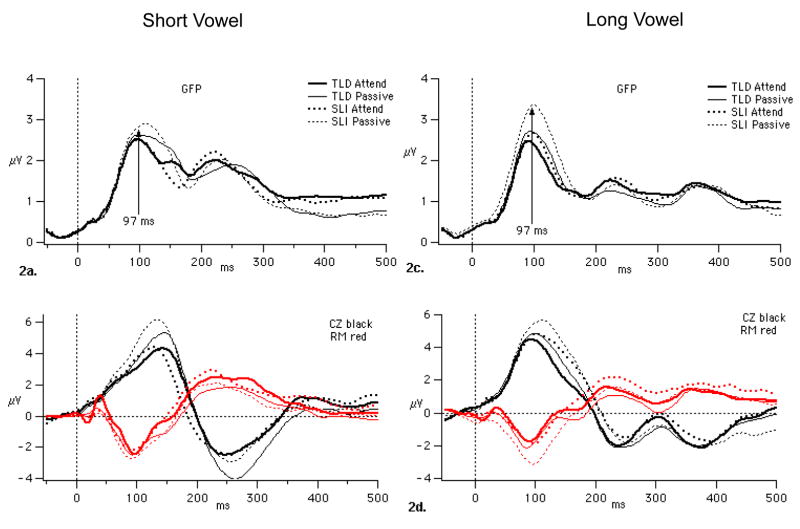

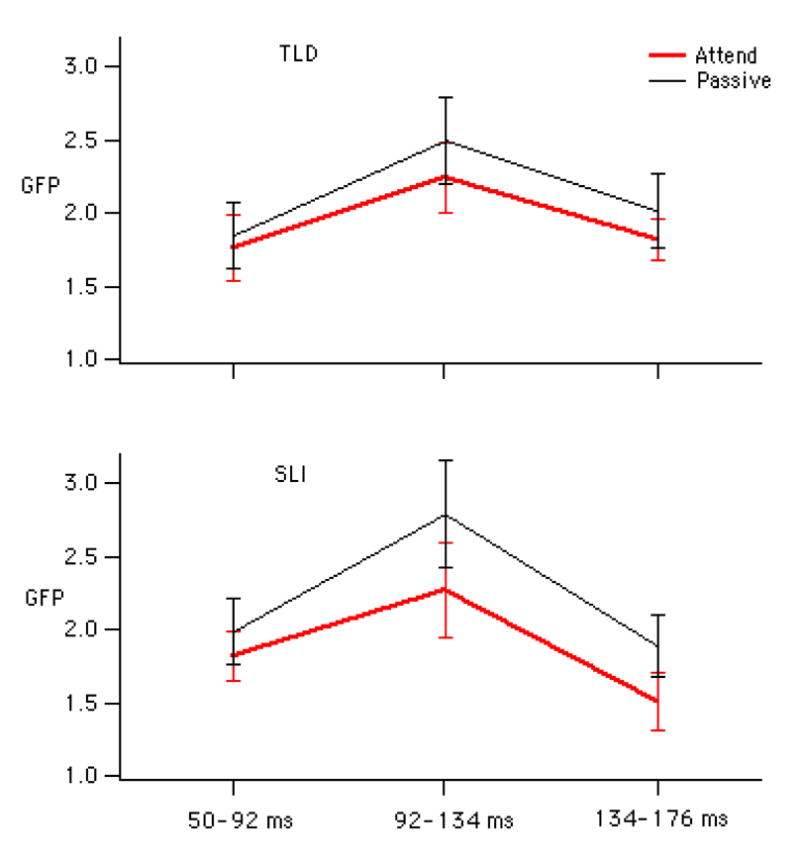

The analysis of the short vowels using GFP revealed an interaction of Task by Time (F(2,34) = 3.820, p = 0.030) and a main effect of Task (F(1,17) = 11.919, p = 0.003) for the mean GFP of the ERP to the standard, but no group differences. Post hoc comparisons revealed significantly lower GFP in the Attend compared to the Passive Task from 92-134 ms and 134-176 ms (p < 0.05), as shown in Figure 2a. Mean GFP amplitudes and standard errors for the three time intervals and two groups are displayed in Figure 3. This figure suggests that the Task difference is robustly present only for the SLI group, which was confirmed by ANOVAs examining the two groups separately (main effect of Task, TLD: F(1,10) = 2.450, p = 0.15; SLI: F(1,7) = 15.030, p = 0.006).

Figure 3.

Mean and standard error bars of the GFP to the short vowels at the three time intervals for the two groups.

For the long-vowel experiment, the 4-way mixed ANOVAs at central and mastoid sites revealed main effects of Task at the central (F(1,18) = 7.805, p = 0.012) and mastoid sites (F(1,18) = 17.480, p = 0.001) and an interaction of Group by Time at the central sites (F(2,36) = 5.922, p = 0.006). The greater amplitude of the SLI group relative to the TLD group was more pronounced in the two later time intervals than in the earliest interval, as can be seen in Figure 2d.

In the analysis using GFP, we observed a significant Group by Task interaction (F(1,18) = 5.270, p = 0.034), as well as a main effect of Task (F(1,18) = 29.130, p < 0.001). Post hoc comparisons showed significantly lower GFP for the TLD compared to the SLI group in the Passive Task (p < 0.05) and, for the SLI group, significantly lower GFP in the Attend compared to the Passive Task (p < 0.05; see Figure 2c).

We also found a significant Group difference in GFP peak latency, but only for the long vowels (long vowels: F(1,17) = 5.180, p = 0.027; short vowels: F(1,15) = 0.290, p = 0.600). For the short vowels, the mean peak latency across tasks was 96 ms (SD = 16) and 93 ms (SD = 13) for the TLD and SLI groups, respectively. In contrast, for the long vowels, the GFP of the SLI group peaked approximately 7 ms later on average than that of the TLD group. No significant Task differences were observed for GFP peak latency for either group (p > 0.370). Table 2 shows the means and SDs for the average GFP amplitude and peak latencies for the two groups in the two tasks. The final column also shows that the majority of children had greater GFP in the Passive than in the Attend Task and, in particular, that all of the children with SLI showed this pattern.

Table 2.

Means (SD) for the average amplitude and peak latency of GFP to the long vowels for the two groups. The last column displays the number of children showing greater GFP to the vowel in the Passive compared to Attend Task.

| Group | Attend mean GFP (μV) | Attend peak latency (ms) | Passive mean GFP (μV) | Passive peak latency (ms) | Passive > Attend |
|---|---|---|---|---|---|
| TLD | 1.66 (0.39) | 90 (6.6) | 1.86 (0.54) | 90 (7.4) | 8 of 11 |
| SLI | 1.78 (0.28) | 96 (6.9) | 2.30 (0.49) | 98 (6.2) | 9 of 9 |

We also found that GFP peak latency and power were significantly correlated, particularly for the long vowels, as shown in Figure 4 and Table 3. Specifically, larger GFP was associated with later peak latency. This correlation was maintained for the short vowels when adding the nine participants who did not fit the nonverbal performance IQ inclusion criteria, as shown in Figure 4.

Table 3.

Correlation (r) and significance (p) between peak latency and average GFP for the long and short vowels. The last two rows include an additional nine children in the short-vowel study.

| Stimulus/Task | N | r | p |
|---|---|---|---|
| Long Attend | 20 | 0.59 | 0.006 |
| Long Passive | 20 | 0.88 | < 0.001 |
| Short Attend | 19 | 0.61 | 0.006 |
| Short Passive | 19 | 0.55 | 0.013 |
| Short Attend | 28 | 0.52 | 0.005 |
| Short Passive | 28 | 0.44 | 0.01 |
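The relationship between r, N and p in Table 3 can be checked with the standard t statistic for a Pearson correlation, t = r√(N − 2)/√(1 − r²), with N − 2 degrees of freedom. In the minimal Python sketch below (function names are illustrative), r = 0.59 with N = 20 gives t ≈ 3.10 at df = 18, which lies between the two-tailed critical values for p = .01 (2.88) and p = .005 (3.20), in line with the tabled p = 0.006.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_from_r(r, n):
    """t statistic with n - 2 degrees of freedom for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

print(round(t_from_r(0.59, 20), 2))  # 3.1
```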

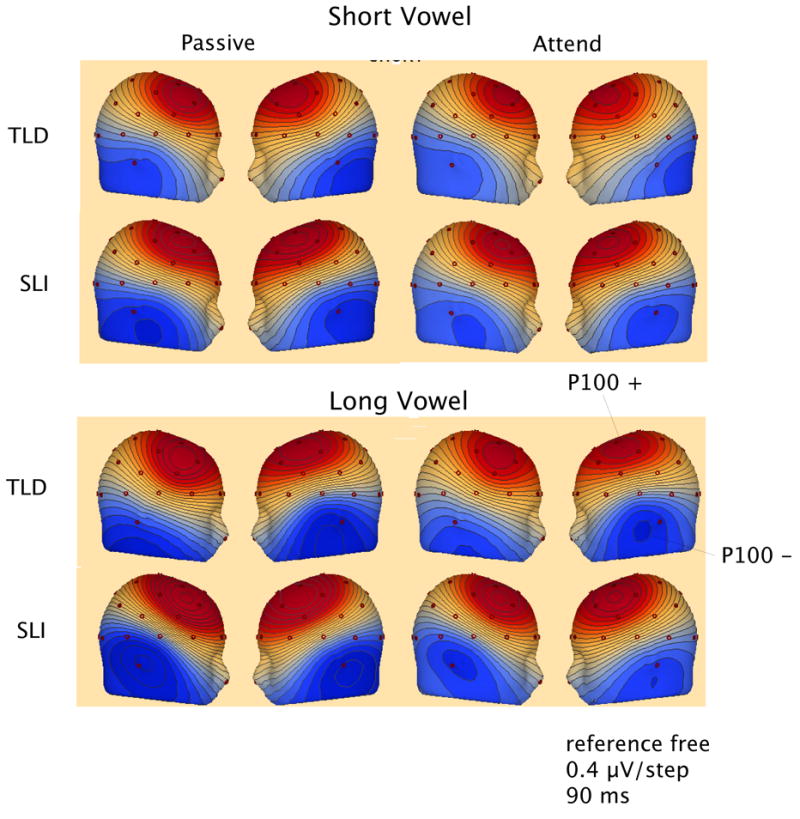

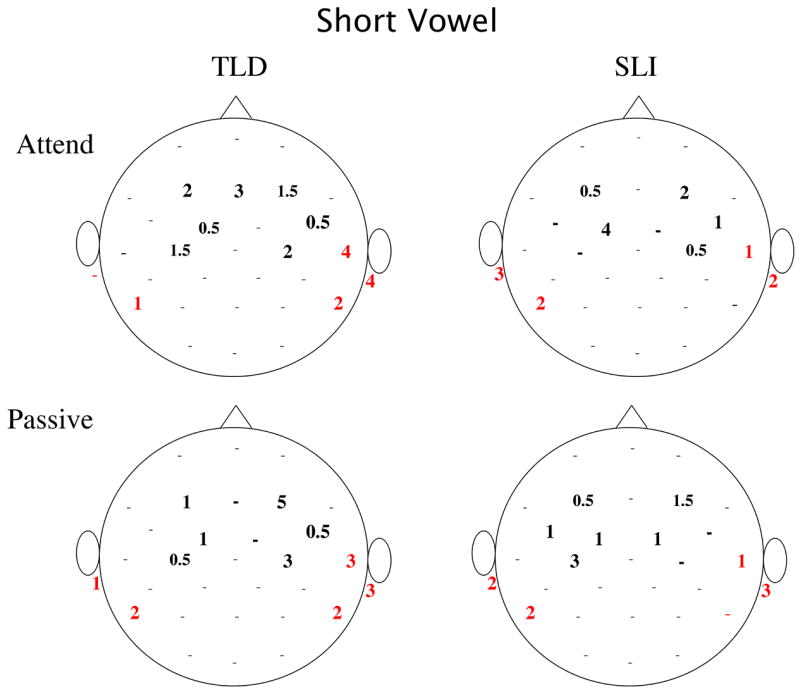

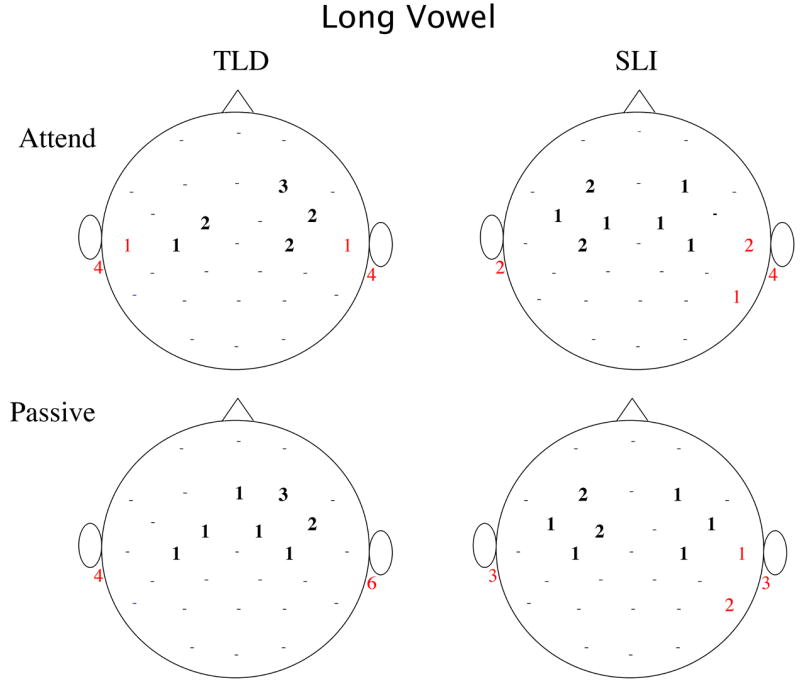

Examination of the scalp locations of largest positivity and negativity at the latency of maximum GFP also showed differences in lateralization between the two groups. Figure 5 shows the mean topography for the two groups and two tasks at the GFP peak (90 ms), and Figure 6 shows the number of times each site was identified as having the maximum (positive or negative) amplitude for each Group, Stimulus and Task. In both studies, the majority of children with TLD showed maximum P100 positivity at right fronto-central locations. In contrast, many of the children with SLI showed maximum positivity at left fronto-central sites (see Figure 6). These proportions differed significantly between the TLD and SLI groups in the Passive task and approached significance in the Attend task when the data were pooled across the short- and long-vowel studies (Fisher Exact: Passive Task, p = 0.005; Attend Task, p = 0.078). For both groups, the maximum negativity of this peak was either evenly split between left and right sites or more frequent at right inferior-posterior sites (RM, P8, T4), except for the short vowels in the Attend task, in which children in the SLI group more frequently showed the greatest negativity at left inferior sites. In this latter case, the proportion of left versus right maximum negativity differed between the TLD and SLI groups, as shown in Figure 6 (Fisher Exact: p = 0.023).

Figure 5.

Left and right views of voltage topography (BESA 6.0) at the GFP peak (90 ms) for the grand mean data for the two groups, two tasks, and two stimuli. Red is positive and blue is negative. The data for the maps were re-referenced to an average reference.

Figure 6.

Number of children showing maximum positivity (bold black) and maximum negativity (red) at each scalp site for each group, condition and experiment (a: short vowel; b: long vowel). The maximum for several children is represented by 0.5 because these few children showed equivalent amplitude (< 0.2 μV difference) at a left and a right site. Dots represent scalp sites where no positive or negative maxima were found for any child.

Discussion

In the current study, we found evidence that children with and without SLI show a negative shift in the ERP when attention is directed to the auditory modality compared to the visual modality. The latency and topography of this negativity were consistent with the Nd seen in adults. A second finding was that children with TLD showed greater negativity than children with SLI in this time range during the visual-modality (Passive) task. This pattern was clearly present in the long-vowel experiment but much less apparent in the short-vowel experiment. The children with SLI also exhibited later peak latencies than those with TLD in the long-vowel experiment. For both vowel durations, the groups differed clearly in the scalp distribution of the large positivity at each child's peak GFP: for many children in the SLI group this peak was larger over the left hemisphere, whereas for the TLD group it was generally larger over the right hemisphere, particularly in the Passive Task.

The GFP analysis and the more common analysis of selected sites yielded broadly similar findings for the general attention effect. Specifically, both techniques found significant Task differences in the two experiments, and both showed group differences in the latency of peak amplitude in the long-vowel experiment. Only the GFP analysis of the long-vowel experiment revealed a Group by Task effect. Below, we discuss how these findings might be related to deficits in attention or auditory processing, and we further discuss the similarities and differences between the two methods of analysis.

Auditory Attention

These experiments were designed to examine whether allocating attention to the auditory modality would facilitate automatic discrimination (measured by MMN) of phonetically similar vowel contrasts in children with SLI. This attentional manipulation had little or no effect on the MMN (see Shafer et al., 2005; Datta et al., 2006), but the present analyses suggest that it does affect the ERPs to the standard. In particular, directing attention to the auditory modality by means of the tone-detection task led to a negative shift in ERP amplitude at fronto-central sites from approximately 50 to 176 ms. This negative shift is consistent with the Nd (or processing negativity, PN) observed in adults when attention is directed to an auditory stimulus (Woldorff & Hillyard, 1991; Näätänen, 1990). The GFP analyses indicate that this negative shift at fronto-central sites is manifested as decreased GFP from 92 to 176 ms for the short vowels and from 50 to 176 ms for the long vowels. Adults tested in the same paradigm showed the same tendency toward decreased GFP in the Attend Task: six of nine adults showed lower GFP in the Attend than in the Passive Task from 50 to 135 ms, although the difference did not reach significance (F(1,8) = 3.06, p = 0.12).

A second finding of these analyses was that, particularly for the long vowels, GFP was larger for the SLI group than for the TLD group in the Passive Task. This indicates that many of the children with SLI allocated attentional resources to processing the speech sounds in the Passive Task differently from children with TLD. To interpret this pattern further, we need to consider how the tone-detection and video-watching tasks might affect processing of the vowel stimuli.

Our original goal in designing these experiments was to increase attention to the vowel contrast by directing attention to tone targets delivered among the vowel stimuli. One question raised by our results is whether the tone-detection task actually led to greater attention to the vowel stimuli than the passive video-watching task. If so, increased attention is manifested as reduced GFP. Because this finding initially appears counterintuitive, we consider carefully how these tasks affected processing of the vowels.

Models of selective attention propose that processing of non-target information (i.e., distracters) depends on perceptual load and cognitive control load (e.g., Lavie, 2005). In the visual modality, high perceptual load leads to earlier selection (and thus less processing of irrelevant stimuli) because resources are used up in processing the selected stimulus; for example, a greater number of letters in a string increases perceptual load (Lavie, 2005). In contrast, high cognitive control load leads to later selection (and thus greater processing of irrelevant stimuli) because fewer executive resources remain to maintain the selected process; holding five digits in random order (2,5,3,4,1) in working memory imposes a higher cognitive load than holding them in a fixed order (1,2,3,4,5) (Lavie, 2005). Findings from the auditory modality are not entirely consistent with this model (e.g., Sabri, Liebenthal, Waldron, Medler & Binder, 2006). Specifically, when auditory stimuli were presented binaurally, high perceptual load (induced by a smaller duration difference) led to greater processing of the distracters (deviant stimuli) than low load did. One reason may be that in the visual modality the eyes can be moved to place an attended stimulus in the central visual field, relegating unattended stimuli to the periphery. In the auditory modality, a comparable separation is available only in dichotic listening paradigms, in which attention can be focused on one ear, just as it can be focused on the central visual field. When target and non-target stimuli are presented binaurally, however, the only way to separate targets from distracters is to process both. This argument supports the suggestion that, in our study, directing attention to the auditory modality with a tone-detection task, rather than to the visual modality with a video-watching task, resulted in greater processing of the vowel stimuli.

Further support for our interpretation comes from cross-modal designs that compare conditions in which target stimuli are visual and non-target stimuli are auditory with conditions in which targets and non-targets are in the same modality. In general, previous research suggests that a stimulus irrelevant to the primary task is processed more when the task-relevant information is presented in the same channel than in a different channel (e.g., Alain & Woods, 1993; Muller, Achenbach, Oades, Bender, & Schall, 2002). For example, Muller and colleagues found greater MMN to non-target auditory deviants when attention was directed to the auditory modality than to the visual modality, using an auditory versus a visual detection task, respectively (Muller et al., 2002). There is also evidence that increased visual processing limits the processing of auditory stimuli: Dyson and colleagues found a larger N1 to auditory tones during an easy compared to a difficult visual task (Dyson, Alain, & He, 2005). Together, these results suggest that our task design should have led to greater attention to the vowel stimuli in the attend-tone task than in the passive video-watching task.

The similarity in latency and topography between the frontocentral negative shift in the Attend Task and the adult Nd also supports the claim that the children were attending more to the vowels in the Attend than in the Passive Task. The initially counterintuitive finding that reduced GFP corresponds to increased attention can be explained by considering how the Nd sums with the N1b versus the P100. Lehmann and Skrandies (1980) suggested that the maximum power of the evoked scalp field indexes the maximum activity of the process invoked in a particular brain region by a stimulus. On closer consideration, this suggestion is too simplistic, because activity from different neural sources occurring in the same time frame can have different dipolar orientations and thus cancel out. Research with adults shows that the Nd has a topography similar to that of the N1b (relative negativity at frontocentral sites and positivity at inferior sites; e.g., Woldorff & Hillyard, 1991). In adults, therefore, the Nd increases both the frontocentral negativity and the inferior positivity, which increases the variance in amplitude across sites and thus the GFP. In contrast, the P100 in children has the opposite topography to the N1b (positivity at frontocentral sites and negativity at inferior sites). Adding the Nd increases negativity at the frontocentral sites and positivity at the inferior sites, and because these changes oppose the polarity of the P100 at each site, they reduce the variance across sites and thus the GFP.
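The cancellation argument can be made concrete with a toy calculation. The Python below is a minimal sketch, not the authors' analysis: it computes GFP as the spatial standard deviation of a topography (the Lehmann and Skrandies definition, which is unaffected by the choice of reference) over four hypothetical sites, two frontocentral and two inferior, and adds the same Nd-like pattern to an adult N1b-like pattern and to a child P100-like pattern. All amplitudes are invented for illustration.

```python
from math import sqrt


def gfp(potentials):
    """Global field power: root-mean-square deviation of the potentials
    from their mean across electrodes (the spatial standard deviation)."""
    mean = sum(potentials) / len(potentials)
    return sqrt(sum((v - mean) ** 2 for v in potentials) / len(potentials))


def add(a, b):
    """Sum two topographies site by site."""
    return [x + y for x, y in zip(a, b)]


# Hypothetical topographies, ordered [frontocentral, frontocentral, inferior, inferior].
n1b_adult = [-2.0, -2.0, 2.0, 2.0]   # frontocentral negative, inferior positive
p100_child = [2.0, 2.0, -2.0, -2.0]  # opposite polarity to the N1b
nd = [-1.0, -1.0, 1.0, 1.0]          # Nd-like shift with N1b-like polarity

print(gfp(n1b_adult), gfp(add(n1b_adult, nd)))    # 2.0 3.0  (GFP grows)
print(gfp(p100_child), gfp(add(p100_child, nd)))  # 2.0 1.0  (GFP shrinks)
```

In this toy case the reduction holds only while the Nd-like shift is modest relative to the P100 (here, less than twice its amplitude); a much larger shift would reverse the frontocentral polarity and GFP would grow again.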

In summary, the arguments presented above strongly suggest that the frontocentral negative shift, corresponding to reduced GFP, indicates greater attention to the auditory stimuli. Even so, it remains possible that this negative shift in children differs from the adult Nd and instead indicates that greater attention was allocated to processing the vowels in the Passive than in the Attend Task, although we believe this is the less likely explanation. Below, we consider both explanations.

Deficits in Attention

Under the explanation that the frontocentral negativity (and reduced GFP) is the Nd, both groups of children showed equivalent attention to the speech sounds in the Attend Task. In the Passive Task, however, the children with TLD allocated more attentional resources to processing the speech stimuli than did the children with SLI. One explanation for this finding is that children with SLI have general (non-modality-specific) limitations in available cognitive resources compared to their peers with TLD, and thus had fewer resources to divide between the visual and auditory information in the Passive Task. If this were the case, however, one would expect these children to also exhibit non-verbal cognitive processing deficits. There is some evidence that children with SLI have general cognitive deficits. For example, children with SLI have been found to be impaired on attentional tasks measuring cost-benefit cueing (Nichols, Townsend & Wulfeck, 1995), focused attention to stimuli presented in the left ear (Niemi et al., 2003), and auditory sustained and selective attention (Noterdaeme et al., 2001). Children with SLI also tend to score lower than their peers on non-verbal IQ tests, as can be seen in studies that have not specifically matched the SLI and control groups on IQ (Leonard, 1998). However, this may be a consequence of poor language skills: even on non-verbal IQ tests, as items (e.g., pattern matching) become more complex, children may use language to encode, categorize, or differentiate non-verbal stimuli. Although some research supports general cognitive deficit models of SLI, proponents of these models have not yet convincingly explained why speech and language skills are more severely affected than non-verbal abilities.

Alternatively, deficits in attention may be specific to the auditory modality, in which case performance on non-auditory tasks should be within the normal range. There is considerable evidence that many children with SLI have poor auditory processing (e.g., Bishop, 1997), so it seems reasonable to suggest that specifically poor auditory attention could contribute to their poor performance on auditory processing tasks. Two of the three studies cited above that showed attention deficits in SLI used auditory tasks (Niemi et al., 2003; Noterdaeme et al., 2001).

A second explanation is that children with SLI do not automatically extend resources to processing speech. This explanation leads back to Jusczyk's (1997) developmental model, in which learning to attend automatically to the relevant cues of speech is crucial for developing word recognition skills. This model also proposes that infants have an innate attraction to speech that enables them to bootstrap into learning the sound patterns of a language. Without this affinity for speech, early speech perception experience could be less effective. An interesting parallel in the visual attention literature is the finding that famous distracter faces are processed equally under low and high perceptual load, whereas unknown distracter faces interfere only under low perceptual load (Lavie, 2005). Speech could be similar to familiar faces in drawing attentional resources even when it is not the main focus of a task. The children with TLD (and adults) may have shown a smaller difference than the children with SLI between the ERPs to speech in the two tasks because these non-clinical groups treat speech as socially relevant information that requires attentional resources under most conditions.

Attentional Gating Deficit

The alternative explanation is that the frontocentral negativity (and corresponding smaller GFP) reflects less attention to the speech in all children, which implies that the children allocated more attention to the speech in the Passive than in the Attend Task. According to this view, identifying target tones draws more resources away from processing the vowels than does watching the video. In this case, the children with SLI are attending to speech in the Passive Task when they should be ignoring it. This hypothesis could be tested with a paradigm used to examine attentional gating deficits in schizophrenia. ERP studies have shown that the obligatory P50 component (i.e., the adult P1) is not attenuated to a repeated stimulus in patients with schizophrenia, unlike in controls. This failure is attributed to a faulty gating mechanism at the thalamic level that interferes with filtering out irrelevant stimuli (for review, see Potter, Summerfelt, Gold, Buchanan, et al., 2006). Such an attentional deficit could clearly interfere with language learning and processing, since novel information would not be attended preferentially over familiar, repeated information. To date, however, no other studies of SLI provide direct evidence for this hypothesis. For example, in their behavioral study, Noterdaeme and colleagues (2001) did not find poorer response inhibition on an interference or Go/NoGo task in children with SLI. As stated above, we believe that an attentional gating deficit is the less likely explanation because it hinges on the interpretation that greater attention was allocated to the speech in the Passive than in the Attend Task.

Maturational Delay

Seven of the nine children with SLI, but only three of the ten children with TLD, ranked in the slower half of all participants for the latency of maximum GFP to the long vowels, and the four children with the latest latencies were all in the SLI group. This finding suggests that these children may be slower than their peers at processing speech. This slowed processing may result from poorer speech or auditory processing (Bishop, 1997; Tallal, Miller & Fitch, 1993). The delayed GFP maxima may also indicate delayed maturation, because latencies of ERP components are known to correlate negatively with age in children (Ponton et al., 2000; Shafer et al., 2000). The P100 peak does appear to be later in younger children (e.g., see Shafer et al., 2000). This suggestion of delayed maturation is consonant with the finding that teenage children with SLI exhibit immature ERP component morphology (Bishop & McArthur, 2005).

Alternatively, the delayed GFP peak may be an artifact of the attentional differences: attention to the vowel stimuli leads to a smaller GFP and therefore an earlier peak. Of course, this attentional difference could itself reflect delayed maturation, in this case of the attentional system. The hypothesis that slower brain maturation contributes to learning disabilities is gaining support (e.g., Wright & Zecker, 2004), and these data are consistent with this view.

Differences in Topography

The topography of the response at the GFP maximum for the P100 was similar for both groups in the anterior/posterior and inferior/superior planes, showing frontocentral positivity and inferior-posterior negativity. This distribution is consistent with sources in left and right auditory cortex. The two groups, however, differed in the hemispheric lateralization of the maximum positivity, with the children with typical language favoring the right hemisphere and the SLI group favoring the left. The voltage maps in Figure 5 do not clearly show this difference because they display the mean topography across participants at a single latency (90 ms). The latency of maximum positivity differed across individuals, and quantifying the topography at these individually selected latencies reveals the hemispheric difference.

Previous neurobiological investigations have shown reduced left-hemisphere amplitude (Shafer, Schwartz, Morr, Kessler, & Kurtzberg, 2000) and reduced or reversed anatomical asymmetry (e.g., Plante, Swisher, Vance, & Rapcsak, 1991) in children with SLI. Similar to our result, Mason and Mellor (1984) observed higher-amplitude ERP responses at left-hemisphere sites in children with SLI than in those with TLD, although these were to non-speech stimuli. Previous research has shown that formant-frequency information is typically processed more in the left hemisphere and fundamental-frequency (pitch) information more in the right hemisphere (e.g., Zatorre, Belin, & Penhune, 2002), and ERP studies of speech discrimination tend to support this pattern (for review, Näätänen, 2001). In the present study, however, we examined the obligatory evoked potentials rather than the discriminative component, MMN. Thus, the greater right-than-left activation in the TLD group may simply indicate that this processing stage reflects more processing of the acoustic than of the phonetic-phonological aspects of the stimulus. The absence of this pattern in two-thirds of the children with SLI suggests that deficits in processing speech and language may involve both temporal lobes, not exclusively the left hemisphere. An alternative explanation is that, because the stimuli were speech, the attentional Nd effect is greater in the left than in the right hemisphere for children with TLD (leading to smaller left-hemisphere GFP). In contrast, the children with SLI show the reversed pattern because their left hemisphere contributes less effectively to the Nd effect.

Differences between Experiments

The current study revealed a robust attention difference between the SLI and TLD groups for the long vowels in the Passive Task, but only for the GFP analysis. In contrast, a group difference in attention was not significant for the short vowels, although analyses for the SLI and TLD groups separately found a robust difference in attention between the Attend and Passive Tasks only for the SLI group.

The stronger group difference in the task effect (as measured by GFP) for the long than for the short vowels could be attributed to unidentified differences between the SLI samples in the two studies, although there are no obvious differences between these two SLI groups; most of the children with SLI in both studies had more severe expressive than receptive language deficits. A second explanation is related to the stimuli themselves. The rate of presentation was identical in both experiments, so the interstimulus interval (ISI) was shorter for the long than for the short vowels (350 ms for long and 550 ms for short). The longer ISI for the short vowels allowed greater recovery of obligatory components. Greater recovery of the N1b leads to a broad plateau or two peaks, one corresponding to the P1 and the second to the P2, and the latency of these peaks depends on the amplitude of the N1b: a larger N1b results in an earlier P1 peak and a later P2 peak. The presence of an incipient N1b to the short vowels resulted in larger SDs for peak latency than for the long vowels, because the largest peak preceded the N1b for some children and followed it for others. The emergence of the N1b may also have complicated measurement of the Nd attention effect. A third explanation is that the short vowels were less speech-like than the long versions (50-ms vowels do not occur in isolation) and thus did not attract automatic processing to the same extent. Manipulating stimulus type (more or less speech-like) and ISI will be necessary to distinguish among these explanations. A larger sample would also help determine whether the apparent difference between the long- and short-vowel findings reflects heterogeneity of the SLI groups or the stimulus differences described above.
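Because the presentation rate was the same in both experiments, the two ISIs follow from a single stimulus onset asynchrony (SOA). Taking the ISI as the silent gap from vowel offset to the next vowel onset (our reading of the values above, not stated explicitly), with d the vowel duration:

```latex
\mathrm{SOA} = d + \mathrm{ISI}:\qquad
250\,\text{ms} + 350\,\text{ms} \;=\; 50\,\text{ms} + 550\,\text{ms} \;=\; 600\,\text{ms}
```

Holding the SOA fixed therefore lengthens the silent recovery period by exactly the 200 ms by which the short vowels are shorter.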

GFP versus Selected Sites

We chose to use global field power to examine group and task differences because this measure is not biased by the choice of sites or reference. One problem with the more standard analysis of a limited number of selected sites is that it is not obvious which sites best index a component. If all sites are included in a standard analysis (e.g., analysis of variance), Type II error becomes more likely as the number of sites grows. In addition, measurements at different sites are not necessarily independent, and the choice of reference electrode will bias the outcome of the analysis. Various methods could potentially be used to solve these problems (e.g., principal components analysis), but each has shortcomings. GFP is limited in that it does not retain topographical information. Its advantage, however, is that it is a simple, objective measure for identifying the time and location (by examining the topography at GFP peaks) of major ERP components using data from all sites.
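Both properties claimed for GFP, independence from the reference and an objective peak latency, can be illustrated in a few lines. The Python below is a minimal sketch under stated assumptions: the epoch is a hypothetical list of time points, each holding one potential per electrode, and `gfp_peak` is an illustrative name; the 50 to 176 ms search window is borrowed from the attention-effect range mentioned in the Discussion purely for illustration and is not the authors' peak-search setting. Re-referencing subtracts the same value from every electrode at a given time point, which leaves the spatial standard deviation, and therefore GFP, unchanged.

```python
from math import isclose, sqrt


def gfp(potentials):
    """Global field power: spatial standard deviation across electrodes."""
    mean = sum(potentials) / len(potentials)
    return sqrt(sum((v - mean) ** 2 for v in potentials) / len(potentials))


def gfp_peak(epoch, times_ms, window):
    """Return (latency_ms, gfp) of the GFP maximum inside window = (start, end).

    epoch holds one list of electrode potentials per time point in times_ms.
    """
    candidates = [
        (t, gfp(sample))
        for t, sample in zip(times_ms, epoch)
        if window[0] <= t <= window[1]
    ]
    return max(candidates, key=lambda pair: pair[1])


# Hypothetical four-electrode epoch (microvolts), not data from this study.
times_ms = [40, 60, 80, 100, 120]
epoch = [
    [0.5, 0.4, -0.3, -0.6],
    [1.5, 1.2, -1.0, -1.7],
    [2.5, 2.1, -2.2, -2.4],
    [2.0, 1.8, -1.9, -1.9],
    [1.0, 0.8, -0.9, -0.9],
]

latency, power = gfp_peak(epoch, times_ms, window=(50, 176))
print(latency)  # 80

# Re-referencing to the second electrode subtracts its value from every
# electrode at each time point, so the GFP time course is unchanged.
rereferenced = [[v - sample[1] for v in sample] for sample in epoch]
assert all(isclose(gfp(a), gfp(b)) for a, b in zip(epoch, rereferenced))
```

The topography at the returned latency can then be inspected to locate the component, which is the role the GFP peak plays in the analyses described above.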

Conclusion

These experiments lend support to the hypothesis that some children with SLI attend to speech differently from children with typical language development. They also illustrate the utility of the GFP measure for evaluating ERP data. This difference in attention may reflect an early developmental deficit in children with SLI; specifically, some of these children may be less attracted to speech and thus have less effective exposure to speech early in development. Alternatively, these findings may indicate that children with SLI have poorer attention abilities in the auditory modality than their age-matched peers, and these poorer abilities may be related to delayed auditory maturation. Investigations of auditory attention early in development, particularly to speech sounds, will be necessary to determine which of these explanations is more plausible.

Acknowledgments

We would like to thank Diane Kurtzberg, who is now happily retired, for helping to design these studies, and Francis Scheffler for recruiting the children and providing the language test scores. The data were collected at Albert Einstein College of Medicine in Dr. Kurtzberg's laboratory. This work was supported by NIH grants DC00223 to D.K., DC003885 to R.G.S., and HD46193 to V.L.S.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Alain C, Woods DL. Distracter clustering enhances detection speech and accuracy during selective listening. Perception and Psychophysics. 1993;54:509–514. doi: 10.3758/bf03211773. [DOI] [PubMed] [Google Scholar]

- Bishop DVM. Listening out for subtle deficits. Nature. 1997;387:129–130. doi: 10.1038/387129a0. [DOI] [PubMed] [Google Scholar]

- Bishop DVM, Carolyn RP, Deeks JM, Bishop SJ. Auditory temporal processing impairment: neither necessary nor sufficient for causing language impairment in children. JSLHR. 1999;42:1295–1310. doi: 10.1044/jslhr.4206.1295. [DOI] [PubMed] [Google Scholar]

- Bishop DVM, McArthur GM. Individual differences in auditory processing in specific language impairment: A follow-up study using even-related potentials and behavioural thresholds. Cortex. 2005;41:327–341. doi: 10.1016/s0010-9452(08)70270-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ceponiene R, Cheour M, Näätänen Interstimulus interval and auditory event-related potentials in children: evidence for multiple generators. EEG and Clinical Neurophysiology. 1998;108:345–354. doi: 10.1016/s0168-5597(97)00081-6. [DOI] [PubMed] [Google Scholar]

- Coch D, Skendzel W, Neville HJ. Auditory and visual refractory period effects in children and adults: An ERP study. Clinical Neurophysiology. 2005;116:2184–2203. doi: 10.1016/j.clinph.2005.06.005. [DOI] [PubMed] [Google Scholar]

- Datta H, Shafer VL, Morr ML, Kurtzberg D, Schwarz RG. Neurophysiological indices of vowel-procesing deficits in children with specific language impairment. doi: 10.1162/0898929054475217. Under revision. [DOI] [PubMed] [Google Scholar]

- Dyson BJ, Alain C, He Y. Effects of visual attentional load on low-level auditory scene analysis. Cognitive, Affective & Behavioral Neuroscience. 2005;5:319–338. doi: 10.3758/cabn.5.3.319. [DOI] [PubMed] [Google Scholar]

- Gilley PM, Sharma A, Dorman M, Martin K. Developmental changes in refractoriness of the cortical auditory evoked potential. Clinical Neurophysiology. 2005;116:648–657. doi: 10.1016/j.clinph.2004.09.009. [DOI] [PubMed] [Google Scholar]

- Hansen JC, Hillyard SA. Endogenous brain associated with selective auditory attention. EEG and Clinical Neurophysiology. 1980;49:277–290. doi: 10.1016/0013-4694(80)90222-9. [DOI] [PubMed] [Google Scholar]

- Jirsa RE, Clontz KB. Long latency auditory event-related potentials from children with auditory processing disorders. Ear and Hearing. 1990;11:222–232. doi: 10.1097/00003446-199006000-00010. [DOI] [PubMed] [Google Scholar]

- Jusczyk P. The Discovery of Language. Cambridge: MIT Press; 1997. [Google Scholar]

- Korpilahti P, Lang HA. Audotory ERP components and mismatch negativity in dysphasic children. EEG and Clinical Neurophysiology. 1994;91:256–264. doi: 10.1016/0013-4694(94)90189-9. [DOI] [PubMed] [Google Scholar]

- Lavie N. Distracted and confused?: Selective attention under load. Trends in Cognitive Sciences. 2005;2:75–82. doi: 10.1016/j.tics.2004.12.004. [DOI] [PubMed] [Google Scholar]

- Lehmann D, Skrandies W. Reference-free identification of components of checkerboard-evoked multichannel potential fields. EEG & Clin Neurophysiol. 1980;48:609–621. doi: 10.1016/0013-4694(80)90419-8. [DOI] [PubMed] [Google Scholar]

- Leonard LB. Children with Specific Language Impairment. Cambridge: MIT Press; 1998. [Google Scholar]

- Lincoln AJ, Courchesne E, Harms L, Allen M. Sensory modulation of auditory stimuli in chidren with autism and receptive developmental language disorder – event related potential evidence. Journal of Autism and Developmental Disorders. 1995;25:521–539. doi: 10.1007/BF02178298. [DOI] [PubMed] [Google Scholar]

- Marler JA, Champlin CA, Gillam RB. Auditory memory for backward masking signals in children with language impairment. Psychophysiology. 2002;39:767–780. doi: 10.1111/1469-8986.3960767. [DOI] [PubMed] [Google Scholar]

- Mason SM, Mellor DH. Brain-stem, middle latency and late cortical evoked potentials in children with speech and language disorders. Electroencephalography and Clinical Neurophysiology. 1984;59:297–309. doi: 10.1016/0168-5597(84)90047-9. [DOI] [PubMed] [Google Scholar]

- Muller BW, Achenback C, Oades RD, Bender S, Schall U. Modulation of mismatch negativity by stimulus diviance and modality of attention. NeuroReport. 2002;13:1317–1320. doi: 10.1097/00001756-200207190-00021. [DOI] [PubMed] [Google Scholar]

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory mutlisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cerebral Cortex. 2005;15(7):963–74. doi: 10.1093/cercor/bhh197. Published online 10 November. [DOI] [PubMed] [Google Scholar]

- Näätänen R. The role of attention in auditory information processing as revealed by event-related potentials and other brain measures of cognitive function. Behavioral and Brain Sciences. 1990;13:201–288. [Google Scholar]

- Näätänen The perception of speech sounds by the human brain as reflected by the mismatch negativity (MMN) and its magnetic equivalent (MMNm) 2001;38:1–21. doi: 10.1017/s0048577201000208. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Lehtokoski A, Lennes M, Cheour-Luhtanen M, Huotilainen M, Iivonen A, Vainio M, Alku P, Ilmoniemi RJ, Luuk A, Allik J, Sinkkonen J, Alho K. Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature. 1997;385:432–434. doi: 10.1038/385432a0. [DOI] [PubMed] [Google Scholar]

- Neville HJ, Coffey SA, Holcomb PJ, Tallal P. The neurobiology of sensory and language processing in language-impaired children. Journal of Cognitive Neuroscience. 1993;5:235–253. doi: 10.1162/jocn.1993.5.2.235. [DOI] [PubMed] [Google Scholar]

- Nichols S, Townsend J, Wulfeck B. Covert visual attention in language impaired children. San Diego, CA: Center for Research in Language, University of California; 1995. [Google Scholar]

- Niemi J, Gundersen H, Leppasaari T, Hughahl K. Speech lateralization and attention/executive functions in a Finnish family with specific language impairment (SLI) Journal of Clinical and Experimental Neuropsychology. 2003;25:457–464. doi: 10.1076/jcen.25.4.457.13868. [DOI] [PubMed] [Google Scholar]

- Nittrouer S, Burton LT. The role of early language experience in the development of speech perception and phonological processing abilities: Evidence from 5-year olds with histories of otitis media with effusion and low socioeconimic status. J of Communicative Disorders. 2005;38:29–63. doi: 10.1016/j.jcomdis.2004.03.006. [DOI] [PubMed] [Google Scholar]

- Noterdaeme M, Amorosa H, Mildenberger K, Sitter S, Minow F. Evaluation of attention problems in children with autism and children with a specific language disorder. European Child and Adolescent Psychiatry. 2001;10:58–66. doi: 10.1007/s007870170048. [DOI] [PubMed] [Google Scholar]

- Plante E, Swisher L, Vance R, Rapcsak S. MRI findings in boys with specific language impairment. Brain and Language. 1991;41:52–66. doi: 10.1016/0093-934x(91)90110-m. [DOI] [PubMed] [Google Scholar]

- Ponton CW, Eggermont JJ, Kwong B, Don M. Maturation of human central auditory system activity: Evidence from multi-channel evoked potentials. Clin Neurophysiol. 2000;111:220–236. doi: 10.1016/s1388-2457(99)00236-9. [DOI] [PubMed] [Google Scholar]

- Potter D, Summerfelt A, Gold J, Buchanan RW, et al. Review of Clinical Correlates of P50 Sensory Gating Abnormalities in Patients with Schizophrenia. Schizophrenia Bulletin. 2006 doi: 10.1093/schbul/sbj050. Epub ahead of print. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rice ML, Wexler K. Grammaticality judgments of an extended optional infinitive grammar : Evidence from English-speaking children with specific language impairment. JSLHR. 1999;42:943–961. doi: 10.1044/jslhr.4204.943. [DOI] [PubMed] [Google Scholar]

- Rosen S. Auditory processing in dyslexia and specific language impairment: Is there a deficit? What is its nature? Does it explain anything? J of Phonetics. 2003;31:509–527. [Google Scholar]

- Sabri M, Liebenthal E, Waldron EJ, Medler DA, Binder JR. Attentional modulation in the detection of irrelevant deviance: A simultaneous ERP/fMRI study. J of Cognitive Neuroscience. doi: 10.1162/jocn.2006.18.5.689. In press.

- Siegel S, Castellan NJ Jr. Nonparametric Statistics for the Behavioral Sciences. McGraw-Hill; 1990.

- Shafer VL, Morr ML, Datta H, Kurtzberg D, Schwartz RG. Neurophysiological indices of speech processing deficits in children with specific language impairment. J of Cognitive Neuroscience. 2005;17:1168–1180. doi: 10.1162/0898929054475217.

- Shafer VL, Morr ML, Kreuzer J, Kurtzberg D. Maturation of mismatch negativity in school-age children. Ear Hear. 2000;21:242–251. doi: 10.1097/00003446-200006000-00008.

- Shafer VL, Schwartz RG, Morr ML, Kessler KL, Kurtzberg D. Deviant neurophysiological asymmetry in children with language impairment. Neuroreport. 2000;11:3715–3718. doi: 10.1097/00001756-200011270-00025.

- Strange W, Shafer VL. Speech perception in second language learners: the re-education of selective perception. In: Zampini M, Hansen J, editors. Phonology and Second Language Acquisition. Cambridge University Press; In press.

- Sussman JE. Perception of formant transition cues to place of articulation in children with language impairments. JSHR. 1993;36:1286–1299. doi: 10.1044/jshr.3606.1286.

- Tallal P, Miller S, Fitch RH. Neurobiological basis of speech: a case for the preeminence of temporal processing. Annals of the NY Academy of Sciences. 1993;682:27–47. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- Tonnquist-Uhlén I, Borg E, Persson HE, Spens KE. Topography of auditory evoked cortical potentials in children with severe language impairment: the N1 component. Electroencephalography and Clinical Neurophysiology. 1996;100:250–260. doi: 10.1016/0168-5597(95)00256-1.

- Uwer R, Albrecht R, von Suchodoletz W. Automatic processing of tones and speech stimuli in children with specific language impairment. Developmental Medicine & Child Neurology. 2002;44:527–532. doi: 10.1017/s001216220100250x.

- Winkler I, Kujala T, Tiitinen H, Sivonen P, Alku P, Lehtokoski A, Czigler I, Csepe V, Ilmoniemi R, Näätänen R. Brain responses reveal the learning of foreign language phonemes. Psychophysiology. 1999;36:638–642.

- Woldorff MG, Hillyard SA. Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalography and Clinical Neurophysiology. 1991;79:170–191. doi: 10.1016/0013-4694(91)90136-r.

- Wright BA, Zecker SG. Learning problems, delayed development, and puberty. Proceedings of the National Academy of Sciences. 2004;101:9942–9946. doi: 10.1073/pnas.0401825101.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends in Cognitive Sciences. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.