Abstract

This study examines the relationship between hospital structural characteristics and coding accuracy from the perspective of quality measurement. To measure coding accuracy for quality measurement, the study uses the “present on admission” indicator, a data element in the New York state hospital administrative database. Hospitals across New York state use this data element to record whether a particular secondary diagnosis is “present on admission,” “not present on admission,” or “uncertain.” Because the accurate distinction between comorbidities (present at admission) and complications (not present at admission) is critical for risk adjustment in comparative hospital quality reports, this study uses the occurrence of the value “uncertain” in the “present on admission” indicator as the primary measure of coding accuracy. A lower occurrence of the value “uncertain” is considered to reflect better coding accuracy. Moreover, since the coding accuracy of the “present on admission” indicator links back to the accuracy of physician documentation, a focus on the occurrence of the value “uncertain” also provides insight into physician documentation efficacy within the facility. This approach therefore serves a twin purpose: 1) addressing the gap in the literature with respect to large-scale studies of “coding for quality,” and 2) providing insight into the structural characteristics of institutions that are likely facing organizational challenges of physician documentation from the perspective of quality measurement.

Key words: health information management, hospital coding accuracy, hospital quality measurement, risk adjustment, comparative report cards, public reporting, hospital administrative data, present on admission indicator, comorbidities and complications, physician documentation, physician-coder coordination

Introduction

Growing concern over the variation in healthcare quality across the nation and increasing involvement of consumers in healthcare decision making have resulted in a strong impetus in the healthcare industry toward hospital quality measurement, public reporting of hospital performance and persistent efforts to increase the alignment between hospital quality and reimbursement.1–4 These rapidly growing trends reflect concerns and actions taken by both private and public stakeholders in the industry, including federal and state governments, health insurance plans, healthcare providers, purchasing/consumer groups, and business coalitions.5

Traditionally, hospitals have submitted coded discharge data (administrative data) to external stakeholders, including public and private payers, to claim financial reimbursement for services rendered.6,7 In the changing environment of comparative quality reporting, however, and especially since the turn of the millennium, these external entities have increasingly used the same hospital discharge abstracts to conduct performance evaluation and outcomes analysis for making quality-rating decisions.8,9 An immediate consequence of using administrative data for hospital quality measurement has been the rising importance of the coding accuracy of these data.

In the context of today's changing environment of comparative hospital quality reporting, this study examines the relationship between hospital structural characteristics and coding accuracy from a quality measurement perspective. The remaining portion of the paper presents the study background, research methods, study results, and a discussion of study findings.

Background

Hospital coding accuracy is critical for ensuring accurate risk adjustment, and, correspondingly, reliable comparative quality ratings. To obtain fair comparative ratings of hospitals from administrative databases, hospital outcomes such as mortality rates and complication rates need to be risk adjusted for differences in patient characteristics—mainly, differences in patients' severity of illness (comorbidities at admission) and patient demographics. Severity-of-illness computations from hospital administrative data are based on coded secondary diagnoses. Secondary diagnoses, however, include both comorbidities (that exist at the time of admission) and complications, which occur during patients' stays.

The goal of risk adjustment is to account for differences in patient characteristics across hospitals so as to enable fair comparison of hospitals' outcomes (such as mortality rates or complication rates).10 Risk adjustment therefore strives to account for patients' demographics as well as comorbidities, or illnesses preexisting at the time of admission, that are considered beyond the hospital's control, while taking care to exclude complications, which arise after admission. This is because complications that develop after admission are considered a reflection of the hospital's performance or the quality of care it provides.11,12 In this context, accurate quality rating depends not only on comprehensive coding of comorbidities and complications but also on the accurate distinction between the two. Consider the example of a patient who dies after being admitted with a fractured hip. After further study of the medical record, the principal diagnosis is identified as the fractured hip. If this same patient had suffered a myocardial infarction (MI) during hospitalization, the MI would be considered a “complication” and hence coded as a secondary diagnosis.13

The purpose of risk adjustment is to adjust for comorbidities (present at admission) while taking care not to adjust for complications. Accordingly, in the above example, the MI would not be adjusted for as a risk factor, since it developed during hospitalization. It is important to note that adjusting for fewer risk factors is likely to produce a less favorable risk-adjusted outcome for the hospital (in this case, the hip fracture mortality rate) than adjusting for more. Thus, if in the above example the MI had in fact been present at admission, but owing to poor documentation and/or coding had been erroneously classified as “not present on admission,” the hospital would likely be assigned a less favorable risk-adjusted mortality rate than it deserved. This explains why the accurate distinction between comorbidities and complications is crucial from the perspective of risk adjustment and comparative quality measurement.
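The mechanism in the hip fracture example can be illustrated with a toy calculation. The sketch below assumes indirect standardization (risk-adjusted rate = observed deaths / expected deaths × reference rate), a common report-card approach but not necessarily the one used by any particular report card, and a hypothetical logistic risk model whose coefficients, patient counts, and reference rate are invented for illustration only. Miscoding the MI as “not present on admission” removes it from the risk model, lowers expected deaths, and therefore raises the hospital's risk-adjusted rate.

```python
import math

# Hypothetical logistic risk model for hip-fracture mortality.
# Coefficients are illustrative only; they do not come from this study.
INTERCEPT = -3.0  # log-odds of death for a patient with no coded comorbidity
MI_COEF = 1.5     # added log-odds when an MI is coded as present on admission


def predicted_risk(mi_present_on_admission: bool) -> float:
    """Predicted probability of death for one patient under the toy model."""
    logit = INTERCEPT + (MI_COEF if mi_present_on_admission else 0.0)
    return 1.0 / (1.0 + math.exp(-logit))


def risk_adjusted_rate(observed_deaths: int, poa_flags: list[bool], reference_rate: float) -> float:
    """Indirectly standardized rate: (observed / expected) * reference rate."""
    expected_deaths = sum(predicted_risk(flag) for flag in poa_flags)
    return observed_deaths / expected_deaths * reference_rate


# 100 hypothetical patients, 10 of whom truly had an MI at admission; 8 deaths observed.
correctly_coded = [True] * 10 + [False] * 90
# Same patients, but the 10 MIs were wrongly flagged "not present on admission".
miscoded = [False] * 100

rate_correct = risk_adjusted_rate(8, correctly_coded, reference_rate=0.06)
rate_miscoded = risk_adjusted_rate(8, miscoded, reference_rate=0.06)
print(f"correct coding:           {rate_correct:.4f}")
print(f"miscoded as complication: {rate_miscoded:.4f}")
```

With these invented inputs, the miscoded scenario yields the higher (less favorable) risk-adjusted rate even though the same patients died.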

Although the importance of hospital coding accuracy for quality measurement is widely recognized, industry literature suggests that there may be considerable inconsistency and variation in coding accuracy of administrative data. Studies have demonstrated that nearly 20 percent of payments based on codes on hospital bills are incorrect, and that there is a considerable variation in hospital coding accuracy.14–16 For instance, in a nationwide study of coding accuracy by hospital structural characteristics, Lorence and Ibrahim found substantial variation in coding accuracy by geographic location and bed size, with small rural facilities performing consistently better than large urban hospitals.17,18

Studies investigating factors contributing to coding accuracy have found that a majority of coding errors occur because of inadequate physician documentation in the health record.19–23 While scant literature is available on the reasons for improper documentation, federal and state investigations into physician documentation have suggested an overall lack of knowledge exchange and communication among hospital administrators, physicians, and coders, which may play an important role in adversely affecting documentation and, correspondingly, coding accuracy. For instance, audits by the Office of Inspector General and MassPro have concluded that documentation problems largely stem from physicians who do not understand the methodology behind coding and how documentation affects coding and billing.24–25 This suggests that neither professional subgroup, physicians or coders, may properly understand the role of documentation and coding with respect to organizational outcomes such as hospital reimbursement and comparative quality results.

It is important to note at this juncture that existing studies on hospital coding accuracy have viewed coding from a purely reimbursement perspective rather than a quality-measurement perspective. In doing so, they have mostly focused on errors in the selection and coding of the principal diagnosis and/or diagnosis-related groups (DRGs). Few studies have attempted to understand and explain coding accuracy from a quality-measurement perspective, identifying errors in the coding of secondary diagnoses that might be significant enough to change hospital quality ratings or comparative rankings. Moreover, the few studies that have attempted to assess coding for quality (see, for example, studies by Ballaro and Emberton, Dixon et al, Gibson and Bridgman, and Kashner) have for the most part relied on a sample of coded diagnoses and procedures taken from paper records, using a code-recode methodology (comparing coded abstracts with information in patient charts).26–29 These studies, however, are limited by small sample sizes.

In today's context of the growing use of administrative databases for comparative hospital quality reporting, this study addresses a gap in the literature with respect to large-scale assessments of hospital coding accuracy from the quality measurement perspective. In the process, it also lays a foundation for future research directed toward addressing hospital organizational challenges associated with improving physician documentation and communication among hospital administrators, physicians, and coders in the context of quality measurement.

Research Questions

In the context of today's changing environment of comparative quality measurement using administrative data, this study seeks to address the following research question.

What is the relationship between basic hospital structural characteristics such as bed size, teaching status, region, and rural/urban location, and coding accuracy for quality measurement? In other words, is there a systematic association between specific hospital structural characteristics and coding accuracy for quality measurement, such that some are associated with better coding accuracy and others with poorer coding accuracy?

Research Methods

To address its research question, the study performs a descriptive analysis of existing databases. Specifically, inpatient discharge data for 2000 through 2004 were obtained from the New York state Department of Health (DOH) and analyzed at the hospital level.

To measure coding accuracy for quality measurement, the study uses the “present on admission” indicator in the DOH database. Hospitals across New York state use this indicator to record whether a particular patient-level secondary diagnosis is “present on admission,” “not present on admission,” or “uncertain.” This study used the occurrence of the value “uncertain” in the “present on admission” indicator, for a select set of diagnosis codes, as the primary measure of coding accuracy. Correspondingly, the hospital's percentage of secondary diagnosis codes in this set coded “uncertain” (as opposed to “present on admission” or “not present on admission”) served as the measure of coding accuracy for quality measurement: a lower percentage of codes coded “uncertain” is considered indicative of better coding accuracy, and vice versa.
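The hospital-level measure described above can be sketched as follows. This is a minimal illustration, not the study's actual processing: the record layout `(hospital_id, dx_code, poa_value)`, the three flag labels, and the placeholder diagnosis set are all hypothetical stand-ins, since the DOH file layout and the Appendix 1 code list are not reproduced here.

```python
from collections import defaultdict

# Hypothetical labels for the three "present on admission" values; the actual
# codes used in the DOH file are not given here.
PRESENT, NOT_PRESENT, UNCERTAIN = "present", "not_present", "uncertain"

# Placeholder stand-ins for the Appendix 1 diagnosis set (not the real code list).
DIAGNOSIS_SET = {"AMI", "PNEUMONIA", "RESP_FAILURE"}


def pct_uncertain_by_hospital(secondary_dx):
    """secondary_dx: iterable of (hospital_id, dx_code, poa_value) tuples.

    Returns {hospital_id: percentage of in-set secondary codes flagged uncertain}.
    """
    totals = defaultdict(int)
    uncertain = defaultdict(int)
    for hospital_id, dx_code, poa_value in secondary_dx:
        if dx_code not in DIAGNOSIS_SET:
            continue  # only codes in the diagnosis set enter the measure
        totals[hospital_id] += 1
        if poa_value == UNCERTAIN:
            uncertain[hospital_id] += 1
    return {h: 100.0 * uncertain[h] / totals[h] for h in totals}


records = [
    ("A", "AMI", PRESENT),
    ("A", "PNEUMONIA", UNCERTAIN),
    ("A", "RESP_FAILURE", NOT_PRESENT),
    ("A", "OTHER", UNCERTAIN),  # outside the diagnosis set, so ignored
    ("A", "AMI", PRESENT),
    ("B", "PNEUMONIA", NOT_PRESENT),
    ("B", "AMI", PRESENT),
]
print(pct_uncertain_by_hospital(records))
```

Here hospital A has one “uncertain” flag among four in-set codes (25 percent), while hospital B has none; under the study's convention, B would be considered the more accurate coder.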

The set of diagnoses for which the “present on admission” indicator is assessed represents conditions that can be classified as either “present on admission” or “not present on admission,” depending on the specific case. In other words, the “present on admission” indicator for these conditions is not hard-coded a priori to a particular value. Examples of codes in this diagnosis set (shown in Appendix 1) include acute myocardial infarction, pneumonia, and respiratory failure. Because the classification of the “present on admission” indicator for these conditions varies with the case at hand, the coding accuracy of the indicator is considered to reflect the quality of physician documentation and, by extension, the efficacy of physician documentation within the facility.

The DOH databases provided hospital-level data on principal and secondary diagnoses as well as the percentage of secondary diagnosis codes coded “present on admission,” “not present on admission,” and “uncertain” (using the “present on admission” indicator). To analyze the relationship between hospital structural characteristics and coding accuracy for quality measurement, additional hospital-level data on basic hospital structural characteristics were obtained from DOH, similar to those used in the Lorence and Ibrahim study mentioned earlier.30,31 This in turn enabled the study to verify whether the patterns of association between hospital structural characteristics and coding accuracy from the quality measurement perspective are consistent with those from the reimbursement perspective. The structural characteristics examined in this study are described below.

Bed Size is defined as the actual number of licensed beds in the facility. The study groups bed size into the following: Less than 50 beds, 50–99 beds, 100–249 beds, 250–500 beds, greater than 500 beds.
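The five bed-size groups map directly from a licensed-bed count. A minimal sketch, assuming integer bed counts; the group label strings are hypothetical, while the cut points follow the ranges listed above.

```python
def bed_size_group(licensed_beds: int) -> str:
    """Map a licensed-bed count to the study's five bed-size groups."""
    if licensed_beds < 50:
        return "<50"
    if licensed_beds <= 99:
        return "50-99"
    if licensed_beds <= 249:
        return "100-249"
    if licensed_beds <= 500:
        return "250-500"
    return ">500"


for beds in (30, 75, 180, 420, 900):
    print(beds, bed_size_group(beds))
```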

Teaching Status categories of “major teaching,” “minor teaching,” and “non-teaching” reflect the categories used by both DOH and the Healthcare Association of New York State (HANYS). These categories are defined to reflect the scope and depth of the facility's medical residency or training program and/or its affiliation with an academic medical center.

Rural/Urban Status identifies a facility's geographic location as “urban” or “rural.” Rural designation is given to all locations that are not part of federally designated Metropolitan Statistical Areas (MSAs).

Region is defined to indicate the region (within NYS) to which a hospital belongs. Hospitals' regional assignment is based on the DOH classification of Health Service Areas (HSAs) across New York state. Regional classification is as follows: Region 1: Western NY, Region 2: Finger Lakes, Region 3: Central NY, Region 4: Northeast NY, Region 5: Hudson Valley, Region 6: Bronx, Region 7: Brooklyn, Region 8: Manhattan 1, Region 9: Manhattan 2, Region 10: Queens and Staten Island, Region 11: Long Island.

Additionally, to enable comparisons of hospital coding accuracy across groups of similar facilities, the study uses the “peer group” definitions of both DOH and HANYS, which divide New York hospitals into eight peer groups based on bed size, teaching status, and rural/urban status. These peer groups are outlined below.

Peer Group 1: All Urban, Major Teaching Hospitals, with Greater than 500 Beds

Peer Group 2: All Urban, Major Teaching Hospitals, with 150–500 Beds

Peer Group 3: All Urban, Minor Teaching Hospitals, with 250–500 Beds

Peer Group 4: All Urban, Minor Teaching Hospitals, with Less than 250 Beds

Peer Group 5: All Urban, Nonteaching Hospitals, with Greater than 200 Beds

Peer Group 6: All Urban, Nonteaching Hospitals, with Less than 200 Beds

Peer Group 7: All Rural, Nonteaching Hospitals, with Greater than 50 Beds

Peer Group 8: All Rural, Nonteaching Hospitals, with Less than 50 Beds
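The peer group definitions above amount to a lookup on three attributes. The sketch below encodes them as listed; the teaching-status labels are hypothetical, and because the listed ranges do not say where a hospital with exactly 200 (urban nonteaching) or exactly 50 (rural nonteaching) beds belongs, those boundary assignments are assumptions. Combinations the list does not cover (for example, rural teaching hospitals or major teaching hospitals with fewer than 150 beds) return `None`.

```python
from typing import Optional


def peer_group(urban: bool, teaching: str, beds: int) -> Optional[int]:
    """teaching is one of "major", "minor", "non" (hypothetical labels).

    Returns a peer group number 1-8, or None when the combination is not
    covered by the listed groups.
    """
    if urban and teaching == "major":
        if beds > 500:
            return 1
        if 150 <= beds <= 500:
            return 2
    elif urban and teaching == "minor":
        if 250 <= beds <= 500:
            return 3
        if beds < 250:
            return 4
    elif urban and teaching == "non":
        if beds > 200:
            return 5
        return 6  # assumption: exactly 200 beds falls in group 6
    elif not urban and teaching == "non":
        if beds > 50:
            return 7
        return 8  # assumption: exactly 50 beds falls in group 8
    return None


print(peer_group(True, "major", 650), peer_group(False, "non", 35))
```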

Study methods included simple comparative analyses and multivariate regression analyses to describe the relationship between hospital structural characteristics and coding accuracy. Specifically, two regression models were used: 1) a linear regression model on panel data, and 2) a random effects model on panel data.

Results

Simple comparative analyses

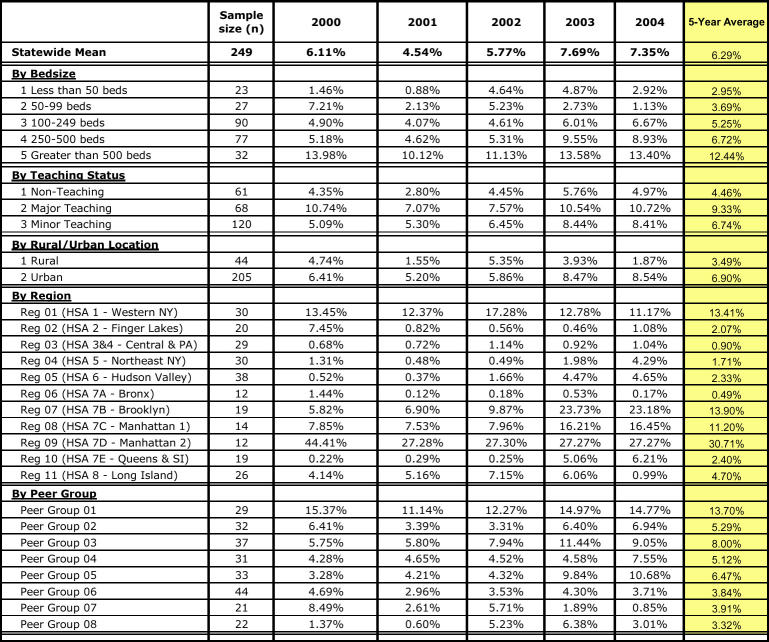

Table 1 shows the hospitals' percentage of codes (in the specified diagnosis set) coded “uncertain” for 2000 through 2004, both statewide, and by hospital structural characteristic. As Table 1 indicates, the statewide average increased from 6.11 percent in 2000 to 7.35 percent in 2004. Comparative analysis of hospital coding performance by structural characteristic, moreover, revealed considerable variation in coding accuracy by structural characteristic, with the variation by region being most stark.

TABLE 1.

Hospitals' Percentage of Secondary Diagnosis Codes (in Diagnosis Set) Coded “Uncertain”: 2000–2004

By bed size, the smallest hospitals (with less than 50 beds) showed the best performance, that is, the lowest percentage of codes (in the specified diagnosis set) coded “uncertain” (2.95 percent). The largest hospitals (with greater than 500 beds), on the other hand, recorded the highest average (12.44 percent). The table also shows that the percentage of codes coded “uncertain” consistently increases with bed size.

By teaching status, nonteaching hospitals recorded the best performance with the lowest average (4.46 percent). On the other hand, major teaching hospitals recorded the highest average (9.33 percent).

Rural hospitals recorded a lower average or better performance (3.49 percent), compared to urban hospitals (6.90 percent).

By peer group, rural nonteaching hospitals with less than 50 beds recorded the best performance (3.32 percent). Major teaching hospitals with greater than 500 beds, on the other hand, recorded the worst performance, with a far higher percentage of codes coded “uncertain” (13.70 percent).

Regional variation in coding accuracy for quality measurement was much more substantial. Manhattan 2 hospitals recorded the highest average (30.71 percent), whereas upstate hospitals, including Finger Lakes, Central, Northeast, and Hudson Valley hospitals, recorded far lower averages (2.07 percent, 0.90 percent, 1.71 percent, and 2.33 percent, respectively). Bronx hospitals stood out among NYC hospitals with a low average of 0.49 percent, whereas western NY stood out among upstate hospitals with a high average of 13.71 percent.

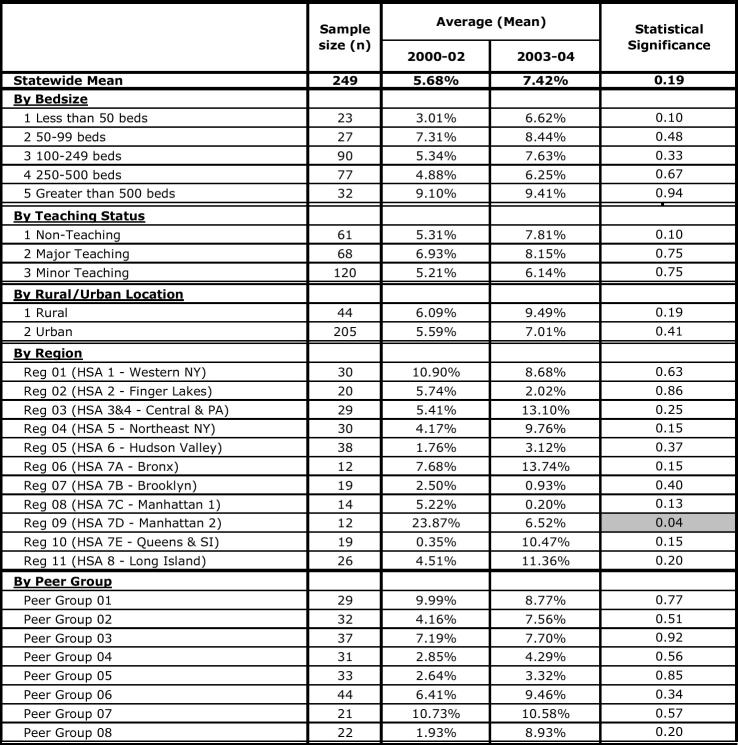

Table 2 shows the trend in hospitals' percentage of codes coded “uncertain” between the two time periods, 2000–02 and 2003–04, by structural characteristic, as well as the statistical significance of the change between the two periods.

TABLE 2.

Trend in Hospitals' Percentage of Codes Coded “Uncertain”: 2000-02 VS. 2003-04

There was interest in exploring and describing the trends in hospital coding accuracy from 2000–02 to 2003–04 because the national Agency for Healthcare Research and Quality (AHRQ) inpatient quality indicators (defined based on discharge codes and administrative data) became publicly available in 2001–02. Correspondingly, in 2002, public hospital quality reporting using these new national AHRQ quality indicators began in full swing in New York state for the first time, led by two entities: 1) a major business coalition (the Niagara Business Coalition) and 2) a large health plan (Excellus Blue Cross Blue Shield). While the Niagara Business Coalition targeted hospitals across the state, the Excellus report card covered only hospitals in upstate New York regions. As such, there was interest in identifying any trends toward improvement or deterioration in hospital coding accuracy for quality measurement during this time frame.

As the table shows, the Manhattan 2 region recorded a statistically significant improvement in coding performance: its percentage of codes coded “uncertain” decreased from 23.87 percent to 6.52 percent between the two time frames (p<0.05).
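The text does not name the test behind the Table 2 significance levels. As one possible approach, the sketch below computes a Welch two-sample t statistic and Welch–Satterthwaite degrees of freedom comparing hospital-year percentages in the two periods; the input values are invented, not study data. This treats the periods as independent samples, which ignores that the same hospitals appear in both; a paired comparison of each hospital's period means would be a reasonable alternative. A p-value would come from the t distribution, for example via `scipy.stats.ttest_ind(before, after, equal_var=False)`.

```python
import math
import statistics


def welch_t(before, after):
    """Welch two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    m1, m2 = statistics.mean(before), statistics.mean(after)
    v1, v2 = statistics.variance(before), statistics.variance(after)  # sample variances
    n1, n2 = len(before), len(after)
    se1, se2 = v1 / n1, v2 / n2
    t = (m1 - m2) / math.sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Invented hospital-year percentages coded "uncertain" for one region (not study data).
period_2000_02 = [18.0, 26.0, 21.5, 24.0, 19.5]
period_2003_04 = [7.0, 5.5, 8.0, 6.0]

t_stat, dof = welch_t(period_2000_02, period_2003_04)
change = statistics.mean(period_2003_04) - statistics.mean(period_2000_02)
print(f"change in mean: {change:.2f}, t = {t_stat:.2f}, df = {dof:.1f}")
```

A positive t statistic here indicates that the earlier period had the higher percentage coded “uncertain,” that is, that coding accuracy improved.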

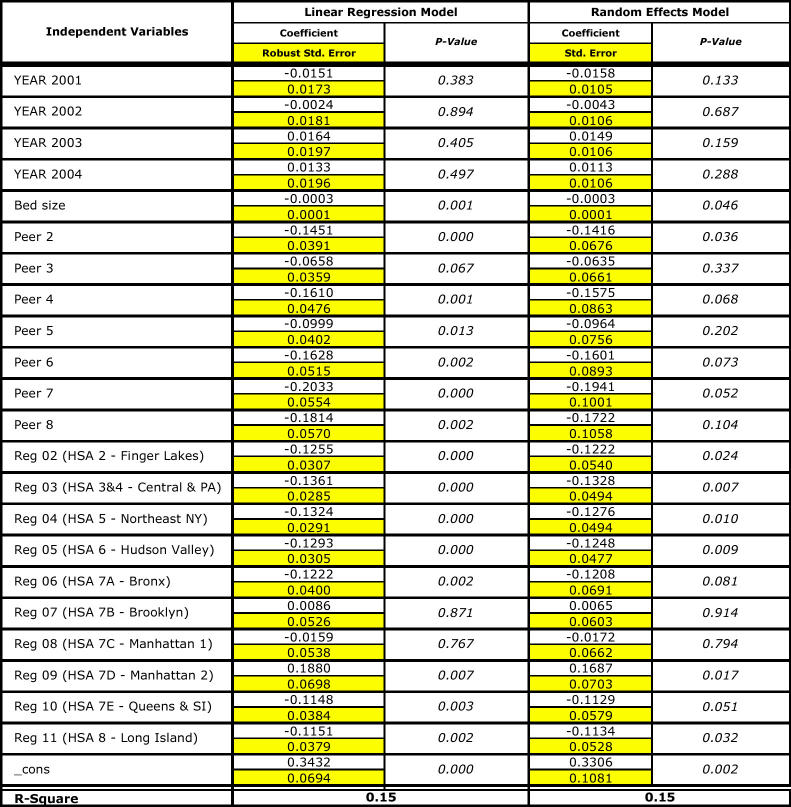

Multivariate Regression Analyses

Table 3 shows results from the multivariate regression analyses for both the linear regression model and the random effects model. The linear regression model was run with the “robust” option to control for heteroskedasticity and returned an R-square of 0.15. The random effects model returned not only a similar R-square (0.15) but also results similar in the direction and magnitude of the regression coefficients. None of the time variables was significant in either model, indicating that the models could not identify any significant trends in statewide coding accuracy during the study period. Regression results by structural characteristic are summarized below.
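To show what the “robust” option does, the sketch below fits a single-regressor OLS model (bed size only, for readability) and computes a Huber–White sandwich standard error with the HC1 small-sample factor n/(n − k). The choice of HC1 is an assumption; the text does not name the variant. The data are invented, and this does not reproduce the study's full panel specification with peer group, region, and time variables, nor its random effects model (which could be fitted with, for example, the `RandomEffects` estimator in the linearmodels package).

```python
import math


def ols_hc1(x, y):
    """Simple OLS of y on x with an intercept; returns the slope, the intercept,
    the HC1 (heteroskedasticity-robust) standard error of the slope, and R-squared."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Sandwich variance of the slope: sum((x - x_bar)^2 * e^2) / Sxx^2,
    # scaled by the HC1 factor n / (n - k) with k = 2 estimated parameters.
    meat = sum(((xi - x_bar) ** 2) * (ei ** 2) for xi, ei in zip(x, resid))
    var_hc1 = (n / (n - 2)) * meat / sxx ** 2
    ss_res = sum(e ** 2 for e in resid)
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, math.sqrt(var_hc1), r_squared


# Invented hospital observations: licensed beds vs. percent of codes coded "uncertain".
beds = [40, 85, 150, 220, 310, 420, 560, 700]
pct_uncertain = [3.1, 9.0, 2.5, 12.0, 4.0, 1.5, 8.0, 2.0]
slope, intercept, se_slope, r2 = ols_hc1(beds, pct_uncertain)
print(f"slope = {slope:.4f} per bed, robust SE = {se_slope:.4f}, R^2 = {r2:.3f}")
```

The robust standard error changes only the inference (standard errors and p-values), not the coefficient estimates themselves, which is why the option is described as controlling for heteroskedasticity rather than altering the fitted model.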

TABLE 3.

The Relationship between Hospital Structural Characteristics and Coding Accuracy for Quality Measurement

Bed size: Bed size had a significant (p<0.05) positive influence on coding performance: for every bed added, the hospital's percentage of codes (in the specified diagnosis set) coded “uncertain” decreased by 0.03 percent.
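Because the model is linear, the bed-size coefficient scales directly with the number of beds. As a worked illustration, consider a hypothetical hospital adding 100 licensed beds while its peer group, region, and year are held fixed. Here $U$ denotes the hospital's percentage of diagnosis-set codes coded “uncertain”:

```latex
\Delta \widehat{U} \;=\; \hat{\beta}_{\text{beds}} \times \Delta \text{Beds} \;=\; (-0.03) \times 100 \;=\; -3
```

That is, the predicted percentage coded “uncertain” falls by about 3, on the same scale as the per-bed figure reported above. This is the linear-model reading only. It is not a claim that adding beds would change coding behavior.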

Peer Group: Compared with the reference (excluded) peer group, major teaching hospitals with greater than 500 beds, all groups except urban minor teaching hospitals with 250–500 beds differed significantly (p<0.05). All of these groups showed significantly better coding performance, with percentages of codes coded “uncertain” that were approximately 10 to 20 percent lower, on average.

Region: Compared with the reference (excluded) region, western NY, all regions except Brooklyn and Manhattan 1 differed significantly. Finger Lakes, Central NY, Northeast, Hudson Valley, Bronx, Queens, and Long Island showed significantly better performance, with approximately 10 to 15 percent fewer “uncertain” codes. Manhattan 2, on the other hand, showed significantly worse performance, with approximately 19 percent more diagnosis set codes coded “uncertain” than western NY.

Summary of Results

Study analyses provide an understanding of the relationship between hospital structural characteristics and coding accuracy for quality measurement. The simple comparative analyses by structural characteristic show that small, rural, nonteaching hospitals performed consistently better than their large, urban, major teaching counterparts. By region, there is strong evidence of upstate NY hospitals performing consistently better than NYC hospitals. Among upstate NY hospitals, however, western NY performed consistently worse than the Central, Northeast, and Finger Lakes regions, and sometimes worse than the NYC regions of Brooklyn and Queens. Among NYC regions, on the other hand, the Bronx performed better than other regions such as Brooklyn and Manhattan 1, and almost on par with some regions of upstate NY. Last but not least, Manhattan 2 consistently emerged as the worst performer among all regions.

Table 2, which assesses the trend in hospital coding accuracy between the two time frames, 2000–02 and 2003–04, provides an interesting complement to these findings. Manhattan 2 hospitals, which consistently showed worse coding performance in the simple comparative analyses, actually recorded a statistically significant improvement (p<0.01) between the two time periods (before and after public reporting). Conversely, no statistically significant trends were noted among hospitals in upstate NY regions.

The multivariate regression analyses largely corroborate the simple comparative analyses. By peer group, regression results show that larger, urban, teaching facilities performed worse than their smaller, rural, nonteaching counterparts. Similarly, on the regional front, regression results indicate that hospitals in the Manhattan 2 region performed worse than those in all other regions, including western NY. Western NY in turn performed worse than all remaining regions except Brooklyn and Manhattan 1, from which it did not differ significantly. One instance in which the regression analyses did not agree with the comparative analyses concerned the influence of bed size on coding accuracy. Regression results showed that increasing bed size might have a positive impact on coding performance. By peer group, however, the results showed that large, major teaching facilities fared worse than their smaller, rural, nonteaching counterparts. This discrepancy implies that factors other than bed size in urban teaching facilities may negatively affect coding performance.

Discussion

Study analyses show that large, major teaching, urban facilities located in NYC regions, specifically the Brooklyn and Manhattan regions, perform worse in coding accuracy for quality measurement than small, rural, nonteaching facilities located in upstate New York.

In addition to describing the relationship between hospital structural characteristics and coding accuracy, this study makes an important contribution to the health information management literature by addressing the gap related to large-scale studies of coding accuracy for quality measurement. It also provides an interesting complement to existing large-scale studies of coding for reimbursement. Its results only partially corroborate those of the Lorence and Ibrahim study, which examined coding accuracy at the national level from a reimbursement perspective.32,33 For instance, this study agrees with their finding that rural facilities exhibit better coding accuracy than their urban counterparts, but does not corroborate their finding that large hospitals, in terms of bed size, perform worse than their smaller counterparts.

In addition to addressing this gap in the literature, the study makes important methodological contributions, especially through its novel approach to constructing the dependent variable. It measures coding accuracy for quality measurement by evaluating the frequency of the value “uncertain” in the “present on admission” indicator. In doing so, it not only creates a measure of hospital coding accuracy for quality measurement suitable for large-scale administrative data sets, but also provides an indicator of physician documentation efficacy in the facility. Therefore, in light of the federal and state-level audit findings on hospital coding accuracy described earlier, the study results help identify the types of institutions that are likely facing challenges of physician documentation and, correspondingly, of communication and knowledge exchange among hospital administrators, physicians, and coders when administrative data are used for quality measurement. These findings in turn lay a foundation for future organizational and management research directed toward addressing such challenges.

Study analyses also reveal that Manhattan 2 facilities experienced a statistically significant (p<0.01) improvement in performance between the two time periods, before and after public reporting (2000–02 vs. 2003–04). Given their consistently poor comparative performance, the improvement shown by Manhattan 2 hospitals could be dismissed as a “regression to the mean” phenomenon. At the same time, however, the magnitude of the improvement suggests that there may be other factors, outside the scope of this study, systematically influencing coding accuracy for quality measurement.

Study Limitations

The most notable limitation of the study is that, owing to its reliance on secondary data sources (specifically, administrative data), it lacks richer data with which to explore the various other organizational, structural, and institutional factors that may influence hospital coding accuracy. As mentioned earlier, the low R-square of 0.15 indicates that only 15 percent of the variation in hospital coding accuracy could be explained by the variables available in the administrative databases used in this study. This implies that other variables, potentially with greater influence on coding accuracy, are not being captured. For instance, the study does not include severity of illness and/or disease complexity as an independent variable influencing coding accuracy. Nor does it include organizational characteristics such as communication or senior leadership involvement in quality measurement. Similarly, it does not include potential environmental factors such as workforce limitations, for example the availability of qualified coders or coder turnover rates. All these issues limit the study's ability to comprehensively explain the variation in hospital coding accuracy.

There are also limitations to measuring coding accuracy for quality measurement using the “present on admission” indicator. One might argue that this approach may not adequately capture the accuracy of actual secondary diagnosis coding. As is clear from the earlier part of this paper, there have been different ways of measuring hospital coding accuracy, such as principal diagnosis or DRG coding accuracy, or cross-verification of administrative data against medical records. Each method has its own pros and cons; the methods serve different purposes, and each could in fact produce different results.
Moreover, although the study relies on a statewide database, its results are limited in generalizability because the “present on admission” indicator (or “emergent indicator”), on which the entire analysis of secondary diagnosis coding accuracy is based, is available in only a handful of state databases. Potential avenues for future research based on both study findings and limitations are outlined below.

Areas for Future Research

First, study analyses provide an understanding of the relationship between hospital structural characteristics and coding accuracy for quality measurement in New York state. However, in order for these results to be generalizable, we also need to understand the patterns of association among these variables in other parts of the nation. In this context, a potential avenue for future research may be to perform a similar study in other states utilizing the “present on admission” indicator (such as California).

Second, findings from the trend analyses of hospital coding accuracy before and after public reporting in New York state provide a strong foundation for future research. The statistically significant improvement in coding accuracy among hospitals in the Manhattan 2 region may be further explored to understand its potential causes. One takeaway from the earlier Background section is that specialized (esoteric) knowledge and expertise are needed at the hospital level to properly understand the connections among hospital coding processes, the limitations of administrative data sets, and risk adjustment methodologies, in order to effectively meet the demands of the new public reporting environment. However, it is important to recognize that the traditional community hospital has not had ready access to such acumen, expertise, and skill sets through its own human resources, given that the trend toward quality measurement and improvement based on statistical expertise and large-scale data management is a relatively new industry phenomenon. As such, an interesting avenue for future research may be to explore the extent to which the improvement shown by Manhattan 2 could be attributed to greater access, enjoyed by hospitals in this region, to specialized knowledge, expertise, support, and education related to quality measurement and risk adjustment. Such research could have important policy implications for hospital quality measurement and reporting. For instance, it could help convey that for macro- or industry-level changes to translate into organizational-level change, sufficient provision may need to be made for change levers to be available in the hospital's immediate environment.

Third, because the coding accuracy measure also provides insight into documentation efficacy, this study helps identify the types of institutions that are likely facing organizational challenges of communication and knowledge exchange among physicians, coders, and hospital administrators. Moreover, findings indicate that there may be considerable variation at the individual hospital level in this regard. These findings in turn provide a foundation for future research directed toward addressing these types of challenges. An interesting research avenue may be to perform a comparative exploratory study of knowledge exchanges and communication in hospitals performing both well and poorly with respect to coding accuracy for quality measurement. Such a study can go a long way in helping identify best practices with respect to managing hospital documentation and coding processes in the context of quality measurement, and, more important, in identifying and formulating strategies for managing change in professional organizations.

Last but not least, the finding that bed size may have a positive influence on coding performance, while other factors may negatively affect coding performance in urban teaching facilities, opens an important avenue for future research. Studies directed toward identifying the particular characteristics of large, urban, teaching facilities that are negatively associated with coding performance could provide specific pointers for addressing poor coding performance in this peer group.

Conclusion

This study examines the relationship between hospital structural characteristics and coding accuracy for quality measurement. To this end, it performs comparative analyses and multivariate regression on New York state hospital discharge data for the period 2000–2004. Hospital coding accuracy for quality measurement is assessed by evaluating the accuracy of the “present on admission” indicator, specifically the occurrence of the value “uncertain” in this indicator. The analyses are illuminating with respect to the relationship between hospital structural characteristics and coding accuracy: the study shows that large, urban, teaching facilities located in NYC regions perform consistently worse than small, rural, nonteaching facilities located in upstate New York.
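As a rough illustration of the measure summarized above, the following Python sketch computes, for each hospital, the share of coded secondary diagnoses whose “present on admission” flag is “uncertain”. It is a minimal sketch under stated assumptions, not the study's actual procedure: the record layout (`Discharge`, `hospital_id`, `secondary_dx`), the function name `uncertain_rate_by_hospital`, and the flag value `"U"` are all hypothetical and do not reflect the real New York state discharge file format.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical encoding of the "present on admission" values; the real
# discharge file may use different codes ("Y" = present, "N" = not present).
POA_UNCERTAIN = "U"


@dataclass
class Discharge:
    """One hospital discharge (hypothetical record layout)."""
    hospital_id: str
    # Secondary diagnoses as (ICD-9-CM code, POA flag) pairs.
    secondary_dx: list[tuple[str, str]]


def uncertain_rate_by_hospital(discharges):
    """Share of each hospital's secondary diagnoses flagged "uncertain".

    A lower share is read as better coding accuracy for quality measurement.
    Hospitals with no secondary diagnoses never enter the tally, so every
    denominator is at least 1.
    """
    uncertain = defaultdict(int)
    total = defaultdict(int)
    for d in discharges:
        for _code, flag in d.secondary_dx:
            total[d.hospital_id] += 1
            if flag == POA_UNCERTAIN:
                uncertain[d.hospital_id] += 1
    return {h: uncertain[h] / n for h, n in total.items()}


records = [
    Discharge("A", [("486", "Y"), ("5849", "U")]),
    Discharge("A", [("51881", "N")]),
    Discharge("B", [("436", "U"), ("42741", "U")]),
]
print(uncertain_rate_by_hospital(records))  # A: 1 of 3, B: 2 of 2
```

Counting at the level of individual secondary diagnoses, rather than discharges, is an assumption of this sketch. Restricting the tally to a specific code set, such as the one in Appendix 1, would only require filtering `secondary_dx` before counting.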

This study makes an important contribution to the health information management literature by addressing the gap related to large-scale studies of coding accuracy for quality measurement. Moreover, the study helps identify the types of institutions facing challenges of organizational communication and knowledge exchange with regard to coding for quality. In doing so, it provides a foundation for future research directed toward addressing these organizational and management challenges in hospitals.

APPENDIX 1: Diagnosis Code Set Used for Analysis of "Present on Admission" Indicator

| Secondary diagnosis code (sdx) | Description (sdxdesc) |
|---|---|
| 41011 | AMI ANTERIOR WALL, INIT |
| 41071 | SUBENDO INFARCT, INITIAL |
| 41091 | AMI NOS, INITIAL |
| 42741 | VENTRICULAR FIBRILLATION |
| 43411 | CRBL EMBLSM W INFRCT |
| 43491 | CRBL ART OCL NOS W INFRC |
| 436 | CVA |
| 4820 | K. PNEUMONIAE PNEUMONIA |
| 4821 | PSEUDOMONAL PNEUMONIA |
| 4822 | H.INFLUENZAE PNEUMONIA |
| 48239 | PNEUMONIA OTH STREP |
| 48240 | STAPHYLOCOCCAL PNEU NOS |
| 48241 | STAPH AUREUS PNEUMONIA |
| 48249 | STAPH PNEUMONIA NEC |
| 48281 | PNEUMONIA ANAEROBES |
| 48282 | PNEUMONIA E COLI |
| 48283 | PNEUMO OTH GRM-NEG BACT |
| 48289 | PNEUMONIA OTH SPCF BACT |
| 486 | PNEUMONIA, ORGANISM NOS |
| 5070 | FOOD/VOMIT PNEUMONITIS |
| 51881 | ACUTE RESPIRATORY FAILURE |
| 51882 | OTHER PULMONARY INSUFF |
| 5845 | LOWER NEPHRON NEPHROSIS |
| 5849 | ACUTE RENAL FAILURE NOS |
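The code set above can be held as a simple lookup table. The Python sketch below assumes that codes may arrive either in the undotted form shown in the table (e.g. `41011`) or in dotted ICD-9-CM form (e.g. `410.11`); the constant `POA_ANALYSIS_CODES`, the function `in_poa_code_set`, and the normalization step are illustrative assumptions, not part of the study's documented procedure.

```python
# ICD-9-CM secondary diagnosis codes from Appendix 1, undotted as in the table.
POA_ANALYSIS_CODES = frozenset({
    "41011", "41071", "41091", "42741", "43411", "43491", "436",
    "4820", "4821", "4822", "48239", "48240", "48241", "48249",
    "48281", "48282", "48283", "48289", "486", "5070",
    "51881", "51882", "5845", "5849",
})


def in_poa_code_set(code: str) -> bool:
    """Return True if a diagnosis code is in the Appendix 1 code set.

    Surrounding whitespace and the decimal point are stripped first, so
    "410.11" and "41011" are treated as the same code (an assumption about
    how codes might be stored). Matching is exact, not by prefix.
    """
    return code.strip().replace(".", "") in POA_ANALYSIS_CODES


print(in_poa_code_set("518.81"), in_poa_code_set("48230"))
```

Exact matching rather than prefix matching matters here: 4820 is in the set, but a prefix test on 482 would also admit codes such as 48230 that the appendix does not list.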

Notes

1. Dudley A, Rittenhouse D, Bae R. Creating a Statewide Hospital Quality Reporting System. California HealthCare Foundation; 2002.

2. Leatherman S, Berwick D. The Business Case for Quality: Case Studies and an Analysis. Health Affairs. 2003;22(2):17–30. doi: 10.1377/hlthaff.22.2.17.

3. Naessens J, Huschka T. Distinguishing Hospital Complications from Preexisting Conditions. International Journal for Quality in Health Care. 2004;16:i27–i35. doi: 10.1093/intqhc/mzh012.

4. Romano P, Rainwater J, Antonius P. Grading the Graders: How Hospitals in California and New York Perceive and Interpret Their Report Cards. Medical Care. 1999;37:295–305. doi: 10.1097/00005650-199903000-00009.

5. Hibbard J, Harris-Kojetin P, Lubalin J, Garfinkel S. Increasing the Impact of Health Plan Report Cards by Addressing Consumers' Concerns. Health Affairs. 2000;19:138–143. doi: 10.1377/hlthaff.19.5.138.

6. Forgionne A. The Use of DRGs in Health Care Payments Around the World. Journal of Health Care Finance. 1999;26(2):66–78.

7. Hoffman G, Jones D. Prebilled DRG Training Can Increase Hospital Reimbursement. Healthcare Financial Management. 1993;58:28–44.

8. Leatherman S, Berwick D. The Business Case for Quality: Case Studies and an Analysis.

9. Wise G. Crossing the Coding Chasm: Getting Clinically Recognized and Paid for What We Do. Paper presented at the 16th Annual Forum of the Institute for Healthcare Improvement (IHI), Orlando, FL; 2004.

10. Iezzoni L. Risk Adjustment for Measuring Health Care Outcomes. Chicago: Health Administration Press; 1997.

11. Brook R, McGlynn E, Cleary P. Quality of Health Care. Part 2: Measuring Quality of Health Care. New England Journal of Medicine. 1996;335:966–970. doi: 10.1056/NEJM199609263351311.

12. Palmer R. Process-based Measures of Quality: The Need for Detailed Clinical Data in Large Health Care Databases. Annals of Internal Medicine. 1997;127:733–738. doi: 10.7326/0003-4819-127-8_part_2-199710151-00059.

13. Rogers V. Applying Inpatient Coding Skills Under Prospective Payment. AHIMA Coding Series Workbook. Chicago, IL: AHIMA; 2001.

14. Green E, Benjamin C. Impact of the Medical Record Credential on Data Quality. Journal of the American Medical Record Association. 1986;57:29–38.

15. Hoffman G, Jones D. Prebilled DRG Training Can Increase Hospital Reimbursement. Healthcare Financial Management. 1993;58:28–44.

16. Rogers V. Applying Inpatient Coding Skills Under Prospective Payment.

17. Lorence D, Ibrahim I. Benchmarking Variation in Coding Accuracy Across the United States. Journal of Health Care Finance. 2003;29(4):29–42.

18. Lorence D, Ibrahim I. Disparity in Coding Concordance: Do Physicians and Coders Agree? Journal of Health Care Finance. 2003;29(4):43–53.

19. Brown J. Improper Fiscal Year 1999 Medicare Fee-For-Service Payments. Department of Health and Human Services, Office of Inspector General Audit (A-17-99-01999); 2000.

20. Fisher E, Whaley F, Krushat W, Malenka D, Fleming J, Baron D, Hsia D. Accuracy of Medicare Hospital Claims Data: Progress Has Been Made, but Problems Remain. American Journal of Public Health. 1992;82(2):243–248. doi: 10.2105/ajph.82.2.243.

21. Hendershot M, Read K. Charge Reviews Can Beef up Bottomline, Charge System Improvements. Healthcare Financial Management. 1991;32:57–71.

22. Hsia D, Krushat W, Fagan A, Tebbutt J, Kusserow R. Accuracy of Diagnostic Coding for Medicare Patients under the Prospective Payment System. New England Journal of Medicine. 1988;318(6):352–355. doi: 10.1056/NEJM198802113180604.

23. Rode D. Coding Accuracy Ultimately Affects the Bottom Line. Healthcare Financial Management. 1991;104:32–39.

24. DHHS-OIG, Department of Health and Human Services. Overcoming Documentation Barriers. Hospital Payment Monitoring Program; 2000. http://www.tmf.org/pepp/docbarriers.html

25. MassPRO. Coders and Physicians Working Together to Support Compliance. Navigator. Waltham, MA; 2001.

26. Ballaro A, Oliver S, Emberton M. Do We Do What They Say We Do? Coding Errors in Urology. BJU International. 2000;85(4):389–391. doi: 10.1046/j.1464-410x.2000.00471.x.

27. Dixon J, Sanderson C, Elliott P, Walls P, Jones J, Petticrew M. Assessment of the Reproducibility of Clinical Coding in Routinely Collected Hospital Activity Data: A Study in Two Hospitals. Journal of Public Health Medicine. 1998;20(1):63–69. doi: 10.1093/oxfordjournals.pubmed.a024721.

28. Gibson N, Bridgman S. A Novel Method for the Assessment of Accuracy of Diagnostic Codes in General Surgery. Annals of the Royal College of Surgeons of England. 1998;80(4):293–296.

29. Kashner T. Agreement Between Administrative Files and Written Medical Records: A Case of the Department of Veterans Affairs. Medical Care. 1998;36(9):324–336. doi: 10.1097/00005650-199809000-00005.

30. Lorence D, Ibrahim I. Benchmarking Variation in Coding Accuracy Across the United States.

31. Lorence D, Ibrahim I. Disparity in Coding Concordance: Do Physicians and Coders Agree?

32. Lorence D, Ibrahim I. Benchmarking Variation in Coding Accuracy Across the United States.

33. Lorence D, Ibrahim I. Disparity in Coding Concordance: Do Physicians and Coders Agree?

References

- ICD-9-CM. Vol. 3. Washington, DC: U.S. Government Printing Office; 1999.

- Brailer D, Krauch E, Pauly M. Comorbidity-Adjusted Complication Risk: A New Outcome Quality Measure. Medical Care. 1996;34(5):490–505. doi: 10.1097/00005650-199605000-00010.

- Chassin M, Galvin R. The Urgent Need to Improve Health Care Quality (Institute of Medicine National Roundtable on Health Care Quality). Journal of the American Medical Association. 1998;280:1000–1005. doi: 10.1001/jama.280.11.1000.

- Donabedian A. Explorations in Quality Assessment and Monitoring. Ann Arbor, MI: Health Administration Press; 1980.

- Donaldson M. Measuring the Quality of Health Care (A Statement by the National Roundtable on Health Care Quality). Washington, DC: National Academy Press; 1999.

- Durr E. Businesses Laud Hospital Report Card; Hospitals Less than Pleased. The Business Review (Albany). Albany; 2002.

- Elixhauser A, Steiner C, Harris D, Coffey R. Comorbidity Measures for Use with Administrative Data. Medical Care. 1998;36:8–27. doi: 10.1097/00005650-199801000-00004.

- Estrella R. Hospital Coding/DRG/Documentation Issues, Hospital Payment Monitoring Program. Long Island; 2003. http://providers.ipro.org

- Glance L, Dick A, Osler T, Mukamel D. Does Date Stamping ICD-9-CM Codes Increase the Value of Clinical Information in Administrative Data? Health Services Research. 2006;41(1):231–251. doi: 10.1111/j.1475-6773.2005.00419.x.

- Hsia D, Ahern B, Moscoe L, Krushat W. Medicare Reimbursement Accuracy under the Prospective Payment System. Journal of the American Medical Association. 1992;268:896–899.

- Hughes J, Averill R, McCullough E, Xiang J. Assessments of Hospital Performance Based on Death Rate Comparisons Change When Risk Adjustment Is Limited to Diagnoses Present on Admission. Paper presented at the Academy of Health Services Research, San Diego; 2004.

- Iezzoni L, Moskowitz M. Clinical Overlap among Medical DRGs. Journal of the American Medical Association. 1986;255(7):927–929.

- Keeler E, Rubenstein L, Kahn K. Hospital Characteristics and Quality of Care. Journal of the American Medical Association. 1992;268:1709–1714.

- Kohn L, Corrigan J, Donaldson M, editors. To Err Is Human: Building a Safer Health System. Committee on Quality of Health Care in America, Institute of Medicine. Washington, DC: National Academy Press; 2000.

- MassPRO. Payment Error Prevention Program/Compliance Workbook. 2003. www.masspro.org

- Meehan T, Fine M, Krumholz H. Quality of Care, Process, and Outcomes in Elderly Patients with Pneumonia. Journal of the American Medical Association. 1997;278:2080–2084.

- Naessens J, Huschka T. Distinguishing Hospital Complications from Preexisting Conditions. International Journal for Quality in Health Care. 2004;16:i27–i35. doi: 10.1093/intqhc/mzh012.

- Noller J. Coders Join Forces with Physicians to Improve Clinical Outcomes. Journal of AHIMA. 2000;71(1):43–49.

- Reepmeyer T. Complications versus Comorbidities. Paper presented at the Faulkner & Gray Medical Outcomes Conference, Boston, MA; 1995.

- Romano P, Mark D. Bias in the Coding of Hospital Discharge Data and Its Implications for Quality Assessment. Medical Care. 1994;32:81–90. doi: 10.1097/00005650-199401000-00006.

- Romano P, Remy L, Luft H. Second Report of the California Hospital Outcomes Project. Davis: University of California Center for Health Service Research in Primary Care; 1996. http://repositories.cdlib.org/chsrpc/coshpd/chapter015

- Roos L, Cageorge M, Austen E, Lohr K. Using Computers to Identify Complications after Surgery. American Journal of Public Health. 1985;75:1288–1295. doi: 10.2105/ajph.75.11.1288.

- Roos L, Stranc L, James R, Li J. Complications, Comorbidities and Mortality: Improving Classification and Prediction. Health Services Research. 1997;32(2):229–238.

- Rosenthal G. Using Hospital Performance Data in Quality Improvement. Joint Commission Journal on Quality Improvement. 1998;24:347–360. doi: 10.1016/s1070-3241(16)30386-8.

- Rosenthal G, Harper D, Quinn L, Cooper G. Severity-Adjusted Mortality and Length of Stay in Teaching and Nonteaching Hospitals: Results of a Regional Study. Journal of the American Medical Association. 1997;278:485–490.

- Schraffenberger L, editor. Effective Management of Coding Services. Chicago: AHIMA; 1999.

- Schuster M, McGlynn E, Brook R. How Good Is the Quality of Health Care in the United States? Milbank Quarterly. 1998;76:517–563. doi: 10.1111/1468-0009.00105.

- Shojania K, Showstack J, Wachter R. Assessing Hospital Quality: A Review for Clinicians. Effective Clinical Practice. 2001;4:82–90.