Abstract

We present a case (SE) of integrative visual agnosia following ischemic stroke affecting the right dorsal and the left ventral pathways of the visual system. Despite his inability to identify global hierarchical letters (Navon, 1977) and his dense object agnosia, SE showed normal global-to-local interference when responding to local letters in Navon hierarchical stimuli, and significant picture-word identity priming in a semantic decision task for words. Since priming was absent when the distinctive features of the pictures were scrambled, these effects were unlikely to reflect priming by distinctive features alone. The contrast between priming effects induced by coherent and scrambled stimuli is consistent with implicit but not explicit integration of features into a unified whole. We went on to show that possible/impossible object decisions were facilitated by words in a word-picture priming task, suggesting that words could activate perceptually integrated images in a backward fashion. We conclude that SE's inability to identify visual objects except through tedious serial construction reflects a deficit in accessing an integrated visual representation through bottom-up visual processing alone. However, top-down generated images can help activate these visual representations through semantic links.

Visual agnosia is a modality-specific deficit in recognizing visually presented objects. Over a century ago, Lissauer (1890) suggested a distinction between apperceptive and associative agnosia, each representing a breakdown at a different stage of the perceptual hierarchy. Lissauer viewed apperceptive agnosia as a deficit in the initial stages of sensory processing, in which the perceptual representation is constructed, and associative agnosia as a deficit in mapping the final structural representation onto stored knowledge. According to this differentiation, a patient with apperceptive agnosia would typically fail to copy or match basic visual stimuli, whereas an associative agnosic would experience “normal vision stripped of its meaning” (Lissauer, 1890).

Although this classification has provided a simple and useful framework for dealing with visual disorders, it has not gone uncontested. Some neuropsychologists have cast doubt on the very concept of associative agnosia, claiming that underlying perceptual deficits can always be found in such cases if sensitive enough measurements are used (Farah, 1990; Delvenne, Seron, Coyette & Rossion, 2004). Others have argued that the classification is too coarse and insensitive to the complex stages characteristic of normal vision (Riddoch & Humphreys, 1987; Marr, 1982; Ullman, 1999). Indeed, variations in deficits reported across both apperceptive and associative visual agnosias have led to further divisions and refinements.

An important example for the purposes of the present paper is the case of a patient (HJA), clinically diagnosed with associative agnosia and later found to have apperceptive components. In their classic report, Riddoch and Humphreys (1987, 2001) experimentally tested this patient and found abnormal perceptual organization abilities. Specifically, HJA's difficulty stemmed from deficient integration, or binding, of the local features of a visual stimulus into a coherent perceptual whole. Consequently, the presence of local features actually hindered his recognition performance. Riddoch and Humphreys (1987) suggested the term integrative agnosia for such cases, and we adopt this terminology here (for reviews see Behrmann, 2003; Humphreys, 1999).

HJA's perceptual abilities suggested a basic problem in visual integration. Either he lacked the ability to form a global integrated gestalt, or he was unable to gain access to one. The distinction between forming and accessing an integrated perceptual representation is important because each alternative leads to a different conceptualization of the functional impairment. If formation is the problem in this type of agnosia, then perceptual integration would be more closely tied to perceptual identification processes themselves. However, if a fully integrated representation is formed but conscious access to it is denied, then the breakdown in integrative agnosia is not an integrative one per se, but, more likely, a problem in selecting the proper level of representation for object identification. If the latter account is valid, we should find evidence for visual integration even without awareness of the object's identity.

In the present study we describe patient SE who, like HJA and other integrative agnosics (see Behrmann et al., 2006, in press), has specific difficulties in integrating features into coherent global percepts. We demonstrate that SE's integrative agnosia may be better characterized as a lack of conscious access to an established integrated perceptual representation.

SE: clinical history

SE is a 52-year-old, left-handed man who was admitted for rehabilitation following bilateral ischemic stroke in January 2004. Prior to hospitalization, SE had been fully independent and gainfully employed. He had no history of neurological or psychiatric disorders. An intake examination on admission to the rehabilitation ward (13 days after stroke) showed expressive and receptive speech to be normal. Similarly, gross and fine motor functions were unaffected by the stroke. His visual sensory performance, as assessed by computerized perimetry, revealed restrictions in the upper quadrants of the right and left hemifields. This condition improved substantially over time. After one month, only a slight attenuation in dynamic visual stimulus detection was present in both fields, with no basic visual field defect. Visual acuity on a Snellen-equivalent computerized test was 20/20, there were no crowding effects, and SE could easily fixate and report a small central target embedded in other patterns.

Clinically, it was evident that SE could not recognize common objects and faces on sight, although tactile and auditory recognition was intact. He displayed an acquired disturbance of color perception, as suggested by his inability to identify any of the numbers in the Ishihara plates, as well as difficulty discriminating between different color shades. However, he was not color agnosic: He was able to name basic colors presented in isolation and to associate such colors with typical items (e.g., red with tomatoes). Informal observation suggested that SE also suffered from topographical agnosia. His orientation on the hospital ward and his ability to describe his home neighborhood were far poorer than expected given his visual functioning in everyday activities. There was no evidence of unilateral neglect or extinction as tested by the Behavioral Inattention Test (a standard, comprehensive clinical test of neglect), although his overall score was low (111/146). Specifically, SE's performance was flawless on the line bisection and free drawing subtests. In contrast, the cancellation subtests revealed that SE omitted targets on both sides, with no evidence of a consistent unilateral neglect profile (line cancellation score: 13 right, 16 left; letter cancellation score: 18 right, 13 left; star cancellation score: 18 right, 19 left).

Reading was reduced to a somewhat slow yet accurate process that initially took place in a letter-by-letter fashion but rapidly improved in efficiency and speed. However, when words contained more than 5-6 letters, SE occasionally replaced the last letter (the leftmost one, since Hebrew is written from right to left) with a visually related substitute that yielded a meaningful word. Interestingly, such left-sided substitutions were never observed in pseudowords. Auditory memory testing (Rey-AVLT) showed an initial word span in the low average range (5/15 on list I) with a shallow learning curve (9/15 on list V). Performance dropped substantially following a distractor list (4/15) and remained low after a 20-min delay (3/15).

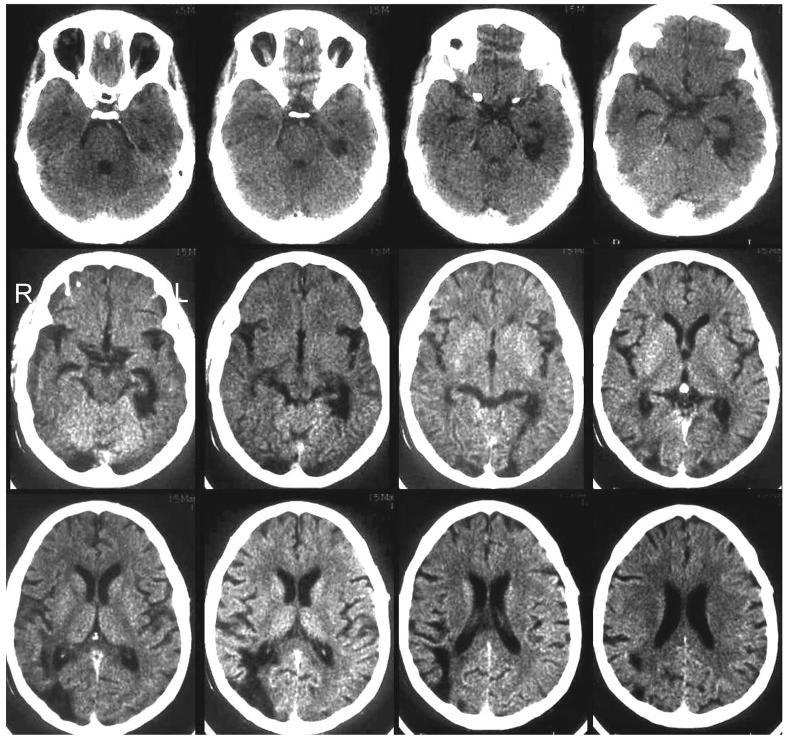

CT scanning conducted within 24 hours of the initial hospitalization indicated bilateral infarcts in non-homologous regions affecting the left ventral and right dorsal visual streams. More specifically, lesions were present in the ventral regions of the left hemisphere, particularly the hippocampal region and lingual gyrus. Lesions were also present in the dorso-lateral posterior regions of the right hemisphere, including the dorso-lateral occipital gyri, inferior parietal lobule, junction of supramarginal and superior temporal gyri, and junction of angular and middle temporal gyri. This pattern did not change 2 months later as revealed by a second CT scan session (Figure 1).

Figure 1.

CT scan demonstrating bilateral lesions. On the left, the lesion involves the hippocampus, the lingual gyrus and white matter within the temporal lobe. On the right, the lesion involves the dorso-lateral occipital gyri, the inferior parietal lobule including the junction area between the supramarginal and superior temporal gyri, and the junction area between the angular and middle temporal gyri.

Initial neuropsychological investigations of basic visual function

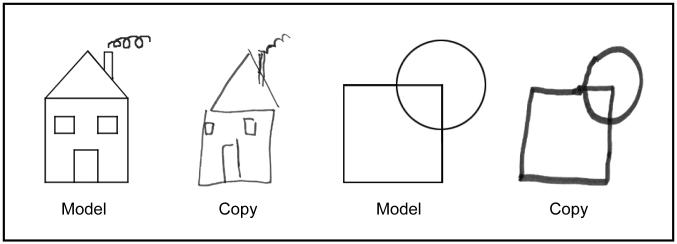

We first tested SE while he was still on the rehabilitation ward, about 2 months after stroke. Before experimental testing began, consent was obtained from SE in accordance with the Declaration of Helsinki (1964). SE had no difficulty visually matching geometric shapes to exemplars (100% accurate; 20/20). He could also easily copy and name simple figures (Figure 2).

Figure 2.

Examples of SE's ability to copy relatively simple shapes.

We next tested him on the Efron shape test (Efron, 1968), which presents pairs of two-dimensional shapes controlled for overall brightness and total surface area, and is considered a sensitive test of apperceptive agnosia. The patient's task is to report whether the two items in each pair have the same or a different shape. SE was 100% accurate (30/30).

SE's performance on a test of orthographic shape recognition from the Psycholinguistic Assessments of Language Processing in Aphasia (PALPA; Kay, Lesser & Coltheart, 1992) was also perfect (25/25 trials), and his spontaneous naming of the letters confirmed that single-letter identification was intact.

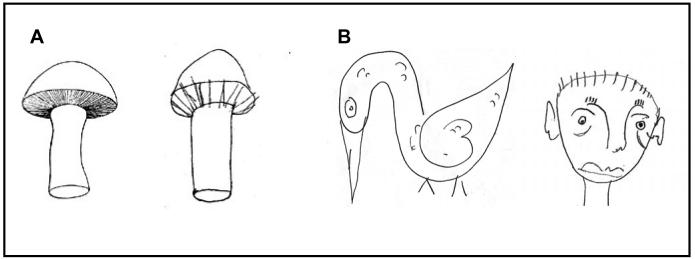

In contrast to his excellent performance with simple visual shapes, SE experienced considerable difficulty when his visual perception was tested with drawings of complex objects. He identified only 13 of 50 line drawings from the Boston Naming Test (BNT), which were selected to be free of cultural bias. Notably, his errors were visually, but not semantically or phonologically, related to the line drawing (e.g., a mushroom was mistaken for a parachute). Further tests ruled out variants of anomic or optic aphasia. For example, phonetic prompting did not facilitate naming.

In addition, for every item on the Boston Naming Test that he failed to identify, we examined whether SE could access semantic information about it. For example, we asked SE to pantomime the use of the tools in pictures that he could not identify. We also probed whether SE had any related semantic knowledge of failed items by asking questions such as where such an item could be found, or whether it could be held in a person's hand. None of these in-depth inquiries revealed access to semantic information for objects that were not overtly identified. Nonetheless, when SE failed to identify an item, he could provide an accurate verbal description of its overall shape, and he displayed preserved drawing abilities, both when drawing from memory and when copying images (Figure 3). Together, these tests indicate that SE's object recognition difficulty did not reflect a linguistic impairment.

Figure 3.

A. The mushroom was misidentified as a parachute, but accurately copied. B. Drawing from memory of a bird and a face. Note the many details included in both the copy and the drawing from memory.

Additional evidence supporting the visual, non-aphasic nature of SE's errors was obtained from his results in the PALPA test (subtest 47) (Kay, Lesser & Coltheart, 1992). In this test, a target word-label is read aloud and the patient is expected to point to the target from among five line drawings. Importantly, visual, semantic and unrelated distracters appear on each test trial. Overall, SE was far better on this test than on the BNT, with an overall success rate of 77%. Apparently, identification was easier for him when seeking a predefined item. Examination of his error profile confirmed that 78% of his errors were visually related (e.g. identifying a bulb as a bell) as opposed to only 11% of his errors being semantically related (e.g. identifying a key as a lock). The remaining 11% of his errors were neither visually nor semantically related.

Clinically, the most debilitating aspect of SE's condition was his inability to recognize faces. Indeed, he never recognized any of the staff members by face, but easily did so by voice. His dense prosopagnosia extended to premorbidly known faces: he failed to recognize pictures of famous Israeli politicians, family members or even himself. Consistent with this, he was severely impaired (score = 27) on the Benton Test of Facial Recognition (Benton et al., 1983), with errors occurring even on the simplest plates (1-6). On the rare occasions when he succeeded, he relied solely on an analytic, part-by-part comparison between the target and probe faces.

An integrative deficit: clinical and experimental evidence

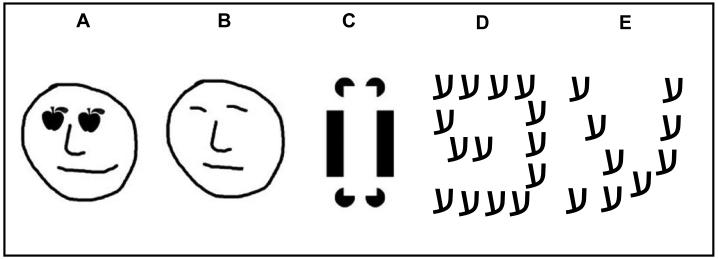

SE's deficits conformed to the criteria for integrative agnosia (Behrmann, 2003). First, as evidenced by his perfect performance on Efron's test, he had well-preserved low-level visual processes and was able to discriminate among forms that placed minimal demands on integration. Second, he was unable to integrate parts into coherent gestalts, although the parts were perceptually available to him. That is, when SE was confronted with a series of ten schematic face stimuli in which the eyes were replaced by two simple identical objects (e.g., two apples; Figure 4A), he recognized the objects presented in place of the eyes but was unable to recognize the global stimuli as faces (0/10). Healthy participants immediately recognized such stimuli as face-like forms (Bentin et al., 2006), suggesting that SE was not able to grasp the global shape. For example, he described the face in Figure 4A as “here are some fruits (pointing to eyes) …judging by their shape, they are apples…and these (points to nose and mouth) might be branches …and this here (points to circular outline of face) might be a plate”.1 Finally, his recognition errors suggested that he was able to extract some, but not all, of the relevant information from the display. For example, he identified the line drawing of a harmonica as a computer keyboard, an error characteristic of patients with integrative agnosia (Behrmann, 2003).

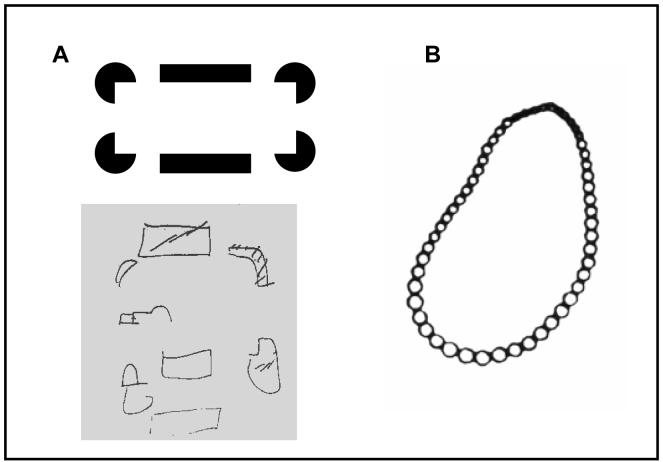

Figure 4.

Examples of stimuli used to test visual integration. A. Faces with “object eyes”; SE identified the apples but not as eyes in the face. B. Schematic faces; SE did not identify the stimulus as a face. C. Kanizsa figures; SE could describe the parts but failed to see the illusory contours. D. Incongruent and E. congruent hierarchical global-local Hebrew letters; SE systematically identified the local letters but was at chance when asked to identify the global letters (see also Experiment 1).

We verified that SE's failure in this task resulted from a breakdown in integration rather than from pathological capture of attention by the local objects, by asking him to recognize regular schematic faces in which no local objects were involved (see Figure 4B). SE failed to recognize the face in this condition as well (0/10). An integration deficit was similarly observed when SE was presented with representative Kanizsa figures (Figure 4C). Although he could give a detailed description of the components, he completely failed (0/4) to perceive the shape defined by the illusory contours, even when it was explicitly pointed out to him. This performance paralleled his poor identification of the global shape in a computerized task with Navon hierarchical letters (Figure 4D-E; described in detail in the next section). SE failed to consciously perceive the global letter even when it was explicitly pointed out to him; instead, he reported only the local letters. His overall accuracy in the global letter task was at chance (see Experiment 1, Results).

SE's impaired integration was also evident in his attempt to copy the Kanizsa illusory rectangle (Figure 5A), as well as in his description of the line drawing of a necklace (Figure 5B) as a “bunch of balls attached one to the other”.

Figure 5.

Additional evidence for impaired visual integration: A. Despite SE's spared copying ability (Figure 3) his copy of the Kanizsa illusory rectangle was completely disintegrated. B. He identified the line drawing as “a group of many balls attached to each other”, but failed to recognize it as a necklace.

Overall, these clinical observations are consistent with a description of integrative agnosia, i.e., difficulty in binding local visual features into a unitary, coherent perceptual whole. However, peculiar aspects of SE's perceptual performance provided additional insights into his visual impairment. When SE was explicitly told that the sketch in Figure 4B depicted a face, the integrated image occasionally seemed to ‘emerge’ for him. When such ‘emergence’ occurred with face stimuli, it was evident from his immediate ability to point accurately to the inner facial features and to note that the ears were missing from the schematic faces. At times, this phenomenon occurred spontaneously, with both face and object stimuli. Such occasional emergences raised the intriguing possibility that the visual images seen by SE were sufficiently integrated to delineate the object, yet this information remained at a sub-threshold level, short of explicit visual identification. In other words, SE's deficit might stem from an inability to consciously access an otherwise integrated percept. This hypothesis was examined in the following set of experiments.

Experiment 1

In this experiment, SE was presented with a Hebrew adaptation of hierarchical letter stimuli (Navon, 1977) and was instructed to identify and respond to the local or global level in different blocks of trials. As reported above, SE failed to overtly identify the global hierarchical letters. However, if the stimulus was implicitly integrated into a global form, then responses to local letters should be slower in the incongruent case (that is, when the global letter differs from the local letter) than in the congruent case.

Methods

Stimuli

Global letters subtended a visual angle of approximately 6.75°×3.55° at a viewing distance of 50 cm. Local letters were approximately 4 mm in height and 3 mm in width (visual angle 0.57°×0.34°). The width and height of the global letters were constructed from four and five local letters, respectively. All stimuli were presented as black on a white background. The Hebrew letters “samech” (“ס”) and “caph” (“כ”) were used in one experimental run and the letters “peh” (“פ”) and “ayin” (“ע”) in a second (see example in Figure 4D and 4E). Each pair of letters combined to form four different stimuli, resulting in two main congruency conditions. Stimuli were presented on a 19-inch monitor (800×600 graphics mode).
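The stimulus sizes above are given both in millimetres and in degrees of visual angle. For readers who wish to reproduce the displays, the minimal Python sketch below applies the standard conversion θ = 2·arctan(s / 2d) and its inverse. It is our illustration rather than part of the original procedure, and the function names are hypothetical; with the stated 50 cm viewing distance, the 3 mm local-letter width comes out at about 0.34°.

```python
import math


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle (degrees) subtended by an object of a given physical size
    viewed at a given distance: theta = 2 * atan(size / (2 * distance))."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


def size_for_angle_mm(angle_deg: float, distance_mm: float) -> float:
    """Inverse conversion: physical size needed to subtend a given angle."""
    return 2 * distance_mm * math.tan(math.radians(angle_deg) / 2)


if __name__ == "__main__":
    viewing_distance = 500.0  # 50 cm, as stated in the Methods
    # Local-letter width of 3 mm; prints roughly 0.34 deg
    print(f"{visual_angle_deg(3.0, viewing_distance):.2f} deg")
    # Physical extent corresponding to the 6.75 deg global-letter dimension
    print(f"{size_for_angle_mm(6.75, viewing_distance):.1f} mm")
```

Because the conversion depends on viewing distance, the same functions can be used to check any other stimulus dimension, provided the distance at which it was viewed is known.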

Procedure

In a series of preliminary trials we determined that an exposure time of 450 ms was optimal. At this duration, SE correctly reported large and small letters presented individually at fixation. Each run started with a short training session in which 24 single letters and 24 congruent hierarchical letters were presented, and SE reported the letter identity by button press. Speed and accuracy were equally emphasized. Training continued until SE became familiar with the task. Following training, the experimental instructions appeared on the screen again and were read aloud. In the first block, SE was asked to identify and respond to the local letters. In the second block, he was asked to identify and respond to the global letters. Each block contained 128 trials, 64 congruent and 64 incongruent (i.e., 256 trials per task across the two runs). An inter-trial interval of 100 ms with a blank screen separated the response from the next trial. Breaks were given as required throughout the experiment. Reaction time (RT) and accuracy were recorded for each trial using a parallel response box connected to a Pentium 4 computer. E-Prime was used for data collection and experimental control.

Results

Since no significant differences were found between runs, the results of the two runs were collapsed. All responses slower than 2000 ms were excluded from the analysis, leaving 221/256 responses at the global level and 240/256 responses at the local level. These responses were distributed nearly equally between the congruent and incongruent conditions.

Global letter task

As previously mentioned, SE experienced great difficulty in identifying the global letter, even when it was explicitly pointed out to him. Accordingly, his accuracy on the global task was very poor: SE was correct on only 47.6% of the “global” trials, and a binomial test confirmed that this performance was at chance level (p = .49). Moreover, 61% of the correctly identified global letters were in the congruent condition; that is, some of these “correct” global-letter identifications could have been based on the local letters.
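Whether accuracy on a two-alternative task differs from chance (p = .5) can be checked with an exact two-sided binomial test. The minimal sketch below uses only the Python standard library and sums the probabilities of all outcomes no more likely than the observed one, which is one common convention for the two-sided p-value; the software actually used in the original analysis is not specified. The example count (105 correct of 221 retained trials, about 47.5%) is hypothetical and only approximates the reported 47.6%.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent Bernoulli(p) trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binom_test_two_sided(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: sum the probabilities of every outcome
    that is no more likely than the observed outcome k."""
    observed = binom_pmf(k, n, p)
    tolerance = 1e-7 * observed  # absorb floating-point ties between outcomes
    total = sum(
        prob
        for prob in (binom_pmf(i, n, p) for i in range(n + 1))
        if prob <= observed + tolerance
    )
    return min(1.0, total)


if __name__ == "__main__":
    # Hypothetical count: 105 correct of 221 retained global trials (~47.5%).
    print(round(binom_test_two_sided(105, 221), 2))
```

For a chance level of .5 the distribution is symmetric, so this convention gives the same result as doubling the smaller tail probability.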

Local letter task

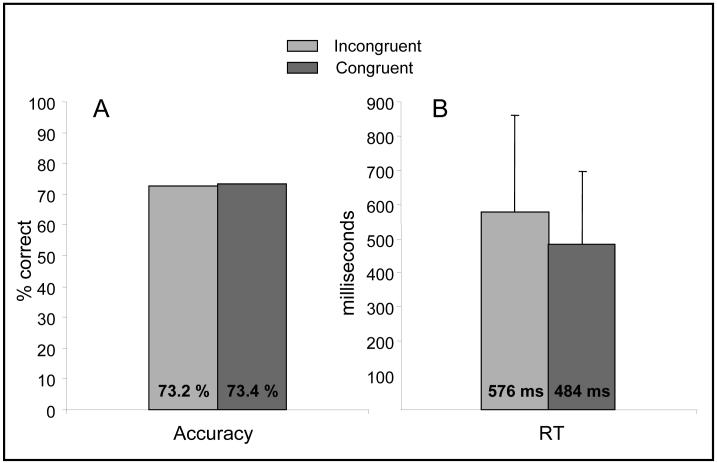

Overall, SE responded correctly on 168 trials (73.3% after eliminating outliers): 85 trials (73.2%) in the incongruent condition and 83 trials (73.4%) in the congruent condition. A binomial test confirmed that his overall accuracy in the local task was higher than expected by chance (p < .001). In contrast to accuracy, RTs differed between the congruent and incongruent conditions, consistent with global interference in performing the local task (Figure 6). SE was 92 ms faster to respond to the local letters when they were congruent than when they were incongruent with the global form [t(166) = 2.38, p = .018]. Such a congruency effect lies near the high end of the distribution of normal congruency effects reported in the literature. For example, using the same physical parameters with a group of 12 participants in another study, we observed a significant global-to-local congruency effect of 50 ms (SD = 32 ms; Bentin et al., 2007). In this control group, identification accuracy in the incongruent conditions was better for global (93.9%) than for local letters (85.3%).
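The comparison above places SE's 92 ms congruency effect against a control mean of 50 ms (SD = 32 ms, n = 12). One way to quantify such a comparison, which is not an analysis reported in the original study, is the modified t-test of Crawford and Howell (1998) for comparing a single case with a small control sample: t = (x − m) / (s·√((n + 1)/n)), with n − 1 degrees of freedom. The Python sketch below computes that statistic and a plain z-score from the summary values given in the text; the function names are our own, and converting t to a p-value (which needs a t-distribution CDF, e.g. from SciPy) is deliberately left out to keep the example dependency-free.

```python
import math


def z_score(case: float, control_mean: float, control_sd: float) -> float:
    """Standard score of a single case relative to a control distribution."""
    return (case - control_mean) / control_sd


def crawford_howell_t(
    case: float, control_mean: float, control_sd: float, n: int
) -> tuple[float, int]:
    """Modified t-test (Crawford & Howell, 1998) comparing one case with a
    small control sample of size n; returns (t, degrees of freedom)."""
    t = (case - control_mean) / (control_sd * math.sqrt((n + 1) / n))
    return t, n - 1


if __name__ == "__main__":
    # Summary values from the text: SE's effect vs. the 12-participant controls.
    print(f"z = {z_score(92, 50, 32):.2f}")
    t_value, df = crawford_howell_t(92, 50, 32, 12)
    print(f"t({df}) = {t_value:.2f}")
```

With these inputs, z is about 1.31 and the modified t(11) is about 1.26, i.e., SE's effect exceeds the control mean by roughly 1.3 control standard deviations.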

Figure 6.

SE's accuracy (A) and mean response time (B) in the local-letter identification task. Although there was no global-to-local interference in accuracy, responses were significantly faster in the congruent than the incongruent condition.

Discussion

SE could not explicitly identify the global letter of a “Navon” hierarchical figure. Nevertheless, the very same stimuli exerted a typical pattern of global interference when he identified the local letters. It appears that what was absent was conscious access to the integrated form, not the integration itself. Although suggestive, these results cannot be extrapolated beyond the identification of global letter shapes. We were therefore motivated to explore whether evidence of covert integration might be obtained with other complex visual stimuli, such as object line drawings. This question was pursued in the following experiment.

Experiment 2

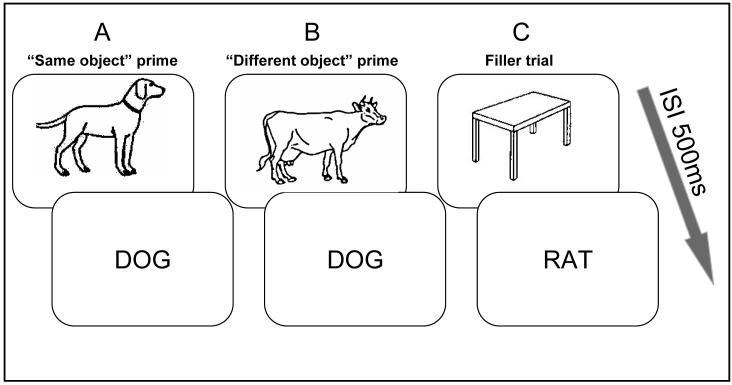

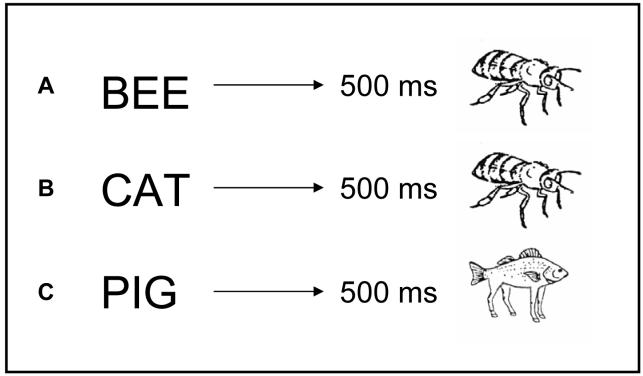

In this experiment, we examined whether SE might display evidence of covert integration of line drawings that he failed to identify. For this purpose, we designed a semantic word categorization task in which SE was asked to categorize words as denoting animate beings or inanimate objects. Critically, on each trial the target word was preceded by a line drawing that SE failed to recognize. These unidentified drawings depicted either the object denoted by the word or a different object from the same animacy category (Figure 7). If covert integration was sufficient to implicitly identify the line drawings, his semantic categorization responses should be faster when prime and target denoted the same object than when they denoted different objects.

Figure 7.

Example of stimuli used in the picture-word priming task; A. “Same” trial; B. “Different” trial; C. Filler trial. SE was instructed to make a living/nonliving decision when the word appeared (see text).

Methods

Stimuli

The prime stimuli included 72 line drawings (36 animate and 36 inanimate), all taken from the Snodgrass database (Snodgrass & Vanderwart, 1980). They were black on a white background, with an average size of 10 cm by 15 cm (11° × 17°). All drawings were associated with words spelled with no more than four Hebrew letters2. This restriction was imposed because of SE's difficulty reading words longer than 4-5 letters.

Task and Experimental Design

In this experiment we compared RTs to target words presented in the two prime-target conditions during a semantic categorization task. In the “same” condition, the target word was preceded by a line-drawing prime representing the same object (e.g. the word “DOG” preceded by a line-drawing of a dog). In the “different” condition, the same word was preceded by a line-drawing of a different object from the same animacy category (e.g. the word “DOG” preceded by a drawing of a cow; Figure 7). Hence, in the critical conditions the prime and target were both either animate or both inanimate and each target appeared once in the “same” and once in the “different” condition. The order of appearance for same and different trials was randomized to prevent order effects. Since critical primes and targets always belonged to the same category, a third condition was introduced in which the line-drawing prime and the word target belonged to different categories (e.g. a picture of an animal followed by a “non-living” word or vice versa). These filler trials were included to prevent biased response preparation on the basis of prime identity alone, and the targets were not repeated. Each experimental run included 48 critical word pairs in which the same word was preceded by either a same object or a different object prime. The test stimuli included 24 animate images and 24 inanimate images, while the remaining 24 images served as fillers.
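The design can be made concrete as a trial list. The Python sketch below is hypothetical throughout: the item names, the rule for choosing a “different” prime (a random other item from the same animacy category), and the filler pairs are assumptions for illustration. The text specifies only that each target appears once after a same-object prime and once after a different same-category prime, that fillers pair primes and targets from opposite categories, and that trial order is randomized.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    prime: str      # name of the line drawing shown as the prime
    target: str     # word that must be categorized as living/nonliving
    condition: str  # "same", "different" or "filler"


def build_trials(animate, inanimate, filler_pairs, seed=0):
    """Build one hypothetical run: every target appears once after its own
    picture ("same") and once after another picture from the same category
    ("different"); filler_pairs are (prime, target) pairs from opposite
    categories. Trial order is shuffled with a seeded generator."""
    rng = random.Random(seed)
    trials = []
    for category in (animate, inanimate):
        for target in category:
            others = [item for item in category if item != target]
            trials.append(Trial(target, target, "same"))
            trials.append(Trial(rng.choice(others), target, "different"))
    trials.extend(Trial(prime, target, "filler") for prime, target in filler_pairs)
    rng.shuffle(trials)  # randomize the order of all trial types
    return trials


if __name__ == "__main__":
    animate = ["dog", "cow", "cat", "bear"]        # hypothetical items
    inanimate = ["belt", "key", "bell", "lamp"]    # hypothetical items
    fillers = [("horse", "chair"), ("cup", "fish")]  # cross-category pairs
    for trial in build_trials(animate, inanimate, fillers):
        print(trial)
```

Seeding the generator makes a given run reproducible while still randomizing the order of same, different and filler trials.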

Experimental procedure

Phase 1: Determining that SE cannot identify the priming stimuli

Prior to the experimental session, the priming stimuli were presented one by one (exposure duration = 300 ms) and SE was asked to identify the drawings. Under these conditions SE could easily see and describe the shape of stimuli piece-by-piece, yet he was unable to identify a single line drawing.

Phase 2: Priming experiment

Each trial began with the central presentation of the prime on the screen for 300 ms, followed by a blank screen for 500 ms. The target word then appeared and remained on the screen until the categorization response was made. The next trial began 500 ms after responding. Two identical runs of the experiment were carried out on separate days, and produced very similar results.

Results

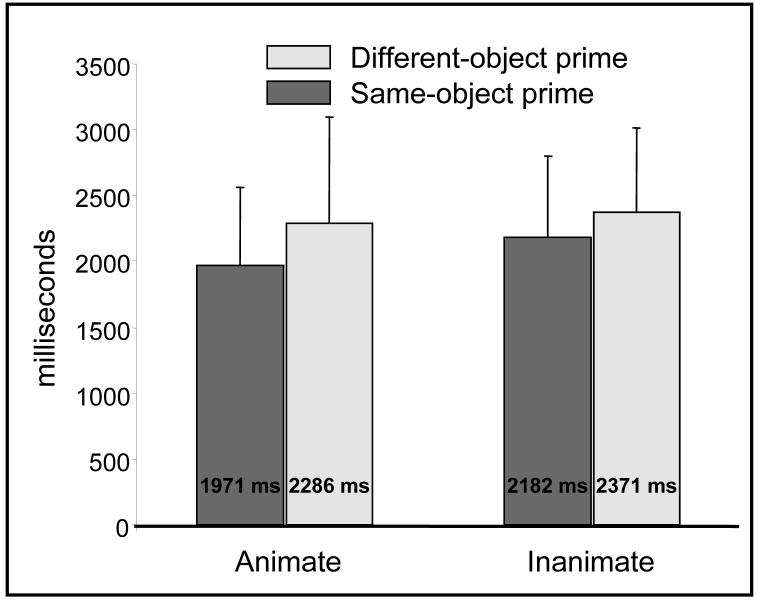

Word categorization accuracy on both runs was at ceiling, with only two errors. Mean RTs in the “same” and “different” priming conditions were compared after collapsing across runs. RTs more than 2 SD from the mean were excluded, leaving a total of 44 pairs in the animate condition and 42 pairs in the inanimate condition. Critically, SE's mean RT in the same condition (2076 ms; SD = 608 ms) was 252 ms faster than in the different condition (2328 ms; SD = 729 ms). Priming was observed for both animate and inanimate targets (Figure 8). A repeated-measures ANOVA was performed with prime condition (same/different) and animacy as factors. RTs to animate stimuli were faster (2128 ms) than to inanimate stimuli [2277 ms; F(1,41) = 9.7, p < .01], the main effect of prime condition was significant [F(1,41) = 7.3, p < .01], and there was no interaction [F(1,41) < 1.0].

Figure 8.

SE's response times to animate and inanimate words in the “same” and “different” priming conditions. The error bars denote 1 SD within each condition.

Discussion

The significant priming effects observed in this experiment showed that there was sufficient information in the visual prime to facilitate semantic categorization of the target word. However, SE's overt responses to the same visual stimuli demonstrated that this integrated perceptual representation did not provide his semantic system with sufficient information for overt identification. Furthermore, priming operated within each animacy category, so the priming effect could not simply be explained by coarse physical differences between animate and inanimate items.

These results could be evidence that an integrated representation was formed by the perceptual system but was not accessible to consciousness. According to this account, the target word activated a semantic representation, which accessed the integrated perceptual image top-down, inducing backward priming. However, an alternative interpretation is that SE's faster performance in the same-object condition reflected priming by distinctive features. According to this account, the prime may not have been a fully integrated form, and the target words could have activated links between distinctive features and coherent memory representations. To explore this alternative, in Experiment 3 we tested whether distinctive features per se were sufficient to produce the priming effects.

Experiment 3

To test whether SE's priming effects could have resulted solely from activation by distinctive features in the drawings, we retested him in a new condition. The task was identical to Experiment 2, but now all the drawings used as primes were fragmented into their constituent distinctive parts. Each new drawing was designed so that its parts maintained recognizable distinctive features (e.g., a cat broken down into head, body and feet), but the features were scrambled so that the overall gestalt was broken. If the results observed in Experiment 2 reflected successful integration capable of supporting covert identification, then the priming effect should not appear with scrambled distinctive features. However, the priming effect should persist if it resulted from activation of distinctive features.

Since this was a novel priming task not previously described in the literature, we added a control group to confirm that scrambled images can create a priming effect in healthy participants.

Methods

Stimuli

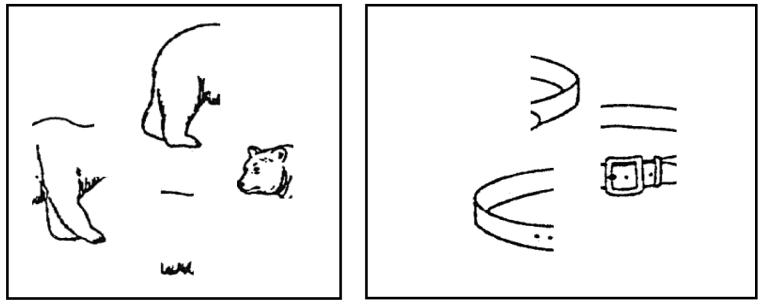

Stimuli were identical to those in Experiment 2, except that all the line drawings were now fragmented (Figure 9).

Figure 9.

Examples of scrambled stimuli used in the animate condition (the bear) and inanimate condition (the belt) in Experiment 3.

Experimental procedure

The experimental procedure was identical to that described in Experiment 2. After the experiment SE's identification of the primes was assessed by presenting the line drawings one by one for 300 ms. First the fragments were presented and then the original intact drawings.

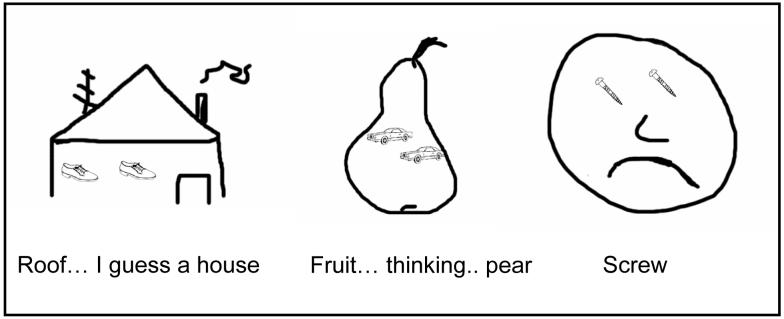

Experiment 3 was administered 9 months after Experiment 2. We therefore first verified that SE still had problems integrating visual stimuli. For this purpose, in addition to line drawings of objects, houses or schematic faces, we also showed him a set of hierarchical stimuli, including drawings of faces and houses in which objects substituted for essential local information (e.g., a face with two little objects substituting for the eyes, or a house with two little objects substituting for windows; see examples in Figure 10). To control for the added complexity of such stimuli, we also presented line drawings of objects containing little objects within the global contour, but in which perception of the global shape did not depend on interpreting the local stimuli (e.g., two little cars within a fruit; Figure 10). SE was able to identify regular global shapes (e.g., a schematic face or a schematic house) as well as the complex control stimuli. In contrast, as in the previous testing session (2 months after his stroke), he was able to identify the local objects embedded in the hierarchical stimuli but was baffled by the outer contour and could not make out the global configuration.

Figure 10.

Examples of hierarchical and superimposed line drawings that SE was asked to identify 9 months after his stroke. He was able to identify the global pear, whose meaning is independent of the local cars. He was also able to identify the house (based on the roof) but could not interpret the local shoes as its windows. He was still unable to identify the face from its global structure.

Results

Unlike in Experiment 2, SE was able to identify all the intact drawings. In contrast, he was not able to identify any of the fragmented drawings. Importantly, under identical viewing conditions, the 12 control participants identified an average of 84.4% of the fragmented items (range 69.1%–94.5%).

The assessment of putative priming effects was based on pairing the RTs to identical stimuli presented in the “same” and “different” conditions. Four pairs were excluded from this analysis because at least one of the RTs was longer than the condition mean + 2 SD. For SE, the priming effects found previously were entirely eliminated under the conditions of this experiment, indicating that the account attributing the earlier priming to distinctive features alone was not substantiated. Semantic categorization of the target words was perfect and was not influenced by whether the prime and target were identical. Indeed, mean RT in the “same” condition (2085 ms; SD = 500) was almost identical to mean RT in the “different” condition (2095 ms; SD = 759), a difference that was clearly not significant [t(43) = 0.089; ns]. In contrast, the 12 control participants showed a significant priming effect of 21 ms [708 ms and 687 ms for the “different” and “same” conditions, respectively; t(11) = 3.3, p < .01], and 10 of the 12 showed a positive priming effect.
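The pairing and exclusion rule described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' analysis script: it assumes each item contributes one RT per condition, that the cutoff is each condition's mean + 2 SD computed over all items before exclusion, and that a pair is dropped if either of its RTs exceeds its condition's cutoff. The function names are hypothetical, and the toy numbers are invented for illustration; they are not SE's or the controls' data.

```python
from math import sqrt
from statistics import mean, stdev


def exclude_slow_pairs(rt_same, rt_diff, n_sd=2.0):
    """Keep item pairs in which neither RT exceeds its condition mean + n_sd * SD.

    Hypothetical helper: each cutoff is computed once per condition, over all items.
    """
    cut_same = mean(rt_same) + n_sd * stdev(rt_same)
    cut_diff = mean(rt_diff) + n_sd * stdev(rt_diff)
    return [(s, d) for s, d in zip(rt_same, rt_diff)
            if s <= cut_same and d <= cut_diff]


def paired_t(pairs):
    """Paired t statistic on (same, different) RT pairs; returns (t, df).

    A positive t means RTs were shorter in the "same" condition (priming).
    """
    diffs = [d - s for s, d in pairs]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Illustrative toy data only: the last "same" RT is an outlier and its pair is dropped.
same = [500, 510, 520, 530, 540, 550, 560, 570, 580, 5000]
diff = [520, 525, 545, 550, 570, 565, 590, 585, 600, 610]
kept = exclude_slow_pairs(same, diff)
t, df = paired_t(kept)
```

With this rule, the degrees of freedom equal the number of retained pairs minus one, which is how 44 retained item pairs would yield the t(43) reported for SE.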

Discussion

The results of Experiment 3 demonstrate that, for SE, distinctive features by themselves were not sufficient to produce a priming effect in a word categorization task. These results are striking given that, owing to improvements in his condition, SE could now identify the integrated line drawings used as primes in Experiment 2. However, his inability to identify the fragmented drawings underscores the integrative deficits that endured despite his impressive recovery. It seems unlikely that SE would have performed any better on this task had he been tested several months earlier. The question remains why the same top-down backward priming that presumably supported covert identification of integrated line drawings in Experiment 2 did not produce priming between scrambled fragments and semantic concepts. A reasonable answer is that the top-down memory representation activated by the word was an integrated whole that found no match in the scrambled features of the prime. This account is supported by SE's correct drawings of objects from memory even when he was unable to identify line drawings of objects. The next experiment was designed to explore further how top-down influences may affect his perception of visual stimuli.

Experiment 4

This experiment was conducted while SE was still densely agnosic, at the same time as Experiment 2. As previously described, when given the concept, SE was sometimes able to identify the object (recall his occasional ability to recognize a schematic face after it was pointed out to him). If the priming results observed in Experiment 2 were due to similar processes, they might not have reflected sudden integration but rather sudden access to an already integrated representation. If so, this effect should be influenced by top-down perceptual expectancies, and performance should depend on the congruency between the top-down expectancy and the overtly recognized percept. Specifically, we hypothesized that high-level perceptual expectancies would influence conscious access to an integrated representation.

To examine how top-down expectancies might influence SE's visual object-identification, we designed a task in which line drawings were to be categorized as possible or impossible objects (inspired by Riddoch and Humphreys, 1987). Critically, the object-targets were preceded by words that denoted either the same as or a different object from the target. (Note that impossible objects only appeared as fillers to prevent response biases based on the prime alone). We were interested in whether SE's categorization of identical objects would differ as a function of the word prime. Specifically, we expected performance to be hindered when the word prime denoted a different object than the one represented by the target. Conversely, word primes denoting the same object as the target were expected to enhance categorization accuracy and processing speed.

Stimuli

The stimuli were 44 drawings of possible objects from the Snodgrass and Vanderwart (1980) set; none of them had been used in Experiment 2. In addition, we constructed 44 impossible objects by seamlessly combining drawings of two meaningful parts (Figure 11). All line drawings appeared in black on a white background, with an average size of 10 cm × 15 cm (11° × 17°). Because of SE's difficulty reading longer words, the Hebrew word primes were limited to four letters.
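Stimulus size s and viewing distance d are related to visual angle by θ = 2·arctan(s / 2d). The Python sketch below checks the reported angles under an assumed viewing distance of about 50 cm; that distance is our assumption, chosen because it reproduces the reported 11° × 17°, and it is not stated in this section.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by a stimulus of size_cm viewed at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Assumed viewing distance of 50 cm (hypothetical; not given in this section).
width_deg = visual_angle_deg(10, 50)   # ~11.4 degrees
height_deg = visual_angle_deg(15, 50)  # ~17.1 degrees
```

The exact arctangent form is used rather than the small-angle approximation s/d, which would overestimate the larger (17°) dimension slightly.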

Figure 11.

Examples of primes and targets in the word-picture priming task: A. “Same” condition, object; B. “Different” condition, object; C. Filler trial, non-object. SE was instructed to make an object/non-object discrimination when the picture appeared.

Task

In this experiment SE was instructed to categorize the presented drawing in each trial as possible or impossible (note that he was not required to name the actual object). We compared his performance with possible objects in two conditions. In the “same” condition, the drawing was preceded by a matching word prime (e.g. the word “Shoe” followed by a drawing of a shoe). In the “different” condition, the same shoe drawing was preceded by a non-matching word prime (e.g. “Bowl”). Filler trials with impossible objects were randomly interspersed. The prime words in the filler conditions were completely unrelated to the parts making up the impossible objects.

Experimental procedure

Phase 1: Determining that SE cannot identify the target stimuli

Before the experimental session, SE's identification of the possible-object targets was tested with no time limit. Under these conditions, he could identify only 2 of them, and only the remaining items were included in Phase 2.

Phase 2: Priming experiment

Each trial began with the central presentation of a prime word for 1500 ms, followed by a blank screen. SE was instructed to read the word; this long presentation was necessary for him to do so. After 500 ms, the target stimulus appeared and remained on the screen until SE's categorization response was recorded. The next trial began 500 ms after his response. Because of a technical error, the experiment was run twice, resulting in an uneven number of trials in each experimental condition (61 “same” and 42 “different” trials).³
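The trial timing above implies a prime-to-target stimulus onset asynchrony (SOA) of 1500 + 500 = 2000 ms. The Python sketch below makes the trial structure explicit; the event names and the data structure are hypothetical illustrations, not the presentation software used in the study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    name: str
    duration_ms: Optional[int]  # None = response-terminated (no fixed duration)


# One trial of the word-picture priming task, as described in the procedure.
TRIAL = [
    Event("prime word", 1500),
    Event("blank screen", 500),
    Event("target drawing", None),       # stays on screen until the categorization response
    Event("inter-trial interval", 500),  # next trial starts 500 ms after the response
]


def onsets_until_target(events):
    """Return onsets (ms from prime onset) of each event up to and including the target."""
    onsets, t = {}, 0
    for ev in events:
        onsets[ev.name] = t
        if ev.duration_ms is None:
            break  # later onsets depend on the (variable) response time
        t += ev.duration_ms
    return onsets


onsets = onsets_until_target(TRIAL)
soa_ms = onsets["target drawing"] - onsets["prime word"]  # 2000 ms
```

Onsets are only computed up to the target because every later event is locked to SE's response, whose latency varied from trial to trial.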

Results

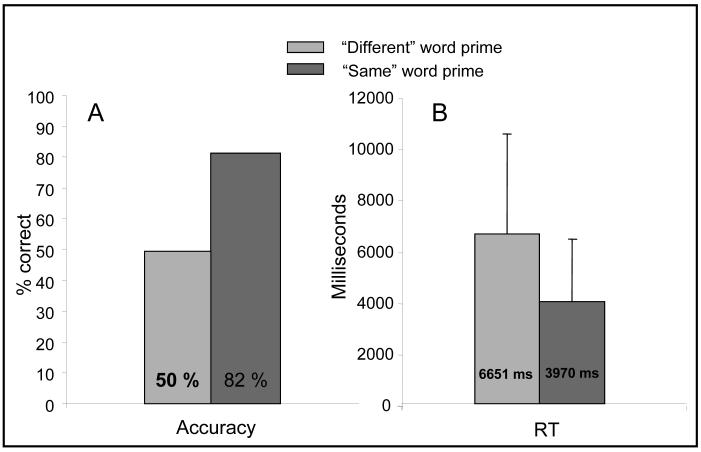

SE's discrimination between possible and impossible objects was very slow and laborious, once again suggesting a part-by-part analysis. He correctly categorized only 29/42 (69%) of the impossible objects, with RTs that often exceeded 20 seconds (M = 13,572.0 ms, SD = 10,431.1 ms). Categorization of possible objects, the critical condition for the present study, was clearly affected by the priming condition.

The effect of the word prime on SE's perception is demonstrated by his higher accuracy and faster RTs in the “same” than in the “different” condition (Figure 12). When possible objects were preceded by word primes denoting the same objects, categorization accuracy reached 82% (50/61 targets). However, when different words served as primes, performance declined sharply, and categorization accuracy dropped to chance level, 50% (21/42) [χ²(1) = 11.84, p < .001; McNemar test].
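The accuracy comparison can be laid out as a 2 × 2 table of correct and incorrect responses (“same”: 50 correct, 11 incorrect; “different”: 21 correct, 21 incorrect). The text names McNemar's test, which is defined for paired observations; because the 61 “same” and 42 “different” trials are separate sets, the Python sketch below instead assumes a Pearson chi-square test of independence without continuity correction. On these counts it gives about 11.87, close to the reported value, but how the published statistic was actually computed remains an assumption.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (df = 1, no continuity correction) for the table
        [[a, b],
         [c, d]]
    Returns (chi2, p)."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For df = 1, the chi-square upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: "same" and "different" primes; columns: correct and incorrect responses.
chi2, p = pearson_chi2_2x2(50, 11, 21, 21)  # chi2 ≈ 11.87, p < .001
```

The closed-form 2 × 2 expression avoids computing expected counts cell by cell; it is algebraically identical to summing (observed − expected)² / expected over the four cells.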

Figure 12.

SE's accuracy and reaction times (RT) to objects in the same and different priming conditions. The error-bars denote 1 SD within each condition.

We also compared mean RTs for object categorization between the “same” and “different” word-priming conditions. This analysis was carried out after removing errors and outlier responses (more extreme than 2 SD from each condition's mean), leaving 46 congruent (“same”) and 20 incongruent (“different”) trials.

As shown in Figure 12, SE responded almost 3 seconds faster in the congruent than in the incongruent condition [t(64) = 3.7, p < .001].⁴
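Because the “same” and “different” RTs were compared as independent samples (see footnote 4), the analysis can be sketched as per-condition trimming followed by a pooled-variance Student t-test. This Python sketch assumes that error trials have already been removed and that trimming drops RTs more than 2 SD from the condition mean; the function names are hypothetical, and it is not the authors' script. With 46 and 20 retained trials, the pooled df is 46 + 20 − 2 = 64, matching the reported t(64).

```python
from math import sqrt
from statistics import mean, stdev


def trim_2sd(rts, n_sd=2.0):
    """Drop RTs more than n_sd standard deviations from the condition mean."""
    m, s = mean(rts), stdev(rts)
    return [x for x in rts if abs(x - m) <= n_sd * s]


def student_t(a, b):
    """Independent-samples t with pooled variance; returns (t, df).

    A positive t means mean(b) > mean(a), e.g. a = congruent RTs, b = incongruent RTs.
    """
    na, nb = len(a), len(b)
    pooled = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    t = (mean(b) - mean(a)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


# Illustrative toy data only (not SE's RTs).
t, df = student_t(trim_2sd([1, 2, 3]), trim_2sd([4, 5, 6]))
```

The pooled-variance form is used because it gives df = n₁ + n₂ − 2, the value reported; a Welch test would give fractional degrees of freedom instead.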

Discussion

The results of this experiment clearly demonstrate an effect of expectancy on SE's visual encoding. When pictures were primed by matching words, SE's categorization was quite accurate and relatively swift. When pictures were primed by non-matching words, high error rates and extremely slow RTs occurred. Furthermore, his slow and laborious performance with impossible objects and the relatively high rate at which he categorized such stimuli as possible objects are, again, evidence for a part-based visual process on the one hand and for a residual ability for covert integration on the other. Since the parts were meaningful, correct categorization of impossible objects could have been based only on the integrated image. SE's performance shows that although he was often misled by the meaningful parts into thinking that the stimulus represented a possible object, his categorization of impossible objects was above chance. The absence of SE's ability to identify visual objects except through tedious serial construction reflects a deficit in accessing an integrated visual representation through bottom-up visual processing alone. However, top-down processing can help activate these visual representations through semantic links to bring them into perceptual awareness.

It is interesting to note that these results mirror clinical observations of SE while he was still on the hospital ward. For example, when he was seated in an office, he was quite proficient at recognizing a stapler, a pen, a computer and a bunch of files, as these all fit the expectancies of an office context. However, when asked to describe an unlikely object, the examiner's sunglasses placed on a shelf, he was unable to do so. The results of this experiment highlight the potential importance of top-down, contextual effects on SE's visual perception.

General discussion

Implicit processing and integrative visual agnosia

We have presented a patient (SE) who suffered from integrative visual agnosia following bilateral stroke affecting the right dorsal and left ventral visual pathways. Despite SE's severe overt difficulties with visual feature integration, we found converging evidence for implicit integration, at least sufficient to form links between objects he could not overtly identify and their meanings. Although several cases of integrative agnosia have been described in the literature (e.g., Behrmann et al., 2006; Delvenne et al., 2004; Butter and Trobe, 1994; Riddoch and Humphreys, 1987; Marotta and Behrmann, 2004), to our knowledge this is the first study to show covert integration in such cases.

During the past two decades, several studies have demonstrated covert processing in a host of other disorders such as blindsight, visual neglect, prosopagnosia, amnesia and aphasia (Farah & Feinberg, 1997; Squire & McKee, 1992; Milberg & Blumstein, 1981; De Haan, Bauer, & Greve, 1992; Vuilleumier, Schwartz, Clarke, Husain, & Driver, 2002). Closer to the scope of the present study, Peterson et al. (2000) showed that figure-ground organization was influenced by the orientation of objects that a visual agnosic could not identify, consistent with implicit but not explicit access to objects. Here we have extended these observations to include the integration of local features to form a unitary object, even though this unitary object may not be accessible consciously.

Implicit integration and integrative agnosia

The present results raise a major question. If SE shows evidence of implicit integration, why does he have such difficulty in explicitly perceiving integrated forms? Although various theories and mechanisms have been suggested to account for the overarching phenomenon of implicit processing, it is not clear which approach could be best suited to explain our findings with SE.

Schacter, McAndrews and Moscovitch (1988) suggested a straightforward explanation of implicit perception whereby recognition mechanisms are basically intact but have been disconnected from some other brain mechanism necessary for conscious perception (see also Gazzaniga, 1998). This basic approach also resonates in more recent computational models, such as the one proposed by Burton et al. (1991), which describes prosopagnosia as a partial disconnection between a face recognition unit and an identity node that elicits familiarity.

A very different explanation of covert/overt differences was proposed by Farah, O'Reilly, & Vecera (1993). They raised the possibility that covert recognition may simply reflect residual processing in partially damaged, but not totally destroyed, visual recognition systems. Conscious perception would then emerge only with high-quality representations. In this view, normal subjects might display similar overt/covert dissociations with degraded, brief, or masked stimuli. This apparently simple notion has proven quite powerful in explaining disorders that exhibit covert influences (Farah, O'Reilly and Vecera, 1993; Farah and Feinberg, 1997; Delvenne et al., 2004).

The disconnection and perceptual-representation accounts could be brought together as follows. Normal perceptual identification is assumed to result from top-down activation of a rich, unified memory representation interacting with bottom-up representations formed more automatically by the visual system. In SE's case, the perceptual representations were probably fragile, so the bottom-up links between the perceptual and memory systems were not sufficiently stable. However, the priming effects, together with the incongruence effects found with hierarchical letters despite his impaired ability to identify the global letters, demonstrate that top-down links persisted and could reinforce the perceptual representations at least to a level sufficient for implicit activation. Even so, the top-down links were beneficial, even for covert identification, only when the semantic memory image matched the bottom-up image formed by the visual system. When the stimulus fragments were scrambled, SE's perceptual impairment yielded a bottom-up image that did not match the top-down request. Healthy individuals were able to mentally manipulate and integrate the fragments, thus providing an anchor in the perceptual system that top-down representations could address. SE's impaired integration system did not allow this manipulation.

The anatomical distribution of SE's lesions is consistent with the global processing deficits associated with a model of hemispheric asymmetries in processing global and local levels of visual displays (Ivry & Robertson, 1998; Robertson & Ivry, 2000). The right hemisphere is biased toward processing the global level (i.e., it either processes it sooner or gives it more weight), and the left hemisphere is biased toward processing the local level. Both hemispheres can process both types of information but emphasize one level more than the other. This model is relevant to SE's lesion distribution because evidence from focal lesion groups shows that lesions in and around the right temporal-parietal junction (TPJ) produce global processing deficits, whereas similar lesions in the left hemisphere produce local processing deficits (Robertson, Lamb, & Knight, 1988). These differences occur even when primary visual functions are intact. SE's right-hemisphere lesion included the TPJ, whereas his left-hemisphere lesions did not; rather, his left lesion was in more ventral areas, the hippocampus and lingual gyrus. This would be an unusual situation for the visual system, because the processing streams within each hemisphere normally interact as processing proceeds. When these interactions are disrupted (as when the global-to-local processor of the right hemisphere is no longer functioning), the local-to-global processor of the left hemisphere may bias attention to such a degree that the global level is missed entirely. This pattern is evident in the acute stages after right unilateral stroke (see Delis, Robertson & Efron, 1986). It is as if the level preferred by the undamaged hemisphere captures so many attentional resources that access to other representations that may still exist is compromised. SE's left hemisphere may have captured attention to the local details in much the same way. He then could not disengage attention from those details quickly enough to identify the global form, except through a laborious piecemeal process or through top-down influences. In this view, SE could not access the integrated representation formed by intact processing streams because his perceptual awareness was flooded by the local features, preventing selection of a representation that was still achieved implicitly. Although plausible, this explanation is clearly post hoc and should be considered cautiously. SE's lesions were bilateral and not very focal, involving visually relevant areas in addition to the TPJ. Hence, the anatomical explanation proposed here is only one among several possible. Nevertheless, it is striking that SE's responses to Navon patterns were similar to those of other patients whose posterior right-hemisphere damage disrupts the pattern of response in a similar way.

In summary, the present case of integrative visual agnosia provides insight into possible visual mechanisms that could account for this syndrome. In particular, SE's ability to covertly identify line drawings that he was unable to identify explicitly suggests that his visual system was able to form representations that were sufficiently integrated to support covert identification, yet not rich or elaborate enough for overt identification. Further support for this conclusion came from the global interference observed when SE identified local letters, despite his inability to identify the global form. Together, these results suggest that in integrative agnosia the bottom-up connections between the perceptual and the conceptual visual processing systems may be disrupted while integrated representations continue to be formed. In addition, they suggest that intact top-down connections require integrated perceptual representations in order to bring covert images into perceptual awareness as a unified visual object.

Acknowledgments

We thank Anat Perry for help running Experiment 3 and for extremely competent assistance in running the control group for this experiment. This study was supported by an NIMH grant to LR and SB (R01 MH 64458) and an NEI grant to LR (EY016975).

Footnotes

1. Quotations of SE's remarks are translated from Hebrew, with the sentence structure adapted to English.

2. Hebrew is written without vowels, so 4 Hebrew letters are roughly equivalent to 6 letters in English.

3. Although not all responses were recorded, all trials were actually presented.

4. Given the high error rate in the “different” condition, the number of cases in which SE responded correctly to both the “same” and the “different” target was very small. Therefore, to use as many data points as possible, we analyzed the difference between mean RTs using a t-test for independent samples. This analysis should be interpreted with caution, however, because of the relatively small number of correct responses (20) in the “different” condition.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Benson DF, Greenberg JP. Visual form agnosia. Archives of Neurology. 1969;20:82–9. doi: 10.1001/archneur.1969.00480070092010.

- Bentin S, DeGutis JM, D'Esposito M, Robertson LC. Too many trees to see the forest: Performance, event-related potential, and functional magnetic resonance imaging manifestations of integrative congenital prosopagnosia. Journal of Cognitive Neuroscience. 2007;19:132–146. doi: 10.1162/jocn.2007.19.1.132.

- Benton AL, Sivan AB, Hamsher K, Varney NR, Spreen O. Contributions to neuropsychological assessment. Oxford University Press; New York: 1983.

- Behrmann M. Neuropsychological approaches to perceptual organization: Evidence from visual agnosia. In: Peterson MA, Rhodes G, editors. Perception of faces, objects and scenes: Analytic and holistic processes. Oxford University Press; 2003. pp. 295–334.

- Behrmann M, Peterson MA, Moscovitch M, Suzuki S. Integrative agnosia: Deficit in encoding relations between parts. Journal of Experimental Psychology: Human Perception and Performance. 2006. doi: 10.1037/0096-1523.32.5.1169. In press.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Burton AM, Young AW, Bruce V, Johnston RA, Ellis AW. Understanding covert recognition. Cognition. 1991;39:129–66. doi: 10.1016/0010-0277(91)90041-2.

- Butter CM, Trobe JD. Integrative agnosia following progressive multifocal leukoencephalopathy. Cortex. 1994;30:145–58. doi: 10.1016/s0010-9452(13)80330-9.

- De Haan EH, Bauer RM, Greve KW. Behavioural and physiological evidence for covert face recognition in a prosopagnosic patient. Cortex. 1992;28:77–95. doi: 10.1016/s0010-9452(13)80167-0.

- Delis DC, Robertson LC, Efron R. Hemispheric specialization of memory for visual hierarchical stimuli. Neuropsychologia. 1986;24:205–214. doi: 10.1016/0028-3932(86)90053-9.

- Delvenne JF, Seron X, Coyette F, Rossion B. Evidence for perceptual deficits in associative visual (prosop)agnosia: A single case study. Neuropsychologia. 2004;42:597–612. doi: 10.1016/j.neuropsychologia.2003.10.008.

- Efron R. What is perception? In: Cohen RS, Wartofsky M, editors. Boston studies in the philosophy of science. Humanities Press; New York: 1968.

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto, CA: 1976.

- Farah MJ. Visual agnosia. MIT Press; Cambridge, MA: 1990.

- Farah MJ, Feinberg TE. Consciousness of perception after brain damage. Seminars in Neurology. 1997;17:145–152. doi: 10.1055/s-2008-1040924.

- Farah MJ, O'Reilly RC, Vecera SP. Dissociated overt and covert recognition as an emergent property of a lesioned neural network. Psychological Review. 1993;100:571–588. doi: 10.1037/0033-295x.100.4.571.

- Goodglass H, Kaplan E. The assessment of aphasia and related disorders. 2nd ed. Lea & Febiger; Philadelphia: 1983.

- Gazzaniga MS. Brain and conscious experience. Advances in Neurology. 1998;77:181–92.

- Humphreys GW. Integrative agnosia. In: Humphreys GW, editor. Case studies in the neuropsychology of vision. Psychology Press/Taylor & Francis (UK); Hove, England: 1999. pp. 41–58.

- Ivry R, Robertson LC. The two sides of perception. MIT Press; Cambridge, MA: 1998.

- Kay J, Lesser R, Coltheart M. Psycholinguistic Assessments of Language Processing in Aphasia (PALPA). Erlbaum; Hove: 1992.

- Lamb MR, Robertson LC, Knight RT. Component mechanisms underlying the processing of hierarchically organized patterns: Inferences from patients with unilateral cortical lesions. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1990;16:471–483. doi: 10.1037//0278-7393.16.3.471.

- Lamb MR, Robertson LC. The effects of visual angle on global and local reaction times depends on the set of visual angles presented. Perception & Psychophysics. 1990;47:489–496. doi: 10.3758/bf03208182.

- Lissauer H. Ein Fall von Seelenblindheit nebst einem Beitrage zur Theorie derselben. Archiv für Psychiatrie und Nervenkrankheiten. 1890;21:229–270. English translation: Jackson M. Lissauer on agnosia. Cognitive Neuropsychology. 1988;5:155–192.

- Marr D. Vision: A computational investigation into the human representation and processing of visual information. Freeman; San Francisco: 1982.

- Marotta JJ, Behrmann M. Patient Schn: Has Goldstein and Gelb's case withstood the test of time? Neuropsychologia. 2004;42:633–8. doi: 10.1016/j.neuropsychologia.2003.10.004.

- Milberg W, Blumstein SE. Lexical decision and aphasia: Evidence for semantic processing. Brain and Language. 1981;14:371–85. doi: 10.1016/0093-934x(81)90086-9.

- Milner AD, Goodale MA. The visual brain in action. Oxford University Press; Oxford: 1995.

- Navon D. Forest before trees: The precedence of global features in visual perception. Cognitive Psychology. 1977;9:353–383.

- Peterson MA, de Gelder B, Rapcsak SZ, Gerhardstein PC, Bachoud-Lévi A-C. Object memory effects on figure assignment: Conscious object recognition is not necessary or sufficient. Vision Research. 2000;40:1549–1567. doi: 10.1016/s0042-6989(00)00053-5.

- Rey A. L'examen clinique en psychologie. Presses Universitaires de France; Paris, France: 1964.

- Riddoch MJ, Humphreys GW. A case of integrative visual agnosia. Brain. 1987;110:1431–1462. doi: 10.1093/brain/110.6.1431.

- Robertson LC, Lamb MR, Knight RT. Effects of lesions of temporal-parietal junction on perceptual and attentional processing in humans. Journal of Neuroscience. 1988;8:3757–3769. doi: 10.1523/JNEUROSCI.08-10-03757.1988.

- Robertson LC, Ivry R. Hemispheric asymmetry: Attention to visual and auditory primitives. Current Directions in Psychological Science. 2000;9:59–63.

- Schacter DL, McAndrews MP, Moscovitch M. In: Weiskrantz L, editor. Thought without language. Clarendon Press; Oxford: 1988. pp. 242–278.

- Snodgrass JG, Vanderwart M. A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:174–215. doi: 10.1037//0278-7393.6.2.174.

- Squire LR, McKee R. Influence of prior events on cognitive judgments in amnesia. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1992;18:106–15. doi: 10.1037//0278-7393.18.1.106.

- Ullman S. High-level vision: Object recognition and visual cognition. MIT Press; Cambridge, MA: 1996.

- Vuilleumier P, Schwartz S, Clarke K, Husain M, Driver J. Journal of Cognitive Neuroscience. 2002;14:875–86. doi: 10.1162/089892902760191108.

- World Medical Association Declaration of Helsinki. JAMA. 1997;277:925–6.