Abstract

Objectives

To evaluate the accuracy of self-assessment skills of senior-level bachelor of science pharmacy students.

Methods

A method proposed by Kruger and Dunning involving comparisons of pharmacy students' self-assessment with weighted average assessments of peers, standardized patients, and pharmacist-instructors was used.

Results

Eighty students participated in the study. Differences between self-assessment and external assessments were found across all performance quartiles. These differences were particularly large and significant in the third and fourth (lowest) quartiles and particularly marked in the areas of empathy, and logic/focus/coherence of interviewing.

Conclusions

The quality and accuracy of pharmacy students' self-assessment skills were not as strong as expected, particularly given recent efforts to include self-assessment in the curriculum. Further work is necessary to ensure this important practice competency and life skill is at the level expected for professional practice and continuous professional development.

Keywords: self-assessment, continuous professional development, pharmacy student, evaluation, assessment

INTRODUCTION

Self-assessment is a critical competency for both pharmacy students and professionals to possess.1 While no universally agreed upon definition of self-assessment exists, there is general agreement regarding facets of self-assessment that are crucial,2-4 including:

the ability to appropriately identify acceptable behavioral objectives or outcomes;

the willingness to critically observe, compare, and contrast one's own behavior with that of others self-defined as peers;

the ability to utilize peer-referencing (observation, comparison, and contrast) within an appropriate timeframe to reorient future behaviors;

the ability to utilize other strategies beyond peer referencing (including comparison with objective standards, and out-group referencing) to reorient future behaviors; and

the ability to adapt one's own behavior to self-assessment.

Much of the literature on the psychology of self-assessment is over a decade old and focuses on social psychological principles such as within-group comparisons.5-7 The terms metacognition6 and self-monitoring8 have been used to describe the ability to know how well one is performing, when one is likely to be correct in behavior or judgment, and when one is likely to be incorrect. Everson and Tobias noted that the same knowledge that underlies the ability to produce correct behavior in the first place is also the knowledge that underlies the ability to recognize correct behavior in oneself and others.6 Thus, the absence of the former is likely to lead to the absence of the latter: when one does not know how to act correctly, one is less likely to recognize incorrect action in oneself or others.

Flawed self-assessment is clearly a cognitively complex process, one that affects every aspect of daily life.9 For example, recent immigrants frequently feel the burden of moving to a new country where the “rules” of daily life may be very different from those in their previous experience. Such rules relate not only to obvious, overt actions (such as which side of the road one is to drive on, or whether one is expected to bribe officials in order to secure one's goals), but more importantly, to the multiple ways in which individuals consciously and unconsciously pass judgment upon one another. If a key element of self-assessment involves peer-referencing mechanisms and the nonverbal communications of one's peers are indecipherable, it is very difficult to know how one compares. Tacit or nonverbal expressions of disapprobation (such as rolling of eyes, terse smiles, uncomfortable coughs or giggles, or rapid blinking) may be simply too subtle for individuals to truly learn how they are doing compared with their peers.

Self-assessment skills are a critical element of competence in the personal, cultural, or professional sense. The inability to self-assess may be due to a variety of factors, including the inability to peer-reference, lack of knowledge of objective standards or expectations, an inability to cognitively process nonverbal feedback in a timely fashion in order to reorient behavior, or lack of appropriate rewards or punishments to change one's actions.10,11

The consequences of imperfect self-assessment vary considerably. At times, it may be mildly amusing; at other times it can be frustrating or aggravating (for example, when one considers cross-cultural communication in a time-pressured, high-stress situation). Within the professions, particularly the health professions, the consequences of flawed self-assessment can be dangerous, particularly if it leads an individual to exaggerate his/her competency or inflate expectations.

Self-Assessment in the Health Professions

The importance of self-assessment within health professions education and practice has increased significantly over the past decade.1 In many curricula, self-assessment is an expectation of a novice professional, and is considered an important skill for development.12 Within professional practice, self-assessment is the foundation upon which the cycle of continuous professional development (CPD) is built.13,14 From the time they enter a professional program, students are expected to demonstrate skills and propensities for reflective practice, which requires an ability and desire to engage in self-assessment.15

Within the literature there are numerous examples of how self-assessment has been woven into education and practice. For example, tools such as learning portfolios, journals, or reflective logs have been identified as important vehicles for encouraging introspection.16 Methods such as critical incident reporting have been described in which individuals or teams re-live important (frequently unfortunate) events in an effort to deconstruct, analyze, reflect, and improve.16,17 Systems of self-assessment have been developed, such as the Surgical Learning and Instructional Portfolio (SLIP).18 The SLIP is a case-based portfolio used in surgical education to allow residents to document their experience and demonstrate they have acquired the necessary knowledge and skills to provide surgical care. Monthly case topics are selected by the resident and reported using a preformulated template in which case history, diagnostic studies, differential diagnosis, management options, treatments, and 3 “lessons learned” are documented. Residents are expected to embellish one of these “lessons learned” through further research and submit at least 2 articles from the literature supporting this experience.

While the literature is replete with “show-and-tell” examples, little attention has been paid to a crucial question: how effective are students' (or professionals') self-assessment skills? Implicit in the literature is the notion that professionals (because they are clever, well-intentioned people one presumes) have adequate and appropriate self-assessment skills, but lack the time, tools, or reason to formalize the self-assessment process.

Anecdotal experience – and decades worth of complaints and disciplinary proceedings against health care professionals – suggests that not all professionals have adequate or appropriate self-assessment skills. Many clinicians can recount horror stories of colleagues with whom they have worked who seemed oblivious to the subtle (and not-so-subtle) cues of peers regarding clinical performance, and appeared to have absolutely no insight into their work or practice. Similarly, many instructors can recount tales of students who were “book smart” but appeared to completely lack common sense and/or social graces and consequently were entirely ineffective as clinicians.

Much of the literature in health professions education seems to imply that self-assessment is a natural propensity; consequently there is little attention paid to the formal teaching of self-assessment skills (for example, in the same way that communication skills have been a feature of curricula for many years). While tools such as learning portfolios and reflective logs may indeed be useful for students and practitioners, simply giving individuals the correct tool does not mean they know how to use it, or use it effectively and efficiently.

Within pharmacy education, there has been some attention paid to the notion of self-assessment. For example, Krause and Popovich have reported on a group interaction and peer/self-assessment process in a pharmacy practice course.19 Fjortoft has also discussed the importance of self-assessment in pharmacy education and outlined challenges associated with assuming students are ready to self-assess.1 A variety of accreditation guidelines in pharmacy education and statements on professional development have also noted the importance of self-assessment skills in pharmacy education and practice.13-15 Absent from this literature is empirical data demonstrating that pharmacy students indeed do possess self-assessment skills. Given the importance of self-assessment in education and practice, this is a curious and potentially problematic omission.

The objective of this research was to evaluate the self-assessment skills of pharmacy students. For the purposes of this research, self-assessment skills were defined in terms of accuracy of predicting how one's own behavior compared with an objective standard and the behaviors and expectations of peers and instructors.

METHODS

Participants in this project were senior-level bachelor of science in pharmacy (BScPhm) students enrolled in a professional practice laboratory course. This course involved clinical simulations involving standardized patients (actors specially trained to portray medical conditions, and to provide feedback and coaching to improve students' performance). The research method utilized in this study was an application of a model proposed by Kruger and Dunning.20 This model involved comparisons of self-assessment with a pooled group of external assessments, and the extent to which self-assessment aligned with the assessments of a diverse group of others. Variations of this method include the management tool 360° Review used in some organizations, in which individuals receive feedback on their performance from their peers and superiors, as well as those they supervise.

This course involved groups of 8 students, 1 pharmacist-assessor, and 1 standardized patient. Over the 10-week course, each student had the opportunity to role-play the part of the pharmacist, dealing with a different standardized patient each week. Thus, at the end of the course, each student had participated in at least 8 different clinical simulations, and received verbal and written feedback from their peers, the pharmacist-assessor, and the standardized patient. Each week, 1 student would role-play while the remaining 7 students in the group had the opportunity to observe, provide feedback, and learn from the experience of the pharmacy student.

Assessment in this course was built upon global rating scales and analytical checklists. The global rating scales were: verbal communication; nonverbal communication; degree of focus, logic, and coherence in the interview; empathy; and overall performance. Students were graded on a 5-point scale across each of these 5 domains. The analytical checklist consisted of a series of 14-21 binary items (observed/not observed) corresponding to the pharmacotherapeutic content of the case. In a broad sense, the global rating scales were used to assess communication skills, while the analytical checklists were used to assess clinical knowledge. Taken together, the analytical checklist and the global rating scale formed a comprehensive assessment of clinical skills underlying professional practice.

For this research, laboratory cohorts (corresponding to groups of 8 students each) volunteered to participate, pursuant to a research protocol approved by the University's research ethics board. Students were recruited to participate through an information leaflet distributed to all laboratory cohorts. All students in the laboratory cohort were required to provide consent prior to that cohort being enrolled in the study. Consent was obtained on an individual basis; thus, a cohort in which only 6 or 7 students agreed to participate was not included in the study, and the students were never told who among them elected not to participate. This was done to ensure students were neither pressured nor forced to participate in the study, and that confidentiality was maintained at all times.

The research consisted of 2 separate studies. Each study was undertaken at 2 separate times, corresponding to weeks 8 and 9 of the 10-week course. These times were selected since they represented the final weeks of the course. By this point, students had become accustomed to the course, were familiar with standardized patient-driven clinical simulations, and had already received significant feedback in weeks 1 through 7.

Study 1: Self-Assessment of Clinical Knowledge

For this study, each student completed a self-assessment (following his/her role play) of clinical knowledge utilizing the same analytical checklists as used by the pharmacist-assessor, standardized patient, and the other 7 students who observed the performance. Inter-rater reliability between the pharmacist-assessor, standardized patient, and peer assessments was calculated using Cronbach α. If α was less than 0.8, the assessments were discarded (0.8 being the predefined level for acceptable reliability in clinical simulations21). If α was 0.8 or greater, the analytical checklist scores were then averaged using a weighted arithmetic mean of all 9 assessments. This weighted mean was calculated to give greater significance to the scoring by the pharmacist-assessor than the standardized patient or student-observers, given the pharmacist's greater clinical knowledge and experience. The weighted arithmetic mean was calculated using an arbitrarily derived formula that gave 50% of the overall mean to the pharmacist-assessor, 35% to the 7 student-observers (5% each), and 15% to the standardized patient. This weighted mean was then compared to the student's self-assessment scoring of the analytical checklist (see limitations in the Discussion section for additional information about this method).
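The screening and weighting steps described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' spreadsheet: the function names are hypothetical, and the data layout used for Cronbach α (one list of binary checklist scores per rater) is an assumption, since the text does not state how α was computed.

```python
from statistics import pvariance

# Weights stated in the Methods for Study 1 (clinical knowledge).
W_PHARMACIST, W_PEERS, W_PATIENT = 0.50, 0.35, 0.15
ALPHA_THRESHOLD = 0.8  # predefined reliability cutoff from the text


def cronbach_alpha(ratings):
    """Cronbach alpha with raters treated as 'items'.

    ratings: one list per rater, each holding that rater's 0/1 score for
    every checklist item (assumed layout, not stated in the paper).
    """
    k = len(ratings)
    item_totals = [sum(column) for column in zip(*ratings)]
    total_var = pvariance(item_totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when summed scores do not vary")
    rater_var = sum(pvariance(r) for r in ratings)
    return (k / (k - 1)) * (1 - rater_var / total_var)


def passes_reliability_screen(alpha):
    """Keep a data set only when alpha reaches the 0.8 threshold."""
    return alpha >= ALPHA_THRESHOLD


def weighted_external_score(pharmacist, patient, peers):
    """Combine checklist totals: 50% pharmacist, 35% shared equally by the
    peer observers (5% each when there are 7), 15% standardized patient."""
    peer_mean = sum(peers) / len(peers)
    return W_PHARMACIST * pharmacist + W_PEERS * peer_mean + W_PATIENT * patient


# Hypothetical checklist totals for one simulation (not study data).
score = weighted_external_score(pharmacist=16, patient=18, peers=[17] * 7)
print(round(score, 2))
```

Averaging the 7 peer scores before applying the 35% weight is equivalent to weighting each peer at 5%, which matches the per-peer figure given in the Discussion.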

Data from all assessments was then divided into percentiles based on the total score achieved. Thus, for example, a student whose observer ratings had an arithmetic mean of 18.5/20 analytical checklist items in week 8 was in the 99th percentile, while a student whose observer ratings had an arithmetic mean of 11.5/20 that week was in the 10th percentile. (Note: Percentile rankings were used rather than absolute percentages due to the comparative nature of this study. Percentile rankings do not translate directly to percentage ratings because no student scored 20/20, ie, 100%, on the analytical checklist.)

The percentile conversion of the observers' arithmetic means was defined as the student's “actual” score on the simulation; the percentile conversion of the student's own self-assessment was defined as their “perceived” ability. Perceived ability and actual scores for each student were compared, based on quartile analysis of data.
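The comparison of perceived and actual scores can be illustrated with a short Python sketch. Several details here are assumptions rather than the authors' stated procedure: the percentile-rank formula (ties counted as half), the placement of each self-assessment score on the distribution of external scores (assumed because the reported mean perceived percentile differs from 50), and all function names. Quartile 4 denotes the lowest quartile, following the numbering used in the abstract.

```python
def percentile_rank(score, reference_scores):
    """Percent of reference scores below `score`, counting ties as half.
    (Assumed formula; the paper does not specify one.)"""
    below = sum(s < score for s in reference_scores)
    equal = sum(s == score for s in reference_scores)
    return 100.0 * (below + 0.5 * equal) / len(reference_scores)


def quartile(pct):
    """Map a percentile to a quartile: 1 = highest, 4 = lowest."""
    return 4 - min(int(pct // 25), 3)


def mean_gap_by_quartile(actual, perceived):
    """Mean (perceived - actual) percentile gap within each actual quartile.

    actual:    external weighted-mean scores, one per student
    perceived: the same students' self-assessment scores
    """
    gaps = {}
    for a, p in zip(actual, perceived):
        a_pct = percentile_rank(a, actual)
        # Assumption: self-scores are ranked against the external scores.
        p_pct = percentile_rank(p, actual)
        gaps.setdefault(quartile(a_pct), []).append(p_pct - a_pct)
    return {q: sum(v) / len(v) for q, v in sorted(gaps.items())}


# Hypothetical scores for 4 students (not study data): everyone rates
# themselves 14, whatever the external raters observed.
print(mean_gap_by_quartile(actual=[10, 12, 14, 16], perceived=[14, 14, 14, 14]))
```

A positive gap indicates overestimation and a negative gap indicates underestimation, so a pattern of positive gaps in the lowest quartile and a negative gap in the highest quartile corresponds to the inversion described in the Results.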

Study 2: Self-Assessment of Communication Skills

For this study, each student completed a self-assessment (following his/her role play) of communication skills utilizing the same global rating scale as used by the pharmacist-assessor, standardized patient, and the other 7 students who observed the performance. For each assessment, an index score was calculated as the sum of performance on each of the 5 domains (maximum possible score: 25, since each of the 5 domains utilized a 5-point scale). Inter-rater reliability scores for the pharmacist-assessor, standardized patient, and 7 observing students were calculated; where α < 0.8, the results were discarded due to inadequate reliability. Where α ≥ 0.8, the results were used to compare with the student's own self-assessment. As in Study 1, a weighted average of pharmacist-assessor, standardized patient, and peer assessments was utilized; in this case, an arbitrarily derived formula was developed weighting the pharmacist's assessment at 34%, the standardized patient's assessment at 33%, and the peer assessments at 33%, in order to acknowledge the unique expertise of standardized patients in assessing communication skills.
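As a rough illustration of the index score and the Study 2 weighting, the following Python sketch sums the 5 domain ratings and applies the 34%/33%/33% formula. The dictionary keys, the function names, and the assumption that each domain is rated from 1 to 5 are illustrative choices, not details taken from the study instruments.

```python
# Hypothetical keys for the 5 global rating domains.
DOMAINS = ("verbal", "nonverbal", "focus_logic_coherence", "empathy", "overall")

# Weights stated in the Methods for Study 2 (communication skills).
WEIGHTS = {"pharmacist": 0.34, "patient": 0.33, "peers": 0.33}


def index_score(ratings):
    """Sum of the 5 domain ratings (assumed 1-5 each, so the maximum is 25)."""
    for domain in DOMAINS:
        if not 1 <= ratings[domain] <= 5:
            raise ValueError(f"{domain} rating must be between 1 and 5")
    return sum(ratings[domain] for domain in DOMAINS)


def weighted_index(pharmacist, patient, peers):
    """Weighted external index: the peer share is split equally among peers."""
    peer_mean = sum(index_score(p) for p in peers) / len(peers)
    return (WEIGHTS["pharmacist"] * index_score(pharmacist)
            + WEIGHTS["patient"] * index_score(patient)
            + WEIGHTS["peers"] * peer_mean)


# Hypothetical ratings (not study data): every rater gives 4 in each domain.
all_fours = {domain: 4 for domain in DOMAINS}
print(weighted_index(all_fours, all_fours, [all_fours] * 7))
```

Compared with Study 1, this scheme gives the standardized patient roughly the same weight as the pharmacist-assessor, reflecting the stated rationale about expertise in assessing communication.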

As in Study 1, data from all assessments was then divided into percentiles based on the total index score achieved. The percentile conversion of the observers' weighted means was defined as the student's actual score on the simulation; the percentile conversion of the student's own self-assessment was defined as their perceived ability. Perceived ability and actual scores for each student were then compared, based on quartile analysis of data.

For both Study 1 and Study 2, quantitative data was managed using Microsoft Office Excel 2003.

RESULTS

Eighty students (corresponding to 10 laboratory cohorts of 8 students each) volunteered to participate in the study. In each of weeks 8 and 9 of the course, every cohort completed 8 clinical simulations (80 simulations each week across the 10 cohorts, since each student participated in 1 role-play each week); consequently, a total of 160 clinical simulations occurred for this study.

A data set in this study consisted of the following elements: self-assessment, 1 pharmacist-assessor evaluation, 1 standardized patient evaluation, and 7 peer evaluations. For each clinical simulation, 2 data sets were produced (1 for the global rating scale and 1 for the analytical checklist). A total of 320 data sets were collected. On initial review, 7 data sets were removed from the study due to irregularities in completion of the forms (eg, indecipherable responses on the form, missing assessment forms, etc).

Data analysis was undertaken for 158 data sets for Study 1 (involving the analytical checklist) and 155 data sets for Study 2 (involving the global rating scale).
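The data-set counts reported above can be checked with simple arithmetic, sketched here in Python. The per-study split of the 7 removed data sets is not stated in the text; it is inferred below on the assumption that each study began with one data set per simulation (160 each).

```python
STUDENTS = 80      # 10 cohorts of 8 students
WEEKS = 2          # weeks 8 and 9
INSTRUMENTS = 2    # analytical checklist and global rating scale

simulations = STUDENTS * WEEKS            # one role-play per student per week
collected = simulations * INSTRUMENTS     # data sets collected in total

# Data sets retained for analysis, as reported in the text.
analyzed = {"study_1_checklist": 158, "study_2_global_rating": 155}

# Inferred removals per study (assumes 160 data sets per study at the outset).
removed_per_study = {name: simulations - n for name, n in analyzed.items()}
removed_total = sum(removed_per_study.values())

print(simulations, collected, removed_per_study, removed_total)
```

Under that assumption, the totals are internally consistent: the retained data sets for the two studies sum to the number collected minus the number removed.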

Study 1: Clinical Knowledge

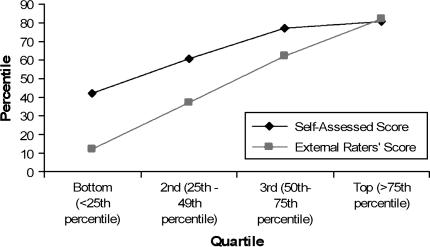

One hundred seventeen (74%) of the 158 data sets examined in Study 1 met the inter-rater reliability criteria of α ≥ 0.8 and were consequently analyzed. As illustrated in Figure 1, those in the lowest actual performance quartiles consistently overestimated their clinical knowledge by an average of over 30 percentiles. Of interest, as actual performance improved, the magnitude of this mis-estimation decreased. In fact, at the 81st percentile, an inversion occurred, in which those scoring in the highest actual performance quartile actually underestimated their performance. On average, students self-assessed their performance in the 63rd percentile, which was significantly higher than the actual mean percentile performance (by definition) of 50 (one-sample t(80) = 7.79, p < 0.001).

Figure 1. Self-assessed scores of clinical knowledge as a function of external raters' scores.

Study 2: Communication Skills (including empathy and focus/logic/coherence of interview)

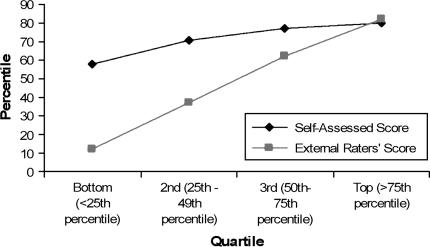

Ninety-nine (63.9%) of the 155 data sets examined in Study 2 met the inter-rater reliability criteria of α ≥ 0.8 and were consequently analyzed. As illustrated in Figure 2, those in the lowest actual performance quartile once again consistently overestimated their communication skills, by an average of more than 40 percentile points. Once again, as actual performance improved, the magnitude of this mis-estimation decreased. At the 77th percentile, an inversion occurred; those scoring in the highest actual performance quartile actually underestimated their performance. On average, students self-assessed their communication skills in the 72nd percentile, which was significantly higher than the actual mean percentile performance (by definition) of 50 (one-sample t(80) = 8.82, p < 0.01).

Figure 2. Self-assessed scores of communication skills as a function of external raters' scores.

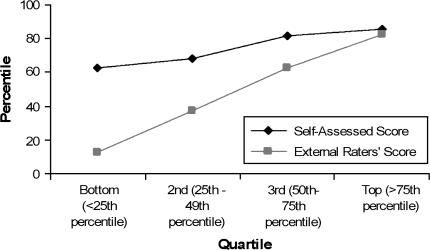

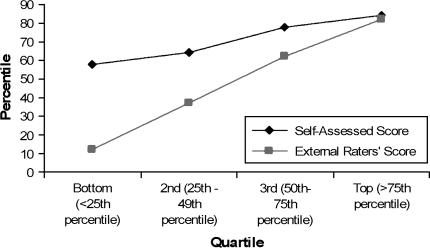

The mis-estimation of empathy ratings (Figure 3) and of degree of focus, logic, and coherence of the interview (Figure 4) showed the most striking divergence, with those in the lowest quartiles most significantly inflating their self-assessed performance compared to actual scores. For empathy ratings, the inversion point occurred at the 89th percentile; for degree of focus, logic, and coherence, it occurred at the 81st percentile.

Figure 3. Self-assessed scores of empathy as a function of external raters' scores.

Figure 4. Self-assessed scores of focus/logic/coherence of interview as a function of external raters' scores.

DISCUSSION

Social psychologists have noted that those who lack competence (particularly in a social domain) often do so for 2 fundamental reasons: “…when people are incompetent in the strategies they adopt to achieve success and satisfaction, they suffer a dual burden: Not only do they reach erroneous conclusions and make unfortunate choices, but their incompetence robs them of any ability to realize it. Instead…they are left with the mistaken impression that they are doing just fine.”20

As this study illustrates, accurate and appropriate self-assessment is neither a naturally occurring nor an easily demonstrated skill or propensity. The cohort used in this study represents some of the most academically successful individuals in society. Competitive pressures for admission to pharmacy programs are strong, and those who are admitted are generally considered to be among the “best and the brightest.” Further, the students in this study were all in their fourth (and final) year of a rigorous BScPhm program, had completed over 75% of their final professional practice laboratory course, had already received copious feedback regarding their performance in previous weeks, and had the benefit of observing their peers' performances over the previous 7 laboratory sessions. Equally important, all the students in this study passed or received honors grades in this course, using criterion-based evaluation.

While there is no suggestion in this study that the inflated self-assessments of these individuals may pose a threat or danger to patient care, or that these individuals are “incompetent” in a clinical sense, it is interesting to note the significant misalignment of self-assessment and the assessment of others, both at the lowest and highest quartiles.

It is particularly puzzling for educators, given the lengths most pharmacy programs have gone to in explicitly acknowledging the importance of self-assessment as a core competency for students and future practitioners expected to engage in lifelong learning and continuous professional development. While the data indicates that most students are not able to accurately appraise, compare, and contrast their own performance vis-à-vis their peers, one would expect that 4 years of pharmacy education (and the feedback, coaching, modeling, mentoring, and evaluations associated with it) would have at some point resulted in acquisition of self-assessment skills. Perhaps most worrisome is the notion that if students at the cusp of graduation have not acquired these skills after the time and effort invested in providing feedback, what will happen to them in practice over the next 10, 20 or 30 years, particularly if they, as most pharmacists do, tend to work by themselves rather than in teams?

Several hypotheses have emerged regarding how flawed self-assessment develops, even among those who are the “best and brightest” and ought to have learned this important life skill. First, there is the well-described phenomenon of the “above-average” effect, the tendency of most people to assume they are better than most of their peers.22 The genesis of this effect has not been completely defined, but it is most likely a result of the in-depth understanding we have of ourselves, our motivations, and our talents; the superficial understanding we have of others; and the psychological adaptation required to maintain a strong sense of identity, differentiation, and self-worth. The above-average effect may be particularly important in examining students who, throughout their academic careers, have been labeled as the “best and the brightest”; over time, a self-fulfilling prophecy may occur in which students themselves assume this means that they cannot simply be average, despite the fact they are now in a milieu filled with other people who were also the “best and the brightest.” The above-average effect is an important component of flawed self-assessment in a variety of domains,22 and it appears reasonable to conjecture that it may apply to pharmacy students, too.

Second, self-assessment is frequently built upon a foundation of evaluation from others; over time, externally defined criteria for success become internalized, and individuals learn to compare and contrast their own performance using a personalized schema.23 Within a pharmacy education context, the foundation of evaluation from others is frequently based on numerical grades; even when supportive feedback is provided, it often takes a backseat to the grade itself. Consequently, a student receiving a grade of 78% may compare himself/herself to another student receiving a grade of 82% and erroneously conclude that the “difference” in their performance is only 4%, when in fact, the substantive difference in performance may be much greater and much more subtle than any numerical grading system can depict. Recall that in this study, all students passed or received honors, meaning their “numerical” grades were in a fairly tight range of less than 20%. However, when translated into a percentile scheme, this range increases (by definition) to 99 and consequently when students no longer have numerical grades as a basis for self-comparison, they may make erroneous judgments about their performance. Of course, this is particularly important in practice, since numerical grades are not issued to pharmacists, but relative, comparative performance is frequently assessed (by the public, peers, and employers).

Third, despite a concerted effort to provide “feedback” to students in the form of coaching, modeling, mentoring, and support, this data suggests that perhaps there are areas for improvement in the ways in which faculty members and teaching assistants provide feedback.24 The reality is that for most generally capable students, the type of feedback they receive tends to be quite restrained; in the name of being encouraging or supportive, or simply because instructors do not wish to be disliked, many of us tend to “pull punches” or not be entirely honest with students. As described by Story and Dunning, in most aspects of daily life, few of us truly receive negative feedback about our skills and abilities from others, and when we do, attributional effects often engage in which we blame or discount the person delivering negative feedback, and often receive reinforcement from our peers for doing so.24 This tendency to gush positively about relatively trivial accomplishments (“Yeah, Johnny…look he's so smart he can tie his own shoelaces!”), to positively reinforce the absence of bad behavior (“Yeah Johnny, you're so good…you didn't beat up your friend Timmy!”), and to be much more circumspect about calling-out bad behavior (“Oh Johnny…mommy doesn't like it when you don't clean your room”) begins early in childhood and persists through the primary and secondary education systems. Labeled “the applause society,” such “feedback” may contribute to an inflated sense of one's own accomplishments vis-à-vis peers, and leave individuals without a solid foundation upon which to develop self-assessment skills.25

Results of this study align with previous reports from the social psychology literature related to overconfidence, and the tendency of individuals in all walks of life to systematically overestimate their own abilities and performance.23-25 While clearly it is important to educate health care professionals who are confident, it is equally clear that confidence does not equal competence. In this study, there is no indication that any of the students involved were incompetent, although performance variations suggest that the term “competence” indicates a broad range of behaviors. Nonetheless, the human tendency to inflate one's own ability can, in some situations, be problematic, and this study alerts us to the fact that pharmacy students may not have the accurate, appropriate self-assessment skills we assume they possess.

Over a professional's lifetime, he/she is expected to maintain competence through a sophisticated application of self-assessment propensities and skills. Upon graduation, few practitioners will have an opportunity to “learn” self-assessment or receive feedback on it; instead, they are presumed to have acquired this important skill and have the willingness to apply it frequently in order to maintain their practice. This study raises the provocative question, what if upon graduation many (arguably most) students do not have accurate self-assessment skills? Will these skills be learned even if they are not formally taught? Can they be learned? What has happened during the primary, secondary, and tertiary education of students that has brought many of them to the point where they do not appear to possess this important professional (and life) skill?

Clearly, further research and examination of these issues is warranted, particularly as the role of self-assessment becomes more prominent in pharmacy education, practice, and in particular, in the continuous professional development literature.

This study examined a complex psychological construct using a fairly conventional method. The study's participants represent only one school of pharmacy at one point in time; consequently, the ability to generalize these findings is quite constrained. This study did not attempt to define or measure “competence”: since all students who participated passed or received honors in the course and in the particular clinical simulations examined, they were (using criterion-based referencing) “competent” or achieved expectations at their level. Consequently, the practical significance of their overconfident self-assessments may be questioned: as long as they are and continue to be competent and thus do not pose a threat to patient care, does it really matter that they overestimate their abilities and performance vis-à-vis their peers? Further research is required to confirm whether this is indeed an issue of concern.

This research introduced some adaptations to the original method proposed by Kruger and Dunning (who did not study health care professionals where specific clinical knowledge requirements complement communication skills to form the amalgam “clinical skills”). In this study, specific, arbitrary weightings were assigned to different groups of external assessors; for example, in assessing the clinical knowledge, the pharmacist-assessor's evaluation was weighted at 50% (in recognition of his/her experience and expertise), while each of the 7 peer-assessors' evaluations was only weighted at 5% (in recognition of their novice standing in the profession). There was little in the literature to guide us in determining what the appropriate weightings would be; changes in the weightings, however, would change the study results somewhat. This is partially mitigated by using the initial inter-rater reliability screen; nonetheless, a different weighting system may have yielded a different spectrum of results.

Finally, the study of self-assessment is frequently confounded by the ability of participants to obfuscate their true opinions, or to simply not engage fully in the task and lazily report to investigators due to a perception that the task of self-assessment itself is not worthwhile. While students in this study had experience in self-assessment, this does not preclude the possibility that they did not take the research seriously enough and consequently responded in a flippant or disengaged manner. This, of course, would have affected the quality of data they contributed which in turn would have affected the results of this study.

CONCLUSIONS

The importance of self-assessment in the health professions has been well documented; unfortunately, few evaluations of the self-assessment accuracy of health professionals have been performed. This study has demonstrated that, as predicted by social psychologists more than a decade ago, pharmacy students are no different from other people: most demonstrate flawed self-assessment skills, with a tendency to overestimate their own abilities. Further work is required to confirm this observation and to determine how such behavior may affect professional practice, lifelong learning, and continuous professional development.

REFERENCES

- 1. Fjortoft N. Self-assessment in pharmacy education. Am J Pharm Educ. 2006;70(3):Article 64. doi: 10.5688/aj700364.

- 2. Hansford BC, Hattie JA. The relationship between self and achievement/performance measures. Rev Educ Res. 1982;52:123–42.

- 3. Lane JL, Gottlieb RP. Improving the interviewing and self-assessment skills of medical students: is it time to readopt videotaping as an educational tool? Ambulatory Pediatr. 2004;4:244–8. doi: 10.1367/A03-122R1.1.

- 4. Alicke MD. Global self-evaluation as determined by the desirability and controllability of trait adjectives. J Pers Soc Psychol. 1985;49:1621–30.

- 5. Dunning D, Cohen GL. Egocentric definitions of traits and abilities in social judgment. J Pers Soc Psychol. 1992;63:341–55.

- 6. Everson HT, Tobias S. The ability to estimate knowledge and performance in college: a metacognitive analysis. Instr Sci. 1998;26:65–79.

- 7. Dunning D, Perie M, Story AL. Self-serving prototypes of social categories. J Pers Soc Psychol. 1991;61:957–68. doi: 10.1037//0022-3514.61.6.957.

- 8. Chi M, Glaser R, Rees E. Expertise in problem solving. In: Sternberg R, editor. Advances in the Psychology of Human Intelligence. Hillsdale, NJ: Erlbaum; 1989.

- 9. Klin CM, Guzmán AE, Levine WH. Knowing what you don't know: metamemory and discourse processing. J Exp Psychol. 1997;23:1378–93. doi: 10.1037//0278-7393.23.6.1378.

- 10. Maki RH, Jonas D, Kallod M. The relationship between comprehension and metacomprehension ability. Psychonomic Bull Rev. 1994;1:126–9. doi: 10.3758/BF03200769.

- 11. Metcalfe J. Cognitive optimism: self-deception or memory based processing heuristics? Pers Soc Psychol Rev. 1998;2:100–10. doi: 10.1207/s15327957pspr0202_3.

- 12. Rees C, Shepherd M. Students' and assessors' attitudes towards students' self-assessment of their personal and professional behaviours. Med Educ. 2005;39:30–9. doi: 10.1111/j.1365-2929.2004.02030.x.

- 13. International Pharmaceutical Federation. FIP Statement on professional standards: continuing professional development. 2002. www.fip.org. Accessed: January 19, 2007.

- 14. Accreditation Council for Pharmacy Education. Statement on Continuing Professional Development (CPD). http://www.acpe-accredit.org. Accessed: January 19, 2007.

- 15. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. http://www.acpe-accredit.org/standards/default.asp. Accessed: January 19, 2007.

- 16. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296:1094–1102. doi: 10.1001/jama.296.9.1094.

- 17. Duffy FD, Holmboe ES. Self-assessment in life long learning and improving performance in practice: physician know thyself. JAMA. 2006;296:1137–9. doi: 10.1001/jama.296.9.1137.

- 18. Webb TP, Aprahamian C, Weigelt JA, Brasel KJ. The surgical learning and instructional portfolio (SLIP) as a self-assessment educational tool demonstrating practice-based learning. Current Surg. 2006;63:444–7. doi: 10.1016/j.cursur.2006.04.001.

- 19. Krause JE, Popovich NG. A group interaction peer/self-assessment process in a pharmacy practice course. Am J Pharm Educ. 1996;60:136–45.

- 20. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77:1121–34. doi: 10.1037//0022-3514.77.6.1121.

- 21. Colliver JA, Swartz MH. Assessing clinical performance with standardized patients. JAMA. 1997;278:790–1. doi: 10.1001/jama.278.9.790.

- 22. Kruger J. Lake Wobegon be gone! The “below-average” effect and the egocentric nature of comparative ability judgments. J Pers Soc Psychol. 1999;77:221–32. doi: 10.1037//0022-3514.77.2.221.

- 23. Sinkavich FJ. Performance and metamemory: do students know what they don't know? Instructional Psychol. 1995;22:77–87.

- 24. Story A, Dunning D. The more rational side of self-serving prototypes: the effects of success and failure performance feedback. J Experimental Soc Psychol. 1998;34:513–29.

- 25. Vallone RP, Griffin DW, Lin S, Ross L. Overconfident prediction of future actions and outcomes by self and others. J Pers Soc Psychol. 1990;58:582–92. doi: 10.1037//0022-3514.58.4.582.