Abstract

Adaptation occurs in a variety of forms in all sensory systems, motivating the question: what is its purpose? A productive approach has been to hypothesize that adaptation helps neural systems to efficiently encode stimuli whose statistics vary in time. To encode efficiently, a neural system must change its coding strategy, or computation, as the distribution of stimuli changes. Information-theoretic methods allow this efficient coding hypothesis to be tested quantitatively. Empirically, adaptive processes occur over a wide range of timescales. On short timescales, underlying mechanisms include the contribution of intrinsic nonlinearities. Over longer timescales, adaptation is often power-law-like, implying the coexistence of multiple timescales in a single adaptive process. Models demonstrate that this can arise from mechanisms within a single neuron.

Adaptation as efficient coding

Barlow’s efficient coding hypothesis suggests that, given a finite capacity to transmit information, neural systems employ an optimally efficient coding strategy to represent the inputs that they typically process [1-3, Box 1]. However, when collected over relatively short time or length scales, the local statistics of many natural stimuli differ greatly from their global distribution. For example, luminance and contrast in natural visual environments vary by orders of magnitude over the course of a day, or across space within a complex scene. A sensory system whose distribution of outputs was matched to the global distribution of stimuli would then transmit information about any particular local distribution inefficiently. Under these circumstances, one might expect the system’s coding strategy to adapt to the local statistics of the stimulus.

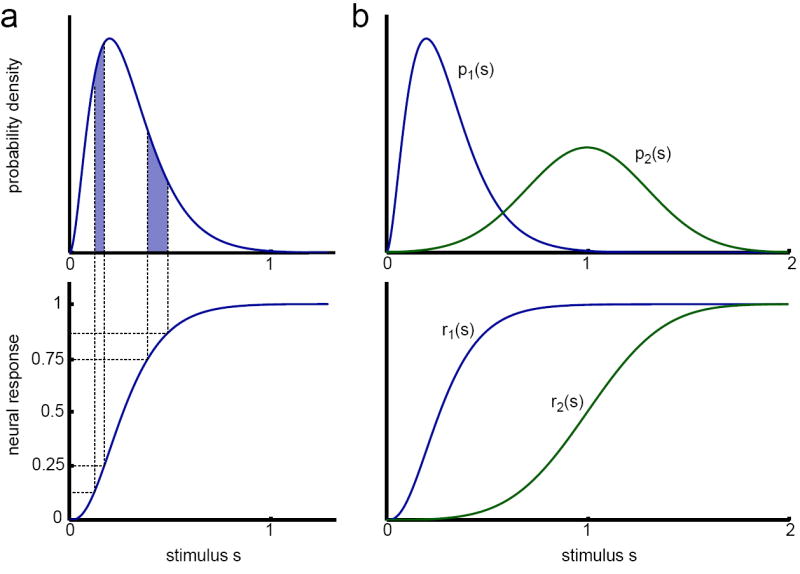

Efficient coding (Box 1).

Maximizing efficiency with a fixed dynamic range requires that the system map its inputs to its outputs such that all outputs are equally likely [figure 1,25,64]. The optimal coding strategy thus depends on the statistics of the stimulus that the system represents.
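As a minimal sketch of this principle, assuming a noiseless encoder with a hypothetical set of 8 discrete response levels, the mapping can be built directly from samples of the stimulus distribution: each stimulus is assigned the quantized value of the empirical cumulative distribution, so that every response level is used about equally often. The function name `equalizing_map` and all parameter values are illustrative only.

```python
import numpy as np

def equalizing_map(samples, n_levels):
    """Return a stimulus -> response-level function built from the empirical CDF
    of `samples`, so that each of the n_levels responses is equally likely."""
    sorted_s = np.sort(samples)

    def respond(s):
        # Fraction of reference samples at or below s (empirical CDF, in [0, 1])
        cdf = np.searchsorted(sorted_s, s, side="right") / len(sorted_s)
        # Quantize to integer response levels 0 .. n_levels - 1
        return np.minimum((cdf * n_levels).astype(int), n_levels - 1)

    return respond

rng = np.random.default_rng(0)
reference = rng.normal(loc=2.0, scale=0.5, size=100_000)   # hypothetical stimulus ensemble
respond = equalizing_map(reference, n_levels=8)

new_stimuli = rng.normal(loc=2.0, scale=0.5, size=100_000)  # fresh draws from the same ensemble
counts = np.bincount(respond(new_stimuli), minlength=8)
print(counts / counts.sum())   # each level is used roughly 1/8 of the time
```

If the stimulus ensemble changes (for example, a different mean or variance), the same map no longer uses its levels equally, which is why the optimal mapping must track the stimulus statistics.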

Recent experimental designs have begun to test the efficient coding hypothesis in the context of adaptation. In these experiments, the distribution of a random time-varying stimulus, rather than a single stimulus parameter, is varied. This design allows one to examine the coding strategy that the neural system uses to represent an entire stimulus distribution, and to relate changes in coding strategy to changes in stimulus distribution. The framework underlying these experiments presents the task of adaptation as essentially an inference problem: the timescale for the change in coding strategy cannot be shorter than the time required for the system to “learn” the new distribution.

In order to analyze experiments of this type, one must describe the coding strategy of the system during changes in the stimulus distribution. To do so, it is necessary to reduce the system’s entire input-output mapping to a simpler characterization. Linear-nonlinear (LN) models have often been successful in capturing changes in the computation of an adapting system (Box 2).

Capturing adaptive computation (Box 2).

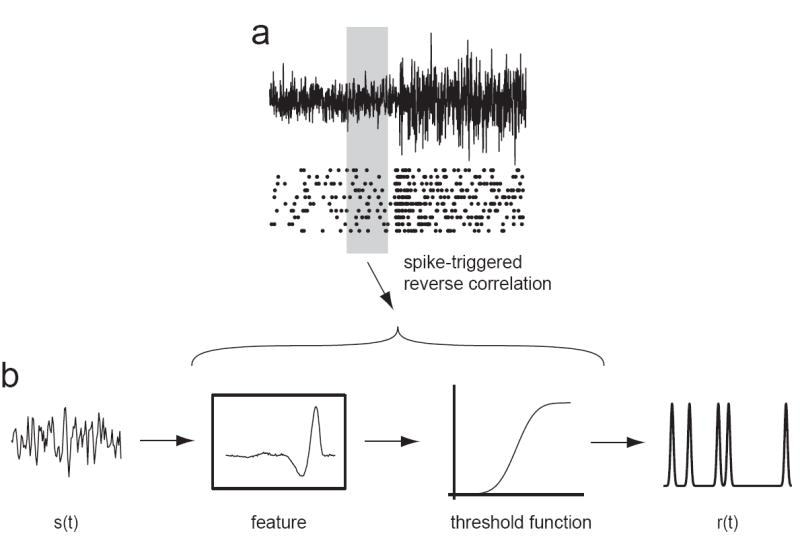

To effectively characterize adaptation, one must begin with a characterization of computation. A powerful method for characterizing neural computation is to approximate the neural system as first linearly filtering the stimulus by an identified relevant feature or set of features, and then to generate spikes according to a nonlinear function of the stimulus’ similarity to the feature(s) (figure 2). Models of this simple type are known as linear/nonlinear (LN) models, and have had considerable success in capturing some aspects of neural processing [26].
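A minimal simulation of an LN model and its recovery by reverse correlation is sketched below. It assumes a hypothetical biphasic temporal filter, a logistic nonlinearity and Gaussian white noise input. For such input the spike-triggered average (STA) is proportional to the model's linear feature, so the normalized STA should overlap almost perfectly with the true filter.

```python
import numpy as np

rng = np.random.default_rng(1)
n_t, n_lag = 200_000, 20                          # time bins and filter length (assumed)
stim = rng.normal(size=n_t)                        # Gaussian white noise stimulus

# Hypothetical "true" relevant feature: a biphasic temporal filter of unit norm
lags = np.arange(n_lag)
true_filter = np.sin(2 * np.pi * lags / n_lag) * np.exp(-lags / 6.0)
true_filter /= np.linalg.norm(true_filter)

# Row t of X holds stim[t], stim[t-1], ..., stim[t-n_lag+1]; early rows dropped to avoid wrap-around
X = np.stack([np.roll(stim, k) for k in range(n_lag)], axis=1)[n_lag:]

# LN model: project onto the feature, then apply a sigmoidal nonlinearity
similarity = X @ true_filter
p_spike = 0.2 / (1.0 + np.exp(-3.0 * (similarity - 1.0)))   # spike probability per bin
spikes = rng.random(len(p_spike)) < p_spike

# Reverse correlation: the spike-triggered average (STA) estimates the feature
sta = X[spikes].mean(axis=0)
overlap = float(sta @ true_filter / np.linalg.norm(sta))
print("overlap of STA with true feature:", round(overlap, 3))   # close to 1
```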

In this simplified framework, adaptation to stimulus statistics might affect the features that linearly filter the stimulus, or the nonlinear function that determines the probability to fire (figure 2B). A change in response gain (without a change in the feature) can be manifested as either a vertical scaling of the linear feature or a horizontal scaling of the nonlinearity. Changes in the feature for different stimulus conditions occur, for example, in the retina; in low light levels, the receptive fields of retinal ganglion cells show increased temporal integration and decreased inhibitory surround [25].
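The equivalence between the two descriptions of a pure gain change can be checked directly. In this sketch, which uses a hypothetical logistic gain curve and an assumed gain factor `g`, scaling the feature by `g` gives exactly the same firing probabilities as keeping the feature and dividing the nonlinearity's threshold and width by `g`.

```python
import numpy as np

def gain_curve(x, x0=1.0, width=0.5):
    """Hypothetical sigmoidal nonlinearity: firing probability vs. filtered stimulus x."""
    return 1.0 / (1.0 + np.exp(-(x - x0) / width))

rng = np.random.default_rng(2)
feature = rng.normal(size=10)            # hypothetical relevant feature
snippets = rng.normal(size=(5, 10))      # five stimulus segments
g = 0.4                                  # assumed gain reduction after adaptation

# (1) Gain change expressed in the feature: vertical scaling by g
r1 = gain_curve(snippets @ (g * feature))
# (2) Same gain change expressed in the nonlinearity: horizontal stretch of its input axis by 1/g
r2 = gain_curve(snippets @ feature, x0=1.0 / g, width=0.5 / g)
print(np.allclose(r1, r2))   # True
```

Because the two are indistinguishable from input-output data alone, a fitted LN model must fix the normalization of either the feature or the nonlinearity before gain changes can be compared across conditions.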

These potentially time-dependent components can be estimated by reverse correlation, either by conditioning spikes on the phase of their arrival time within the stimulus cycle (when the stimulus changes periodically), or by using an adaptive filter [65].
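The phase-conditioned approach can be sketched as follows, assuming a stimulus whose standard deviation switches between 1 and 3 every half period and a hypothetical LN neuron whose filter and threshold never change. Computing the STA separately for spikes in each phase bin shows that, in this sketch, even a non-adapting model yields phase-dependent estimates (a larger STA amplitude in the high-variance half), so time-dependent components are best interpreted relative to such a baseline.

```python
import numpy as np

def phase_binned_sta(stim, spikes, period, n_bins, n_lag):
    """STA computed separately for spikes falling in each phase bin of a
    periodically repeating stimulus cycle."""
    phase_bin = (np.arange(len(stim)) % period) * n_bins // period
    stas = np.zeros((n_bins, n_lag))
    for b in range(n_bins):
        idx = np.nonzero(spikes & (phase_bin == b))[0]
        idx = idx[idx >= n_lag - 1]                        # keep spikes with a full history
        # Row j: stim[t_j], stim[t_j - 1], ..., stim[t_j - n_lag + 1]
        windows = np.stack([stim[idx - k] for k in range(n_lag)], axis=1)
        stas[b] = windows.mean(axis=0)
    return stas

# Hypothetical switching experiment: standard deviation 1 in the first half of each cycle, 3 in the second
rng = np.random.default_rng(4)
period, n_cycles, n_lag = 1000, 200, 10
sigma = np.where(np.arange(period) < period // 2, 1.0, 3.0)
stim = np.tile(sigma, n_cycles) * rng.normal(size=period * n_cycles)

# Non-adapting LN neuron: fixed filter and fixed sigmoidal threshold
filt = np.exp(-np.arange(n_lag) / 3.0)
filt /= np.linalg.norm(filt)
drive = np.convolve(stim, filt)[: len(stim)]               # drive[t] = sum_k filt[k] * stim[t - k]
spikes = rng.random(len(stim)) < 1.0 / (1.0 + np.exp(-(drive - 2.0) / 0.3))

stas = phase_binned_sta(stim, spikes, period, n_bins=4, n_lag=n_lag)
norms = np.linalg.norm(stas, axis=1)
print(norms)   # bins 2 and 3 (high variance) have larger STA amplitude
```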

Systems adapt to a variety of stimulus statistics

The simplest instantiation of a stimulus probability distribution is one in which the stimulus takes one of two possible values. If one of these values is presented more frequently than the other, a system may adapt to give a stronger response to the rarer stimulus. This effect was observed in cat primary auditory cortex (A1) in response to two tones presented with different probabilities [4,5]. A potential substrate for this effect was demonstrated in cultured networks of cortical neurons by Eytan et al. [6], who varied the relative frequency of current-injection inputs applied at different locations. Such stimulus-specific adaptation may implement a kind of novelty detection, in which the strength of the response is adjusted according to the information it carries.
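One simple way to obtain stronger responses to rare stimuli is sketched below, assuming (hypothetically) that each stimulus identity drives its own depressing input channel. This is an illustrative toy, not the model used in [4-6]: the response equals the channel's available resource, which is depleted by a fraction `u` at each presentation and recovers exponentially with time constant `tau`, measured in presentations.

```python
import numpy as np

def ssa_responses(sequence, u=0.5, tau=5.0):
    """Responses of a hypothetical channel-specific depression model.

    Each stimulus identity has its own resource x in [0, 1]. The response to a
    presentation equals the current resource of the stimulated channel, which then
    drops by a fraction u; all channels recover toward 1 between presentations."""
    x = np.ones(sequence.max() + 1)
    decay = np.exp(-1.0 / tau)
    out = np.empty(len(sequence))
    for i, s in enumerate(sequence):
        out[i] = x[s]
        x[s] *= 1.0 - u                       # depression of the stimulated channel
        x = 1.0 - (1.0 - x) * decay           # exponential recovery of every channel
    return out

rng = np.random.default_rng(3)
seq = (rng.random(5000) < 0.1).astype(int)    # tone 1 rare (10%), tone 0 common (90%)
resp = ssa_responses(seq)
print("common:", round(resp[seq == 0].mean(), 3), "rare:", round(resp[seq == 1].mean(), 3))
```

The rare channel is stimulated less often and so has more time to recover, which is enough to make its average response larger.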

More generally, the efficient coding hypothesis might be taken to suggest that stimulus encoding is sensitive to the variations in stimulus statistics seen in natural stimuli. The properties of natural visual scenes, in particular, have been extensively studied [7]. A simple model for natural stimuli consists of local Gaussian fluctuations whose mean and variance are modulated on longer time or length scales and follow long-tailed distributions [8].
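The sketch below, with hypothetical parameters, generates such a signal: Gaussian samples whose mean and standard deviation are held fixed within blocks and redrawn between blocks, with the standard deviation drawn from a long-tailed (log-normal) distribution. Within a block the distribution is Gaussian, with kurtosis near 3, while the pooled signal is strongly long-tailed, with kurtosis well above 3.

```python
import numpy as np

rng = np.random.default_rng(6)
n_blocks, block = 200, 1000              # number and length of "local" segments (assumed)

# Local mean and standard deviation are constant within a block and redrawn between blocks;
# the standard deviation is log-normal, i.e. long-tailed.
local_mean = np.repeat(rng.normal(0.0, 0.5, size=n_blocks), block)
local_sd = np.repeat(np.exp(rng.normal(0.0, 0.8, size=n_blocks)), block)
signal = local_mean + local_sd * rng.normal(size=n_blocks * block)

def kurtosis(x):
    """Fourth standardized moment (3 for a Gaussian)."""
    z = x - x.mean()
    return float(np.mean(z**4) / np.mean(z**2) ** 2)

print("within one block:", round(kurtosis(signal[:block]), 2))   # near 3
print("pooled signal:  ", round(kurtosis(signal), 2))            # far above 3
```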

Inspired by this description of natural stimuli, a class of experiments has examined adaptation to a change in the mean or variance of a Gaussian white noise stimulus. Using a switching paradigm in which a random stimulus is chosen from a distribution whose parameters change periodically between two values (figure 2A), Smirnakis et al. [9] showed that retinal ganglion cells exhibit an adaptive change in firing rate when the variance of a flickering light stimulus changes. In the fly motion sensitive neuron H1, Brenner et al. [10] computed an LN model for different variances of a randomly varying velocity stimulus. They showed that the nonlinear gain function adapted such that the scaling of the stimulus axis was normalized by the stimulus standard deviation, and that this serves to maximize information transmission about the stimulus. Further, this gain change occurs in ~100 ms, rapidly maximizing information transmission during continuous changes in stimulus variance [11]. A separate slower adaptive process modulates the overall firing rate on much longer timescales. Analogously, retinal ganglion cells display contrast gain control [12-16], which occurs on a much faster timescale than rate changes due to contrast adaptation [9,14].
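The information argument can be illustrated with a noiseless quantized encoder, for which the information about the stimulus equals the entropy of the response. In this sketch, where the logistic gain curve and the 8 response levels are assumptions rather than the H1 model, a curve tuned to a standard deviation of 1 wastes most of its range when the standard deviation rises to 4, whereas a curve whose stimulus axis is rescaled by the current standard deviation keeps using all levels.

```python
import numpy as np

def output_entropy(levels, n_levels):
    """Entropy (bits) of the discrete response distribution."""
    p = np.bincount(levels, minlength=n_levels) / len(levels)
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def quantized_response(s, scale, n_levels=8):
    """Hypothetical noiseless encoder: logistic function of s / scale, quantized."""
    r = 1.0 / (1.0 + np.exp(-1.7 * s / scale))   # logistic approximating a Gaussian CDF
    return np.minimum((r * n_levels).astype(int), n_levels - 1)

rng = np.random.default_rng(5)
sigma_old, sigma_new = 1.0, 4.0
s_new = sigma_new * rng.normal(size=100_000)     # stimuli after the variance increase

fixed = output_entropy(quantized_response(s_new, scale=sigma_old), 8)     # curve not updated
adapted = output_entropy(quantized_response(s_new, scale=sigma_new), 8)   # axis rescaled by sigma
print(f"fixed: {fixed:.2f} bits, adapted: {adapted:.2f} bits (maximum 3 bits)")
```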

Figure 2. Neuronal computation modeled using a linear/nonlinear (LN) model.

The linear filter(s) and nonlinear threshold function of the model are estimated by reverse correlation between spikes and stimuli. (a) The spike times of a neuron are recorded in response to some stimulus. In this example, a filtered Gaussian white noise stimulus is presented; the variance of the stimulus changes periodically between two values, but the white noise is generated anew in each variance cycle. A raster plot of spike times produced in response to several different instantiations of the white noise process is shown below. The stimulus preceding each spike is used to find the feature(s) and threshold function of the LN model by reverse correlation. (b) Computation is then modeled by linearly filtering incoming stimuli with the previously determined feature(s). The filtered stimuli are then passed through the threshold function, which gives the probability of firing an action potential as a function of time. To examine how the LN model changes with the variance context, spikes are sampled from a particular time bin relative to the changes in variance (shaded box) to compute time-dependent features and threshold functions.

Adaptation to stimulus variance has also been observed in several higher brain regions. In rat barrel cortex, when the variance of white-noise whisker motion was changed, the relevant features remained approximately unchanged, but the gain curves were rescaled by the stimulus standard deviation [17]. In field L, the avian analog of primary auditory cortex, Nagel and Doupe [18] observed rapid changes in filters and gain curves as well as a slower modulation of the overall firing rate, similar to observations in H1 and RGCs, as the distribution of sound intensity was varied. While individual neurons in inferior colliculus responding to sound amplitudes showed a variety of response changes during adaptation, Dean et al. [19] used Fisher information to demonstrate that the population as a whole shifted its responses to best encode the high-probability sound levels, even when the distributions were relatively complex (for example, bimodal). Neural responses in macaque inferior temporal cortex adapted to the width of the distribution of image stimuli along arbitrarily chosen stimulus directions [20].
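A minimal sketch of the Fisher information measure is given below for a hypothetical population of independent Poisson neurons with sigmoidal rate-level functions. For a neuron with mean rate f(s) counted over a window T, J(s) = T f'(s)^2 / f(s), and the information of independent neurons adds. Shifting the thresholds toward louder levels moves the peak of the population Fisher information toward those levels. All parameter values are illustrative and are not taken from [19].

```python
import numpy as np

def population_fisher_info(levels, thresholds, slope=0.5, r_max=100.0, r_spont=1.0, T=0.1):
    """Fisher information J(s) = sum_i T * f_i'(s)**2 / f_i(s) for independent Poisson neurons."""
    levels = np.asarray(levels, dtype=float)[:, None]
    sig = 1.0 / (1.0 + np.exp(-slope * (levels - thresholds[None, :])))
    f = r_spont + r_max * sig                      # mean rate (spikes/s); r_spont keeps f > 0
    df = r_max * slope * sig * (1.0 - sig)         # derivative of the rate-level function
    return (T * df**2 / f).sum(axis=1)             # independent neurons: information adds

levels = np.linspace(20.0, 100.0, 801)             # hypothetical sound levels (dB)
offsets = np.array([-5.0, 0.0, 5.0])
J_quiet = population_fisher_info(levels, 40.0 + offsets)   # thresholds suited to quiet sounds
J_loud = population_fisher_info(levels, 70.0 + offsets)    # thresholds shifted after adaptation
peak_quiet = float(levels[np.argmax(J_quiet)])
peak_loud = float(levels[np.argmax(J_loud)])
print("peak of J before / after shift:", peak_quiet, peak_loud)
```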

Beyond white noise

In the LGN, Mante et al. [21] examined the interaction between adaptation to the mean and the variance (mean luminance and contrast) of drifting grating stimuli and found that the changes in filters due to luminance and contrast adaptation are independent. Suggestively, their analysis of natural images also showed luminance and contrast to be independent. The same group found no adaptation to higher-order statistics (the skewness and kurtosis) of a random checkerboard stimulus in LGN [22]. However, Hosoya et al. [23] generalized a previous finding of rate adaptation to the spatial scale of a flickering checkerboard [9], demonstrating adaptation of RGCs to a variety of arbitrary spatiotemporal correlations in visual stimuli, and showed that the new filters that evolve after exposure to these correlations act to remove the correlations and so perform predictive coding [24,25].
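The predictive coding principle [24] can be sketched for a temporally correlated stimulus, assuming (for illustration only) a first-order autoregressive stimulus with a known correlation coefficient `a`. Subtracting the prediction from the recent past removes the correlation and reduces the variance that must be transmitted. This shows only the principle; it is not a model of how the retina learns its filters [23].

```python
import numpy as np

def lag1_autocorr(x):
    """Correlation between successive samples."""
    x = x - x.mean()
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

rng = np.random.default_rng(7)
a = 0.9                                   # assumed temporal correlation of the adapting stimulus
n = 100_000
noise = rng.normal(size=n)
stim = np.empty(n)
stim[0] = noise[0]
for t in range(1, n):
    stim[t] = a * stim[t - 1] + noise[t]  # AR(1) stimulus: strongly correlated in time

# Predictive-coding filter: transmit only the part the recent past does not predict
residual = stim[1:] - a * stim[:-1]

print("input lag-1 correlation: ", round(lag1_autocorr(stim), 3))       # about a
print("output lag-1 correlation:", round(lag1_autocorr(residual), 3))   # about 0
print("variance ratio:", round(residual.var() / stim.var(), 3))         # about 1 - a**2
```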

Determining the effect of complex changes in stimulus distribution is difficult, because non-Gaussian stimuli introduce biases into white noise analysis [26], and these biases are hard to separate from observed dependences of sampled receptive fields on the stimulus ensemble [27-30]. Sharpee et al. [31] introduced an information-theoretic reverse correlation method that finds the stimulus dimensions that maximize the mutual information between spiking responses and the stimulus. This method was used to find significant differences between the features encoded by V1 neurons in a white noise ensemble and in a natural stimulus ensemble [32].
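The objective behind maximally informative dimensions [31] can be sketched in two dimensions. For a candidate direction, the information per spike is I = Σ P(x|spike) log2[P(x|spike)/P(x)], where x is the projection of the stimulus onto that direction. Here it is estimated with histograms and maximized by brute-force search over angles, rather than by the optimization procedure used in [31]. The correlated, Laplace-distributed stimulus and the spiking rule are hypothetical.

```python
import numpy as np

def info_per_spike(direction, stim, spikes, n_bins=20):
    """Histogram estimate of I = sum_x P(x|spike) log2(P(x|spike) / P(x)),
    where x is the projection of the stimulus onto `direction`."""
    x = stim @ direction
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1))   # equal-occupancy bins
    bins = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)
    p_x = np.bincount(bins, minlength=n_bins) / len(x)
    p_x_spike = np.bincount(bins[spikes], minlength=n_bins) / spikes.sum()
    nz = p_x_spike > 0
    return float(np.sum(p_x_spike[nz] * np.log2(p_x_spike[nz] / p_x[nz])))

def unit(angle_deg):
    a = np.radians(angle_deg)
    return np.array([np.cos(a), np.sin(a)])

rng = np.random.default_rng(10)
n = 100_000
z = rng.laplace(size=(n, 2))                          # sparse, non-Gaussian sources
stim = z @ np.array([[1.0, 0.5], [0.0, 1.0]])         # mixing makes the stimulus axes correlated

true_angle = 30.0                                     # hypothetical relevant feature (degrees)
spikes = rng.random(n) < 1.0 / (1.0 + np.exp(-6.0 * (stim @ unit(true_angle) - 1.5)))

angles = np.arange(0.0, 180.0, 2.0)
info = np.array([info_per_spike(unit(a), stim, spikes) for a in angles])
best_angle = float(angles[np.argmax(info)])
print("most informative direction:", best_angle, "degrees")   # near 30
```

Because the spike depends on the stimulus only through its projection onto the true feature, no other one-dimensional projection can carry more information, so the true direction is a maximum of this objective whatever the stimulus distribution.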

Multiple timescales

How might the goal of maintaining efficient information transmission constrain the dynamics of adaptation? In tracking changing stimulus statistics, there are two relevant timescales for any system: the characteristic timescale of changes in the stimulus distribution, and the minimum time required by an ideal observer to estimate the parameters of the new distribution. The first timescale is established by the environment, while the second is determined by statistics and sets a lower bound on how quickly any adapting system could estimate parameters of the new distribution. Given these constraining timescales, an adapting system should choose an appropriate estimation timescale for computing local stimulus statistics. For example, consider a system that adapts to the local stimulus mean. If this system estimates the local mean by averaging over only a few samples, the system would amplify noise and transmit little information about its stimulus [33]. Conversely, a system that averages over a timescale much longer than the timescale of changes in stimulus mean will not be optimally adapted to the local stimulus ensemble.
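The tradeoff can be made concrete with a toy example in which all parameters are assumed. A stimulus whose mean alternates between 0 and 1 every 200 samples, buried in unit-variance noise, is tracked by a causal running average. A 1-sample window passes on all of the noise, a 2000-sample window cannot follow the switches, and an intermediate window gives a much smaller mean squared error than either.

```python
import numpy as np

def causal_running_mean(x, window):
    """Mean of the most recent `window` samples, including the current one (fewer at the start)."""
    c = np.concatenate(([0.0], np.cumsum(x)))
    t = np.arange(1, len(x) + 1)
    start = np.maximum(t - window, 0)
    return (c[t] - c[start]) / (t - start)

rng = np.random.default_rng(8)
period, n = 200, 200_000                                   # assumed switching period and duration
true_mean = ((np.arange(n) // period) % 2).astype(float)   # local mean alternates 0, 1
x = true_mean + rng.normal(0.0, 1.0, size=n)

mse = {}
for window in (1, 20, 2000):
    est = causal_running_mean(x, window)
    mse[window] = float(np.mean((est - true_mean) ** 2))
    print(f"window {window:5d}: MSE {mse[window]:.3f}")
```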

This argument assumes that the neural system can choose an adaptation timescale to match the dynamics of stimulus statistics. Experimentally, it appears that two separable phenomena describe the dynamics of adaptation to variance or contrast, at least in early visual and auditory systems. As discussed above, the first of these components rapidly rescales the system’s input-output gain following a change in stimulus statistics [11-16,18,34]. The second component is a slow change in the system’s mean firing rate [9,11,13,15,18,35,36]. It is not yet clear what relationship, if any, exists between these two phenomena. In the fly H1 neuron, these effects appear to be independent [11], but there is no a priori reason to expect such independence in general. At least in some cases, the adaptive rescaling of input-output functions in rat barrel cortex appears to follow the timescale of slow rate adaptation [17]. Perhaps consistent with this, Webber and Stanley found that transient and steady-state adaptation in this area could be modeled with a single state variable [37].

The timescale of the fast gain change is often on the order of the timescale of the system’s relevant feature, and does not appear to depend on the timescale of switches in the stimulus ensemble. In at least some cases, fast gain changes in response to changes in stimulus variance or correlation time are a consequence of the system’s static nonlinearity, with no change in system parameters [38-44]. Whether such effects should rightly be called adaptation is something of a philosophical question. Finite-dimensional stimulus/response characterizations such as LN models are certainly limited, and adaptation may appear as a change in their parameters. On the other hand, these models may in some cases capture all of the stimulus dependence if they are appropriately extended.
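A minimal illustration of how a fixed nonlinearity can masquerade as gain adaptation is sketched below, assuming a hypothetical one-dimensional saturating system y = tanh(x), which is much simpler than the mechanisms discussed in [38-44]. The best-fitting linear gain falls as the input standard deviation grows, although no parameter of the system changes. For Gaussian input this slope equals the average of tanh'(x), which decreases with input variance.

```python
import numpy as np

rng = np.random.default_rng(9)

def apparent_gain(sigma, n=200_000):
    """Least-squares linear gain of a FIXED system y = tanh(x) probed with
    zero-mean Gaussian inputs of standard deviation sigma."""
    x = sigma * rng.normal(size=n)
    y = np.tanh(x)                          # the system itself never changes
    return float(np.dot(x, y) / np.dot(x, x))

gains = {}
for sigma in (0.25, 1.0, 4.0):
    gains[sigma] = apparent_gain(sigma)
    print(f"input sd {sigma}: apparent gain {gains[sigma]:.3f}")
```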

Slower changes in overall excitability have variable dynamics and may be subserved by a wide variety of mechanisms. Spike frequency adaptation (SFA) occurs over many timescales in cortical neurons [45], and has been analytically described for simple model neurons [46,47]. By specifying only the initial and steady-state firing rate-input (f-I) curve as well as the effective time constant, SFA can be described without knowledge of particular neuronal dynamics [48,49]. Slow currents have been implicated in altering the gain of f-I curves, allowing neurons to remain sensitive to input fluctuations at high mean currents [50-52].
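A generic firing-rate model with one slow adaptation variable shows how onset and steady-state f-I curves, together with a single effective time constant, summarize SFA. This is a sketch with hypothetical parameters, not the specific formulations of [46-49].

```python
import numpy as np

def adapting_rate(I, g_a=1.0, tau_a=100.0, dt=1.0, n_steps=1000):
    """Rate model with a slow adaptation variable a (hypothetical parameters):
    r = max(0, I - a),  tau_a * da/dt = -a + g_a * r  (forward Euler)."""
    a = 0.0
    rates = np.empty(n_steps)
    for k in range(n_steps):
        r = max(0.0, I - a)
        rates[k] = r
        a += dt / tau_a * (-a + g_a * r)
    return rates

rates = adapting_rate(I=1.0)
print("onset rate:", rates[0])                        # 1.0
print("steady-state rate:", round(rates[-1], 4))      # approximately I / (1 + g_a) = 0.5
```

For constant suprathreshold input, this toy model has the onset f-I curve r = I and the steady-state curve r = I / (1 + g_a), and it relaxes between them with the effective time constant tau_a / (1 + g_a).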

Power law adaptation

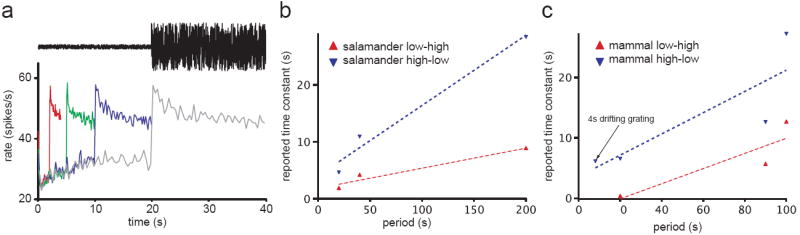

In some cases, the dynamics of slow changes in excitability might be matched to the dynamics of stimulus changes. Many researchers using a switching paradigm with a single switching timescale have reported the dynamics of this slow gain change as an exponential process with a fixed timescale [5,9,13-15,35]. However, in the fly visual neuron H1, when the time between stimulus changes was varied, the apparent adaptation timescale scaled proportionally [figure 3A, 11,53]. Thus, the dynamics of slow gain changes in fly H1 are consistent with a power-law rather than an exponential process [36,54]. Power-law dynamics are significant because there is no privileged timescale: the dynamics are invariant with respect to changes in temporal scale, and such a system could therefore adjust its effective adaptation timescale to the environment.
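The link between power-law dynamics and an apparent timescale that grows with the switching period can be checked numerically. In this sketch, where the exponent, window lengths and fitting grid are assumptions, a single exponential is fitted by least squares to a relaxation observed over windows of increasing length, which stand in for increasing switching periods. For a power law t^(-alpha) the fitted time constant grows in proportion to the window; for a true exponential it stays fixed.

```python
import numpy as np

def best_exponential_tau(t, y, taus):
    """Least-squares fit of y ~ A * exp(-t / tau) by grid search over tau,
    with the amplitude A solved in closed form for each candidate tau."""
    E = np.exp(-t[None, :] / taus[:, None])                 # shape (n_taus, n_samples)
    A = (E @ y) / np.einsum("ij,ij->i", E, E)               # optimal amplitude for each tau
    sse = ((y[None, :] - A[:, None] * E) ** 2).sum(axis=1)  # residual sum of squares
    return float(taus[np.argmin(sse)])

taus = np.logspace(-2, 4, 5000)        # candidate time constants (assumed grid)
alpha = 0.5                            # assumed power-law exponent
results = {}
for period in (5.0, 10.0, 20.0, 40.0):
    # Relaxation observed over a window that scales with the switching period (assumed)
    t = np.linspace(0.01 * period, period, 200)
    tau_pow = best_exponential_tau(t, t ** -alpha, taus)       # power-law relaxation
    tau_exp = best_exponential_tau(t, np.exp(-t / 2.0), taus)  # exponential relaxation, tau = 2
    results[period] = (tau_pow, tau_exp)
    print(f"period {period:4.0f}: power law -> tau {tau_pow:.3f}, exponential -> tau {tau_exp:.3f}")
```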

Figure 3. The apparent timescale of adaptation depends on the dynamics of changes in the stimulus ensemble.

(a) In this example of a switching experiment, the variance of a Gaussian white noise stimulus is changed periodically between two values (top). Following an increase in stimulus variance, the average firing rate of a motion sensitive H1 cell in the fly visual system (bottom) increased transiently and then relaxed towards a baseline (gray trace). As the period of switches in stimulus variance was increased, the time constant of the slow relaxation in firing rate increased proportionally from 5s period (red trace) to 40s period (gray trace). (b) The reported time constant of slow relaxation in firing rate [9] or input currents [13] of RGCs or input currents to bipolar cells [60] following an increase (red) or decrease (blue) in contrast of a full field flickering stimulus increases with increasing switching period. Where Rieke [60] reports a sum of exponentials, we have plotted the time constant of the best fitting single exponential. In this and (c), dashed lines are linear regression fits. (c) The reported time constant of slow relaxation in firing rate of rabbit RGCs [9,34] or the firing rate [16] or input currents [35] of guinea pig RGCs following an increase (red) or decrease (blue) in contrast of a full field flickering stimulus increases with increasing switching period. An arrow identifies the exceptional stimulus [35] in which a non-periodic 4s sinusoid grating was presented instead of a flickering field. Where Brown et al. [34] report time to 66% recovery, we plot the time constant of an exponential with equal time to 66% recovery. The results of (b-c) are expected if the observed systems modify their rate of gain change according to stimulus history, and are consistent with power law, not exponential, dynamics.
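The conversion used for [34] can be written out explicitly, assuming that "66% recovery" denotes the time t_66 at which an exponential recovery 1 − e^(−t/τ) has completed 66% of the total change:

```latex
1 - e^{-t_{66}/\tau} = 0.66
\quad\Longrightarrow\quad
\tau = \frac{t_{66}}{\ln(1/0.34)} \approx \frac{t_{66}}{1.08}
```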

Although studies in other systems have not explicitly tested for multiple time scales, results from studies of temporal contrast adaptation in salamander and mammalian (rabbit and guinea pig) retina suggest that the apparent time constant of the slow gain change indeed varies as a function of the period between stimulus switches (figure 3B-C).

Few studies provide direct evidence for the biophysical mechanisms underlying multiple timescale dynamics. Power law dynamics can be approximated by a cascade of many exponential processes [36,54,55]. Thus, a leading hypothesis is that multiple timescale dynamics are the result of a cascade of exponential processes in a cell or network. Multiple timescales exist in the multiplicity of channel dynamics present in single neurons [45]. Even in single channels, power-law recovery from inactivation has been shown in isolated NaII and NaIIa channels [56]. This behavior is captured by a stochastic sodium channel model that includes a Markov chain of multiple inactivation states [55].
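A cascade of exponential processes with log-spaced time constants, assumed here to be 10 per decade from 10^-3 to 10^5 and weighted by τ^(-α), approximates a power law over the range between its shortest and longest timescales. The continuous version of this sum is exact, since ∫ τ^(-α) e^(-t/τ) d(ln τ) = Γ(α) t^(-α). The sketch below checks the log-log slope numerically.

```python
import numpy as np

alpha = 0.5                              # assumed power-law exponent
taus = np.logspace(-3, 5, 81)            # cascade time constants, 10 per decade (assumed)
weights = taus ** -alpha                 # weight each process by tau^(-alpha)

def cascade(t):
    """Sum of exponential relaxations with log-spaced time constants."""
    t = np.atleast_1d(np.asarray(t, dtype=float))[:, None]
    return (weights * np.exp(-t / taus)).sum(axis=1)

t = np.logspace(0, 2, 9)                 # probe times 1..100, far from both ends of the cascade
slope = np.polyfit(np.log10(t), np.log10(cascade(t)), 1)[0]
print("log-log slope:", round(float(slope), 3))   # close to -alpha
```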

Intrinsic properties or circuit mechanisms?

A leading candidate for a mechanism of contrast gain control in V1 is divisive normalization, in which the output of a given neuron is modulated by feedback from the responses of neurons with similar receptive fields [57,58]. However, many of the mechanisms we have discussed here may operate at the level of single neurons [59]. Recent work has made considerable progress in elucidating where in particular circuits adaptation occurs. In salamander retinal ganglion cells, rapid contrast adaptation is partially inherited from the adaptation of synaptic inputs [13,60], while a second component is contributed by intrinsic mechanisms [61]. Manookin and Demb [35] also find that recovery from high-contrast stimulation in guinea pig RGCs, characterized by a slow “afterhyperpolarization,” is mediated in large part by inherited changes in synaptic inputs, with an additional intrinsic component. In mouse retina, adaptation to dim mean background luminance occurs in rod photoreceptors and at the rod bipolar-to-AII amacrine cell synapse [62]. In this case, the dominant site of adaptation was predicted by the likely site of response saturation due to convergence of signals in the retinal circuitry. In rat barrel cortex, Katz et al. [63] showed that a subthreshold component of adaptation is whisker-specific, while responses in barrel cortex are multi-whisker, implying that the adaptation occurs in intracortical or thalamocortical connections rather than through intrinsic mechanisms in the barrel cortical neurons.
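Divisive normalization is often written, in steady state, as r_i = d_i^n / (c^n + Σ_j d_j^n), where d_i is the feedforward drive to neuron i and the sum runs over a normalization pool. The sketch below assumes n = 2, c = 1 and a pool of three hypothetical neurons, and it ignores the dynamics of the feedback. Increasing stimulus contrast raises all responses sublinearly while leaving their ratios unchanged, which is the signature of contrast gain control.

```python
import numpy as np

def normalize(drive, c=1.0, n=2.0):
    """Steady-state divisive normalization (assumed form and constants):
    r_i = d_i**n / (c**n + sum_j d_j**n), pooling over all neurons in `drive`."""
    d = np.asarray(drive, dtype=float) ** n
    return d / (c**n + d.sum())

drive = np.array([1.0, 0.5, 0.25])     # hypothetical drives to three neurons with similar receptive fields
responses = {}
for contrast in (0.5, 2.0, 8.0):
    responses[contrast] = normalize(contrast * drive)
    r = responses[contrast]
    print(contrast, r.round(3), "r0/r1 =", round(r[0] / r[1], 3))
```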

Conclusion

A growing body of evidence suggests that representations at all levels throughout sensory processing pathways are plastic, depending on the recent history of the stimulus, on a range of timescales varying from virtually instantaneous to timescales more typically associated with synaptic changes. This plasticity can increase the information transmission rate of the signal. One would thus like to determine whether constraints on timescales are imposed by the time required to learn dynamic efficient representations. It is becoming clear that some components of what we think of as advanced processing may be occurring at low levels. Furthermore, some types of sophisticated apparent learning effects may be a result of intrinsic nonlinearities. A view of sensory systems as a simple feed-forward relay of filtered sensory information from transducers to cortex is no longer appropriate. Instead, we must consider the statistics of the natural world, plasticity at multiple levels of sensory processing, and the consequences for encoding of sensory information at each stage.

Figure 1. An example of efficient coding.

(a) Given a stimulus distribution (top), and a fixed output range, the maximally efficient mapping from stimulus to response is the integral of the stimulus distribution (bottom), known as the cumulative distribution. This mapping transforms equal probability in the stimulus distribution (shaded areas) to equal response ranges, making all responses equally likely. (b) When the stimulus distribution changes, for example from p1 to p2 (top), the maximally efficient mapping also changes. The new mapping, r2(s), is the cumulative distribution of p2(s). In this case, both the mean and the variance of the stimulus distribution change, leading to a shift in the half-maximum and a decrease in gain (slope) of r2(s) compared to r1(s), respectively.
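For the special case of Gaussian stimulus distributions (an assumption; the caption does not specify the form of p1 and p2), with mean μ_i and standard deviation σ_i and Φ the standard normal cumulative distribution, the efficient mapping, its half-maximum and its slope are:

```latex
r_i(s) = \int_{-\infty}^{s} p_i(s')\,ds' = \Phi\!\left(\frac{s-\mu_i}{\sigma_i}\right),
\qquad r_i(\mu_i) = \tfrac{1}{2},
\qquad \left.\frac{dr_i}{ds}\right|_{s=\mu_i} = \frac{1}{\sigma_i\sqrt{2\pi}}
```

A change in mean therefore shifts the half-maximum, and an increase in variance lowers the gain in proportion to 1/σ_i.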

Acknowledgments

We would like to thank Felice Dunn, Gabe Murphy, Fred Rieke and Rebecca Mease for useful comments and discussions. This work was supported by a Burroughs Wellcome Fund Career Award at the Scientific Interface (AF, BW and BL), NIH T32EY-0731 (BW), NIH MSTP T32-07266 (BL), and ARCS (BL).

Footnotes

Annotations

Sharpee et al., 2006:

This paper applies a novel information-theoretic reverse correlation method [31] to obtain cat V1 receptive fields during viewing of a white noise stimulus and of natural movies. The receptive fields differed between the two cases in their low-pass properties, in such a way that similar low-frequency power was transmitted in both cases. The information transmitted about the stimulus was found to change on a timescale of ~100 s.

Maravall et al., 2006:

It is shown that adaptation to stimulus variance also occurs in neurons in primary somatosensory cortex; here, rat barrel cortex. Due to local phase invariance of the response, features and gain curves were extracted using covariance analysis. While features did not change with variance, the ranges of the gain curves were found to scale proportionally to the stimulus standard deviation in the majority of neurons, maintaining information transmission.

Nagel and Doupe, 2006:

The responses of neurons in avian field L are analyzed during changes in mean and variance of the randomly varying amplitude of a broadband noise input. The filters of an LN model change systematically with changes in the mean. As in [11] and [14], while the firing rate changes slowly following a change in stimulus variance, the filters and gain curves change nearly instantaneously.

Gilboa, Chen, and Brenner, J Neurosci 2005:

Following from the observation of Toib, Lyakhov, and Marom [56] that mammalian brain sodium channels display power-law like recovery from inactivation, this article models sodium channel inactivation using a Markov chain of one activation state and many inactivation states, where neuronal excitability is related to the fraction of available channels. The characteristic time scale of adaptation is found to depend on stimulus duration by a power-law scaling. The key aspect of this model is that the time scale of recovery depends on how its large pool of degenerate inactive states is populated, which in turn is affected by stimulus history.

Borst, Flanagin, and Sompolinsky, PNAS, 2005:

In the fly visual system, previous work has demonstrated that the H1 neuron adapts its input/output relationship as stimulus statistics change. These authors find that this seemingly complicated adaptation can be explained by the intrinsic properties of a Reichardt correlator motion detector, in which such rescaling is a consequence of the system nonlinearity acting on a multidimensional stimulus. The gain change appears in a reduced-dimensional model because other, correlated stimulus dimensions that are not included in the LN model nonetheless affect the response differently at different variances.

Hosoya et al. (2006):

This study explores adaptation to complex spatial or temporal correlations in salamander and rabbit retina, showing that the receptive fields of many RGCs change following stimulation with these correlated stimuli such that the response to novel stimuli is enhanced compared to the response to the adapting stimulus. The authors reject the hypothesis of fatigue of pattern matching cells in favor of a model of Hebbian plasticity at inhibitory amacrine-to-RGC synapses. This conclusion is intriguingly at odds with other studies that find adaptation in the retina to simpler correlations (mean, variance) in the absence of inhibitory transmission [34].

Manookin and Demb (2006):

This study adds to our understanding of the relationship between network and intrinsic components of temporal contrast adaptation in retina. The authors conclude that recovery from a high contrast stimulus, characterized by a slow afterhyperpolarization in guinea pig RGC membrane potential and firing rate, is inherited largely from presynaptic mechanisms. In addition, they find a small contribution of intrinsic mechanisms in the RGC. This work is consistent with previous findings in salamander retina [13,61].


References

- 1.Simoncelli EP. Vision and the statistics of the visual environment. Curr Opin Neurobiol. 2003;13:144–149. doi: 10.1016/s0959-4388(03)00047-3. [DOI] [PubMed] [Google Scholar]

- 2.Barlow HB. Possible principles underlying the transformation of sensory messages. In: Rosenblith W, editor. Sensory Communication. MIT Press; 1961. [Google Scholar]

- 3.Laughlin SB. The role of sensory adaptation in the retina. J Exp Biol. 1989;146:39–62. doi: 10.1242/jeb.146.1.39. [DOI] [PubMed] [Google Scholar]

- 4.Ulanovsky N, Las L, Nelken I. Processing of low-probability sounds by cortical neurons. Nat Neurosci. 2003;6:391–398. doi: 10.1038/nn1032. [DOI] [PubMed] [Google Scholar]

- 5.Ulanovsky N, Las L, Farkas D, Nelken I. Multiple time scales of adaptation in auditory cortex neurons. J Neurosci. 2004;24:10440–10453. doi: 10.1523/JNEUROSCI.1905-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Eytan D, Brenner N, Marom S. Selective adaptation in networks of cortical neurons. J Neurosci. 2003;23:9349–9356. doi: 10.1523/JNEUROSCI.23-28-09349.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Simoncelli EP, Olshausen BA. Natural image statistics and neural representation. Annu Rev Neurosci. 2001;24:1193–1216. doi: 10.1146/annurev.neuro.24.1.1193. [DOI] [PubMed] [Google Scholar]

- 8.Ruderman DL, Bialek W. Statistics of natural images: Scaling in the woods. Physical Review Letters. 1994;73:814–817. doi: 10.1103/PhysRevLett.73.814. [DOI] [PubMed] [Google Scholar]

- 9.Smirnakis SM, Berry MJ, Warland DK, Bialek W, Meister M. Adaptation of retinal processing to image contrast and spatial scale. Nature. 1997;386:69–73. doi: 10.1038/386069a0. [DOI] [PubMed] [Google Scholar]

- 10.Brenner N, Bialek W, de Ruyter van Steveninck R. Adaptive rescaling maximizes information transmission. Neuron. 2000;26:695–702. doi: 10.1016/s0896-6273(00)81205-2. [DOI] [PubMed] [Google Scholar]

- 11.Fairhall AL, Lewen GD, Bialek W, de Ruyter Van Steveninck RR. Efficiency and ambiguity in an adaptive neural code. Nature. 2001;412:787–792. doi: 10.1038/35090500. [DOI] [PubMed] [Google Scholar]

- 12.Shapley RM, Victor JD. The effect of contrast on the transfer properties of cat retinal ganglion cells. J Physiol. 1978;285:275–298. doi: 10.1113/jphysiol.1978.sp012571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Kim KJ, Rieke F. Temporal contrast adaptation in the input and output signals of salamander retinal ganglion cells. J Neurosci. 2001;21:287–299. doi: 10.1523/JNEUROSCI.21-01-00287.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Baccus SA, Meister M. Fast and slow contrast adaptation in retinal circuitry. Neuron. 2002;36:909–919. doi: 10.1016/s0896-6273(02)01050-4. [DOI] [PubMed] [Google Scholar]

- 15.Chander D, Chichilnisky EJ. Adaptation to temporal contrast in primate and salamander retina. J Neurosci. 2001;21:9904–9916. doi: 10.1523/JNEUROSCI.21-24-09904.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zaghloul KA, Boahen K, Demb JB. Contrast adaptation in subthreshold and spiking responses of mammalian Y-type retinal ganglion cells. J Neurosci. 2005;25:860–868. doi: 10.1523/JNEUROSCI.2782-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Maravall M, Petersen RS, Fairhall AL, Arabzadeh E, Diamond ME. Shifts in coding properties and maintenance of information transmission during adaptation in barrel cortex. PLoS Biol. 2007;5:e19. doi: 10.1371/journal.pbio.0050019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Nagel KI, Doupe AJ. Temporal processing and adaptation in the songbird auditory forebrain. Neuron. 2006;51:845–859. doi: 10.1016/j.neuron.2006.08.030. [DOI] [PubMed] [Google Scholar]

- 19.Dean I, Harper NS, McAlpine D. Neural population coding of sound level adapts to stimulus statistics. Nat Neurosci. 2005;8:1684–1689. doi: 10.1038/nn1541. [DOI] [PubMed] [Google Scholar]

- 20.De Baene W, Premereur E, Vogels R. Properties of shape tuning of macaque inferior temporal neurons examined using Rapid Serial Visual Presentation. J Neurophysiol. 2007 doi: 10.1152/jn.00741.2006. [DOI] [PubMed] [Google Scholar]

- 21.Mante V, Frazor RA, Bonin V, Geisler WS, Carandini M. Independence of luminance and contrast in natural scenes and in the early visual system. Nat Neurosci. 2005;8:1690–1697. doi: 10.1038/nn1556. [DOI] [PubMed] [Google Scholar]

- 22.Bonin V, Mante V, Carandini M. The suppressive field of neurons in lateral geniculate nucleus. J Neurosci. 2005;25:10844–10856. doi: 10.1523/JNEUROSCI.3562-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Hosoya T, Baccus SA, Meister M. Dynamic predictive coding by the retina. Nature. 2005;436:71–77. doi: 10.1038/nature03689. [DOI] [PubMed] [Google Scholar]

- 24.Srinivasan MV, Laughlin SB, Dubs A. Predictive coding: a fresh view of inhibition in the retina. Proc R Soc Lond B Biol Sci. 1982;216:427–459. doi: 10.1098/rspb.1982.0085. [DOI] [PubMed] [Google Scholar]

- 25.Atick Could information theory provide an ecological theory of sensory processing? Network. 1992 doi: 10.3109/0954898X.2011.638888. [DOI] [PubMed] [Google Scholar]

- 26. Simoncelli EP, Paninski L, Pillow J, Schwartz O. Characterization of neural responses with stochastic stimuli. In: Gazzaniga M, editor. The New Cognitive Neurosciences. Third Edition. MIT Press; 2004. pp. 327–338.

- 27. Theunissen FE, Sen K, Doupe AJ. Spectral-temporal receptive fields of nonlinear auditory neurons obtained using natural sounds. J Neurosci. 2000;20:2315–2331. doi: 10.1523/JNEUROSCI.20-06-02315.2000.

- 28. Woolley SM, Gill PR, Theunissen FE. Stimulus-dependent auditory tuning results in synchronous population coding of vocalizations in the songbird midbrain. J Neurosci. 2006;26:2499–2512. doi: 10.1523/JNEUROSCI.3731-05.2006.

- 29. David SV, Vinje WE, Gallant JL. Natural stimulus statistics alter the receptive field structure of V1 neurons. J Neurosci. 2004;24:6991–7006. doi: 10.1523/JNEUROSCI.1422-04.2004.

- 30. Victor JD, Mechler F, Repucci MA, Purpura KP, Sharpee T. Responses of V1 neurons to two-dimensional Hermite functions. J Neurophysiol. 2006;95:379–400. doi: 10.1152/jn.00498.2005.

- 31. Sharpee T, Rust NC, Bialek W. Analyzing neural responses to natural signals: maximally informative dimensions. Neural Comput. 2004;16:223–250. doi: 10.1162/089976604322742010.

- 32. Sharpee TO, Sugihara H, Kurgansky AV, Rebrik SP, Stryker MP, Miller KD. Adaptive filtering enhances information transmission in visual cortex. Nature. 2006;439:936–942. doi: 10.1038/nature04519.

- 33. Dunn FA, Rieke F. The impact of photoreceptor noise on retinal gain controls. Curr Opin Neurobiol. 2006;16:363–370. doi: 10.1016/j.conb.2006.06.013.

- 34. Brown SP, Masland RH. Spatial scale and cellular substrate of contrast adaptation by retinal ganglion cells. Nat Neurosci. 2001;4:44–51. doi: 10.1038/82888.

- 35. Manookin MB, Demb JB. Presynaptic mechanism for slow contrast adaptation in mammalian retinal ganglion cells. Neuron. 2006;50:453–464. doi: 10.1016/j.neuron.2006.03.039.

- 36. Thorson J, Biederman-Thorson M. Distributed relaxation processes in sensory adaptation. Science. 1974;183:161–172. doi: 10.1126/science.183.4121.161.

- 37. Webber RM, Stanley GB. Transient and steady-state dynamics of cortical adaptation. J Neurophysiol. 2006;95:2923–2932. doi: 10.1152/jn.01188.2005.

- 38. Borst A, Flanagin VL, Sompolinsky H. Adaptation without parameter change: Dynamic gain control in motion detection. Proc Natl Acad Sci U S A. 2005;102:6172–6176. doi: 10.1073/pnas.0500491102.

- 39. Brunel N, Chance FS, Fourcaud N, Abbott LF. Effects of synaptic noise and filtering on the frequency response of spiking neurons. Phys Rev Lett. 2001;86:2186–2189. doi: 10.1103/PhysRevLett.86.2186.

- 40. Fourcaud-Trocme N, Hansel D, van Vreeswijk C, Brunel N. How spike generation mechanisms determine the neuronal response to fluctuating inputs. J Neurosci. 2003;23:11628–11640. doi: 10.1523/JNEUROSCI.23-37-11628.2003.

- 41. Yu Y, Lee TS. Dynamical mechanisms underlying contrast gain control in single neurons. Phys Rev E Stat Nonlin Soft Matter Phys. 2003;68:011901. doi: 10.1103/PhysRevE.68.011901.

- 42. Paninski L, Lau B, Reyes A. Noise-driven adaptation: in vitro and mathematical analysis. Neurocomputing. 2003:877–883.

- 43. Hong S, Agüera y Arcas B, Fairhall AL. Single neuron computation: from dynamical system to feature detector. Neural Comput. 2007. doi: 10.1162/neco.2007.19.12.3133. In press.

- 44. Rudd ME, Brown LG. Noise adaptation in integrate-and-fire neurons. Neural Comput. 1997;9:1047–1069. doi: 10.1162/neco.1997.9.5.1047.

- 45. La Camera G, Rauch A, Thurbon D, Luscher HR, Senn W, Fusi S. Multiple time scales of temporal response in pyramidal and fast spiking cortical neurons. J Neurophysiol. 2006. doi: 10.1152/jn.00453.2006.

- 46. Liu YH, Wang XJ. Spike-frequency adaptation of a generalized leaky integrate-and-fire model neuron. J Comput Neurosci. 2001;10:25–45. doi: 10.1023/a:1008916026143.

- 47. Wang XJ. Calcium coding and adaptive temporal computation in cortical pyramidal neurons. J Neurophysiol. 1998;79:1549–1566. doi: 10.1152/jn.1998.79.3.1549.

- 48. Benda J, Herz AV. A universal model for spike-frequency adaptation. Neural Comput. 2003;15:2523–2564. doi: 10.1162/089976603322385063.

- 49. Benda J, Longtin A, Maler L. Spike-frequency adaptation separates transient communication signals from background oscillations. J Neurosci. 2005;25:2312–2321. doi: 10.1523/JNEUROSCI.4795-04.2005.

- 50. Arsiero M, Luscher HR, Lundstrom BN, Giugliano M. The impact of input fluctuations on the frequency-current relationships of layer 5 pyramidal neurons in the rat medial prefrontal cortex. J Neurosci. 2007;27:3274–3284. doi: 10.1523/JNEUROSCI.4937-06.2007.

- 51. Higgs MH, Slee SJ, Spain WJ. Diversity of gain modulation by noise in neocortical neurons: regulation by the slow afterhyperpolarization conductance. J Neurosci. 2006;26:8787–8799. doi: 10.1523/JNEUROSCI.1792-06.2006.

- 52. Prescott SA, Ratte S, De Koninck Y, Sejnowski TJ. Nonlinear interaction between shunting and adaptation controls a switch between integration and coincidence detection in pyramidal neurons. J Neurosci. 2006;26:9084–9097. doi: 10.1523/JNEUROSCI.1388-06.2006.

- 53. Fairhall AL, Lewen GD, Bialek W, de Ruyter van Steveninck R. Multiple timescales of adaptation in a neural code. In: Leen TK, Dietterich TG, Tresp V, editors. Advances in Neural Information Processing Systems 13. MIT Press; 2001. pp. 124–130.

- 54. Drew PJ, Abbott LF. Models and properties of power-law adaptation in neural systems. J Neurophysiol. 2006;96:826–833. doi: 10.1152/jn.00134.2006.

- 55. Gilboa G, Chen R, Brenner N. History-dependent multiple-time-scale dynamics in a single-neuron model. J Neurosci. 2005;25:6479–6489. doi: 10.1523/JNEUROSCI.0763-05.2005.

- 56. Toib A, Lyakhov V, Marom S. Interaction between duration of activity and time course of recovery from slow inactivation in mammalian brain Na+ channels. J Neurosci. 1998;18:1893–1903. doi: 10.1523/JNEUROSCI.18-05-01893.1998.

- 57. Schwartz O, Simoncelli EP. Natural signal statistics and sensory gain control. Nat Neurosci. 2001;4:819–825. doi: 10.1038/90526.

- 58. Carandini M, Heeger DJ, Movshon JA. Linearity and normalization in simple cells of the macaque primary visual cortex. J Neurosci. 1997;17:8621–8644. doi: 10.1523/JNEUROSCI.17-21-08621.1997.

- 59. Sanchez-Vives MV, Nowak LG, McCormick DA. Cellular mechanisms of long-lasting adaptation in visual cortical neurons in vitro. J Neurosci. 2000;20:4286–4299. doi: 10.1523/JNEUROSCI.20-11-04286.2000.

- 60. Rieke F. Temporal contrast adaptation in salamander bipolar cells. J Neurosci. 2001;21:9445–9454. doi: 10.1523/JNEUROSCI.21-23-09445.2001.

- 61. Kim KJ, Rieke F. Slow Na+ inactivation and variance adaptation in salamander retinal ganglion cells. J Neurosci. 2003;23:1506–1516. doi: 10.1523/JNEUROSCI.23-04-01506.2003.

- 62. Dunn FA, Doan T, Sampath AP, Rieke F. Controlling the gain of rod-mediated signals in the Mammalian retina. J Neurosci. 2006;26:3959–3970. doi: 10.1523/JNEUROSCI.5148-05.2006.

- 63. Katz Y, Heiss JE, Lampl I. Cross-whisker adaptation of neurons in the rat barrel cortex. J Neurosci. 2006;26:13363–13372. doi: 10.1523/JNEUROSCI.4056-06.2006.

- 64. Laughlin S. A simple coding procedure enhances a neuron’s information capacity. Z Naturforsch [C]. 1981;36:910–912.

- 65. Stanley GB. Adaptive spatiotemporal receptive field estimation in the visual pathway. Neural Comput. 2002;14:2925–2946. doi: 10.1162/089976602760805340.