Abstract

The “h index” proposed by Hirsch [Hirsch JE (2005) Proc Natl Acad Sci USA 102:16569–16573] is a good indicator of the impact of a scientist's research and has the advantage of being objective. When evaluating departments, institutions, or laboratories, the importance of the h index can be further enhanced when it is properly calibrated for the size of the group. Particularly acute is the issue of federally funded facilities whose number of actively publishing scientists frequently dwarfs that of academic departments. Recently, Molinari and Molinari [Molinari JF, Molinari A (2008) Scientometrics, in press] developed a methodology that shows that the h index has a universal growth rate for large numbers of papers, allowing for meaningful comparisons between institutions. An additional challenge when comparing large institutions is that fields have distinct internal cultures, with different typical rates of publication and citation; biology is more highly cited than physics, for example. For this reason, the present study has focused on the physical sciences, engineering, and technology and has excluded biomedical research. Comparisons between individual disciplines are reported here to provide a framework. Generally, it was found that the universal growth rate of Molinari and Molinari holds well across the categories considered, testifying to the robustness of both their growth law and our results. The goal here is to set the highest standard of comparison for federal investment in science. Comparisons are made of the nation's preeminent private and public institutions. We find that many among the national science facilities compare favorably in research impact with the nation's leading universities.

Keywords: federally funded facilities, physical sciences, science metrics

The “h index,” pioneered by Hirsch (1), has rapidly become a widely used marker for evaluating the impact of scientific research. The h index of an individual scientist is defined as the largest number h such that h of his/her publications have each been cited at least h times in the scientific literature. Similarly, the h index can be generalized to groups of scientists, departments, and large institutions. Recently, Molinari and Molinari (2) (M&M) observed that, when evaluating sets of more than several hundred publications, the h index vs. the size of the set (N) is characterized by an approximately universal growth rate, referred to as the “master curve.” The underlying reason for this finding is a topic for another paper; it may have to do with the speed of the diffusion of knowledge and an intrinsically nonlinear relationship between the number of publications and the h index. Regardless, the existence of such a universal growth rate allows the h index to be decomposed into the product of an impact index and a factor depending on the size of the set, which in turn allows for a meaningful comparison between institutions of widely varying size. The growth rate is given by $N^{0.4}$, so that the impact index defined by M&M for a given master curve m is $h(m) = h/N^{0.4}$, where h is the h index of the set.
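
A minimal Python sketch of the two definitions above: Hirsch's h index for a list of citation counts, and the M&M impact index $h(m) = h/N^{0.4}$. The function names and the toy citation list are illustrative assumptions, not part of the original methodology; a real set would need several hundred papers before the master-curve scaling applies.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each (Hirsch's definition)."""
    h = 0
    # Walk papers from most to least cited; rank r qualifies if that paper has >= r citations.
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites < rank:
            break
        h = rank
    return h


def impact_index(h, n_papers, exponent=0.4):
    """Size-normalized impact index h(m) = h / N**exponent, with exponent 0.4 as in M&M."""
    return h / n_papers ** exponent


# Toy example (hypothetical citation counts): three papers have at least 3 citations each.
print(h_index([25, 8, 5, 3, 3, 1, 0]))  # → 3

# Astronomy row of Table 1: N = 7,459 papers, h index = 182.
print(round(impact_index(182, 7459), 2))  # → 5.14
```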

Impact Index as a Function of Scientific Discipline

To demonstrate this universal growth rate, and to better understand the differences between the scientific disciplines, the total number of papers and corresponding h indices have been assembled here by using the Thomson Institute for Scientific Information “Web of Knowledge” (http://isiwebofknowledge.com), according to the disciplines that are encompassed by this study. Small fields, like astronomy, are included, as are large fields like physics, mathematics, and chemistry.

Any search involving the Web of Knowledge has intrinsic limitations because of the nature of the search engine. The data search discussed here was done based on affiliation with astronomy, physics, chemistry, engineering, mechanical engineering, and mathematics departments in the United States and contains some ambiguities. For example, some universities include astronomy within their physics department. The astronomy-related publications from such a physics department would not be included in a search for publications from departments of astronomy. Although the higher citation rate of astronomy would increase the numbers for such a physics department, there are many fewer astronomers than physicists. Ultimately, given the universality of the growth rate, the astronomy publications omitted because of their affiliation with a physics department have minimal influence on the numbers for the field of astronomy: they lower its raw totals somewhat but leave its size-normalized impact essentially unchanged.

Once the publications were gathered in the Web of Knowledge, the set was scrutinized for biomedical and other publications unrelated to our target disciplines. Fields such as health science, biology, biophysics, medicine, the human body, diseases, social science, and agriculture were removed. The disciplines represented in this study remained in the sample. Note that the topic of the specific department dominates the total number of papers, so leaving the other physical sciences and engineering/technology disciplines in the set of publications has a minor effect on the overall numbers. For example, for the mathematics discipline, the search found 22,261 publications under the subject category “mathematics,” 11,950 under applied mathematics, and an additional 3,066 under statistics and physics-related mathematics. The first field that was not directly mathematical was multidisciplinary physics, with 1,275 publications. See supporting information (SI) Text and SI Table 7 for further details of the methodology used.

One major way in which this study differs from that of M&M (2) is that publications were not removed from the study set unless they were specifically identified as irrelevant to the target disciplines. This method results in a larger range of science topics, including the main fields of physics, astronomy, mathematics, chemistry, and engineering and the subfields of atmospheric sciences, computer science, crystallography, environmental sciences, geosciences, geochemistry, geophysics, geography, limnology, material science, meteorology, mineralogy, and oceanography. Also included are the applied sciences and technology development fields of automation and control systems, acoustics, imaging science, mechanics, metallurgy, optics, photographic technology, remote sensing, robotics, and telecommunications. The inclusion of the subfields, and especially the technology development fields, allows a better comparison between academic institutions and the laboratories of the Department of Energy (DOE), National Aeronautics and Space Administration (NASA), and National Science Foundation (NSF), where research and development in these areas is a significant component of the overall output. The number of publications included in this study is thus approximately three times the number included in the M&M study.

Once the set was scrutinized to remove biomedical publications and other unrelated topics, the growth rate was calculated following the methodology of M&M (2) by first producing the h index for the publications in the year 1980, then for the years 1980 plus 1982, and so on, until the h index for the accumulated publications from 1980 to 1998 is calculated. Publications after 1998 are not included because insufficient time has passed for them to be fully cited. The lack of full citation is manifested by the time-dependence of the impact indices and can be seen in figure 4 of M&M.
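
The cumulative even-year procedure can be sketched in Python as follows. Here `citations_by_year` is a hypothetical mapping from publication year to the citation counts of that year's (already filtered) papers; each step pools one more even year and records the pair (N, h), which is one point on the master curve.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    h = 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites < rank:
            break
        h = rank
    return h


def master_curve(citations_by_year, start=1980, end=1998, step=2):
    """Cumulative (N, h) points: 1980, then 1980 + 1982, ..., up to `end`."""
    pooled = []   # citation counts of every paper accumulated so far
    points = []
    for year in range(start, end + 1, step):
        pooled.extend(citations_by_year.get(year, []))  # years with no data add nothing
        points.append((len(pooled), h_index(pooled)))
    return points


# Hypothetical toy data for three even years.
toy = {1980: [10, 4, 2], 1982: [7, 7, 1], 1984: [3]}
print(master_curve(toy, end=1984))  # → [(3, 2), (6, 4), (7, 4)]
```

With the default arguments the loop visits the 10 even years from 1980 to 1998, matching the span used in this study.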

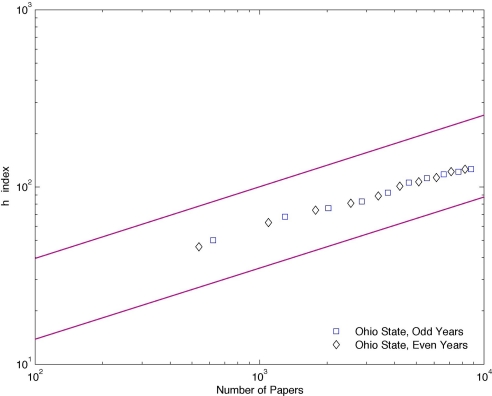

Fig. 4.

Master curves for the even and odd years for Ohio State University. Note that although the two data sets have no overlapping publications, the two master curves track very closely.

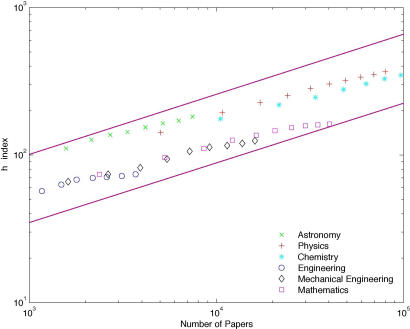

When the h index and total number of papers are plotted on a log–log plot, the universal growth rate is manifest, following a curve of slope ≈0.4, as seen in Fig. 1. The scientific fields included in this study tend to fall into two groupings: astronomy, physics, and chemistry have high characteristic impact indices (5.14, 4.01, and 3.52, respectively), whereas engineering, mechanical engineering, and mathematics have lower characteristic impact indices (2.79, 2.60, and 2.33, respectively; see also Table 1). In Fig. 1, two template lines with a slope of 0.4 are drawn bracketing the master curves of these six disciplines, and these lines are repeated on subsequent figures as a guide. These master curves represent a template for academic impact in the United States.
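
To check the ≈0.4 slope numerically, one can fit a straight line to log10(h) versus log10(N). The sketch below uses ordinary least squares, which is our assumption (the text does not say how the slope was estimated); when the fitted slope is close to 0.4, the fitted prefactor 10^intercept plays the role of the impact index.

```python
import math


def loglog_fit(points):
    """Least-squares fit of log10(h) = slope * log10(N) + intercept.

    Returns (slope, prefactor) with prefactor = 10**intercept, so h ≈ prefactor * N**slope.
    Requires at least two points with distinct N.
    """
    xs = [math.log10(n) for n, _ in points]
    ys = [math.log10(h) for _, h in points]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    prefactor = 10 ** (mean_y - slope * mean_x)
    return slope, prefactor


# Synthetic points lying exactly on h = 4 * N**0.4 recover slope 0.4 and prefactor 4.
synthetic = [(n, 4 * n ** 0.4) for n in (1_000, 3_000, 10_000, 30_000, 100_000)]
slope, prefactor = loglog_fit(synthetic)
print(round(slope, 3), round(prefactor, 3))  # → 0.4 4.0
```

Fitting both parameters freely is a different choice from M&M's fixed exponent; it is useful mainly for checking whether a given master curve is close to slope 0.4 (the Fig. 1 caption notes that engineering and mathematics may be closer to 0.35).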

Fig. 1.

Master curve for science disciplines. The h index is calculated for nonbiomedical publications for 10 years over a 19-year span from 1980 to 1998. The data are cumulative, including increments of even years of data, starting with 1980, then 1980 plus 1982, and so on, up to and including data for all even years from 1980 to 1998. Overlaid are encompassing lines with slope 0.4. Although the universal law with exponent ≈0.4 works well for physics, chemistry, and astronomy, it works less well for engineering and mathematics, where a somewhat lower exponent of the order of 0.35 might be more appropriate. Note that all figures in this article are shown with two decades on the x axis, Number of Papers, for ease of comparison. Consequently, some scientific fields in this plot show <10 data points because the fields have either <1,000 papers or >100,000 papers in the given years.

Table 1.

Impact index by scientific discipline

| Discipline | N | h index | h(m) |
|---|---|---|---|
| Astronomy | 7,459 | 182 | 5.14 |
| Physics | 80,081 | 367 | 4.01 |
| Chemistry | 144,183 | 408 | 3.52 |
| Engineering | 3,706 | 74 | 2.79 |
| Mechanical engineering | 16,088 | 125 | 2.60 |
| Mathematics | 40,253 | 162 | 2.33 |
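
As a consistency check, the h(m) column of Table 1 can be recomputed from the N and h index columns with $h(m) = h/N^{0.4}$. By our arithmetic the recomputed values agree with the tabulated ones to within a few hundredths, the largest gap (about 0.025) being for Engineering; the sketch does not try to explain that gap, which may come from rounding or from the exact N used.

```python
# (N, h index, reported h(m)) transcribed from Table 1.
TABLE_1 = {
    "Astronomy": (7_459, 182, 5.14),
    "Physics": (80_081, 367, 4.01),
    "Chemistry": (144_183, 408, 3.52),
    "Engineering": (3_706, 74, 2.79),
    "Mechanical engineering": (16_088, 125, 2.60),
    "Mathematics": (40_253, 162, 2.33),
}


def recompute_impact(table, exponent=0.4):
    """Return {row: (computed h(m), reported h(m))} using h(m) = h / N**exponent."""
    return {name: (h / n ** exponent, reported) for name, (n, h, reported) in table.items()}


for name, (computed, reported) in recompute_impact(TABLE_1).items():
    print(f"{name:<24} computed {computed:.2f}  reported {reported:.2f}")
```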

Top-Ranked American Academic Institutions

To further establish a context for an evaluation of federally funded science centers, the impact indices for the top-ranked American academic institutions from the M&M study (2) are given here. These indices present a different challenge: the publications of many of the top-ranked institutions are dominated by biomedical research, which dwarfs the other scientific disciplines in the number of published papers, journals, annual reports, and refereed proceedings. Here we took a “panning for gold” approach. Publications in ≈520 highly used biomedical, medical, and health-related journals were systematically excluded by using an advanced search in the Web of Knowledge. The same list of journals was used in every search. The resulting set of papers was then sorted by hand into subject categories, and those involving biomedical, medical, health, and other non-target topics were removed. The master curve was then created using the data for 1980, then 1980 plus 1982, adding one even year of publications at a time until all 10 even years from 1980 to 1998 were included. Details of the search, including the journal titles excluded, example subject categories excluded by hand, and the syntax used, are given in SI Text and SI Table 7. This approach was taken to separate the many biomedical publications from the few desired science and technology publications while maintaining a broad spectrum of disciplines within the set.
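
The two-stage “panning for gold” filter can be sketched as below. The record layout (a `journal` string plus a list of `categories`), the rule that a paper is dropped if any of its categories is excluded, and every journal and category name in the example are hypothetical; the actual ≈520-journal list is in SI Table 7, and the second stage was done by hand in the study.

```python
def pan_for_gold(records, excluded_journals, excluded_categories):
    """Keep records that survive both the journal-level and the category-level exclusion."""
    kept = []
    for rec in records:
        # Stage 1: drop papers published in the excluded biomedical/medical/health journals.
        if rec["journal"] in excluded_journals:
            continue
        # Stage 2: drop papers tagged with any non-target subject category (assumed rule).
        if excluded_categories.intersection(rec["categories"]):
            continue
        kept.append(rec)
    return kept


# Hypothetical records and exclusion lists, for illustration only.
records = [
    {"journal": "Journal X", "categories": ["physics"]},
    {"journal": "Biomedical Journal Y", "categories": ["physics", "medicine"]},
    {"journal": "Journal Z", "categories": ["chemistry", "biophysics"]},
    {"journal": "Journal Z", "categories": ["optics"]},
]
kept = pan_for_gold(records, {"Biomedical Journal Y"}, {"medicine", "biophysics", "biology"})
print(len(kept))  # → 2
```

Running the cheap journal exclusion first shrinks the set before the slower category review, which mirrors the order described in the text.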

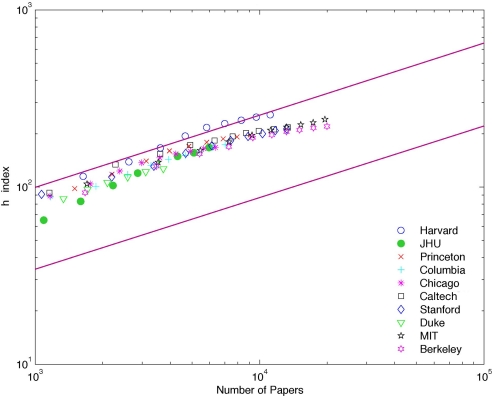

This method was used for the top-ranked American institutions and for a selection of major public universities, to obtain impact indices characteristic of a broad sampling of preeminent public and private U.S. universities. The master curves for these institutions are shown in Figs. 2 and 3, with the template discipline master curves from Fig. 1 included for reference. Table 2 shows the impact indices for some of the top U.S. universities. Compared with M&M's list of a similar nature (2), Harvard University and Princeton University are still in the top third, Stanford University, University of Chicago, and Columbia University are still in the middle, and Duke University and the University of California (UC) Berkeley are in the bottom third. The Johns Hopkins University (JHU) has gone from eighth to second, California Institute of Technology (Caltech) from second to sixth, and Massachusetts Institute of Technology (MIT) from fourth to ninth.

Fig. 2.

Master curve for a selection of top-ranked universities. The h index is calculated for nonbiomedical publications for the year 1980, 1980 plus 1982, and incrementing by even years through 1990. Template lines are carried over from Fig. 1. See SI Text and SI Table 7 for detailed methodology.

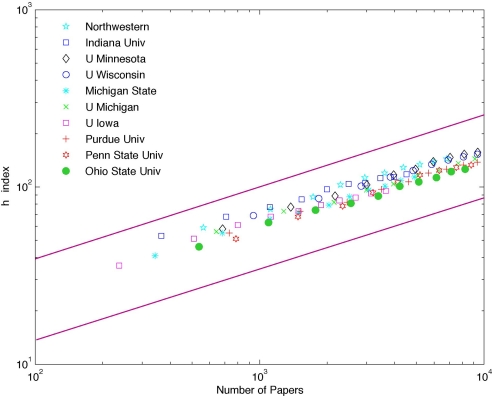

Fig. 3.

Master curves for selected public universities. The most remarkable aspect of the impact index for these universities is the narrow dispersion of the curves and the impact indices, as shown in Table 3.

Table 2.

Impact index for selected top-ranked U.S. universities

| University | N | h index | h(m) |
|---|---|---|---|
| Harvard | 11,165 | 256 | 6.15 |
| Johns Hopkins | 5,959 | 167 | 5.16 |
| Princeton | 9,084 | 197 | 5.14 |
| Columbia | 7,028 | 174 | 5.03 |
| Chicago | 6,354 | 167 | 5.03 |
| Caltech | 13,381 | 217 | 4.85 |
| Stanford | 13,215 | 213 | 4.79 |
| Duke | 3,724 | 123 | 4.74 |
| MIT | 19,542 | 241 | 4.63 |
| UC Berkeley | 19,963 | 220 | 4.19 |

Table 3.

Impact index for selected U.S. public universities

| University | N | h index | h(m) |
|---|---|---|---|
| Northwestern, Evanston, IL | 6,801 | 144 | 4.22 |
| Indiana, Bloomington, IN | 4,518 | 118 | 4.07 |
| Minnesota, Minneapolis/St. Paul, MN | 9,370 | 158 | 4.05 |
| Wisconsin, Madison, WI | 10,647 | 156 | 3.81 |
| Michigan State, East Lansing, MI | 4,894 | 114 | 3.81 |
| Michigan, Ann Arbor, MI | 10,657 | 150 | 3.67 |
| Iowa, Iowa City, IA | 3,604 | 95 | 3.59 |
| Purdue, West Lafayette, IN | 10,582 | 141 | 3.46 |
| Pennsylvania State, University Park, PA | 10,170 | 138 | 3.44 |
| Ohio State, Columbus, OH | 8,230 | 126 | 3.42 |

Clearly, evaluations of impact depend on the disciplines included in the study. This study covers a broader range of sciences and technologies, with three times as many publications included as in the M&M study (2). Both of these factors undoubtedly influence the final numbers.

As a demonstration of the reproducibility of the method, master curves were produced separately for the even years and the odd years for Ohio State University (Fig. 4). This plot illustrates how distinctive each institution's master curve is. Although the even-year and odd-year data are completely independent data sets, comprising refereed publications with no overlap, the two curves are identifiable as coming from the same institution. When compared with the larger plot of 10 public universities, the data from no other university are as similar to the odd-year data as the even-year data from Ohio State University.
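
The even/odd test can be sketched by splitting one institution's publications by the parity of the publication year, which guarantees two disjoint sets, and building a cumulative curve for each half. The toy data below are hypothetical; the sketch only shows how the two independent curves are produced, while judging how closely they agree is done in the text from the plot.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    h = 0
    for rank, cites in enumerate(sorted(citations, reverse=True), start=1):
        if cites < rank:
            break
        h = rank
    return h


def cumulative_curve(citations_by_year):
    """(N, h) after pooling each successive year present in the mapping."""
    pooled, points = [], []
    for year in sorted(citations_by_year):
        pooled.extend(citations_by_year[year])
        points.append((len(pooled), h_index(pooled)))
    return points


def even_odd_curves(citations_by_year):
    """Split by publication-year parity (no shared papers) and build one curve per half."""
    even = {y: c for y, c in citations_by_year.items() if y % 2 == 0}
    odd = {y: c for y, c in citations_by_year.items() if y % 2 == 1}
    return cumulative_curve(even), cumulative_curve(odd)


# Hypothetical per-year citation counts for a single institution.
toy = {1980: [5, 3, 1], 1981: [4, 4, 2], 1982: [6, 2], 1983: [3, 3]}
even_curve, odd_curve = even_odd_curves(toy)
print(even_curve, odd_curve)  # → [(3, 2), (5, 3)] [(3, 2), (5, 3)]
```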

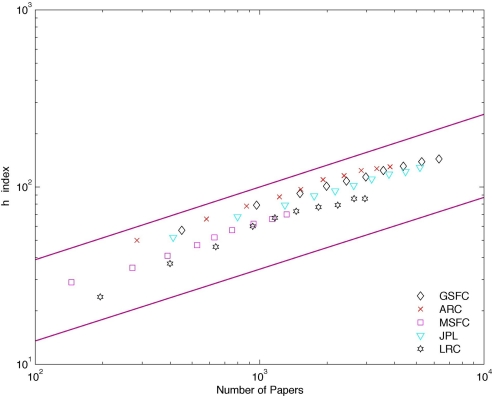

NASA Science Centers

Data were collected for the NASA science centers Goddard Space Flight Center (GSFC), Ames Research Center (ARC), Marshall Space Flight Center (MSFC), Jet Propulsion Laboratory (JPL, a Federally Funded Research and Development Center), and Langley Research Center (LRC). Each of these NASA centers has a sufficient output of publications to fall onto the universal growth curve of M&M (2).

The different NASA centers have traditionally focused on different areas of interest. Of greatest influence on an evaluation of their science impact, however, is the percentage of their publications that concern scientific topics, as opposed to technology and engineering, especially given the gap reported above between the impact indices of astronomy, physics, and chemistry and those of engineering. By using the Web of Knowledge, the publications from these centers have been sorted into two groups according to their subject category. The first category is broadly termed “science” and includes astronomy, meteorology, geosciences, physics, planetary science, earth science, oceanography, and chemistry. The second category is broadly termed “engineering” and includes topics related to the research and development of new technologies, engineering, remote sensing, optics, computer science, telecommunications, robotics, and applied sciences. Table 4 lists the NASA centers and the percentage of their publications classified as either science or engineering according to this definition, along with their impact indices.
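
The science/engineering split can be sketched as a lookup of each publication's subject category in two sets built from the lists above. The lowercase category labels are simplified stand-ins for the actual Web of Knowledge subject categories, and giving each paper a single category (with unmatched papers left out of the percentages) is an assumption.

```python
# Simplified category labels taken from the two lists in the text (assumed spellings).
SCIENCE = {"astronomy", "meteorology", "geosciences", "physics",
           "planetary science", "earth science", "oceanography", "chemistry"}
ENGINEERING = {"engineering", "remote sensing", "optics", "computer science",
               "telecommunications", "robotics", "applied sciences"}


def science_engineering_percent(categories):
    """Return (science %, engineering %) over papers that fall in either group."""
    n_science = sum(1 for c in categories if c in SCIENCE)
    n_engineering = sum(1 for c in categories if c in ENGINEERING)
    total = n_science + n_engineering
    if total == 0:
        raise ValueError("no publications matched either group")
    return 100 * n_science / total, 100 * n_engineering / total


# Hypothetical center with one category per paper; "geography" matches neither group.
papers = ["physics", "astronomy", "optics", "physics", "geography"]
print(science_engineering_percent(papers))  # → (75.0, 25.0)
```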

Table 4.

Impact index for NASA centers

| Center | Science, % | Engineering, % | N | h index | h(m) |
|---|---|---|---|---|---|
| GSFC | 78 | 22 | 6,300 | 144 | 4.35 |
| ARC | 70 | 30 | 2,803 | 123 | 5.14 |
| MSFC | 64 | 36 | 1,320 | 70 | 3.95 |
| JPL | 55 | 45 | 5,183 | 128 | 4.18 |
| LRC | 28 | 72 | 2,948 | 86 | 3.52 |

The impact index for the NASA centers approximately reflects the percentage of science vs. engineering publications and falls within the template master curves for the six science disciplines (Fig. 5). NASA centers compare very favorably with the selected public universities, despite the substantial engineering/technology component of the NASA centers.
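
One way to quantify “approximately reflects” is the Pearson correlation between the science percentage and h(m) in Table 4. The sketch below computes it directly from the table; by our arithmetic r is roughly 0.7, but with only five centers, and with ARC rather than GSFC having the highest index, this should be read as a rough indication rather than a fitted relationship.

```python
import math

# (science %, h(m)) for GSFC, ARC, MSFC, JPL, LRC, transcribed from Table 4.
TABLE_4 = [(78, 4.35), (70, 5.14), (64, 3.95), (55, 4.18), (28, 3.52)]


def pearson_r(pairs):
    """Pearson correlation coefficient of a list of (x, y) pairs."""
    xs, ys = zip(*pairs)
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


print(round(pearson_r(TABLE_4), 2))  # → 0.72
```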

Fig. 5.

Master curves for five NASA science centers.

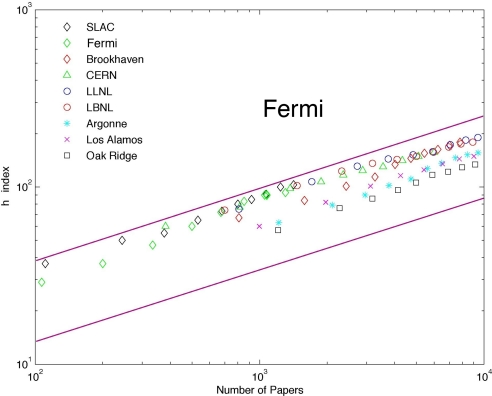

DOE National Laboratories

The master curves for the DOE science centers Stanford Linear Accelerator Center (SLAC), Fermi National Accelerator Laboratory, Brookhaven National Laboratory, Lawrence Livermore National Laboratory (LLNL), Lawrence Berkeley National Laboratory (LBNL), Argonne National Laboratory, Los Alamos National Laboratory, and Oak Ridge National Laboratory are shown in Fig. 6, together with the European Center for Nuclear Research (CERN) as a comparison and including the template lines from Fig. 1. The data fall into two categories, with SLAC, Fermi, Brookhaven, LLNL, and LBNL in the higher range and Argonne, Los Alamos, and Oak Ridge in the lower range (Table 5). Naively, because these data come from a highly international community in which papers often have tens, if not hundreds, of authors from many institutions, one would expect the citation rates to be more tightly clustered than they are.

Fig. 6.

Master curves for DOE national laboratories plus CERN.

Table 5.

Impact index for DOE national laboratories plus CERN

| Facility | N | h index | h(m) |

|---|---|---|---|

| SLAC | 1,418 | 103 | 5.65 |
| Fermi | 1,304 | 93 | 5.28 |
| Brookhaven | 7,809 | 179 | 4.96 |
| CERN | 5,999 | 157 | 4.84 |
| LLNL | 10,605 | 196 | 4.81 |
| LBNL | 8,900 | 179 | 4.71 |
| Argonne | 9,413 | 156 | 4.01 |
| Los Alamos | 11,776 | 163 | 3.83 |
| Oak Ridge | 10,266 | 138 | 3.43 |

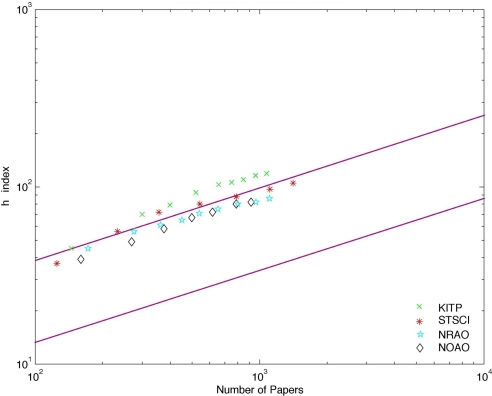

NSF Facilities and Astronomical Observatories

NSF facilities in physics, mathematics, engineering, mechanical engineering, and geosciences are often distributed among a consortium of members, as opposed to being long-term facilities that are identified in the affiliation line of a publication. Because there is no way to associate the publications with a particular center, publication and citation statistics cannot be collected with the methods used here, and therefore the majority of NSF facilities could not be analyzed.

However, there are some exceptions. The National Radio Astronomy Observatory (NRAO), National Optical Astronomy Observatory (NOAO), and Kavli Institute for Theoretical Physics at UC Santa Barbara (KITP) all have fixed addresses and have been scientifically active for long periods of time with a sufficiently high publication rate to lie on the universal growth curve. Master curves for KITP, NOAO, and NRAO are presented in Fig. 7; the Space Telescope Science Institute (STScI) is also included for comparison. The same methodology is used for these curves as for the other master curves, employing data from even years beginning with 1980 and ending with 1998 for KITP and NRAO.

On the basis of the master curves shown in Fig. 7, the NSF observatories, KITP, and STScI all have high impact indices. Because these institutes represent single disciplines, it is not appropriate to compare them with the institutions that encompass much broader scientific disciplines. Data points for <100 papers are not within the limits of the figure.

Fig. 7.

Master curves for certain NSF science facilities plus the STScI.

A comparison can now be made among a larger number of similar institutions if the time frame is narrowed to the period between 1990 and 1998, by which time several additional NSF institutes were active. We now compare the h indices for the institutions in Fig. 7, plus the National Solar Observatory (NSO), the National High Magnetic Field Laboratory (NHMFL), and the UC Berkeley Astronomy Department, which has traditionally been one of the highest ranked scientific groups and so serves as a gold standard. In Table 6, the h index is calculated for the 9 years from 1990 to 1998.

Table 6.

Impact index for certain NSF facilities plus STScI

| Institution | N | h index | h(m) |
|---|---|---|---|
| KITP† | 972 | 103 | 6.56 |
| Astronomy Department, UC Berkeley | 1,241 | 109 | 6.30 |
| NOAO | 1,133 | 90 | 5.40 |
| STScI | 2,161 | 116 | 5.38 |
| NSO | 333 | 43 | 4.21 |
| NHMFL | 940 | 62 | 4.01 |
| NRAO | 2,122 | 80 | 3.74 |

†KITP is a theoretical physics institute that regularly organizes conferences and long-term workshops in physical sciences. Many of the authors of papers with KITP affiliation are visitors, with other home institutions.

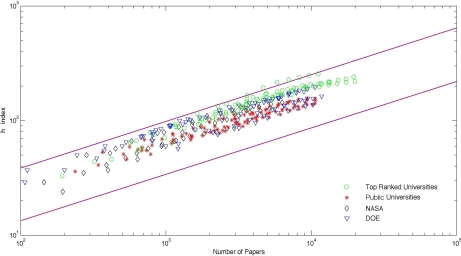

Comparisons and Conclusions

A number of federally funded science centers and laboratories have been compared with the highest ranking U.S. public and private academic institutions. An overall comparison is shown in Fig. 8, with data from each type of institution displayed with a different icon. The top-ranked academic institutions in the United States have the highest impact index among all of the institutions evaluated here, followed by leading DOE laboratories and NASA centers, which generally rank higher than the selected public universities.

Fig. 8.

Master curves for universities, shown together with curves for NASA and DOE.

Supplementary Material

Acknowledgments

I thank the anonymous reviewers for helpful comments and Drs. S. Beckwith and J. F. Molinari for reading the manuscript and for their subsequent helpful comments.

Footnotes

The author declares no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0704416104/DC1.

References

1. Hirsch JE (2005) Proc Natl Acad Sci USA 102:16569–16573. doi:10.1073/pnas.0507655102.
2. Molinari JF, Molinari A (2008) Scientometrics, in press.
