Abstract

A state-of-the-art social robot was immersed in a classroom of toddlers for >5 months. The quality of the interaction between the children and the robot improved steadily for 27 sessions, deteriorated quickly for 15 sessions when the robot was reprogrammed to behave in a predictable manner, and improved again in the last three sessions when the robot once more displayed its full behavioral repertoire. Initially, the children treated the robot very differently from the way they treated each other. By the last sessions, 5 months later, they treated the robot as a peer rather than as a toy. Results indicate that current robot technology is surprisingly close to achieving autonomous bonding and socialization with human toddlers for sustained periods of time and that it could have great potential in educational settings, assisting teachers and enriching the classroom environment.

Keywords: human–robot interaction, social development, social robotics

The development of robots that interact socially with people and assist them in everyday life has been an elusive goal of modern science. Recent years have seen impressive advances in the mechanical aspects of this problem, yet progress on social interaction has been slower (1–15). Research suggests that low-level information, such as animacy, contingency, and visual appearance, can trigger powerful social behaviors toward robots during the first few minutes of interaction (16, 17). However, developing robots that bond and socialize with people for sustained periods of time has proven difficult (6). There has been some progress in this area, but it typically relies on the robot telling stories that change over time (7, 11). Because storytelling was critical to sustaining interest in the robot, it remains unclear to what extent the robots themselves added value to the stories. In practice, commercially available robots seldom cross the “10-h barrier” (i.e., given the opportunity, individual users typically spend less than a combined total of 10 h with these robots before losing interest).¶ This observation is in sharp contrast, for example, to the long-term interactions and bonding that commonly develop between humans and their pets.

Here, we present a study in which a state-of-the-art humanoid robot, named QRIO, was immersed in a classroom of 18- to 24-month-old toddlers for 45 sessions spanning 5 months (March 2005 to July 2005). Children of this age were chosen because they have no preconceived notions of robots and because working with them helped us focus on primal forms of social interaction that are less dependent on speech. QRIO is a 23-inch-tall humanoid robot prototype built in Japan as the result of a long and costly research and development effort (18, 19). The robot displays an impressive array of mechanical and computational skills, yet its ability to interact with humans for prolonged periods of time had not been tested. In this study, the robot was assisted by a human operator (F.T.). On average, the operator sent the robot 1 byte of information every 141 s, specifying aspects such as a recommended walking direction, head direction, and six behavioral categories (dance, sit down, stand up, lie down, hand gesture, and giggle). The robot could overrule the operator's advice if it conflicted with the robot's own priorities, although this seldom happened in practice.

Results and Discussion

The study was conducted in Room 1 of the Early Childhood Education Center (ECEC) of the University of California, San Diego (UCSD). It was part of the RUBI Project, whose goal is to develop and evaluate interactive computer architectures to assist teachers in early education (20, 21). There were a total of 45 field sessions, lasting an average of 50 min each. Each session ended when the robot sensed low battery power, at which point it lay down and assumed a sleeping posture. The study had three phases. During phase I, which lasted 27 sessions, the robot interacted with the children by using its full behavioral repertoire. During phase II, which lasted 15 sessions, the robot was programmed to produce interesting but highly predictable behaviors. During phase III, which lasted three sessions, the robot was reprogrammed to exhibit its full repertoire again. All of the field sessions were recorded with two video cameras. Two years were spent studying the videos and developing quantitative methods to analyze them. Here, we present results from four such analyses.
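The session figures can be set against the 10-h barrier with simple arithmetic. The sketch below is a back-of-the-envelope calculation that assumes every session lasted the reported 50-min average (in practice durations varied; the phase II dance sessions lasted 30 min). It measures the cumulative time the robot spent in the classroom, not the time any individual child spent with it. It also converts the operator's reported rate of 1 byte every 141 s into commands per session and bits per second. All names in the code are illustrative.

```python
# Back-of-the-envelope arithmetic from figures stated in the text.
# Assumption: every session lasts the reported 50-min average; real
# durations varied (e.g., the 30-min dance sessions of phase II).

N_SESSIONS = 45            # total field sessions
MEAN_SESSION_MIN = 50.0    # average session length, minutes
BYTE_INTERVAL_S = 141.0    # mean interval between operator commands, seconds
BARRIER_H = 10.0           # the "10-h barrier"


def total_hours(n_sessions: int, mean_min: float) -> float:
    """Cumulative robot presence in the classroom, in hours (not per child)."""
    return n_sessions * mean_min / 60.0


def bytes_per_session(mean_min: float, interval_s: float) -> float:
    """Expected number of 1-byte operator commands in one session."""
    return mean_min * 60.0 / interval_s


def operator_bits_per_second(interval_s: float) -> float:
    """Average operator-to-robot bandwidth, counting 8 bits per command."""
    return 8.0 / interval_s


if __name__ == "__main__":
    hours = total_hours(N_SESSIONS, MEAN_SESSION_MIN)
    print(f"classroom exposure: {hours:.1f} h "
          f"({hours / BARRIER_H:.2f}x the 10-h barrier)")
    print(f"operator commands per session: "
          f"{bytes_per_session(MEAN_SESSION_MIN, BYTE_INTERVAL_S):.1f}")
    print(f"operator bandwidth: "
          f"{operator_bits_per_second(BYTE_INTERVAL_S):.3f} bit/s")
```

Under these assumptions the robot spent roughly 37.5 h in the classroom, several times the 10-h barrier, while the operator contributed about 21 one-byte commands per session.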

Development of the Quality of Interaction.

One of our goals was to establish whether social robots can maintain the interest of children beyond the 10-h barrier. To this end, we developed and evaluated a wide variety of quantitative methods. Continuous audience response methods, originally developed for marketing research (22), proved particularly useful. Fifteen sessions were randomly selected from the 45 field sessions and independently coded frame-by-frame by five UCSD undergraduate students who were naive to the purpose of the study. Coders operated a dial in real time while viewing the videotaped sessions; the position of the dial indicated the observer's impression of the quality of the interaction seen in the video (Fig. 1A). The order of presentation of the 15 sessions was randomized independently for each coder.

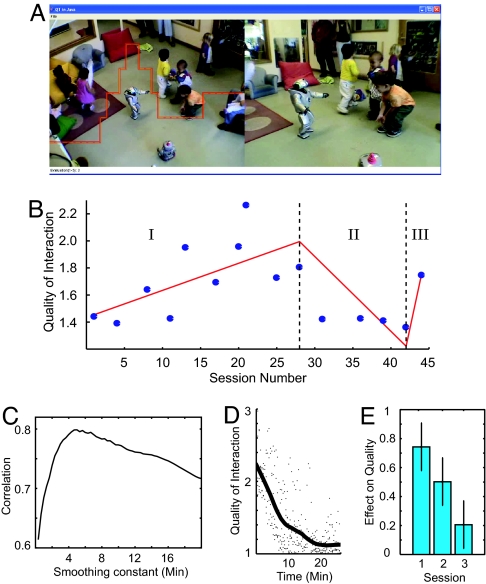

Fig. 1.

Analyses of the quality of interaction. (A) Coders operated a dial in real time to indicate their perception of the quality of the interaction between children and QRIO observed in the video. (B) Blue dots plot the average quality-of-interaction score on a random sample of 15 days. The red line represents a piecewise linear regression fit with linked segments. The vertical dashed lines show the separations between phases. (C) Inter-observer reliability between four coders as a function of a low-pass-filter smoothing constant. (D and E) Main effects on the quality-of-interaction score as a function of time within a session (D) and across sessions (E).

The evaluation signals produced by the five human coders were low-pass-filtered. Fig. 1C shows the inter-observer reliability, averaged across all possible pairs of coders, as a function of the bandwidth of the low-pass filter. The inter-observer reliability follows an inverted U-curve: As high-frequency noise components are filtered out, the inter-observer reliability increases. However, as the bandwidth of the filter narrows further, the filter removes more than just the noise, and the inter-observer correlation deteriorates. Optimal inter-observer reliability of 0.80 (Spearman correlation) was obtained with a bandwidth of 5 min. This finding suggests that a time scale of ≈5 min is particularly important when evaluating the quality of social interaction.
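The reliability analysis above can be sketched as follows. The paper does not specify the filter, so this version assumes a causal first-order exponential smoother whose time constant (in samples) stands in for the bandwidth. Spearman's rho is computed as the Pearson correlation of tie-averaged ranks and then averaged over all coder pairs. The coder signals in the usage example are synthetic: a hypothetical shared slow component plus independent noise for each coder.

```python
import itertools

import numpy as np


def lowpass(x, tau):
    """Causal first-order exponential smoother (assumed filter type).

    tau is the time constant in samples; tau <= 0 returns the signal unchanged.
    """
    x = np.asarray(x, dtype=float)
    if tau <= 0:
        return x.copy()
    alpha = 1.0 - np.exp(-1.0 / tau)
    y = np.empty_like(x)
    acc = x[0]
    for i, v in enumerate(x):
        acc += alpha * (v - acc)
        y[i] = acc
    return y


def average_ranks(x):
    """Ranks 1..n, with tied values sharing their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    xs = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and xs[j + 1] == xs[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman's rho as the Pearson correlation of the rank vectors."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])


def mean_pairwise_reliability(signals, tau):
    """Smooth every coder's dial signal, then average rho over all coder pairs."""
    smoothed = [lowpass(s, tau) for s in signals]
    return float(np.mean([spearman(a, b)
                          for a, b in itertools.combinations(smoothed, 2)]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(1800)                    # 30 min at an assumed 1 sample/s
    shared = np.sin(2 * np.pi * t / 600)   # hypothetical "true" interaction quality
    coders = [shared + rng.normal(0.0, 1.0, t.size) for _ in range(5)]
    for tau in (0, 10, 30, 100, 300):
        print(tau, round(mean_pairwise_reliability(coders, tau), 3))
```

Sweeping `tau` traces out a reliability curve analogous to Fig. 1C. Whether it bends back down at long time constants depends on how much genuine fast structure the coders share, so synthetic data need not reproduce the inverted U.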

Fig. 1B displays the quality of interaction for each session, averaged over coders. During phase I, which spanned 27 sessions over a period of 45 days, the quality of the interaction between toddlers and robot increased steadily. During the first 10 sessions, it became apparent that although the robot's behavioral repertoire was impressive, the robot did not appear responsive to the children. Initially, the human controller tried to establish some contingencies between the robot's behavior and the children (e.g., by requesting QRIO to wave its hand in front of a child). However, social events moved too quickly (e.g., by the time the robot waved its hand, the child was gone). By session 11, a simple reflex-like contingency was introduced so that QRIO giggled immediately after being touched on the head. This contingency made it clear to the children that the robot was responsive to them, and it served to initiate interaction episodes throughout the entire study [see supporting information (SI) Movie 1].

During phase II, the quality of interaction declined precipitously. The first six sessions of this phase were designed to evaluate two different robot-dancing algorithms: (i) a choreographed play-back dance that had been developed at great cost and (ii) an algorithm in which QRIO moved in response to the optic flow sensed by its cameras, resulting in behaviors that resemble spontaneous dancing (23). During these sessions, which lasted 30 min each, the robot played the same song 20 times consecutively with a 10-s mute interval before each replay. In three randomly selected sessions, the robot was controlled by the choreographed dance; in the other three, it was controlled by the optic-flow-based dancing algorithm. Fig. 1 D and E show the change in the quality of interaction as a function of time within sessions and across sessions, respectively. The dots correspond to individual sessions, and the curve shows the score averaged across the five judges and the six sessions. The graph shows a consistent decay in the quality of interaction within sessions (F(1,500, 7,496) = 7.4768; P < 0.05). The curve is approximately exponential with a time constant of 3.5 min (i.e., it takes ≈3.5 min for the score to decay to 1/e, or ≈37%, of its initial value). Significant decays were also observed across sessions (F(3, 1,871) = 358.07; P < 0.05). The type of dancing algorithm had no significant effect (F(1, 7,496) = 2.961; P > 0.05), suggesting that a simple interactive dancing algorithm can perform as well as a very expensive choreographed dance. For the last nine sessions of phase II, the human controller assisted the robot with the goal of learning how to improve its dancing algorithm (e.g., by controlling the timing of the start and end of the robot's dance). These efforts were not successful. Only after the robot was reprogrammed to exhibit its entire behavioral repertoire in phase III did the quality of interaction return to the levels seen in phase I (Fig. 1B).
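The time-constant statement can be made concrete. The sketch below assumes a simple exponential model in which the score relaxes from an initial value `s0` toward an asymptote `s_inf`; the paper reports only that the curve is approximately exponential, so the asymptote is an assumption. At t = τ, the decaying part has fallen to 1/e ≈ 0.368 of its initial size. The sketch also estimates τ by linear regression on the log of the excess score, a standard technique that is not necessarily the fitting procedure used in the study.

```python
import math

import numpy as np

TAU_MIN = 3.5  # time constant reported in the text, in minutes


def decay(t_min, s0, s_inf, tau):
    """Exponential relaxation from s0 toward an assumed asymptote s_inf."""
    return s_inf + (s0 - s_inf) * np.exp(-np.asarray(t_min, dtype=float) / tau)


def remaining_fraction(t_min, tau):
    """Fraction of the initial excess (s0 - s_inf) still present at time t."""
    return math.exp(-t_min / tau)


def fit_tau(t_min, score, s_inf):
    """Estimate tau from the slope of log(score - s_inf) versus t.

    Assumes s_inf is known and every score lies strictly above it.
    """
    excess = np.asarray(score, dtype=float) - s_inf
    if np.any(excess <= 0):
        raise ValueError("all scores must exceed the assumed asymptote")
    slope, _intercept = np.polyfit(np.asarray(t_min, dtype=float), np.log(excess), 1)
    return -1.0 / slope


if __name__ == "__main__":
    print(f"fraction left after tau: {remaining_fraction(TAU_MIN, TAU_MIN):.3f}")
    t = np.linspace(0.0, 30.0, 61)                # minutes, hypothetical grid
    s = decay(t, s0=5.0, s_inf=1.0, tau=TAU_MIN)  # noiseless synthetic scores
    print(f"recovered tau: {fit_tau(t, s, s_inf=1.0):.2f} min")
```

With noisy coder data, fitting `s0`, `s_inf`, and `tau` jointly by nonlinear least squares would usually be more robust than fixing the asymptote in advance.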

Haptic Behavior Toward Robot and Peers.

The goal of this analysis was to examine in more detail the objective behavioral correlates of the interactions that developed between the children and the robot. Based on extensive examination of the videotapes, we chose to focus on haptic behaviors. Contact episodes were identified and categorized by the part of the robot being touched: arm/hand, leg/foot, trunk, head, and face. The coding was performed by F.T.; the frame-by-frame inter-observer correlation with an independent coder was 0.85.

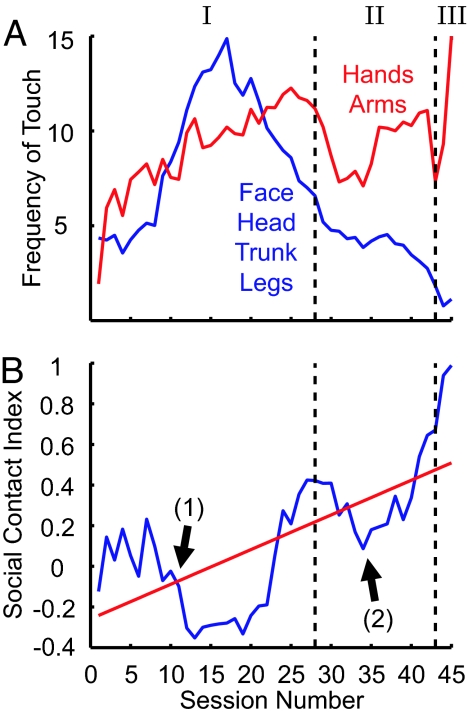

The overall number of times the robot was touched followed the same trend as the quality-of-interaction scores: It increased during phase I (slope, 1.21), declined during phase II (slope, −3.6), and increased again during phase III (slope, 5.4). Statistical cluster analysis revealed two distinct trends in the development of haptic behaviors: (i) The frequency of touch to the legs, trunk, head, and face followed a bell-shaped curve that peaked at approximately session 16. This peak was driven by the introduction, on day 11, of the social contingency described above. (ii) Touch toward the arms and hands followed a very different trend, increasing steadily in frequency throughout the study (Fig. 2A). To understand the special character of the arms and hands, we analyzed toddler-to-toddler contact episodes in the last two sessions. First, toddler-to-toddler contact was classified as “intentional” or “incidental” (independent inter-observer reliability for this judgment was 0.95). Incidental contact was distributed more or less uniformly across the body (38.4% arm/hand, 30.8% trunk, 30.8% leg/foot). In contrast, intentional peer-to-peer contact was directed primarily toward the arms and hands (52.9%) rather than other body parts (17.6% face, 11.8% trunk, 11.8% leg/foot, 5.9% head). We developed an index of social contact based on the Pearson correlation coefficient between the toddler–robot and the toddler–toddler contact distributions (Fig. 2B). This correlation increased significantly throughout the study (F(1, 44) = 11.45, P < 0.05), starting at zero in session 1 and ending with an almost perfect correlation by the last session. Thus, the children progressively reorganized the way they touched the robot, eventually touching it with the same distribution observed when they touched their peers.
There were two occasions on which the trend toward peer-like treatment of the robot was broken: (i) when a contingency was introduced such that the robot giggled in response to head contact (temporarily increasing head contact, which seldom occurs in toddler–toddler interaction) and (ii) during the first 6 days of phase II, when the robot was programmed to dance repeatedly.
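The social-contact index reduces to a Pearson correlation between two five-bin distributions. The sketch below uses the intentional toddler-to-toddler percentages reported above as the reference distribution; the per-session robot-touch counts in the example are hypothetical, and the function names are illustrative. Because Pearson's r is invariant to positive rescaling, raw counts and percentages give the same index.

```python
import math

# Body-part categories used to code contact, in a fixed order.
CATEGORIES = ("arm/hand", "leg/foot", "trunk", "head", "face")

# Intentional toddler-to-toddler contact (%), as reported in the text.
PEER_PERCENT = (52.9, 11.8, 11.8, 5.9, 17.6)


def pearson(x, y):
    """Pearson correlation; returns NaN if either input has zero variance."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def social_contact_index(robot_counts, peer=PEER_PERCENT):
    """Correlate one session's robot-touch counts per category with peer touch."""
    if len(robot_counts) != len(CATEGORIES):
        raise ValueError(f"expected one count per category: {CATEGORIES}")
    return pearson(robot_counts, peer)


if __name__ == "__main__":
    head_heavy = (2, 2, 2, 10, 1)   # hypothetical session dominated by head touch
    peer_like = (18, 4, 4, 2, 6)    # hypothetical session resembling peer touch
    print(f"head-heavy: {social_contact_index(head_heavy):+.2f}")
    print(f"peer-like:  {social_contact_index(peer_like):+.2f}")
```

A head-heavy session, like those following the giggle contingency, pulls the index down because head contact is rare between peers.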

Fig. 2.

Analyses of the haptic behavior in children. (A) Evolution of the average frequency of touch on the robot's hands/arms (red) and face/head/trunk/legs (blue). (B) Correlation between the robot–child and the child–child touch distributions. Note that the touch-triggered giggling contingency was introduced at (1), and the first part of the repetitive dance experiment ended at (2). The vertical dashed lines show the separations between phases.

Haptic Behavior Toward Robot and Toys.

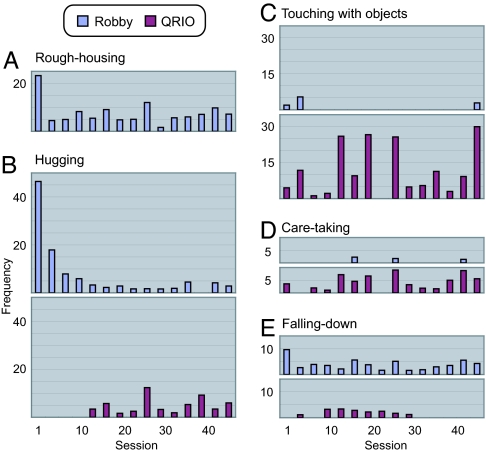

In addition to QRIO, two control toys were used throughout the sessions: (i) a soft toy resembling a teddy bear and (ii) an inanimate toy robot similar in appearance to QRIO, hereafter referred to as “Robby.” The colorful teddy bear had elicited many hugs in previous observations with children of this age; surprisingly, it was ignored throughout the study. When children touched QRIO, they did so very carefully. Robby, on the other hand, was treated like an inanimate object or a “block,” making it difficult to determine exactly where it was being touched. For this reason, haptic behaviors toward Robby and QRIO were analyzed by using four new categories: rough-housing, hugging, touching with objects, and care-taking. Rough-housing referred to behaviors that would be considered violent if directed toward human beings. Fig. 3A shows that these behaviors were often observed toward Robby but never toward QRIO. Hugging developed in distinctly different ways toward QRIO and Robby (Fig. 3B). Robby received a surprising number of hugs from day 1, yet the frequency of hugging decreased dramatically as the study progressed. The hugs toward Robby appeared to be substitutes for behaviors originally intended for QRIO, in a manner reminiscent of the displacement behaviors reported by ethologists across the animal kingdom (24). The displacement hypothesis is supported by the following observations: (i) The teddy bear, which had elicited more hugs than Robby during pilot work, was never hugged when QRIO was present, whereas Robby was hugged frequently when QRIO was present. (ii) As hugging toward QRIO increased (see SI Movie 2), hugging toward Robby decreased. (iii) Children often looked at QRIO when they hugged Robby (see SI Movie 3). It should be noted that the hugging category included behaviors such as “holding” or “lifting up,” which were in general far more difficult to perform with QRIO than with Robby, which is lighter and does not move autonomously.
Despite this, by the end of the study, the least huggable entity, QRIO, was hugged the most, followed by Robby. The most huggable toy, the teddy bear, was never hugged.

Fig. 3.

Evolution of the frequency counts of different behavioral categories throughout 45 daily sessions. Rough-housing was never observed toward the robot.

Another behavioral category that developed very differently toward Robby than toward QRIO was “touching with objects.” This category generally involved social games (e.g., giving QRIO an object or putting a hat on it). These behaviors were seldom directed toward Robby but commonly occurred with QRIO (Fig. 3C). Care-taking behaviors were also frequently observed toward QRIO but seldom toward Robby. The most common behavior in this category involved putting a blanket on QRIO/Robby while saying “night-night” (see SI Movie 4). This behavior often occurred at the end of a session, when QRIO lay down on the floor as its batteries were running out. Early in the study, some children cried when QRIO fell. We advised the teachers to tell the children not to worry about it, because the robot has reflexes that protect it from damage when it falls. However, the teachers ignored our advice and taught the children to be careful, because otherwise the children could learn that it is acceptable to push each other down. One month into the study, children seldom cried when QRIO fell; instead, they helped it stand up by pushing its back or pulling its hand, sometimes despite teacher requests (see SI Movie 5).

Automatic Assessment of Connectedness.

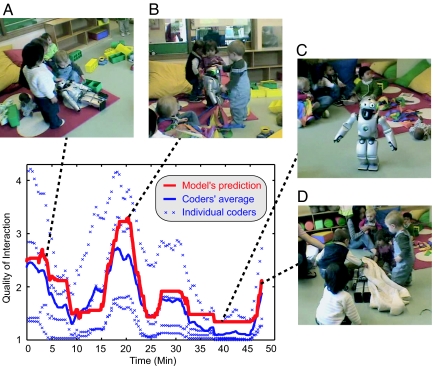

Several statistical models were developed and tested in an attempt to predict the frame-by-frame human evaluation of the quality of interaction. For every video frame in the 15 coded field sessions, the models were given eight binary inputs indicating the presence or absence of the eight haptic behavioral categories described in the previous analyses: touching head, touching face, touching trunk, touching arm/hand, touching leg/foot, hugging, touching with objects, and care-taking. The goal of the models was to predict, frame by frame, the quality-of-interaction score averaged across the four human coders. Among the models evaluated, one of the simplest and most successful was structured as follows. First, the eight inputs were converted into a single binary signal indicating whether the robot had been touched anywhere on its body, a signal that could be detected with a simple capacitance switch. This binary signal was then low-pass-filtered, time-delayed, and linearly scaled to predict the quality of interaction averaged across the four human observers. Four parameters were optimized: (i) the bandwidth of the low-pass filter, (ii) the time delay, and (iii) the additive and (iv) the multiplicative constants of the linear transformation. The optimal bandwidth was 0.0033 Hz, the optimal time delay was 3 s, and the optimal multiplicative and additive constants were 4.9473 and 1.3263, respectively. With these parameters, the correlation coefficient between the model and the human evaluation of the quality of interaction across a total of 1,244,224 frames was 0.78, almost as good as the average human-to-human agreement (0.80). More complex models that assigned different filters and weights to different haptic behaviors were also tested, but they yielded only small improvements. Fig. 4 displays the evaluations of the four human coders and the predictions of the touch model for a single session.
Representative images are also displayed from different parts of the session.
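The touch model can be sketched as a short signal-processing pipeline. The four parameter values come from the text; everything else is an assumption made for illustration: a 30-frames/s video rate, a first-order (exponential) low-pass filter whose time constant is derived from the cutoff as τ = 1/(2π f_c) ≈ 48 s, a delay implemented by shifting the filtered signal later in time, and input columns ordered as in the list above. The paper does not specify the filter family; note that 1/f_c ≈ 303 s, close to the ≈5-min time scale found for the human coders, so other readings of "bandwidth" are possible.

```python
import math

import numpy as np

FPS = 30.0           # assumed video frame rate (not stated in the text)
CUTOFF_HZ = 0.0033   # optimal low-pass bandwidth reported in the text
DELAY_S = 3.0        # optimal time delay reported in the text
GAIN = 4.9473        # optimal multiplicative constant
OFFSET = 1.3263      # optimal additive constant


def any_touch(behaviors):
    """Collapse an (n_frames, 8) array of binary haptic codes into one
    'touched anywhere' signal, as a capacitance switch would report."""
    return np.asarray(behaviors, dtype=bool).any(axis=1).astype(float)


def lowpass(x, cutoff_hz, fps):
    """First-order IIR low-pass; assumes tau = 1 / (2*pi*cutoff)."""
    tau_frames = fps / (2.0 * math.pi * cutoff_hz)
    alpha = 1.0 - math.exp(-1.0 / tau_frames)
    y = np.empty(len(x))
    acc = 0.0  # assume no touch before the session starts
    for i, v in enumerate(x):
        acc += alpha * (v - acc)
        y[i] = acc
    return y


def delay(x, seconds, fps):
    """Shift a signal later in time, padding the start with its first value."""
    d = min(int(round(seconds * fps)), len(x))
    if d == 0:
        return x.copy()
    return np.concatenate([np.full(d, x[0]), x[:-d]])


def predict_quality(behaviors, fps=FPS):
    """Frame-by-frame quality-of-interaction prediction from touch alone."""
    smoothed = lowpass(any_touch(behaviors), CUTOFF_HZ, fps)
    return OFFSET + GAIN * delay(smoothed, DELAY_S, fps)


if __name__ == "__main__":
    n = int(20 * 60 * FPS)               # hypothetical 20-min session
    codes = np.zeros((n, 8), dtype=int)  # columns follow the order in the text
    codes[n // 2:, 3] = 1                # touching arm/hand in the second half
    q = predict_quality(codes)
    print(f"start {q[0]:.3f}, end {q[-1]:.3f}")
```

Because the filtered touch signal stays between 0 and 1, predictions under these assumptions range from 1.3263 (no recent touch) toward 1.3263 + 4.9473 ≈ 6.27 (sustained touch).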

Fig. 4.

Predicting the quality of interaction. The red line indicates an automatic assessment of the quality of interaction between children and QRIO based on haptic sensing. Blue lines indicate human assessments (by four independent coders) of the quality of interaction obtained with the continuous audience response method. (A) A session begins with QRIO waking up, attracting the children's interest. (B) During music time in the classroom, children play with the robot. (C) Children grow tired of music time and lose interest in the robot. (D) Children put a blanket on the robot after it has lain down on the floor at the end of a session.

Conclusions

We presented quantitative behavioral evidence that, after 45 days of immersion in a childcare center over a period of 5 months, long-term bonding and socialization occurred between toddlers and a state-of-the-art social robot. Rather than deteriorating as the children lost interest, the interaction between the children and the robot improved over time. Children exhibited a variety of social and care-taking behaviors toward the robot and progressively treated it more as a peer than as a toy. In the current study, the robot received 1 byte of information from a human controller approximately once every 2 min. One possibility is that this byte of information is what separates current social robots from success. However, analysis of the signals sent by the human controller revealed that they did little more than increase the variability of the robot's behaviors during idle time, orient it toward the center of the room, and help it avoid collisions with stationary objects. In retrospect, we recognize that, except for the lack of simple touch-based social contingencies, the robot was almost ready for full autonomy.

The results highlighted the particularly important role that haptic behaviors played in the socialization process: (i) The introduction of a simple touch-based contingency had a breakthrough effect in the development of social behaviors toward the robot. (ii) As the study progressed, the distribution of touch behaviors toward the robot converged to the distribution of touch behaviors toward other peers. (iii) Touch, when integrated over a few minutes, was a surprisingly good predictor of the ongoing quality of social interaction.

The importance of touch in our study is reminiscent of Harlow's experiments with infant macaques raised by artificial surrogate mothers. Based on those experiments, Harlow concluded that “contact comfort is a variable of overwhelming importance in the development of affectional response” (25). Our work suggests that touch integrated on a time scale of a few minutes is a surprisingly effective index of social connectedness. Something akin to this index may be used by the human brain to evaluate its own sense of social well-being. One prediction of this hypothesis is the existence of brain systems that keep track of such an index, a prediction that could be tested with current brain-imaging methods.

It should be pointed out that the robot became part of a large social ecology that included teachers, parents, toddlers, and researchers. This situation is best illustrated by the fact that, despite our advice, the teachers taught the children to treat the robot more gently so that it would not fall as often. Because of its fluid motions, the robot appeared lifelike and capitalized on the intense sentiments that it triggered in humans in ways that other entities could not. Our results suggest that current robot technology is surprisingly close to achieving autonomous bonding and socialization with human toddlers for significant periods of time. Based on the lessons learned with this project, we are now developing robots that interact autonomously with the children of Room 1 for weeks at a time. These robots are being codesigned in close interaction with the teachers, the parents, and, most importantly, the children themselves.

Methods

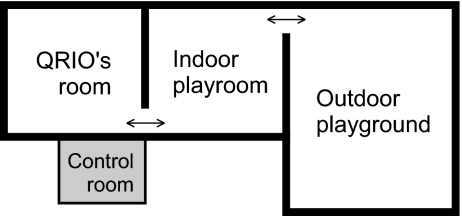

Room 1 at ECEC is divided into two indoor rooms and an outdoor playground. In all of the studies, QRIO was located in the same room, and children were allowed to move freely between the different spaces (Fig. 5). Room 1 hosts ≈12 children between 10 and 24 months of age. In the early part of the study, there were six boys and five girls. In April 2005, one boy moved out and a boy and a girl moved in. The head teacher of Room 1 was assisted by two other teachers. The teachers, particularly the head teacher, were active participants in the project and provided feedback about the daily sessions. Before the study, from October 2004 to March 2005, F.T. and J.R.M. volunteered 10 h a week at ECEC. This time allowed them to establish essential personal relationships with the teachers, parents, and children and helped to identify the challenges likely to be faced during the field sessions. The field study would not have been possible without the interpersonal connections established during these 5 months. In March 2005, QRIO was introduced to the classroom. All of the field sessions were conducted between 10:00 a.m. and 11:00 a.m. A teacher was always present in the experimental room whenever a child was there, as was a researcher in charge of safety (usually J.R.M.). The studies were approved by the UCSD Institutional Review Board under Project 041071. Informed consent was obtained from the parents of all children who participated in the project.

Fig. 5.

Layout of Room 1 at ECEC, where QRIO was immersed. There were three playing spaces, and QRIO was placed in one of these spaces. Children were free to move back and forth between spaces, thus providing information about their preferences.

Supplementary Material

Acknowledgments

We thank Kathryn Owen, the director of the Early Childhood Education Center, Lydia Morrison, the head teacher of Room 1, and the parents and children of Room 1 for their support. The study is funded by UC Discovery Grant 10202 and by National Science Foundation Science of Learning Center Grant SBE-0542013.

Abbreviation

- ECEC: Early Childhood Education Center.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

The 10-h barrier was one of the concepts that emerged from the discussions at the National Science Foundation's Animated Interfaces and Virtual Humans Workshop in Del Mar, California in April 2004.

This article contains supporting information online at www.pnas.org/cgi/content/full/0707769104/DC1.

References

- 1. Picard RW. Affective Computing. Cambridge, MA: MIT Press; 1997.

- 2. Brooks RA, Breazeal C, Marjanovic M, Scassellati B, Williamson MM. Lecture Notes in Artificial Intelligence. Vol 1562. Heidelberg: Springer–Verlag; 1999. pp. 52–87.

- 3. Weng J, McClelland J, Pentland A, Sporns O, Stockman I, Sur M, Thelen E. Science. 2001;291:599–600. doi: 10.1126/science.291.5504.599.

- 4. Breazeal CL. Designing Sociable Robots. Cambridge, MA: MIT Press; 2002.

- 5. Fong T, Nourbakhsh I, Dautenhahn K. Rob Auton Syst. 2003;42:143–166.

- 6. Kanda T, Hirano T, Eaton D, Ishiguro H. Hum–Comput Interact. 2004;19:61–84.

- 7. Kanda T, Sato R, Saiwaki N, Ishiguro H. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems; Piscataway, NJ: IEEE; 2004. pp. 2215–2222.

- 8. Pentland A. IEEE Comput. 2005;38:33–40.

- 9. Robins B, Dautenhahn K, Boekhorst RT, Billard A. Universal Access Inf Soc. 2005;4:105–120.

- 10. Kozima H, Nakagawa C, Yasuda Y. Proceedings of the 2005 IEEE International Workshop on Robot and Human Interactive Communication; Piscataway, NJ: IEEE; 2005. pp. 341–346.

- 11. Gockley R, Bruce A, Forlizzi J, Michalowski MP, Mundell A, Rosenthal S, Sellner BP, Simmons R, Snipes K, Schultz A, Wang J. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems; Piscataway, NJ: IEEE; 2005. pp. 2199–2204.

- 12. Miyashita T, Tajika T, Ishiguro H, Kogure K, Hagita N. Proceedings of the 12th International Symposium of Robotics Research; Heidelberg: Springer–Verlag; 2005. pp. 525–536.

- 13. Kahn PH, Jr, Friedman B, Perez-Granados DR, Freier NG. Interact Studies. 2006;7:405–436.

- 14. Nagai Y, Asada M, Hosoda K. Adv Rob. 2006;20:1165–1181.

- 15. Wada K, Shibata T. Proceedings of the 2006 IEEE International Workshop on Robot and Human Interactive Communication; Piscataway, NJ: IEEE; 2006. pp. 581–586.

- 16. Movellan JR, Watson J. Proceedings of the 2nd IEEE International Conference on Development and Learning; Piscataway, NJ: IEEE; 2002. pp. 34–40.

- 17. Johnson S, Slaughter V, Carey S. Dev Sci. 1998;1:233–238.

- 18. Kuroki Y, Fukushima T, Nagasaka K, Moridaira T, Doi TT, Yamaguchi J. Proceedings of the 2003 IEEE International Workshop on Robot and Human Interactive Communication; Piscataway, NJ: IEEE; 2003. pp. 303–308.

- 19. Ishida T, Kuroki Y, Yamaguchi J. Proceedings of the 2003 IEEE International Workshop on Robot and Human Interactive Communication; Piscataway, NJ: IEEE; 2003. pp. 297–302.

- 20. Movellan JR, Tanaka F, Fortenberry B, Aisaka K. Proceedings of the 4th IEEE International Conference on Development and Learning; Piscataway, NJ: IEEE; 2005. pp. 80–86.

- 21. Tanaka F, Movellan JR, Fortenberry B, Aisaka K. Proceedings of the 1st Annual Conference on Human–Robot Interaction; New York: ACM; 2006. pp. 3–9.

- 22. Fenwick I, Rice MD. J Advertising Res. 1991;31:23–29.

- 23. Tanaka F, Suzuki H. Proceedings of the 2004 IEEE International Workshop on Robot and Human Interactive Communication; Piscataway, NJ: IEEE; 2004. pp. 419–424.

- 24. Eibl-Eibesfeldt I. Q Rev Biol. 1958;33:181–211. doi: 10.1086/402403.

- 25. Harlow HF. Am Psychol. 1958;13:673–685.
