Abstract

Health information exchange (HIE) projects are sweeping the nation, with hopes that they will lead to high-quality, efficient care, yet the literature on their measured benefits remains sparse. To the degree that the field adopts a common set of evaluation strategies, duplicate work can be reduced and meta-analysis will be easier. The United Hospital Fund sponsored a meeting to address HIE evaluation. HIE projects are diverse with many kinds of effects. Assessment of the operation of the HIE infrastructure and of usage should be done for all projects. The immediate business case must be demonstrated for the stakeholders. Rigorous evaluation of the effect on quality may only need to be done for a handful of projects, with simpler process studies elsewhere. Unintended consequences should be monitored. A comprehensive study of return on investment requires an assessment of all effects. Program evaluation across several projects may help set future policy.

Keywords: Health information exchange, regional health information organization, evaluation

INTRODUCTION

Health information exchange (HIE) projects—which are often run by regional health information organizations—may be a stepping stone to a fully interoperable health information infrastructure that improves the quality and efficiency of health care in the United States. Around the nation, there are a few successful networks [1], but there are many initiatives and networks in their early stages.

The literature on the measured benefits of HIE remains sparse. In addition, most previous financial evaluations were based on expert panel estimates rather than on primary data [2]. The real financial and clinical benefits of these technologies therefore remain unclear. For example, while the Patient Safety Institute predicted a 20-to-1 return on investment at the national level for clinical data exchange [3], the 2003 Interim Report on the Santa Barbara County Data Exchange predicted only a marginal positive return on investment for the implementers [4]. Overhage et al. [5] found a $26 savings per emergency department patient due to clinical data exchange in one hospital, but no significant effect in another. Improvements in public health reporting [6] and savings in test ordering [7] have been noted. Models predict large benefits [2], but these are based on predictions from an aggregate of individual studies and expert opinion rather than on observation of actual HIE implementation. As a result, the financial returns of HIE remain uncertain.

More broadly, we do not have sufficient information to judge whether local HIE efforts will be viable in the long run and whether they will be the best approach to developing a national infrastructure. Despite the number of HIE projects currently in progress, there is a risk that we might learn little from these projects due to limited resources, lack of focus, and limited experience in evaluation.

To help address these issues, a meeting was funded by the United Hospital Fund and convened on November 1–2, 2006 in New York City, NY. Experts on HIE and experts on evaluation gathered to discuss HIE evaluation and address how to create an HIE evaluation framework. The result of that discussion is summarized in this report.

HEALTH INFORMATION EXCHANGE AND ITS EFFECTS

Studying the impact of HIE requires understanding the many kinds of effects of HIE. In general, HIE projects begin with a primary use case or set of use cases that represent the main purpose or thrust of the project. There may be secondary use cases from the conception, or they may arise as the project matures. There may also be unintended and unexpected uses and misuses of the project’s information system.

An HIE project undergoes a series of steps, from early conception to mature maintenance. Reviewing the steps can uncover possible unintended and unexpected effects. In the planning stages, the idea of the HIE project arises in some stakeholder. The stakeholder works to flesh out the idea and build support among the other stakeholders, including provider organizations, clinicians, payers, plans, residents, business groups, and government. Initial formal participants are selected, use cases become more concrete, and the business case is developed including identifying initial sources of funding or resources. In reality, many projects are conceived in a given locale, and some number of them may merge to form a viable project. Eventually, a detailed initial plan is developed and implementation begins.

The style of implementation may vary, but in general there is some specification of requirements, a decision on how to implement, the purchase or development of software, the acquisition of hardware, and the development of interfaces. Privacy and security policies are developed. Depending on the design, various databases may be populated: a record locator service, a central data repository, or edge servers. Users are trained. An evaluation plan is developed and baseline measures may be taken. The user application is deployed at the users’ locations. Operation includes both normal operation and problems such as downtime, slow response, and bugs. Development is ongoing and the use case evolves and expands.

Use cases are diverse. The primary ones often involve improving information access for clinical care. The providers that stand to benefit the most are those that provide care on entry to the system, such as private offices, clinics, and emergency departments. The HIE project generally fills a knowledge deficit, be it recognized or not. Data may be pulled (the user explicitly requests data for a patient, acknowledging a deficit) or pushed (the user receives unsolicited data, potentially filling an unknown deficit). A use case may be focused on a specific area such as cardiology, or broadly defined to address medication safety. Some use cases emphasize care efficiency rather than quality and decision making. For example, electronic data exchange may be cheaper and faster than traditional paper laboratory reports. Use cases may involve other stakeholders besides clinicians, such as researchers and public health departments.

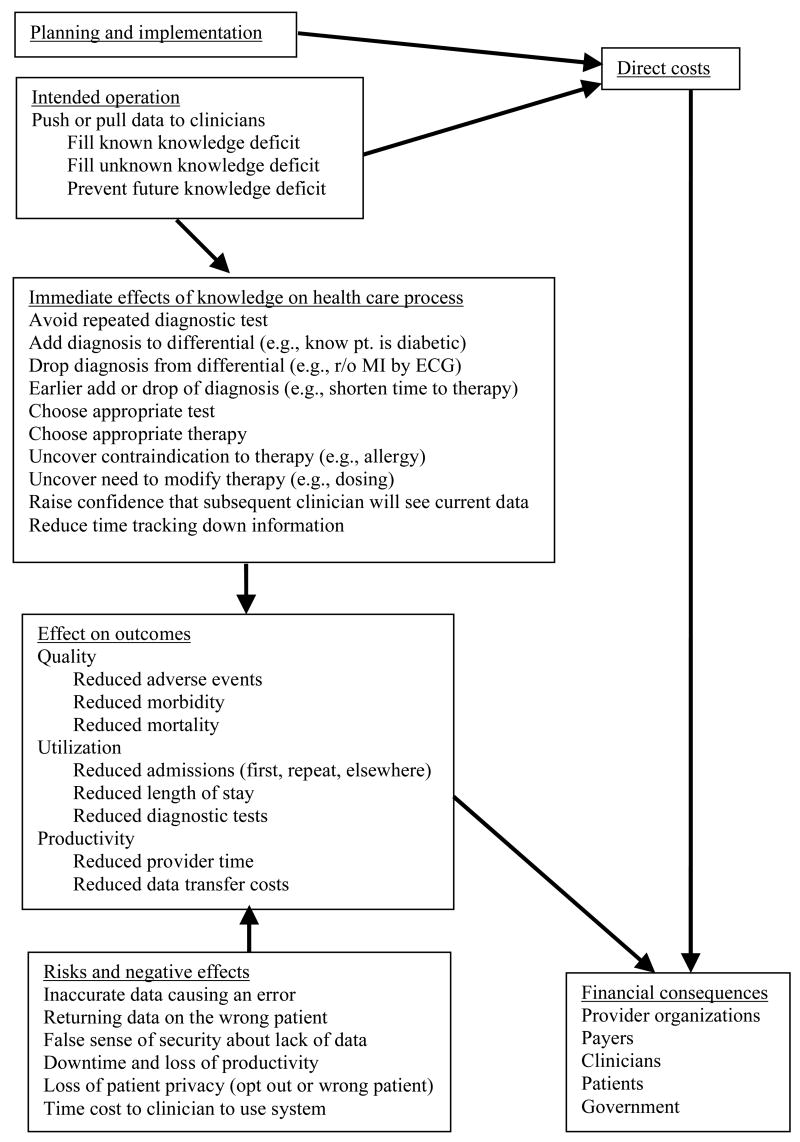

Figure 1 shows one delineation of the causes and effects for a primary use case. Planning, implementation, and operation result in direct costs. Operation of the intervention has effects on the health care process, which, in turn, should affect health care outcomes. There may also be unintended consequences and risks that affect outcomes. These various effects may have financial consequences.

Figure 1. Focus on primary effects

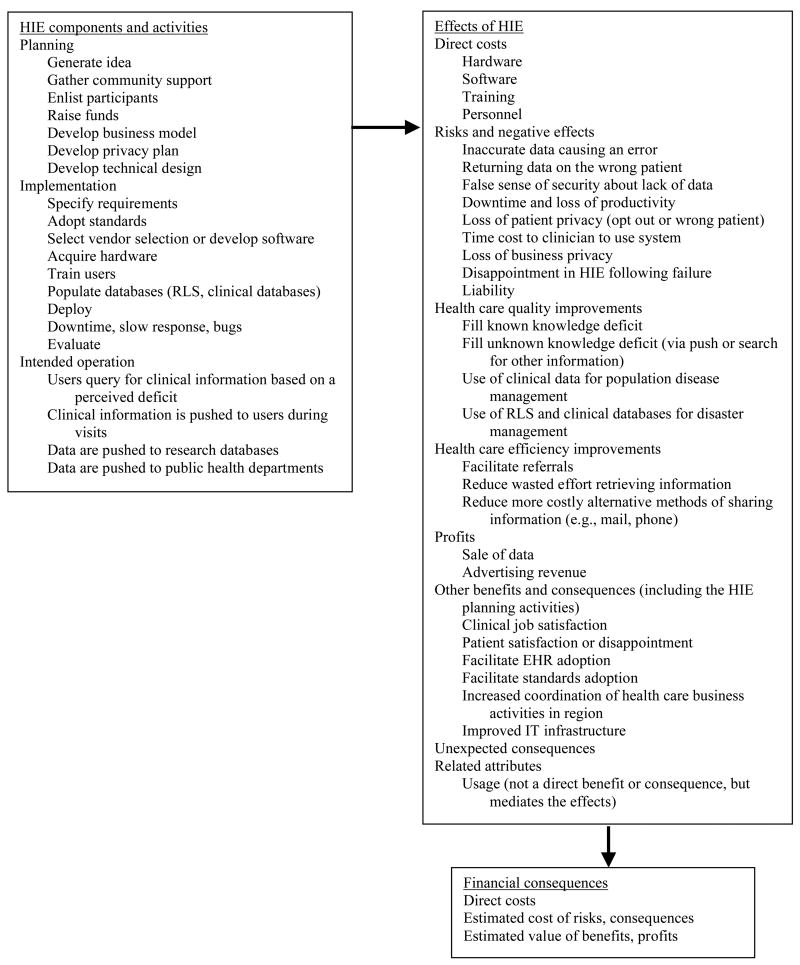

Figure 2 shows a broader range of effects. HIE components and activities result in a variety of effects, which can then have financial consequences. There may also be negative and positive unintended and unexpected effects. For example, the process of generating support may have effects in the community beyond the concrete provision of data. Earlier failures may facilitate future HIE projects by introducing the idea of data exchange and by seeding future ideas of feasible data exchange. They may also impede future projects by setting expectations of failure. Researchers who are evaluating HIE should consider these many effects in designing their studies.

Figure 2. Summary of all effects

ISSUES IN EVALUATING HEALTH INFORMATION EXCHANGE

There is clearly a need for evaluation to determine the effect of HIE on the quality and efficiency of care and to determine the return on investment for society and stakeholders. The AHRQ HIE Toolkit [8] provides a general approach to health information technology evaluation and examples of what can be evaluated, but it does not provide a roadmap for moving forward. What is needed at the present time is not another toolkit, but a public framework that serves, in effect, as a meta-toolkit. That is, the framework should guide in the selection from among the many possible factors that can be studied. Agreeing on a set of methodologies will reduce duplicated effort in designing studies and will enable future meta-analysis.

One challenge to agreeing on a set of methodologies is the realization that there is no single model for HIE. The term covers a broad range of health information technology related to transferring data. A single framework for HIE evaluation will therefore be difficult to define. It may be more productive to treat HIE not as a single entity, but as a range of health information technologies that happen to have an information exchange component. A range of evaluations may therefore be required to learn from the diversity of HIE projects. Focusing too quickly on a narrow set of methodologies may limit what can be learned.

There are a number of concrete HIE evaluation initiatives already in progress. One of the most mature is the Indiana Health Information Exchange, which began with the Indiana Network for Patient Care in 1993. The network has 280 data sources from an entire region that flow via Health Level Seven into a queue and a database with over 600 million observations. A set of services is built on top of this infrastructure, including emergency department access, ambulatory practice message routing, anonymous querying of pathology reports, and syndromic surveillance. Each service has an expected outcome and pays its share of the infrastructure. Clinical messaging, for example, saves $30 million per year with 150,000 messages per day. One ten-doctor practice saved $160,000 per year in physician and staff time. The overall evaluation approach is to assess each service in its own context rather than to establish a common evaluation plan.

The MidSouth eHealth Alliance in Memphis, Tennessee is one of the more advanced recent projects. Its first use case is sharing information for review in emergency departments. Its evaluation team plans to assess cost, use and usability, clinical outcomes of disease-specific hypotheses (neonatal group B β-hemolytic streptococcus, asthma, congestive heart failure, and immunization), dollars saved in the care delivery process, and workflow gains. At present, the control strategy is being worked out: compare patients with data in the HIE database to patients without data or, of patients who do have data in the database, compare patients who had data accessed to those who did not have data accessed. In the former case, it is difficult to gather data on the control patients, although claims data may suffice. Biases may arise, because patients may not have data in the database due to opting out or being treated at a location that cannot send data. Early evaluation of their HIE infrastructure has shown problems they did not expect, such as increased data latency.

New York State allocated $55 million for the first phase of its Health Care Efficiency and Affordability Law for New Yorkers Capital Grant Program (HEAL NY Program) [9] to address HIE and other health information technology. Twenty-six grants were awarded, with nine in New York City. The Health Information Technology Evaluation Center (HITEC) was created to evaluate the program. HITEC initially planned six types of evaluation: usage, financial, quality, safety, consumer satisfaction, and provider satisfaction. HITEC has a joint role: to facilitate the evaluation of individual HIE projects and to evaluate the HEAL NY Program as a whole. It is likely that some of these projects will succeed and some will fail, and the HITEC evaluation will enable the correlation of strategy with success and failure. In addition, many of these failures will contain hidden successes, such as the seeds for a future successful project. Therefore, when it is completed, the HITEC evaluation will hopefully tell the policy story of what is happening in New York and provide information about broad programs, not just individual projects.

In practice, HITEC will begin with six projects that will be selected via an environmental scan. The scan includes market characteristics, organization and governance, perceived barriers to success, technologic aspects, strategic vision, and capacity for research. HITEC has found that simply talking with the project leaders has in some cases led them to adjust their interventions and evaluations. Therefore, having readily available expertise and collaboration may be as important to a broad program like HEAL NY as a formal framework.

There are several possible ways to organize an HIE evaluation framework. It may be organized by the goal of the evaluation. Formative evaluations steer the design of the project and often focus on issues like usability and workflow. Summative evaluations allow others to generalize from the project and often focus on impact. Monitoring is another form of evaluation that focuses on ongoing measurement. A framework may be organized by the type of evaluation. An evaluation may be a rigorous quantitative study such as a randomized clinical trial; it may be a rigorous qualitative study such as a series of focus groups that are analyzed using grounded theory to ascertain unintended consequences; or it may be an operational analysis that is designed to assess progress on a project efficiently (e.g., usage statistics).

A framework may be organized by the subject being evaluated. For example, an HIE program may be broken into three levels: the platform infrastructure and the operations of that platform, the interventions and services that use the platform, and the program as a whole. The platform evaluation includes usage (who, how often), breadth, completeness, and confidentiality. The intervention evaluation includes quantitative and qualitative iterative assessment of expected effects on outcome, such as mortality, quality, safety, efficiency, usage, and satisfaction. The program evaluation addresses policy and regional outcomes by aggregating the evaluations of the interventions and by looking at project and program characterizations.

Prioritizing evaluation methods requires understanding the important goals of HIE and the important challenges facing HIE. For example, one of the most difficult challenges for HIE projects is securing upfront funding and creating a sustainable business model. Some successes do exist, such as the Indiana Health Information Exchange and the HealthBridge project in the Greater Cincinnati tri-state area, but the challenge remains large.

The financial impact of an HIE project can be seen from different points of view: the immediate business case differs from the full return on investment from a societal point of view. For the immediate business case, the investors and other key stakeholders must meet their financial and programmatic objectives. A full assessment of return on investment requires knowledge of a broader range of costs and effects. For example, there may be an eventual effect on public health that is unrelated to the interests of the initial investors. Furthermore, analysis of return on investment is difficult because it may be hard to identify which entities have lost income and to quantify the result. For example, better emergency department triage may lead to fewer admissions, which in turn may mean lost income for the admitting hospital. Several return on investment models have been formulated; examples include the eHealth Initiative cost model tool and market assessment tool, the Center for Information Technology Leadership value tool, and return-on-investment tools that have been developed by health plans.

A major driving force for HIE is improving the quality of care, although quality is difficult to measure. HIE evaluation should draw on existing efforts in measuring quality. The Institute of Medicine Quality Chasm report uses six features in a quality framework: safe, effective, patient-centered, timely, efficient, and equitable. The Ambulatory Care Quality Alliance (AQA) has developed 26 quality measures, and they may be relevant to HIE. An informal review of the measures revealed that most of the indicators should show some impact, although tobacco use and smoking cessation were thought to be less fruitful. For each indicator area, HIE may cause better care, better documentation of care, or better efficiency by reducing duplicate testing. Unfortunately, the 26 measures may only be impacted modestly by HIE, and the major effects of HIE may fall outside of the AQA measures.

One challenge in demonstrating an effect on quality is that traditional health information technology interventions such as automated decision support may create a bigger impact than HIE itself. HIE projects that include the new deployment of health information technology interventions should ideally separate the cost of HIE and the incremental benefit of HIE if they are to assess the true value of the information exchange, though this will be challenging. Sample sizes need to be large enough to measure this smaller impact.

It also remains unclear whether the quality improvements found will be sufficient to motivate investment in HIE, although there are of course other benefits. Despite the existing literature on the impact of health information technology, it has been difficult to obtain broad investment. HIE, with its lower impact, is therefore likely to be even more difficult. One criticism of previous evaluations of health information technology is that most were carried out at a few unusual health care centers. Therefore, multi-project evaluation may be necessary to obtain sufficient evidence about quality to encourage investment, and quality may need to be paired with efficiency to achieve increased investment.

Evaluation of the HIE platform is important to steer the design of the project, to monitor the ongoing operation of the project, and to offer lessons to other projects. The evaluation includes issues around transferring data: completeness, timeliness, and accuracy of data transfer, success in matching patients, and confidentiality breaches. Strictly speaking, usage and impact studies do not apply to the HIE platform itself, but to the intervention (service) that uses the platform. Most of the HIE evaluation will be specific to the use cases.

HIE by definition involves transferring information among parties that are not otherwise formally connected. Therefore, it is difficult to predict all the effects of HIE, and a rigorous assessment of the effect of HIE must include unintended consequences, both positive and negative. Unintended consequences can be measured through a partnership between researchers with experience in uncovering unintended effects and personnel who know the project. Rapid ethnographic assessment is an example of an approach that does not require large investment.

Program evaluation, such as evaluation of several projects funded by one funding program, is becoming more important as the interest in HIE grows. For example, twelve states have issued executive orders about HIE and may benefit from each other’s experience. Such programs include a series of steps—awareness, regional activity, state leadership, statewide planning, statewide plan, and statewide implementation—and each of these can undergo evaluation.

An individual project must balance its own need for evaluation versus the evaluation needs for the program and society. Successful implementation strategies may be more important in the program evaluation than proof of return on investment or improved quality. Reasons for success or failure are difficult to sort out, however, because cause and effect are more difficult to assign than association. For example, following a checklist of HIE implementation steps will not guarantee success, but failing to follow those steps may be symptomatic of deeper problems that will lead to failure.

A rule of thumb for evaluation is that you must spend 10% of the budget on evaluation in order to learn from what you are doing. Not all evaluations need to be done on every project, however. Thus, for example, a rigorous evaluation of the quality impact of an HIE intervention may only need to be done three times if the results are consistent. After that, process measures may suffice to confirm that the intervention is functioning as intended. Similarly, time-motion studies need not be repeated endlessly.

Evaluation takes time. Many clinical effects may not be seen until 18 months after launching the effort and it may take 6 months to gather data, so there may be a 2-year lag from launching to demonstrating an effect. Therefore, clinical outcome may be too difficult to assess on the first round of evaluation. Nevertheless, it may be important to gather baseline data early and to influence how the project is being configured and learn what data elements need to be collected.

Process measures will be available faster than outcomes. Patient safety effects may occur quickly, but a large sample size is required to detect them; looking for medication errors rather than actual adverse drug events will dramatically lower the required sample size. The immediate business case (e.g., reducing the cost of disseminating laboratory results) can be assessed faster than a full return-on-investment analysis that includes all costs and benefits. Gathering stories about how HIE has helped the health care process may create enough incentive for investors until hard evidence about return on investment arrives. This is one of the evaluation thrusts of the MidSouth eHealth Alliance.
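The sample-size point can be made concrete with a standard normal-approximation formula for comparing two proportions. The following Python sketch is illustrative only: the event rates are hypothetical values chosen for the example, not figures from any HIE study, and `n_per_group` is a name introduced here.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p_control: float, p_intervention: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-sided comparison of
    two proportions, using the unpooled normal approximation."""
    if p_control == p_intervention:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (p_control * (1 - p_control)
                + p_intervention * (1 - p_intervention))
    effect = p_control - p_intervention
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical rates (assumptions): the same 25% relative reduction,
# applied to a frequent process event and to a rare outcome event.
n_errors = n_per_group(0.05, 0.0375)     # medication errors
n_events = n_per_group(0.005, 0.00375)   # adverse drug events
print(n_errors, n_events)
```

Under these assumed rates, the rarer outcome requires a much larger sample for the same relative reduction, which is the reason a more frequent process measure can be the more practical endpoint.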

Carrying out a good evaluation is a balancing act. Like the man in the joke who looks for his keys under the lamppost rather than where he dropped them because the light is better there, we tend to measure what is easy to measure rather than what needs to be measured. The evaluation must be both feasible and useful, but it will never be perfect.

In summary, HIE has no effect until the platform is put to some concrete use. Every project should carry out some platform-level monitoring, such as measuring data movement by user and data type. Projects can tailor their intervention-specific evaluation to the likely impact of the intervention, the timeframe, and the evaluation budget. The AQA measures are a starting point for some quality interventions, but more sensitive indicators should be used if possible. Not every HIE project should attempt to carry out the same detailed quality study; as a rough guide, about three similar evaluations need to be published. The business case for each project should be evaluated. At the policy level, the impact of broad programs like the HEAL NY Program should be assessed.

EVALUATION PRIORITIES

Based on the analysis of the effects of HIE and on the above discussion, HIE evaluation can be organized into a series of steps or foci. Assume that an HIE project has a data exchange platform, a primary use case for that platform, and potential secondary use cases, and that the project is part of a larger program. The steps are ordered here roughly by complexity and by the time required to complete them.

1. Platform evaluation

The information exchange infrastructure must undergo initial evaluation to verify its success, and it must be monitored over the course of the project. Data transfer may be assessed in terms of accuracy, completeness, and timeliness; these concepts may need to be defined in terms of the primary use case. Patient matching should be verified. The system (software running on the hardware platform) can be characterized by uptime and performance.
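As a minimal sketch of how completeness and timeliness of data transfer might be computed, the following Python example summarizes a batch of expected messages. The `Transfer` record, its field names, and the acceptable-delay threshold are assumptions made for illustration; a real project would define them in terms of its primary use case.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Transfer:
    """One message a data source was expected to deliver (hypothetical record)."""
    message_id: str
    sent_at: datetime
    received_at: Optional[datetime]  # None means the message never arrived


def platform_metrics(transfers: List[Transfer],
                     max_delay: timedelta) -> Dict[str, float]:
    """Summarize completeness and timeliness for a batch of expected transfers."""
    if not transfers:
        raise ValueError("no transfers to evaluate")
    # Delays exist only for messages that actually arrived.
    delays = [t.received_at - t.sent_at
              for t in transfers if t.received_at is not None]
    timely = sum(1 for d in delays if d <= max_delay)
    return {
        # Fraction of expected messages that arrived at all.
        "completeness": len(delays) / len(transfers),
        # Fraction of expected messages that arrived within max_delay.
        "timely_fraction": timely / len(transfers),
        "median_delay_seconds": (median(d.total_seconds() for d in delays)
                                 if delays else float("nan")),
    }
```

Accuracy and patient matching would need a separate comparison against a reference standard, such as manual chart review, and are not covered by this sketch.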

2. Usage studies

Given the primary use case, usage can be measured. Important features include who is using the system and how much. This can usually be accomplished efficiently based on the system’s audit logs. User satisfaction and system usability may also be assessed through surveying, focus groups, observation, or usability laboratories.
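A usage summary of this kind can be sketched in a few lines of Python. The audit-log layout assumed here (one tuple of user, role, and patient per access) is hypothetical; actual audit logs differ by system and would first need to be mapped into such a form.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical audit-log entry: (user_id, user_role, patient_id).
AuditEntry = Tuple[str, str, str]


def usage_summary(entries: Iterable[AuditEntry]) -> Dict[str, object]:
    """Count who is using the system and how much, from audit-log entries."""
    accesses_by_user: Counter = Counter()
    accesses_by_role: Counter = Counter()
    patients = set()
    for user_id, role, patient_id in entries:
        accesses_by_user[user_id] += 1
        accesses_by_role[role] += 1
        patients.add(patient_id)
    return {
        "total_accesses": sum(accesses_by_user.values()),
        "distinct_users": len(accesses_by_user),
        "distinct_patients": len(patients),
        "accesses_by_role": dict(accesses_by_role),
        # The three most active users, as (user_id, access_count) pairs.
        "top_users": accesses_by_user.most_common(3),
    }
```

Counts like these describe how much the system is used, not whether it is usable or satisfying; those questions still call for the surveys, focus groups, and observation mentioned above.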

3. Immediate business case

For the project to stay in operation, the immediate business case must be assessed. It may be based on the direct costs of the project and the measurable projected financial benefits. The business case serves to reassure the investors and other key stakeholders that the project is proceeding as expected with a reasonable profit or cost-benefit ratio.

4. Assessment of clinical and administrative impact

Benefits such as quality improvements take more time and are more difficult to measure. Actual demonstration that the HIE project has caused improvement in clinical outcomes is most difficult and requires a large controlled trial. Such studies need not be duplicated in every HIE project; three or so may be enough. After that, confirmation that process variables are improving may serve as sufficient evidence that the HIE project is clinically successful.

5. Unintended consequences

Qualitative evaluation techniques can be used to find unintended and unexpected effects, both positive and negative.

6. Comprehensive return on investment

The full return on investment can be calculated only after the direct and indirect costs are estimated and the clinical and administrative impact is measured and assigned a value. Unintended consequences must be included. There is the overall return on investment to society, and there are the individual returns for the distinct stakeholder groups.
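The distinction between societal and stakeholder returns can be illustrated with a small Python sketch. It assumes the simplest definition of return on investment, net benefit divided by cost, and assumes that costs and valued benefits have already been attributed to each stakeholder; the function names and the reserved "society" key are choices made for this example only.

```python
from typing import Dict


def roi(benefits: float, costs: float) -> float:
    """Return on investment as net benefit divided by cost (assumed definition)."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs


def roi_by_stakeholder(benefits: Dict[str, float],
                       costs: Dict[str, float]) -> Dict[str, float]:
    """Per-stakeholder ROI for every group that bears a cost, plus a
    societal ROI over all benefits and all costs."""
    result = {name: roi(benefits.get(name, 0.0), cost)
              for name, cost in costs.items()}
    # Groups that benefit without investing (for example, public health)
    # have no individual ROI but still count toward the societal total.
    result["society"] = roi(sum(benefits.values()), sum(costs.values()))
    return result
```

With inputs of this shape, the societal return can be positive while one investing stakeholder's own return is negative, which is why the two views need to be reported separately.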

7. Program evaluation

Assuming the project is part of a larger program, the program itself can be assessed, and the results for the individual projects can be aggregated. Knowledge of the factors that may have led to the success or failure of individual projects may help future projects and other programs. The characteristics of the program, such as the funding model and requirements, can be correlated with overall success of its projects and used to develop policy for the future.

Referring to the hopes for the HEAL NY Program, participant David Liss (NewYork-Presbyterian) offered a projected sequence of evaluation results as a series of hypothetical annual headlines:

Health care providers are talking to each other

The network is built

Man in Syracuse has his life saved by a doctor in New York City

New York State saves millions of dollars

New Yorkers are healthier

If the program is indeed this successful, it would be a shame not to realize it due to failed or insufficient evaluation. With an appropriate evaluation strategy, society can learn of its successes and failures and use the information to improve HIE in the future.

Acknowledgments

This work was funded in part by the United Hospital Fund, New York, NY, and by the National Library of Medicine (R01 LM06910 “Discovering and applying knowledge in clinical databases”).

Contributor Information

George Hripcsak, Columbia University.

Rainu Kaushal, Cornell University.

Kevin B. Johnson, Vanderbilt University.

Joan S. Ash, Oregon Health & Science University.

David W. Bates, Harvard Medical School.

Rachel Block, United Hospital Fund.

Mark Frisse, Vanderbilt University.

Lisa M. Kern, Cornell University.

Janet Marchibroda, eHealth Initiative.

Marc Overhage, Indiana University School of Medicine.

Adam Wilcox, Columbia University.

References

1. McDonald CJ, Overhage JM, Barnes M, Schadow G, Blevins L, Dexter PR, Mamlin B; INPC Management Committee. The Indiana network for patient care: a working local health information infrastructure. An example of a working infrastructure collaboration that links data from five health systems and hundreds of millions of entries. Health Aff (Millwood). 2005;24(5):1214–20. doi: 10.1377/hlthaff.24.5.1214.

2. Walker J, Pan E, Johnston D, Adler-Milstein J, Bates DW, Middleton B. The value of health care information exchange and interoperability. Health Aff (Millwood). 2005;(Suppl Web Exclusives):W5-10–W5-18. doi: 10.1377/hlthaff.w5.10.

3. Classen DC, Kanhouwa M, Will D, Casper J, Lewin J, Walker J. The Patient Safety Institute Demonstration Project: A model for implementing a local health information infrastructure. Journal of Healthcare Information Management. 2005;19(4):75–86.

4. Brailer DJ, Augustinos N, Evans LM, Karp S. Moving Toward Electronic Health Information Exchange: Interim Report on the Santa Barbara County Data Exchange. California HealthCare Foundation; 2003.

5. Overhage JM, Dexter PR, Perkins SM, Cordell WH, McGoff J, McGrath R, McDonald CJ. A randomized, controlled trial of clinical information shared from another institution. Ann Emerg Med. 2002;39:14–23. doi: 10.1067/mem.2002.120794.

6. Overhage JM, Suico J, McDonald CJ. Electronic laboratory reporting: barriers, solutions and findings. J Public Health Manag Pract. 2001;7:60–6. doi: 10.1097/00124784-200107060-00007.

7. Stair TO. Reduction of redundant laboratory orders by access to computerized patient records. J Emerg Med. 1998;16(6):895–7. doi: 10.1016/s0736-4679(98)00106-1.

8. Cusack CM, Poon EG. The AHRQ National Resource Center Data Exchange Evaluation Toolkit. Agency for Healthcare Research and Quality National Resource Center for Health Information Technology; 2006.

9. New York State. Request for Grant Applications - HEAL NY Phase 1. Available at: www.health.state.ny.us/funding/rfa/0508190240/index.htm. Accessibility verified May 5, 2007.