Abstract

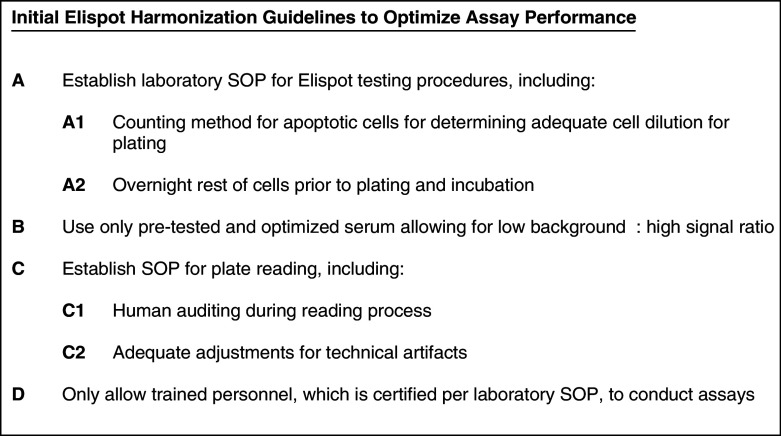

The Cancer Vaccine Consortium of the Sabin Vaccine Institute (CVC/SVI) is conducting an ongoing large-scale immune monitoring harmonization program through its members and affiliated associations. The program was launched as an external validation effort with an international Elispot proficiency panel of 36 laboratories in 2005. A second panel with 29 participating laboratories followed in 2006, allowing lessons from the first panel to be applied. Critical protocol choices, as well as standardization and validation practices among laboratories, were assessed through detailed surveys. Although panel participants had to follow general guidelines to allow comparison of results, each laboratory was free to use its own protocols, materials and reagents. The second panel showed significantly improved overall performance, as measured by the ability to detect all predefined responses correctly. Protocol choices and laboratory practices that can have a dramatic effect on the overall assay outcome were identified and led to the following recommendations: (A) establish a laboratory SOP for Elispot testing procedures, including (A1) a counting method for apoptotic cells to determine adequate cell dilution for plating, and (A2) an overnight rest of cells prior to plating and incubation; (B) use only pre-tested serum optimized for low background and high signal; (C) establish a laboratory SOP for plate reading, including (C1) human auditing during the reading process and (C2) adequate adjustments for technical artifacts; and (D) allow only trained personnel, certified according to laboratory SOPs, to conduct assays. The recommendations under (A) were found to make a statistically significant difference in assay performance, whereas the remaining recommendations are based on practical experience confirmed by the panel results but could not be tested statistically.
These results provide the immunotherapy community with initial harmonization guidelines for optimizing Elispot assay performance. Further optimization is underway with ongoing panels.

Keywords: Elispot, Proficiency panel, Validation, Harmonization, Immune monitoring

Introduction

Elispot is a widely used assay for immune monitoring [6, 17, 25, 39]. Measuring immune responses has been accepted as an important endpoint in early clinical trials for prioritizing further development of vaccines or other immunotherapies [11, 22, 24, 26, 38]. Despite the widespread use of various immune assays for exactly that purpose, reported results are often met with skepticism, for two main reasons: (1) high variability among results from the same laboratory and/or among different laboratories, and (2) the lack of any requirement for laboratories conducting immune testing to report their standardization, validation and training strategies or their assay acceptance criteria. This is surprising, since the reporting of results for other clinical endpoints, e.g., side effects, must follow strict guidelines and definitions.

The Clinical and Laboratory Standards Institute (CLSI) has clearly stated the need for internal validation of immune assays as well as for external validation in proficiency panels [28]. Proficiency panels are a common means for clinical laboratories to demonstrate that they can perform clinical tests at a level that permits patient testing. A proficiency panel is defined as a program in which multiple specimens are periodically sent to laboratories for analysis, and in which each laboratory’s results are compared with those of the other laboratories and/or with an assigned value [27]. Proficiency panels serve various purposes:

To provide regulatory and sponsoring agencies with confidence that reported data were generated with the standards and rigor necessary to support product licensure.

To provide an external validation tool for individual labs.

To provide proof to patients and volunteers that necessary measures have been taken to allow successful study participation [3].

Several comparisons of Elispot performance among laboratories have been published [5, 32, 33]. A two-step approach to assay harmonization is described by the C-IMT monitoring panel in this issue [2]. The earliest report, a four-center comparative trial in 2000, showed that most participants, each following its own protocol, were able to correctly detect low-frequency responses or the absence of a response against specific peptides in PBMCs from six donors [33]. Another four-center comparison was conducted among members of the Elispot standardization group of the ANRS in France [32]. This study demonstrated good overall qualitative and quantitative agreement in Elispot results among the participating laboratories when HIV-negative and HIV-positive donors were tested for reactivity against a variety of peptides. All laboratories used their own protocols but shared a high level of Elispot experience. An important program was launched by the NIAID for 11 international laboratories participating in HIV-1 clinical trials [5]. The panel demonstrated good concordance in the qualitative detection of specific immune responses in previously defined and tested donors, but also notable inter-laboratory and intra-sample variability in spot counts, cell recovery and viability. This observation was addressed with strict standardization across all panel members, and the panel was repeated twice with all laboratories following a standardized protocol. Variability decreased under these conditions but was not eliminated (Cox, personal communication). The strict standardization approach among participating HIV vaccine laboratories is justified by the nature of HIV vaccine testing programs: immune monitoring for these vaccine trials is performed at many sites simultaneously, and results from different trials need to be comparable in order to identify the most suitable vaccine candidates.
Importantly, the experimental Elispot setup is similar or identical in most laboratories, where PBMCs are tested against a variety of peptides [25, 31].

In contrast, immune monitoring approaches in the cancer vaccine field are more heterogeneous, reflecting the wide variety of vaccine designs, cancer types, and available antigen-presenting cells. Standardizing the entire Elispot protocol across laboratories is therefore not feasible. We set out to devise a strategy to identify issues and deficiencies in current Elispot practices and common sources of assay variability within and between laboratories, with the further goal of standardizing the identified factors in an assay harmonization effort across laboratories.

In 2005, the CVC/SVI initiated an Elispot proficiency panel program to achieve this goal. In addition to offering an external validation program, the CVC addressed the need for such a strategy by comparing assay performance across the field, identifying critical protocol choices, and gaining an overview of training and validation practices among participating laboratories.

For this program, predefined PBMCs from four donors with different levels of reactivity against two peptide pools were sent to participants for Elispot testing. Laboratories were also required to provide cell recovery and viability data and to complete surveys describing their protocol choices and their training and validation status.

In response to the survey results, the CVC/SVI established requirements for laboratories to participate in future proficiency panels, which included the existence of a Standard Operating Procedure (SOP) prior to joining the program. Further, individualized assay performance assessment was offered to all laboratories, together with suggestions for implementation of protocol optimization steps.

The results of the second Elispot proficiency panel, conducted a year later, demonstrated a significant improvement. Together with the survey data, these results allowed the identification of protocol choices critical for successful assay performance (Fig. 1).

Fig. 1.

Initial guidelines for harmonization of the Elispot assay to optimize assay performance and reproducibility derived from two international proficiency panels, based on their findings and trends observed

Materials and methods

Participants and organizational setup

All participants were members of the Cancer Vaccine Consortium or its affiliated institutions, the Ludwig Institute for Cancer Research (LICR) and the Association for Immunotherapy of Cancer (C-IMT). Laboratories were located in ten countries (Australia, Belgium, Canada, Germany, Italy, Japan, France, Switzerland, UK, and USA). Each laboratory received an individual lab ID number. Panel leadership was provided by a scientific leader experienced in Elispot, in collaboration with the CVC Executive office. SeraCare BioServices, Gaithersburg, MD, served as the central laboratory, providing cells, pretesting and shipping services, as well as logistical services such as blinding of panelists. Laboratory IDs were not revealed to the panel leader, the CVC or the statistician during the panel.

Thirty-six laboratories, including the central laboratory, participated in panel 1, and 29, including the central laboratory, in panel 2. Twenty-three laboratories participated in both panels; six new panelists joined the second round, and thirteen dropped out after the first panel. The main reasons for dropping out were a shift in assay priorities and failure to meet the criteria for panel participation. Several groups stated that a single participation fulfilled their need for external validation.

PBMCs and peptides

PBMCs from healthy donors were obtained from a commercial donor bank and were manufactured and processed under GMP conditions using established Standard Operating Procedures at SeraCare. PBMCs were frozen using a rate-controlled freezer and transferred to the vapor phase of liquid nitrogen. A three-lot validation study was performed [20] in which the following were validated: cell separation procedure, freezing media, effect of dispensing on function, freezing procedure, and shipping on dry ice. Functionality and viability were shown to be maintained throughout the procedure. In addition, Elispot values from fresh PBMCs and from frozen PBMCs shipped on dry ice were nearly equivalent.

Each vial of PBMCs contained enough cells to ensure a recovery of at least 10 million cells under SeraCare’s SOP.

PBMCs were pretested at the central laboratory for reactivity against the CEF [7] and CMV pp65 peptide pools [23]. The CEF peptide pool was obtained through the NIH AIDS Research and Reference Reagent Program, Division of AIDS, NIAID, NIH. It contains 32 peptides of 8–11 amino acids known to elicit CD8+ T cell responses and is widely used as a control in IFNγ Elispot assays [7]. The CMV pp65 peptide pool was a generous gift of the NIAID and Becton Dickinson. This pool consists of 135 15-mers overlapping by 11 amino acids and has been shown to elicit both CD8+ and CD4+ T cell responses [23]. PBMCs were selected for no, low, medium and strong responses against both peptide pools and were repeatedly tested by Elispot at SeraCare to confirm responder status. Responder categories were defined arbitrarily by spot number per well (200,000 PBMC), given here as the average of the panel 1 and panel 2 medians: non-responder, 1 (CMV and CEF); low responder, 18 (CMV) and 40 (CEF); medium responder, 98 (CMV) and 127 (CEF); high responder, 396 (CMV) and 398 (CEF).

Peptide pools were resuspended in DMSO and further diluted with PBS to a final concentration of 20 μg/ml. Aliquots of 150 μl of peptide pool were prepared for final shipment to participants. Corresponding PBS/DMSO aliquots for medium controls were also prepared. Participants were blinded to the content of these vials, which were labeled as “Reagent 1, 2 or 3”.
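The 20 μg/ml shipped stock and the 1 μg/ml working concentration specified in the assay guidelines imply a 1:20 dilution in the well. A minimal sketch of the C₁V₁ = C₂V₂ arithmetic follows. The final well volume of 100 μl is a hypothetical value chosen for illustration only; it is not stated in the panel guidelines described here.

```python
def stock_volume_per_well(stock_ug_per_ml, final_ug_per_ml, well_volume_ul):
    """Volume of peptide stock (µl) giving the final concentration in the well (C1*V1 = C2*V2)."""
    if final_ug_per_ml > stock_ug_per_ml:
        raise ValueError("final concentration cannot exceed the stock concentration")
    return final_ug_per_ml * well_volume_ul / stock_ug_per_ml

STOCK = 20.0            # µg/ml, shipped peptide pool stock
FINAL = 1.0             # µg/ml, working concentration per well
WELL_VOLUME_UL = 100.0  # hypothetical final well volume (assumption, not from the guidelines)

dilution_factor = STOCK / FINAL
per_well = stock_volume_per_well(STOCK, FINAL, WELL_VOLUME_UL)
wells_per_pool = 4 * 6  # four donors, six replicates each
total_needed = per_well * wells_per_pool
print(dilution_factor, per_well, total_needed)  # 20.0 5.0 120.0
```

Under that assumed volume, a single 150 μl aliquot would cover all 24 wells of one peptide pool; a larger well volume would change this conclusion.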

All cells and reagents sent to participants in both panels were obtained from the same batches.

Cells and reagent vials were shipped to all participants for overnight delivery on enough dry ice to last 48 h. Shipping was performed by SeraCare under its existing SOPs.

Elispot

Participants received a plate layout template and instructions for a direct IFNγ Elispot assay, to be performed in a single Elispot plate. Each donor was tested in six replicates against each of three reagents (medium, CEF and CMV peptide pools). In addition, 24 wells containing T cell medium only were included to assess the occurrence of false-positive spots. About 200,000 PBMC/well were tested against 1 μg/ml peptide pool or the equivalent amount of PBS/DMSO. All other protocol choices were left to the participants, including the Elispot plate, antibodies, spot development, use of DNAse, resting of cells, T cell serum, and cell counting and spot counting methods. All plates were reevaluated in a blinded fashion at ZellNet Consulting (Fort Lee, NJ) with a KS Elispot system (Carl Zeiss, Thornwood, NY), using KS Elispot software 4.7 (panel 1) and 4.9 (panel 2). Every well of every plate was audited.
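The required design fits exactly on one 96-well plate: 4 donors × 3 reagents × 6 replicates = 72 wells, plus 24 medium-only wells. The sketch below builds one possible layout. The row-by-row arrangement and the donor labels D1–D4 are hypothetical choices for illustration, not the template actually distributed to participants.

```python
from collections import Counter
from itertools import product

DONORS = ["D1", "D2", "D3", "D4"]
REAGENTS = ["medium", "CEF", "CMV"]
REPLICATES = 6
ROWS = "ABCDEFGH"
COLS = range(1, 13)

def build_layout():
    """Return {well_id: (donor, reagent)} for a hypothetical 96-well arrangement."""
    wells = [f"{r}{c}" for r, c in product(ROWS, COLS)]  # A1..A12, B1..B12, ..., H12
    conditions = [
        (donor, reagent)
        for donor, reagent in product(DONORS, REAGENTS)
        for _ in range(REPLICATES)
    ]
    # Remaining wells get T cell medium only (no PBMC) to check for false-positive spots.
    conditions += [(None, "medium_only")] * (len(wells) - len(conditions))
    if len(conditions) != len(wells):
        raise ValueError("design does not fit on one 96-well plate")
    return dict(zip(wells, conditions))

layout = build_layout()
counts = Counter(layout.values())
print(counts[(None, "medium_only")], counts[("D1", "CEF")])  # 24 6
```

With this arrangement, rows A–F hold the 72 donor wells and rows G–H the 24 medium-only wells.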

Since the focus of this study was on assay performance and protocol evaluation rather than on the definition of a positive response, we prospectively defined the ability to detect a response (independent of magnitude) using the following empirical method: antigen-specific spot counts per 2 × 10⁵ PBMCs had to be >10 and at least three times the background reactivity. Similar approaches have been described elsewhere [5, 8].
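The empirical detection rule translates directly into a small function. This sketch assumes that replicate wells are summarized by their mean, which the text does not specify, and the spot counts are illustrative values rather than panel data. The second call shows how artifacts counted in medium-control wells can raise the apparent background enough to mask a genuine response, the situation illustrated in Fig. 3.

```python
from statistics import mean

MIN_SPOTS = 10   # antigen-specific spots per 2 x 10^5 PBMC must exceed this value
MIN_FOLD = 3.0   # ...and be at least this multiple of the background

def is_response(antigen_counts, background_counts):
    """Empirical detection rule for one donor and reagent.

    Assumption: replicate wells are summarized by their mean.
    """
    antigen = mean(antigen_counts)
    background = mean(background_counts)
    return antigen > MIN_SPOTS and antigen >= MIN_FOLD * background

antigen_wells = [22, 25, 19, 24, 21, 20]  # illustrative weak response
clean_medium = [2, 3, 1, 4, 2, 3]         # background counted correctly
artifact_medium = [9, 8, 10, 7, 9, 8]     # artifacts included in medium counts

print(is_response(antigen_wells, clean_medium))     # True
print(is_response(antigen_wells, artifact_medium))  # False
```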

Statistical analysis

The following parameters were calculated for the overall panel and for each participant’s performance, using either lab-specific counts or central recounts: the mean, standard deviation, coefficient of variation (CV), median, minimum and maximum spot counts for each donor and reagent and for the medium-only wells. Box plots were used to illustrate the distribution of spot counts across the panel for each test condition. Further, individual results were represented as box plots comparing lab counts with recounts, central lab results and overall panel results. For panel 2, results from repeating laboratories were also compared in box plot format for each donor and condition. Fisher’s exact test was used to compare the proportions of laboratories that failed to detect responses in each panel. Student’s t test was applied to compare recovery and viability.
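For reference, the per-condition summary statistics listed above can be computed as follows. The sketch uses the sample standard deviation (n - 1 denominator), which is an assumption because the text does not specify the estimator; the replicate counts are illustrative.

```python
from statistics import mean, median, stdev

def summarize(counts):
    """Mean, SD, CV (%), median, minimum and maximum of replicate spot counts."""
    m = mean(counts)
    sd = stdev(counts)  # sample SD (n - 1 denominator); needs at least two values
    return {
        "mean": m,
        "sd": sd,
        "cv_pct": 100 * sd / m if m else float("nan"),  # CV undefined for a zero mean
        "median": median(counts),
        "min": min(counts),
        "max": max(counts),
    }

lab_counts = [40, 44, 38, 46, 42, 42]  # illustrative replicate wells
stats = summarize(lab_counts)
print({k: round(v, 2) for k, v in stats.items()})
```

Applying the same function to a laboratory's own counts and to the central recounts of the same wells gives the kind of side-by-side variability comparison shown in Fig. 3.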

Results

Feasibility

In the first proficiency panel, shipping and Elispot testing among 36 laboratories from 9 countries were conducted without delays. The success of this panel, the largest of its kind to date, demonstrates the feasibility of such large international studies under the described organizational setup. The second panel, with 29 participating laboratories from 6 countries, followed the approach of panel 1. However, customs delays of dry ice shipments to some international sites required repeated shipments of cells and antigens to these destinations. Based on this experience, liquid nitrogen dry shippers are being implemented for international destinations in the third CVC panel round in 2007.

Recovery and viability of PBMCs in panel 1 and 2

Participants were asked to record cell recovery and viability immediately after thawing (Table 1). The mean and median of both parameters were almost identical in both panels and for all four donors (mean cell recovery between 11.5 and 13.3 million cells; mean viability between 85 and 91%). Only a small percentage of laboratories recovered fewer than 8 million cells (13% in panel 1 and 14% in panel 2). The percentage of groups reporting less than 70% viability was 7% in both panels.

Table 1.

Cell recovery and viability in both proficiency panels

| Parameter | Donorᵃ | Mean P1/P2ᵇ | Median P1/P2 | Minimum P1/P2 | Maximum P1/P2 |
|---|---|---|---|---|---|
| Cell recovery (10⁶ cells) | 1 | 12.5/11.5 | 12.0/12.0 | 6.8/1.0 | 26.4/18.8 |
| | 2 | 13.3/12.3 | 13.2/12.6 | 5.5/0.3 | 28.4/22.4 |
| | 3 | 13.1/12.8 | 13.6/12.8 | 6.7/1.7 | 25.1/24.3 |
| | 4 | 12.8/12.4 | 11.9/12.9 | 5.6/6.2 | 34.4/22.7 |
| Viability (%) | 1 | 88/85 | 89/89 | 48/58 | 100/98 |
| | 2 | 87/88 | 89/90 | 57/67 | 100/98 |
| | 3 | 91/87 | 93/90 | 54/69 | 100/100 |
| | 4 | 86/89 | 90/92 | 43/75 | 100/98 |

ᵃ PBMCs from the same donors and batches were used in both panels

ᵇ P1/P2 refer to data from panel 1 (P1) and panel 2 (P2)

Interestingly, only 4 of the 10 laboratories in panel 1 and 4 of the 7 laboratories in panel 2 with recoveries below 8 million cells were at international locations. Similarly, only 1 of 5 laboratories in panel 1 and 2 of 5 in panel 2 reporting viabilities below 70% were international sites. This indicates that the destination of the dry ice shipments had no effect on overall cell recovery and viability.

We also investigated whether low (<8 million) or very high (>20 million) cell recovery influenced spot counts. We reasoned that such values might reflect erroneous cell counts and therefore incorrect cell dilutions: underestimating the cell number would lead to too many cells being plated and falsely high spot counts, whereas overestimating it would lead to too few cells and falsely low spot counts. However, apart from a few sporadic cases, there was no correlation between cell counts and spot counts in either direction (data not shown). Only one laboratory, with both low recovery and low viability, had peptide pool-specific spot counts far below the panel median for all donors.
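The hypothesis tested above can be made concrete. Assuming that cells are dispensed by volume based on the counted concentration, and that spot numbers scale roughly linearly with the number of cells plated (a simplification), a counting error propagates inversely into the cells plated and hence into spot counts. The concentrations and the 50-spot reference value below are hypothetical; as noted above, the panel data did not show such a correlation beyond sporadic cases.

```python
TARGET_CELLS_PER_WELL = 200_000  # cells per well required by the panel guidelines

def cells_actually_plated(counted_per_ml, true_per_ml, target=TARGET_CELLS_PER_WELL):
    """Cells delivered to a well when the dispensed volume is based on a possibly wrong count."""
    volume_ml = target / counted_per_ml  # volume chosen from the counted concentration
    return volume_ml * true_per_ml       # cells actually present in that volume

def expected_spots(spots_at_target, plated, target=TARGET_CELLS_PER_WELL):
    """Assumption: spot number scales linearly with the number of cells plated."""
    return spots_at_target * plated / target

TRUE_CONC = 2e6  # hypothetical true concentration, cells/ml
for counted in (1e6, 2e6, 4e6):  # underestimate, correct count, overestimate
    plated = cells_actually_plated(counted, TRUE_CONC)
    print(f"counted {counted:.0e}/ml -> {plated:,.0f} cells, ~{expected_spots(50, plated):.0f} spots")
```

Halving the counted concentration doubles the cells plated, and doubling it halves them, which is why counting accuracy matters when spot counts are compared at a fixed cell number per well.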

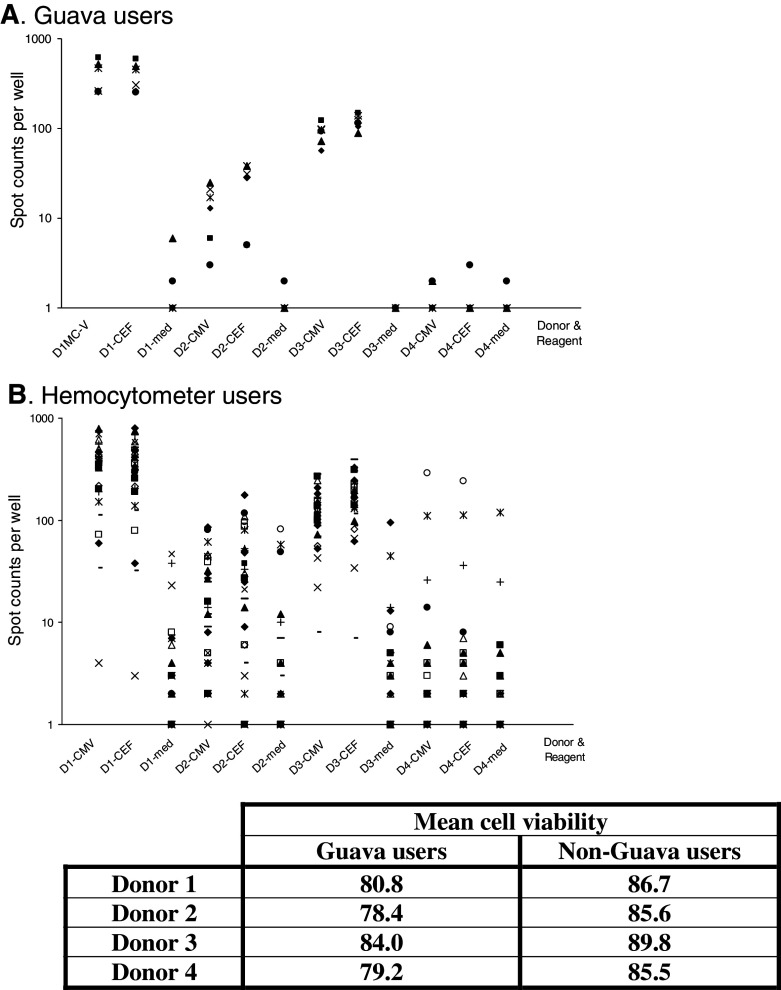

Among users of an automated cell counter (Guava), there was no trend in recovery (range 6.7 to 25.1 million cells) and no difference from the overall panel recovery data. In contrast, the mean cell viability per donor reported by Guava users was significantly lower than that reported by hemocytometer users (P < 0.01; see the table in Fig. 5).

Fig. 5.

Distribution of mean spot counts (upper two graphs) and cell viability (table) among users of a Guava automated cell counter (a) and users of a hemocytometer (b). Spot counts per well (200,000 cells) are shown on a logarithmic scale. D1–4 refer to the donor tested, with D1 the strong, D2 the low, D3 the medium and D4 the non-responder. The tested reagent is indicated (med, medium). The table below the figure presents the mean viability per donor reported by Guava users and by users of other cell counting methods (one laboratory used an automated cell counter from Beckman Coulter; all others used a hemocytometer with trypan blue exclusion)

Elispot results in panel 1

All 36 laboratories completed testing of all four donors against medium, CEF and CMV peptide pools. Spot appearance and size, as well as the occurrence of artifacts, differed dramatically among laboratories (not shown). Four outlier laboratories were identified that detected fewer than half of the responses correctly. In all four cases, detected responses were well below the panel median, and background reactivity in medium controls was often high (up to 270 spots/well). No protocol choices could be identified that obviously accounted for the suboptimal performance. One of the four laboratories had little Elispot experience at the time of the panel, and another reported that a less experienced scientist had performed the assay. A third outlier repeated the assay and performed adequately. No feedback was available from the fourth group.

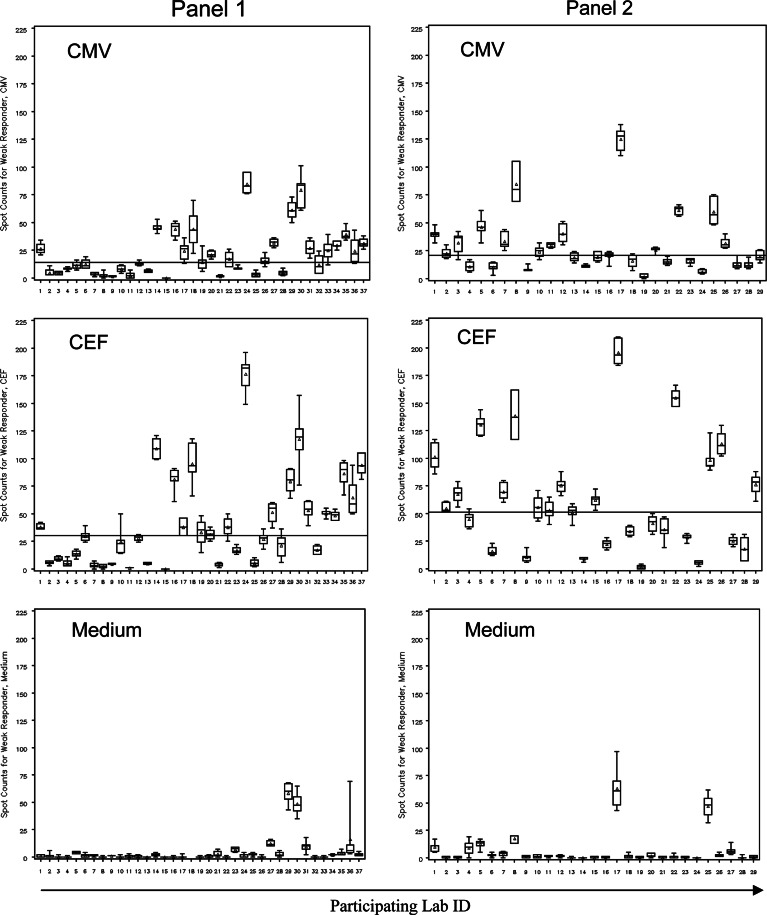

Thirty-two of the 36 laboratories correctly identified the medium and strong responders as well as the non-responder. However, almost 50% of participants had difficulty detecting the low responder (mean spot counts/well: CEF = 42, CMV = 22), which was therefore chosen for illustration. Figure 2 shows the distribution of responses measured for this donor against all three reagents in both panels, including intra-laboratory variability.

Fig. 2.

Laboratory spot counts and variability for the low responder across panel participants. Panel 1 left column, panel 2 right column. The reagent tested is indicated. Lab-specific spot counts per 200,000 cells are depicted as box plots, with the box representing the interquartile range, the triangle the mean and the horizontal line the median. Maximum and minimum spot counts are indicated by the upper and lower marks. The horizontal line across each graph indicates the overall panel median. The central laboratory performed the assay under two different conditions in panel 1; results from both experiments are presented, hence 37 laboratories for panel 1. Laboratory ID numbers do not correspond between panels. In panel 1, laboratory #18 reported spot counts for the medium control as high as 270 per well (mean 81, median 34.5); these data were omitted from the graph so that all other panel data could be displayed properly. Intra-laboratory variability, variability among participants, and reactivity against medium are representative of all responder PBMCs tested

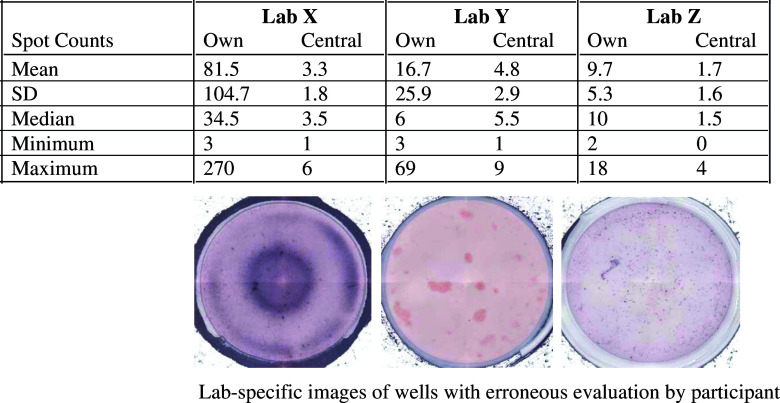

In panel 1, 17 laboratories did not detect the response against the CMV peptide pool. In one case, the response was missed because of high reactivity against medium. Three other laboratories missed the response because of inaccurate spot counting, specifically in the medium control wells, where artifacts were erroneously included in the counts (Fig. 3). Reevaluation of those plates revealed spot counts indicating a response against CMV.

Fig. 3.

Elispot assay results can be confounded by plate evaluation accuracy. The table demonstrates spot counts for PBMC/medium control wells with many artifacts from three different laboratories (Lab X, Y, Z). Respective well images are shown below each column for that specific group. Differences in lab-specific spot counts (“own”) and counts from reevaluation in an independent laboratory (“central”) including resulting variability measures are presented in the table

A similar pattern was found for response detection against the CEF peptide pool. Thirteen laboratories failed to detect the response, one because of high reactivity against medium and one because of inaccurate spot counting.

Notably, 23 groups reported false-positive spots in the medium-only wells, ranging from 1 to 26 spots/well. Reevaluation revealed that the actual number of groups with false-positive spots was lower (12), and that the number of false-positive spots per well ranged from 0 to 8.

Survey results about protocol

During the first panel, participants had to provide information about their protocol choices: plates, prewetting of PVDF membranes, antibodies, enzyme, substrate, use of DNAse during PBMC thawing, resting of cells, serum used, cell counting, and plate reader. Protocol choices varied widely across panel participants. The most common choices for the parameters listed above were as follows: use of PVDF plates (64%) prewetted with ethanol (52%), coated with Mabtech antibodies (67%) at 0.5–0.75 μg/well (33%); spot development with HRP (53%) from Vector Laboratories (33%) using AEC (44%) from Sigma (42%); no use of DNAse when thawing cells (83%), and no resting period for cells prior to the assay (53%); use of human serum (64%); cell counting with trypan blue exclusion using a hemocytometer (78%); and plate evaluation with a Zeiss reader (36%).

There were some clear trends among international sites: preferred use of the AP/BCIP/NBT development system (83% vs. 29% in the US), of nitrocellulose plates (75% vs. 21% in the US), and of Mabtech antibodies (83% vs. 58% in the US). Conversely, 7 of the 8 laboratories using an automated cell counter were located in the US.

We stratified panel participants into three groups: (1) outliers (failed to detect more than 50% of responses correctly), (2) laboratories that missed only the weak responses, and (3) laboratories with correct qualitative response detection in all four donors. We then looked for specific parameters that could account for each group’s panel performance, but could not identify any parameter that seemed responsible for missed response detection. Indeed, laboratories with the same overall protocol choices (except serum) could show very different assay performance, whereas laboratories with different protocol choices could have almost identical spot counts (Fig. 4). We attribute our failure to identify protocol choices with a statistically significant advantage or disadvantage to (1) the study design, in which open protocol choices allowed participants to follow their own SOPs, (2) the wide overall range of reagents and materials used (e.g., 7 different sources for the coating antibody with 10 different total antibody amounts, 10 different enzyme sources, 7 different Elispot reader systems, cell resting times between 0.5 and 20 h, serum choices unique to each laboratory), and (3) the small sample sizes resulting from this dispersion of protocol choices. These widely dispersed individual protocol choices did not allow data to be aggregated for an adequately powered statistical analysis.
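The three-way stratification can be written as a simple rule. In the sketch below, each laboratory's results are represented as a hypothetical mapping from (donor, peptide pool) to whether its qualitative call matched the predefined responder status, including a correct "no response" call for the non-responder; D2 is the low responder, following the donor coding of Fig. 5. Laboratories that fit none of the three groups described above (for example, a single missed call in a stronger responder) are labeled "other", which is an assumption of this sketch rather than a category from the study.

```python
WEAK_DONOR = "D2"  # low responder, following the donor coding used in Fig. 5

def stratify(calls, weak_donor=WEAK_DONOR):
    """Assign a laboratory to a performance group.

    calls: hypothetical mapping {(donor, pool): True if the qualitative call
    matched the predefined responder status}.
    """
    wrong = [(donor, pool) for (donor, pool), ok in calls.items() if not ok]
    if len(wrong) > len(calls) / 2:
        return "outlier"
    if not wrong:
        return "all correct"
    if all(donor == weak_donor for donor, _ in wrong):
        return "missed weak only"
    return "other"  # not one of the three groups described in the text

ALL_CORRECT = {(d, p): True for d in ("D1", "D2", "D3", "D4") for p in ("CEF", "CMV")}
missed_weak_cmv = {**ALL_CORRECT, ("D2", "CMV"): False}

print(stratify(ALL_CORRECT))      # all correct
print(stratify(missed_weak_cmv))  # missed weak only
```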

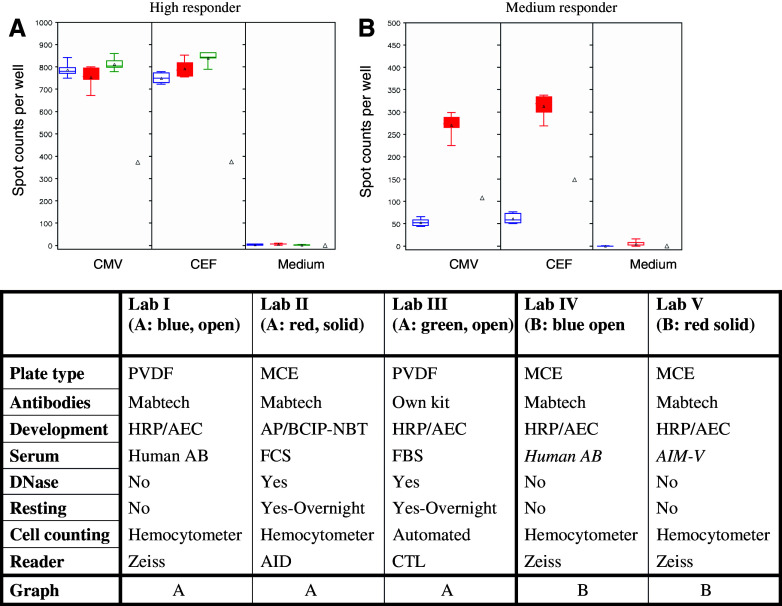

Fig. 4.

Examples of concordant spot counts among three laboratories (Lab I–III) using different Elispot protocols (a, high responder) and of discordant spot counts between two laboratories (Lab IV–V) using almost identical protocol choices (b, medium responder). Lab-specific spot counts per 200,000 cells are depicted as box plots, with the box representing the interquartile range, the triangle the mean and the horizontal line the median. Maximum and minimum spot counts are indicated by the upper and lower marks. The triangle refers to the overall panel mean for that specific condition. Results of Lab I–III are shown in a, and results of Lab IV–V in b. The table refers to the figure above and provides information about specific protocol choices

One observed trend was that users of an automated cell counter (7 laboratories used a Guava cell counter) performed better overall (Fig. 5): there were no outliers in this group, and only two of these laboratories failed to detect the weak responder (29% vs. 52% of non-Guava users).

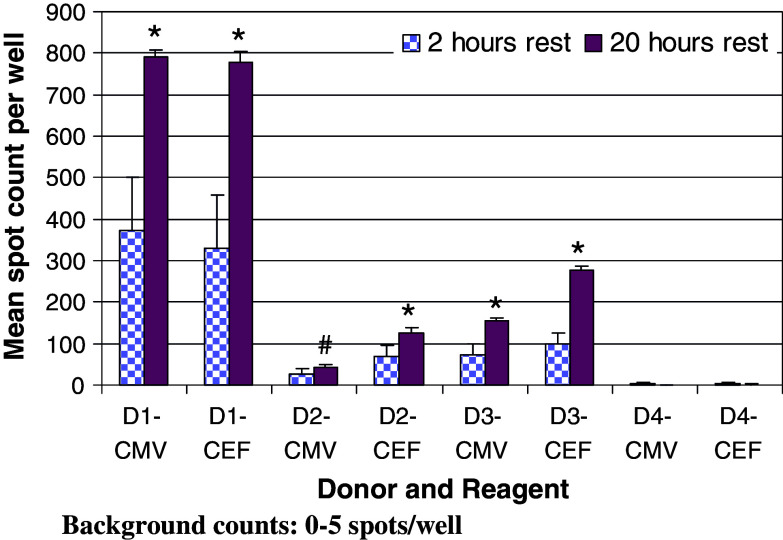

Almost half of the participants (47%) rested cells before adding them to the Elispot plate, for periods ranging from 0.5 to 20 h. The central laboratory performed an informative side-by-side comparison, running two tests in parallel according to its SOP, except that its standard 20 h resting period was replaced by a 2 h resting period in the second test. The results, summarized in Fig. 6, show a significant increase in spot numbers for all peptide pool-specific responses when cells were rested for 20 h, without an increase in background reactivity (P < 0.05 for the weak responder against CMV; P < 0.01 for all other antigen-specific responses in all donors).
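The significance statements for the resting comparison are based on Student's t test. A minimal sketch of the pooled-variance two-sample t statistic follows, assuming six replicate wells per condition (as in the panel plate design), which gives 10 degrees of freedom. The two-sided critical values 2.228 (P = 0.05) and 3.169 (P = 0.01) are standard table values for df = 10. The spot counts are illustrative, not data from the central laboratory's experiment.

```python
from math import sqrt
from statistics import mean, variance

# Two-sided critical values of Student's t for df = 10 (two groups of six wells).
T_CRIT_DF10 = {0.05: 2.228, 0.01: 3.169}

def students_t(a, b):
    """Two-sample t statistic with pooled variance (Student's t test)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))

rested_20h = [60, 64, 58, 62, 66, 60]  # illustrative replicate spot counts
rested_2h = [40, 44, 38, 42, 46, 40]

t = students_t(rested_20h, rested_2h)
for alpha, crit in T_CRIT_DF10.items():
    print(f"alpha={alpha}: t={t:.2f}, significant={abs(t) > crit}")
```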

Fig. 6.

Comparison of 2 h (checkered bars) and 20 h (solid bars) resting periods for cells after thawing, before addition to the assay. D1–4 CEF/CMV refer to the specific donor and peptide pool tested. Background reactivity for all donors and testing conditions was between 0 and 5 spots (not shown). The standard error is shown. * indicates a statistically significant difference (P < 0.01) in spot counts between 2 and 20 h resting periods for a given donor and reagent; # indicates a statistically significant difference with P < 0.05 (Student’s t test)

Survey results about validation and training practices

During the lively discussion of the panel 1 results and protocol survey at the Annual CVC meeting in Alexandria in November 2005, it was suggested that differences in the experience, standardization and validation status of participating laboratories might account for the variability and performance observed. In response, we conducted a survey among panelists, in which 30 laboratories participated. As expected, experience and Elispot usage varied considerably. Some laboratories had established the Elispot assay less than one year before panel testing, whereas others had used the assay for more than 10 years. The experience of the scientist who actually performed the panel assay also varied widely.

Interestingly, even though two-thirds of participants reported having specific training guidelines for new Elispot performers, more than 50% never or rarely checked the scientist’s performance after the initial training.

Almost all laboratories indicated that they used an SOP that had been at least partially qualified and/or validated. Validation tools and strategies varied widely. Only 12 groups monitored variability, whereas 23 reported the use of external controls of some kind (e.g., T cell lines, predefined PBMC, parallel tetramer testing).

Thirteen groups had some kind of assay acceptance criteria in place. Of the 20 different criteria reported, none was described by more than one laboratory.

Mirroring these survey results, 20 laboratories believed that they needed to implement more validation steps. All groups except one expressed strong interest in published guidelines for Elispot validation and training strategies.

Elispot results in panel 2

Based on the experience from the first panel, the acceptance criteria for participation in the program were redefined: only laboratories with established SOPs were accepted. The second panel used the same experimental setup as the first, with the same batches of PBMCs, peptides and control reagents. Twenty-nine laboratories participated, including the central laboratory. This time, no outlier performers were identified. The proportion of groups that did not detect the weak responder dropped dramatically (P < 0.01), from 47% (17/36 laboratories) in panel 1 to 14% (4/29) in panel 2 (Fig. 2). The overall panel median for the CMV response of the weak responder increased from 14 spots in panel 1 to 21 spots in panel 2, and that for the CEF response from 30 to 51 spots.
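The drop in missed weak-responder detection between panels can be checked with Fisher's exact test on the counts reported above (17 of 36 laboratories in panel 1, 4 of 29 in panel 2). The sketch below implements the usual two-sided version with the standard library only: it sums the hypergeometric probabilities of all tables with the same margins that are no more likely than the observed table. It is a generic implementation, not necessarily the software used for the original analysis.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, row1)

    def prob(x):  # hypergeometric probability that the top-left cell equals x
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))

# Rows: panel 1, panel 2; columns: missed the weak responder, detected it.
p = fisher_exact_two_sided(17, 36 - 17, 4, 29 - 4)
print(f"two-sided P = {p:.4f}")  # falls below 0.01, in line with the reported P < 0.01
```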

Of the 23 laboratories that repeated the panel, 8 had changed their protocol before panel 2, 3 of them in direct response to the results of the first panel. One outlier laboratory from panel 1 participated in panel 2 and improved its performance, detecting all responses correctly according to the reevaluation counts; only its own plate evaluation missed the low CMV responder. Overall, 4 of the 23 repeating laboratories (17%) did not detect the low CMV responder, 3 of which had also missed this response in panel 1. Ten of the 23 groups missed the low CMV responder in panel 1, but 7 of these laboratories detected it in panel 2. Only one repeating laboratory detected the low CMV response in panel 1 but not in panel 2. This represents a clear performance improvement for the repeating laboratories (47% of all laboratories missed this response in panel 1) and highlights the usefulness of repeated panel participation as an external training program.

We performed an in-depth analysis of the results and, where available, previous survey responses and personal communications from participants that missed the weak responder, as well as from laboratories with only marginal detection of the response. This allowed us to narrow down the possible sources of these performances. Central reevaluation revealed that two laboratories missed responses because of inaccurate evaluation: they either included artifacts in spot counts or failed to count the majority of true spots. One laboratory did not follow the assay guidelines. The majority of these laboratories, however, followed common protocol choices but either had very low response detection across all donors and antigens or detected very high background reactivity in some or all donors. This pattern pointed to serum as the possible cause of suppressed reactivity or non-specific stimulation. Three of these laboratories informed the CVC that retesting their serum indicated they had used a suboptimal serum during the panel, and that they had since successfully introduced a different serum or medium into their protocol, with improved spot counts. Serum choices included human AB serum, FCS, FBS, and various serum-free media; no difference in assay performance was detectable between these groups.

Discussion

The CVC conducted two large international Elispot proficiency panels in which participants tested four batches of predefined donor PBMC for reactivity against the CEF and CMV peptide pools. Participants had to follow common guidelines, such as the number of cells per well and the amount of antigen, to allow comparison of results and to reduce variability due to the known influence of the number of cells plated [2, 14]. A surprising finding from the first panel was that almost half of the participants were unable to detect the weak responder. The initial protocol survey did not reveal common sources of this suboptimal performance. In some cases, laboratories with identical protocol choices performed very differently, whereas others with distinct protocols had almost identical spot counts (Fig. 4). This observation supports the premise that many common Elispot materials and reagents (e.g., plates, antibodies, spot development reagents) perform equally or similarly well, and that other factors influence the outcome of the Elispot assay.

Several laboratories missed responses because they evaluated their Elispot plates inaccurately, independent of the reader used, as reevaluation revealed (Fig. 3). This contrasts with the first NIAID proficiency panel, which reported good agreement between the spot counts of participants and those of experienced, independent centers [5]. Operator-dependent variability in Elispot evaluation is a known phenomenon [15]. Despite the availability of high-resolution readers, software features for automated spot gating and other potentially helpful options, it is essential to employ well-trained operators for spot counting, to audit all plates, and to adjust reading parameters when the appearance of wells and spots differs from the rest of the assay, typically for technical reasons. This may require revising the SOPs used for plate evaluation. Available certification and training services can also be helpful.
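One way a laboratory might prioritize wells during the human audit recommended above is to flag replicate wells whose spot counts disagree. The sketch below is a hypothetical triage aid, not a substitute for auditing every plate: it assumes replicate wells per condition, and the CV threshold (`max_cv`) and minimum mean count (`min_mean`) are illustrative placeholders, not values derived from the panels.

```python
from statistics import mean, stdev


def replicate_cv(counts: list[int]) -> float:
    """Coefficient of variation (sample SD / mean) of replicate spot counts."""
    m = mean(counts)
    if m == 0:
        return 0.0  # all replicate wells are empty, so there is no spread to assess
    return stdev(counts) / m


def conditions_to_audit(plate: dict[str, list[int]],
                        max_cv: float = 0.20,
                        min_mean: float = 10.0) -> list[str]:
    """Return conditions whose replicate wells disagree enough to warrant review.

    max_cv and min_mean are hypothetical thresholds. Conditions with a mean
    below min_mean are skipped because low counts inflate the CV by chance.
    """
    flagged = []
    for condition, counts in plate.items():
        if len(counts) < 2 or mean(counts) < min_mean:
            continue  # CV is undefined or uninformative here
        if replicate_cv(counts) > max_cv:
            flagged.append(condition)
    return flagged
```

A condition such as `"CMV": [120, 118, 40]` would be flagged because one well deviates strongly, which is the kind of technical artifact an operator would then inspect before accepting the reader's counts.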

Cell counting is a protocol step known to introduce variability. Because PBMC recovery varied widely, we investigated whether cell counts might have been erroneous, which would result in incorrect final cell dilutions and therefore in spot counts that are too high or too low. Despite large differences in cell recovery (from 5.5 to 34.4 million cells per vial in panel 1), we found no correlation between cell recovery and spot counts. Very low (<8 million) and very high (>20 million) cell counts were consistently reported for all donors by the same few laboratories, which used both hemocytometers and automated counters. Various automated cell counters are now on the market that offer not only automated counting but also discrimination of apoptotic, viable and dead cells. Such systems can potentially decrease variability in cell counts and, most importantly, increase the accuracy of viable cell counts [4, 21]. Seven laboratories in panel 1 used the Guava counter, which discriminates apoptotic cells. Although our panel revealed no difference in cell counts between Guava and hemocytometer users, the overall cell viability reported by Guava users was significantly lower, most likely because this method identifies apoptotic cells. Smith et al. [35] recently reported the usefulness of apoptosis acceptance criteria that separated PBMC samples able to respond to an antigenic stimulus from those that were not.
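The practical consequence of counting apoptotic cells as viable is a plating dilution that delivers fewer functional cells than intended. The following is a minimal sketch of a dilution calculation based only on viable, non-apoptotic cells, in the spirit of recommendation A1. The function name `dilution_plan` and all numbers used with it (cells per well, volume per well, number of wells) are hypothetical; the panel's actual plating guideline is not restated here.

```python
def dilution_plan(cells_per_ml: float,
                  fraction_healthy: float,
                  cells_per_well: int,
                  n_wells: int,
                  ul_per_well: float) -> tuple[float, float]:
    """Return (ml of counted suspension, ml of medium) needed to plate
    cells_per_well healthy cells in ul_per_well into each of n_wells wells.

    fraction_healthy is the fraction of counted cells that are viable AND
    non-apoptotic, e.g. as reported by a counter that discriminates apoptosis.
    """
    if not 0 < fraction_healthy <= 1:
        raise ValueError("fraction_healthy must be in (0, 1]")
    healthy_per_ml = cells_per_ml * fraction_healthy
    # Concentration of healthy cells required in the final plating suspension.
    target_per_ml = cells_per_well * 1000 / ul_per_well
    if healthy_per_ml < target_per_ml:
        raise ValueError("suspension too dilute: concentrate cells before plating")
    final_ml = n_wells * ul_per_well / 1000
    stock_ml = final_ml * target_per_ml / healthy_per_ml
    return stock_ml, final_ml - stock_ml
```

With a hypothetical count of 4 × 10⁶ cells/ml of which 75% are healthy, plating 200,000 cells in 100 µl into 12 wells calls for 0.8 ml of suspension plus 0.4 ml of medium. If apoptotic cells were instead counted as viable (a higher `fraction_healthy`), less suspension would be used and every well would receive fewer functional cells than intended.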

A resting period for thawed cells is known to be advantageous because apoptotic cells die during the rest, so that final assay dilutions are based on a more homogeneous population of viable cells [16, 18]. In contrast, plating a mixture of viable and apoptotic cells directly after thawing leads to lower spot counts, because the apoptotic cells are prone to die during the assay incubation. During panel 1, the central laboratory compared side by side the effect of 2 h and 20 h resting periods on final spot counts and demonstrated that a 20 h rest yielded significantly higher counts (Fig. 6). Proficiency testing results from the C-IMT also support the introduction of a cell resting period for Elispot assays [2]. Although almost half of the participants in panel 1 rested their cells before plating, there was no clear correlation with the magnitude of peptide-specific spot counts. This may reflect the variation in resting times (0.5 to 20 h) and other protocol variables, including the resting protocol itself. Factors such as serum type and concentration, cell concentration, and storage conditions during the rest (e.g., tissue culture flasks or plates can cause cell adherence and therefore loss of professional antigen-presenting cells) are known to influence the success of cell resting.

No scientific studies have been published on the effect of serum choice on immune assay results, which remains one of the best-known “secrets” in immunology [16]. It is critical to choose a serum that supports low background reactivity but strong signals. The predominant use of human AB serum in this panel reflects the historic preference for this serum in human immune assays. Each serum batch, however, is unique in its ability to support optimal assay resolution, and may contain mitogenic or immunosuppressive factors. There was some anecdotal evidence that serum choice was responsible for suboptimal performance among our panelists. Interestingly, six laboratories preferred to work with serum-free medium. None of these groups observed high background reactivity, but two failed to detect the weak responder.
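Recommendation B (use only pre-tested serum optimized for a low background to high signal ratio) implies a screening step before a serum batch is adopted. The sketch below shows one hypothetical way to rank candidate batches from a pre-test plate. The data structure, batch labels and all three thresholds are assumptions for illustration; a minimum signal criterion is included because a suppressive serum can show a clean background together with weak responses.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SerumTest:
    batch: str              # hypothetical batch label
    background: list[int]   # spot counts in medium-only wells
    signal: list[int]       # spot counts in positive-control wells


def signal_to_background(t: SerumTest, floor: float = 1.0) -> float:
    # The floor avoids division by zero when the background wells have no spots.
    return mean(t.signal) / max(mean(t.background), floor)


def acceptable_batches(tests: list[SerumTest], max_background: float,
                       min_signal: float, min_ratio: float) -> list[str]:
    """Batches passing all three criteria, ordered from best to worst ratio."""
    passing = [t for t in tests
               if mean(t.background) <= max_background   # rules out mitogenic batches
               and mean(t.signal) >= min_signal           # rules out suppressive batches
               and signal_to_background(t) >= min_ratio]
    return [t.batch for t in sorted(passing, key=signal_to_background, reverse=True)]
```

Ordering by ratio is a design choice; a laboratory might equally prefer the batch with the lowest background once all criteria are met.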

A survey conducted among participating laboratories shed light on validation and training practices. The wide range of experience among participating laboratories, combined with varying levels of and approaches to validation and training, correlated with the overall high variability in panel results. In response, new criteria for panel participation were introduced for panel 2. The number of outlier laboratories decreased from 4 to 0 in panel 2; however, three of the four outliers from panel 1 did not participate in panel 2. The percentage of laboratories that did not detect the weak responder decreased significantly from 47 to 14% (P < 0.01). This improvement might have been partially due to the stricter participant selection in panel 2. However, 7 of the 10 repeating laboratories that had missed the low CMV responder in panel 1 detected it correctly in panel 2. Strikingly, two thirds of all laboratories stated that they believed they needed to implement more validation, and all but one group expressed a wish for published guidelines for Elispot assay validation and training.
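The reported drop from 47 to 14% can be roughly cross-checked with a Pearson chi-square test on a 2 × 2 table. This is a sketch under stated assumptions: the counts are reconstructed by applying the percentages to the panel sizes given in the Abstract (47% of 36 ≈ 17 laboratories, 14% of 29 ≈ 4 laboratories), the test used by the authors is not stated in this section, and because 23 laboratories took part in both panels the two groups are not independent. The result is therefore an approximate consistency check, not a reproduction of the original analysis.

```python
from math import erfc, sqrt


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square without continuity correction for [[a, b], [c, d]].

    Returns (statistic, p-value) for 1 degree of freedom.
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 df the statistic is a squared standard normal, so
    # P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return stat, erfc(sqrt(stat / 2))


if __name__ == "__main__":
    # Rows: panel 1, panel 2; columns: missed, detected (reconstructed counts).
    stat, p = chi_square_2x2(17, 36 - 17, 4, 29 - 4)
    print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```

Under these assumptions the statistic is about 8.2, which corresponds to p below 0.01 and is consistent with the reported significance.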

The outcomes of these two proficiency panels provide, for the first time, Initial Elispot Harmonization Guidelines for Optimizing Assay Performance (Fig. 1). These guidelines can fulfill this need and, if implemented widely, may provide the grounds for substantial improvement of assay utility for research applications and for the development of immune therapies. They can be implemented in accordance with the assay recommendations made for cancer immunotherapy clinical trials by the Cancer Vaccine Clinical Trial Working Group [12]. Further optimization is being pursued through ongoing proficiency panel work conducted by the CVC.

Validation of assays is now a requirement for all endpoint parameters in clinical trials [30]. An increasing number of publications describe validated Elispot assays [1, 19, 25, 34]. These papers contain valuable scientific information but make only limited reference to FDA regulations. The FDA guidelines for validation of analytical procedures [9] describe validation as the process of determining the suitability of a given methodology for providing useful analytical data, which consists of analyzing or verifying the eight or nine assay parameters described in the US Pharmacopeia or the ICH guidelines [13, 37]. Only a few publications address validation of bioassays and Elispot in FDA terms [10, 16, 29, 36], and even these give only limited advice on how to validate the Elispot assay in a given laboratory setting, let alone specific training guidelines.

Furthermore, only a limited number of laboratories used acceptance criteria for assay performance, and each laboratory's criteria were unique to that laboratory.
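To illustrate what explicit run-level acceptance criteria could look like in practice, the sketch below encodes three checks that laboratories commonly consider: background, positive-control response and post-thaw viability. Every threshold in `AcceptanceCriteria` is a hypothetical placeholder, not a CVC recommendation; each laboratory would define its own limits in its SOP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical run-level thresholds; replace with values from the lab SOP."""
    max_background: float = 50.0         # mean spots/well in medium-only wells
    min_positive_control: float = 100.0  # mean spots/well with the positive-control stimulus
    min_viability: float = 0.70          # fraction of viable cells after thawing


def evaluate_run(background: float, positive_control: float, viability: float,
                 criteria: AcceptanceCriteria = AcceptanceCriteria()) -> list[str]:
    """Return the reasons a run fails; an empty list means the run is accepted."""
    failures = []
    if background > criteria.max_background:
        failures.append("background too high")
    if positive_control < criteria.min_positive_control:
        failures.append("positive control too low")
    if viability < criteria.min_viability:
        failures.append("viability too low")
    return failures
```

Returning every failed criterion, rather than stopping at the first, makes the rejection reason explicit in the run record, which simplifies later review across runs.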

These observations should be a wake-up call for the immune monitoring community, which includes not only the cancer vaccine field but also the infectious disease and autoimmunity fields, among others. General assay practices for the detection of antigen-specific T cells are comparable across all fields. The CVC, as part of the Sabin Vaccine Institute, intends to develop and strengthen collaborations with groups from other research and vaccine development areas. Published documents with specific criteria for Elispot assay validation, assay acceptance criteria and training guidelines will be most valuable for the immune monitoring field, and are now being established as CVC guidelines as a result of the described studies. Continuous external validation programs need to be part of these efforts in order to monitor the success of inter-laboratory harmonization, including assay optimization, standardization and validation, as well as the laboratory-specific implementation of guidelines and protocol recommendations. These efforts are essential to establish the Elispot assay and other immune assays as standard monitoring tools for clinical trials.

Acknowledgments

Special thanks to C. Wrotnowski for his work in initiating the proficiency panel program of the CVC. W. M. Kast is the holder of the Walter A. Richter Cancer Research Chair and is supported by the Beckman Center for Immune Monitoring.

Abbreviations

- CVC/SVI

Cancer Vaccine Consortium of the Sabin Vaccine Institute

- PBMC

Peripheral blood mononuclear cells

- CEF

Cytomegalovirus, Epstein-Barr virus, and influenza virus

- CMV

Cytomegalovirus

- NIAID

National Institute of Allergy and Infectious Diseases

- CV

Coefficient of variation

- SOP

Standard operating procedure

Appendix: CVC Elispot proficiency panel members (in alphabetical order)

Richard Anderson, Oxxon Therapeutics Ltd, Oxford, UK

Nadege Bercovici, IDM S.A., Paris, France

Jean Boyer, University of Pennsylvania, Philadelphia, PA

Cedrik Britten, Johannes Gutenberg University Mainz, Germany

Dirk Brockstedt, Cerus Corporation, Concord, CA

Judy Caterini, sanofi pasteur, Toronto, Canada

Vincenzo Cerundolo, Ludwig Institute for Cancer Research, Weatherall Institute of Molecular Medicine, Oxford, UK

Weisan Chen, Ludwig Institute for Cancer Research, Heidelberg, Australia

Timothy Clay, Duke University Medical Center, Durham, NC

Robert Darnell, Rockefeller University, New York, NY

Sheri Dubey, Merck & Co, Inc., West Point, PA

Thomas Ermak, Acambis, Cambridge, MA

Sacha Gnjatic, Ludwig Institute for Cancer Research, Memorial Sloan-Kettering Cancer Center, New York, NY

Richard Harrop, Oxford BioMedica, Oxford, UK

Don Healey, Argos Therapeutics, Inc., Durham, NC

Alan Houghton, Memorial Sloan-Kettering Cancer Center, New York, NY

Elke Jaeger, Ludwig Institute for Cancer Research, Krankenhaus Nordwest, Frankfurt, Germany

William Janssen, H Lee Moffitt Cancer Center, University of South Florida, Tampa, FL

Lori Jones, Dendreon Corporation, Seattle, WA

W. Martin Kast, Norris Comprehensive Cancer Center, USC, Los Angeles CA

Julia Kaufman, Rockefeller University, New York, NY

Alexander Knuth, Ludwig Institute for Cancer Research, University Hospital Zürich, Zürich, Switzerland

Abdo Konur, Johannes Gutenberg University Mainz, Germany

Ferdynand Kos, Johns Hopkins University School of Medicine, Baltimore, MD

Odunsi Kunle, Ludwig Institute for Cancer Research, Roswell Park Cancer Institute, Buffalo, NY

Kelledy Manson, Therion Biologics Corporation, Cambridge, MA

Mark Matijevic, MGI Pharma Biologicals, Lexington, MA

Yoshihiro Miyahara, Ludwig Institute for Cancer Research, Mie University Graduate School of Medicine, Mie, Japan

Cristina Musselli, Antigenics, Inc., Lexington, MA

Walter Olson, University of Virginia Health System, Charlottesville, VA

Dennis Panicali, Therion Biologics Corporation, Cambridge, MA

Shreemanta Parida, Max-Planck Institute for Infection Biology, Berlin, Germany

Joanne Parker, Globeimmune Inc., Louisville, CO

Heike Pohla, Ludwig-Maximilians University, München, Germany

Michael Pride, Wyeth Pharmaceuticals, Pearl River, NY

Ruth Rappaport, Wyeth Pharmaceuticals, Pearl River, NY

Philip Reay, Biovex, Abingdon, UK

Licia Rivoltini, Istituto Nazionale Tumori, Milano, Italy

Pedro Romero, Ludwig Institute for Cancer Research, Hospital Orthopedique, Lausanne, Switzerland

Patric Schiltz, Hoag Cancer Center, Newport Beach, CA

Hiroshi Shiku, Ludwig Institute for Cancer Research, Mie University Graduate School of Medicine, Mie, Japan

Craig Slingluff, University of Virginia Health System, Charlottesville, VA

Jacqueline Smith, Dartmouth Medical College, Lebanon, NH

Daniel Speiser, Ludwig Institute for Cancer Research, Hospital Orthopedique, Lausanne, Switzerland

Diane Swanlund, Biomira Inc., Edmonton, Canada

Michael Vajdy, Chiron Corporation, Emeryville, CA

Annegret Van der Aa, Innogenetics NV, Ghent, Belgium

Jeffrey Weber, Norris Comprehensive Cancer Center, USC, Los Angeles CA

Thomas Woelfel, Johannes Gutenberg University Mainz, Germany

Jedd Wolchok, Memorial Sloan-Kettering Cancer Center, New York, NY

Footnotes

Authors Sylvia Janetzki, Katherine S. Panageas, Jean Boyer, Cedrik M. Britten, Timothy M. Clay, Michael Kalos, Holden T. Maecker, Pedro Romero, Jianda Yuan are members of the Steering Committee of the CVC Immune Assay Working Group. W. Martin Kast and Axel Hoos are members of the Executive Council of the CVC.

CVC Elispot Proficiency Panel members are listed alphabetically in Appendix.

This manuscript is published in association with the original article “The CIMT-Monitoring panel: A two-step approach to harmonize the enumeration of antigen-specific CD8+ T lymphocytes by structural and functional assays” by C. M. Britten et al. and a commentary by C. M. Britten, S. Janetzki et al.: “Toward the harmonization of immune monitoring in clinical trials - Quo vadis?”.

References

- 1. Asai T, Storkus WJ, Whiteside TL. Evaluation of the modified ELISPOT assay for gamma interferon production in cancer patients receiving antitumor vaccines. Clin Diagn Lab Immunol. 2000;7:145–154. doi: 10.1128/CDLI.7.2.145-154.2000.

- 2. Britten CM, Gouttefangeas C, Schoenmaekers-Welters MJP, Pawelec G, Koch S, Ottensmeier C, Mander A, Walter S, Paschen A, Müller-Berghaus J, Haas I, Mackensen A, Køllgaard T, Thor Straten P, Schmitt M, Giannopoulos K, Maier R, Veelken H, Bertinetti C, Konur A, Huber C, Stevanović S, Wölfel T, Van der Burg SH. The CIMT-monitoring panel: a two-step approach to harmonize the enumeration of antigen-specific CD8+ T lymphocytes by structural and functional assays. Cancer Immunol Immunother. 2007 (in press). doi: 10.1007/s00262-007-0379-z.

- 3. Cox J. Conducting a multi-site Elispot proficiency panel. 2005. http://www.zellnet.com/february2005/

- 4. Cox JH, Ferrari G, Bailer RT, Koup RA. Automating procedures for processing, cryopreservation, storage and manipulation of human peripheral mononuclear cells. J Assoc Lab Automat. 2004;9:16–23. doi: 10.1016/S1535-5535(03)00202-8.

- 5. Cox JH, Ferrari G, Kalams SA, Lopaczynski W, Oden N, D’Souza MP; Elispot Collaborative Study Group. Results of an ELISPOT proficiency panel conducted in 11 laboratories participating in international human immunodeficiency virus type 1 vaccine trials. AIDS Res Hum Retroviruses. 2005;21:68–81. doi: 10.1089/aid.2005.21.68.

- 6. Cox JH, Ferrari G, Janetzki S. Measurement of cytokine release at the single cell level using the ELISPOT assay. Methods. 2006;38:274–282. doi: 10.1016/j.ymeth.2005.11.006.

- 7. Currier JR, Kuta EG, Turk E, Earhart LB, Loomis-Price L, Janetzki S, Ferrari G, Birx DL, Cox JH. A panel of MHC class I restricted viral peptides for use as a quality control for vaccine trial ELISPOT assays. J Immunol Methods. 2002;260:157–172. doi: 10.1016/S0022-1759(01)00535-X.

- 8. Dubey S, Clair J, Fu T-M, Guan L, Long R, Mogg R, Anderson K, Collins KB, Gaunt C, Fernandez VR, Zhu L, Kierstead L, Thaler S, Gupta SB, Straus W, Mehrotra D, Tobery TW, Casimiro DR, Shiver JW. Detection of HIV vaccine-induced cell-mediated immunity in HIV-seronegative clinical trial participants using an optimized and validated enzyme-linked immunospot assay. J Acquir Immune Defic Syndr. 2007;45:20–27. doi: 10.1097/QAI.0b013e3180377b5b.

- 9. FDA Guideline for Industry. Text on validation of analytical procedures. http://www.fda.gov/cder/guidance/ichq2a.pdf

- 10. Findlay JWA, Smith WC, Lee JW, Nordblom GD, Das I, DeSilva BS, Khan MN, Bowsher RR. Validation of immunoassays for bioanalysis: a pharmaceutical industry perspective. J Pharm Biomed Anal. 2000;21:1249–1273. doi: 10.1016/S0731-7085(99)00244-7.

- 11. Hobeika AC, Morse MA, Osada T, Ghanayem M, Niedzwiecki D, Barrier R, Lyerly HK, Clay T. Enumerating antigen-specific T-cell responses in peripheral blood. J Immunother. 2005;28:63–72. doi: 10.1097/00002371-200501000-00008.

- 12. Hoos A, Parmiani G, Hege K, Sznol M, Loibner H, Eggermont A, Urba W, Blumenstein B, Sacks N, Keilholz U, Nichol G; Cancer Vaccine Clinical Trial Working Group. A clinical development paradigm for cancer vaccines and related biologics. J Immunother. 2007;30:1–15. doi: 10.1097/01.cji.0000211341.88835.ae.

- 13. International Conference on Harmonisation (ICH). Guidance for industry: Q2B validation of analytical procedures: methodology. 1997. http://www.fda.gov/cber/gdlns/ichq2bmeth.pdf

- 14. Janetzki S. Automation of the Elispot technique: past, present and future. J Assoc Lab Automat. 2004;9:10–15. doi: 10.1016/S1535-5535(03)00084-4.

- 15. Janetzki S, Schaed S, Blachere NE, Ben-Porat L, Houghton AN, Panageas KS. Evaluation of Elispot assays: influence of method and operator on variability of results. J Immunol Methods. 2004;291:175–183. doi: 10.1016/j.jim.2004.06.008.

- 16. Janetzki S, Cox JH, Oden N, Ferrari G. Standardization and validation issues of the ELISPOT assay. Methods Mol Biol. 2006;302:51–86. doi: 10.1385/1-59259-903-6:051.

- 17. Keilholz U, Weber J, Finke JH, Gabrilovich DI, Kast WM, Disis ML, Kirkwood JM, Scheibenbogen C, Schlom J, Maino VC, Lyerly HK, Lee PP, Storkus W, Marincola F, Worobec A, Atkins MB. Immunologic monitoring of cancer vaccine therapy: results of a workshop sponsored by the Society for Biological Therapy. J Immunother. 2002;25:97–138. doi: 10.1097/00002371-200203000-00001.

- 18. Kierstead LS, Dubey S, Meyer B, Tobery TW, Mogg R, Fernandez VR, Long R, Guan L, Gaunt C, Collins K, Sykes KJ, Mehrotra DV, Chirmule N, Shiver JW, Casimiro DR. Enhanced rates and magnitude of immune responses detected against an HIV vaccine: effect of using an optimized process for isolating PBMC. AIDS Res Hum Retroviruses. 2007;23:86–92. doi: 10.1089/aid.2006.0129.

- 19. Lathey J. Preliminary steps toward validating a clinical bioassay. BioPharm Int. 2003;16:42–50.

- 20. Lathey JL, Martinez K, Gregory S, D’Souza P, Lopaczynski W. Characterization of assay variability in real-time and batch assays of sequential samples from the same donors. FOCIS 5th Annual Meeting; 2005. Poster, abstract Su2.96.

- 21. Lem L. Cell counting and viability assessments in the process development of cellular therapeutics. BioProcessing J. 2003;July/August:57–60.

- 22. Maecker HT. The role of immune monitoring in evaluating cancer immunotherapy. In: Disis ML, editor. Cancer drug discovery and development: immunotherapy of cancer. NJ: Humana Press Inc; 2005. pp. 59–72.

- 23. Maecker HT, Dunn HS, Suni MA, Khatamzas E, Pitcher CJ, Bunde T, Persaud N, Trigona W, Fu TM, Sinclair E, Bredt BM, McCune JM, Maino VC, Kern F, Picker LJ. Use of overlapping peptide mixtures as antigens for cytokine flow cytometry. J Immunol Methods. 2001;255:27–40. doi: 10.1016/S0022-1759(01)00416-1.

- 24. Morse MA, Clay TM, Hobeika AC, Mosca PJ, Lyerly HK. Surrogate markers of response to cancer immunotherapy. Expert Opin Biol Ther. 2001;1:153–158. doi: 10.1517/14712598.1.2.153.

- 25. Mwau M, McMichael AJ, Hanke T. Design and validation of an enzyme-linked immunospot assay for use in clinical trials of candidate HIV vaccines. AIDS Res Hum Retroviruses. 2002;18:611–618. doi: 10.1089/088922202760019301.

- 26. Nagorsen D, Scheibenbogen C, Thiel E, Keilholz U. Immunological monitoring of cancer vaccine therapy. Expert Opin Biol Ther. 2004;4:1677–1684. doi: 10.1517/14712598.4.10.1677.

- 27. NCCLS. Evaluation of matrix effects; approved guideline. NCCLS document EP14-A. 2001.

- 28. NCCLS. Performance of single cell immune response assays; approved guideline. NCCLS document I/LA26-A. 2004.

- 29. NIAID Division of AIDS. Validating an immunological assay toward licensure of a vaccine. 2003. http://www.niaid.nih.gov/hivvaccines/validation.htm

- 30. Pifat D. Assay validation. 2006. http://www.fda.gov/cber/summaries/120600bio10.ppt

- 31. Russell ND, Hudgens MG, Ha R, Havenar-Daughton C, McElrath MJ. Moving to human immunodeficiency virus type 1 vaccine efficacy trials: defining T cell responses as potential correlates of immunity. J Infect Dis. 2003;187:226–242. doi: 10.1086/367702.

- 32. Samri A, Durier C, Urrutia A, Sanchez I, Gahery-Segard H, Imbart S, Sinet M, Tartour E, Aboulker J-P, Autran B, Venet A; ANRS Elispot Standardization Group. Evaluation of the interlaboratory concordance in quantification of HIV-specific T cells with a gamma interferon enzyme-linked immunospot assay. Clin Vaccine Immunol. 2006;13:684–697. doi: 10.1128/CVI.00387-05.

- 33. Scheibenbogen C, Romero P, Rivoltini L, Herr W, Schmittel A, Cerottini J-C, Woelfel T, Eggermont AMM, Keilholz U. Quantitation of antigen-reactive T cells in peripheral blood by IFNγ-ELISPOT assay and chromium-release assay: a four-centre comparative trial. J Immunol Methods. 2000;244:81–89. doi: 10.1016/S0022-1759(00)00257-X.

- 34. Smith JG, Liu X, Kaufhold RM, Clair J, Caulfield MJ. Development and validation of a gamma interferon ELISPOT assay for quantitation of cellular immune responses to varicella-zoster virus. Clin Diagn Lab Immunol. 2001;8:871–879. doi: 10.1128/CDLI.8.5.871-879.2001.

- 35. Smith JG, Joseph HR, Green T, Field JA, Wooters M, Kaufhold RM, Antonello J, Caulfield MJ. Establishing acceptance criteria for cell-mediated-immunity assays using frozen peripheral blood mononuclear cells stored under optimal and suboptimal conditions. Clin Vaccine Immunol. 2007;14:527–537. doi: 10.1128/CVI.00435-06.

- 36. Tuomela M, Stanescu I, Krohn K. Validation overview of bio-analytical methods. Gene Ther. 2005;12(Suppl 1):131–138. doi: 10.1038/sj.gt.3302627.

- 37. United States Pharmacopeia (USP). Validation of compendial methods. 1999. Suppl 10, pp 5059–5062.

- 38. Walker EB, Disis ML. Monitoring immune responses in cancer patients receiving tumor vaccines. Int Rev Immunol. 2003;22:283–319. doi: 10.1080/08830180305226.

- 39. Whiteside TL. Immunologic monitoring of clinical trials in patients with cancer: technology versus common sense. Immunol Invest. 2000;29:149–162. doi: 10.3109/08820130009062299.