Abstract

Incentive delay tasks implicate the striatum and medial frontal cortex in reward processing. However, prior studies delivered more rewards than penalties, possibly leading to unwanted differences in signal-to-noise ratio. Also, whether particular brain regions are specifically involved in anticipation or consumption is unclear. We used a task featuring balanced incentive delivery and an analytic strategy designed to identify activity specific to anticipation or consumption. RT data in two independent samples (n=13 and n=8) confirmed motivated responding. FMRI revealed regions activated by anticipation (anterior cingulate) vs. consumption (orbital and medial frontal cortex). Ventral striatum was active during reward anticipation but not significantly more so than during consumption. While the study features several methodological improvements and helps clarify the neural basis of incentive processing, replications in larger samples are needed.

Descriptors: Reward, Motivation, Anticipation, Consumption, Emotion, fMRI

Animal research has revealed a neural network sensitive to the rewarding properties of stimuli (Schultz, 1998; Ikemoto & Panksepp, 1999; Robinson & Berridge, 2003). Critical structures in this circuit include both dorsal (caudate, putamen) and ventral (nucleus accumbens: NAcc) regions of the striatum, orbitofrontal cortex (OFC), medial prefrontal cortex (PFC), and anterior cingulate cortex (ACC). This distributed network of regions receives inputs from dopaminergic (DA) neurons originating from the ventral tegmental area. The non-human primate literature demonstrates that these neurons initially respond during consumption of unexpected rewards, but eventually fire in response to reward-predicting cues and show decreased activity when expected rewards are omitted (for reviews, see Schultz, 1998; Ikemoto & Panksepp, 1999). Based on these findings, it has been suggested that activity in this circuit supports various forms of reinforcement-based learning and approach-related behavior.

Functional magnetic resonance imaging (fMRI) demonstrates that a similar circuit, prominently including the ventral striatum, is also activated in humans by a variety of rewards, including drugs of abuse (cocaine: Breiter et al., 1997; Vollm et al., 2004; amphetamine: Knutson et al., 2004), attractive opposite-sex faces (Aharon et al., 2001), cultural objects signifying wealth (sports-cars: Erk, Spitzer, Wunderlich, Galley, & Walter, 2002), humor (Mobbs, Greicius, Abdel-Azim, Menon, & Reiss, 2003), and monetary incentives (Knutson, Adams, Fong, & Hommer, 2001a; Knutson, Fong, Adams, Varner, & Hommer, 2001b). However, several early human studies did not distinguish between anticipatory and consummatory phases of reward processing, limiting the conclusions that could be drawn from this research. In line with animal work differentiating between “wanting” and “liking” (Berridge & Robinson, 1998), factor analytic studies of self-report measures indicate that the reward-related anticipatory phase is linked with motivational processes that foster goal-directed behavior targeting desired outcomes (Carver & White, 1994), while the consummatory phase is linked to satiation and in-the-moment experiences of pleasure (Gard, Gard, Kring, & John, 2006). Psychologically, the anticipatory phase is primarily characterized by motivation and ability to image a desired outcome, leading to the feeling of “wanting” more, or the experience of desire.

Consistent with this psychological dissociation, Knutson and colleagues have used a monetary incentive delay (MID) task to establish that anticipation and consumption are supported by partially separable neural systems (Knutson, Adams, Fong, & Hommer, 2001a; Knutson, Fong, Adams, Varner, & Hommer, 2001b; Knutson et al., 2003; for review, see Knutson & Cooper, 2005). Individual MID trials feature cues signaling potential monetary rewards, losses, or no-incentive, a delay “anticipation” period, a target stimulus (to which participants respond with a speeded button press), and an outcome period during which monetary rewards or penalties are delivered. In this task, anticipation of reward consistently activates the ventral striatum, including the NAcc, and receipt of rewards activates ventromedial PFC and medial PFC regions (Knutson et al., 2003; Knutson et al., 2001b). Comparative research confirms an important role for ventral striatal neurons in mediation of reward-seeking behavior (Ikemoto & Panksepp, 1999), while lesion research implicates ventromedial PFC in the abstract representation of incentive outcomes required for flexible behavior, planning, and decision-making (Bechara, Damasio, Damasio, & Lee, 1999; Ursu & Carter, 2005). Consequently, it has been proposed that these two regions may represent the “engine” and “steering wheel” of reward-related behavior, respectively (Knutson et al., 2003; Knutson et al., 2005).

Importantly, however, this literature is limited by two methodological concerns. First, much of the previous research has utilized designs in which unequal numbers of rewards and losses were delivered. For example, in Knutson et al. (2003), participants were successful (winning or avoiding losing money) on 66% of trials. This may lead to undesirable differences in signal-to-noise ratio across conditions and possible over-estimation of reward-related effects. Second, the analyses used in many studies do not facilitate identification of brain regions that are specifically involved in either anticipation or consumption of rewards or losses. Contrasts targeted at the anticipatory and consummatory phases usually are conducted separately for reward and loss trials. While valuable, this approach does not explicitly take into account the possibility that some brain regions may participate in both anticipation and consumption of rewards and/or losses. For example, the anticipatory phase of both reward and loss trials may give rise to a psychological state characterized by increased attention to task goals, heightened arousal, and blends of emotions (e.g., a mixture of hope and anxiety at the possibility of receiving a reward or loss, respectively). Across both trial types, this state would be expected to elicit activity in brain regions important for cognitive control and emotion-attention interactions, including the ACC (Bush et al., 2002). In a related vein, there is evidence that brain regions which code the hedonic value of stimuli (e.g., medial OFC) can be activated by both anticipation and consumption of incentives and respond similarly to obtained rewards and avoided losses (Kim, Shimojo, & O’Doherty, 2006). In short, some brain regions may show activation patterns which cut across the anticipatory and consummatory phases of either reward or loss trials, or both. 
This common activation needs to be estimated and accounted for in order to identify brain regions specifically recruited by anticipation or consumption of rewards or losses.

The present, methodologically oriented, event-related fMRI study addressed these two important issues. First, we developed a modified MID task featuring balanced delivery of rewards and losses. Second, we used a series of contrasts to identify neural regions specifically engaged in anticipation versus consumption of rewards vs. losses. Based on previous research (Knutson et al., 2001a), a comparison between anticipation of rewards versus no-incentive was expected to reveal activity in the ventral striatum and dorsomedial cortical structures (e.g., the dorsal ACC). Given the hypothesis that the anticipatory period on both reward and loss trials would elicit a range of emotions, increased arousal, and increased attention towards the target stimulus, as well as the fact that the dorsal ACC plays a critical role in negative reinforcement learning (Holroyd & Coles, 2002), this region was also predicted to be active during anticipation of losses. By contrast, prior studies (e.g., Knutson et al., 2003) supported the hypothesis that the ventral striatum would be more strongly recruited during anticipation of rewards than during anticipation of losses or no-incentive. However, it was also predicted that the ventral striatum would be activated by rewarding outcomes, though perhaps not as strongly as during reward anticipation (e.g., Bjork & Hommer, 2007). Based on the non-human primate literature (Schultz, 1998, 2000), a relative decrease in ventral striatal activity was expected on trials in which potential rewards were not delivered. Ventromedial PFC was not expected to be active during anticipation, but was predicted to respond to delivery of both rewards and penalties (Knutson et al., 2003). 
In addition, on the basis of extensive human and animal work indicating that the OFC codes abstract representations of both positive and negative outcomes (for review, see Rolls, 1996), it was hypothesized that this region would also be recruited by delivery of both rewards and penalties. Previous studies using the MID task have not consistently observed activation in the OFC, perhaps due to the fact that this region is frequently obscured by fMRI artifact (e.g., Knutson et al., 2001a). To address this issue, fMRI data were collected using acquisition parameters specifically designed to improve imaging of the OFC (Deichmann, Gottfried, Hutton, & Turner, 2003).

Methods

Participants

Behavioral study

Thirteen healthy adults participated (nine females, mean age: 26, SD: 9.53).

fMRI study

Eight healthy adults, who did not participate in the behavioral study, were recruited for the neuroimaging component (five females, mean age: 28.13, SD: 5.62). All participants in both the behavioral and fMRI studies were right-handed (Chapman and Chapman, 1987), and none reported current or prior psychiatric or neurological illness. After description of the procedures involved, participants gave written informed consent to a protocol approved by the Committee on the Use of Human Subjects at Harvard University. Participants were fully debriefed about the nature of the study at the end of the session. They were informed that responses were decoupled from task outcomes such that all participants won and lost an equal number of times (see below). After debriefing, participants were thanked and paid for their participation. Participants were compensated at $10/hour and $30/hour for the behavioral and fMRI studies, respectively, and were also given additional money as “earnings” from the MID task ($12 in the behavioral study, $18–$22 in the fMRI study).

MID Paradigm

The trial structure used in the MID task was based on prior reports (e.g., Knutson et al., 2003). At the outset of each trial, a visual cue (duration: 1.5 s) signaling either potentially rewarding outcomes (+$), potentially aversive outcomes (−$), or no monetary incentive (0$) was presented. After a jittered delay inter-stimulus-interval (ISI: 3, 4.5, 6, or 7.5 s), participants pressed a button in response to a red square target, which was presented for variable duration (see below). A second delay ISI (4.4, 5.9, 7.4, or 8.9 s) followed the target, after which visual feedback (1.5 s) notified participants whether they had won or lost money (no monetary rewards or penalties were delivered on no-incentive trials). Trials were separated by inter-trial-intervals (ITIs) ranging from 3 to 12 s, in 1.5 s increments.

In the reward condition, successful trials were associated with monetary gains (range: $1.96 to $2.34; mean: $2.15) whereas unsuccessful trials led to no change. In the loss condition, successful trials were associated with no change whereas unsuccessful trials were associated with monetary penalties (range: −$1.81 to −$2.19; mean: −$2.00). Note that while rewarding and aversive incentive outcomes varied across a range, the corresponding cues did not vary (i.e., cues did not signal outcome magnitude, only outcome valence). Gains were slightly larger than penalties to compensate for the fact that individuals typically assign greater weight to a loss than a gain of equal magnitude (Breiter, Aharon, Kahneman, Dale, & Shizgal, 2001; Kahneman & Tversky, 1979). No feedback concerning cumulative earnings was provided.

A fully balanced design was used. The task featured five blocks consisting of 24 trials each (8 reward, 8 loss, 8 no-incentive). In each block, half of the reward trials ended in success (a monetary gain) and half of the loss trials ended in failure (a monetary penalty); the remaining reward and loss trials ended in no change. Thus, 40 trials/condition were available for analysis of anticipatory activations, whereas 20 trials/condition were available for analysis of incentive outcomes.
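The trial counts follow directly from the block structure. The short sketch below reproduces that bookkeeping; the variable and function names are illustrative and are not taken from the original task code.

```python
# Trial bookkeeping for the balanced design (names are illustrative).
N_BLOCKS = 5
TRIALS_PER_BLOCK = {"reward": 8, "loss": 8, "no_incentive": 8}


def design_counts(n_blocks=N_BLOCKS, per_block=TRIALS_PER_BLOCK):
    """Return (anticipation counts, outcome counts) summed over blocks."""
    anticipation = {cond: n * n_blocks for cond, n in per_block.items()}
    # Half of the reward trials end in a gain and half of the loss trials
    # end in a penalty; the other half of each ends in "no change".
    outcomes = {
        "reward_gain": anticipation["reward"] // 2,
        "reward_no_change": anticipation["reward"] // 2,
        "loss_penalty": anticipation["loss"] // 2,
        "loss_no_change": anticipation["loss"] // 2,
    }
    return anticipation, outcomes


anticipation, outcomes = design_counts()
print(anticipation)  # trials per cue type available for anticipation analyses
print(outcomes)      # trials per outcome type available for outcome analyses
```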

The number of reinforcers delivered was thus fixed and not contingent on participants’ performance. However, participants were led to believe that the probability of success was contingent upon their response speed; specifically, how fast they pressed a button after the appearance of the target stimulus. Importantly, presentation duration of the target was varied across successful and unsuccessful trials in order to improve task believability. At the outset of both the pilot and fMRI studies, participants performed a practice block of 40 trials; RT data collected during practice were used to titrate target exposure duration. Specifically, for successful trials and unsuccessful trials the target was presented for durations corresponding to the 85th and 15th percentiles of the individual’s RT distribution during practice, respectively (i.e., long target exposure durations on successful trials, short target exposure durations on unsuccessful trials).1 Finally, to maximize task engagement, participants were told that if they performed well enough in the first five blocks they would be given an opportunity to play a sixth “bonus” block associated with larger payoffs ($3.63–$5.18) and few penalties. All participants “qualified” for the bonus block.
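As an illustration of the titration step, the sketch below derives the two target durations from a set of practice RTs. It assumes the percentiles are taken over the individual's practice RT distribution and uses linear interpolation between order statistics; the interpolation rule, the function names, and the practice RTs are assumptions made for this example, not details reported for the original task.

```python
def percentile(values, q):
    """q-th percentile (0-100), linear interpolation between order statistics."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    frac = pos - lower
    return ordered[lower] + frac * (ordered[upper] - ordered[lower])


def target_durations(practice_rts_ms):
    """Long exposure on 'successful' trials, short exposure on 'unsuccessful' ones."""
    return {
        "successful": percentile(practice_rts_ms, 85),
        "unsuccessful": percentile(practice_rts_ms, 15),
    }


# Hypothetical practice block of 40 RTs (ms), evenly spaced for readability.
practice_rts = [300 + 5 * i for i in range(40)]
durations = target_durations(practice_rts)
print(durations)
```

With these settings the target stays on screen longer than most of a participant's practice RTs on "successful" trials and disappears before most of them on "unsuccessful" trials, which is what makes the fixed outcomes plausible.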

A single pseudo-randomized stimulus presentation order was used. Two steps were taken to optimize task design. First, stimulus presentation order was determined using optseq2 (Dale, 1999), a tool for optimizing statistical efficiency of event-related fMRI designs. In this step, 20 stimulus sequences that optimized the First-Order Counter-Balancing (FOCB) matrix were generated. The FOCB is an N x N matrix of probabilities that one condition follows another (N = number of conditions). Next, these 20 optimized sequences were iteratively randomized, so that the combination of five of these sequences would yield an overall N x N matrix minimizing differences among the transition probabilities between conditions [i.e., p(A→A) ≈ p(A→B) ≈ … ≈ 1/N]. Second, ISI/ITI durations were selected based on output from a genetic algorithm (GA) that maximizes statistical orthogonality (i.e., minimizes correlations between predictors), which is critical for estimating hemodynamic responses to closely spaced stimuli (Wager & Nichols, 2003). The GA optimizes designs with respect to multiple measures of fitness, including (a) contrast estimation efficiency, (b) hemodynamic response function (HRF) estimation efficiency, and (c) design counterbalancing. In the present design, the “condition number” (a measure of design orthogonality in the GA) confirmed a high degree of orthogonality for each block (range: 1.35–1.38; 1 = completely orthogonal).
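To make the FOCB criterion concrete, the sketch below estimates the first-order transition matrix of a trial sequence and measures how far it departs from the ideal value of 1/N. This is not optseq2 or the GA toolbox; it is a minimal stand-alone illustration, and the ten-trial sequence is a hypothetical example chosen so that every ordered pair of conditions occurs exactly once.

```python
from collections import Counter


def first_order_transition_matrix(sequence, conditions):
    """Estimate p(next = b | current = a) for every ordered pair (a, b)."""
    pair_counts = Counter(zip(sequence, sequence[1:]))
    matrix = {}
    for a in conditions:
        row_total = sum(pair_counts[(a, b)] for b in conditions)
        matrix[a] = {
            b: (pair_counts[(a, b)] / row_total if row_total else 0.0)
            for b in conditions
        }
    return matrix


def max_deviation_from_uniform(matrix):
    """Largest absolute difference between any entry and 1/N."""
    n = len(matrix)
    return max(abs(p - 1.0 / n) for row in matrix.values() for p in row.values())


conditions = ["reward", "loss", "none"]
# Hypothetical sequence in which each of the 9 ordered pairs occurs once.
sequence = ["reward", "reward", "loss", "loss", "none",
            "none", "reward", "none", "loss", "reward"]

focb = first_order_transition_matrix(sequence, conditions)
deviation = max_deviation_from_uniform(focb)
print(focb)
print(deviation)
```

A search over candidate sequences would keep those with the smallest deviation, which is the sense in which the combined sequences were counterbalanced.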

fMRI Data Acquisition

Functional MRI data were acquired on a 1.5T Symphony/Sonata scanner (Siemens Medical Systems; Iselin, NJ) using a protocol that combines tilted slice acquisition and z-shimming (Deichmann et al., 2003). This protocol reduces susceptibility-related signal loss in the OFC and medial temporal lobes without compromising either signal in other regions or temporal resolution. To avoid signal loss due to susceptibility gradients in the phase encoding direction, EPI data were acquired at 30° to the AC-PC line. To reduce spin dephasing due to through-plane susceptibility gradients, a preparation pulse with duration of 1 ms and amplitude of −2 mT/m in the slice selection direction was applied before data acquisition (Gottfried, Deichmann, Winston, & Dolan, 2002). This technique is similar to the z-shimming approach (Constable & Spencer, 1999), but unlike standard z-shimming it does not require the combination of several images and thus does not compromise temporal resolution. These parameters were used only for the first five blocks. Data from the sixth “bonus” block were collected using standard acquisition parameters and were utilized to confirm, for each subject, signal recovery in regions affected by susceptibility artifacts, particularly the OFC. Accordingly, fMRI data from block 6 were not included in the analyses.

Gradient echo T2*-weighted echoplanar images were acquired using the following acquisition parameters: TR/TE: 2500/35 ms; FOV: 200 mm; matrix: 64 × 64; 36 slices; in-plane resolution: 3 mm (2-mm slices, 1-mm gap); 216 volumes. To reduce slice dephasing of spins and loss of magnetization, a short echo time (TE: 35 ms) and nearly isotropic voxels (3.125 × 3.125 × 3 mm) were used (Hyde, Biswal, & Jesmanowicz, 2001; Wadghiri, Johnson, & Turnbull, 2001). Interleaved slices were acquired, and head movement was minimized with padding.
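The voxel dimensions can be recovered from the listed parameters, which also shows why the nominal 3 mm in-plane resolution corresponds to 3.125 mm. The sketch below is plain arithmetic; it assumes that the 216 volumes refer to a single run, which the parameter list does not state explicitly.

```python
# Acquisition parameters as listed above.
FOV_MM = 200.0
MATRIX = 64
SLICE_THICKNESS_MM = 2.0
SLICE_GAP_MM = 1.0
N_SLICES = 36
TR_S = 2.5
N_VOLUMES = 216  # assumed to be per run

in_plane_mm = FOV_MM / MATRIX                         # voxel edge within a slice
slice_spacing_mm = SLICE_THICKNESS_MM + SLICE_GAP_MM  # centre-to-centre spacing
coverage_mm = N_SLICES * slice_spacing_mm             # extent covered by the slab
run_duration_s = N_VOLUMES * TR_S                     # acquisition time per run

print(in_plane_mm, slice_spacing_mm, coverage_mm, run_duration_s)
```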

Behavioral Data Reduction and Analysis

For each participant, mean RT to the target was calculated as a function of incentive cue and block. These data were then entered into a 3 (cue: reward, loss, and no-incentive) × 5 (block) repeated measures analysis of variance (ANOVA). Greenhouse-Geisser p values are reported when the sphericity assumption was violated. Significant main effects of Cue were followed up with paired t-tests.
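For readers who want to see the computations involved, the sketch below implements a simplified version of this analysis: a one-way repeated-measures ANOVA on the cue factor (collapsing over block) and a paired t-test for follow-up comparisons. It omits the block factor and the Greenhouse-Geisser correction, and the RT values are hypothetical numbers invented for the example.

```python
import math


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of per-subject lists, one value per condition.
    Returns (F, df_effect, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_effect) / (ss_error / df_error)
    return f_value, df_effect, df_error


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for two matched samples."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n), n - 1


# Hypothetical mean RTs (ms) per subject: [reward, loss, no-incentive].
rts = [[320, 340, 400], [300, 330, 410], [350, 345, 390], [310, 335, 420]]
f_value, df_effect, df_error = rm_anova_oneway(rts)
t_value, t_df = paired_t([r[0] for r in rts], [r[2] for r in rts])
print(f_value, df_effect, df_error)
print(t_value, t_df)
```

With 13 participants and three cue types the same formulas give 2 and 24 degrees of freedom for the cue effect; with 8 participants they give 2 and 14.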

fMRI Pre-processing and Data Reduction

Functional neuroimaging data were pre-processed using Functional Imaging Software Widgets (Fissell et al., 2003), a Java-based GUI package compatible with a number of neuroimaging analysis packages (e.g., AIR: Automated Image Registration, and AFNI: Automated Functional NeuroImaging). For each participant, the first six scans in each run were excluded from analyses to allow for T1 equilibration. Functional images were reconstructed and slice-time corrected to the first acquired functional slice in AFNI (Cox, 1996). Movement was then estimated and corrected using AIR (Woods, Grafton, Holmes, Cherry, & Mazziotta, 1998). A 12-parameter automated algorithm followed by a 3rd order nonlinear registration was used to estimate the transformations necessary to register each participant’s structural T1-weighted image to the Montreal Neurological Institute reference brain. These parameter estimates were then applied to the functional T2*-weighted images to normalize subjects’ data into the shared brain space. Data were subsequently smoothed in three dimensions using a 6 mm FWHM kernel to accommodate individual differences in brain morphology.

fMRI Data Analysis

Functional imaging data were analyzed using a general linear model (GLM) implemented in SPM99 (Friston et al., 1995; Holmes, Poline, & Friston, 1997). The hemodynamic response was modeled with event-specific regressors convolved with a canonical HRF and its temporal derivative to account for potential shifts in the hemodynamic response. A separate regressor was defined for each of the three incentive cues, both short and long duration targets (associated with unsuccessful and successful trials, respectively), and five types of feedback (corresponding to successful and unsuccessful reward and loss trials, plus “no change” feedback on no-incentive trials). Three additional regressors were defined to account for errors: one modeled responses that occurred less than 150 ms after target onset, while the other two modeled erroneous responses to reinforcer cues and feedback stimuli. Due to the small sample size, a fixed-effects model was used (Friston, Holmes, & Worsley, 1999), which limits the generalizability of the present findings. Note, however, that one of the main goals of the present study was to develop an improved experimental design and analytic strategy to overcome some of the methodological issues characterizing earlier fMRI studies of incentive processing.
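The construction of an event-related regressor can be sketched as follows. The example convolves a stick function at event onsets with a double-gamma HRF and samples the result once per TR; a second kernel approximates the temporal derivative by a 1 s finite difference. The double-gamma parameters (shapes 6 and 16, undershoot ratio 1/6) are commonly used defaults and are an assumption here, as are the onsets and function names; this is not the SPM99 code itself.

```python
import math


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, under_ratio=1.0 / 6.0):
    """Double-gamma HRF evaluated at time t (seconds); zero for t <= 0."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - under_ratio * under


def hrf_temporal_derivative(t, delta=1.0):
    """Finite-difference approximation to the HRF temporal derivative."""
    return (double_gamma_hrf(t) - double_gamma_hrf(t - delta)) / delta


def build_regressor(onsets_s, n_scans, kernel=double_gamma_hrf,
                    tr_s=2.5, dt=0.1, kernel_len_s=32.0):
    """Convolve event onsets with a kernel and sample at each scan time."""
    n_fine = int(round(n_scans * tr_s / dt))
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    samples = [kernel(i * dt) for i in range(int(round(kernel_len_s / dt)))]
    conv = [0.0] * n_fine
    for i, s in enumerate(sticks):
        if s == 0.0:
            continue
        for j, h in enumerate(samples):
            if i + j < n_fine:
                conv[i + j] += s * h
    step = int(round(tr_s / dt))
    return [conv[i * step] for i in range(n_scans)]


# Hypothetical cue onsets (s) for one condition within a short run.
onsets = [0.0, 30.0]
canonical = build_regressor(onsets, n_scans=24)
derivative = build_regressor(onsets, n_scans=24, kernel=hrf_temporal_derivative)
print([round(v, 3) for v in canonical[:6]])
```

Each condition in the model would contribute one such pair of columns to the design matrix.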

A series of progressively more stringent analyses was used to identify brain regions a) specifically involved in anticipation versus consumption, and b) specifically implicated in processing rewards versus losses. For example, to model reward anticipation, the contrast [RewardAnticipation – No-IncentiveAnticipation] was computed first. This contrast is standard in the literature and is designed to identify reward-related activity by controlling for sensory processing of the incentive cues and general motor preparation (since a button press is required on every trial). However, because it does not control for emotional arousal or general anticipatory processes that may be invoked by anticipation of both monetary gains and losses (e.g., increased attention/effort directed towards task goals—Walton, Kennerley, Bannerman, Phillips, & Rushworth, 2006), this contrast may not isolate brain regions specifically involved in reward anticipation. Therefore, results from this contrast were inclusively masked with results from a second contrast, [RewardAnticipation – LossAnticipation]. This procedure identifies those regions from the first contrast that were also more activated during anticipation of rewards than losses, thus controlling for emotional arousal and general anticipatory processes.

Finally, because some brain regions may be involved in both anticipation and consumption, the results from the [RewardAnticipation – No-IncentiveAnticipation] contrast were inclusively masked with a [RewardAnticipation – RewardOutcome: GAIN] contrast. This step identifies brain regions more active during anticipation than consumption of rewards, again facilitating identification of neural areas specifically involved in reward anticipation. Note that while the [RewardAnticipation – RewardOutcome: GAIN] contrast may also reveal motor activity (due to preparation for button pressing associated with RewardAnticipation but not RewardOutcome: GAIN), motor activity is subtracted out of the first contrast ([RewardAnticipation – No-IncentiveAnticipation]) and thus will not survive inclusive masking.

Similar series of contrasts and masking procedures were used to identify brain regions specifically engaged during anticipation of possible monetary penalties (LossAnticipation), as well as during receipt of monetary gains (RewardOutcome: GAIN) and penalties (LossOutcome: PENALTY). In each case the progression was from a “standard” contrast (comparing incentive conditions to a no-incentive baseline) to more stringent analyses.
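In computational terms, inclusive masking amounts to a voxel-wise logical AND between two thresholded contrast maps. The sketch below illustrates the idea on a handful of voxels; the voxel labels and p-values are hypothetical, and the default threshold of 0.01 is the single-voxel value used for masking analyses in this study.

```python
def inclusive_mask(target_p, mask_p, voxel_threshold=0.01):
    """Return voxels that pass the threshold in BOTH contrasts.

    target_p, mask_p: dicts mapping voxel label -> one-sided p-value for the
    contrast of interest (e.g., target = incentive vs. no-incentive,
    mask = reward vs. loss anticipation).
    """
    return sorted(
        voxel
        for voxel, p in target_p.items()
        if p < voxel_threshold and mask_p.get(voxel, 1.0) < voxel_threshold
    )


# Hypothetical voxel-wise p-values.
target = {"voxel_a": 0.001, "voxel_b": 0.004, "voxel_c": 0.002, "voxel_d": 0.2}
mask = {"voxel_a": 0.003, "voxel_b": 0.2, "voxel_c": 0.5, "voxel_d": 0.001}

surviving = inclusive_mask(target, mask)
print(surviving)
```

Only voxels that are significant in the standard contrast and in the more specific contrast survive, which is why activity common to both conditions of the mask contrast (such as motor preparation) is removed.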

Significance levels were adjusted for multiple comparisons using a Monte Carlo Simulation-Inference method (AlphaSim) implemented in AFNI (Ward, 2000). For the present data, this procedure estimated that the combination of a single voxel threshold of p < 0.001 and a cluster volume threshold of 351 mm³ (12 voxels) resulted in a mapwise significance level of p < 0.05. For masking analyses, both the target and mask maps were thresholded on the single-voxel level at p = 0.01. This yields voxels whose probability of being active by chance across both contrasts is p < 0.001, based on Fisher’s method for combining p-values [χ² = −2 ln(p₁p₂): Fisher, 1973; Slotnick & Schacter, 2004]. Since the cluster extent criterion of 12 contiguous voxels was retained, regions emerging from the masked analyses were also corrected to a mapwise significance level of p < 0.05. Significant findings were overlaid on a T1-weighted high-resolution anatomical image normalized to MNI space. MNI coordinates were transformed to Talairach space using the non-linear transformation developed by Brett, Christoff, Cusack, and Lancaster (2001), and activated regions were identified using an online version of the Talairach and Tournoux (1988) atlas (International Neuroimaging Consortium, 2006). For various effects of interest, peri-stimulus time histograms illustrating the time courses of activation were plotted using data from peak activated voxels (Arthurs & Boniface, 2003).
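The two numerical steps in this paragraph can be checked with a few lines of arithmetic. The sketch below combines two p-values with Fisher's method, using the closed-form survival function of a chi-square distribution with 4 degrees of freedom, and converts the 12-voxel extent into a volume using the 3.125 × 3.125 × 3 mm voxel size. For two maps thresholded at p = 0.01 the combined value comes out at roughly 0.001. Function and variable names are illustrative.

```python
import math


def fisher_combined_p(p1, p2):
    """Fisher's method for two independent p-values.

    Returns (chi2, combined_p). The statistic has 4 degrees of freedom,
    for which the survival function is exp(-x/2) * (1 + x/2).
    """
    chi2 = -2.0 * math.log(p1 * p2)
    combined = math.exp(-chi2 / 2.0) * (1.0 + chi2 / 2.0)
    return chi2, combined


chi2, p_joint = fisher_combined_p(0.01, 0.01)

# Cluster extent expressed as a volume (voxel size from the acquisition).
voxel_volume_mm3 = 3.125 * 3.125 * 3.0
cluster_volume_mm3 = 12 * voxel_volume_mm3

print(round(chi2, 2), round(p_joint, 5))
print(round(cluster_volume_mm3, 1))
```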

Results

Behavioral Performance

Behavioral study

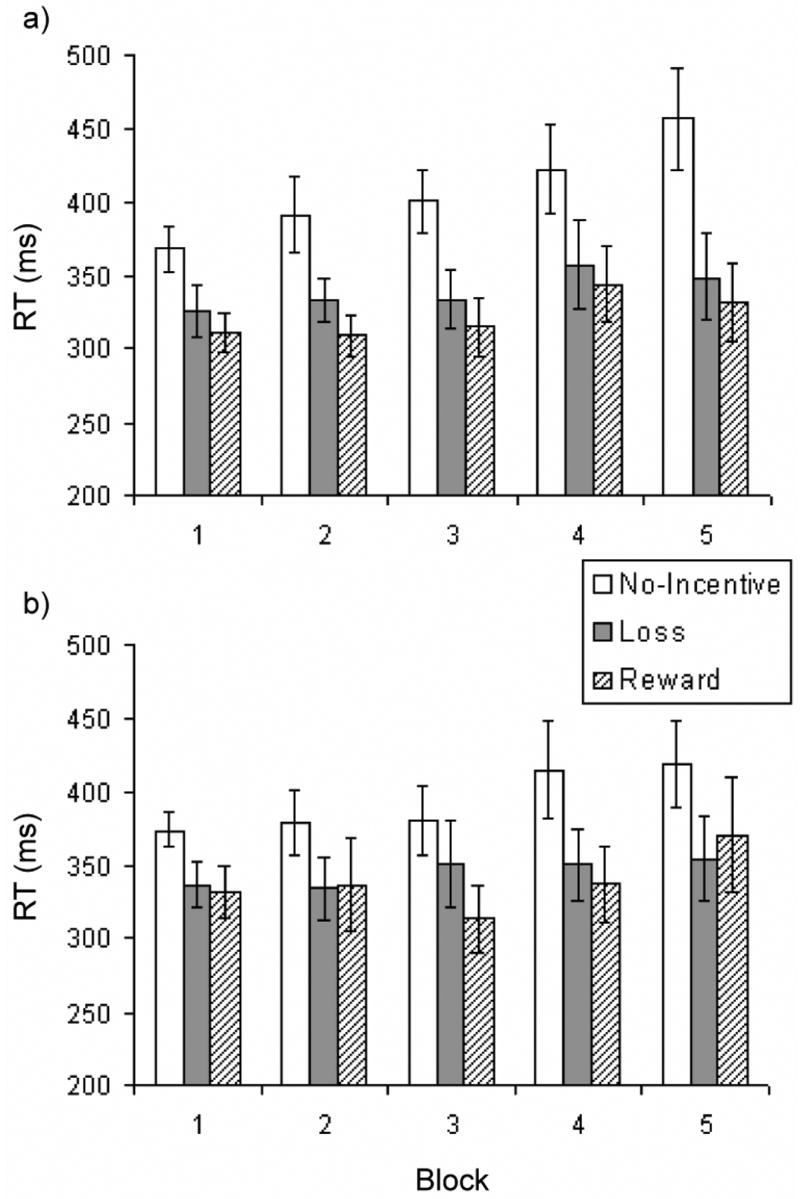

A repeated-measures ANOVA on RT to the target stimulus revealed a main effect of cue, F(2, 24) = 13.62, p < .002, ηp² = .532. As expected, participants responded significantly more quickly (ps < .007) on both reward (M = 321.74 ms, SD = 63.14) and loss (M = 339.84 ms, SD = 70.43) trials versus no-incentive trials (M = 407.19 ms, SD = 80.88). At an individual level, this pattern (faster RTs on both reward and loss trials versus no-incentive trials) was observed for 12 of 13 participants. The main effect of block was also significant, F(4, 48) = 3.59, p < .05, ηp² = .230, due to the fact that responses became slower as the blocks progressed (linear trend: F(1, 12) = 4.81, p < .05). Importantly, however, the Cue x Block interaction was not significant, F(8, 96) = 1.75, p = .16, indicating that the behavioral differentiation between the two incentive conditions versus the no-incentive condition was sustained throughout the task (Figure 1a). In light of the significant main effect of block, exploratory analyses were conducted to confirm this conclusion. Paired t-tests conducted separately on data from each block revealed that compared to RTs on no-incentive trials, RTs were consistently significantly faster on reward trials (ps < .03 for all blocks) and loss trials (p = .08 for block 4, all other ps < .02). Collectively, these data support the conclusion that participants were strongly and consistently motivated to obtain rewards and avoid losses.

Figure 1.

Reaction time to target stimulus as a function of incentive cue (reward, loss, no-incentive) and block for the a) pilot behavioral study (n = 13) and b) fMRI study (n = 8).

fMRI study

Mirroring findings from the behavioral study, analysis of RT to the target stimulus in the fMRI study revealed a significant main effect of cue, F(2, 14) = 8.11, p < .005, ηp² = .54. Paired t-tests revealed that participants responded significantly more quickly (ps < .03) on trials featuring reward (M = 338 ms, SD = 66) and loss cues (M = 345 ms, SD = 61) than on trials featuring no-incentive cues (M = 393 ms, SD = 59). This pattern (faster RT on both reward and loss trials vs. no-incentive trials) was observed in 7 of 8 participants. Neither the main effect of block (F(4, 28) = 1.72, p = .17) nor the Cue x Block interaction (F(8, 56) < 1) was significant, indicating that RT differences between the incentive and no-incentive conditions were sustained throughout the task (Figure 1b). These findings thus reinforce the results from the pilot behavioral study, and demonstrate that using a balanced design and decoupling responses from outcomes did not adversely affect motivated responding as measured by RT.
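The partial eta squared values reported for the behavioral and fMRI samples can be recovered from the corresponding F statistics and degrees of freedom. The sketch below applies the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error); the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Effects reported in the text: (label, F, df_effect, df_error).
effects = [
    ("behavioral study, cue", 13.62, 2, 24),
    ("behavioral study, block", 3.59, 4, 48),
    ("fMRI study, cue", 8.11, 2, 14),
]
eta_values = {
    label: partial_eta_squared(f, df1, df2) for label, f, df1, df2 in effects
}
for label, eta in eta_values.items():
    print(label, round(eta, 3))
```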

Neuroimaging: Activations During Anticipation of Incentives

Anticipation of possible monetary rewards

For reward anticipation, the standard [RewardAnticipation – No-IncentiveAnticipation] contrast yielded activations in several regions reported in previous work (e.g., Knutson et al., 2001a), including the dorsal ACC (peak voxel Talairach coordinates: 5, 15, 31) and a right ventral striatal region whose peak activated voxel was slightly ventral to the putamen and ventrolateral to the NAcc (17, 5, −12)2. The dorsal ACC activation remained significant when the [RewardAnticipation – LossAnticipation] mask was applied (Table 1), indicating that this region was more activated during anticipation of rewards than during anticipation of both losses and no incentive (Figure 2). The ventral striatum survived this masking procedure only when the cluster extent was reduced to ten voxels (Figure 3). Given both the a priori interest in this region and the fact that cluster extents of ten voxels (Knutson et al., 2001a) or smaller (Tobler, O’Doherty, Dolan, & Schultz, 2007) have been used previously to detect ventral striatal activations, this reduction of the cluster extent criterion is justifiable.

Table 1.

Neural Regions Implicated in Anticipation of Monetary Rewards and Losses

| Region | x | y | z | # Voxels | Z |
|---|---|---|---|---|---|
| [RewANT – NoIncentANT] inclusively masked with [RewANT – LossANT] | | | | | |
| Dorsal ACC | 2 | 9 | 36 | 39 | 4.75 |
| Ventral Striatum | 17 | 5 | −12 | 10 | 4.49 |
| L Putamen | −27 | −5 | 3 | 12 | 3.59 |
| L Inf. Frontal Gyrus | −52 | 20 | −1 | 12 | 4.33 |
| R Inf. Parietal Lobule | 60 | −33 | 23 | 16 | 4.25 |
| L Fusiform Gyrus | −42 | −46 | −23 | 25 | 3.81 |
| | −23 | −71 | −14 | 26 | 4.86 |
| R Lingual Gyrus | 8 | −88 | −15 | 50 | 5.91 |
| R Calcarine Sulcus | 11 | −83 | 4 | 30 | 3.26 |
| R Cerebellum | 32 | −79 | −22 | 55 | 4.81 |
| [RewANT – NoIncentANT] inclusively masked with [RewANT – RewOutcome:GAIN] | | | | | |
| Dorsal ACC | 5 | 15 | 31 | 69 | 5.10 |
| L Inf. Frontal Gyrus | −52 | 20 | −1 | 38 | 4.33 |
| R Inf. Frontal Gyrus | 44 | 8 | 11 | 46 | 4.27 |
| R Sup. Temporal Gyrus | 57 | 2 | −6 | 16 | 4.13 |
| R Post-central Gyrus | 44 | −15 | 32 | 14 | 3.73 |
| L Inf. Parietal Lobule | −61 | −27 | 24 | 22 | 4.05 |
| R Inf. Parietal Lobule | 60 | −33 | 23 | 21 | 4.25 |
| R Thalamus | 5 | −11 | 13 | 13 | 5.44 |
| L Cerebellum | −39 | −46 | −25 | 17 | 3.72 |
| [LossANT – NoIncentANT] inclusively masked with [LossANT – LossOutcome:PENALTY] | | | | | |
| Dorsal ACC | −11 | 18 | 39 | 16 | 4.94 |
| R Inf. Frontal Gyrus | 41 | 19 | 11 | 57 | 3.83 |
| L Sup. Frontal Gyrus | −2 | 15 | 42 | 21 | 4.06 |
| L Cerebellum | −23 | −55 | −20 | 14 | 3.86 |
| R Cerebellum | 2 | −52 | −19 | 17 | 4.23 |

Note. x, y, and z correspond to the Talairach coordinates of the peak activated voxel. # Voxels refers to the number of voxels exceeding the statistical threshold (p < 0.05, corrected). Z is the Z score equivalent of the peak activated voxel. R = right; L = left; Inf. = inferior; Sup. = superior; Rew = reward; NoIncent = no-incentive; ANT = anticipation.

Figure 2.

Dorsal ACC activity elicited by anticipation of rewards. Left panel depicts region revealed by the RewardAnticipation – No-IncentiveAnticipation contrast. A similar activation emerged from the LossAnticipation – No-IncentiveAnticipation contrast (not shown). Right panel depicts a smaller region revealed by inclusive masking of the RewardAnticipation – No-IncentiveAnticipation contrast with the RewardAnticipation – LossAnticipation contrast; this region was differentially sensitive to anticipation of rewards (versus losses). Time-course from peak activated voxel in RewardAnticipation – No-IncentiveAnticipation contrast (time-locked to cue onset) demonstrates that dorsal ACC was active during anticipation of both incentives, but was especially activated during reward anticipation.

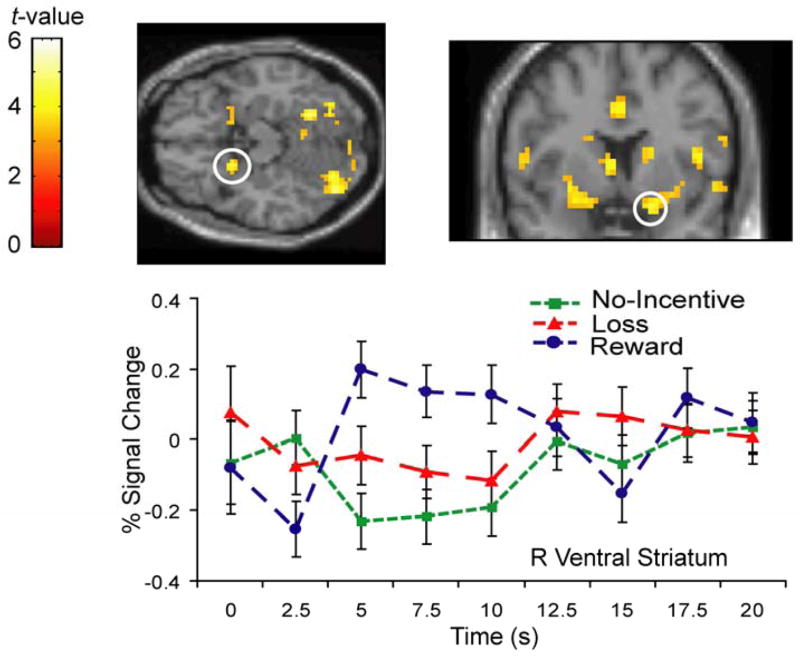

Figure 3.

Anticipatory activity in right ventral striatum revealed by the RewardAnticipation – No-IncentiveAnticipation contrast. Time-course from peak activated voxel (time-locked to cue onset) reveals that this region was more strongly activated by anticipation of rewards than losses or no-incentive.

Critically, of these two regions, only the ACC remained significant after the [RewardAnticipation – RewardOutcome: GAIN] mask was applied, even when using a cluster extent of 10 voxels (Table 1). These results indicate that: (1) a sub-region of the dorsal ACC was specifically involved in anticipation of monetary reward; and (2) the ventral striatum was not significantly more active during reward anticipation than during reward consumption. Note that the cerebellum was also activated during reward anticipation, consistent with recent reports implicating this structure in reward processing (e.g., Anderson et al., 2006).

Anticipation of possible monetary losses

Several regions emerged from the [LossAnticipation – No-IncentiveAnticipation] contrast, including dorsal ACC (Talairach coordinates: −11, 18, 39), left insula (−39, 20, 6), and bilateral putamen (left: −27, 8, −5; right: 30, −16, 4). Note that the putamen activations observed in this contrast were dorsal to the ventral striatal activation observed during reward processing. None of these regions survived inclusive masking with the [LossAnticipation – RewardAnticipation] contrast, suggesting that they did not specifically index anticipation of possible monetary penalties but were instead associated with processes involved in general incentive anticipation. By contrast, the dorsal ACC survived application of the [LossAnticipation – LossOutcome: PENALTY] mask (Table 1), indicating that the dorsal ACC was specifically involved in anticipation—but not consumption—of both classes of incentive.

Neuroimaging: Activations Elicited by Outcomes

Receipt of monetary rewards

The [RewardOutcome: GAIN – No-IncentiveOutcome] contrast revealed activity in several regions, including aspects of the temporal lobes, fusiform gyrus, calcarine sulcus, and cerebellum. Most relevant to the current research were several activations in the frontal lobes, including three in the left inferior frontal gyrus (Talairach coordinates: −39, 29, −14; −48, 22, 9; −45, 13, 22), one in the left middle frontal gyrus (−39, 50, −3), and two in the right middle frontal gyrus (38, 56, 8; 41, 18, 39). However, of these frontal regions only the right inferior frontal gyrus survived application of the [RewardOutcome: GAIN – LossOutcome: PENALTY] mask, suggesting that most of the frontal activations observed in the first contrast may be sensitive to processes that are common to receipt of rewards and losses. By contrast, several frontal regions survived application of the [RewardOutcome: GAIN – RewardAnticipation] mask (Table 2), notably including activations in ventromedial PFC (5, 47, 7) and bilateral OFC (left: −27, 26, −11; right: 35, 27, −16; Figure 4a). This result supports the conclusion that these regions were specifically involved in consummatory (versus anticipatory) aspects of reward processing.

Table 2.

Neural Regions Implicated in Receipt of Monetary Rewards

| Region | x | y | z | # Voxels | Z |
|---|---|---|---|---|---|
| [RewOutcome: GAIN – NoIncentOutcome] inclusively masked with [RewOutcome: GAIN – LossOutcome: PENALTY] | | | | | |
| R Inf. Frontal Gyrus | 38 | 33 | −6 | 12 | 3.13 |
| R Mid. Temporal Gyrus | 63 | −20 | −8 | 48 | 3.65 |
| L Fusiform Gyrus | −36 | −68 | −14 | 13 | 3.20 |
| L Cerebellum | −23 | −51 | −30 | 24 | 3.60 |
| R Cerebellum | 38 | −63 | −24 | 21 | 4.17 |
| [RewOutcome: GAIN – NoIncentOutcome] inclusively masked with [RewOutcome: GAIN – RewANT] | | | | | |
| Rostral cingulate | −8 | 44 | 12 | 15 | 3.46 |
| Posterior cingulate | 2 | −33 | 29 | 12 | 3.70 |
| R Medial Frontal Gyrus | 5 | 47 | 7 | 43 | 3.51 |
| L Inf. Frontal Gyrus | −27 | 26 | −11 | 37 | 3.51 |
| | −48 | 22 | 9 | 42 | 5.26 |
| R Inf. Frontal Gyrus | 35 | 27 | −16 | 20 | 2.87 |
| | 44 | 44 | −5 | 21 | 3.93 |
| L Mid. Frontal Gyrus | −39 | 50 | −3 | 114 | 5.26 |
| R Mid. Frontal Gyrus | 38 | 56 | 8 | 50 | 4.12 |
| | 41 | 18 | 39 | 56 | 4.20 |
| R Sup. Frontal Gyrus | 20 | 48 | 33 | 17 | 3.50 |
| | 8 | 55 | 35 | 22 | 2.98 |
| | 5 | 26 | 43 | 52 | 4.39 |
| L Inf. Temporal Gyrus | −64 | −40 | −15 | 88 | 5.65 |
| R Mid. Temporal Gyrus | 63 | −23 | −7 | 154 | 4.78 |
| R Sup. Temporal Gyrus | 51 | 12 | −22 | 16 | 3.59 |
| | 63 | −40 | 3 | 16 | 3.10 |
| R Inf. Parietal Lobule | 47 | −54 | 19 | 13 | 4.50 |
| L Calcarine sulcus | −5 | −89 | 4 | 53 | 4.25 |
| L Cerebellum | −20 | −57 | −31 | 23 | 3.57 |
| R Cerebellum | 17 | −36 | −23 | 12 | 2.90 |

Note. See Table 1 for more detail.

Figure 4.

Similar regions activated by receipt of monetary gains and penalties. a) Left panel shows left OFC and medial PFC regions revealed when the RewardOutcome: GAIN – No-IncentiveOutcome contrast was masked with RewardOutcome: GAIN – RewardAnticipation. Right panel shows very similar left OFC and medial PFC regions when the LossOutcome: PENALTY – No-IncentiveOutcome contrast was masked with LossOutcome: PENALTY – LossAnticipation. b) Left ventrolateral PFC region showing equivalent activation to receipt of both monetary gains and losses. Time-course is from peak activated voxel, time-locked to delivery of incentive feedback.

Receipt of monetary penalties

The [LossOutcome: PENALTY – No-IncentiveOutcome] contrast revealed activity in several regions, including the right putamen (Talairach coordinates: 23, 1, 10), and aspects of the temporal and parietal lobes, as well as fusiform gyrus and calcarine sulcus. Critically, several activations were also detected in frontal regions, including the medial PFC (−2, 46, 16), left inferior frontal gyrus (three activations: −39, 29, −14; −27, 23, −10; −55, 19, 20), left middle frontal gyrus (three activations: −20, 45, −10; −39, 53, −3; −39, 12, 48), left superior frontal gyrus (four activations: −14, 63, 1; −17, 62, 19; −8, 48, 35; −8, 17, 53) and right superior frontal gyrus (8, 55, 33). Similar to what was obtained in the analysis of reward consumption, none of these frontal regions survived application of the [LossOutcome: PENALTY – RewardOutcome: GAIN] mask, suggesting that they were not differentially activated by aversive outcomes versus rewarding outcomes (Table 3). However, several frontal regions survived application of the [LossOutcome: PENALTY – LossAnticipation] mask, including the medial PFC (−2, 46, 16) and left OFC (−27, 23, −10) (Figure 4a). Comparison of the analyses of rewarding and aversive outcomes supports the conclusion that delivery of both types of incentives activated overlapping neural regions, including medial PFC, OFC, and ventrolateral PFC (Figure 4b).

Table 3.

Neural Regions Implicated in Receipt of Monetary Penalties

| Region | x | y | z | # Voxels | Z |
|---|---|---|---|---|---|
| [LossOutcome: PENALTY – NoIncentOutcome] inclusively masked with [LossOutcome: PENALTY – RewOutcome: GAIN] | | | | | |
| L Inf. Parietal Lobule | −58 | −51 | 22 | 19 | 5.08 |
| L Cerebellum | −27 | −79 | −20 | 20 | 4.02 |
| [LossOutcome: PENALTY – NoIncentOutcome] inclusively masked with [LossOutcome: PENALTY – LossANT] | | | | | |
| L Medial PFC | −2 | 46 | 16 | 60 | 4.00 |
| L Inf. Frontal Gyrus | −55 | 19 | 20 | 23 | 4.03 |
| R Inf. Frontal Gyrus | −45 | 29 | −6 | 15 | 4.58 |
| L Mid. Frontal Gyrus | −20 | 45 | −10 | 46 | 4.06 |
| | −39 | 53 | −3 | 46 | 4.90 |
| | −39 | 12 | 48 | 86 | 6.02 |
| L Sup. Frontal Gyrus | −17 | 62 | 19 | 37 | 3.82 |
| | −8 | 48 | 35 | 70 | 4.59 |
| | −8 | 17 | 53 | 51 | 4.2 |
| R Pre-Central Gyrus | 51 | 6 | 44 | 13 | 4.12 |
| L Inf. Temporal Gyrus | −61 | −44 | −15 | 137 | 5.47 |
| R Inf. Temporal Gyrus | 54 | −64 | −9 | 35 | 3.82 |
| R Mid. Temporal Gyrus | 60 | −25 | −7 | 18 | 3.77 |
| L Inf. Parietal Lobule | −58 | −51 | 22 | 17 | 5.08 |
| L Fusiform Gyrus | −27 | −82 | −20 | 16 | 3.76 |
| L Lingual Gyrus | −14 | −74 | 1 | 36 | 3.69 |
| R Calcarine sulcus | 20 | −75 | 6 | 27 | 2.95 |

Note. See Table 1 for more detail.

Ventral striatal response to reward omission

Animal studies have demonstrated that when an expected reward is omitted, firing of midbrain DA neurons is inhibited (Schultz, 1998). To examine whether a similar pattern was present in our data set, exploratory analyses were conducted to identify neural regions more activated upon receipt of no incentive when this was expected than during the omission of a potential reward (i.e., [No-IncentiveOutcome – RewardOutcome: NoChange]). At the pre-established statistical threshold (p < .001), only a region of the right middle frontal gyrus was significant. However, when alpha was relaxed to p < .005, deactivation on unsuccessful reward trials (relative to no-incentive trials) was observed in the left putamen (−14, 11, −7), consistent with findings from the animal literature (Figure 5).

Figure 5.

Left putamen showing a relative deactivation upon reward omission, as revealed by the [No-IncentiveOutcome – RewardOutcome: NoChange] contrast. Time course from peak activated voxel (time-locked to delivery of incentive feedback) reveals decreased activation upon failure to obtain a possible reward.

Discussion

The present, methodologically oriented study used a task featuring balanced delivery of rewards and losses and a stringent analytic strategy to identify brain regions differentially involved in anticipation versus consumption of rewards or losses. Three main findings emerged. First, the dorsal ACC was significantly more active during anticipation of both types of incentives than during consumption. In addition, a sub-region of the dorsal ACC was particularly sensitive to anticipation of rewards. Second, the ventral striatum was more active during anticipation of rewards than during anticipation of losses or no-incentive. However, this region was not significantly more active during reward anticipation than during receipt of rewards, reflecting its involvement in both phases of reward processing. Third, receipt of rewards and penalties elicited activity in largely overlapping neural regions, including left OFC and medial PFC areas not implicated in anticipation. The small sample size and fixed-effects model used in the fMRI study preclude generalization of the neuroimaging results beyond the current sample. However, the large and sustained RT effects observed in both the behavioral and fMRI studies indicate that the task parameters used here effectively motivated participants while allowing for balanced delivery of incentives. Furthermore, because they emerged from contrasts that explicitly accounted for overlap in anticipation and consumption of rewards and losses, these findings add precision to the existing literature.

The dorsal ACC: Anticipation, adaptive responding, and reward-specificity

Dorsal ACC activity was observed when anticipation of both rewards and losses was contrasted with anticipation of no-incentive (Figure 2). Furthermore, this region was more active during anticipation than consumption of both types of incentives. These results dovetail with early work demonstrating anticipatory ACC activity to cues signaling the onset of a variety of cognitive tasks (Murtha, Chertkow, Beauregard, Dixon, & Evans, 1996), and are also in accordance with a recently developed “error-likelihood” theory of ACC function. To test this theory, Brown and Braver (2005) developed a paradigm in which two levels of error-likelihood (low, high) and response conflict (low, high) were fully crossed. As expected based on previous work (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Botvinick, Cohen, & Carter, 2004), high levels of response conflict led to dorsal ACC activity in both a computational model and a neuroimaging experiment. Critically, however, robust dorsal ACC activity was also observed when error-likelihood was high but response conflict was low. This outcome is inconsistent with predictions based solely on response conflict and instead supports the hypothesis that the ACC fires when the potential for errors is high in order to maximize adaptive behavior (Brown & Braver, 2005; see also Magno, Foxe, Molholm, Robertson, & Garavan, 2006). By extension, the error-likelihood theory may account for the robust dorsal ACC activity observed during both reward and loss anticipation in the current study. Specifically, since both types of trials were associated with a 50 percent probability of failure, dorsal ACC may have been recruited in an attempt to maximize the probability of successful performance. This interpretation could be tested in future research by parametrically varying the percentage of successful and unsuccessful trials presented in the MID task and determining whether dorsal ACC activity is modulated accordingly.

While the dorsal ACC was recruited during anticipation of both types of incentives, a sub-region of this structure was differentially sensitive to reward anticipation (Figure 2). This result is somewhat surprising, as recent work on ACC function in humans has documented this structure’s role in response conflict and responding to various forms of errors (e.g., Botvinick et al., 2004; Holroyd et al., 2004). However, the ACC is functionally heterogeneous (Bush et al., 2002), and there is evidence supporting the existence of sectors primarily devoted to representations of rewarding stimuli. Nishijo and colleagues (Nishijo et al., 1997), for example, recorded from single ACC neurons as monkeys performed an operant task in order to receive food and liquid rewards and avoid painful shocks. Different populations of ACC neurons coded various aspects of the task, including visual discrimination of incentives, bar pressing, and ingestion. Critically, one population of neurons showed activity specific to viewing a variety of rewarding stimuli (e.g., palatable food); viewing neutral or aversive stimuli (or ingesting rewarding stimuli) did not activate these neurons. It is possible that a similar class of neurons responded particularly strongly during reward anticipation in the current task.

Differential recruitment of the ACC during reward anticipation may also reflect across-condition differences in effort expended in the service of task goals. In a series of studies, Walton and colleagues have demonstrated that the ACC is critically involved in effort-related decision making (Walton, Bannerman, Alterescu, & Rushworth, 2003; Walton, Bannerman, & Rushworth, 2002; Walton et al., 2006). Specifically, compared with normal controls, rats with ACC lesions show reluctance to expend effort to obtain large rewards when smaller rewards are more easily obtained. This result is hypothesized to reflect the loss of top-down excitatory signals from the ACC to mesolimbic DA neurons that bias animals to work for large rewards (Walton et al., 2006). In the MID paradigm, participants do not have the option of performing more or less work on particular trials. However, participants may be most motivated on reward trials, which could result in increased effort and increased recruitment of the ACC.

Ventral striatum: Reward anticipation, consumption, and omission

Consistent with several previous studies (Knutson et al., 2001a; Knutson et al., 2001b; Knutson et al., 2003), the contrast examining anticipation of rewards versus no-incentive revealed activity in the ventral striatum (Figure 3). This result was expected based on primate work showing that reward-predicting cues reliably elicit phasic DA release from neurons in the midbrain (Schultz, 1998), which send numerous projections to the striatum (Bannon & Roth, 1983). However, there is controversy regarding whether midbrain DA neurons respond specifically to rewards or to salient stimuli in general (Ungless, 2004). Results from the inclusive masking procedure used to address this issue support the conclusion that this region responded more strongly during anticipation of rewards than during anticipation of losses (or no-incentive).

It may be noted that time-course data show some ventral striatum activity during loss anticipation (Figure 3). The moderate activation in this condition raises the possibility that monetary rewards may simply be more salient than monetary losses in the MID task, especially since participants know they will be remunerated at some level for their participation regardless of their performance and thus do not lose money in any absolute sense. On this account, anticipation of more severe punishments should yield more robust activation of ventral striatum. In fact, significant anticipatory ventral striatal responses have been observed in studies featuring potential loss of large amounts of money (Knutson et al., 2003) as well as threat of painful shocks (Jensen et al., 2003). Collectively, these findings indicate that ventral striatum responds to a variety of salient stimuli and cannot be said to solely code rewards. However, in this and previous studies that incorporate both monetary gains and losses (Knutson et al., 2003), greater ventral striatal activity during anticipation of rewards has been consistently observed, supporting the conclusion that this region preferentially codes reward. Future studies comparing monetary gains with forms of punishment more severe than monetary loss will be helpful in definitively resolving this issue. One possibility is that like the amygdala (e.g., Schoenbaum, Chiba, & Gallagher, 1999), the ventral striatum contains separate populations of neurons that fire in response to cues predicting rewarding versus aversive outcomes, respectively (Seymour, Daw, Dayan, Singer, & Dolan, 2007).

A second point that has received less attention is the degree to which activation of the ventral striatum is specific to the anticipatory phase of incentive processing (Knutson et al., 2005). To address this issue, the [RewardAnticipation – No-IncentiveAnticipation] contrast was masked with the [RewardAnticipation – RewardOutcome:GAIN] contrast. The ventral striatum did not survive this masking procedure (even at a reduced cluster extent), indicating that it was not significantly more active during anticipation versus consumption of rewards. Note that because this conclusion is based solely on analysis of reward trials, it cannot be attributed to a relative deactivation of the ventral striatum upon receipt of non-rewarding outcomes. In addition, exploratory analysis of time-course data from the ventral striatum revealed a strong response to monetary gains, confirming that this region was also active during reward consumption. Though ventral striatal responses to reward outcome are rarely discussed in the literature, it is important to note that this result has been observed in other studies (Bjork et al., 2004; Bjork & Hommer, 2007).

Finally, a relative deactivation in left ventral putamen was observed in response to missed rewards relative to no-incentive outcomes. This result is consistent both with literature indicating that reward omission leads to suppression of midbrain dopamine neurons in non-human primates (Schultz, 1998), and with an fMRI study demonstrating decreased activity in the nucleus accumbens in humans when an expected monetary reward is omitted (Spicer et al., 2007). Functionally, suppression of midbrain DA neurons upon reward omission—in conjunction with phasic bursting upon delivery of surprising rewards—is believed to constitute a behavioral training signal (Schultz, 2000). Specifically, while bursting of DA neurons is hypothesized to increase the intensity and frequency of behaviors associated with reward attainment, suppression of DA neurons is believed to contribute to the extinction of behaviors associated with reward omission.
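The training-signal account described above is commonly formalized as a reward prediction error. The sketch below uses a simple Rescorla-Wagner-style update chosen purely for illustration; it is not an analysis performed in this study, and the learning rate, reward magnitudes, number of trials, and function names are arbitrary assumptions. It shows the three qualitative cases discussed in the text: a large positive error for an unexpected reward, a near-zero error once the reward is fully predicted, and a negative error when a predicted reward is omitted.

```python
def prediction_error(expected_value, reward):
    """Difference between the reward received and the reward expected."""
    return reward - expected_value

def update_value(expected_value, reward, learning_rate=0.2):
    """Move the expectation toward the received reward by a fraction of the error."""
    return expected_value + learning_rate * prediction_error(expected_value, reward)

# Hypothetical learning history: a cue is repeatedly followed by a reward of 1.0.
value = 0.0
first_delta = prediction_error(value, 1.0)   # reward is unexpected on the first trial

for _ in range(50):                          # 50 rewarded trials (arbitrary choice)
    value = update_value(value, 1.0)

late_delta = prediction_error(value, 1.0)    # reward is now well predicted
omission_delta = prediction_error(value, 0.0)  # predicted reward is withheld

print(f"unexpected reward: {first_delta:+.3f}")
print(f"expected reward:   {late_delta:+.3f}")
print(f"omitted reward:    {omission_delta:+.3f}")
```

Under these assumptions, the negative error on the omission trial corresponds to the relative deactivation observed on unsuccessful reward trials, although the BOLD signal in putamen is at best an indirect index of such a quantity.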

Orbitofrontal and medial frontal cortex: Processing incentive outcomes

Previous studies demonstrate that ventromedial PFC is consistently activated by outcomes on reward trials, showing increased activity upon receipt of large rewards and decreased activity when an expected large reward is omitted (Knutson et al., 2003). In addition, some studies have reported OFC activation in response to monetary rewards (Thut et al., 1997; Knutson et al., 2001b), though others have not observed activity in this region, likely due to fMRI signal dropout (e.g., Knutson et al., 2001a). Notably, previous studies using the MID task generally have not reported activation of either medial PFC or OFC in response to aversive outcomes (Knutson et al., 2003; Bjork et al., 2004). These negative findings are surprising as an extensive literature in humans and non-human primates indicates that the OFC is critical for the representation of a variety of outcomes which are used to adaptively guide behavior (Rolls, 1996; Bechara, Damasio, & Damasio, 2000).

The current study used acquisition parameters specifically designed to minimize signal dropout in the OFC and medial temporal lobes (Deichmann et al., 2003), and robust activations in medial PFC and left OFC (and ventrolateral PFC) were observed in response to receipt of both rewards and penalties (Figure 4). In addition, a region of right OFC was differentially recruited during consumption of rewards (Table 2: right inferior frontal gyrus activation at 35, 27, −16). Recruitment of these regions by delivery of both classes of incentive is consistent with both theory and previous experiments, which have noted OFC and/or ventromedial PFC activation upon delivery of both positive and negative stimuli, including symbols of social status (sports cars: Erk et al., 2002), beautiful faces (Aharon et al., 2001), and both painful and pleasant tactile stimulations (Rolls et al., 2003).

However, the fact that very similar regions of left OFC were recruited across the reward and loss conditions is somewhat surprising. Using a reversal learning task with monetary incentives, O’Doherty and colleagues found that medial and lateral OFC regions code rewards and punishments, respectively (O’Doherty, Kringelbach, Rolls, Hornak, & Andrews, 2001), a finding which has since been replicated (Ursu & Carter, 2005). In the current study, only lateral OFC activations were observed. The reason for this discrepancy is unclear, but one possibility is that the relative medial/lateral distribution of OFC activations may vary along dimensions not fully captured by the distinction between rewards and losses. Consistent with this speculation, a recent fMRI study suggests that medial and lateral OFC regions may represent stable and unstable stimulus-outcome representations, respectively (Windmann et al., 2006). Participants played two versions of the Iowa gambling task: the original version, in which unexpected punishments are intermingled with steady rewards, and an inverted version, in which unexpected rewards are intermingled with steady punishments. Critically, a reward versus punishment contrast revealed primarily medial OFC activation in the original task but strong bilateral OFC activation in the inverted task. These findings are inconsistent with the valence hypothesis of medial versus lateral OFC function, and were interpreted as reflecting the “steadiness” of the outcomes.

The results of Windmann et al. (2006) might be relevant to the current findings. Participants in the MID task are uncertain of the outcome they will receive on any trial, and in the current paradigm they experienced positive and negative outcomes on 50% of the trials. These elements might add uncertainty to the task, and thus the lateral OFC activations elicited by receipt of incentives could be seen as consistent with the hypothesis that lateral OFC codes outcomes in an unstable environment.

Limitations

The current study features several limitations. First, the small sample sizes limit the study’s statistical power. Future research utilizing random-effects analyses is necessary in order to generalize the fMRI findings to other samples. An illustration of possible low statistical power is the fact that while inclusive masking revealed that the ventral striatum was not significantly more active during reward anticipation than during reward delivery, significant activation of the ventral striatum was not observed in contrasts which directly targeted rewarding outcomes. This result likely reflects weaker recruitment of the ventral striatum by rewarding outcomes relative to reward anticipation; more participants may be necessary to observe robust activation of this region upon delivery of monetary gains. Weaker mean activation of ventral striatum by rewarding outcomes (versus reward anticipation) could also reflect greater sensitivity of this structure to monetary gains during early versus late stages of the MID task; this pattern of results would be in agreement with animal literature demonstrating midbrain DA responses to unexpected but not expected rewards (Schultz, 1998, 2000). In general, the fMRI results should be considered tentative until replicated in a larger sample. However, the neuroimaging and behavioral results are already sufficiently robust to confirm that the task successfully addressed limitations of previous MID tasks while maintaining participants’ motivation to obtain incentives. It should be noted that balanced delivery of incentives may also be achieved via other methods, including using adaptive algorithms to vary outcome delivery according to participants’ performance levels (e.g., Kirsch et al., 2003).

Second, the acquisition of fMRI images in a roughly axial orientation limited ability to detect activity in the amygdala, a region which has been implicated in anticipation of monetary rewards (Hommer et al., 2003) and which is better imaged using coronal acquisition. Third, as mentioned earlier, monetary losses in the MID task are not an especially salient form of punishment, and robust activation of ventral striatum during anticipation of aversive outcomes in humans may require the use of more punishing stimuli such as shocks or painful heat (Jensen et al., 2003; Becerra et al., 2001). In addition, as noted earlier, the presentation of both successful and unsuccessful outcomes on loss trials raises the possibility that anticipatory activity on loss trials reflects a mixture of anxiety and hope, which are difficult to disentangle.

A final concern is that participants may have become disengaged over the course of the task, as the incentive delivery schedule was fixed and not affected by participants’ responses. Behavioral data from both the pilot and fMRI studies argue strongly against this possibility: RT differences between the two incentive conditions and the no-incentive condition were large, evident at the level of individual participants, and sustained throughout the task. These results support the conclusion that participants were consistently motivated to obtain rewards and avoid penalties. This outcome probably reflects the influence of the instructions given to participants and the use of target exposure durations that were adjusted based on each individual’s practice RTs. However, it remains possible that some participants became aware of the disconnection between their actions and task outcomes. An important step in future studies will be to directly investigate this possibility, in order to determine whether awareness modulates the neural response to the cues, the target, or the incentives themselves.

Conclusion

This study used a balanced task design (with design optimization based on a genetic algorithm), a pulse sequence designed to maximize signal recovery from the OFC, and a rigorous set of analyses to identify brain regions specifically involved in the anticipatory and consummatory phases of incentive processing. The dorsal ACC was activated during anticipation of both rewards and losses, and a sub-region of dorsal ACC was differentially sensitive to reward anticipation. Ventral striatum was more active during anticipation of rewards than during anticipation of losses or no-incentive; however, this region was not significantly more activated during reward anticipation compared to reward consumption. Finally, medial PFC and left OFC regions were similarly activated by receipt of rewards and penalties, consistent with a role for these regions in representing a range of incentives for use in control of behavior.

Taken together, these findings help clarify existing research on incentive processing in healthy participants. Ultimately, they may also be of value to researchers studying psychiatric populations with deficits in incentive processing. For example, melancholic depression is marked by anhedonia, a lack of reactivity to pleasure and rewarding stimuli (Meehl, 1975). Interestingly, neuroimaging research links depression to functional changes in reward circuitry, including the ACC (Mayberg, 1997; Pizzagalli et al., 2004), ventral striatum (Epstein et al., 2006), and ventromedial PFC (Keedwell, Andrew, Williams, Brammer, & Phillips, 2005). However, whether these deficits are specific to anticipation versus consumption of rewards, and whether or not they extend to processing of negative incentives is currently unclear. By parsing the specific roles played by these three regions during incentive processing, the current study provides a framework for addressing these questions and may guide the formation of increasingly precise hypotheses regarding their dysfunction in depression (e.g., Pizzagalli, Jahn, & O’Shea, 2005).

Acknowledgments

This work was supported by NIMH grant R01 MH68376 to DAP. The authors gratefully acknowledge James O’Shea for his technical assistance, as well as Dr. Thilo Deckersbach and Dr. Darin Dougherty for their support in initial phases of this study.

Footnotes

It was important to adjust the target exposure durations on successful and unsuccessful trials so that they maximized task believability but did not drastically differ temporally and thus potentially lead to different hemodynamic responses. The 15th and 85th RT percentiles were chosen based on studies showing that the hemodynamic response is at ceiling at approximately 200 ms presentation time (e.g., Grill-Spector, 2003). In both the pilot and fMRI studies, the mean (±SD) RTs associated with the 15th percentile (pilot: 245.33±33.14 ms; fMRI: 301.13±33.49 ms) and 85th percentile (pilot: 364.00±67.73 ms; fMRI: 419.88±57.84 ms) were different enough to foster task engagement, yet similar enough to elicit comparable hemodynamic responses (difference: pilot: 118.67±49.32 ms; fMRI: 118.75±44.73 ms).

For brevity, only critical activations are reported for each of the standard contrasts (i.e., incentives minus the no-incentive conditions). For the anticipatory phase, critical activations were defined as those involving medial PFC regions or striatum. Given the involvement of various sectors of the PFC in consumption, any PFC activation was considered critical for contrasts targeted at the consummatory phase. Full lists of activations from these contrasts are available upon request.
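As a rough illustration of the percentile-based target exposure durations described in the first footnote, the sketch below derives 15th and 85th percentile cutoffs from one participant's practice RTs. The RT values and the function name are hypothetical, and the interpolation rule (Python's "inclusive" quantile method) is an assumption; the exact percentile computation and the assignment of each cutoff to successful or unsuccessful trials are not specified in this section.

```python
from statistics import quantiles

def exposure_cutoffs(practice_rts_ms, low_pct=15, high_pct=85):
    """Return the (low, high) percentile cutoffs, in ms, of a list of practice RTs."""
    # quantiles(..., n=100) returns the 99 cut points for percentiles 1 through 99,
    # so percentile p sits at index p - 1.
    cuts = quantiles(practice_rts_ms, n=100, method="inclusive")
    return cuts[low_pct - 1], cuts[high_pct - 1]

# Hypothetical practice RTs (ms) for a single participant; not data from the study.
practice_rts = [312, 298, 345, 276, 330, 410, 289, 305, 367, 322, 299, 351]

short_ms, long_ms = exposure_cutoffs(practice_rts)
print(f"15th percentile: {short_ms:.1f} ms")
print(f"85th percentile: {long_ms:.1f} ms")
print(f"difference:      {long_ms - short_ms:.1f} ms")
```

Computing the cutoffs per participant keeps the two exposure durations anchored to that individual's own speed, which is the property the footnote relies on when comparing the 15th and 85th percentile RTs.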

References

- Aharon I, Etcoff N, Ariely D, Chabris CF, O’Connor E, Breiter HC. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32:537–551. doi: 10.1016/s0896-6273(01)00491-3. [DOI] [PubMed] [Google Scholar]

- Anderson CM, Maas LC, Frederick B, Bendor JT, Spencer TJ, Livni E, Lukas SE, Fischman AJ, Madras BK, Renshaw PF, Kaufman MJ. Cerebellar vermis involvement in cocaine-related behaviors. Neuropsychopharmacology. 2006;31:1318–1326. doi: 10.1038/sj.npp.1300937. [DOI] [PubMed] [Google Scholar]

- Arthurs OJ, Boniface SJ. What aspect of the fMRI BOLD signal best reflects the underlying electrophysiology in human somatosensory cortex? Clinical Neurophysiology. 2003;114:1203–1209. doi: 10.1016/s1388-2457(03)00080-4. [DOI] [PubMed] [Google Scholar]

- Bannon MJ, Roth RH. Pharmacology of mesocortical dopamine neurons. Pharmacological Reviews. 1983;35:53–68. [PubMed] [Google Scholar]

- Becerra L, Breiter HC, Wise R, Gonzalez RG, Borsook D. Reward circuitry activation by noxious thermal stimuli. Neuron. 2001;32:927–946. doi: 10.1016/s0896-6273(01)00533-5. [DOI] [PubMed] [Google Scholar]

- Bechara A, Damasio H, Damasio AR. Emotion, decision making and the orbitofrontal cortex. Cerebral Cortex. 2000;10:295–307. doi: 10.1093/cercor/10.3.295. [DOI] [PubMed] [Google Scholar]

- Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. Journal of Neuroscience. 1999;19:5473–5481. doi: 10.1523/JNEUROSCI.19-13-05473.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Research Reviews. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8. [DOI] [PubMed] [Google Scholar]

- Bjork JM, Hommer D. Anticipating instrumentally obtained and passively-received rewards: A factorial fMRI investigation. Behavioural Brain Research. 2007;177:165–170. doi: 10.1016/j.bbr.2006.10.034.

- Bjork JM, Knutson B, Fong GW, Caggiano DM, Bennett SM, Hommer D. Incentive-elicited brain activation in adolescents: similarities and differences from young adults. Journal of Neuroscience. 2004;24:1793–1802. doi: 10.1523/JNEUROSCI.4862-03.2004.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychological Review. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: an update. Trends in Cognitive Sciences. 2004;8:539–546. doi: 10.1016/j.tics.2004.10.003.

- Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

- Breiter HC, Gollub RL, Weisskoff RM, Kennedy DN, Makris N, Berke JD, et al. Acute effects of cocaine on human brain activity and emotion. Neuron. 1997;19:591–611. doi: 10.1016/s0896-6273(00)80374-8.

- Brett M, Christoff K, Cusack R, Lancaster J. Using the Talairach atlas with the MNI template. Neuroimage. 2001;13:S85.

- Brown JW, Braver TS. Learned predictions of error likelihood in the anterior cingulate cortex. Science. 2005;307:1118–1121. doi: 10.1126/science.1105783.

- Bush G, Vogt BA, Holmes J, Dale AM, Greve D, Jenike MA, et al. Dorsal anterior cingulate cortex: a role in reward-based decision making. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:523–528. doi: 10.1073/pnas.012470999.

- Carver CS, White TL. Behavioral inhibition, behavioral activation, and affective responses to impending reward and punishment: The BIS/BAS Scales. Journal of Personality & Social Psychology. 1994;67:319–333.

- Chapman LJ, Chapman JP. The measurement of handedness. Brain and Cognition. 1987;6:175–183. doi: 10.1016/0278-2626(87)90118-7.

- Constable RT, Spencer DD. Composite image formation in z-shimmed functional MR imaging. Magnetic Resonance in Medicine. 1999;42:110–117. doi: 10.1002/(sici)1522-2594(199907)42:1<110::aid-mrm15>3.0.co;2-3.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Dale AM. Optimal experimental design for event-related fMRI. Human Brain Mapping. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Deichmann R, Gottfried JA, Hutton C, Turner R. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage. 2003;19:430–441. doi: 10.1016/s1053-8119(03)00073-9.

- Epstein J, Pan H, Kocsis JH, Yang Y, Butler T, Chusid J, et al. Lack of ventral striatal response to positive stimuli in depressed versus normal subjects. American Journal of Psychiatry. 2006;163:1784–1790. doi: 10.1176/ajp.2006.163.10.1784.

- Erk S, Spitzer M, Wunderlich AP, Galley L, Walter H. Cultural objects modulate reward circuitry. Neuroreport. 2002;13:2499–2503. doi: 10.1097/00001756-200212200-00024.

- Fisher RA. Statistical methods for research workers. 14th ed. New York: Hafner Publishing Company; 1973.

- Fissell K, Tseytlin E, Cunningham D, Carter CS, Schneider W, Cohen JD. Fiswidgets: A graphical computing environment for neuroimaging analysis. Neuroinformatics. 2003;1:111–125. doi: 10.1385/ni:1:1:111.

- Friston KJ, Holmes AP, Worsley KJ. How many subjects constitute a study? Neuroimage. 1999;10:1–5. doi: 10.1006/nimg.1999.0439.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995;2:189–210.

- Gard DE, Gard MG, Kring AM, John OP. Anticipatory and consummatory components of the experience of pleasure: A scale development study. Journal of Research in Personality. 2006;40:1086–1102.

- Gottfried JA, Deichmann R, Winston JS, Dolan RJ. Functional heterogeneity in human olfactory cortex: an event-related functional magnetic resonance imaging study. Journal of Neuroscience. 2002;22:10819–10828. doi: 10.1523/JNEUROSCI.22-24-10819.2002.

- Grill-Spector K. The functional organization of the ventral visual pathway and its relationship to object recognition. In: Kanwisher N, Duncan J, editors. Attention and Performance XX: Functional Brain Imaging of Visual Cognition. London, England: Oxford University Press; 2003. pp. 169–193.

- Holmes A, Poline JB, Friston KJ. Characterizing brain images with the general linear model. In: Frackowiak RSJ, Friston KJ, Frith CD, Dolan RJ, Mazziotta JC, editors. Human Brain Function. San Diego: Academic Press; 1997. pp. 59–84.

- Holroyd CB, Coles MGH. The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychological Review. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- Holroyd CB, Nieuwenhuis S, Yeung N, Nystrom L, Mars RB, Coles MGH, et al. Dorsal anterior cingulate cortex shows fMRI response to internal and external error signals. Nature Neuroscience. 2004;7:497–498. doi: 10.1038/nn1238.

- Hommer DW, Knutson B, Fong GW, Bennett S, Adams CM, Varner JL. Amygdalar recruitment during anticipation of monetary rewards: an event-related fMRI study. Annals of the New York Academy of Sciences. 2003;985:476–478. doi: 10.1111/j.1749-6632.2003.tb07103.x.

- Hyde JS, Biswal BB, Jesmanowicz A. High-resolution fMRI using multislice partial k-space GR-EPI with cubic voxels. Magnetic Resonance in Medicine. 2001;46:114–125. doi: 10.1002/mrm.1166.

- Ikemoto S, Panksepp J. The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Research Reviews. 1999;31:6–41. doi: 10.1016/s0165-0173(99)00023-5.

- International Neuroimaging Consortium. Interactive Talairach Atlas. 2006. University of Minnesota NEUROVIA. Website: http://www.neurovia.umn.edu/webservice/tal_atlas.html.

- Jensen J, McIntosh AR, Crawley AP, Mikulis DJ, Remington G, Kapur S. Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron. 2003;40:1251–1257. doi: 10.1016/s0896-6273(03)00724-4.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–291.

- Keedwell PA, Andrew C, Williams SCR, Brammer MJ, Phillips ML. A double dissociation of ventromedial prefrontal cortical responses to sad and happy stimuli in depressed and healthy individuals. Biological Psychiatry. 2005;58:495–503. doi: 10.1016/j.biopsych.2005.04.035.

- Kim H, Shimojo S, O’Doherty JP. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biology. 2006;4:1453–1461. doi: 10.1371/journal.pbio.0040233.

- Kirsch P, Schienle A, Stark R, Sammer G, Blecker C, Walter B, et al. Anticipation of reward in a nonaversive differential conditioning paradigm and the brain reward system: an event-related fMRI study. Neuroimage. 2003;20:1086–1095. doi: 10.1016/S1053-8119(03)00381-1.

- Knutson B, Bjork JM, Fong GW, Hommer D, Mattay VS, Weinberger DR. Amphetamine modulates human incentive processing. Neuron. 2004;43:261–269. doi: 10.1016/j.neuron.2004.06.030.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. Journal of Neuroscience. 2001a;21:RC159. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Knutson B, Cooper JC. Functional magnetic resonance imaging of reward prediction. Current Opinion in Neurology. 2005;18:411–417. doi: 10.1097/01.wco.0000173463.24758.f6.

- Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001b;12:3683–3687. doi: 10.1097/00001756-200112040-00016.

- Knutson B, Fong GW, Bennett SM, Adams CM, Hommer D. A region of mesial prefrontal cortex tracks monetarily rewarding outcomes: characterization with rapid event-related fMRI. Neuroimage. 2003;18:263–272. doi: 10.1016/s1053-8119(02)00057-5.

- Magno E, Foxe JJ, Molholm S, Robertson IH, Garavan H. The anterior cingulate and error avoidance. Journal of Neuroscience. 2006;26:4769–4773. doi: 10.1523/JNEUROSCI.0369-06.2006.

- Mayberg HS. Limbic-cortical dysregulation: A proposed model of depression. Journal of Neuropsychiatry & Clinical Neurosciences. 1997;9:471–481. doi: 10.1176/jnp.9.3.471.

- Meehl PE. Hedonic capacity: some conjectures. Bulletin of the Menninger Clinic. 1975;39:295–307.

- Mobbs D, Greicius MD, Abdel-Azim E, Menon V, Reiss AL. Humor modulates the mesolimbic reward centers. Neuron. 2003;40:1041–1048. doi: 10.1016/s0896-6273(03)00751-7.

- Murtha S, Chertkow H, Beauregard M, Dixon R, Evans A. Anticipation causes increased blood flow to the anterior cingulate cortex. Human Brain Mapping. 1996;4:103–112. doi: 10.1002/(SICI)1097-0193(1996)4:2<103::AID-HBM2>3.0.CO;2-7.

- Nishijo H, Yamamoto Y, Ono T, Uwano T, Yamashita J, Yamashima T. Single neuron responses in the monkey anterior cingulate cortex during visual discrimination. Neuroscience Letters. 1997;227:79–82. doi: 10.1016/s0304-3940(97)00310-8.

- O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nature Neuroscience. 2001;4:95–102. doi: 10.1038/82959.

- Pizzagalli DA, Oakes TR, Fox AS, Chung MK, Larson CL, Abercrombie HC, et al. Functional but not structural subgenual prefrontal cortex abnormalities in melancholia. Molecular Psychiatry. 2004;9:393–405. doi: 10.1038/sj.mp.4001501.

- Pizzagalli DA, Jahn AL, O’Shea JP. Toward an objective characterization of an anhedonic phenotype: A signal-detection approach. Biological Psychiatry. 2005;57:319–327. doi: 10.1016/j.biopsych.2004.11.026.

- Robinson TE, Berridge KC. Addiction. Annual Review of Psychology. 2003;54:25–53. doi: 10.1146/annurev.psych.54.101601.145237.

- Rolls ET. The orbitofrontal cortex. Philosophical Transactions of the Royal Society of London - Series B: Biological Sciences. 1996;351:1433–1443. doi: 10.1098/rstb.1996.0128.

- Rolls ET, O’Doherty J, Kringelbach ML, Francis S, Bowtell R, McGlone F. Representations of pleasant and painful touch in the human orbitofrontal and cingulate cortices. Cerebral Cortex. 2003;13:308–317. doi: 10.1093/cercor/13.3.308.

- Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. Journal of Neuroscience. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999.

- Schultz W. Predictive reward signal of dopamine neurons. Journal of Neurophysiology. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Schultz W. Multiple reward signals in the brain. Nature Reviews Neuroscience. 2000;1:199–207. doi: 10.1038/35044563.

- Seymour B, Daw N, Dayan P, Singer T, Dolan R. Differential encoding of losses and gains in the human striatum. Journal of Neuroscience. 2007;27:4826–4831. doi: 10.1523/JNEUROSCI.0400-07.2007.

- Slotnick SD, Schacter DL. A sensory signature that distinguishes true from false memories. Nature Neuroscience. 2004;7:664–672. doi: 10.1038/nn1252.

- Spicer J, Galvan A, Hare TA, Voss H, Glover G, Casey BJ. Sensitivity of the nucleus accumbens to violations in expectation of reward. Neuroimage. 2007;34:455–461. doi: 10.1016/j.neuroimage.2006.09.012.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. 1st ed. Stuttgart: Thieme; 1988.

- Thut G, Schultz W, Roelcke U, Nienhusmeier M, Missimer J, Maguire RP, et al. Activation of the human brain by monetary reward. Neuroreport. 1997;8:1225–1228. doi: 10.1097/00001756-199703240-00033.

- Tobler PN, O’Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. Journal of Neurophysiology. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Ungless MA. Dopamine: the salient issue. Trends in Neurosciences. 2004;27:702–706. doi: 10.1016/j.tins.2004.10.001.

- Ursu S, Carter CS. Outcome representations, counterfactual comparisons and the human orbitofrontal cortex: implications for neuroimaging studies of decision-making. Cognitive Brain Research. 2005;23:51–60. doi: 10.1016/j.cogbrainres.2005.01.004.