Abstract

Microscopy of cells has changed dramatically since its early days in the mid-seventeenth century. Image analysis has concurrently evolved from measurements of hand drawings and still photographs to computational methods that (semi-)automatically quantify objects, distances, concentrations, and velocities of cells and subcellular structures. Today's imaging technologies generate a wealth of data that requires visualization and multidimensional, quantitative image analysis as prerequisites for turning qualitative data into quantitative values. Such quantitative data provide the basis for mathematical modeling of protein kinetics and biochemical signaling networks that, in turn, open the way toward a quantitative view of cell biology. Here, we review technologies for analyzing and reconstructing dynamic structures and processes in the living cell. We present live-cell studies that would have been impossible without computational imaging. These applications illustrate the potential of computational imaging to enhance our knowledge of the dynamics of cellular structures and processes.

Dynamic processes are at the very basis of cellular function. In an attempt to understand these processes, cellular structures have been studied in fixed and living specimens by various microscopic techniques including phase contrast, differential interference contrast, and confocal microscopy. Fluorescent dyes such as fluorescein and rhodamine, together with recombinant fluorescent protein technology (Chalfie et al., 1994) and voltage-sensitive (Loew, 1992) and pH-sensitive dyes (Adie et al., 2002), allow virtually any cellular structure to be tagged. In combination with live-cell techniques such as fluorescence recovery after photobleaching (FRAP; Axelrod et al., 1976) and fluorescence resonance energy transfer (FRET; Sekar and Periasamy, 2003), it is now possible to obtain spatio-temporal, biochemical, and biophysical information about the cell in a manner not imaginable before.

Evolution of quantitative live-cell microscopy

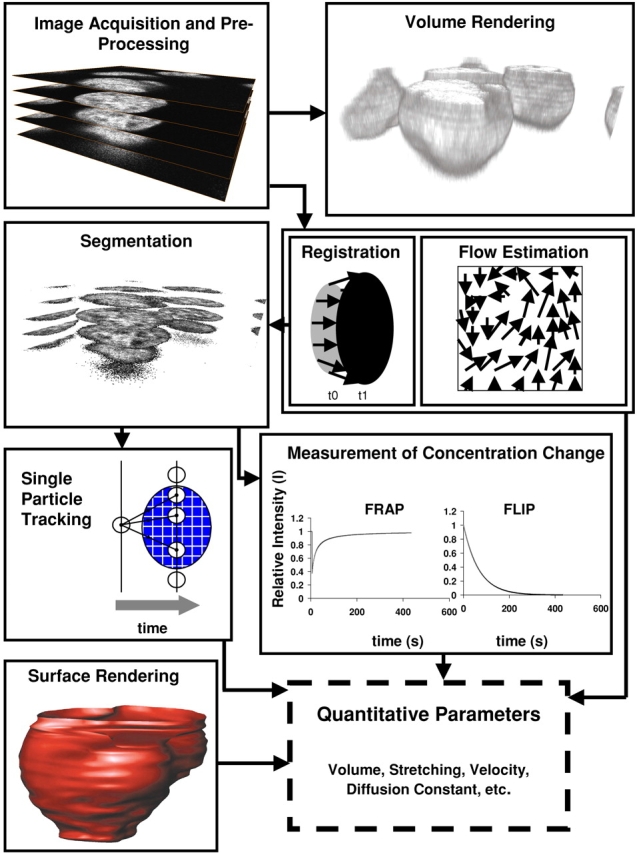

Live-cell image analysis started with the earliest microscopists. Although most of these measurements were based on manual inspection and intervention, with the advent of fluorescence microscopy, many studies also involved quantitative imaging of living cells using either video or CCD cameras (Inoue, 1981; Allen and Allen, 1983). In the early years of live-cell microscopy, methods for segmentation and tracking of cells (Berg and Brown, 1972; Berns and Berns, 1982) were rapidly developed and adapted from other areas. Nowadays, techniques for fully automated analysis and time–space visualization of time series from living cells involve either segmentation and tracking of individual structures, or continuous motion estimation (for an overview, see Fig. 1). For tracking a large number of small particles that move individually and independently from each other, single particle tracking approaches are most appropriate (Qian et al., 1991).

Figure 1.

The typical workflow in computational imaging is presented. Once the images have been acquired by microscopy and preprocessed to improve the signal-to-noise ratio, they can be directly visualized by methods like volume rendering. For multiple objects in motion, single particle tracking, in which a particle is tracked over different time-steps, is the most direct method used. It provides access to parameters such as velocity, acceleration, and diffusion coefficients. Segmentation is the basis for both surface rendering and kinetic measurements. Surface rendering is obtained after segmentation of contours in each individual section and gives rise to volumetric measurements such as volume and surface area. Measurements of concentration changes for segmented areas in FRAP or fluorescence loss in photobleaching experiments give rise to estimates of kinetic parameters such as diffusion and binding coefficients. Image registration is used to measure elastic or rigid changes of form. It is also often used to correct for global movement before further quantitative analysis. The estimation of flow of gray values is an approach to quantify mobility in continuous space. All these processes lead to accurate estimates of quantitative parameters.

For the determination of more complex movement, two independent approaches were initially developed but have recently been merged. Optical flow methods (Mitiche and Bouthemy, 1996) estimate the local motion directly from local gray value changes in image sequences. Image registration (Terzopoulos et al., 1991; Lavallee and Szeliski, 1995) aims at identifying and allocating certain objects in the real world as they appear in an internal computer model. The main application of image registration in cell biology is the automated correction of rotational and translational movements over time (rigid transformation). This allows the identification of local dynamics, in particular when the movement is a result of the superposition of two or more independent dynamics. Registration also helps to identify global movements when local changes are artifacts and should be neglected.
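As a minimal illustration of estimating motion directly from gray value changes, the following sketch applies the brightness-constancy relation, I_t + u I_x = 0, to a 1-D intensity profile and solves for a single global shift u by least squares. It is not the method of any of the cited studies; the function names `estimate_shift_1d` and `gaussian_profile`, the Gaussian test profile, and the restriction to one spatial dimension are assumptions made to keep the example short.

```python
import math

def estimate_shift_1d(frame0, frame1):
    """Least-squares estimate of one global shift (in pixels) between two profiles.

    Assumes brightness constancy, I_t + u * I_x = 0, and a shift that is
    small compared with the width of the imaged structure.
    """
    num = 0.0
    den = 0.0
    for x in range(1, len(frame0) - 1):
        # Spatial gradient: central differences, averaged over both frames.
        gx0 = (frame0[x + 1] - frame0[x - 1]) / 2.0
        gx1 = (frame1[x + 1] - frame1[x - 1]) / 2.0
        gx = 0.5 * (gx0 + gx1)
        # Temporal gradient: gray value change at a fixed pixel.
        gt = frame1[x] - frame0[x]
        num += gx * gt
        den += gx * gx
    if den == 0.0:
        raise ValueError("profile has no gradient; motion cannot be estimated")
    return -num / den

def gaussian_profile(center, sigma=3.0, n=41):
    """Hypothetical blurred spot sampled on n pixels."""
    return [math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2)) for x in range(n)]

# Hypothetical data: a blurred spot that moves 0.5 pixel to the right.
true_shift = 0.5
u = estimate_shift_1d(gaussian_profile(20.0), gaussian_profile(20.0 + true_shift))
print(f"estimated shift: {u:.3f} px (true shift: {true_shift} px)")
```

The estimate is only approximate because the gradients are finite differences; practical optical flow methods estimate a spatially varying vector field and add smoothness or local-window constraints.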

Single particle tracking

The basic principle of single particle tracking is to find for each object in a given time frame its corresponding object in the next frame. The correspondence is based on object features, nearest neighbor information, or other inter-object relationships. Object features can be dynamic criteria such as displacement and acceleration of an object as well as area/volume or mean gray value of the object. Optical flow has been defined as the motion flow (i.e., the motion vector field) that is derived from two consecutive images in a time series (Jähne, 2002). If optical flow is continuous, corresponding objects in subsequent images should be similar. However, due to high levels of noise, this assumption is usually distorted, and standard region-based matching techniques give unsatisfactory results (Anandan, 1989). A more reliable tracking approach involves fuzzy logic-based analysis of the tracking parameters (Tvarusko et al., 1999).
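The correspondence step described above can be sketched with the simplest possible criterion, the nearest neighbor within a maximum allowed displacement. This is an illustrative sketch only, not the fuzzy logic approach of Tvarusko et al. (1999); the function name `link_nearest_neighbor`, the greedy matching strategy, and the `max_disp` parameter are assumptions.

```python
import math

def link_nearest_neighbor(prev_pts, next_pts, max_disp):
    """Greedily link objects in one frame to objects in the next frame.

    Returns a dict {index in prev_pts: index in next_pts}. Each object is
    used at most once; objects with no partner within max_disp stay unlinked.
    """
    candidates = []
    for i, p in enumerate(prev_pts):
        for j, q in enumerate(next_pts):
            d = math.dist(p, q)
            if d <= max_disp:
                candidates.append((d, i, j))
    candidates.sort()  # shortest displacements are assigned first
    links = {}
    used_next = set()
    for d, i, j in candidates:
        if i not in links and j not in used_next:
            links[i] = j
            used_next.add(j)
    return links

# Hypothetical object centroids in two consecutive frames.
frame_t = [(1.0, 1.0), (5.0, 5.0), (9.0, 2.0)]
frame_t1 = [(5.4, 5.1), (1.2, 0.9), (20.0, 20.0)]
links = link_nearest_neighbor(frame_t, frame_t1, max_disp=2.0)
print(links)  # {0: 1, 1: 0}; object 2 has no partner within max_disp
```

Additional object features such as area or mean gray value could be folded into the distance used for sorting; with noisy data, a purely distance-based criterion like this one is exactly the kind of matching that tends to fail.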

Image registration

Image registration enables a computer to “register” (apprehend and allocate) certain objects in the real world as they appear in an internal computer model. Initially, only rigid transformations were used to superimpose the images, whereas nowadays, research is focused on the integration of local deformations.

A parametric image registration algorithm specifies the parameters of a transformation in such a way that physically corresponding points at two consecutive time steps are brought as close together as possible. Such algorithms have been broadly studied in medical imaging and cell biology (Maintz and Viergever, 1998; Bornfleth et al., 1999). Whereas one class of algorithms operates on previously extracted surface points (Lavallee and Szeliski, 1995), other algorithms register the images directly based on the gray-value changes. Nonrigid deformations, i.e., transformations other than rotation and translation, are an active area of research in computer vision. Nonrigid approaches differ with respect to the underlying motion model (Terzopoulos et al., 1991; Szeliski, 1996). Most commonly, a cost or error function is defined and an optimization method is chosen that iteratively adjusts the parameters until an optimum has been achieved. Other approaches extract specific features (e.g., correspondence between points) that serve as a basis for directly calculating the model parameters (Arun et al., 1987; Rohr, 1997).
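When point correspondences are already known, the parameters of a rigid transformation can be calculated directly rather than by iterative optimization. The sketch below shows a closed-form least-squares fit in 2-D. It is a simplified planar analogue and not the 3-D procedure of Arun et al. (1987); the function names `fit_rigid_2d` and `apply_rigid_2d` and the landmark coordinates are hypothetical.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst.

    src and dst are equal-length lists of (x, y) tuples in corresponding order.
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two corresponding point pairs")
    cx_s = sum(p[0] for p in src) / n
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(p[0] for p in dst) / n
    cy_d = sum(p[1] for p in dst) / n
    # Accumulate dot and cross products of the centered coordinates.
    dot = 0.0
    cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        xs, ys = xs - cx_s, ys - cy_s
        xd, yd = xd - cx_d, yd - cy_d
        dot += xs * xd + ys * yd
        cross += xs * yd - ys * xd
    theta = math.atan2(cross, dot)
    # Translation that maps the rotated source centroid onto the target centroid.
    c, s = math.cos(theta), math.sin(theta)
    tx = cx_d - (c * cx_s - s * cy_s)
    ty = cy_d - (s * cx_s + c * cy_s)
    return theta, (tx, ty)

def apply_rigid_2d(points, theta, t):
    """Rotate points by theta (radians) about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]

# Hypothetical landmarks at one time step, and the same landmarks after the
# specimen rotated by 30 degrees and drifted by (2, -1).
landmarks_t = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (1.0, 5.0)]
landmarks_t1 = apply_rigid_2d(landmarks_t, math.radians(30.0), (2.0, -1.0))

theta, shift = fit_rigid_2d(landmarks_t, landmarks_t1)
print(f"rotation: {math.degrees(theta):.2f} deg, translation: {shift}")
```

Applying the inverse of the fitted transformation to the later time step removes the global movement, so that any remaining displacement reflects local dynamics.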

Computer vision

Computer vision is a discipline that focuses on information extraction from the output of optical sensors, and on the representation of this information in an internal computer model (Faugeras, 1993). A computer vision framework for detecting and tracking diffraction images of linear structures in differential interference contrast microscopy was developed for measuring deflections of clamped microtubules with a freely moving second end (Danuser et al., 2000). Based on measurements of thermal fluctuations, it was possible to derive the elasticity of the microtubule. Further, prior knowledge based on geometric and dynamic models of the scene can lead to restoration of information beyond the resolution limit of an imaging system (Danuser, 2001). This super-resolution concept was illustrated by the stereo reconstruction of a micropipette moving in close proximity to a stationary target object.

Visualization

Complex dynamic processes in cells should ideally be studied in three spatial dimensions over time. Thereby, large and complex data sets typically consisting of 5,000–10,000 single images are generated. Such data are virtually impossible to interpret without computational tools for visual inspection in space and time.

Typically, 3-D images have been represented as stereoscopic pairs or as anaglyphs by the pixel shift method (White, 1995). Displaying time series as movies is still a widely used method for visual interpretation. For fast-moving objects such as trafficking vesicles imaged with high time resolution, time-lapse movies are very informative. However, for much slower nuclear processes or for processes with mixed kinetics that need to be observed over a longer period of time, the total number of time points for imaging is limited due to the phototoxicity of the light exposure during in vivo observation (Konig et al., 1996). Therefore, an interpolation between consecutive time steps is required to reconstruct intermediate time steps. As a “side effect,” additional information about the continuous development of the observed processes between the imaged time steps (subpixel resolution in time) is achieved, and quantitative information can be derived (see next section).

Although early studies explored 4-D data sets by simply browsing through an image gallery and highlighting interactively selected structures (Thomas et al., 1996), better visualization of 4-D imaging data is achieved by computer graphics. Two commonly used rendering algorithms for displaying 3-D structures are volume rendering and surface rendering (Chen et al., 1995; Fig. 1). Volume rendering is a technique for visualizing complex 3-D data sets without explicit definition of surface geometry. Volume visualization is achieved in three steps: classification, shading, and projection. The classification step assigns a level of opacity, contrast, and color to each voxel in the 3-D volume (e.g., Wright et al., 1993). Then, shading techniques are used to simulate both the object surface characteristics and the position and orientation of surfaces with respect to light sources and the observer. The colored, semitransparent volume is then projected onto a plane perpendicular to the observer's viewing direction. A ray is cast into the volume through each grid point on the projection plane. As the ray progresses through the volume, the color and opacity are computed at evenly spaced sample locations and accumulated to yield a single pixel color. Although volume rendering techniques provide a satisfactory display of biological structures, this method is limited to pure visualization and does not deliver quantitative information. In addition, the high anisotropy typical for live-cell imaging with low z-resolution limits the quality of this visualization technique.
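The projection step for a single ray can be sketched as front-to-back compositing of already classified samples. This is a minimal sketch for one gray-value channel under the assumption that classification and shading have been done beforehand; the function name `composite_ray`, the `opacity_cutoff` parameter, and the sample values are hypothetical.

```python
def composite_ray(samples, opacity_cutoff=0.999):
    """Front-to-back compositing of (gray_value, opacity) samples along one ray.

    Returns the accumulated pixel value and the accumulated opacity.
    """
    color = 0.0
    alpha = 0.0
    for value, opacity in samples:
        # Each sample contributes in proportion to the light not yet absorbed.
        weight = (1.0 - alpha) * opacity
        color += weight * value
        alpha += weight
        if alpha >= opacity_cutoff:
            break  # early ray termination: later samples are hidden
    return color, alpha

# Hypothetical classified samples, ordered from the observer into the volume.
ray = [(1.0, 0.5), (0.5, 0.5), (0.0, 1.0), (1.0, 1.0)]
pixel, total_opacity = composite_ray(ray)
print(pixel, total_opacity)  # 0.625 1.0
```

The last sample never contributes because the fully opaque third sample hides it, which is why the result is a display value rather than a measurement of the underlying structure.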

These limitations are overcome by surface-rendering techniques, where the object surface is represented by polygons. The polygonal surface is displayed by projecting all the polygons onto a plane that is perpendicular to a selected viewing direction. The user can examine the displayed structure by changing the viewing direction interactively. The most commonly used method to triangulate the 3-D surface is the Marching Cubes algorithm (Cline et al., 1988). The 3-D structure is defined by a threshold value throughout the data set, constructing an isosurface. The drawback of this method is that the surface of many biological structures cannot be defined using a single intensity value, resulting in loss of relevant information.

Quantitative image analysis

A great advantage of the combination of segmentation and surface reconstruction is the immediate access to quantitative information that corresponds to visual data (Eils et al., 1996; Gerlich et al., 2001; Gebhard et al., 2002). These approaches were designed to deal particularly with the high degree of anisotropy typical for 4-D live-cell recordings and to directly estimate quantitative parameters. For example, the gray values in the segmented area of corresponding images can be measured to determine the amount and concentration of fluorescently labeled proteins in the segmented cellular compartments.
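To make the kind of measurement concrete, the following sketch counts the voxels of a thresholded object in an anisotropic stack and reports its volume and gray-value statistics. It uses plain voxel counting with a single global threshold, which is a much cruder segmentation than the surface reconstruction approaches cited above; the function name `measure_segmented_object`, the threshold, and the voxel dimensions are assumptions.

```python
def measure_segmented_object(stack, threshold, voxel_size):
    """Volume and intensity statistics of all voxels at or above a threshold.

    stack      : nested lists indexed as stack[z][y][x] (gray values)
    voxel_size : (dz, dy, dx) in physical units, e.g., micrometers
    """
    dz, dy, dx = voxel_size
    count = 0
    total = 0.0
    for plane in stack:
        for row in plane:
            for value in row:
                if value >= threshold:
                    count += 1
                    total += value
    return {
        "voxels": count,
        "volume": count * dz * dy * dx,
        "total_intensity": total,
        "mean_intensity": total / count if count else 0.0,
    }

# Hypothetical 2 x 3 x 3 stack; sampling in z (0.5) is coarser than in xy (0.1).
stack = [
    [[0, 10, 0], [10, 50, 10], [0, 10, 0]],
    [[0, 0, 0], [0, 40, 0], [0, 0, 0]],
]
result = measure_segmented_object(stack, threshold=30, voxel_size=(0.5, 0.1, 0.1))
print(result)
```

Here the total intensity stands in for the amount of labeled protein and the mean intensity for its concentration, under the assumption that gray values scale linearly with fluorophore concentration.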

Measurements of concentration changes by FRAP and fluorescence loss in photobleaching have become standard methods to evaluate diffusion, binding, and trafficking in live cells (for review, see Phair and Misteli, 2001). These methods give direct access to kinetic parameters such as the diffusion coefficient of molecules (Axelrod et al., 1976; Edidin et al., 1976; Siggia et al., 2000) or exchange rates of molecules between different compartments (Hirschberg et al., 1998; Phair and Misteli, 2001). In combination with motion estimation techniques, parameters such as the velocity of the mass center for individual objects or for each point on the object surface can be readily accessed. Further, local parameters such as acceleration, tension, or bending (Bookstein, 1989) can be estimated. During motion estimation, global quantities such as the parameters of rotation and translation are also estimated (Germain et al., 1999). The evolution of these quantities over time can be used to characterize and analyze the observed motion.
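Before any kinetic model is fitted, a FRAP curve is often summarized by two model-free descriptors, the mobile fraction and the half-time of recovery. The sketch below computes both by linear interpolation. It assumes that the curve has reached its plateau by the last sample and that acquisition bleaching has already been corrected; the function name `frap_summary` and the example values are hypothetical. Converting the half-time into a diffusion coefficient requires a model of the bleach geometry, which is not shown.

```python
def frap_summary(times, intensities, prebleach):
    """Mobile fraction and recovery half-time from a FRAP curve.

    times, intensities : post-bleach samples; the first sample is taken
                         immediately after the bleach
    prebleach          : mean intensity of the region before bleaching
    Assumes the curve has reached its plateau by the last sample.
    """
    f0 = intensities[0]
    f_end = intensities[-1]
    mobile_fraction = (f_end - f0) / (prebleach - f0)
    half_level = f0 + 0.5 * (f_end - f0)
    # Find the first interval that crosses the half-recovery level and
    # interpolate linearly within it.
    for k in range(1, len(times)):
        lo, hi = intensities[k - 1], intensities[k]
        if lo < half_level <= hi:
            frac = (half_level - lo) / (hi - lo)
            t_half = times[k - 1] + frac * (times[k] - times[k - 1]) - times[0]
            return mobile_fraction, t_half
    raise ValueError("curve never crosses the half-recovery level")

# Hypothetical normalized recovery curve (prebleach intensity = 1.0).
t = [0.0, 1.0, 2.0, 4.0, 8.0, 16.0]
f = [0.2, 0.4, 0.5, 0.6, 0.68, 0.7]
mobile, t_half = frap_summary(t, f, prebleach=1.0)
print(f"mobile fraction: {mobile:.3f}, half-time: {t_half:.2f} s")
```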

Statistical analysis of velocity histograms can be applied to compute peak velocities corresponding to the most frequently occurring velocity values (Uttenweiler et al., 2000). Additionally, the dynamics of different objects can be compared by their velocity histograms. An alternative technique for statistical analysis is the confinement tree analysis of the intensity image (Mattes et al., 2001). For different threshold levels, objects (confiners) are segmented in the image. Calculated for different levels, the confiners define a confinement tree. Besides the estimation of global quantitative values (e.g., the global homogeneity of the motion), this approach allows the analysis and comparison of movements.
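A velocity histogram and its peak can be obtained from tracked positions in a few lines. This is a minimal sketch with fixed-width bins, not the analysis pipeline of Uttenweiler et al. (2000); the function names `speeds_from_track` and `histogram_peak`, the bin width, and the track coordinates are assumptions.

```python
import math

def speeds_from_track(track, dt):
    """Frame-to-frame speeds of one track given as a list of (x, y) positions."""
    return [math.dist(a, b) / dt for a, b in zip(track, track[1:])]

def histogram_peak(values, bin_width):
    """Return (bin_start, count) of the most populated fixed-width bin.

    Ties are resolved in favor of the lower bin.
    """
    counts = {}
    for v in values:
        index = int(v // bin_width)
        counts[index] = counts.get(index, 0) + 1
    best = max(counts, key=lambda i: (counts[i], -i))
    return best * bin_width, counts[best]

# Hypothetical track sampled every 2 s (positions in micrometers).
track = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.0, 1.0), (2.0, 4.0), (2.0, 5.0)]
speeds = speeds_from_track(track, dt=2.0)
peak_start, peak_count = histogram_peak(speeds, bin_width=0.25)
print(speeds)                  # [0.5, 0.5, 0.5, 1.5, 0.5]
print(peak_start, peak_count)  # 0.5 4
```

Computing one histogram per object, or per experimental condition, gives a simple way to compare the dynamics of different objects.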

A challenge for future work is to better understand the biomechanical behavior of cellular structures, e.g., cellular membranes, by fitting a biophysical model to the data—an approach already successfully implemented in various fields of medical image analysis (Ferrant et al., 2001).

Applications

In vivo images of GFP-tagged proteins combined with computational imaging have revealed the dynamic organization of various nuclear subcompartments in the interphase nucleus. One example is the dynamics of nuclear speckles. Live-cell microscopy images of labeled pre-mRNA splicing factors were examined for evidence of regulated dynamics by computational segmentation and tracking (Eils et al., 2000). It was shown that the velocity and morphology of speckles, as well as budding events, were related to transcriptional activity.

Studies on nuclear architecture have revealed that chromatin (Marshall et al., 1997) undergoes slow diffusional motion, and that this movement is confined to relatively small regions in the nucleus. Importantly, the constraint on diffusional motion is regulated throughout the cell cycle (Heun et al., 2001; Vazquez et al., 2001). A long-standing question has been whether nuclear compartments can also undergo directed, energy-dependent movements, thereby providing a potential mechanism of regulated gene expression. Computational imaging revealed that several nuclear subcompartments do undergo directional transport dependent on metabolic energy (Calapez et al., 2002; Muratani et al., 2002; Platani et al., 2002).
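One common way to distinguish confined from directed movement in tracking data is the mean squared displacement (MSD) as a function of time lag: it levels off for confined motion and keeps growing for directed transport. The sketch below is a generic illustration and does not reproduce the analyses of the cited studies; the function name `mean_squared_displacement` and both tracks are hypothetical.

```python
def mean_squared_displacement(track, max_lag):
    """Time-averaged MSD of one track for lags 1..max_lag (in frames).

    track is a list of (x, y) positions; max_lag must be smaller than
    the number of positions.
    """
    msd = []
    for lag in range(1, max_lag + 1):
        squared = [
            (x1 - x0) ** 2 + (y1 - y0) ** 2
            for (x0, y0), (x1, y1) in zip(track, track[lag:])
        ]
        msd.append(sum(squared) / len(squared))
    return msd

# Hypothetical track of a locus that jitters inside a small region.
confined = [(0, 0), (1, 0), (0, 0), (0, 1), (0, 0), (1, 0), (0, 0), (0, 1), (0, 0)]
# Hypothetical track of an object that moves steadily in one direction.
directed = [(k, 0) for k in range(9)]

msd_confined = mean_squared_displacement(confined, max_lag=4)
msd_directed = mean_squared_displacement(directed, max_lag=4)
print(msd_confined)  # stays bounded by the size of the region
print(msd_directed)  # [1.0, 4.0, 9.0, 16.0], grows with the square of the lag
```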

The role of dynamic tension in actin polymerization in motile cells was investigated by analyzing polarized light images of the flow of the actin network and the motion of actin bundles and filopodia in crawling neurons (Oldenbourg et al., 2000). In a study on nuclear envelope breakdown, quantification and visualization of four-channel images with labeled chromatin, lamin-B receptor, nucleoporin, and tubulin (Beaudouin et al., 2002) revealed that piercing of the nuclear envelope by spindle microtubules was the mechanism responsible for forming the initial hole during nuclear envelope breakdown. To further investigate stresses on the nuclear envelope during breakdown, a crosswise grid pattern was bleached onto the nuclear envelope before breakdown. Stresses detected during hole formation were compared for the different grid vertices with respect to the positions of the hole, thus providing information about localized stresses during the tearing process (Mattes et al., 2001). Conversely, the effect of stress on the morphology of cells has been measured using an experimental approach of imposing known stresses on cells in solid-state culture. Changes in height, width, volume, and surface area of the cell are measured from 3-D confocal microscopy images, helping to understand the mechano-transduction response (Guilak, 1995).

The positioning of chromosomes during the cell cycle was investigated in live mammalian cells with a combined experimental and computational approach. In contrast to the random behavior predicted by a computer model of chromosome dynamics, a striking order of chromosomes was observed throughout mitosis (Gerlich et al., 2003). Further, strong similarities between daughter and mother cells were found for mitotic single chromosome positioning. These results support the existence of an active mechanism that transmits chromosomal positions from one cell generation to the next.

Summary

Computational imaging has proven to be a powerful and integral part of cell biology. It provides an important building block for the description of biological phenomena on a quantitative level, which is a prerequisite for mathematical models of dynamic structures and processes in the cell. In combination with models of biochemical processes and regulatory networks, computational imaging as part of the emerging field of systems biology (Kitano, 2002) will lead to the identification of novel principles of cellular regulation derived from the huge amount of experimental data that are currently generated.

References

- Adie, E.J., S. Kalinka, L. Smith, M.J. Francis, A. Marenghi, M.E. Cooper, M. Briggs, N.P. Michael, G. Milligan, and S. Game. 2002. A pH-sensitive fluor, CypHer 5, used to monitor agonist-induced G protein-coupled receptor internalization in live cells. Biotechniques. 33:1152–1154, 1156–1157.

- Allen, R.D., and N.S. Allen. 1983. Video-enhanced microscopy with a computer frame memory. J. Microsc. 129:3–17.

- Anandan, P. 1989. A computational framework and an algorithm for the measurement of visual motion. Int. J. Comp. Vis. 2:283–310.

- Arun, K.S., T.S. Huang, and S.D. Blostein. 1987. Least square fitting of two 3D point sets. Transactions of Pattern Analysis and Machine Intelligence. 9:698–700.

- Axelrod, D., D.E. Koppel, J. Schlessinger, E. Elson, and W.W. Webb. 1976. Mobility measurement by analysis of fluorescence photobleaching recovery kinetics. Biophys. J. 16:1055–1069.

- Beaudouin, J., D. Gerlich, N. Daigle, R. Eils, and J. Ellenberg. 2002. Nuclear envelope breakdown proceeds by microtubule-induced tearing of the lamina. Cell. 108:83–96.

- Berg, H.C., and D.A. Brown. 1972. Chemotaxis in Escherichia coli analysed by three-dimensional tracking. Nature. 239:500–504.

- Berns, G.S., and M.W. Berns. 1982. Computer-based tracking of living cells. Exp. Cell Res. 142:103–109.

- Bookstein, F.L. 1989. Principal warps: thin-plate splines and the decomposition of deformations. Transactions of Pattern Analysis and Machine Intelligence. 11:567–585.

- Bornfleth, H., P. Edelmann, D. Zink, T. Cremer, and C. Cremer. 1999. Quantitative motion analysis of subchromosomal foci in living cells using four-dimensional microscopy. Biophys. J. 77:2871–2886.

- Calapez, A., H.M. Pereira, A. Calado, J. Braga, J. Rino, C. Carvalho, J.P. Tavanez, E. Wahle, A.C. Rosa, and M. Carmo-Fonseca. 2002. The intranuclear mobility of messenger RNA binding proteins is ATP dependent and temperature sensitive. J. Cell Biol. 159:795–805.

- Chalfie, M., Y. Tu, G. Euskirchen, W.W. Ward, and D.C. Prasher. 1994. Green fluorescent protein as a marker for gene expression. Science. 263:802–805.

- Chen, H., J.R. Swedlow, M. Grote, J.W. Sedat, and D.A. Agard. 1995. Handbook of biological confocal microscopy. Handbook of Biological Confocal Microscopy. J.B. Pawley, editor. Plenum Press, New York. 197–209.

- Cline, H.E., W.E. Lorensen, S. Ludke, C.R. Crawford, and B.C. Teeter. 1988. Two algorithms for the three-dimensional reconstruction of tomograms. Med. Phys. 15:320–327.

- Danuser, G. 2001. Super-resolution microscopy using normal flow decoding and geometric constraints. J. Microsc. 204:136–149.

- Danuser, G., P.T. Tran, and E.D. Salmon. 2000. Tracking differential interference contrast diffraction line images with nanometre sensitivity. J. Microsc. 198:34–53.

- Edidin, M., Y. Zagyansky, and T.J. Lardner. 1976. Measurement of membrane protein lateral diffusion in single cells. Science. 191:466–468.

- Eils, R., S. Dietzel, E. Bertin, E. Schrock, M.R. Speicher, T. Ried, M. Robert-Nicoud, C. Cremer, and T. Cremer. 1996. Three-dimensional reconstruction of painted human interphase chromosomes: active and inactive X chromosome territories have similar volumes but differ in shape and surface structure. J. Cell Biol. 135:1427–1440.

- Eils, R., D. Gerlich, W. Tvarusko, D.L. Spector, and T. Misteli. 2000. Quantitative imaging of pre-mRNA splicing factors in living cells. Mol. Biol. Cell. 11:413–418.

- Faugeras, O. 1993. Three-Dimensional Computer Vision. MIT Press, Cambridge, MA. 695 pp.

- Ferrant, M., A. Nabavi, B. Macq, F.A. Jolesz, R. Kikinis, and S.K. Warfield. 2001. Registration of 3-D intraoperative MR images of the brain using a finite-element biomechanical model. IEEE Trans. Med. Imaging. 20:1384–1397.

- Gebhard, M., R. Eils, and J. Mattes. 2002. Segmentation of 3D objects using NURBS surfaces for quantification of surface and volume dynamics. Conference on Diagnostic Imaging and Analysis (ICDIA). Shanghai, China 125–130.

- Gerlich, D., J. Beaudouin, M. Gebhard, J. Ellenberg, and R. Eils. 2001. Four-dimensional imaging and quantitative reconstruction to analyse complex spatiotemporal processes in live cells. Nat. Cell Biol. 3:852–855.

- Gerlich, D., J. Beaudouin, B. Kalbfuss, N. Daigle, R. Eils, and J. Ellenberg. 2003. Global chromosome positions are transmitted through mitosis in mammalian cells. Cell. 112:751–764.

- Germain, F., A. Doisy, X. Ronot, and P. Tracqui. 1999. Characterization of cell deformation and migration using a parametric estimation of image motion. IEEE Trans. Biomed. Eng. 46:584–600.

- Guilak, F. 1995. Compression-induced changes in the shape and volume of the chondrocyte nucleus. J. Biomech. 28:1529–1541.

- Heun, P., T. Laroche, K. Shimada, P. Furrer, and S.M. Gasser. 2001. Chromosome dynamics in the yeast interphase nucleus. Science. 294:2181–2186.

- Hirschberg, K., C.M. Miller, J. Ellenberg, J.F. Presley, E.D. Siggia, R.D. Phair, and J. Lippincott-Schwartz. 1998. Kinetic analysis of secretory protein traffic and characterization of golgi to plasma membrane transport intermediates in living cells. J. Cell Biol. 143:1485–1503.

- Inoue, S. 1981. Video image processing greatly enhances contrast, quality, and speed in polarization-based microscopy. J. Cell Biol. 89:346–356.

- Jähne, B. 2002. Digital Image Processing. Springer Verlag, New York.

- Kitano, H. 2002. Computational systems biology. Nature. 420:206–210.

- Konig, K., P.T. So, W.W. Mantulin, B.J. Tromberg, and E. Gratton. 1996. Two-photon excited lifetime imaging of autofluorescence in cells during UVA and NIR photostress. J. Microsc. 183:197–204.

- Lavallee, S., and R. Szeliski. 1995. Recovering the position and orientation of free-form objects from image contours using 3d distance maps. T-PAMI. 17:195–201.

- Loew, L.M. 1992. Voltage-sensitive dyes: measurement of membrane potentials induced by DC and AC electric fields. Bioelectromagnetics. 1:179–189.

- Maintz, J.B., and M.A. Viergever. 1998. A survey of medical image registration. Med. Image Anal. 2:1–36.

- Marshall, W.F., A. Straight, J.F. Marko, J. Swedlow, A. Dernburg, A. Belmont, A.W. Murray, D.A. Agard, and J.W. Sedat. 1997. Interphase chromosomes undergo constrained diffusional motion in living cells. Curr. Biol. 7:930–939.

- Mattes, J., J. Fieres, J. Beaudouin, D. Gerlich, J. Ellenberg, and R. Eils. 2001. New tools for visualization and quantification in dynamic processes: Application to the nuclear envelope dynamics during mitosis. MICCAI 2001 Lecture Notes in Computer Science. 2208:1323–1325.

- Mitiche, A., and P. Bouthemy. 1996. Computation and analysis of image motion: a synopsis of current problems and methods. Int. J. Comp. Vis. 19:29–55.

- Muratani, M., D. Gerlich, S.M. Janicki, M. Gebhard, R. Eils, and D.L. Spector. 2002. Metabolic-energy-dependent movement of PML bodies within the mammalian cell nucleus. Nat. Cell Biol. 4:106–110.

- Oldenbourg, R., K. Katoh, and G. Danuser. 2000. Mechanism of lateral movement of filopodia and radial actin bundles across neuronal growth cones. Biophys. J. 78:1176–1182.

- Phair, R.D., and T. Misteli. 2001. Kinetic modelling approaches to in vivo imaging. Nat. Rev. Mol. Cell Biol. 2:898–907.

- Platani, M., I. Goldberg, A.I. Lamond, and J.R. Swedlow. 2002. Cajal body dynamics and association with chromatin are ATP-dependent. Nat. Cell Biol. 4:502–508.

- Qian, H., M.P. Sheetz, and E.L. Elson. 1991. Single particle tracking. Analysis of diffusion and flow in two-dimensional systems. Biophys. J. 60:910–921.

- Rohr, K. 1997. On 3D differential operators for detecting point landmarks. Image and Vision Computing. 15:219–233.

- Sekar, R.B., and A. Periasamy. 2003. Fluorescence resonance energy transfer (FRET) microscopy imaging of live cell protein localizations. J. Cell Biol. 160:629–633.

- Siggia, E.D., J. Lippincott-Schwartz, and S. Bekiranov. 2000. Diffusion in inhomogeneous media: theory and simulations applied to whole cell photobleach recovery. Biophys. J. 79:1761–1770.

- Szeliski, R. 1996. Video mosaics for virtual environments. IEEE Computer Graphics and Applications. 16:22–30.

- Terzopoulos, D., J. Platt, and K. Fleischer. 1991. Heating and melting deformable models. The Journal of Visualization and Computer Animation. 2:68–73.

- Thomas, C., P. DeVries, J. Hardin, and J. White. 1996. Four-dimensional imaging: computer visualization of 3D movements in living specimens. Science. 273:603–607.

- Tvarusko, W., M. Bentele, T. Misteli, R. Rudolf, C. Kaether, D.L. Spector, H.H. Gerdes, and R. Eils. 1999. Time-resolved analysis and visualization of dynamic processes in living cells. Proc. Natl. Acad. Sci. USA. 96:7950–7955.

- Uttenweiler, D., C. Veigel, R. Steubing, C. Gotz, S. Mann, H. Haussecker, B. Jahne, and R.H. Fink. 2000. Motion determination in actin filament fluorescence images with a spatio-temporal orientation analysis method. Biophys. J. 78:2709–2715.

- Vazquez, J., A.S. Belmont, and J.W. Sedat. 2001. Multiple regimes of constrained chromosome motion are regulated in the interphase Drosophila nucleus. Curr. Biol. 11:1227–1239.

- White, N.S. 1995. Visualization systems for multidimensional CLSM images. Handbook of Biological Confocal Microscopy. J.B. Pawley, editor. Plenum Press, New York. 211–254.

- Wright, S.J., V.E. Centonze, S.A. Stricker, P.J. DeVries, S.W. Paddock, and G. Schatten. 1993. Introduction to confocal microscopy and three-dimensional reconstruction. Methods Cell Biol. 38:1–45.