Abstract

We investigated the influence of experimentally guided saccades and fixations on fMRI activation in brain regions specialized for face and object processing. Subjects viewed a static image of a face while a small fixation cross made a discrete jump within the image every 500 ms. Subjects were required to make a saccade and fixate the cross at its new location. Each run consisted of alternating blocks in which the subject was guided to make a series of saccades and fixations that constituted either a Typical or an Atypical face scanpath. A Typical scanpath was defined as one in which the fixation cross landed on the eyes or the mouth on 90% of all trials. An Atypical scanpath was defined as one in which the fixation cross landed on the eyes or mouth on 12% of all trials. The average saccade length was identical in typical and atypical blocks, and both were preceded by a baseline block in which the fixation cross made much smaller jumps in the middle of the screen. Within the functionally predefined face area of the ventral occipitotemporal cortex (VOTC), typical scanpaths evoked significantly more activity than atypical scanpaths. A voxel-based analysis revealed a similar pattern in clusters of voxels located within VOTC, frontal eye fields, superior colliculi, intraparietal sulcus, and inferior frontal gyrus. These results demonstrate that fMRI activation is highly sensitive to the pattern of eye movements employed during face processing, and thus illustrate the potential confounding influence of uncontrolled eye movements for neuroimaging studies of face and object perception in normal and clinical populations.

Keywords: fMRI, face perception, fusiform gyrus

INTRODUCTION

Humans typically view the faces of others with a stereotypical pattern of saccades and fixations, or scanpaths, that strongly favor core facial features such as the eyes (Walker-Smith et al., 1977; Luria and Strauss, 1978). An emerging literature suggests that such scanpaths are altered by factors such as familiarity (Rizzo et al., 1987) and emotional expression (Isaacowitz et al., 2006), and that scanpaths for viewing faces may be abnormal in such clinical disorders as autism (Schultz et al., 2000) and schizophrenia (Gur et al., 2002). This article is concerned with the influence of a subject's scanpath upon activation evoked by face images in the ventral occipitotemporal cortex (VOTC)—a region we define to encompass the fusiform, lingual, and inferior temporal gyri and interposed sulci. There are at least two reasons for examining the relationship between saccades and VOTC activation. The first concerns the goal of explicating VOTC functions, and the second concerns the possible confounding effects of systematically different scanpaths when evaluating reports of diminished face activation in clinical populations.

High-level visual processing of faces and objects has been studied in human VOTC using lesion analysis (Damasio et al., 1982; Bauer and Trobe, 1984), neuroimaging (Sergent, 1993; Haxby et al., 1994; Puce et al., 1995; Kanwisher et al., 1997), direct cortical stimulation (Allison et al., 1994a), and subdural cortical electrophysiology (Allison et al., 1994a, 1999; McCarthy et al., 1999; Puce et al., 1999). Cortical electrophysiological recordings in humans have demonstrated that regions within the VOTC respond selectively to faces and to letterstrings within ∼170–200 ms (Allison et al., 1994b). Perhaps due to the short latency of these domain-specific responses, and the fact that VOTC occurs just downstream of primary visual cortex in the anatomical sequence of visual processing, activity in this region measured by neuroimaging methods such as functional MRI (fMRI) and PET may also be interpreted implicitly to reflect early and local processing within the VOTC. PET and fMRI studies of face activation in the VOTC reveal activations that are strongly influenced by attention (Haxby et al., 1994; Clark et al., 1996; Wojciulik et al., 1998), emotional content (Vuilleumier et al., 2001), racial similarity (Kim et al., 2006) and familiarity (Henson et al., 2000). However, as scanpaths are also influenced by some of these same manipulations (Rizzo et al., 1987; Gothard et al., 2004; Isaacowitz et al., 2006), at least some high-order processing of faces may influence VOTC activation indirectly by causing a systematic change in a subject's scanpath of the face.

Diminished VOTC activation in response to faces has been reported for individuals with disorders such as autism (Schultz et al., 2000) and schizophrenia (Gur et al., 2002) compared to healthy controls. Scanpaths for faces have been reported to be abnormal in schizophrenia (Williams et al., 1999) and also in autism (Klin et al., 2002; Pelphrey et al., 2002). An important recent study reported that autistic individuals who made more fixations on the eyes of a displayed face had greater fusiform face activation than those with fewer such fixations (Dalton et al., 2005). No relationship was found between fixations upon the eyes and fusiform face activation in control subjects, but the authors suggested that controls may have been at ceiling for eye fixations, and recommended that an experimental manipulation of scanpaths be conducted to further investigate this issue.

We recently conducted such an experimental manipulation of subjects’ scanpaths as they viewed, in separate runs, one of three background images: a face, a flower, or a uniform gray field (Morris and McCarthy, 2006). A small fixation cross made discrete jumps every 500 ms within the background image, and subjects were required to make a saccade to the cross's new location and fixate. A block design was used that alternated small central scanpaths with large spatially extensive scanpaths of the same background image. We demonstrated that large saccades evoked substantially more activation within VOTC than small saccades for all background images. Furthermore, predefined face-specific regions of the fusiform gyrus (FFG) were activated more when subjects were making large saccades over a face than when making large saccades over a flower or gray field. These prior data thus establish that the magnitude of saccades can differentially influence category-specific activity in the VOTC. However, this study did not address whether the precise features of scanpaths composed of saccades of similar magnitude can also influence VOTC activity. Here we used similar methods to determine whether guiding a subject's saccades to approximate a statistically typical scanpath over the picture of a human face would differentially activate VOTC and predefined face-specific regions compared to an atypical scanpath composed of similar length saccades, but which included far fewer fixations on core face features such as the eyes and mouth.

MATERIALS AND METHODS

Subjects

Two experiments were performed separately and with different groups of subjects: an fMRI experiment and an eye-tracking experiment conducted outside of the scanner. The experimental protocols were approved by the Duke University Institutional Review Board and all subjects provided informed consent. All subjects had normal or corrected-to-normal vision, and all were screened against neurological and psychiatric illnesses. Eleven subjects (ages 20–26 years; 6 females, 5 males) participated in the eye-tracking study. Twelve subjects (ages 20–30 years; 8 females, 4 males) participated in the fMRI study.

Experimental design

Eye-tracking

The purpose of the eye tracking study was to determine whether subjects could accurately fixate a small cross as it made discrete jumps in location over an unchanging picture of a face. Eye-tracking data were collected on a Tobii 1750 eye-tracker with 50 Hz sampling. Subjects were seated with their eyes positioned 50 cm from the center of the monitor.

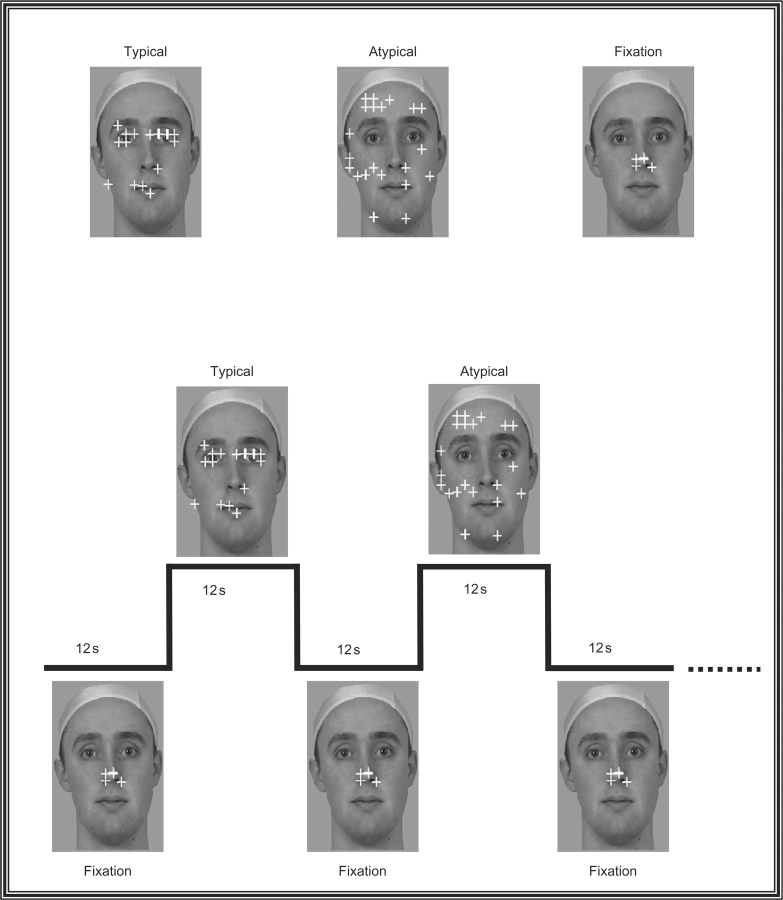

Figure 1 illustrates the experimental design. On each of six runs, the subject viewed a single image of a face while a small fixation cross made discrete jumps every 500 ms to a different location on the face. The subject was required to make a saccade and fixate the cross at each new location. The run was divided into 10 alternations of a 12 s block in which subjects made large saccades to widely separated locations on the background image, followed by a 12 s block in which subjects made small saccades localized in the center of the image. The large saccade blocks were further subdivided into two types of scanpaths that differed in the number of core features the fixation cross landed upon. In a typical scanpath, the fixation cross landed on the eyes on 70% of all jumps, and on the mouth on 20% of jumps. These percentages were obtained from a separate study by Pelphrey and colleagues (2002) in a group of healthy control subjects who were free viewing faces. In an atypical scanpath, the fixation cross landed on either the eyes or mouth on only 12% of all trials. Despite the differences in fixation location in the typical and atypical blocks, the average length of each individual saccade was the same in both conditions.

Fig. 1.

Experimental design. The top panel shows the three different types of blocks: typical, atypical and fixation. In each run, subjects alternated between making large typical and atypical saccadic eye movements in order to track a yellow fixation cross over a static face image. A typical scanpath was operationally defined as a scanpath where the fixation landed upon the eyes or mouth approximately 90% of the time. An atypical scanpath was operationally defined as a scanpath where the fixation landed upon the eyes or mouth approximately 12% of the time. Typical and atypical blocks were always separated by a fixation block where the subject had to make small saccades to track the fixation cross while it made small movements in the center of the screen. The bottom panel shows the design for one complete cycle in this experiment. In each run the face background is present throughout the duration of the entire run. The run was divided into 10 alternations of a 12 s block in which subjects made small fixation saccades localized in the center of the image, followed by a 12 s block in which subjects made large saccades that followed either a typical or atypical scanpath.
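The typical and atypical scanpath definitions can be illustrated with a short sampling sketch. It draws the category of each fixation-cross target using the proportions stated in the text (70% eyes and 20% mouth for typical blocks; 12% eyes or mouth for atypical blocks). The even split of the atypical 12% between eyes and mouth, the "other" label, and all function names are assumptions made for illustration. The sketch does not attempt to equate saccade length across conditions, which the actual stimulus sequences did.

```python
import random

# Target-category probabilities taken from the text.
# ASSUMPTION: the even eyes/mouth split of the atypical 12% and the "other"
# category are illustrative; the paper does not report them.
TARGET_PROBS = {
    "typical": {"eyes": 0.70, "mouth": 0.20, "other": 0.10},
    "atypical": {"eyes": 0.06, "mouth": 0.06, "other": 0.88},
}

JUMP_INTERVAL_S = 0.5   # the fixation cross jumps every 500 ms
BLOCK_DURATION_S = 12   # each block lasts 12 s


def jumps_per_block():
    """Number of fixation-cross jumps that fit in one block."""
    return int(BLOCK_DURATION_S / JUMP_INTERVAL_S)


def sample_block(condition, rng, n_jumps=None):
    """Draw a random sequence of target categories for one block."""
    if n_jumps is None:
        n_jumps = jumps_per_block()
    probs = TARGET_PROBS[condition]
    labels = list(probs)
    weights = [probs[label] for label in labels]
    return rng.choices(labels, weights=weights, k=n_jumps)


def core_feature_rate(sequence):
    """Fraction of jumps that land on a core feature (eyes or mouth)."""
    hits = sum(label in ("eyes", "mouth") for label in sequence)
    return hits / len(sequence)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sketch is repeatable
    for condition in ("typical", "atypical"):
        block = sample_block(condition, rng)
        print(condition, len(block), round(core_feature_rate(block), 2))
```

With only 24 jumps per block, the realized proportion in any single block will vary around the nominal value; the nominal rates are recovered only over many jumps.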

Typical and atypical trials were alternated in each block with the order reversed across runs. The eye-tracker was calibrated before each run. In addition, each run started and ended with a blinking central fixation cross that persisted for 5 s and which subjects were required to fixate. This internal calibration was used to check the fidelity of the calibration over the duration of the run.

The face image subtended a visual angle of 13° wide and 15.5° high and was superimposed upon a uniform gray field subtending a visual angle of 14° wide and 23° high. The average Euclidean distance of a fixation cross jump for typical and atypical conditions was 5.71°, while the average Euclidean distance of a fixation cross jump during the baseline ‘small fixation block’ was 1.73°.
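For readers who want to relate these visual angles to physical stimulus size, the following sketch applies the standard flat-screen relation, size = 2 d tan(θ/2), at the 50 cm viewing distance reported for the eye-tracking study. The function names are hypothetical, and the formula assumes a stimulus centered on the line of sight, so it is only an approximation for eccentric jump vectors.

```python
import math


def visual_angle_to_size_cm(angle_deg, distance_cm):
    """Extent on a flat screen that subtends angle_deg at distance_cm,
    assuming the extent is centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def size_cm_to_visual_angle(size_cm, distance_cm):
    """Inverse of visual_angle_to_size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


VIEWING_DISTANCE_CM = 50.0  # reported eye-to-monitor distance

# Face image dimensions from the text: 13 deg wide, 15.5 deg high.
face_width_cm = visual_angle_to_size_cm(13.0, VIEWING_DISTANCE_CM)
face_height_cm = visual_angle_to_size_cm(15.5, VIEWING_DISTANCE_CM)

if __name__ == "__main__":
    print(f"face: {face_width_cm:.1f} cm x {face_height_cm:.1f} cm")
```

Under these assumptions the face image works out to roughly 11.4 cm wide by 13.6 cm high on the monitor.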

fMRI

The main experimental task used a similar design, modified to accommodate the screen dimensions of the LCD goggles used as the visual display system in the scanner. There were six runs consisting of 10 alternations of 12 s blocks. The uniform gray field subtended a visual angle of 14° wide and 25° high. The face subtended a visual angle of 10.35° wide and 17.5° high, and each image was superimposed upon the same uniform gray field described earlier. The average Euclidean distance of a fixation jump for the large saccade condition was 3.81°. The average distance of a fixation jump for the small saccade condition was 0.75°.

In addition to these six experimental runs, we acquired two runs of a face-localizer task to independently identify face-specific voxels in the VOTC. Each localizer run consisted of 10 alternations of a 12 s block of faces (24 different faces per block or 2 faces/s) followed by a 12 s block of flowers (24 different flowers per block). We chose to contrast faces with flowers because both are classes of living things with symmetry. Also, we have shown strong differentiation between patterns of activation for faces and flowers in a prior study (McCarthy et al., 1997). We will refer operationally to voxels in the VOTC showing significant activation to faces relative to flowers as face-specific voxels, and those showing significant activation to flowers relative to a baseline of faces as nonface-specific voxels.

Imaging

Scanning was performed on a General Electric 4T LX NVi MRI scanner system equipped with 41 mT/m gradients (General Electric, Waukesha, Wisconsin, USA). A quadrature birdcage radio frequency (RF) head coil was used to transmit and receive. The subject's head was immobilized using a vacuum cushion and tape. Sixty-eight high resolution images were acquired using a 3D fast SPGR pulse sequence (TR = 500 ms; TE = 20 ms; FOV = 24 cm; image matrix = 256 × 256; voxel size = 0.9375 mm × 0.9375 mm × 1.9 mm) and used for coregistration with the functional data. These structural images were aligned in a near axial plane defined by the anterior and posterior commissures. Whole brain functional images were acquired using a gradient-recalled inward spiral pulse sequence (Glover and Law, 2001; Guo and Song, 2003) sensitive to blood oxygenation level dependent (BOLD) contrast (TR = 1500 ms; TE = 35 ms; FOV = 24 cm; image matrix = 64 × 64; α = 62°; voxel size = 3.75 mm × 3.75 mm × 3.8 mm; 34 axial slices). The functional images were aligned similarly to the structural images. A semi-automated high-order shimming program ensured global field homogeneity.

Data analysis

Eye-tracking

The accuracy of the eye tracker was rated by the manufacturer as 0.5–1°. A fixation was defined as an interval of at least 160 ms in which the eye position remained within the confines of a circle with a ∼1° radius. An accurate fixation was defined as a fixation that occurred within ∼2° of the center of the cross.
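The fixation criteria above can be sketched as a simple dispersion-based detector. This is a minimal illustration, not the analysis code used in the study: it assumes gaze samples are already expressed in degrees of visual angle, that the 1° circle is centered on the running centroid of the candidate fixation, and that accuracy is judged from the fixation centroid. At the reported 50 Hz sampling rate, 160 ms corresponds to 8 consecutive samples.

```python
import math

SAMPLE_RATE_HZ = 50          # reported eye-tracker sampling rate
MIN_FIXATION_MS = 160        # minimum fixation duration from the text
FIXATION_RADIUS_DEG = 1.0    # approximate circle radius from the text
ACCURACY_RADIUS_DEG = 2.0    # approximate accuracy criterion from the text


def min_samples():
    """Smallest number of samples spanning the minimum fixation duration."""
    return math.ceil(MIN_FIXATION_MS * SAMPLE_RATE_HZ / 1000)


def _centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def _fits_circle(points, radius):
    # ASSUMPTION: the circle is centered on the centroid of the window.
    cx, cy = _centroid(points)
    return all(math.hypot(x - cx, y - cy) <= radius for x, y in points)


def detect_fixations(samples):
    """Return (start, end_exclusive, centroid) for each detected fixation.

    samples is a list of (x_deg, y_deg) gaze positions at SAMPLE_RATE_HZ.
    """
    fixations = []
    n_min = min_samples()
    start = 0
    while start + n_min <= len(samples):
        end = start + n_min
        if not _fits_circle(samples[start:end], FIXATION_RADIUS_DEG):
            start += 1
            continue
        # Extend the window while the next sample still fits in the circle.
        while end < len(samples) and _fits_circle(
            samples[start:end + 1], FIXATION_RADIUS_DEG
        ):
            end += 1
        fixations.append((start, end, _centroid(samples[start:end])))
        start = end
    return fixations


def is_accurate(fixation_centroid, cross_xy):
    """True if the fixation centroid lies within the accuracy radius."""
    dx = fixation_centroid[0] - cross_xy[0]
    dy = fixation_centroid[1] - cross_xy[1]
    return math.hypot(dx, dy) <= ACCURACY_RADIUS_DEG
```

The proportion of targets acquired in a block would then be the number of cross locations with at least one accurate fixation divided by the number of jumps.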

fMRI

Image preprocessing was performed with custom programs and SPM modules (Wellcome Department of Cognitive Neurology, UK). Head motion was detected by center of mass measurements. No subject had greater than a 3 mm deviation in the center of mass in any dimension. Images were time-adjusted to compensate for the interleaved slice acquisition, then motion corrected to compensate for small head movements, and finally smoothed with an 8 mm isotropic Gaussian kernel prior to statistical analysis.

Our primary analysis employed a functional region of interest (ROI) approach in which the ROIs were defined for each individual by the results of his or her functional localizer runs. In the localizers, face-specific activity was identified by measuring the t-difference in activation for faces and flowers in the localizer task. Each subject's time-adjusted functional data were plotted on his or her anatomy and face-related activity was defined as activity in the VOTC where faces evoked significantly more activity than flowers (P < 0.01). Nonface-specific activity was identified as activity in the VOTC where flowers evoked significantly more activity than faces (P < 0.01). Activity within these face-specific and nonface-specific regions in each subject's individual anatomical space was then measured for typical and atypical blocks relative to the fixation baseline condition. These functional ROI analyses comprised the primary method for evaluating the influence of typical and atypical scanpaths on face-specific and nonface-specific brain regions in VOTC.

Our secondary analyses employed a voxel-based analytical approach. These additional voxel-based analyses were performed as a check to confirm the individual ROI analysis and to search in an exploratory manner for other brain regions in which the activation evoked by saccades was modulated by experimental condition. The realigned and motion corrected images used for the ROI analysis described above were first normalized to the Montréal Neurological Institute (MNI) template found in SPM 99. These normalized functional data were then high-pass filtered and spatially smoothed with an 8 mm isotropic Gaussian kernel prior to statistical analysis. These realigned, motion corrected, normalized and smoothed data were used in the remaining analyses described subsequently.

A random-effects assessment of the differences between the two conditions (typical and atypical) at the peak of the hemodynamic response (HDR) was performed. This analysis consisted of the following steps: (i) The epoch of image volumes spanning from two images before (−3.0 s) to 16 images after (27 s) the onset of each large saccade block was excised from the continuous time series of volumes, allowing us to visualize an entire cycle of large and small saccade blocks. (ii) The average intensity of the HDR peak (6–18 s) was computed. A t-statistic was then computed at each voxel within the brain to quantify the HDR differences between conditions. This process was performed separately for each subject. (iii) The individual t-maps created in the preceding step were then subjected to a random-effects analysis that assessed the significance of differences across subjects.
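Steps (i) and (ii) can be sketched for a single voxel as follows. This is an illustrative outline under stated assumptions, not the custom software used in the study: the time series is a plain list of intensities sampled at the 1.5 s TR, the 6–18 s peak window is treated as inclusive at both ends, and the per-voxel statistic is written as a Welch two-sample t, since the exact form of the t-statistic is not specified in the text. The sketch follows the stated volume counts (2 before, 16 after onset), which at a 1.5 s TR spans −3 s to 24 s.

```python
import math
import statistics

TR_S = 1.5                  # functional repetition time
PRE_VOLUMES = 2             # volumes kept before block onset
POST_VOLUMES = 16           # volumes kept after block onset
PEAK_WINDOW_S = (6.0, 18.0) # HDR peak window from the text


def epoch_times():
    """Time of each epoch sample relative to block onset, in seconds."""
    n = PRE_VOLUMES + POST_VOLUMES + 1
    return [(i - PRE_VOLUMES) * TR_S for i in range(n)]


def extract_epochs(timeseries, onset_volumes):
    """Cut one epoch per block onset; skip epochs that run off either end."""
    epochs = []
    for onset in onset_volumes:
        start = onset - PRE_VOLUMES
        stop = onset + POST_VOLUMES + 1
        if start < 0 or stop > len(timeseries):
            continue
        epochs.append(timeseries[start:stop])
    return epochs


def peak_mean(epoch):
    """Mean intensity over the HDR peak window (inclusive bounds assumed)."""
    lo, hi = PEAK_WINDOW_S
    values = [v for t, v in zip(epoch_times(), epoch) if lo <= t <= hi]
    return sum(values) / len(values)


def welch_t(a, b):
    """Welch two-sample t statistic (ASSUMED form of the per-voxel test)."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    se = math.sqrt(var_a / len(a) + var_b / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se


def voxel_condition_t(timeseries, typical_onsets, atypical_onsets):
    """Compare HDR peak means between conditions for one voxel."""
    typical = [peak_mean(e) for e in extract_epochs(timeseries, typical_onsets)]
    atypical = [peak_mean(e) for e in extract_epochs(timeseries, atypical_onsets)]
    return welch_t(typical, atypical)
```

Applying `voxel_condition_t` at every voxel would yield one t-map per subject, which is the input to the across-subject random-effects test in step (iii).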

To reduce the number of statistical comparisons, the results of the random-effects analyses computed earlier were then restricted to only those voxels in which a significant HDR was evoked by any of the three different conditions. For this analysis, we used a false discovery rate (FDR) (Genovese et al., 2002) threshold of 0.01 (t(10) > 5.37). The voxels with significant HDRs were identified in the following steps: (iv) The single trial epochs for each subject were averaged separately for each of the three conditions, and the average BOLD-intensity signal values for each voxel within the averaged epochs were converted to percent signal change relative to the prestimulus baseline. (v) The time waveforms for each voxel were correlated with a canonical reference waveform and t-statistics were calculated for the correlation coefficients for each voxel. This procedure provided a whole-brain t-map in MNI space for each of the three conditions. (vi) The t-maps for each subject and for both conditions were used to calculate an average t-map for the union of two different trial types across subjects. We then identified active voxels as those that surpassed the FDR threshold. (vii) The difference t-map computed in step (iii) above was then masked by the results of step (vi). Thus, the differences in HDR amplitude between conditions were only evaluated for those voxels in which at least one condition evoked a significant HDR as defined above. The threshold for significance in the HDR peak was set at P < 0.01 (two-tailed, uncorrected) with a minimum spatial extent of 12 voxels.
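The FDR thresholding used to build the mask in step (vi) can be illustrated with the Benjamini-Hochberg step-up procedure, which is the basis of the approach described by Genovese et al. (2002). The sketch below is a generic version operating on a flat list of voxelwise p-values at q = 0.01; it assumes the standard form of the procedure (independent or positively dependent tests), and the function names are hypothetical.

```python
def fdr_threshold(p_values, q=0.01):
    """Benjamini-Hochberg cutoff: the largest sorted p-value p(i) that
    satisfies p(i) <= (i / m) * q. Returns None if no test passes."""
    m = len(p_values)
    threshold = None
    for rank, p in enumerate(sorted(p_values), start=1):
        if p <= rank / m * q:
            threshold = p
    return threshold


def fdr_mask(p_values, q=0.01):
    """Boolean mask of tests that survive the FDR cutoff."""
    threshold = fdr_threshold(p_values, q)
    if threshold is None:
        return [False] * len(p_values)
    return [p <= threshold for p in p_values]


def apply_mask(difference_map, mask):
    """Keep difference values only where the mask is True, as in step (vii)."""
    return [d if keep else None for d, keep in zip(difference_map, mask)]
```

Restricting the condition contrast to voxels that pass this mask reduces the number of comparisons made in the final difference map.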

RESULTS

Eye-tracking

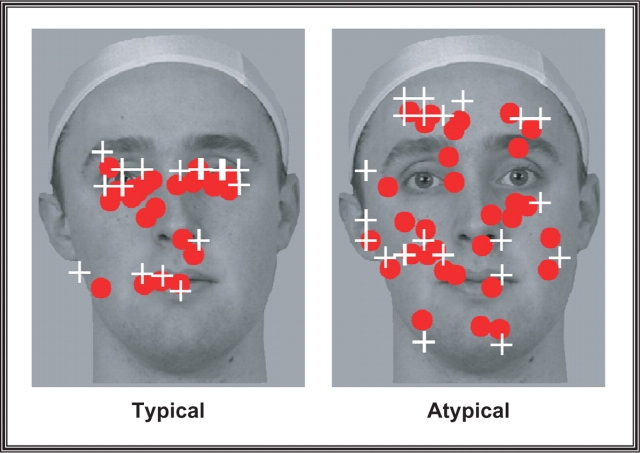

Subjects achieved a high level of accuracy, acquiring 83% of targets in the typical condition and 78% of targets in the atypical condition. Subjects tended to be more accurate on typical trials, but this trend (P = 0.054) did not reach the conventional threshold for statistical significance. Figure 2 plots the average fixations for both typical and atypical conditions for one representative subject.

Fig. 2.

Eye-tracking results. The results from one representative subject demonstrating relative difference in the amount of eye movements made in typical and atypical scanpath conditions. Each red sphere reflects a fixation made by the subject while the white crosshairs indicate locations to where the fixation cross jumped during the run.

fMRI

Functional localizer analysis

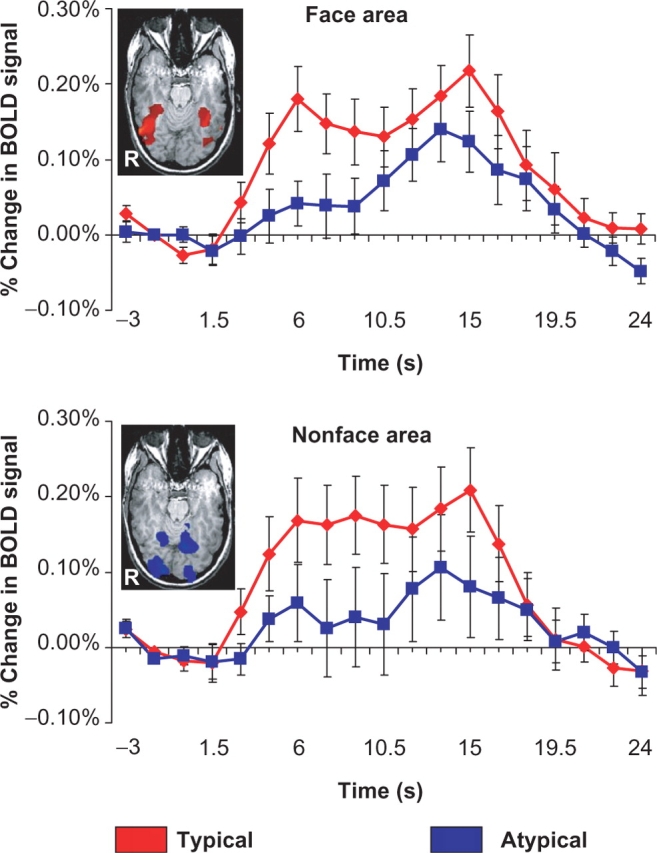

The results from each subject's independent face localizer runs defined two distinct regions within the VOTC. Lateral portions of the VOTC, within the FFG, showed strong activation for faces, while medial aspects of the VOTC including the lingual and parahippocampal gyri showed a strong preference for the nonface (flower) stimuli. The measurement of saccade-related activity in each subject's face-specific and nonface-specific voxels as defined by the results of his or her individual localizer task was used to test our main hypothesis that fusiform face activity is influenced by the pattern of saccades made over a face picture. The top panel of Figure 3 displays the average amplitude and time course of activation evoked by saccades made in typical and atypical blocks in each individual's functionally defined face area. A paired sample t-test conducted on evoked amplitude, averaged from 4.5–12 s after the onset of the block, revealed a significant difference between typical and atypical blocks (t(11) = 3.31, P < 0.01), where typical scanpaths evoked significantly greater amplitude relative to atypical scanpaths. The bottom panel of Figure 3 displays the average amplitude and time course of activation evoked by saccades made in typical and atypical blocks in each individual's nonface-specific voxels. A paired sample t-test conducted on evoked amplitude, averaged from 4.5–12 s after the onset of the block, revealed a significant difference between typical and atypical blocks (t(11) = 3.09, P < 0.01), where typical scanpaths again evoked significantly greater amplitude relative to atypical scanpaths.

Fig. 3.

Functional region of interest analysis. The top and bottom panels reflect the results of a functional region-of-interest analysis conducted on individual subjects’ data. The face and nonface-specific regions of the VOTC were identified for each subject based on the results of an independent localizer scan. We have included a representative subject's predefined face and nonface-specific regions as insets within the graphs. The face-specific region is reflected by a red colormap while the nonface-specific region is reflected by a blue colormap. Following identification, we then quantified activity in these regions during typical and atypical scanpath blocks relative to the baseline (small saccade) fixation condition. In both face and nonface-specific voxels of the VOTC, typical scanpaths evoked a stronger amplitude BOLD signal relative to atypical scanpaths.
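The ROI comparison reported above (mean evoked amplitude from 4.5–12 s after block onset, followed by a paired t-test across subjects) can be sketched as follows. The helper names are hypothetical, the window bounds are assumed to be inclusive, and each time course is assumed to start at block onset and be sampled at the 1.5 s TR. No data from the study are reproduced here.

```python
import math
import statistics

TR_S = 1.5               # functional repetition time
WINDOW_S = (4.5, 12.0)   # averaging window after block onset, from the text


def window_mean(timecourse, tr_s=TR_S, window_s=WINDOW_S):
    """Mean of samples whose time from block onset falls in the window.

    timecourse[i] is assumed to be the ROI signal at i * tr_s seconds.
    """
    lo, hi = window_s
    values = [v for i, v in enumerate(timecourse) if lo <= i * tr_s <= hi]
    return sum(values) / len(values)


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x versus y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def roi_contrast(typical_timecourses, atypical_timecourses):
    """One time course per subject and condition; returns (t, df)."""
    typical = [window_mean(tc) for tc in typical_timecourses]
    atypical = [window_mean(tc) for tc in atypical_timecourses]
    return paired_t(typical, atypical)
```

With one pair of time courses per subject, twelve subjects give 11 degrees of freedom, matching the t(11) values reported in the text.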

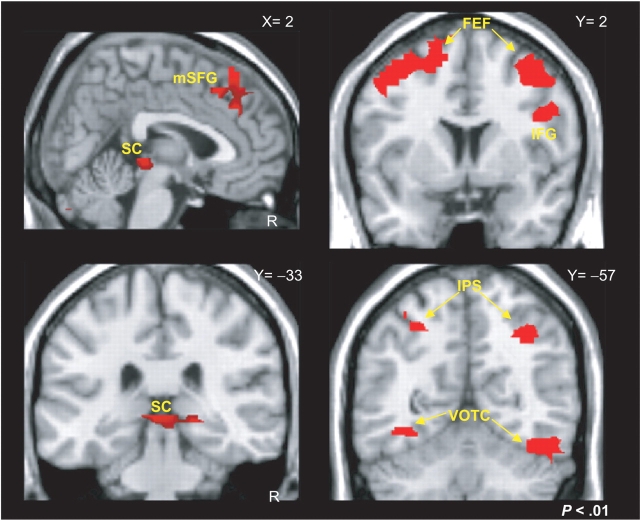

Voxel-Based Analysis

We supplemented these individual subject analyses with a voxel-based analysis of normalized data. Both typical and atypical blocks evoked significant activations relative to the small saccade fixation condition in regions previously identified as participating in the oculomotor system including bilateral frontal eye fields, intraparietal sulcus, and VOTC. Our voxel-based analysis was constructed to contrast typical and atypical saccade blocks at regions that demonstrated significant signal change during large saccade blocks relative to the small saccade fixation blocks. This analysis revealed several distinct clusters of activity that significantly differentiated between the typical and atypical conditions (red overlay of Figure 4). The MNI coordinates for regions of typical > atypical and atypical > typical saccade-related activity are provided in Table 1.

Fig. 4.

Voxel-based analysis. The red colormaps reveal the effects of a random-effects contrast where typical scanpaths evoked a significantly stronger BOLD response relative to atypical scanpaths. Regions showing significant differences included bilateral frontal eye fields (FEF), medial portions of the superior frontal gyrus (mSFG), right inferior frontal gyrus (IFG), the superior colliculi (SC), bilateral intraparietal sulci (IPS) and bilateral ventral occipitotemporal cortex (VOTC).

Table 1.

Summary of random-effects analyses contrasting typical and atypical scanpaths

| Region | Side | X | Y | Z | BA |
|---|---|---|---|---|---|
| Typical > Atypical | | | | | |
| Fusiform gyrus | R | 43 | −61 | −14 | 37 |
| Fusiform gyrus | L | −39 | −61 | −11 | 37 |
| Middle frontal gyrus | R | 37 | 8 | 60 | 6 |
| Middle frontal gyrus | L | −39 | 10 | 55 | 6 |
| Inferior frontal gyrus | R | 48 | 6 | 28 | 9 |
| Superior frontal gyrus | R | 4 | 23 | 57 | 8 |
| Superior frontal gyrus | L | −4 | 28 | 38 | 8 |
| Superior parietal lobule | R | 34 | −60 | 50 | 7 |
| Superior parietal lobule | L | −28 | −56 | 50 | 7 |
| Superior colliculi | R | 2 | −36 | 4 | |
| Atypical > Typical | | | | | |
| Cuneus | R | 7 | −81 | 18 | 18 |
| Cuneus | L | −6 | −87 | 15 | 18 |

X, Y, and Z refer to the stereotaxic MNI coordinates of the center of activation within an ROI. R = right hemisphere, L = left hemisphere, BA = Brodmann's area. The threshold for significance of the clusters reported here was set at a voxelwise uncorrected P < 0.01 (two-tailed).

DISCUSSION

Our results show that the pattern of fixations and saccades used by a subject in viewing a face image strongly influences the amplitude and spatial extent of activation in the VOTC generally, and the predefined fusiform face region in particular. Typical scanpaths, with frequent fixations on the eyes and mouth, evoked significantly more activity than atypical scanpaths with far fewer such fixations. This difference occurred despite the overall equivalence in average Euclidean distance of saccades in both conditions.

Our findings are consistent with the conclusions drawn by Dalton and colleagues (2005) who posited that hypoactivation of the fusiform face region in autistic individuals is due to their tendency to make fewer fixations on the eyes. As our study experimentally manipulated scanpath within individuals, our results strengthen their conclusions which were based upon correlations between autistic individuals with greater or lesser fixations on the eyes of a face image and the degree of fusiform activity in each. Our results also extend their conclusions, as we studied healthy adult subjects—a group that was at ceiling for eye fixations in their study and hence did not show a correlation between scanpath and fusiform activation.

Using similar methods, we recently demonstrated that the magnitude of saccades made over face, flower and uniform gray field images influenced the amplitude of signal measured in the VOTC (Morris and McCarthy, 2006). The increase in activation associated with large saccades was particularly great for the face and flower images. Here we extend our own findings to show that, at least for faces, the loci of fixations upon core features (eyes and mouth) within the face strongly influence VOTC and fusiform activation for saccades of equivalent magnitude. In our prior study, fixations upon the eyes were relatively infrequent (similar to the Atypical condition here), and because fixations upon the eyes only occurred during the large saccade condition, our design confounded fixations upon the eyes with the small and large saccade conditions. On that basis, one could posit that all of the effect of our prior study could be attributed to fixations upon the eyes and not to saccade magnitude per se. We do not believe that this interpretation is likely, because small and large saccades had a strong effect on VOTC activation when made over a static image of a radially symmetric flower and over a uniform gray field, neither of which has well-defined core features like those of a human face.

Activation of the fusiform by face images can be readily demonstrated in the absence of saccades, as we showed recently using face images presented for only 33 ms and thus too briefly for directed saccades (Morris et al., 2005). However, with longer exposure and free viewing, both humans and nonhuman primates scan faces in a stereotypical fashion, with most fixations made on the core features (Walker-Smith et al., 1977; Luria and Strauss, 1978; Klin et al., 2002; Pelphrey et al., 2002). This stereotypical scanning behavior can be modified by factors such as face familiarity (Rizzo et al., 1987; Gothard et al., 2004) and emotional face expression (Isaacowitz et al., 2006), and by psychiatric disorders (Williams et al., 1999; Klin et al., 2002; Pelphrey et al., 2002). Thus, in the absence of good control over fixations and scanpaths, caution must be exercised in attributing as primary to fusiform face processing those functional characteristics that may be secondary to a subject's viewing pattern. Also, one must be cautious in attributing dysfunction to a cortical region in a patient group, such as individuals with autism or schizophrenia, where the apparent dysfunction may also be secondary to systematic differences in scanpaths.

There are at least two limitations to the present study. First, as the scanpaths in the Typical condition were probabilistically constrained to fall upon the eyes or mouth on 90% of saccades, these scanpaths were more predictable than scanpaths in the Atypical condition and this might explain at least part of the differences in activation. We feel that this explanation of our findings is unlikely because the temporal order and exact location in which fixations occurred on the eyes and mouth were randomized within each block. Also, contrary to our findings, we might expect that a more predictable scanpath would be more susceptible to habituation effects with a resulting decrease in relative activation. Our results show the opposite effect, that is, more activation occurred in the typical than atypical condition. The second limitation is that our saccade manipulation was artificial and not comparable to the kind of saccades made in normal perception. For example, during naturalistic viewing, fixations typically last 90–200 ms (Gezeck et al., 1997), while in the current experiment fixations were paced by a fixation cross that jumped every 500 ms. However, as the average distance of each saccade was equated between our atypical and typical scanpaths, the only difference between conditions was the likelihood of a fixation occurring on a core facial feature.

An unpredicted finding in the current study was enhanced activity for typical relative to atypical scanpaths in the predefined nonface-specific area of the VOTC. Whether this enhancement is related to face processing per se, or to more general changes experienced by the visual system as the subject's eyes scan the face, cannot be answered by these data. The result suggests, however, that special care should be taken in face and object perception experiments that employ differential task demands while permitting free viewing. It also underscores the importance of measuring activity in face and nonface regions when using localizer tasks to predefine functional regions of interest.

Finally, our voxel-based analysis also identified other regions in which greater activation occurred during typical compared to atypical scanpaths. These regions included the IPS and IFG, which we have often found active in face localizer and other face activation tasks. It is likely, therefore, that activation differences related to differences in scanpaths extend beyond the VOTC. We did not find that differences in scanpaths influenced activation in the posterior superior temporal sulcus (pSTS) in this study, or in our prior study (Morris and McCarthy, 2006). This was surprising given the pSTS region's reported preference for face stimuli (Puce et al., 1996) and special preference for eyes (Hoffman and Haxby, 2000). The differential roles of the ventral and lateral surfaces of the lateral occipitotemporal cortex in face and body processing are still a matter of intense inquiry. Their apparent differential sensitivities to manipulations of gaze may prove to be an important distinction.

We also did not find differential activation related to scanpath within the amygdala. Dalton and colleagues (2005) found an interesting correlation between fixations upon the eyes of a face and signal amplitude in the amygdala in autistic individuals. However, Dalton and colleagues did not find similar results in their sample of typically developing adults, such as we studied here. This suggests that repeated viewing of the same face in typically developing adults may lead to habituation of amygdala activity.

Acknowledgments

This research was supported by the North Carolina Studies to Advance Autism Research and Treatment Center, Grant U54 MH66418 (Dr. J. Piven, PI) from the National Institutes of Health and by MH05286 (G.M.). J.P.M. was supported by a Ruth Kirschstein National Research Service Award, Grant F32 MH073367. K.A.P. was supported by a Career Scientist Development Award, Grant K01 MH071284-0. G.M. is a Department of Veterans Affairs Senior Research Career Scientist and was partially supported by the VISN6 Mental Illness Research, Education, and Clinical Center (MIRECC). We thank Steven Green, Brian Marion, and Jacki Price for assistance with stimulus development, data collection and data analysis.

Footnotes

Conflict of Interest

None declared.

REFERENCES

- Allison T, Ginter H, McCarthy G, et al. Face recognition in human extrastriate cortex. Journal of Neurophysiology. 1994a;71:821–5. doi: 10.1152/jn.1994.71.2.821.

- Allison T, McCarthy G, Nobre A, Puce A, Belger A. Human extrastriate visual cortex and the perception of faces, words, numbers, and colors. Cerebral Cortex. 1994b;4:544–54. doi: 10.1093/cercor/4.5.544.

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception I. Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cerebral Cortex. 1999;9:415–30. doi: 10.1093/cercor/9.5.415.

- Bauer RM, Trobe JD. Visual memory and perceptual impairments in prosopagnosia. Journal of Clinical Neuro-Ophthalmology. 1984;4:39–46. doi: 10.3109/01658108409019494.

- Clark VP, Keil K, Maisog JM, Courtney S, Ungerleider LG, Haxby JV. Functional magnetic resonance imaging of human visual cortex during face matching: a comparison with positron emission tomography. Neuroimage. 1996;4:1–15. doi: 10.1006/nimg.1996.0025.

- Dalton KM, Nacewicz BM, Johnstone T, et al. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–26. doi: 10.1038/nn1421.

- Damasio AR, Damasio H, Van Hoesen GW. Prosopagnosia: Anatomic basis and behavioral mechanisms. Neurology. 1982;32:331–41. doi: 10.1212/wnl.32.4.331.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15:870–8. doi: 10.1006/nimg.2001.1037.

- Gezeck S, Fischer B, Timmer J. Saccadic reaction times: a statistical analysis of multimodal distributions. Vision Research. 1997;37:2119–31. doi: 10.1016/s0042-6989(97)00022-9.

- Glover GH, Law CS. Spiral-in/out BOLD fMRI for increased SNR and reduced susceptibility artifacts. Magnetic Resonance in Medicine. 2001;46:515–22. doi: 10.1002/mrm.1222.

- Gothard KM, Erickson CA, Amaral DG. How do rhesus monkeys (Macaca mulatta) scan faces in a visual paired comparison task? Animal Cognition. 2004;7:25–36. doi: 10.1007/s10071-003-0179-6.

- Guo H, Song AW. Single-shot spiral image acquisition with embedded z-shimming for susceptibility signal recovery. Journal of Magnetic Resonance Imaging. 2003;18:389–95. doi: 10.1002/jmri.10355.

- Gur RE, McGrath C, Chan RM, et al. An fMRI study of facial emotion processing in patients with schizophrenia. The American Journal of Psychiatry. 2002;159:1992–9. doi: 10.1176/appi.ajp.159.12.1992.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. The Journal of Neuroscience. 1994;14:6336–53. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Henson R, Shallice T, Dolan R. Neuroimaging evidence for dissociable forms of repetition priming. Science. 2000;287:1269–72. doi: 10.1126/science.287.5456.1269.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–4. doi: 10.1038/71152.

- Isaacowitz DM, Wadlinger HA, Goren D, Wilson HR. Selective preference in visual fixation away from negative images in old age? An eye-tracking study. Psychology and Aging. 2006;21:40–8. doi: 10.1037/0882-7974.21.1.40.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kim JS, Yoon HW, Kim BS, Jeun SS, Jung SL, Choe BY. Racial distinction of the unknown facial identity recognition mechanism by event-related fMRI. Neuroscience Letters. 2006;397:279–84. doi: 10.1016/j.neulet.2005.12.061.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–16. doi: 10.1001/archpsyc.59.9.809.

- Luria SM, Strauss MS. Comparison of eye movements over faces in photographic positives and negatives. Perception. 1978;7:349–58. doi: 10.1068/p070349.

- McCarthy G, Puce A, Belger A, Allison T. Electrophysiological studies of human face perception II. Response properties of face-specific potentials generated in occipitotemporal cortex. Cerebral Cortex. 1999;9:431–44. doi: 10.1093/cercor/9.5.431.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 1997;9:605–10. doi: 10.1162/jocn.1997.9.5.605.

- Morris JP, McCarthy G. Guided saccades modulate object and face-specific activity in the fusiform gyrus. Human Brain Mapping. 2006 (in press). doi: 10.1002/hbm.20301.

- Morris JP, Pelphrey KA, McCarthy G. Unconscious face processing evokes activity in the right anterior fusiform gyrus. Supplement of the Journal of Cognitive Neuroscience. 2005;C276:113.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–61. doi: 10.1023/a:1016374617369.

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: A functional magnetic resonance imaging study. Journal of Neuroscience. 1996;16:5205–15. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- Puce A, Allison T, Gore JC, McCarthy G. Face-sensitive regions in human extrastriate cortex studied by functional MRI. Journal of Neurophysiology. 1995;74:1192–9. doi: 10.1152/jn.1995.74.3.1192.

- Puce A, Allison T, McCarthy G. Electrophysiological studies of human face perception III. Effects of top-down processing on face-specific potentials. Cerebral Cortex. 1999;9:445–8. doi: 10.1093/cercor/9.5.445.

- Rizzo M, Hurtig R, Damasio AR. The role of scanpaths in facial recognition and learning. Annals of Neurology. 1987;22:41–5. doi: 10.1002/ana.410220111.

- Schultz RT, Gauthier I, Klin A, et al. Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Archives of General Psychiatry. 2000;57:331–40. doi: 10.1001/archpsyc.57.4.331.

- Sergent J. The processing of faces in cerebral cortex. In: Gulyas B, editor. Functional Organization of the Human Visual Cortex. New York: Pergamon; 1993. pp. 359–72.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Walker-Smith GJ, Gale AG, Findlay JM. Eye movement strategies involved in face perception. Perception. 1977;6:313–26. doi: 10.1068/p060313.

- Williams LM, Loughland CM, Gordon E, Davidson D. Visual scanpaths in schizophrenia: is there a deficit in face recognition? Schizophrenia Research. 1999;40:189–99. doi: 10.1016/s0920-9964(99)00056-0.

- Wojciulik E, Kanwisher N, Driver J. Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. Journal of Neurophysiology. 1998;79:1574–8. doi: 10.1152/jn.1998.79.3.1574.